Preamble

It’s been a rough few months, hasn’t it?

Recent events, including the FTX collapse and the Bostrom email/apology scandal, have led a sizeable portion of EAs to become disillusioned with or at least much more critical of the Effective Altruism movement.

While the current crises have made some of our movement’s problems more visible and acute, many EAs have become increasingly worried about the direction of EA over the last few years. We are some of them.

This document was written collaboratively, with contributions from ~10 EAs in total. Each of us arrived at most of the critiques below independently before realising through conversation that we were not “the only one”. In fact, many EAs thought similarly to us, or at least were very easily convinced once thoughts were (privately) shared.

Some of us started to become concerned as early as 2017, but the discussions that triggered the creation of this post happened in the summer of 2022. Most of this post was written by the time of the FTX crash, and the final draft was completed the very day that the Bostrom email scandal broke.[1] Thus, a separate post will be made about the Bostrom/FLI issues in around a week.

A lot of what we say is relevant to the FTX situation, and some of it isn’t, at least directly. In any case, it seems clear to us that the FTX crisis significantly strengthened our arguments.

Some time ago, we reached the point where we would feel collectively irresponsible if we did not voice our concerns, and now seems like the time when those concerns are most likely to be taken seriously. We voice them in the hope that we can change our movement for the better, and have taken pains to avoid coming off as “hostile” in any way.

Experience indicates that it is likely many EAs will agree with significant proportions of what we say, but have not said as much publicly due to the significant risk doing so would pose to their careers, access to EA spaces, and likelihood of ever getting funded again.

Naturally the above considerations also apply to us: we are anonymous for a reason.

This post is also very long, so each section has a summary at the top for ease of scanning, and we’ll break this post up into a sequence to facilitate object-level discussion.

Finally, we ask that people upvote or downvote this post on the basis of whether they believe it to have made a useful contribution to the conversation, rather than whether they agree with all of our critiques.

Summary

- The Effective Altruism movement has rapidly grown in size and power, and we have a responsibility to ensure that it lives up to its goals

- EA is too homogenous, hierarchical, and intellectually insular, with a hard core of “orthodox” thought and powerful barriers to “deep” critiques

- Many beliefs accepted in EA are surprisingly poorly supported, and we ignore entire disciplines with extremely relevant and valuable insights

- Some EA beliefs and practices align suspiciously well with the interests of our donors, and some of our practices render us susceptible to conflicts of interest

- EA decision-making is highly centralised, opaque, and unaccountable, but there are several evidence-based methods for improving the situation

Introduction

As committed Effective Altruists, we have found meaning and value in the frameworks and pragmatism of the Effective Altruism movement. We believe it is one of the most effective broadly-focused social movements, with the potential for world-historical impact.

Already, the impact of many EA projects has been considerable and inspiring. We appreciate the openness to criticism found in various parts of the EA community, and believe that EA has the potential to avoid the pitfalls faced by many other movements by updating effectively in response to new information.

We have become increasingly concerned with significant aspects of the movement over our collective decades here, and while the FTX crisis was a shock to all of us, we had for some time been unable to escape the feeling that something was going to go horribly wrong.

To ensure that EA has a robustly positive impact, we feel the need to identify the aspects of our movement that we find concerning, and suggest directions for reform that we believe have been neglected. These fall into three major categories:

- Epistemics

- Expertise & Rigour

- Governance & Power

We do not believe that the critiques apply to everyone and to all parts of EA, but to certain – often influential – subparts of the movement. Most of us work on existential risk, so the majority of our examples will come from there.[2]

Not all of the ~10 people that helped to write this post agree with all the points made within, both in terms of “goes too far” and “doesn’t go far enough”. It is entirely possible to strongly reject one or more of our critiques while accepting others.

In the same vein, we request that commenters focus on the high-level critiques we make, rather than diving into hyper-specific debates about one thing or another that we cited as an example.

Finally, this report started as a dozen or so bullet points, and currently stands at over 20,000 words. We wrote it out of love for the community, and we were not paid for any of its writing or research despite most of us either holding precarious grant-dependent gig jobs or living on savings while applying for funding. We had to stop somewhere. This means that many of the critiques we make could be explored in far, far more detail than their rendition here contains.

If you think a point is underdeveloped, we probably agree; we would love to see others take the points we make and explore them in greater depth, and indeed to do so ourselves if able to do so while also being able to pay rent.

We believe that the points we make are vital for the epistemic health of the movement, that they will make it more accessible and effective, and that they will enhance the ability of EA as a whole to do the most good.

Two Notes:

- Some of the issues we describe are based on personal experience and thus cannot be backed by citations. If you doubt something we assert, let us know and we’ll give as much detail as we can without compromising our anonymity or that of others. You can also just ask around: we witnessed most of the things we mention on multiple independent occasions, so they’re probably not rare.

- This post ties a lot of issues together and is thus necessarily broad, so we will have to make some generalisations, to which there will be exceptions.

Epistemics

Epistemic health is a community issue

Summary: The Collective Intelligence literature suggests epistemic communities should be diverse, egalitarian, and open to a wide variety of information sources. EA, in contrast, is relatively homogenous, hierarchical, and insular. This puts EA at serious risk of epistemic blind-spots.

EA highly values epistemics and has a stated ambition of predicting existential risk scenarios. We have a reputation for assuming that we are the “smartest people in the room”.

Yet, we appear to have been blindsided by the FTX crash. As Tyler Cowen puts it:

Hardly anyone associated with Future Fund saw the existential risk to… Future Fund, even though they were as close to it as one could possibly be.

I am thus skeptical about their ability to predict existential risk more generally, and for systems that are far more complex and also far more distant. And, it turns out, many of the real sources of existential risk boil down to hubris and human frailty and imperfections (the humanities remain underrated). When it comes to existential risk, I generally prefer to invest in talent and good institutions, rather than trying to fine-tune predictions about existential risk itself.

If EA is going to do some lesson-taking, I would not want this point to be neglected.

So, what’s the problem?

EA’s focus on epistemics is almost exclusively directed towards individualistic issues like minimising the impact of cognitive biases and cultivating a Scout Mindset. The movement strongly emphasises intelligence, both in general and especially that of particular “thought-leaders”. The implicit assumption seems to be that an epistemically healthy community is created by acquiring maximally-rational, intelligent, and knowledgeable individuals, with social considerations given second place. Unfortunately, the science does not bear this out. The quality of an epistemic community does not boil down to the de-biasing and training of individuals;[3] more important factors appear to be the community’s composition, its socio-economic structure, and its cultural norms.[4]

The field of Collective Intelligence provides guidance on the traits to nurture if one wishes to build a collectively intelligent community. For example:

- Diversity

- Along essentially all dimensions, from cultural background to disciplinary/professional training to cognition style to age

- Egalitarianism

- People must feel able to speak up (and must be listened to if they do)

- Dominance dynamics amplify biases and steer groups into suboptimal path dependencies

- Leadership is typically best employed on a rotating basis for discussion-facilitation purposes rather than top-down decision-making

- Avoid appeals and deference to community authority

- Openness to a wide variety of sources of information

- Generally high levels of social/emotional intelligence

- This is often more important than individuals’ skill levels at the task in question

However, the social epistemics of EA leave much to be desired. As we will elaborate on below, EA:

- Is mostly composed of people with very similar demographic, cultural, and educational backgrounds

- Places too much trust in (powerful) leadership figures

- Is remarkably intellectually insular

- Confuses value-alignment and seniority with expertise

- Is vulnerable to motivated reasoning

- Is susceptible to conflicts of interest

- Has powerful structural barriers to raising important categories of critique

- Is susceptible to groupthink

Decision-making structures and intellectual norms within EA must therefore be improved upon.[5]

What actually is “value-alignment”?

Summary: The use of the term “value-alignment” in the EA community hides an implicit community orthodoxy. When people say “value-aligned” they typically do not mean a neutral “alignment of values”, nor even “agreement with the goal of doing the most good possible”, but a commitment to a particular package of views. This package, termed “EA orthodoxy”, includes effective altruism, longtermism, utilitarianism, Rationalist-derived epistemics, liberal-technocratic philanthropy, Whig historiography, the ITN framework, and the Techno-Utopian Approach to existential risk.

The term “value-alignment” gets thrown around a lot in EA, but is rarely actually defined. When asked, people typically say something about similarity or complementarity of values or worldviews, and this makes sense: “value-alignment” is of course a term defined in reference to what values the subject is (un)aligned with. You could just as easily speak of alignment with the values of a political party or a homeowner’s association.[6]

However, the term’s usage in EA spaces typically has an implicit component: value-alignment with a set of views shared and promoted by the most established and powerful components of the EA community. Thus:

- Value-alignment = the degree to which one subscribes to EA orthodoxy

- EA orthodoxy = the package of beliefs and sensibilities generally shared and promoted by EA’s core institutions (the CEA, FHI, OpenPhil, etc.)[7]

- These include, but are not limited to:

- Effective Altruism

- i.e. trying to “do the most good possible”

- Longtermism

- i.e. believing that positively influencing the long-term future is a (or even the) key moral priority of our time

- Utilitarianism, usually Total Utilitarianism

- Rationalist-derived epistemics

- Most notably subjective Bayesian “updating” of personal beliefs

- Liberal-technocratic philanthropy

- A broadly Whiggish/progressivist view of history

- Best exemplified by Steven Pinker’s “Enlightenment Now”

- Cause-prioritisation according to the ITN framework

- The Techno-Utopian Approach to existential risk, which includes for instance, and in addition to several of the above:

- Defining “existential risk” in reference to humanity’s “long-term potential” to generate immense amounts of (utilitarian) value by populating the cosmos with vast numbers of extremely technologically advanced beings

- A methodological framework based on categorising individual “risks”[8], estimating for each a probability of causing an “existential catastrophe” within a given timeframe, and attempting to reduce the overall level of existential risk largely by working on particular “risks” in isolation (usually via technical or at least technocratic means)

- Technological determinism, or at least a “military-economic adaptationism” that is often underpinned by an implicit commitment to neorealist international relations theory

- A willingness to seriously consider extreme or otherwise exceptional actions to protect astronomically large amounts of perceived future value

- There will naturally be exceptions here – institutions employ many people, whose views can change over time – but there are nonetheless clear regularities

Note that few, if any, of the components of orthodoxy are necessary aspects, conditions, or implications of the overall goal of “doing the most good possible”. It is possible to be an effective altruist without subscribing to all, or even any, of them, with the obvious exception of “effective altruism” itself.

However, when EAs say “value-aligned” they rarely seem to mean that one is simply “dedicated to doing the most good possible”, but that one subscribes to the particular philosophical, political, and methodological views packaged under the umbrella of orthodoxy.

We are incredibly homogenous

Summary: Diverse communities are typically much better at accurately analysing the world and solving problems, but EA is extremely homogenous along essentially all dimensions. EA institutions and norms actively and strongly select against diversity. This provides short-term efficiency at the expense of long-term epistemic health.

The EA community is notoriously homogenous, and the “average EA” is extremely easy to imagine: he is a white male[9] in his twenties or thirties from an upper-middle class family in North America or Western Europe. He is ethically utilitarian and politically centrist; an atheist, but culturally protestant. He studied analytic philosophy, mathematics, computer science, or economics at an elite university in the US or UK. He is neurodivergent. He thinks space is really cool. He highly values intelligence, and believes that his own is significantly above average. He hung around LessWrong for a while as a teenager, and now wears EA-branded shirts and hoodies, drinks Huel, and consumes a narrow range of blogs, podcasts, and vegan ready-meals. He moves in particular ways, talks in particular ways, and thinks in particular ways. Let us name him “Sam”, if only because there’s a solid chance he already is.[10]

Even leaving aside the ethical and political issues surrounding major decisions about humanity’s future being made by such a small and homogenous group of people, especially given the fact that the poor of the Global South will suffer most in almost any conceivable catastrophe, having the EA community overwhelmingly populated by Sams or near-Sams is decidedly Not Good for our collective epistemic health.

As noted above, diversity is one of the main predictors of the collective intelligence of a group. If EA wants to optimise its ability to solve big, complex problems like the ones we focus on, we need people with different disciplinary backgrounds[11], different kinds of professional training, different kinds of talent/intelligence[12], different ethical and political viewpoints, different temperaments, and different life experiences. That’s where new ideas tend to come from.[13]

Worryingly, EA institutions seem to select against diversity. Hiring and funding practices often select for highly value-aligned yet inexperienced individuals over outgroup experts, university recruitment drives are deliberately targeted at the Sam Demographic (at least by proxy) and EA organisations are advised to maintain a high level of internal value-alignment to maximise operational efficiency. The 80,000 Hours website seems purpose-written for Sam, and is noticeably uninterested in people with humanities or social sciences backgrounds,[14] or those without university education. Unconscious bias is also likely to play a role here – it does everywhere else.

The vast majority of EAs will, when asked, say that we should have a more diverse community, but in that case, why are only a very narrow spectrum of people given access to EA funding or EA platforms? There are exceptions, of course, but the trend is clear.

It’s worth mentioning that senior EAs have done some interesting work on moral uncertainty and value-pluralism, and we think several of their recommendations are well-taken. However, the focus is firmly on individual rather than collective factors. The point remains that one cannot replace a philosophically diverse community with an overwhelmingly utilitarian one where everyone individually tries to keep all possible viewpoints in mind. None of us are so rational as to obviate true diversity through our own thoughts.[15]

EA is very open to some kinds of critique and very not open to others

Summary: EA is very open to shallow critiques, but not deep critiques. Shallow critiques are small technical adjustments written in ingroup language, whereas deep critiques hint at the need for significant change, criticise prominent figures or their ideas, and can suggest outgroup membership. This means EA is very good at optimising along a very narrow and not necessarily optimal path.

EA prides itself on its openness to criticism, and in many areas this is entirely justified. However, willingness to engage with critique varies widely depending on the type of critique being made, and powerful structures exist within the community that reduce the likelihood that people will speak up and be heard.

Within EA, criticism is acceptable, even encouraged, if it lies within particular boundaries, and when it is expressed in suitable terms. Here we distinguish informally between “shallow critiques” and “deep critiques”.[16]

Shallow critiques are often:

- Technical adjustments to generally-accepted structures

- “We should rate intervention X 12% higher than we currently do.”

- Changes of emphasis or minor structural/methodological adjustments

- Easily conceptualised as “optimising” “updates” rather than cognitively difficult qualitative switches

- Written in EA-language and sprinkled liberally with EA buzzwords

- Not critical of capitalism

Whereas deep critiques are often:

- Suggestive that one or more of the fundamental ways we do things are wrong

- i.e. are critical of EA orthodoxy

- Thereby implying that people may have invested considerable amounts of time/effort/identity in something when they perhaps shouldn’t have[17]

- Critical of prominent or powerful figures within EA

- Written in a way suggestive of outgroup membership

- And thus much more likely to be read as hostile and/or received with hostility

- Political

- Or more precisely: of a different politics to the broadly liberal[18]-technocratic approach popular in EA

EA is very open to shallow critiques, which is something we absolutely love about the movement. As a community, however, we remain remarkably resistant to deep critiques. The distinction is likely present in most epistemic communities, but EA appears to have a particularly large problem. Again, there will be exceptions, but the trend is clear.

The problem is illustrated well by the example of an entry to the recent Red-Teaming Contest: “The Effective Altruism movement is not above conflicts of interest”. It warned us of the political and ethical risks associated with taking money from cryptocurrency billionaires like Sam Bankman-Fried, and suggested that EA has a serious blind spot when it comes to (financial) conflicts of interest.[19]

The article (which did not win anything in the contest) was written under a pseudonym, as the author feared that making such a critique publicly would incur a risk of repercussions to their career. A related comment provided several well-evidenced reasons to be morally and pragmatically wary of Bankman-Fried, got downvoted heavily, and was eventually deleted by its author.

Elsewhere, critical EAs report[20] having to develop specific rhetorical strategies to be taken seriously. Making deep critiques or contradicting orthodox positions outright gets you labelled as a “non-value-aligned” individual with “poor epistemics”, so you need to pretend to be extremely deferential and/or stupid and ask questions in such a way that critiques are raised without actually being stated.[21]

At the very least, critics have learned to watch their tone at all costs, and provide a constant stream of unnecessary caveats and reassurances in order to not be labelled “emotional” or “overconfident”.

These are not good signs.

Why do critical EAs have to use pseudonyms?

Summary: Working in EA usually involves receiving money from a small number of densely connected funding bodies/individuals. Contextual evidence is strongly suggestive that raising deep critiques will drastically reduce one’s odds of being funded, so many important projects and criticisms are lost to the community.

There are several reasons people may not want to publicly make deep critiques, but the one that has been most impactful in our experience has been the role of funding.[22]

EA work generally relies on funding from EA sources: we need to pay the bills, and the kinds of work EA values are often very difficult to fund via non-EA sources. Open Philanthropy has, and FTX previously had, an almost hegemonic funding role in many areas of existential risk reduction, as well as several other domains. This makes EA funding organisations and even individual grantmakers extremely powerful.

Prominent funders have said that they value moderation and pluralism, and thus people (like the writers of this post) should feel comfortable sharing their real views when they apply for funding, no matter how critical they are of orthodoxy.

This is admirable, and we are sure that they are being truthful about their beliefs. Regardless, it is difficult to trust that the promise will be kept when one, for instance:

- Observes the types of projects (and people) that succeed (or fail) at acquiring funding

- i.e. few, if any, deep critiques or otherwise heterodox/“heretical” works

- Looks into the backgrounds of grantmakers and sees how they appear to have very similar backgrounds and opinions (i.e. they are highly orthodox)

- Experiences the generally claustrophobic epistemic atmosphere of EA

- Hears of people facing (soft) censorship from their superiors because they wrote deep critiques of the ideas of prominent EAs

- Zoe Cremer and Luke Kemp lost “sleep, time, friends, collaborators, and mentors” as a result of writing Democratising Risk, a paper which was critical of some EA approaches to existential risk.[23] Multiple senior figures in the field attempted to prevent the paper from being published, largely out of fear that it would offend powerful funders. This saga caused significant conflict within CSER throughout much of 2021.

- Sees the revolving door and close social connections between key donors and main scholars in the field

- Witnesses grantmakers dismiss scientific work on the grounds that the people doing it are insufficiently value-aligned

- If this is what is said in public (which we have witnessed multiple times), what is said in private?

- Etc.

Thus, it is reasonable to conclude that if you want to get funding from an EA body, you must not only try to propose a good project, but one that could not be interpreted as insufficiently “value-aligned”, however the grantmakers might define it. If you have an idea for a project that seems very important, but could be read as a “deep critique”, it is rational for you to put it aside.

The risk to one’s career is especially important given the centralisation of funding bodies as well as the dense internal social network of EA’s upper echelons.[24]

Given this level of clustering, it is reasonable to believe that if you admit to holding heretical views on your funding application, word will spread, and thus you will quite possibly never be funded by any other funder in the EA space, never mind any other consequences (e.g. gatekeeping of EA events/spaces) you might face. For a sizeable portion of EAs, the community forms a very large segment of one’s career trajectory, social life, and identity; not things to be risked easily.[25] For most, the only robust strategy is to keep your mouth shut.[26]

Grantmakers: You are missing out on exciting, high potential impact projects due to these processes. When the stakes are as high as they are, verbal assurances are unfortunately insufficient. The problems are structural, so the solutions must be structural as well.

We can’t put numbers on everything…

Summary: EA is highly culturally quantitative, which is optimal for some problem categories but not others. Trying to put numbers on everything causes information loss and triggers anchoring and certainty biases. Individual Bayesian Thinking, prized in EA, has significant methodological issues. Thinking in numbers, especially when those numbers are subjective “rough estimates”, allows one to justify anything comparatively easily, and can lead to wasteful and immoral decisions.

EA places an extremely high value on quantitative thinking, mostly focusing on two key concepts: expected value (EV) calculations and Bayesian probability estimates.

From the EA Forum wiki: “The expected value of an act is the sum of the value of each of its possible outcomes multiplied by their probability of occurring.” Bayes’s theorem is a simple mathematical tool for updating our estimate of the likelihood of an event in response to new information.
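
For readers who prefer symbols, the two definitions above can be written out roughly as follows. The notation is an assumption made for illustration and is not taken from the EA Forum wiki: $a$ is an act, $o_i$ are its possible outcomes, $V$ is the value assigned to an outcome, $H$ is a hypothesis, and $E$ is a new piece of evidence.

```latex
% Expected value of an act a: probability-weighted sum over its outcomes
\mathrm{EV}(a) = \sum_{i} P(o_i \mid a)\, V(o_i)

% Bayes' theorem: updating belief in hypothesis H after seeing evidence E
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
```

Here $P(H)$ is the prior and $P(H \mid E)$ the posterior. Every term on the right-hand side of both equations has to be supplied by whoever is doing the estimating.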

Individual Bayesian Thinking (IBT) is a technique inherited by EA from the Rationalist subculture, where one attempts to use Bayes’ theorem on an everyday basis. You assign each of your beliefs a numerical probability of being true and attempt to mentally apply Bayes’ theorem, increasing or decreasing the probability in question in response to new evidence. This is sometimes called “Bayesian epistemology” in EA, but to avoid confusing it with the broader approach to formal epistemology with the same name we will stick with IBT.

There is nothing wrong with quantitative thinking, and much of the power of EA grows from its dedication to the numerical. However, this is often taken to the extreme, where people try to think almost exclusively along numerical lines, causing them to neglect important qualitative factors or else attempt to replace them with doubtful or even meaningless numbers because “something is better than nothing”. These numbers are often subjective “best guesses” with little empirical basis.[27]

For instance, Bayesian estimates are heavily influenced by one’s initial figure (one’s “prior”), which, especially when dealing with complex, poorly-defined, and highly uncertain and speculative phenomena, can become subjective (based on unspecified values, worldviews, and assumptions) to the point of arbitrary.[28] This is particularly true in existential risk studies where one may not have good evidence to update on.
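
The sensitivity to priors can be illustrated with a minimal sketch. Everything in it is hypothetical: the function names, the three priors, and the likelihoods (evidence assumed to be twice as likely if the hypothesis is true) are invented for illustration and do not come from any real estimate.

```python
def posterior(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Bayes' theorem for a binary hypothesis H after observing evidence E."""
    numerator = p_e_given_h * prior
    # Total probability of the evidence under both H and not-H
    evidence = numerator + p_e_given_not_h * (1.0 - prior)
    return numerator / evidence


# Hypothetical likelihoods: the evidence is twice as likely if H is true.
LIKELIHOOD_IF_TRUE = 0.2
LIKELIHOOD_IF_FALSE = 0.1


def posteriors_for(priors):
    """Apply the same evidence to several different starting priors."""
    return {
        p: posterior(p, LIKELIHOOD_IF_TRUE, LIKELIHOOD_IF_FALSE) for p in priors
    }


if __name__ == "__main__":
    # Three people see identical evidence but start from different guesses.
    for prior, post in posteriors_for([0.001, 0.01, 0.1]).items():
        print(f"prior={prior:<6} posterior={post:.4f}")
```

With these made-up inputs the three posteriors still differ by roughly two orders of magnitude, because a single weak piece of evidence moves each estimate only a little from where it started. When strong evidence is unavailable, the output is mostly a restatement of the input.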

We assume that, with enough updating in response to evidence, our estimates will eventually converge on an accurate figure. However, this is dependent on several conditions, notably well-formulated questions, representative sampling of (accurate) evidence, and a rigorous and consistent method of translating real-world observations into conditional likelihoods.[29] This process is very difficult even when performed as part of careful and rigorous scientific study; attempting to do it all in your head, using rough-guess or even purely intuitional priors and likelihoods, is likely to lead to more confidence than accuracy.

This is further complicated by the fact that probabilities are typically distributions rather than point values – often very messy distributions that we don’t have nice neat formulae for. Thus, “updating” properly would involve manipulating big and/or ugly matrices in your head. Perhaps this is possible for some people.

A common response to these arguments is that Bayesianism is “how the mind really works”, and that the brain already assigns probabilities to hypotheses and updates them similarly or identically to Bayes’ rule. There are good reasons to believe that this may be true. However, the fact that we may intuitively and subconsciously work along Bayesian lines does not mean that our attempts to consciously “do the maths” will work.

In addition, there seems to have been little empirical study of whether Individual Bayesian Updating actually outperforms other modes of thought, never mind how this varies by domain. It seems risky to put so much confidence in a relatively unproven technique.

The process of Individual Bayesian Updating can thus be critiqued on scientific grounds, but there is also another issue with it and hyper-quantitative thinking more generally: motivated reasoning. With no hard qualitative boundaries and little constraining empirical data, the combination of expected value calculations and Individual Bayesian Thinking in EA allows one to justify and/or rationalise essentially anything by generating suitable numbers.

Inflated EV estimates can be used to justify immoral or wasteful actions, and somewhat methodologically questionable subjective probability estimates translate psychological, cultural, and historical biases into truthy “rough estimates” to plug into scientific-looking graphs and base important decisions upon.

We then try to optimise our activities using the numbers we have. Attempting to fine-tune estimates of the maximally impactful strategy is a great approach when operating within fairly predictable, well-described domains, but is a fragile and risky strategy when operating in complex and uncertain domains (like existential risk) even when you have solid reasons for believing that your numbers are good – what if you’re wrong? Robustness to a wide variety of possibilities is typically the objective of professionals in such areas, not optimality; we should ask ourselves why.

Such estimates can also trigger the anchoring bias, and imply to lay readers that, for example, while unaligned artificial intelligence may not be responsible for almost twice as much existential risk as all other factors combined, the ratio is presumably somewhere in that ballpark. In fact, it is debatable whether such estimates have any validity at all, especially when not applied to simple, short-term (i.e. within a year),[30] theoretically well-defined questions. Indeed, they do not seem to be taken seriously by existential risk scholars outside of EA.[31] The apparent scientific-ness of numbers can fool us into thinking we know much more about certain problems than we actually do.

This isn’t to say that quantification is inherently bad, just that it needs to be combined with other modes of thought. When a narrow range of thought is prized above all others, blind spots are bound to emerge, especially when untested and controversial techniques like Individual Bayesian Thinking are conflated (as they sometimes are by EAs) with “transparent reasoning” and even applied “rationality” itself.

Numbers are great, but they’re not the whole story.

…and trying to weakens our collective epistemics

Summary: Overly-numerical thinking lends itself to homogeneity and hierarchy. This encourages undue deference and opaque/unaccountable power structures. EAs assume they are smarter/more rational than non-EAs, which allows us to dismiss opposing views from outsiders even when they know far more than we do. This generates more homogeneity, hierarchy, and insularity.

Under number-centric thinking, everything is operationalised as (or is assigned) some value unless there is an overwhelming need or deliberate effort to think otherwise. A given value X is either bigger or smaller than another value Y, but not qualitatively different to it; ranking X with respect to Y is the only possible type of comparison. Thus, the default conceptualisation of a given entity is a point on a (homogenous) number line. In a culture strongly focused on maximising value (that “line goes up”), one comes to assume that this model fits everything: put a number on something, then make the number bigger.

For instance, (intellectual) ability is implicitly assumed within much of EA to be a single variable[32], which is simply higher or lower for different people. Therefore, there is no need for diversity, and it feels natural to implicitly trust and defer to the assessments of prominent figures (“thought leaders”) perceived as highly intelligent. This in turn encourages one to accept opaque and unaccountable hierarchies.[33]

This assumption of cognitive hierarchy contributes to EA’s unusually low opinion of diversity and democracy, which reduces the input of diverse perspectives, which naturalises orthodox positions, which strengthens norms against diversity and democracy, and so on.

Moreover, just as prominent EAs are assumed to be authoritative, the EA community’s focus on individual epistemics leads us to think that we, with our powers of rationality and Bayesian reasoning, must be epistemically superior to non-EAs. Therefore, we can place overwhelming weight on the views of EAs and more easily dismiss the views of the outgroup, or even disregard democracy in favour of an “epistocracy” in which we are the obvious rulers.[34]

This is a generator function for hierarchy, homogeneity, and insularity. It is antithetical to the aim of a healthy epistemic community.

In fact, work on the philosophy of science in existential risk has convincingly argued that in a field with so few independent evidential feedback loops, homogeneity and “conservatism” are particularly problematic. This is because, unlike other fields where we have a good idea of the epistemic landscape, the inherently uncertain and speculative nature of Existential Risk Studies (ERS) means that we are uncertain not only of whether we have discovered an epistemic peak, but also of what the topography of the epistemic landscape even looks like. Thus, we should focus on creating the conditions for creative science, rather than the conservative science that we (i.e. the EAs within ERS) are moving towards through our extreme focus on a narrow range of disciplines and methodologies.

The EA Forum structurally discourages deep critique

Summary: The EA Forum gives more “senior” users far more votes and hides unpopular comments. This combines with cultural factors to silence critics and encourage convergence on orthodox views.

The EA Forum is interesting because it formalises some of the maladaptive trends we have mentioned. The Forum’s karma system ranks comments on the basis of user popularity, and comments below a certain threshold are hidden from view. This greatly reduces the visibility of unpopular comments, i.e. those that received a negative reaction from their readers.

Furthermore, the greater a user’s overall karma score, the more impactful their votes, to the point where some users can have 10x or even 16x the voting power of others. Thus, more established, popular, engaged users are able to give their preferred comments a significant boost, and in some cases unilaterally drop comments they dislike (e.g. from their critics) below the threshold past which a comment is hidden from view and thus not seen by most people.

Due to popularity feedback loops present on internet fora (where low-karma comments are likely to be further downvoted[35] and vice versa) as well as the related issues of trust and deference, these problems are likely to be magnified over the course of a discussion.

New users, who reasonably expect voting to be one-person-one-vote, can mistakenly believe that a comment with -5 karma from 15 votes represents 10 downvotes and 5 upvotes, when it could just as easily be a result of 13 upvotes being overruled by strong downvotes from a couple of members of the EA core network.
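The arithmetic behind this can be made concrete with a minimal sketch. The function name and the weight of 9 per strong downvote are our own illustrative assumptions (chosen only so that the totals match the example above), not the Forum's actual implementation or vote weights.

```python
def net_karma(votes):
    """Return the sum of signed vote weights (upvotes positive, downvotes negative)."""
    return sum(votes)

# Reading A: one-person-one-vote, 5 upvotes and 10 downvotes.
equal_votes = [+1] * 5 + [-1] * 10

# Reading B: 13 ordinary upvotes overruled by two strong downvotes.
# The weight of 9 per strong downvote is a hypothetical value for illustration.
weighted_votes = [+1] * 13 + [-9] * 2

for label, votes in [("equal", equal_votes), ("weighted", weighted_votes)]:
    print(label, "votes:", len(votes), "karma:", net_karma(votes))
```

Both readings show 15 votes and a karma of -5, so the displayed score alone cannot tell a reader whether a comment was broadly disliked or broadly liked and then outvoted by a few high-karma users.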

These arrangements give more orthodox individuals and groups disproportionate power over online discourse, and can make people feel less comfortable sharing critical views. It is a generator function for groupthink.

Some admirable work has been done to improve the situation, for instance the excellent step to separate karma and agreement ratings, but this is not enough to solve the problem.

The most important solution is simple: one person, one vote. Beyond that, having an option to sort by controversial, not hiding low-karma comments, having separate agreement karma for posts as well as comments, and perhaps occasionally putting a low-ranking comment nearer the top when one sorts comments by “top scoring” so critical comments don’t get buried all seem like good ideas.

Expertise & Rigour

We need to value expertise and rigour more

Summary: EA mistakes value-alignment and seniority for expertise and neglects the value of impartial peer-review. Many EA positions perceived as “solid” are derived from informal shallow-dive blogposts by prominent EAs with little to no relevant training, and clash with consensus positions in the relevant scientific communities. Expertise is appealed to unevenly to justify pre-decided positions.

There are many very enthusiastic EAs who have started studying existential risk recently, which includes several of the authors of this post. This is a massive asset to the movement. However, given the (obviously understandable) inexperience of many newcomers, we must be wary of the power of received wisdom. Under the wrong conditions, newcomers can rapidly update in line with the EA “canon”, then speak with significant confidence about fields containing much more internal disagreement and complexity than they are aware of, or even where orthodox EA positions are misaligned with consensus positions within the relevant expert communities.

More specifically, EA shows a pattern of prioritising non-peer-reviewed publications – often shallow-dive blogposts[36] – by prominent EAs with little to no relevant expertise. These are then accepted into the “canon” of highly-cited works to populate bibliographies and fellowship curricula, while we view the topic as somewhat “dealt with”; “someone is handling it”. It should also be noted that the authors of these works often do not regard them as publications that should be accepted as “canon”, but they are frequently accepted as such regardless.[37]

This is a worrying tendency, given that these works commonly do not engage with major areas of scholarship on the topics that they focus on, ignore work attempting to answer similar questions, do not consult relevant experts, and in many instances use methods and/or come to conclusions that would be considered fringe within the relevant fields. These works do not face adequate scrutiny due to the aforementioned issues with raising critique as well as (usually) an extreme lack of relevant expertise in the EA community caused by its disciplinary homogeneity.

Elsewhere, ideas like the ITN framework and differential technological development are taken as core parts of EA orthodoxy, even being used to make highly consequential funding and policy decisions. This is worrying given that both are problematic (the ITN framework, for example, neglects co-benefits, response risks, and tipping points) and neither has been subjected to significant amounts of rigorous peer review and academic discussion[38].

This is not at all to say that Google Docs and blogposts are inherently “bad”: they are very good for opening discussions and providing preliminary thoughts before in-depth studies. In fact, one thing EA does much better than academia is its lower barrier to entry to important conversations, which is facilitated by things like EA Forum posts. This is a wonderful force for scientific creativity. However, the fact remains that these posts are simply no substitute for rigorous studies subject to peer review (or genuinely equivalent processes) by domain-experts external to the EA community.

Moreover, there seem to be rather inconsistent attitudes to expertise in the EA community. When Stuart Russell argues that AI could pose an existential threat to humanity, he is held up as someone worth listening to – “He wrote the book on AI, you know!” However, if someone of comparable standing in Climatology or Earth-Systems Science, e.g. Tim Lenton or Johan Rockström, says the same for their field, they are ignored, or even pilloried.[39] Moderate statements from the IPCC are used to argue that climate change is “not an existential risk”, but given significant disagreement among experts on e.g. deep learning capabilities, it seems very unlikely that a consensus-based “Intergovernmental Panel on Artificial Intelligence” would take a stance anything like as extreme as that of most prominent EAs. This seems like a straightforward example of confirmation bias to us. To the extent that we defer to experts, we should be consistent and rigorous about how it is done.

Finally, we sometimes assume that somebody holding significant power must mean that their opinions are particularly valuable. Sam Bankman-Fried, for instance, was given a huge platform to speak both on behalf of and to the EA movement, e.g. a 3.5-hour interview on 80,000 Hours. He was frequently asked to share his beliefs on a range of complex topics, from AI safety to theories of history, even though his only distinction was (as we know now, fraudulently) making lots of money in crypto. To interview someone or to invite them to speak about a given topic implies that they are someone whose views are particularly worth listening to compared to others. We should be critical about how we make that judgement, especially given the seniority-expertise confusion we discussed above.

Neither value-alignment nor seniority is equivalent to expertise or skill, and our assessments of the quality of research works should be independent of the perceived value-alignment and name-recognition of their authors. We’re dealing with really big problems: let’s make sure we get it right.

We should probably read more widely

Summary: EA reading lists are typically narrow, homogenous, and biased, and EA has unusual social norms against reading more than a handful of specific books. Reading lists often heavily rely on EA Forum posts and shallow dives over peer-reviewed literature. EA thus remains intellectually insular, and the resulting overconfidence makes many attempts by external experts and newcomers to engage with the community exhausting and futile. This gives the false impression that orthodox positions are well-supported and/or difficult to critique.

EA reading lists are notorious for being homogenous, being populated overwhelmingly by the output of a few highly value-aligned thinkers (i.e. MacAskill, Ord, Bostrom, etc.), and paying little attention to alternative perspectives. Whilst these thinkers are highly impactful, they aren’t (and don’t claim to be) the singular authorities on the issues EAs are interested in.

This, plus our community’s general intellectual insularity, can cause new EAs to assume that little of worth has been said on some problems outside of EA. For instance, it is not uncommon for an EA that is very interested in existential risk to have never heard of many of the key papers, concepts, or authors in Existential Risk Studies, which is unsurprising when most of our reading lists ignore almost all academic papers not written by senior members of the Future of Humanity Institute.[40]

Conversely, our insularity plus the resistance to “deep critiques” causes people with expertise in neglected fields to either burn out and give up in exhaustion after a while, or avoid engaging in the first place. We are personally familiar with innumerable examples of this, from senior academics to 18-year-old undergraduates. Since they either avoid EA after brief exposure or have their contributions ignored or downvoted into the ground, we don’t even notice how much we are losing out on and how many opportunities we are missing.

Our problems concerning outside expertise and knowledge are compounded by EA’s odd cultural relationship to books: student groups are given money to bulk-order EA-friendly books and hand them out for free, but otherwise there seems to be a general feeling that reading books is rarely a good use of time in comparison to reading (EA-aligned) blogposts. This issue reached its extreme in Sam Bankman-Fried. From a now-deleted article in Sequoia:

“Oh, yeah?” says SBF. “I would never read a book.”

I’m not sure what to say. I’ve read a book a week for my entire adult life and have written three of my own.

“I’m very skeptical of books. I don’t want to say no book is ever worth reading, but I actually do believe something pretty close to that,” explains SBF. “I think, if you wrote a book, you fucked up, and it should have been a six-paragraph blog post.”

Most people don’t write blogposts, and some (most?) arguments are too complex and detailed to fit into blogposts. However, blogposts are very popular in the tech/rationalist spheres EA emerged from, and are extremely popular within EA. Thus, cultural forces once again push people away from potentially valuable outside ideas.

When those from outside EA have contributed to existential risk discussions, they have often had useful and insightful contributions. Thus, it is probably a good idea to assume that a lot of work outside of EA may have useful applications in an EA context. We are trying to deal with some of the most important issues in the world. We can't afford to assume that our little ecosystem has all the answers, because we don’t!

Luckily, there are expert opinion aggregation tools to rigorously combine the positions of many scholars. For instance, under the Delphi method, rounds of estimation and explanation are iterated in order to produce more reliable predictions. Participants are kept anonymous, and each participant does not know who made any given estimate or argument. This can counteract the negative impacts of experts’ personal or public stakes in certain ideas, and encourage participants to update their views freely. If we want to find the best available answers for our questions, we should look into the best-supported methods for generating bases of knowledge.
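As a rough illustration of the iterative, anonymous structure described above, here is a toy sketch. It is not a faithful model of a real Delphi exercise: the initial estimates are hypothetical, and the mechanical update rule (each participant moving a fixed fraction `pull` towards the anonymous group median) is an assumption standing in for the reasoned revision that real participants carry out after reading each other's explanations.

```python
from statistics import median

def delphi_round(estimates, pull=0.5):
    """One anonymous round: each participant sees only the group median,
    not who gave which estimate, and moves a fraction `pull` towards it."""
    centre = median(estimates)
    return [e + pull * (centre - e) for e in estimates]

def run_delphi(estimates, rounds=3, pull=0.5):
    """Iterate rounds and return the list of estimate lists, starting with the initial one."""
    history = [list(estimates)]
    for _ in range(rounds):
        history.append(delphi_round(history[-1], pull))
    return history

# Hypothetical initial estimates from four anonymous experts.
initial = [0.05, 0.10, 0.20, 0.60]
history = run_delphi(initial, rounds=3)

for i, est in enumerate(history):
    spread = max(est) - min(est)
    print(f"round {i}: median={median(est):.3f} spread={spread:.3f}")
```

In this toy version the spread of estimates narrows each round while the median stays put. In practice, the value of the method lies in the exchanged arguments and in anonymity reducing deference to status, neither of which a numerical sketch like this can capture.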

Other communities have been working on problems like the ones we focus on for decades: let’s hear what they have to say.

We need to stop reinventing the wheel

Summary: EA ignores highly relevant disciplines to its main area of focus, notably Disaster Risk Reduction, Futures Studies, and Science & Technology Studies, and in their place attempts to derive methodological frameworks from first principles. As a result, many orthodox EA positions would be considered decades out of date by domain-experts, and important decisions are being made using unsuitable tools.

EA is known for reinventing the wheel even within the EA community. This poses a significant problem given the stakes and urgency of problems like existential risk.

There are entire disciplines, such as Disaster Risk Reduction, Futures Studies, and Science and Technology Studies, that are profoundly relevant to existential risk reduction yet which have been almost entirely ignored by the EA community. The consequences of this are unsurprising: we have started near to the beginning of the history of each discipline and are slowly learning each of their lessons the hard way.

For instance, the approach to existential risk most prominent in EA, what Cremer and Kemp call the “Techno-Utopian Approach” (TUA), focuses on categorising individual hazards (called “risks” in the TUA),[41] attempting to estimate the likelihood that they will cause an existential catastrophe within a given timeframe, and trying to work on each risk separately by default, with a homogenous category of underlying “risk factors” given secondary importance.

However, such a hazard-centric approach was abandoned within Disaster Risk Reduction decades ago and replaced with one that places a heavy emphasis on the vulnerability of humans to potentially hazardous phenomena.[42] Indeed, differentiating between “risk” (the potential for harm), “hazards” (specific potential causes of harm) and “vulnerabilities” (aspects of humans and human systems that render them susceptible to the impacts of hazards) is one of the first points made on any disaster risk course. Reducing human vulnerability and exposure is generally a far more effective method of reducing risk posed by a wide variety of hazards, and far better accounts for “unknown unknowns” or “Black Swans”.[43]

Disaster risk scholarship is also revealing the growing importance of complex patterns of causation, the interactions between threats, and the potential for cascading failures. This area is largely ignored by EA existential risk work, and has been dismissed out of hand by prominent EAs.

As another example, Futures & Foresight scholars noted the deep limitations of numerical/probabilistic forecasting of specific trends/events in the 1960s-70s, especially with respect to long timescales as well as domains of high complexity and deep uncertainty[44], and low-probability high-impact events (i.e. characteristics of existential risk). Practitioners now combine or replace forecasts with qualitative foresight methods like scenario planning, wargaming, and Causal Layered Analysis, which explore the shape of possible futures rather than making hard-and-fast predictions. Yet, EA’s existential risk work places a massive emphasis on forecasting and pays little attention to foresight. Few EAs seem aware that “Futures Studies” as a discipline exists at all, and EA discussions of the (long-term) future often imply that little of note has been said on the topic outside of EA.[45]

These are just two brief examples.[46] There is a wealth of valuable insights and data available to us if we would only go out and read about them: this should be a cause for celebration!

But why have they been so neglected? Regrettably, it is not because EAs read these literatures and provided robust arguments against them; we simply never engaged with them in the first place. We tried to create the field of existential risk almost from first principles using the methods and assumptions that were already popular within our movement, regardless of whether they were suitable for the task.[47]

We believe there could be several disciplines or theoretical perspectives that EA, had it developed a little differently earlier on, would recognise as fellow travellers or allies. Instead, we threw ourselves wholeheartedly into the Founder Effect, and in our over-dependence on a few early canonical thinkers (i.e. MacAskill, Ord, Bostrom, Yudkowsky etc.), we have thus far lost out on all that they have to offer.

This expands to a broader question: if we were to reinvent (EA approaches to) the field of Existential Risk Studies from the ground up, how confident are we that we would settle on our current way of doing things?

The above is not to say that all views within EA ought to always reflect mainstream academic views; there are genuine shortcomings to traditional academia. However, the sometimes hostile attitude EA has to academia has hurt our ability to listen to its contributions as well as those of experts in general.

Some ideas we should probably pay more attention to

Summary: Taster menu of topics directly applicable to existential risk work that EA pays little attention to: Vulnerability & Resilience, Complex (Adaptive) Systems, Futures & Foresight, Decision-Making under Deep Uncertainty/Robust Decision-Making, Psychology & Neuroscience, Science & Technology Studies, and the Humanities & Social Sciences in general.

So what are some areas that EA should take a greater notice of? Our list is far from exhaustive and is heavily focused on global catastrophic risk, but it seems like a good starting point. Naturally we welcome both suggestions for and constructive debates on the below.

Vulnerability and Resilience

Most communities trying to reduce risk focus on reducing human vulnerability and increasing societal resilience, rather than trying to fine-tune predictions of individual hazards, especially in areas full of unknown unknowns. It is possible that reducing the likelihood and magnitude of particular hazards may sometimes be the most effective way of reducing overall risk, but this should only be concluded after detailed assessment, rather than assumed a priori. In fact, our priors should be strongly against this claim given that it would imply that hazard-centric approaches are most suitable for existential risk scenarios (i.e. the areas of deepest uncertainty and highest complexity) which is the opposite of the trend seen in disaster/catastrophe risk more generally.

The principles of resilience include maintaining redundancy, diversity, and modularity, and ensuring that excessive connectivity doesn’t allow failures to cascade through a system (“systemic risk”). This is often achieved through self-organisation (as seen everywhere from ecosystems to democratic success stories) and institutional learning. Resilience is typically enhanced by popular participation in decision-making (consistent with collective intelligence, skin in the game, and the wisdom of the crowd), and enabling subsidiarity[48] (making decisions closest to where their impacts are, and where local knowledge can be effectively utilised).[49] Such multilevel governance, taking local knowledge into account, may be particularly valuable given our aforementioned problems around insularity and the dominance of the Global North. Consulting more widely, especially in areas of real vulnerability, may improve mitigation and adaptation strategies with respect to existential risk.

It is worth noting that the FTX crash is a perfect example of the fulfilment of a systemic, cascading risk that was unforeseen by EA and which EA was highly vulnerable to.

Complex (Adaptive) Systems

The siloed approach to existential risk, where the overwhelming majority of work focuses on reducing risk from one of the Big 4 Hazards[50] in isolation, neglects emergent behaviour, feedback loops, interactions between cause areas, cascade/contagion effects, and the properties of complex adaptive systems more broadly. This is concerning because the likelihoods, magnitudes, and qualities of global catastrophic scenarios are determined by the structure of the current world-system, which is usefully conceptualised as a (staggeringly) complex adaptive system. Recent work from Len Fisher and Anders Sandberg, for example, highlights the advantages of analysing catastrophic threats as complex adaptive networks, work by Lara Mani, Asaf Tzachor, and Paul Cole has shown the issues with neglecting cascading catastrophic risk from volcanoes, and systems approaches are on the rise in Existential Risk Studies generally.[51]

There is a huge body of research on how to model and act within complex adaptive systems, including systems-dynamics, network-dynamics, and agent-based simulations, as well as qualitative approaches.

Complexity science has seen particularly extensive application in ecology and earth system science, where inherently interconnected systems vulnerable to tipping points, cascades, and collapse are common. Here, phenomena like temperature increases are best analysed as perturbations to the overall system state; one cannot simply add up the individual impacts of a predefined set of hazards.

For instance, recent work on catastrophic climate risk highlights the key role of cascading effects like societal collapses and resource conflicts. With as many as half of climate tipping points in play at 2.7°C - 3.4°C of warming and several at as low as 1.5°C, large areas of the Earth are likely to face prolonged lethal heat conditions, with innumerable knock-on effects. These could include increased interstate conflict, a far greater number of omnicidal actors, food-system strain or failure triggering societal collapses, and long-term degradation of the biosphere carrying unforeseen long-term damage e.g. through keystone species loss.[52]

Futures & Foresight

As mentioned above, foresight exercises – especially those conducted in groups – are the bread and butter of futures professionals. The emphasis is generally on qualitative or even narrative explorations of what the future might hold, with quantitative forecasting playing an important but not central role. You can’t put a probability on something if you don’t think of it in the first place,[53] and qualitative analysis often reveals that the something you were going to put a probability on isn’t a single distinct “something” at all.[54] In most cases, putting meaningful probabilities on events is impractical, and unnecessary for decision-making.

Futures Studies also includes large bodies of work on utopianism and socio-technical imaginaries, which seem vital given how much of EA’s existential risk work is premised on longtermism, a broadly utopian philosophy based on a particular image of the future.

Decision-Making under Deep Uncertainty/Robust Decision-Making

Robust decisions are designed to succeed largely independently of how the future plays out; this is achieved by preparing for things we cannot predict. When futures and risk professionals try to plan for an uncertain future, they typically do not try to perform fine-grained expected value calculations and optimise accordingly – “Predict and Act” – but construct plans that are robust to a wide variety of possible futures – “Explore and Adapt” – using simulations to explore the full parameter space and seeking agreement among stakeholders on particular decisions rather than particular models of the world. This approach vastly improves one’s performance when faced with Black Swans and unknown unknowns, and is much better at taking into account the positions of multiple stakeholders with differing value systems. These approaches are policy-proven (see the Colorado River Basin and Dutch “Room for the River” examples) and there is a wealth of literature on the subject, starting here and here.
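The contrast between the two approaches can be sketched with a small worked example. Everything here is hypothetical: the two plans, the three futures, the payoffs, and the probabilities are invented for illustration, and minimax regret is used as one simple stand-in robustness criterion rather than as a description of the full simulation-and-stakeholder process outlined above.

```python
# Hypothetical payoffs: one row per plan, one column per possible future.
payoffs = {
    "optimised": {"expected": 100, "mild_surprise": 40, "black_swan": -200},
    "robust":    {"expected": 70,  "mild_surprise": 60, "black_swan": 20},
}

# Hypothetical subjective probabilities, needed only for "Predict and Act".
beliefs = {"expected": 0.90, "mild_surprise": 0.08, "black_swan": 0.02}

def expected_value(plan):
    """Probability-weighted payoff of a plan under the assumed beliefs."""
    return sum(beliefs[s] * payoffs[plan][s] for s in beliefs)

def max_regret(plan):
    """Worst-case shortfall of a plan relative to the best plan in each future."""
    regrets = []
    for s in beliefs:
        best = max(payoffs[p][s] for p in payoffs)
        regrets.append(best - payoffs[plan][s])
    return max(regrets)

predict_and_act = max(payoffs, key=expected_value)   # maximise expected value
explore_and_adapt = min(payoffs, key=max_regret)     # minimise worst-case regret

print("Predict and Act picks:", predict_and_act)
print("Explore and Adapt picks:", explore_and_adapt)
```

With these made-up numbers, expected-value maximisation picks the "optimised" plan while the regret criterion picks the "robust" one. Note that the regret calculation never uses the probabilities at all, which is the point: the choice does not hinge on getting the probability of the rare scenario right.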

Psychology and Neuroscience

By understanding the psychological processes that drive people’s behaviour, effective altruists and existential risk researchers can better predict how people will respond to various interventions and develop strategies that will be more likely to succeed. Additionally, psychology can provide valuable insights into how people perceive and respond to risk, which can help us better understand our audience and create effective strategies to reduce risk.

Elsewhere, neuroscientific studies have revealed the value of holistic/anti-reductionist thinking and embodied cognition, as well as significant areas in which EA’s Kahneman-derived emphasis on cognitive biases and dismissal of intuitive decision-making is misplaced.

Science and Technology Studies

Science and Technology Studies (STS) investigates the creation, development, and consequences of technologies with respect to history, society, and culture. Particularly relevant concepts include the “Risk Society”, which addresses how society organises itself in response to risk, and “Normal Accidents”, which contends that failures are inherent features of complex technical systems. Elsewhere, constructivist or co-productionist approaches to technology would provide valuable counterpoints to the implicit technological determinism of a large fraction of longtermist work.

The Humanities and Social Sciences

They exist! And are valuable!

Understanding how social change occurs will naturally be key to reducing risk, both in general (e.g. how do we build towards social tipping points, or communicate effectively?) and from ourselves (what risks are associated with utopian high-modernist movements? How do socio-economic conditions affect ideas about what counts as “rational” or “scientific”?).

Understanding how people have historically failed at the task of profoundly improving the world is vital if we want to avoid replicating those failures at larger scales.

Elsewhere, philosophies like critical realism may provide different epistemological and ontological bases for studying existential risk, and Kuhn-descended discussions of scientific paradigms helpfully highlight the contingent, cultural, and sometimes limited nature of science.

Studies of subjectivity, positionality, and postcolonialism provide useful insights about, for instance, how ideas of objectivity can be defined in terms that advantage those in power.

Also, much of the existential risk we face appears to arise from social phenomena, and thus it only seems rational to use the tools developed for such things.

Using the right (grantmaking) tools for the right (grantmaking) jobs

Summary: EA grantmaking methods have many advantages when applied to “classic” cause areas like endemic disease. However, current methods have significant methodological issues, and over-optimise in complex and uncertain environments like global catastrophic risk where robustness should be the primary objective. EA grantmaking should thus be decentralised and pluralised. Different methods should be trialled and rigorously evaluated.

Funding has a central role within EA, and a large proportion of EA institutions and projects would collapse if they were unable to secure funding from EA sources.

Open Philanthropy (OpenPhil) is by far the most powerful funding organisation in EA, so its cause prioritisations and decision-making frameworks have an extremely large influence on the direction of the movement.

We applaud essentially all of the cause areas OpenPhil funds[55] and the people we know at OpenPhil are typically intelligent, altruistic, and diligent.

Regardless of this, we will be using OpenPhil as a case study to explore two major problems with EA funding, both because of OpenPhil’s centrality, and because OpenPhil’s perspectives and practices are common across much of the rest of our movement, e.g. EA Funds.

The problems are:

- Our funding frameworks sometimes use inappropriate goals and tools

- It is socially and epistemically unhealthy for a movement to cultivate such a huge concentration of (unaccountable, opaque) power

We will discuss the former here, and explore the latter in subsequent sections.

The focus will be on the cause area of global catastrophic risk/existential risk/longtermism for two reasons: it’s the area most of us know the most about, and it’s where the issues we describe are most visible & impactful.

OpenPhil’s global catastrophic risk/longtermism funding stream is dominated by two hazard-clusters – artificial intelligence and engineered pandemics[56] – with little affordance given to other aspects of the risk landscape. Even within this, AI seems to be seen as “the main issue” by a wide margin, both within OpenPhil and throughout the EA community.

This is a problematic practice, given that, for instance:

- The prioritisation relies on questionable forecasting practices, which themselves sometimes take contestable positions as assumptions and inputs

- There is significant second-order uncertainty around the relevant risk estimates

- The ITN framework has major issues, especially when applied to existential risk

    - It is extremely sensitive to how a problem is framed, and often relies on rough and/or subjective estimates of ambiguous and variable quantities

        - This poses serious issues when working under conditions of deep uncertainty, and can allow implicit assumptions and subconscious biases to pre-determine the result

        - Climate change, for example, is typically considered low-neglectedness within EA, but extreme/existential risk-related climate work is surprisingly neglected

        - What exactly makes a problem “tractable”, and how do you rigorously put a number on it?

    - It ignores co-benefits, response risks, and tipping points

    - It penalises projects that seek to challenge concentrations of power, since this appears “intractable” until social tipping points are reached[57]

    - It is extremely difficult and often impossible to meaningfully estimate the relevant quantities in complex, uncertain, changing, and low-information environments

    - It focuses on evaluating actions as they are presented, and struggles to sufficiently value exploring the potential action space and increasing future optionality

- Creativity can be limited by the need to appeal to a narrow range of grantmaker views[58]

- The current model neglects areas that do not fit [neatly] into the two main “cause areas”, and indeed it is arguable whether global catastrophic risk can be meaningfully chopped up into individual “cause areas” at all

- A large proportion (plausibly a sizeable majority, depending on where you draw the line) of catastrophic risk researchers would, and if you ask, do, reject[59]:

    - The particular prioritisations made

    - The methods used to arrive at those prioritisations, and/or

    - The very conceptualisation of individual “risks” itself

- It is the product of a small homogenous group of people with very similar views

    - This is both a scientific (cf. collective intelligence/social epistemics) and a moral issue

There are important efforts to mitigate some of these issues, e.g. cause area exploration prizes, but the central issue remains.

The core of the problem here seems to be one of objectives: optimality vs robustness. Some quick definitions (in terms of funding allocation):

- Optimality = the best possible allocation of funds

    - In EA this is usually synonymous with “the allocation with the highest possible expected value”

    - This typically has an unstated second component: “assuming that our information and our assumptions are accurate”

- Robustness = capacity of an allocation to maintain near-optimality given conditions of uncertainty and change
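To make the two definitions above concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the three causes, the two scenarios, the payoff numbers, and the choice of "worst-case fraction of the optimum" as a robustness score are all invented, and other robustness measures (e.g. minimax regret) would be equally reasonable.

```python
# Toy illustration: every name and number here is invented for the example.

# Value per unit of funding for each hypothetical cause, under two
# hypothetical scenarios about how the world actually works.
PAYOFFS = {
    "scenario_a": {"cause_1": 10.0, "cause_2": 2.0, "cause_3": 3.0},
    "scenario_b": {"cause_1": 0.0, "cause_2": 6.0, "cause_3": 4.0},
}


def value(allocation, scenario):
    """Total value of an allocation (shares summing to 1) in one scenario."""
    return sum(share * PAYOFFS[scenario][cause] for cause, share in allocation.items())


def best_possible(scenario):
    """Value of the optimal allocation if we knew the scenario for certain.

    Payoffs are linear in this toy model, so the optimum is to put
    everything into the single best cause for that scenario.
    """
    return max(PAYOFFS[scenario].values())


def worst_case_ratio(allocation):
    """Robustness score: lowest fraction of the optimum achieved in any scenario."""
    return min(value(allocation, s) / best_possible(s) for s in PAYOFFS)


# "Optimal" if we assume scenario_a is definitely true.
concentrated = {"cause_1": 1.0, "cause_2": 0.0, "cause_3": 0.0}
# Deliberately spread across causes.
diversified = {"cause_1": 0.4, "cause_2": 0.4, "cause_3": 0.2}

for name, allocation in [("concentrated", concentrated), ("diversified", diversified)]:
    per_scenario = {s: round(value(allocation, s), 2) for s in PAYOFFS}
    print(name, per_scenario, "worst-case ratio:", round(worst_case_ratio(allocation), 2))
```

In this toy setup the concentrated allocation is the best possible one if scenario A holds and achieves nothing if scenario B holds, whereas the diversified allocation gives up value under scenario A but retains more than half of the optimum in either case.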

In seeking to do the most good possible, EAs naturally seek optimality, and developed grantmaking tools to this end. We identify potential strategies, gather data, predict outcomes, and take the actions that our models tell us will work the best.[60] This works great when you’re dealing with relatively stable and predictable phenomena, for instance endemic malaria, as well as most of the other cause areas EA started out with.

However, now that much of EA’s focus has turned to global catastrophic risk, existential risk, and the long-term future, we have entered areas where optimality becomes fragility. We don’t want most of our eggs in one or two of the most speculative baskets, especially when those eggs contain billions of people. We should also probably adjust for the fact that we may over-rate the importance of things like AI for reasons discussed in other sections.

Given the fragility of optimality, robustness is extremely important. Existential risk is a domain of high complexity and deep uncertainty, dealing with poorly-defined low-probability high-impact phenomena, sometimes covering extremely long timescales, with a huge amount of disagreement among both experts and stakeholders along theoretical, empirical, and normative lines. Ask any risk analyst, disaster researcher, foresight practitioner, or policy strategist: this is not where you optimise, this is where you maintain epistemic humility and cover all your bases. Innumerable people have learned this the hard way so we don’t have to.

Thus, we argue that, even if you strongly agree with the current prioritisations / methods, it is still rational for you to support a more pluralist and robustness-focused approach given the uncertainty, expert disagreement, and risk management best-practices involved.

As well as a general diversification of the grantmaking community and a deliberate effort to value critical and community-external projects, a larger number and variety of funding sources and methods would likely be a good idea, especially if this were used as an opportunity to evaluate a range of different options.

There have been laudable efforts to decentralise grantmaking, e.g. the FTX Future Fund’s re-granting scheme. However, regrantors were picked by the central organisation (and tended to subscribe to all or most of EA orthodoxy), and even then grants still required approval from the central organisation. An admirable step in the right direction, to be sure, but in our view there is room to take several more.

One interesting route for us to explore might be lottery funding, where projects are chosen at random after an initial pass to remove bad-faith and otherwise obviously low-quality proposals. This solves a surprisingly large number of problems in grantmaking and science funding (eliminating bias and scientific conservatism, for example), and has been supported by multiple philosophers of science in existential risk.
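The basic mechanism described above (screen first, then draw at random) can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions rather than a description of any existing scheme: the function name `lottery_fund`, the `passes_screen` callback, and the example proposals are all hypothetical, and real lottery schemes may differ (for instance by weighting or tiering proposals).

```python
import random


def lottery_fund(proposals, passes_screen, n_grants, seed=None):
    """Pick grants at random from the proposals that survive an initial screen.

    proposals:     list of proposals (any objects)
    passes_screen: function returning True for proposals that are neither
                   bad-faith nor obviously low-quality (hypothetical check)
    n_grants:      number of grants available
    seed:          optional seed so that a draw can be reproduced and audited
    """
    rng = random.Random(seed)
    eligible = [p for p in proposals if passes_screen(p)]
    # Never try to draw more grants than there are eligible proposals.
    k = min(n_grants, len(eligible))
    # Sampling without replacement: each eligible proposal is equally likely.
    return rng.sample(eligible, k)


# Hypothetical usage: proposals are dicts with a reviewer-set "screened_ok" flag.
example_proposals = [
    {"title": "Proposal A", "screened_ok": True},
    {"title": "Proposal B", "screened_ok": False},
    {"title": "Proposal C", "screened_ok": True},
    {"title": "Proposal D", "screened_ok": True},
]
winners = lottery_fund(example_proposals, lambda p: p["screened_ok"], n_grants=2, seed=2023)
print([p["title"] for p in winners])
```

Note that the only point at which grantmaker judgement enters is the screen, so how permissive that screen is largely determines how much bias the lottery can actually remove.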

OpenPhil’s and wider EA’s funding practices have many advantages: for instance, they require far less admin than conventional scientific funding, which accelerates progress and maximises the time researchers spend researching rather than applying for the opportunity to do so. This is great, but there is room for improvement, largely boiling down to our aforementioned problems with intellectual openness and wheel-reinventing, where we instinctively use the (grantmaking) tools that we have lying around when we enter a field rather than taking a step back and asking what the best way forward is in our new environment.

On another note, there does not seem to be any good information on whether grantmakers are effective or improving at forecasting the success of projects. Given that this is an extremely difficult and impactful task, it seems reasonable that there should be a significant level of oversight and transparency.
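As an illustration of what such tracking could look like, one standard tool is the Brier score: the mean squared difference between forecast probabilities and what actually happened (lower is better, and always guessing 50% scores 0.25). The sketch below assumes, hypothetically, that grantmakers recorded a probability of success for each grant and that each grant was later judged a success (1) or failure (0); the example numbers are invented.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and binary outcomes.

    forecasts: probabilities in [0, 1] that each project would succeed
    outcomes:  1 if the project was later judged a success, else 0
    """
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equal-length, non-empty forecasts and outcomes")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)


# Invented example: three grants with recorded forecasts and judged outcomes.
recorded_forecasts = [0.9, 0.8, 0.3]
judged_outcomes = [1, 0, 0]

print("grantmaker:", round(brier_score(recorded_forecasts, judged_outcomes), 3))
print("always 50%:", brier_score([0.5, 0.5, 0.5], judged_outcomes))
```

Comparing scores like these across years, or against a naive baseline, would be one simple way of publishing evidence on whether grantmakers’ forecasts are informative and improving.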

Intermission

The councillor comes with his battered old suit

And his head all filled with plans

Says "It's not for myself, nor the fame or wealth

But to help my fellow man."

Fist in the air and the first to stand

When the Internationale plays

Says "We'll break down the walls of the old Town Hall,

Fight all the life-long day!"

Ten years later, where is he now?

He's ditched all the old ideas

Milked all the life from the old cash cow

Now he's got a fine career

Now he's got a fine career.

A Fine Career – Chumbawamba

Governance & Power

We align suspiciously well with the interests of tech billionaires (and ourselves)

Summary: [61] EA is largely reliant on the goodwill of a small number of tech billionaires, and as a result fails to question the practice of elite philanthropy as well as the ways by which these billionaires acquired their wealth. Our cause prioritisations align suspiciously well with the interests and desires of both tech billionaires and ourselves. We are not above motivated reasoning.

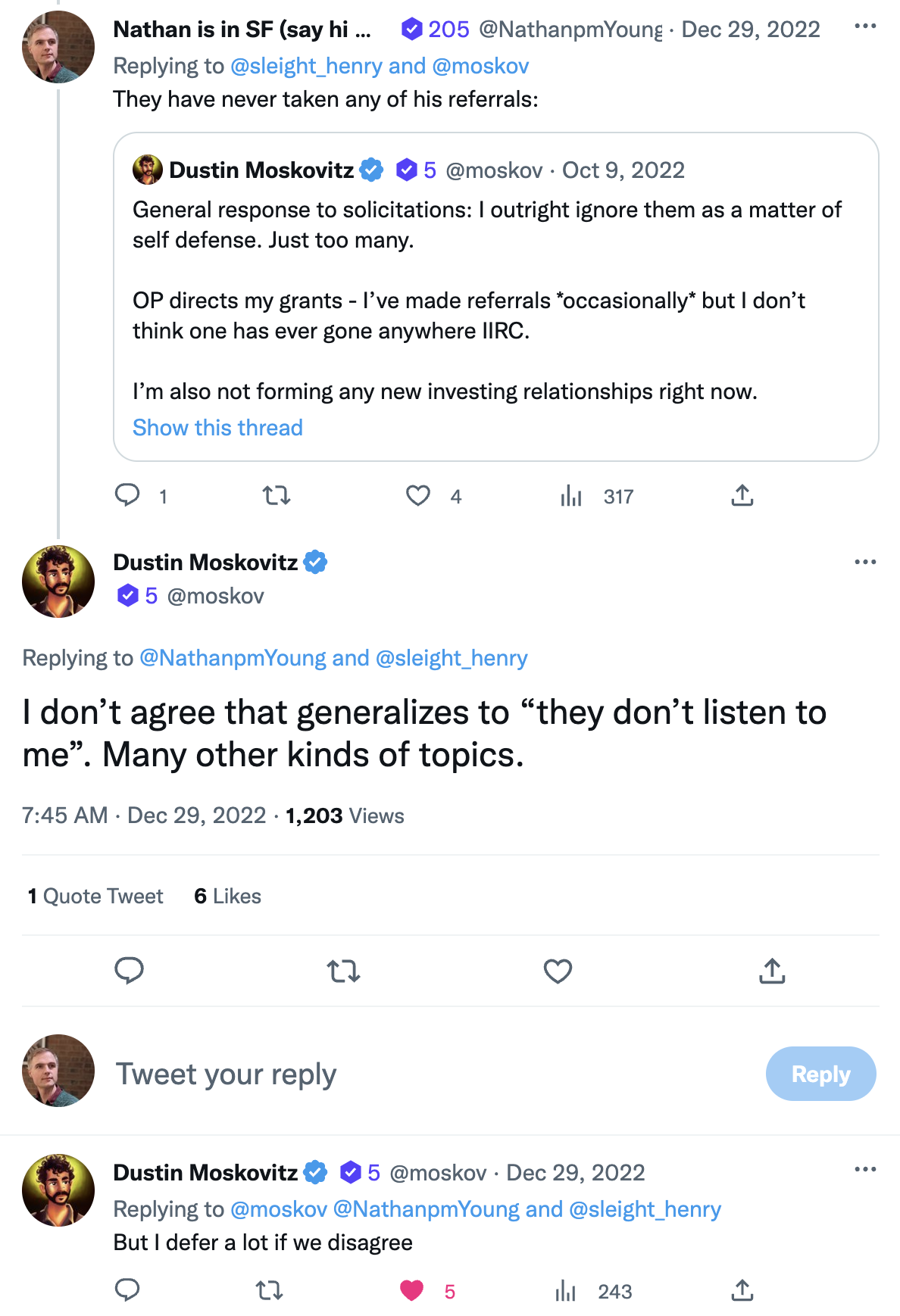

EA is reliant on funding, and the vast majority of funds come from a handful of tech billionaires: Dustin Moskovitz and Cari Tuna got most of their wealth through Facebook (now Meta) and Asana, Vitalik Buterin has Ethereum, and Sam Bankman-Fried had FTX.

Elite philanthropy has faced numerous criticisms, from how it boosts and solidifies the economic and political power of the ultra-wealthy to the ways in which it undermines democracy and academic freedom. This issue has been studied and discussed at extreme length, so we will not expand further on the basic point, but recent events strongly suggest that EA should re-examine its relationship to the practice and seriously consider other sources of funding.

Furthermore, becoming a billionaire often involves a lot of unethical or risk-seeking behaviour, and according to some ethical codes the very act of being a billionaire is immoral in itself. The sources of EA funds in particular can sometimes be morally questionable. Cryptocurrency is of debatable social value, is full of money laundering, fraud and scams, and has been created and promoted as a deliberate political project to dodge taxes, concentrate power in the hands of the ultra-wealthy, and financialise an ever-growing proportion of human life.[62] As for Facebook, there is unfortunately an abundance of evidence that its impact on the world is likely to be net-negative.

The Effective Altruism movement is not above conflicts of interest. Relying on a small number of ultra-wealthy members of the tech sector incentivises us to accept or even promote their political, philosophical, and cultural beliefs, at the expense of the rigorous critical examination EA prides itself on. This may undermine even the most virtuous movement over the long term. Indeed, EA institutions and leaders rarely if ever interrogate the processes and structures that donors rely upon (digital surveillance, “Web 3.0”, neoliberal capitalism, and so on). The question of whether, for instance, making large quantities of money in the tech industry should give somebody the right to exercise significant control over the future of humanity is answered with an implicit but resounding “Yes.”

Our models sometimes even assume that (an American corporation) creating an “aligned” AGI, the fulfilment of Silicon Valley’s (not to mention much of the Pentagon’s…) collective dreams, will solve all other major problems.[63]

Indeed, it is possible that certain members of the EA leadership were aware of Sam Bankman-Fried’s unethical practices some time ago and were seemingly unable or unwilling to do anything about it. Additionally, Bankman-Fried is not the only morally questionable billionaire to have been courted by EA (e.g. Ben Delo).

It is worth noting that the areas EA focuses on most intensely (the long-term future and existential risk, and especially AI risk within that) align remarkably well with the sorts of things tech billionaires are most concerned about: longtermism is the closest thing to “doing sci-fi in real life”[64], existential catastrophes are one of the few ways in which wealthy people[65] could come to harm, and AI is the threat most interesting to people who made their fortunes in computing.

Fears about technological stagnation and slowed population growth receive pride of place in key EA texts, strikingly paralleling elite worries about increased labour costs.

Most of the proposed interventions also reflect the interests of Silicon Valley. Differential technological development, energy innovation, and high-tech solutions to pandemics are all favoured a priori. There is little to no support for bans on AGI projects, nor moratoria on Lethal Autonomous Weapons Systems, facial recognition, or new fossil fuel infrastructure. Similarly, priority concerns for the long-term future focus on economic elite interest areas like technological progress and GDP growth over other issues that are at least as critical but would undermine the power and/or status of wealthy philanthropists, like workplace democratisation or wealth redistribution. Again, it is not that any of these positions are inherently wrong because they align with elite interests, just that this is a bias we really need to be aware of.

Contrast the AI situation with climate change, routinely dismissed in EA, where the problems are messy, often mundane, predominantly political, and put the very concept of economic growth under debate, and where the greatest risk is posed to poor people from the Global South. Compare also with issues like global poverty, which very few people within EA are directly affected by (and which the funders, by definition, are not!) and which has come to be deemed “lower impact” within some of EA.[66]

Interestingly, a huge proportion of EA’s intellectual infrastructure can be traced back to the academic climate of the USA during the Cold War, where left-wing thinkers were eradicated from (analytic) philosophy by McCarthyist purges, Robert McNamara pushed for “rationalisation” and quantification throughout the US establishment, and the RAND Corporation developed concepts like Rational Choice Theory, Operations Research, and Game Theory. Indeed, the current President and CEO of RAND, Jason Matheny, is a CSET founder and former FHI researcher. Aside from the Silicon Valley influences (from which we get the blogposts, Californian Ideology, and most of the technofetishism), EA’s intellectual heritage is largely one of philosophy and economics intentionally stripped of their ability to challenge the status quo. As ever, that’s not to say that things like analytic philosophy or Game Theory are inherently evil or anything – they’re really quite good for some things – just that they are the tools we have for specific historical and political reasons, they are not the only ones available, and we should be critical of how and where we employ them.

The relative prioritisations we describe also fit rather well with the disciplinary and cultural backgrounds of us EAs. It seems that our subjectively-generated quantifications just so happen to have led us to conclude that the best way to improve (or even save) the world is to pay analytic philosophers and computer scientists like us large sums of money to work on the problems we read about in our favourite sci-fi novels.