Ben_West

Bio

Non-EA interests include chess and TikTok (@benthamite). We are probably hiring: https://www.centreforeffectivealtruism.org/careers

Posts 75

Comments 814

Topic contributions 4

Thanks for sharing this! I was originally confused by the complaint because I didn't understand why a nonprofit making unprofitable investments would be cause for concern. It sounds like your speculation is that the material about these being bad investments is included just to prevent the life sciences companies from claiming that the equity Latona received was of reasonably equivalent value, and that they therefore don't have to give the money back?

My impression is that, while corporate spinoffs are common, mergers are also common, and it seems fairly normal for investors to believe that corporations substantially larger than EA are more valuable as a single entity than as independent pieces, giving some evidence for superlinear returns.

But my guess is that this is extremely contingent on specific facts about how the corporation is structured, and it's unclear to me whether EA has this kind of structure. I too would be interested in research on when you can expect increasing versus decreasing marginal returns.

Thanks Michael! This is a great comment. (And I fixed the link, thanks for noting that.)

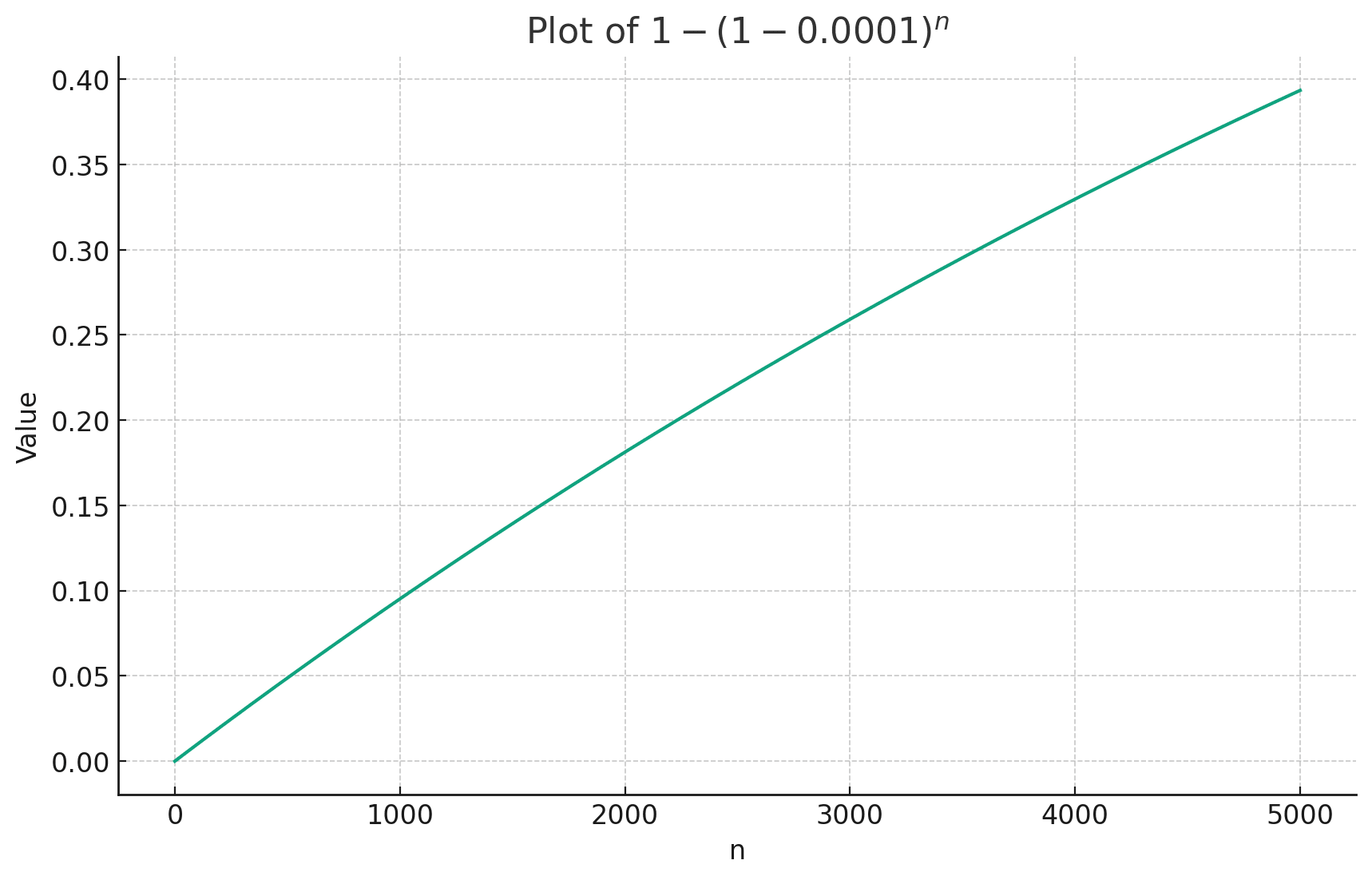

My anecdotal experience with hiring is that you are right asymptotically, but not practically. E.g. if you want to hire for some skill that only one in 10,000 people have, you get approximately linear returns to growth for the size of community that EA is considering:

And you can get to very low probabilities easily: most jobs are looking for candidates with a combination of: a somewhat rare skill, willingness to work in an unusual cause area, willingness to work in a specific geographic location, etc. and multiplying these all together gets small quickly.
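A minimal sketch of the arithmetic behind this, assuming each person in the community independently meets the requirement with probability p (an assumption made only for illustration). The function names and the three filter probabilities are hypothetical, not figures from any real hiring round.

```python
import math


def p_at_least_one(p: float, n: int) -> float:
    """Chance that a community of n people contains at least one match,
    assuming each person independently matches with probability p."""
    return 1.0 - (1.0 - p) ** n


def combined_probability(factors: list[float]) -> float:
    """Probability of passing every filter, assuming the filters are independent."""
    return math.prod(factors)


if __name__ == "__main__":
    p = 1e-4  # "one in 10,000", as in the comment above
    for n in (1_000, 2_000, 5_000, 10_000, 100_000):
        print(f"n={n:>7}: P(at least one match) = {p_at_least_one(p, n):.4f}")

    # Hypothetical filters: rare skill, cause-area fit, location fit.
    filters = [0.05, 0.10, 0.20]
    print("combined match probability:", combined_probability(filters))
```

While n times p stays well below 1, doubling the community size roughly doubles the chance of finding a match; the curve only flattens once n is several times larger than 1/p.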

It does feel intuitively right that there are diminishing returns to scale here though.

Thanks for writing this! I agree that we should be doing more social media; as Shakeel mentioned, this is a major part of our comms strategy.

In case you haven't seen it: a few of us did an experiment on TikTok last year. I think it was nice, but it seemed unlikely to be the best use of my time, and I haven't pursued it much.

Glad you like the dashboard! Credit for that is due to @Angelina Li.

I think we are unlikely to share names of the people we talk to (mostly because it would be tedious to create a list of everyone and get approval from them, and presumably some of them also wouldn’t want to be named) but part of the motivation behind EA Strategy Fortnight was to discuss ideas about the future of EA (see my post Third Wave Effective Altruism), which heavily overlaps with the questions you asked. This includes:

- Rob (CEA’s Head of Groups) and Jessica (Uni Groups Team Lead) wrote about some trade-offs between EA and AI community building.

- Multiple non-CEA staff also discussed this, e.g. Kuhanj and Ardenlk

- Regarding “negative impacts to non-AI causes”: part of why I was excited about the “EA’s success no one cares about” post (apart from trolling Shakeel) was to get more discussion about how animal welfare has benefited from being part of EA. Unfortunately, the other animal welfare posts that were solicited for the Fortnight have had delays in publishing (as have some GH&WB posts), but hopefully they will eventually be published.

- My guess is that a comment from you explaining why it would be useful to you for these posts to be public might increase the likelihood that they eventually get published, so I would encourage you to share that, if you do indeed think it would be useful.

- I also personally feel unclear about how useful it is to share our thinking in public; if you had concrete stories of how us doing this in the past made your work more impactful I would be interested to hear them. The two retrospectives make me feel like Strategy Fortnight was nice, but I don't have concrete stories of how it made the world better.

- There were also posts about effective giving, AI welfare, and other potential priorities for EA.

On comms and brand, Shakeel (CEA’s Head of Comms) is out at the moment, but I will prompt him to respond when he returns.

On the list of focus areas: thanks for flagging this! We’ve added a note with a link to this post stating that we are exploring more AI things.

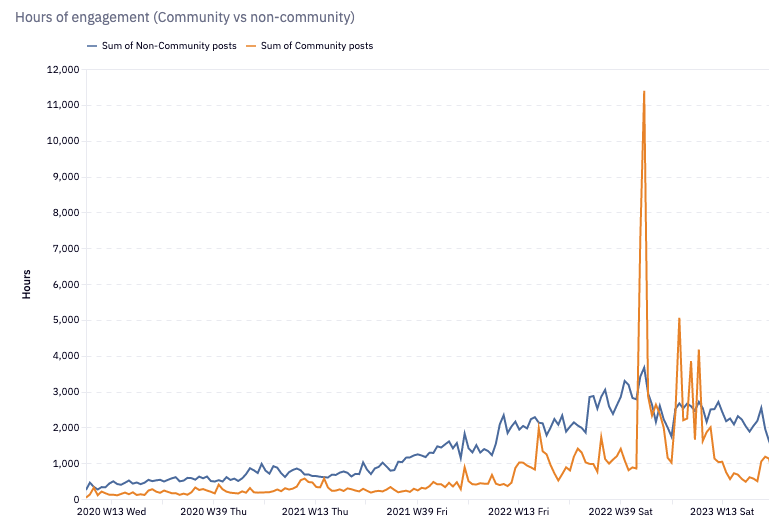

Thanks for the suggestion! This comment from Lizka contains substantial discussion of community versus noncommunity; for convenience, I will reproduce one of the major graphs here:

You can see that there was a major spike around the FTX collapse, and another around the TIME and Bostrom articles, which might make the "average" unintuitive, since it's heavily determined by tail events.
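As a rough illustration of why a spike-driven average can mislead, here is a small sketch comparing the mean and the median of a series with two outlier weeks. The numbers are made up for illustration and are not taken from the graph; the variable names are hypothetical.

```python
from statistics import mean, median

# Hypothetical weekly values: 20 ordinary weeks plus two spike weeks.
# These are NOT the real figures from the graph above.
ordinary_weeks = [0.10] * 20
spike_weeks = [0.60, 0.55]
weekly_values = ordinary_weeks + spike_weeks

series_mean = mean(weekly_values)      # pulled upward by the two spikes
series_median = median(weekly_values)  # reflects a typical week

if __name__ == "__main__":
    print(f"mean   = {series_mean:.3f}")
    print(f"median = {series_median:.3f}")
```

With data shaped like this, the mean sits well above what any ordinary week looks like, while the median stays at the typical value.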

Thanks Jim! I think this points in a useful direction, but I'm not sure I would describe this argument as "debunked". Instead, I think I would say that the following claim from you is the crux:

As an example of why this claim is not obviously true: Quicksort is provably close to optimal among comparison-based ways to sort a list (it uses O(n log n) comparisons on average, which matches the lower bound up to a constant factor), and I'm fairly confident it doesn't involve suffering. If you told me that you had an algorithm which suffered while sorting a list, I would feel fairly confident that this algorithm would be less efficient than quicksort (i.e., suffering is anti-correlated with efficiency).

Will this anti-correlation generalize to more complex algorithms? I don't really know. But I would be surprised if you were >90% confident that it would not.