Pablo

Bio

Every post, comment, or Wiki edit I authored is hereby licensed under a Creative Commons Attribution 4.0 International License.

Posts 41

Comments 1138

Topic contributions 4110

A recurrent disappointment with EA Forum comments related to the karma system is how often they assume that a particular explanation of a given comment's karma score is correct, without even considering alternative explanations. One doesn't have to be very creative or contrarian to think of plausible reasons users may have disagree-voted with the statement "CEA is widely perceived as grossly ineffective whereas AMF is perceived as highly effective", other than that "CEA staff have so much karma that they can tank any comment with a single downvote".

I'm puzzled by this reply. You acknowledge that you misremembered, but in your edited comment you continue to state that "here's a whole chapter in superintelligence on human intelligence enhancement via genetic selection".

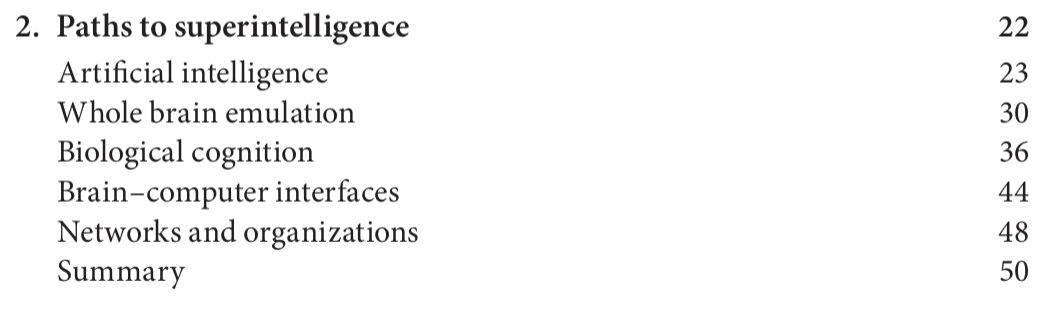

The chapter in question is called "Paths to superintelligence" and is divided into five sections, followed by a summary. The sections are called "Artificial intelligence", "Whole brain emulation", "Biological cognition", "Brain–computer interfaces" and "Networks and organizations". It is evident that the chapter as a whole is not devoted to human intelligence enhancement via genetic selection.

Separately, you make this statement immediately after claiming that "there is a lot of support for what could be described as 'liberal eugenics' among these communities", and you offer it as evidence of such support. Yet, as should again be clear even from a casual inspection of the abstract with which the chapter begins, Bostrom's aim here is to discuss how superintelligence might be developed, not to argue that we should develop superintelligence, let alone that we should develop it via genetic selection. (Insofar as Bostrom has expressed views, later in the book and elsewhere in his writings, about the desirability rather than the probability of cognitive enhancement in general and genetic enhancement specifically, those views are nuanced and complex.)

Note that this is a different Peter Singer. Here is the entry for the relevant Peter Singer. According to it, Singer had 77 co-authors.

You are right that overall we focus more on negatives than positives. We believe this is justified since organizations are already incentivized to make the positive case for themselves, and routinely do so in public announcements as well as private recruitment and funding pitches.

As a potential consumer of your critiques/evaluations, I would prefer that you distribute your focus exactly to the degree that you believe it to be warranted in light of your independent impression of the org in question, rather than try to rectify a possible imbalance by deliberately erring in the opposite direction.

As Aaron notes, the "Petrov Day" tradition started with a post by Yudkowsky. It is indeed somewhat strange that Petrov was singled out like this, but I guess the thought was that we want to designate one day of the year as the "do not destroy the world day", and "Petrov Day" was as good a name for it as any.

Note that this doesn't seem representative of the degree of appreciation for Petrov vs. Arkhipov within the EA community. For example, the Future of Humanity Institute has both a Petrov Room and an Arkhipov Room (a fact that causes many people to mix them up), and the Future of Life Award was given both to Arkhipov (in 2017) and to Petrov (in 2018).

I think Arkhipov's actions were arguably even more consequential than Petrov's, because it was truly by chance that he was present on that particular nuclear submarine rather than on any of the other subs in the flotilla. This fact justifies the statement that, if history had repeated itself, the decision to launch a nuclear torpedo would likely not have been vetoed. The counterfactual for Petrov is not so clear.

Having listened to several episodes, I can strongly recommend this podcast. One of the very best.