WilliamKiely

Bio


You can send me a message anonymously here: https://www.admonymous.co/will

Posts 14

Comments 413

Thinking out loud about credences and PDFs for credences (is there a name for these?):

I don't think "highly confident people bear the burden of proof" is necessarily the correct way of stating my thought. What I'm trying to point at is this: when two people disagree on X (e.g. 0.3% vs. 30% credences), there's an asymmetry. The more confident person (0.3% in this case) is necessarily highly confident that the person they disagree with is wrong, whereas the less confident person (the 30% credence person) is not necessarily highly confident that the person they disagree with is wrong. So maybe this is another way of saying that "high confidence requires strong evidence", but I think I'm saying more than that.

I'm observing that the high-confidence person needs an account of why the low-confidence person is wrong, whereas the opposite isn't true.

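Some math to help communicate my thoughts: the 0.3% credence person is necessarily at least 99% confident that a 30% credence is too high. If their 0.3% is the average over their uncertainty about what the best credence is, they can put at most 1% weight (0.3% / 30%) on the best credence being 30% or higher. By contrast, a 30% credence is compatible with thinking there's, say, a 50% chance that a 0.3% credence is the best credence one could have with the information available.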

So a person who is 30% confident that X is true may or may not think that a person with a 0.3% credence in X is reasonable in their belief. They may think that person is likely correct, or they may think that person is very likely wrong. Both possibilities are coherent.

Whereas the person whose credence in X is 0.3% necessarily believes that the person whose credence is 30% is at least 99% likely to be wrong.
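A minimal Python sketch of the 99% figure, under one assumption the post doesn't state explicitly: that a point credence is the mean of one's distribution over "the best credence one could have with the information available". Under that assumption, Markov's inequality (for a nonnegative quantity Q, P(Q ≥ t) ≤ E[Q] / t) caps the weight the 0.3% person can put on 30% being right. The 30% person, by contrast, can coherently split their weight evenly between 0.3% and a higher value. The function name and the two-point distribution below are hypothetical illustrations.

```python
import math


def markov_upper_bound(point_credence: float, threshold: float) -> float:
    """Upper bound on P(Q >= threshold) for a nonnegative random credence Q
    whose mean equals point_credence (Markov's inequality), capped at 1."""
    return min(1.0, point_credence / threshold)


# ASSUMPTION: a point credence is the mean of one's distribution over
# "the best credence one could have with the information available".
bound = markov_upper_bound(0.003, 0.30)
print(f"0.3% person: P(best credence >= 30%) <= {bound:.2%}")

# The 30% person can coherently put 50% weight on 0.3% being the best credence.
# Hypothetical two-point distribution: best credence -> weight.
dist = {0.003: 0.5, 0.597: 0.5}
mean = sum(q * w for q, w in dist.items())
print(f"30% person: mean credence {mean:.3f}, weight on 0.3% being right {dist[0.003]:.0%}")
```

The asymmetry falls out of the bound: a low mean forces low weight on high values, but a high mean places no comparable limit on weight near zero.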

Maybe another good way to think about this:

If my point-estimate is X%, I can elaborate on it by giving a PDF in which I assign a weight to every possible estimate/forecast from 0-100%, with the PDF's mean being X%.

E.g. "I'm not sure if the odds of winning this poker hand are 45% or 55% or somewhere in between; my point-credence is about 50% but I think the true odds may be a few percentage points different, though I'm quite confident that the odds are not <30% or >70%. (We could draw a PDF)."

Or "If I researched this for an hour, I think I'd probably conclude that it's very likely false, or at least <1%. But on the surface it seems plausible that I might instead discover that it's probably true, though it'd be hard to verify for sure. So my point-credence is ~15%, but after an hour of research I'd expect (>80%) my credence to be either <3% or >50%."
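A rough Python sketch of the two examples as explicit distributions. Everything numeric here is a hypothetical choice, not something from the post: a Beta(50, 50) PDF stands in for the poker hand (mean 50%, standard deviation about 5 percentage points), and a three-point distribution stands in for "where my credence might land after an hour of research". The research distribution is built so its mean equals the current ~15% point-credence, following the conservation-of-expected-evidence idea that an ideal Bayesian's expected future credence equals their current credence.

```python
import math

# Hypothetical poker PDF: Beta(A, B) with illustrative shape parameters.
A, B = 50, 50


def beta_pdf(x: float, a: float, b: float) -> float:
    """Density of the Beta(a, b) distribution at 0 < x < 1."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(x) + (b - 1) * math.log(1 - x))


def integrate(f, lo: float, hi: float, n: int = 10_000) -> float:
    """Midpoint-rule integral of f over [lo, hi] (never evaluates the endpoints)."""
    h = (hi - lo) / n
    return sum(f(lo + (i + 0.5) * h) for i in range(n)) * h


poker_mean = integrate(lambda x: x * beta_pdf(x, A, B), 0.0, 1.0)
poker_p_45_to_55 = integrate(lambda x: beta_pdf(x, A, B), 0.45, 0.55)
poker_p_outside_30_to_70 = 1.0 - integrate(lambda x: beta_pdf(x, A, B), 0.30, 0.70)

# Hypothetical research example: post-research credence -> probability of landing there.
after_research = {
    0.005: 0.65,  # "very likely false, or at least <1%"
    0.245: 0.15,  # still unsure after the hour
    0.55: 0.20,   # "probably true"
}
expected_after = sum(q * p for q, p in after_research.items())
p_extreme = sum(p for q, p in after_research.items() if q < 0.03 or q > 0.50)

print(f"poker: mean {poker_mean:.1%}, P(45-55%) {poker_p_45_to_55:.0%}, "
      f"P(<30% or >70%) {poker_p_outside_30_to_70:.4%}")
print(f"research: expected credence {expected_after:.1%}, P(<3% or >50%) {p_extreme:.0%}")
```

In both cases the single point-credence is just the mean; the rest of the distribution carries the extra information about how one's credence might move.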

Is there a name for the uncertainty (PDF) about one's credence?

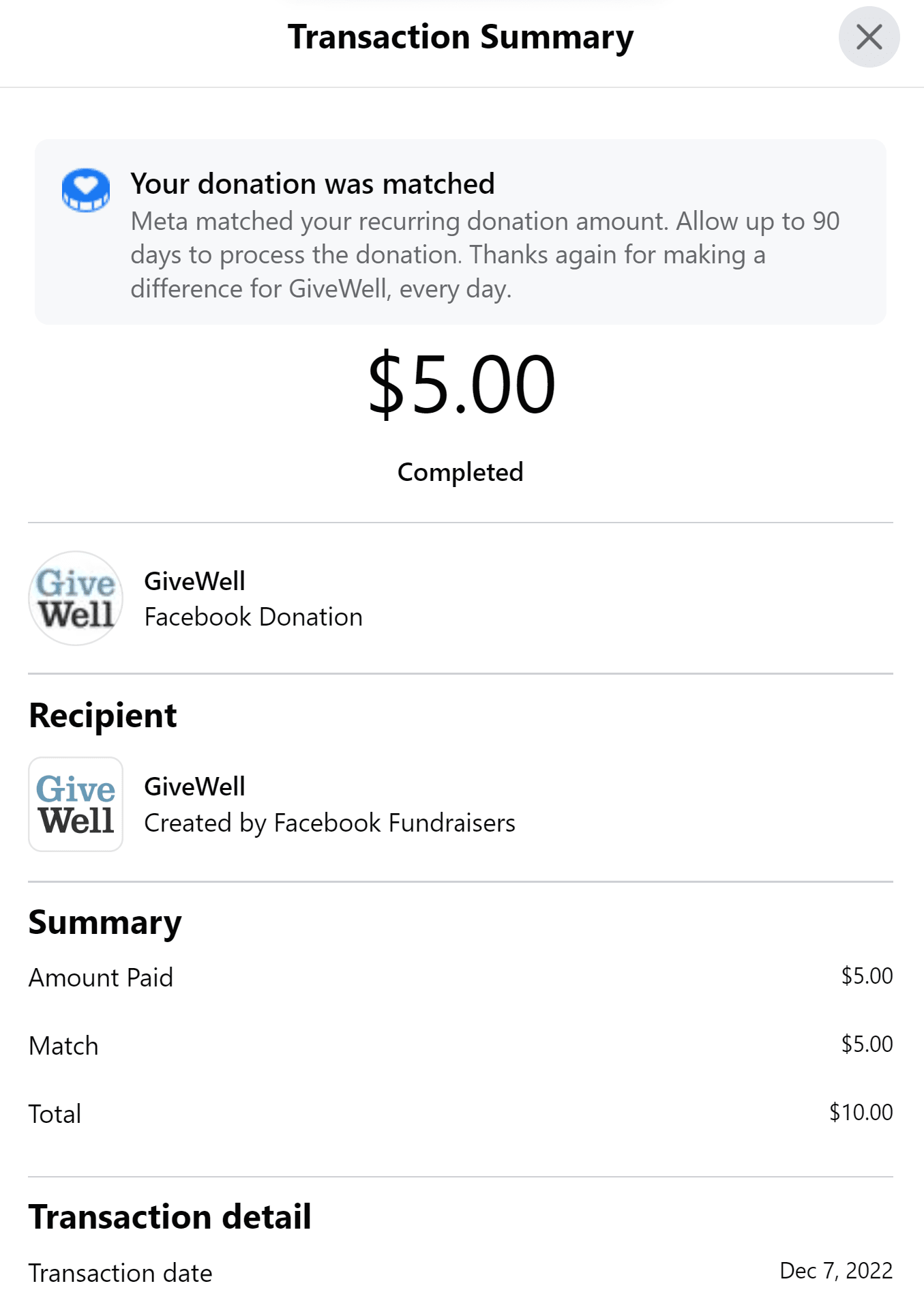

I'm not sure. I think you're the first person I've heard say they got matched. When I asked in the EA Facebook group for this on December 15th whether anyone had been matched, all three people who responded (including me) reported that they had been double-charged for their December 15th donations. Initially we assumed the second receipt was a match, but then we saw that Facebook had actually just charged us twice. I haven't heard anything else about the match since then and just assumed I didn't get matched.

> I felt a [...] profound sense of sadness at the thought of 100,000 chickens essentially being a rounding error compared to the overall size of the factory farming industry.

Yes, about 9 billion chickens are killed each year in the US alone, or about 1 million per hour. So 100,000 chickens are killed every 6 minutes in the US (and every 45 seconds globally). Still, it's a huge tragedy.
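The rates above can be checked with a few lines of Python. The US figure is the ~9 billion per year stated in the comment; the global figure of roughly 70 billion chickens per year is an assumption added here (it is about what the "every 45 seconds globally" rate implies), not a number from the comment.

```python
US_CHICKENS_PER_YEAR = 9e9        # "about 9 billion" per year in the US (from the comment)
GLOBAL_CHICKENS_PER_YEAR = 70e9   # ASSUMPTION: roughly 70 billion per year worldwide
HOURS_PER_YEAR = 365 * 24

us_per_hour = US_CHICKENS_PER_YEAR / HOURS_PER_YEAR
# Minutes it takes for 100,000 chickens to be killed in the US.
us_minutes_per_100k = 100_000 / (us_per_hour / 60)
# Seconds it takes for 100,000 chickens to be killed worldwide.
global_seconds_per_100k = 100_000 / (GLOBAL_CHICKENS_PER_YEAR / (HOURS_PER_YEAR * 3600))

print(f"US: {us_per_hour:,.0f} per hour; 100,000 every {us_minutes_per_100k:.1f} minutes")
print(f"Global: 100,000 every {global_seconds_per_100k:.0f} seconds")
```

This gives roughly 1 million per hour in the US, 100,000 a bit under every 6 minutes, and about 45 seconds globally under the assumed global total.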

For me, having a concrete picture of the mechanism by which AI could actually kill everyone never felt necessary for viscerally believing that AI could kill everyone.

I think this is because ever since I was a kid, long before hearing about AI risk or EA, the long-term future that seemed most intuitive to me was a future without humans (or post-humans).

The idea that humanity would go on to live forever, colonize the galaxy and the universe, and live a sci-fi future has always seemed too fantastical to me to assume as the default scenario. Sure, it's conceivable (I've never assumed it's extremely unlikely), but I have always assumed that in the median scenario humanity somehow goes extinct before ever getting to make civilizations in hundreds of billions of star systems. What would make us go extinct? I don't know. But to think otherwise would be to think that all of us alive today are super special, by being among the first 0.000...001% (with a significant number of 0s) of humans ever to live. That has always felt like an extraordinary thing to just assume, so my intuitive, gut, visceral belief has always been that we'll probably go extinct somehow before achieving all that.

So when I learned about AI risk, I intellectually thought, "Ah, okay, I can see how something smarter than us that doesn't share our goals could cause our extinction; so maybe AI is the thing that will prevent us from making civilizations on hundreds of billions of stars."

I don't know when I first formulated a credence that AI would cause doom, but I'm pretty sure I've viscerally felt that AI could cause human extinction ever since I first heard an argument that it could.

(The first time I heard an argument for AI risk was probably in 2015, when I read HPMOR and Superintelligence. I don't recall knowing much at all about EY's views on AI until Jan-Mar 2015, when I read /r/HPMOR and people mentioned AI. I just looked it up: from my Goodreads I see that I finished reading HPMOR on March 4th, 2015, 10 days before HPMOR finished coming out. I read Superintelligence in a span of a couple of weeks and no doubt learned about it via a recommendation that stemmed from my reading of HPMOR. So Superintelligence was my first exposure to AI risk arguments. I didn't read a lot of stuff online at that time; e.g. I didn't read anything on LW that I can recall.)