Bob Jacobs 🔸

Bio

Participation 3

I co-started Effectief Geven (Belgian effective giving org), am a volunteer researcher at SatisfIA (AI-safety org) and a volunteer writer at GAIA (Animal welfare org).

If you're interested in philosophy and mechanism design, consider checking out my blog.

I'm a student of moral science at the University of Ghent. I also started and ran EA Ghent from 2020 to 2024, at which point I quit in protest over the Manifest scandal (and the reactionary trend it highlighted). I no longer consider myself an EA (but I'm still part of GWWC and EAA, and if the rationalists split off I'll definitely join again).

Possible conflicts of interest: I have never received money from EA, but could plausibly be biased in favor of the organizations I volunteer for.

How others can help me

A paid job or a good conversation

How I can help others

Philosophical research, sociological research, graphic design, mechanism design, translation, literature reviews, forecasting (top 20 on Metaculus).

Send me a request and I'll probably do it for free.

Posts 19

Comments 128

Topic contributions 10

Are you an EU citizen? If so, please sign this citizen’s initiative to phase out factory farms (this is an approved EU citizen’s initiative, so if it gets enough signatures the EU has to respond):

stopcrueltystopslaughter.com

It also calls for reducing the number of animal farms over time, and introducing more incentives for the production of plant proteins.

(If initiatives like these interest you, I occasionally share more of them on my blog)

EDIT: If it doesn't work, try again in a couple of hours or days. The collection has just started and the site may be overloaded. The deadline is in a year, so there's no need to worry about running out of time.

Hi Arturo,

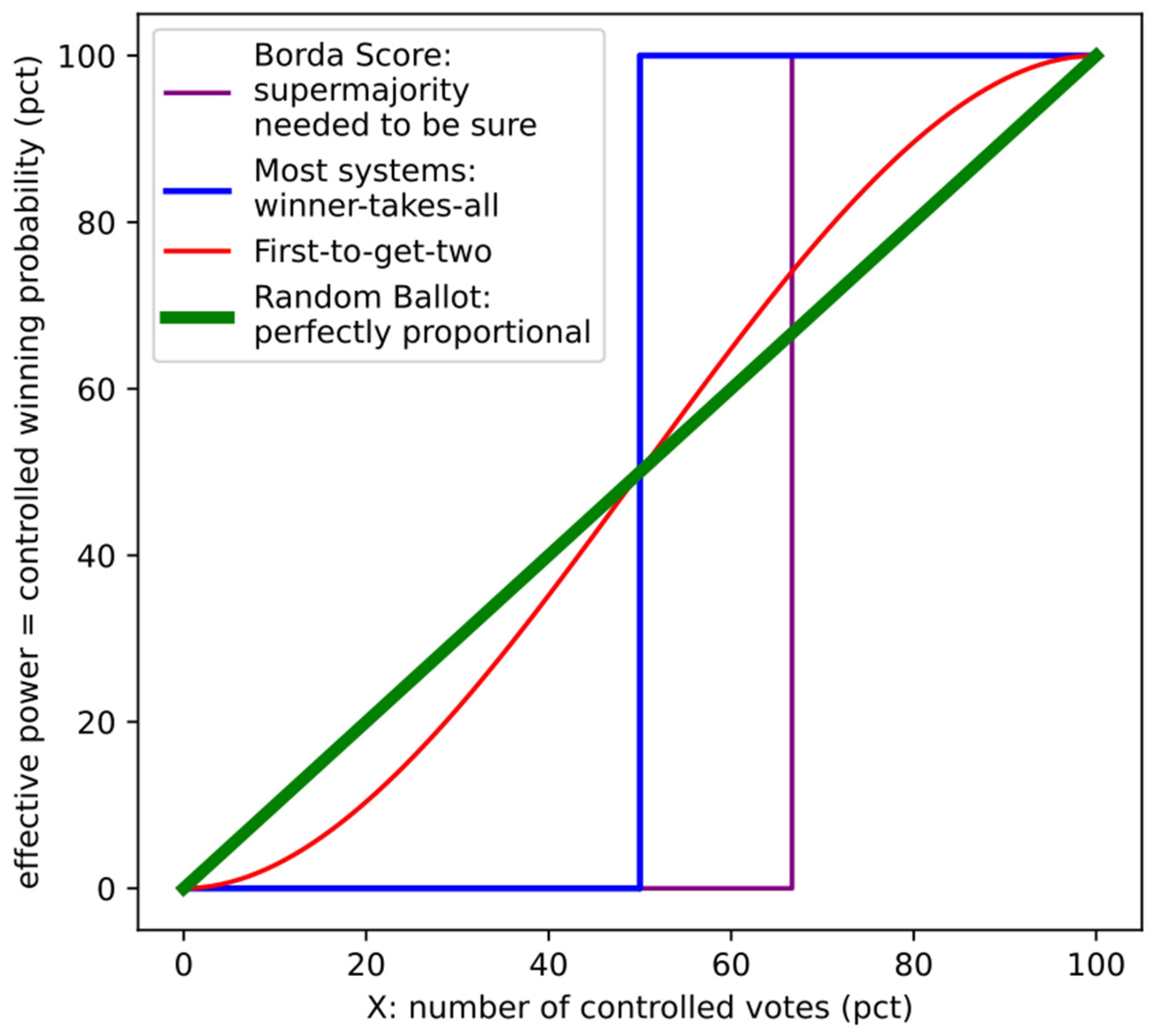

You might be interested in this graph, from the paper Jobst and I wrote: "Should we vote in non-deterministic elections?"

It visualizes the effective power that groups of voters have as a function of their share of the votes. For most winner-takes-all systems (conventional voting systems) this is a step function: if you have 51% of the vote, you have 100% of the power (blue line).

Some voting systems try to ameliorate this by requiring a supermajority; e.g. to change the constitution you need two-thirds of the votes. This slows down legislation and doesn't really solve the problem of proportionality.

Our paper talks about non-deterministic voting systems, systems that incorporate an element of chance. The simplest version would be the "random ballot"; everyone sends in their ballot, then one is drawn at random. This system is perfectly proportional. A voting bloc with 49% of the votes is no longer in power 0% of the time, but is now in power 49% of the time (green line).

Of course, not all non-deterministic voting systems are as proportional as the random ballot. For example, say that instead of picking one ballot at random, we keep drawing ballots at random until we have two that pick the same candidate. Now you get something in between the random ballot and a conventional voting system (red line).
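To make the three curves concrete, here is a minimal Python sketch. It is not taken from the paper; it rests on simplifying assumptions of my own: only two candidates, a large electorate (so ballots are effectively drawn with replacement), a fair coin for an exact 50/50 tie, and the reading that under the second system the first candidate to appear on two drawn ballots wins. All function names are hypothetical.

```python
import random


def majority_power(p: float) -> float:
    """Winner-takes-all: a bloc with vote share p wins iff it has over half the votes."""
    if p > 0.5:
        return 1.0
    if p < 0.5:
        return 0.0
    return 0.5  # assumption: an exact tie is broken by a fair coin


def random_ballot_power(p: float) -> float:
    """Random ballot: one ballot is drawn, so the bloc wins with probability p."""
    return p


def two_matching_power(p: float) -> float:
    """Draw ballots until two name the same candidate (two candidates only).

    With candidates A and B, A wins on the draw sequences AA, ABA and BAA:
    p*p + 2*p*p*(1 - p) = p*p*(3 - 2*p).
    """
    return p * p * (3 - 2 * p)


def simulate_two_matching(p: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check of two_matching_power under the same assumptions."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        a = b = 0
        while a < 2 and b < 2:
            if rng.random() < p:  # drawn ballot supports the bloc
                a += 1
            else:
                b += 1
        wins += a == 2
    return wins / trials


if __name__ == "__main__":
    print("share  majority  random_ballot  two_matching  simulated")
    for share in (0.10, 0.30, 0.49, 0.51, 0.70):
        print(
            f"{share:5.2f}  {majority_power(share):8.2f}  "
            f"{random_ballot_power(share):13.2f}  "
            f"{two_matching_power(share):12.4f}  "
            f"{simulate_two_matching(share):9.4f}"
        )
```

Under these assumptions, a bloc's chance of winning in the "two matching ballots" system always lies between its chance under winner-takes-all and its chance under the random ballot, which is the in-between shape described above.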

There are infinitely many non-deterministic voting systems, so these are just two simple examples, not the non-deterministic voting systems we actually endorse. For a more sophisticated non-deterministic voting system you can take a look at MaxParC.

Also, as you may have noticed, EAs are mostly focused on individualistic interventions and are not that interested in this kind of systemic change (which is why I don't bother posting my papers on this forum). If you want to discuss these types of ideas you might have more luck on the voting subreddit, the voting theory forum, or Electowiki.

and they seem to be down on socialism, except maybe some non-mainstream market variants.

I did try to find a survey for sociology, political science, and economics, not only today but also when I was writing my post on market socialism (I too wondered whether economists are more in favor of market socialism), but I couldn't really find one. My guess is that the first two would be more pro-socialism and the last more anti, although it probably also differs from country to country depending on their history of academia (e.g. whether they had a red scare in academia or not).

I feel like this is the kind of anti science/empiricism arrogance that philosophers are often accused of

This is probably partly because of the different things they're researching. Economics tends to look at things that are easier to quantify, like GDP and material goods created, which capitalism is really good at, while philosophers tend to look at things that capitalism seems to be less good at, like alienation, which is harder to quantify (though proxies like depression, suicide and loneliness do seem to be increasing).

Not to mention, they might agree on the data but disagree on what to value. Rust & Schwitzgebel (2013) did a survey of philosophy professors specializing in ethics, philosophy professors not specializing in ethics, and non-philosophy professors. 60% of ethicists felt eating meat was wrong, while just under 45% of non-ethicist philosophers agreed, and only 19.6% of non-philosophers thought so. I personally think one of the strongest arguments against capitalism is the existence of factory farms. With such numbers, it seems plausible that while an average economist might think of the meat industry as a positive, the average philosopher might think of it as a negative (thinking something akin to this post).

Let me try to steelman this:

We want people to learn new things, so we have conferences where people can present their research. But who to invite? There are so many people, many of whom have never done any studies.

Luckily for us, we have a body of people who spend their lives researching and checking each other's research: academia. Still, there are many academics, and there are only so many time slots you can assign before you're filled up; ideally, we'd be representative.

So now the question becomes: why was the choice made to spend so many of the limited time slots on "scientific racists", which is a position that's virtually universally rejected by professional researchers, while topics like "socialism", which has a ton of support in academia (e.g., the latest PhilPapers survey found that when asked about their politics, a majority of philosophers selected "socialism" and only a minority selected "capitalism" or "other"), tend to get little to no time allotted to them at these conferences?

For one thing, I'm not sure if I want to concede the point that it is the "maximally truth-seeking" thing to risk that a community evaporatively cools itself along the lines we're discussing.

Another way to frame it is through the concept of collective intelligence. What is good for developing individual intelligence may not be good for developing collective intelligence.

Think, for example, of schools that pit students against each other and place a heavy emphasis on high-stakes testing to measure individual student performance. This certainly motivates people to develop their own intellectual skills; just look at how much time Chinese children, for example, spend on school. But is this better for the collective intelligence?

High-stakes testing often leads to a curriculum that is narrowly focused on intelligence-focused skills that are easily measurable by tests. This can limit the development of broader, harder-to-measure social skills that are vital for collective intelligence, such as communication, group brainstorming, de-escalation, keeping your ego in check, empathy...

And such a testing-focused environment can discourage collaborative learning experiences because the focus is on individual performance. This reduction in group learning opportunities and collaboration limits overall knowledge growth.

It can exacerbate educational inequalities by disproportionately disadvantaging students from lower socio-economic backgrounds, who may have less access to test preparation resources or supportive learning environments. This can lead to a segmented education system where collective intelligence is stifled because not all members have equal opportunities to contribute and develop.

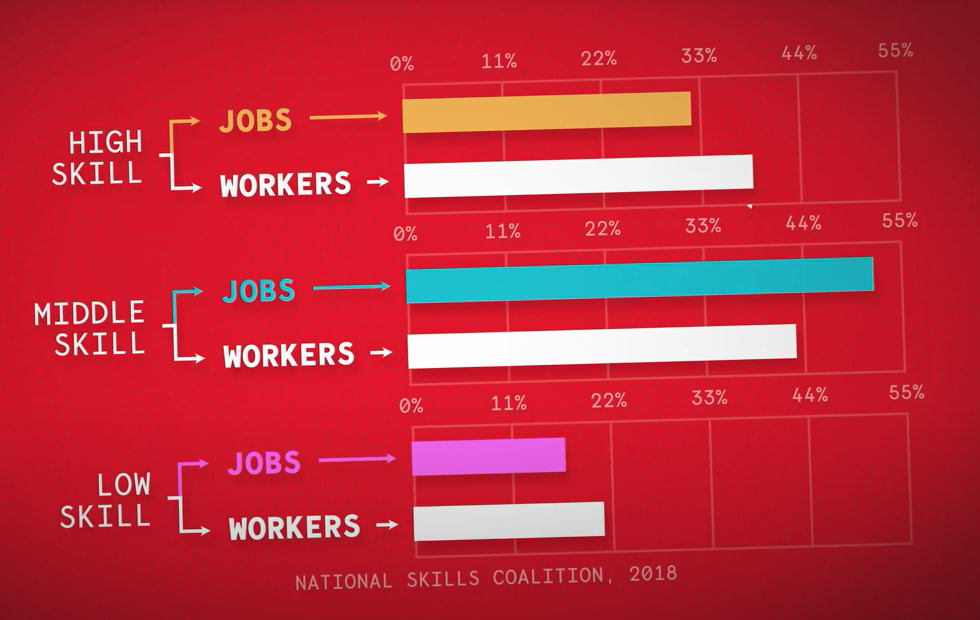

And what about all the work that needs to be done that is not associated with high intelligence? Students who might not excel in what a given culture considers high-intelligence (such as the arts, practical skills, or caretaking work) may feel undervalued and disengage from contributing their unique perspectives. Worse, if they continue to pursue individual intelligence, you might end up with a workforce that has a bad division of labor, despite having people that theoretically could have taken up those niches. Like what's happening in the US:

If you want to have more truth-seeking, you first have to make sure that your society functions. (E.g. if everyone is a college professor, who's making the food?)

To have a collective be maximally truth-seeking in the long run, you have to not solely focus on truth-seeking.

eventually SJP-EA morphs into bog-standard Ford Foundation philanthropy

This seems unlikely to me for several reasons, foremost among them that it would mean they lose interest in animal welfare. Do you think that progressives are not truly invested in it, and that it's primarily championed by their skeptics? Because the data seems to indicate the opposite.

I appreciate what Rutger Bregman is trying to do, and his work has certainly had a big positive impact on the world, almost certainly larger than mine at least. But honestly, I think he could be more rigorous. I haven't looked into his 'school for moral ambition' project, but I have read the first half of his book "Humankind", and despite vehemently agreeing with the conclusion, I would never recommend it to anyone, especially not anyone who has done any research before.

There seems to be some sort of trade-off between wide reach and rigor. I noticed a similar thing with other EA public intellectuals, for example Sam Harris and his book "The Moral Landscape" (I haven't read any of his other books, mostly because this one was so riddled with sloppy errors) and Steven Pinker's "Enlightenment Now" (I haven't read any of his other books either, again because of the errors in this book). Also, I've seen some clips of them online, and while that's not the best way to get information about someone, they didn't raise my opinion of them, to say the least.

Pretty annoying overall. At least Bregman is not prominently displayed on the EA People page like they are (even though what I read of his book was comparatively better). I would remove them from it, but the last time I removed SBF and Musk from it, that edit got downvoted and I had to ask a friend to upvote it (and this was after SBF was detained, so I don't think a Harris or Pinker edit would fare much better). Pretty sad, because I think EA has much better people to display than a lot of the individuals on that page. Especially considering some of them (like Harris and Pinker) currently don't even identify as EA.

A bit strong, but about right. The strategy the rationalists describe seems to stem from a desire to ensure their own intellectual development, which is, after all, the rationalist project. By disregarding social norms you can start conversing with lots of people about lots of stuff you otherwise wouldn't have been able to. That is tempting; however, my own (intellectual) freedom is not my primary concern. My primary concern is the overall happiness (or feelings, if you will) of others, and certain social norms are there to protect that.

Here's one data point: I was consistently in the top 25 on Metaculus for a couple of years. I would never attend a conference where a "scientific racist" gave a talk.

I made two visual guides that could be used to improve online discussions. These could be dropped into any conversation to (hopefully) make the discussion more productive.

The first is an update on Graham's hierarchy of disagreement:

I improved the layout of the old image and added a top layer for steelmanning. You can find my reasoning here and a link to the PDF file of the image here.

The second is a hierarchy of evidence:

I added a bottom layer for personal opinion. You can find the full image and PDF file here.

Lastly, I wanted to share the Toulmin method of argumentation, which is an excellent guide for a general pragmatic approach to arguments.