Just as the 2022 crypto crash had many downstream effects for effective altruism, so could a future crash in AI stocks have several negative (though hopefully less severe) effects on AI safety.

Why might AI stocks crash?

The most obvious reason AI stocks might crash is that stocks often crash.

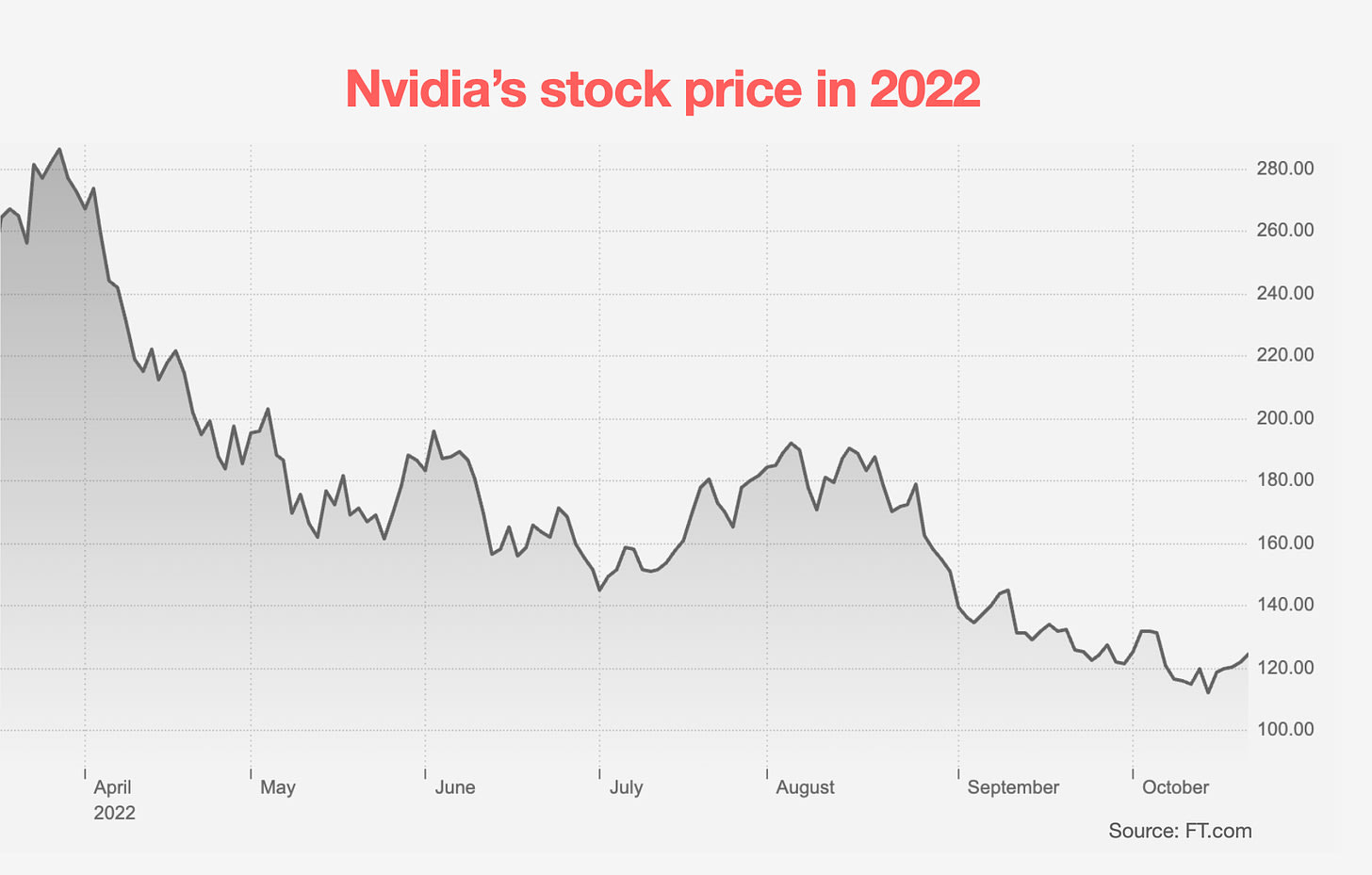

Nvidia’s price fell 60% in 2022 alone, and other AI companies fell with it. It also fell more than 50% in 2020 at the start of the COVID outbreak, and again in 2018. So we should expect there’s a good chance it falls 50% again in the coming years.

Nvidia’s implied volatility is about 60%, which means – even assuming efficient markets – it has about a 15% chance of falling more than 50% in a year.
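As a rough check on that figure, here is a minimal sketch of the calculation, assuming the price follows a lognormal distribution over one year with the 60% volatility quoted above. The function names and the two drift choices are my own illustrative assumptions, not taken from any options pricing source; real option prices also embed skew and interest rates, which this ignores. Under these assumptions the answer lands at roughly 12% to 20% depending on the drift, which brackets the ~15% figure.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_fall_below(ratio: float, vol: float, years: float = 1.0, drift: float = 0.0) -> float:
    """P(S_T / S_0 < ratio) for a lognormal price.

    vol   -- annualised volatility (0.60 means 60%)
    drift -- assumed annual expected return (an assumption, not market data)
    """
    log_mean = (drift - 0.5 * vol ** 2) * years
    z = (math.log(ratio) - log_mean) / (vol * math.sqrt(years))
    return normal_cdf(z)


VOL = 0.60  # implied volatility quoted in the post

# Assumption A: zero expected return (so the median outcome is a modest fall).
p_zero_drift = prob_fall_below(0.5, VOL)

# Assumption B: drift chosen so that the median price is unchanged.
p_flat_median = prob_fall_below(0.5, VOL, drift=0.5 * VOL ** 2)

print(f"zero drift:  {p_zero_drift:.1%}")
print(f"flat median: {p_flat_median:.1%}")
```

The spread between the two results shows how sensitive the headline probability is to the drift assumption, so the 15% is best read as an order-of-magnitude estimate.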

And more speculatively, booms and busts seem more likely for stocks that have gone up a ton, and when new technologies are being introduced.

That’s what we saw with the introduction of the internet and the dot com bubble, as well as with crypto. (I'd love to see more base rate estimates here.)

(Here are two attempts to construct economic models for why. This phenomenon also seems related to the existence of momentum in financial prices, as well as bubbles in general.)

Further, as I argued, current spending on AI chips requires revenues from AI software to reach hundreds of billions within a couple of years, and (at current trends) approach a trillion by 2030. There’s plenty of scope to not hit that trajectory, which could cause a sell off.

Note the question isn’t just whether the current and next generation of AI models are useful (they definitely are), but rather:

- Are they so useful their value can be measured in the trillions?

- Do they have a viable business model that lets them capture enough of that value?

- Will they get there fast enough relative to market expectations?

My own take is that the market is still underpricing the long-term impact of AI (which is why about half my equity exposure is in AI companies, especially chip makers), and I also think it’s quite plausible that AI software will be generating more than a trillion dollars of revenue by 2030.

But it also seems like there’s a good chance that short-term deployment isn’t this fast, and the market gets disappointed on the way. If AI revenues merely failed to double in a year, that could be enough to prompt a sell off.

I think this could happen even if capabilities keep advancing (e.g. because real-world deployment is slow), though a slowdown in AI capabilities and a new “AI winter” would also most likely cause a crash.

A crash could also be caused by a broader economic recession, rise in interest rates, or anything that causes investors to become more risk-averse – like a crash elsewhere in the market or geopolitical issue.

The end of a stock bubble often has no obvious trigger. At some point, the stock of buyers gets depleted, prices start to move down, and that causes others to sell, and so on.

Why does this matter?

A crash in AI stocks could cause a modest lengthening of AI timelines, by reducing investment capital. For example, startups that aren’t yet generating revenue could find it hard to raise from VCs and fail.

A crash in AI stocks (depending on its cause) might also tell us that market expectations for the near-term deployment of AI have declined.

This means it’s important to take the possibility of a crash into account when forecasting AI, and in particular to be cautious about extrapolating growth rates in investment from the last year or so indefinitely forward.

Perhaps more importantly, just like the 2022 crypto crash, an AI crash could have implications for people working on AI safety.

First, the wealth of many donors to AI safety is pretty correlated with AI stocks. For instance as far as I can tell Good Ventures still has legacy investments in Meta, and others have stakes in Anthropic. (In some cases people are deliberately mission hedging.)

Moreover, if AI stocks crash, it’ll most likely be at a time when other stocks (and especially other speculative investments like crypto) are also falling. Donors might see their capital halve.

That means an AI crash could easily cause a tightening in the funding landscape. This tightening probably wouldn’t be as big as 2023, but may still be noticeable. If you’re running an AI safety org, it’s important to have a plan for this.

Second, an AI crash could cause a shift in public sentiment. People who’ve been loudly sounding caution about AI systems could get branded as alarmists, or people who fell for another “bubble”, and look pretty dumb for a while.

Likewise, it would likely become harder to push through policy change for some years as some of the urgency would drop out of the issue.

I don’t think this response will necessarily be rational – I’m just saying it’s what many people will think. A 50% decline in AI stock prices could maybe lengthen my estimates for when transformative AI will arrive by a couple of years, but it wouldn’t have a huge impact on my all-things-considered view about how many resources should go into AI safety.

Finally, don’t forget about second order effects. A tightening in the funding landscape means projects get cut, which hurts morale. A turn in public sentiment against AI safety slows progress and leads to more media attacks…which also hurts morale. Lower morale leads to further community drama…which leads to more media attacks. And so on. In this way, an economic issue can go on to cause a much wider range of problems.

One saving grace is that these problems will be happening at a time when AI timelines are lengthening, and so hopefully the risks are going down — partially offsetting the damage. (This is the whole idea of mission hedging – have more resources if AI progress is more rapid than expected, and have less otherwise.) However, we could see some of these negative effects without much change in timelines. And either way it could be a difficult experience for those in AI safety, so it's worth being psychologically prepared, and taking cheap steps to become more resilient.

What can we do about this, practically? I’m not sure there’s that much, but being on record that a crash in AI stocks is possible seems helpful. It also makes me want to be more cautious about hyping short term capabilities, since that makes it sound like the case for AI Safety depends on them. If you’re an advocate it could be worth thinking about what you’d do if there were an abrupt shift in public opinion.

It’s easy to get caught up in the sentiment of the day. But sentiment can shift, quickly. And shifts in sentiment can easily turn into real differences in economic reality.

This was originally posted on benjamintodd.substack.com. Subscribe there to get all my new posts.

Unpopular opinion (at least in EA): it not only looks bad, but it is bad that this is the case. Divest!

AI safety donors investing in AI capabilities companies is like climate change donors investing in oil companies or animal welfare donors investing in factory farming (sounds a bit ridiculous when put like that, right? Regardless of mission hedging arguments).

(It's bad because it creates a conflict of interest!)

"I donate to AI safety and governance" [but not enough to actually damage the bottom lines of the big AI companies I've invested in.]

"Oh, no of course I intend to sell all my AI stock at some point, and donate it all to AI Safety." [Just not yet; it's "up only" at the moment!]

"Yes, my timelines stretch into the 2030s." [Because that's when I anticipate that I'll be rich from my AI investments.]

"I would be in favour of a Pause, if I thought it was possible." [And I could sell my massive amounts of non-publicly-traded Anthropic stock at another 10x gain from here, first.]

You could invest in AI stocks through a donor-advised fund or private foundation to reduce the potential for personal gain and so COIs.

Yes, but the COIs extend to altruistic impact too. Like - which EA EtG-er wouldn't want to be able to give away a billion dollars? Having AI stocks in your DAF still biases you toward supporting the big AI companies, and against trying to stop AGI/ASI development altogether (when that may well actually be the most high impact thing to do, even if it means you never get to give away a billion dollars).

How much impact do you expect such a COI to have compared to the extra potential donations?

For reference:

And how far do you go in recommending divestment from AI to avoid COIs?

Well, the bottom line is extinction, for all of us. If the COIs block enough people from taking sufficient action, before it's too late, then that's what happens. The billions of EA money left in the bank as foom-doom hits will be useless. Might as well never have been accumulated in the first place.

I'll also note that there are plenty of other potential good investments out there. Crypto has gone up about as much as AI stocks in general over the last year, and some of them (e.g. SOL) have gone up much more than NVDA. There are promising start-ups in many non-AI areas. (Join this group to see more[1]).

To answer your bottom two questions:

1. I think avoiding stock-market-wide index funds is probably going too far (as they are neutral about AI - if AI starts doing badly, e.g. because of regulation, then the composition of the index fund will change to reflect this).

2. I wouldn't recommend this as a strategy, unless they are already on their way down and heavy regulation looks imminent.

But note that people are still pitching the likes of Anthropic in there! I don't approve of that.

Bitcoin is only up around 20% from its peaks in March and November 2021. It seems far riskier in general than just Nvidia (or SMH) when you look over longer time frames. Nvidia has been hit hard in the past, but not as often or usually as hard.

Smaller cap cryptocurrencies are even riskier.

I also think the case for outperformance of crypto in general is much weaker than for AI stocks, and it has gotten weaker as institutional investment has increased, which should increase market efficiency. I think the case for crypto has mostly been greater fool theory (and partly as an inflation hedge), because it's not a formally productive asset and its actual uses seem overstated to me. And even if crypto were better, you could substantially increase (risk-adjusted) returns by also including AI stocks in your portfolio.

I'm less sure about private investments in general, and they need to be judged individually.

I don't really see why your point about the S&P500 should matter. If I buy 95% AI stocks and 5% other stuff and don't rebalance between them, AI will also have a relatively smaller share if it does relatively badly, e.g. due to regulation.
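To make the buy-and-hold point concrete, here is a small sketch comparing how the AI weight drifts in a 95/5 portfolio and in a cap-weighted index when AI stocks fall. The 50% fall, the flat return on everything else, and the 5% starting index weight are illustrative assumptions, and `weight_after_move` is a hypothetical helper name.

```python
def weight_after_move(ai_weight: float, ai_return: float, other_return: float = 0.0) -> float:
    """AI share of an unrebalanced portfolio after the given returns."""
    ai_value = ai_weight * (1.0 + ai_return)
    other_value = (1.0 - ai_weight) * (1.0 + other_return)
    return ai_value / (ai_value + other_value)


AI_FALL = -0.50  # hypothetical fall in AI stocks, e.g. after heavy regulation

concentrated = weight_after_move(0.95, AI_FALL)  # 95% AI / 5% other, no rebalancing
index_like = weight_after_move(0.05, AI_FALL)    # assumed 5% AI weight in the index

print(f"95/5 portfolio AI weight after fall: {concentrated:.1%}")
print(f"index AI weight after fall:          {index_like:.1%}")
```

In both cases the AI share shrinks mechanically, which is the sense in which an unrebalanced portfolio "reflects" AI doing badly just as an index does. What differs is the level of exposure, not the adjustment mechanism.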

Maybe there's a sense in which market cap-weighting from across sectors and without specifically overweighting AI/tech is more "neutral", but it really just means deferring to market expectations, market time discount rates and market risk attitudes, which could differ from your own. Equal-weighting (securities above a certain market cap or asset classes) and rebalancing to maintain equal weights seems "more neutral", but also pretty arbitrary and probably worse for risk-adjusted returns.

Furthermore, I can increase my absolute exposure to AI with leverage on the S&P500, like call options, margin or leveraged ETFs. Maybe I assume non-AI stocks will do roughly neutral or in line with the past, or the market as a whole will do so assuming AI progress slows. Then leverage on the S&P500 could really just be an AI play.

My main point is that it's not really about performance, it's about ethics and conflicts of interest. Investing in Index funds is much more neutral from this perspective, given that AI companies are part of society and we can't divorce ourselves from them completely because we can't divorce ourselves from society completely (the analogy of the vegan accepting mail from their non-vegan delivery person comes to mind).

The S&P500 might be closer to neutral, but it's still far from neutral. If you want to actually be neutral, you'd want to avoid positive exposure to AI companies. You might even want to be net short AI companies, to offset indirect exposure to AI companies through productivity gains from AI.

There are multiple options here that are much more neutral on AI than say the S&P 500, some very straightforward and convenient:

Thanks for these. I'll note though that personally, I have ~0 stock market exposure (majority of my wealth is in crypto and startups).

Separately, arguing for being able to beat the EMH with AI, but not crypto, seems a bit idiosyncratic. Why not any other sector? Why does it have to be the one we are particularly concerned about having massive negative externalities? Why aren't there climate change worriers making similar investments in new oil and gas drilling, or vegans making similar investments in meat companies?

I guess you might say "because if alignment is solved, then TAI will be worth a lot of money". But it's complete speculation to say that alignment is even possible to solve, given our current situation. Much bigger speculation than investing in crypto imo. And the markets clearly aren't expecting TAI to happen at all.

AI will be worth a lot of money (and "transformative", maybe not to the extent meant in defining "TAI") before alignment is much of an issue. Tasks will be increasingly automated, even before most jobs (let alone ~all jobs) can be fully automated, and prices will increase with increasing expectations of automation as it becomes more apparent (their expectations currently seem too low).

Do you expect that between now and TAI that would kill everyone, AI stocks won't outperform?

Give me decent arguments for substantial outperformance and I'd invest (although probably still keep AI to diversify). I think there are decent arguments for AI. There seems to be pretty limited upside in oil and gas and meat, and I don't have any particular disagreements with standard projections, or any apparent edge for these. If you find some edge, let us know!

No, I expect they will, but it might be only a matter of months before doom in those cases (i.e. AI capabilities continue to advance at a rapid pace, AI capable of automating most work tasks happens, stocks skyrocket; but AI is also turned on AI development, and recursive-self improvement kicks in, doom follows shortly after).

Are you in the HSEACA fb group? Here's a tip[1]: the relatively new crypto, WART. Heuristics: recommended by the same guy that picked the 1000x (KASPA) and 200x (CLORE); genuinely new algo (CPU/GPU combined mining; working on an in-browser node); fair launch and tokenomics (no premine); enthusiastic developers who are crypto enthusiasts doing it for fun (currently worth very little); enthusiastic community; bad at marketing (this is an advantage at this stage; more focus on product). Given it has only a ~$1.5M marketcap now, there is a lot of potential upside. I'd say >10% chance of >100x; 1000x not out of the question. Timeframe: 6-18 months.

Another: Equator Therapeutics[2].

Usual caveats apply to these: not investment advice, do your own research, don't put in more than you can afford to lose (significant chance they go to ~0), don't blame me for any losses, etc.

Join the group to see more.

I understand the reservations about donations from AI companies because of conflicts of interest, but I still think the main drivers of this cause area (AI) should largely be these companies. Who else has the funds that could drive it? Who else has the ideological initiative necessary for change in this area?

While it may be counterintuitive to have them on board, they are still the best bet for now.

One quick point is that divesting, while it would help a bit, wouldn't obviously solve the problems I raise – AI safety advocates could still look like alarmists if there's a crash, and other investments (especially crypto) will likely fall at the same time, so the effect on the funding landscape could be similar.

With divestment more broadly, it seems like a difficult question.

I share the concerns about it being biasing and making AI safety advocates less credible, and feel pretty worried about this.

On the other side, if something like TAI starts to happen, then the index will go from 5% AI-companies to 50%+ AI companies. That'll mean AI stocks will outperform the index by ~10x or more, while non-AI stocks will underperform by 2x or more.

So by holding the index, you'd be forgoing 90%+ of future returns (in the most high-leverage scenarios), and by being fully divested, you'd be giving up 95%+.
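The arithmetic behind those figures can be sketched as follows, assuming the index is simply split into an AI bucket and a non-AI bucket, and ignoring new listings, share issuance, and dividends. The helper name `bucket_growth` is hypothetical; the 5% and 50% shares come from the scenario above.

```python
def bucket_growth(ai_share_start: float, ai_share_end: float, index_growth: float = 1.0):
    """Growth multiples of the AI and non-AI buckets, given how the AI share
    of a cap-weighted index changes while the index grows by `index_growth`."""
    ai = index_growth * ai_share_end / ai_share_start
    non_ai = index_growth * (1.0 - ai_share_end) / (1.0 - ai_share_start)
    return ai, non_ai


ai_mult, non_ai_mult = bucket_growth(0.05, 0.50)

# Share of the AI-only outcome given up by each alternative.
forgone_by_index = 1.0 - 1.0 / ai_mult              # holding the whole index
forgone_by_divesting = 1.0 - non_ai_mult / ai_mult  # holding only non-AI stocks

print(f"AI vs index:     {ai_mult:.1f}x")
print(f"non-AI vs index: {non_ai_mult:.2f}x")
print(f"forgone holding the index: {forgone_by_index:.0%}")
print(f"forgone fully divested:    {forgone_by_divesting:.0%}")
```

Because `index_growth` cancels out of both ratios, the comparison does not depend on how much the overall index rises, only on the shift in AI's share of it.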

So the costs are really big (far far greater than divesting from oil companies).

Moreover, unless your p(doom) is very high, it's plausible a lot of the value comes from what you could do in post-TAI worlds. AI alignment isn't the only cause to consider.

On balance, it doesn't seem like the negatives are so large as to reduce the value of your funds by 10x in TAI worlds. But I feel uneasy about it.

I would encourage EAs to go even further against the EMH than buying AI stocks. EAs have been ahead of the curve on lots of things, so we should be able to make even better returns elsewhere, especially given how crowded AI is now. It's worth looking at the track record of the HSEACA investing group[1], but, briefly, I have had 2 cryptos that I learnt about in there in the last couple of years go up 1000x and 200x respectively (realising 100x and 50x gains respectively, so far in the case of the second). Lots of people also made big money shorting stock markets before the Covid crash, and there have been various other highly profitable plays, and promising non-AI start-ups posted about. There are plenty of other opportunities out there that are better than investing in AI, even from a purely financial perspective. More EAs should be spending time seeking them out, rather than investing in ethically questionable companies that go against their mission to prevent x-risk, and are very unlikely to provide significant profits that are actually usable before the companies they come from cause doom, or collapse in value from being regulated to prevent doom.

Would actually be great if someone did an analysis of this sometime!

It is! In fact, I think non-doom TAI worlds are highly speculative[1].

I've still not seen any good argument for them making up a majority of the probability space, in fact.

What impact do you expect a marginal demand shift of $1 million (or $1 billion) in AI stocks to have on AI timelines? And why?

(Presumably the impact on actual investments in AI is much lower, because of elasticity, price targets for public companies, limits on what private companies intend to raise at a time.)

Or is the concern only really COIs?

The concern is mainly COIs, then bad PR. The direct demand shift could still be important though, if it catalyses further demand shift (e.g. divestment from apartheid South Africa eventually snowballed into having a large economic effect).

Furthermore, if you're sufficiently pessimistic about AI alignment, it might make sense to optimize for a situation where we get a crash and the longer timeline that comes with it. ("Play to your outs"/condition on success.)

That suggests a portfolio that's anticorrelated with AI stocks, so you can capitalize on the longer-timelines scenario if a crash comes about.

Spitballing: EA entrepreneurs should be preparing for one of two worlds:

i) Short timelines with 10x funding of today

ii) Longer timelines with relatively scarce funding

Scarce relative to the current level or just < 10x the current level?

"Nvidia’s implied volatility is about 60%, which means – even assuming efficient markets – it has about a 15% chance of falling more than 50% in a year.

And more speculatively, booms and busts seem more likely for stocks that have gone up a ton, and when new technologies are being introduced."

Do you think the people trading the options setting that implied volatility are unaware of this?

Agree it's most likely already in the price.

Though I'd stand behind the idea that markets are least efficient when it comes to big booms and busts involving large asset classes (in contrast to relative pricing within a liquid asset class), which makes me less inclined to simply accept market prices in these cases.

If I understand correctly, you are interpreting the above as stating that the implied volatility would be higher in a more efficient market. But I originally interpreted it as claiming that big moves are relatively more likely than medium-small moves compared to other options with the same IV (if that makes any sense)

Taking into account volatility smiles and all the things that I wouldn't think about, as someone who doesn't know much about finance and doesn't have Bloomberg Terminal, is there an easy way to answer the question "what is the option-prices-implied chance of NVDA falling below 450 in a year?"

I see that the IV for options with a strike of 450 next year is about ~70% for calls and ~50% for puts. I don't know how to interpret that, but even using 70%, this calculator gives me a ~16% chance, so would it be fair to say that traders think there's a ~15% chance of NVDA falling below 450 in a year?

In general, I think both here and in the accompanying thread finance professionals might be overestimating people's average familiarity with the field.

The IV for puts and calls at a given strike and expiry date will be identical, because one can trivially construct a put or a call from the other by trading stock, and the only frictions are the cost of carry.

The best proxy for probability an option will expire in the money is the delta of the option.

Thank you. Here's an explanation from Wikipedia for others like me new to this.

Looking at the delta here and here, the market would seem to imply a ~5% chance of NVDA going below 450, which is not consistent with the ~15% in the article derived from the IV. Is it mostly because of a high risk-free interest rate?

I wonder which value would be more calibrated, or if there's anything I could read to understand this better. It seems valuable to be able to easily find rough market-implied probabilities for future prices.

Generally I just wouldn't trust numbers from Yahoo and think that's the Occam's Razor explanation here.

Delta is the value I would use before anything else since the link to models of reality is so straightforward (stock moves $1 => option moves $0.05 => clearly that's equivalent to making an extra dollar 5% of the time)

Just had another glance at this and I think the delta vs implied vol piece is consistent with something other than a normal/log normal distribution. Consider: the price is $13 for the put, and the delta is 5. This implies something like - the option is expected to pay off a nonzero amount 5% of the time, but the average payoff when it does is $260 (despite the max payoff definitionally being 450). So it looks like this is really being priced as crash insurance, and the distribution is very non normal (i.e. circumstances where NVDA falls to that price means something weird has happened)
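A minimal sketch of that back-of-the-envelope calculation, assuming the option's delta can be read as the probability of expiring in the money and ignoring discounting. The variable names are illustrative; the $13 price, 5-delta, and 450 strike are the figures from the comment above.

```python
put_price = 13.0  # quoted price of the put, in dollars
delta = 0.05      # absolute delta, read here as P(expires in the money)
strike = 450.0    # strike price, which is also the put's maximum payoff

# If price is roughly probability times average payoff, the implied average
# payoff in the scenarios where the put pays off at all is:
avg_payoff_if_itm = put_price / delta

# That payoff corresponds to an average share price at expiry of:
avg_price_if_itm = strike - avg_payoff_if_itm

print(f"implied average payoff when in the money: ${avg_payoff_if_itm:.0f}")
print(f"implied average NVDA price in those cases: ${avg_price_if_itm:.0f}")
```

On these assumptions the put only pays off in scenarios where the stock has fallen far below the strike on average, which fits the reading that it is being priced as crash insurance.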

Can you explain? I see why the implied vols for puts and calls should be identical, but empirically, they are not—right now calls at $450 have an implied vol of 215% and puts at $450 have an implied vol of 158%. Are you saying that the implied vol from one side isn't the proper implied vol, or something?

Right now the IV of June 2025 450 calls is 53.7, and of puts 50.9, per Bloomberg. I've no idea where your numbers are coming from, but someone is getting the calculation wrong or the input is garbage.

The spread in the above numbers is likely to do with illiquidity and bid ask spreads more than anything profound.

Could be interesting to see some more thinking about investments that have short-to-medium-term correlations with long-term-upside-capturing/mission-hedging stocks that don't themselves have these features (as potential complementary shorts).

You could look for investments that do neutral-to-well in a TAI world, but have low-to-negative correlation to AI stocks in the short term. That could reduce overall portfolio risk but without worsening returns if AI does well.

This seems quite hard, but the best ideas I've seen so far are:

However, all of these have important downsides and someone would need to put billions of dollars behind them to have much impact on the overall portfolio.

(Also this is not investment advice and these ideas are likely to lose a lot of money in many scenarios.)

Benjamin - thanks for a thoughtful and original post. Much of your reasoning makes sense from a strictly financial, ROI-maximizing perspective.

But I don't follow your logic in terms of public sentiment regarding AI safety.

You wrote 'Second, an AI crash could cause a shift in public sentiment. People who’ve been loudly sounding caution about AI systems could get branded as alarmists, or people who fell for another “bubble”, and look pretty dumb for a while.'

I don't see why an AI crash would turn people against AI safety concerns.

Indeed, a logical implication of our 'Pause AI' movement, and the public protests against AI companies, is that (1) we actually want AI companies to fail, because they're pursuing AGI recklessly, (2) we are doing our best to help them to fail, to protect humanity, (3) we are stigmatizing people who invest in AI companies as unethical, and (4) we hope that the value of AI companies, and the Big Tech companies associated with them, plummets like a rock.

I don't think EAs can have it both ways -- profiting from investments in reckless AI companies, while also warning the public about the recklessness of those companies. There might be a certain type of narrow, short-sighted utilitarian reasoning in which such moral hypocrisy makes sense. But to most people, who are intuitive virtue ethicists and/or deontologists, investing in companies that impose extinction risk on our species, just in hopes that we can make enough money to help mitigate those extinction risks, will sound bizarre, contradictory, and delusional.

If we really want to make money, just invest like normal people in crypto when prices are low, and sell when prices are high. There's no need to put our money into AI companies that we actually want to fail, for the sake of human survival.

I should maybe have been more cautious - how messaging will pan out is really unpredictable.

However, the basic idea is that if you're saying "X might be a big risk!" and then X turns out to be a damp squib, it looks like you cried wolf.

If there's a big AI crash, I expect there will be a lot of people rubbing their hands saying "wow those doomers were so wrong about AI being a big deal! so silly to worry about that!"

That said, I agree that if your messaging is just "let's end AI!", then there are some circumstances under which you could look better after a crash, especially if it looks like your efforts contributed to it, or it failed for reasons you predicted or were protesting about (e.g. accidents happening, causing it to get shut down).

However, if the AI crash is for unrelated reasons (e.g. the scaling laws stop working, it takes longer to commercialise than people hope), then I think the Pause AI people could also look silly – why did we bother slowing down the mundane utility we could get from LLMs if there's no big risk?

Thanks for the post, Ben!

I like that Founders Pledge's Patient Philanthropy Fund (PPF) invests in "a low-fee Global Stock Index Fund". I also have all my investments in global stocks (Vanguard FTSE All-World UCITS ETF USD Acc).

A crash in the stock market might actually increase AI arms races if companies don't feel like they have the option to go slow.

Here is some data indicating that time devoted to AI in earnings calls peaked in 2023 and has dropped significantly since then.

According to the Gartner hype cycle, new technologies are usually overhyped, and massive hype is typically followed by a period of disillusionment. I don't know if this claim is backed by solid data, however. The Wikipedia page cites this LinkedIn post, which discusses a bunch of counterexamples to the Gartner hype cycle. But none of the author's counterexamples take the form of "technology generates massive hype, hype turns out to be fully justified, no trough of disillusionment". Perhaps the iPhone would fall in this category?

Interesting. I guess a key question is whether another wave of capabilities (e.g. gpt-5, agent models) comes in soon or not.

Executive summary: A potential crash in AI stocks, while not necessarily reflecting long-term AI progress, could have negative short-term effects on AI safety efforts through reduced funding, shifted public sentiment, and second-order impacts on the AI safety community.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.