Vasco Grilo🔸

Bio

Participation 4

I am open to work. I see myself as a generalist quantitative researcher.

How others can help me

You can give me feedback here (anonymous or not).

You are welcome to answer any of the following:

- Do you have any thoughts on the value (or lack thereof) of my posts?

- Do you have any ideas for posts you think I would like to write?

- Are there any opportunities you think would be a good fit for me which are either not listed on 80,000 Hours' job board, or are listed there but which you think I might be underrating?

How I can help others

Feel free to check out my posts and see whether we can collaborate to contribute to a better world. I am open to part-time volunteering and paid work. For paid work, I typically ask for 20 $/h, which is roughly 2 times the global real GDP per capita.

Posts 153

Comments 1814

Topic contributions 26

Thanks, Lauren!

Firstly, I can only speak for the animal advocacy space but there are a very limited number of high impact roles for people to pivot into…

I think this applies more broadly. Based mostly on data about global health and development interventions, Benjamin Todd concludes "it’s defensible to say that the best of all interventions in an area are about 10 times more effective than the mean, and perhaps as much as 100 times". If so, and jobs are uniformly distributed across interventions (so that the direct work in a random job is worth roughly its salary spent at the mean cost-effectiveness), a person in a random job within an area donating 10 % of their gross salary to the best interventions in the area can have 1 (= 0.1*10) to 10 (= 0.1*100) times as much impact from their donations as from their direct work. In reality, there will be more jobs in less cost-effective interventions, as the best interventions only account for a small fraction of the overall funding. Based on Benjamin's numbers, if the number of people in jobs as cost-effective as a random one is 10 times the number in the best jobs, a person in a random job within an area donating 10 % of their gross salary to the best interventions in the area would be 10 (= 1*10) to 100 (= 10*10) times as impactful as a person with the same job not donating.
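The arithmetic above can be reproduced with a short sketch. It assumes, as the calculation implicitly does, that direct work is worth the salary spent at the mean cost-effectiveness of the area, and that the second scenario simply multiplies the donation-to-direct-work ratio by a further factor of 10. The function name and its parameters are hypothetical, chosen only for illustration.

```python
def donation_to_direct_work_ratio(donation_share: float,
                                  best_to_mean: float,
                                  extra_factor: float = 1.0) -> float:
    """Impact from donations divided by impact from direct work.

    Hypothetical model: direct work is worth the salary spent at the mean
    cost-effectiveness of the area, donations are worth donation_share of the
    salary spent at the best interventions, and extra_factor captures any
    further gap between a random job and the mean.
    """
    return donation_share * best_to_mean * extra_factor


# Jobs uniformly distributed across interventions (best = 10 to 100 times the mean).
uniform_low = donation_to_direct_work_ratio(0.1, 10)
uniform_high = donation_to_direct_work_ratio(0.1, 100)

# Further factor of 10 when more jobs sit in less cost-effective interventions.
skewed_low = donation_to_direct_work_ratio(0.1, 10, extra_factor=10)
skewed_high = donation_to_direct_work_ratio(0.1, 100, extra_factor=10)

print(f"{uniform_low:.0f} to {uniform_high:.0f}")  # 1 to 10
print(f"{skewed_low:.0f} to {skewed_high:.0f}")    # 10 to 100
```

The sketch returns the ratio of donation impact to direct-work impact. Counting the direct work as well, the donating person's total impact would be 1 plus this ratio times that of a person in the same job not donating, so the figures are approximate.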

Thanks for the interest, Michael!

I got the cost-effectiveness estimates about global health and development which I analysed in that post directly from Ambitious Impact (AIM), and those about animal welfare by adjusting AIM's numbers based on Rethink Priorities' median welfare ranges[1].

I do not have my cost-effectiveness estimates collected in one place. I would be happy to put something together for you, such as a sheet with the name of the intervention, area, source, date of publication, and cost-effectiveness in DALYs averted per $. However, I wonder whether it would be better for you to look into sets of AIM's estimates concerning a given stage of a certain research round. AIM often uses them in weighted factor models to inform which interventions to move to the next stage or recommend, so they are supposed to be especially comparable. In contrast, mine often rely on different assumptions simply because they span a long period of time. For example, I now guess disabling pain is 10 % as intense as I assumed until October.

I could try to quickly adjust all my estimates such that they all reflect my current assumptions, but I suspect it would not be worth it. I believe AIM's estimates by stage of a particular research round would still be more methodologically aligned, and credible to a wider audience. I am also confident that a set with all my estimates, at least if interpreted at face value, follows a Pareto, lognormal or loguniform distribution much more closely than a normal or uniform distribution. I estimate broiler welfare and cage-free campaigns are 168 and 462 times as cost-effective as GiveWell’s top charities, and that the Shrimp Welfare Project (SWP) has been 64.3 k times as cost-effective as such charities.

[1]

AIM used to assume welfare ranges conditional on sentience equal to 1 before moving to estimating the benefits of animal welfare interventions in suffering-adjusted days (SADs) in 2024. I believe the new system still dramatically underestimates the intensity of excruciating pain, and therefore the cost-effectiveness of interventions decreasing it. I estimate the past cost-effectiveness of SWP is 639 DALY/$. For AIM’s pain intensities, and my guess that hurtful pain is as intense as fully healthy life, I get 0.484 DALY/$, which is only 0.0757 % (= 0.484/639) of my estimate. Feel free to ask Vicky Cox, senior animal welfare researcher at AIM, for the sheet with their pain intensities, and the doc with my suggestions for improvement.
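A one-line check of the ratio in this footnote, using only the two figures it gives (the variable names are illustrative):

```python
# Figures from the footnote, in DALYs averted per dollar.
swp_own_estimate = 639.0     # my estimate of SWP's past cost-effectiveness
swp_aim_intensities = 0.484  # same model with AIM's pain intensities

share = swp_aim_intensities / swp_own_estimate
print(f"{share:.4%}")  # 0.0757%
```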

Regarding your "Future work I'd like to see" section, maybe Vasco's corpus of cost-effectiveness estimates would be a good starting point. His quantitative modelling spans nearly every category of EA interventions, his models are all methodologically aligned (since it's just him doing them), and they're all transparent too (unlike the DCP estimates).

Thanks for the suggestion, Mo! More transparent, methodologically aligned estimates:

- The Centre for Exploratory Altruism Research (CEARCH) has a sheet with 23 cost-effectiveness estimates across global health and development, global catastrophic risk, and climate change.

  - They are produced at 3 levels of depth, but they all rely on the same baseline methodology.

  - You can reach out to @Joel Tan🔸 to learn more.

- Ambitious Impact (AIM) has produced hundreds of cost-effectiveness estimates across global health and development, animal advocacy, and "EA meta".

  - They are produced at different levels of depth. I collected 44 concerning the interventions recommended for their incubation programs up to 2024[1]. However, they have more public estimates concerning interventions which made it to the last stage (in-depth report) but were not recommended, and way more internal estimates, not only from in-depth reports of interventions which were not recommended[2], but also from interventions which did not make it to the last stage.

  - You can reach out to Morgan Fairless, AIM's research director, to learn more, and ask for access to AIM's internal estimates.

Estimates from Rethink Priorities' cross-cause cost-effectiveness model are also methodologically aligned within each area, but they are not transparent. No information at all is provided about the inputs.

AIM's estimates concerning a given stage of a certain research round[3] will be especially comparable, as AIM often uses them in weighted factor models to inform which interventions to move to the next stage or recommend. So I think you would be better off looking into such sets of estimates than into one covering all my estimates.

Thanks, yams.

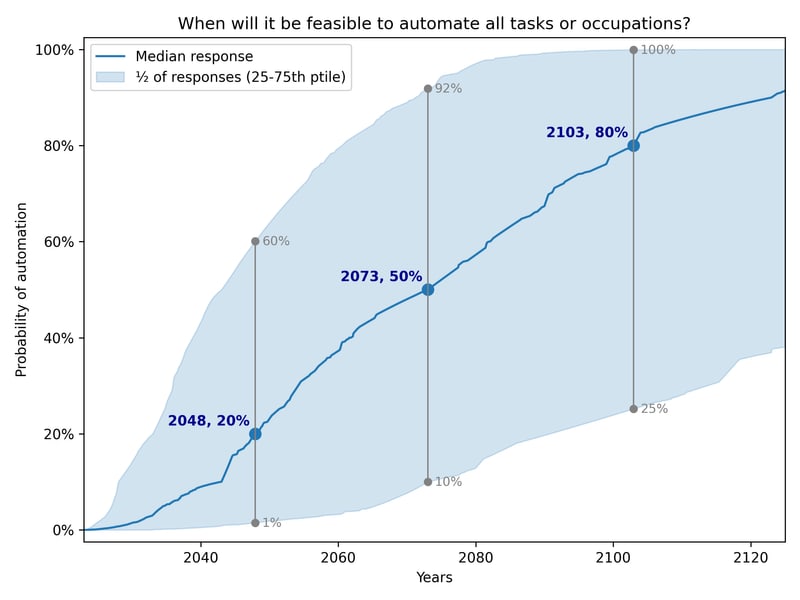

I am also potentially open to a bet where I transfer money now to the person bullish on AI timelines. I bet Greg Colbourn 10 k$ this way. However, I would have to trust the person betting with me more than in the case of the bet I linked to above. In this regard, money being less valuable after superintelligence (including due to a supposedly higher risk of death) has the net effect of moving the break-even resolution date forward. As I say in the post I linked to, "We can agree on another resolution date such that the bet is good for you". The resolution date I proposed (end of 2028) was supposed to make the bet just slightly positive for people bullish on AI timelines. However, my views are closer to those of the median expert in 2023, whose median date of full automation was 2073.

Thanks for sharing! I would not be surprised if the effects of global warming on wild animals were larger than the suffering of farmed animals. However, it is super unclear whether wild animals have positive or negative lives, including r-selected ones. So I think it makes sense to prioritise learning more about the effects on wild animals, such as by donating to the Wild Animal Initiative (WAI), instead of betting that a cooler world results in fewer animals with negative lives. More broadly, if climate change is super bad due to a specific problem (wild animal welfare, water scarcity, conflict, soil erosion, or other), I believe it is better to target that problem more directly/explicitly and without constraints instead of via decreasing greenhouse gas (GHG) emissions, which greatly narrows the range of available interventions.

@SummaryBot, have you considered summarising this post, which was just shared as a classic Forum post in the latest EA Forum Digest?

- Evaluating more fine-grained causes, respondents estimated that 26.8% should go to work focused on AI, 15.7% to Global health, 14.7% to Farm animal welfare, 10.2% to building EA and related communities, and 8.2% to Biosecurity (in addition to smaller percentages to many other causes).

So the respondents would like to see 1.82 (= 0.268/0.147) and 1.07 (= 0.157/0.147) times as many resources going into AI and global health as into farm animal welfare. Compared with actual funding, these numbers imply it is good to move donations from global health to farm animal welfare (which I agree with), and from farm animal welfare to AI (which I disagree with). Of the amount granted by Open Philanthropy in 2024, I estimate:

- 16.0 % went to farm animal welfare:

  - 2.59 % to "Alternatives to Animal Products".

  - 2.70 % to "Broiler Chicken Welfare".

  - 0.203 % to "Cage-Free Reforms".

  - 10.5 % to "Farm Animal Welfare".

  - 0.0136 % to "Fish Welfare".

- 46.6 % went to global health, 2.91 (= 0.466/0.160) times as much as to farm animal welfare (significantly more than 1.07 times as much):

  - 10.3 % to "GiveWell-Recommended Charities".

  - 1.83 % to "Global Aid Policy".

  - 0.172 % to "Global Health & Development".

  - 0.672 % to "Global Health & Wellbeing".

  - 12.2 % to "Global Health R&D".

  - 11.1 % to "Global Public Health Policy".

  - 5.25 % to "Human Health and Wellbeing".

  - 4.93 % to "Scientific Research".

  - 0.0926 % to "South Asian Air Quality".

- 17.8 % went to "Potential Risks from Advanced AI", 1.11 (= 0.178/0.160) times as much as to farm animal welfare (significantly less than 1.82 times as much). There are other focus areas which cover AI, but that is the major one, so the takeaway would remain the same.
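The comparison above can be sketched by dividing each area's share by the farm animal welfare share, once for the survey and once for Open Philanthropy's 2024 grants. Only the three headline shares from the text are used, the AI figure covers just the "Potential Risks from Advanced AI" focus area, and the dictionary keys and function name are hypothetical.

```python
# Shares quoted in the text: the survey respondents' preferred allocation and
# my estimates of Open Philanthropy's 2024 grants (fractions of the total).
survey = {"AI": 0.268, "global health": 0.157, "farm animal welfare": 0.147}
open_phil_2024 = {"AI": 0.178, "global health": 0.466, "farm animal welfare": 0.160}


def relative_to_farm_animal_welfare(shares: dict[str, float]) -> dict[str, float]:
    """Express each area's share as a multiple of the farm animal welfare share."""
    base = shares["farm animal welfare"]
    return {area: share / base for area, share in shares.items()}


preferred = relative_to_farm_animal_welfare(survey)
actual = relative_to_farm_animal_welfare(open_phil_2024)

for area in ("global health", "AI"):
    # Above the preferred ratio suggests shifting funding from this area to farm
    # animal welfare; below it suggests the opposite (under the respondents' views).
    side = "above" if actual[area] > preferred[area] else "below"
    print(f"{area}: preferred {preferred[area]:.2f}, actual {actual[area]:.2f} ({side} preferred)")
```

Global health comes out above the respondents' preferred ratio and AI below it, which is what drives the implied shifts from global health to farm animal welfare, and from farm animal welfare to AI.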

Hi David,

You and readers may be interested in Towards A Global Ban On Industrial Animal Agriculture By 2050: Legal Basis, Precedents, And Instruments.