Update: I, Nick Beckstead, no longer work at the Future Fund and am writing this update purely in a personal capacity. Since the Future Fund team has resigned and FTX has filed for bankruptcy, it now seems very unlikely that these prizes will be paid out. I'm very sad about the disruption that this may cause to contest participants.

I would encourage participants who were working on entries for this prize competition to save their work and submit it to Open Philanthropy's own AI Worldview Contest in 2023.

Today we are announcing a competition with prizes ranging from $15k to $1.5M for work that informs the Future Fund's fundamental assumptions about the future of AI, or is informative to a panel of superforecaster judges selected by Good Judgment Inc. These prizes will be open for three months—until Dec 23—after which we may change or discontinue them at our discretion. We have two reasons for launching these prizes.

First, we hope to expose our assumptions about the future of AI to intense external scrutiny and improve them. We think artificial intelligence (AI) is the development most likely to dramatically alter the trajectory of humanity this century, and it is consequently one of our top funding priorities. Yet our philanthropic interest in AI is fundamentally dependent on a number of very difficult judgment calls, which we think have been inadequately scrutinized by others.

As a result, we think it's really possible that:

- all of this AI stuff is a misguided sideshow,

- we should be even more focused on AI, or

- a bunch of this AI stuff is basically right, but we should be focusing on entirely different aspects of the problem.

If any of those three options is right—and we strongly suspect at least one of them is—we want to learn about it as quickly as possible because it would change how we allocate hundreds of millions of dollars (or more) and help us better serve our mission of improving humanity's longterm prospects.

Second, we are aiming to do bold and decisive tests of prize-based philanthropy, as part of our more general aim of testing highly scalable approaches to funding. We think these prizes contribute to that work. If these prizes work, it will be a large update in favor of this approach being capable of surfacing valuable knowledge that could affect our prioritization. If they don't work, that could be an update against this approach surfacing such knowledge (depending how it plays out).

The rest of this post will:

- Explain the beliefs that, if altered, would dramatically affect our approach to grantmaking

- Describe the conditions under which our prizes will pay out

- Describe in basic terms how we arrived at our beliefs and cover other clarifications

Prize conditions

On our areas of interest page, we introduce our core concerns about AI as follows:

We think artificial intelligence (AI) is the development most likely to dramatically alter the trajectory of humanity this century. AI is already posing serious challenges: transparency, interpretability, algorithmic bias, and robustness, to name just a few. Before too long, advanced AI could automate the process of scientific and technological discovery, leading to economic growth rates well over 10% per year (see Aghion et al 2017, this post, and Davidson 2021).

As a result, our world could soon look radically different. With the help of advanced AI, we could make enormous progress toward ending global poverty, animal suffering, early death and debilitating disease. But two formidable new problems for humanity could also arise:

- Loss of control to AI systems

Advanced AI systems might acquire undesirable objectives and pursue power in unintended ways, causing humans to lose all or most of their influence over the future.

- Concentration of power

Actors with an edge in advanced AI technology could acquire massive power and influence; if they misuse this technology, they could inflict lasting damage on humanity’s long-term future.

For more on these problems, we recommend Holden Karnofsky’s “Most Important Century,” Nick Bostrom’s Superintelligence, and Joseph Carlsmith’s “Is power-seeking AI an existential risk?”.

Here is a table identifying various questions about these scenarios that we believe are central, our current position on the question (for the sake of concreteness), and alternative positions that would significantly alter the Future Fund's thinking about the future of AI[1][2]:

| Proposition | Current position | Lower prize threshold | Upper prize threshold |
| --- | --- | --- | --- |
| “P(misalignment x-risk\|AGI)”: Conditional on AGI being developed by 2070, humanity will go extinct or drastically curtail its future potential due to loss of control of AGI | 15% | 7% | 35% |
| AGI will be developed by January 1, 2043 | 20% | 10% | 45% |
| AGI will be developed by January 1, 2100 | 60% | 30% | N/A |

Future Fund will award a prize of $500k to anyone who publishes analysis that moves these probabilities to the lower or upper prize threshold.[3] To qualify, please publish your work (or publish a post linking to it) on the Effective Altruism Forum, the AI Alignment Forum, or LessWrong with a "Future Fund worldview prize" tag. You can also participate in the contest by publishing your submission somewhere else (e.g. arXiv or your blog) and filling out this submission form. We will then linkpost/crosspost to your submission on the EA Forum.

We will award larger prizes for larger changes to these probabilities, as follows:

- $1.5M for moving “P(misalignment x-risk|AGI)” below 3% or above 75%

- $1.5M for moving “AGI will be developed by January 1, 2043” below 3% or above 75%

We will award prizes of intermediate size for intermediate updates at our discretion.
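As a reading aid, the headline thresholds above can be written out as a small lookup. This is only a sketch of the stated rules: the function and dictionary names are hypothetical, the treatment of a probability landing exactly on a threshold is an assumption, and the discretionary intermediate prizes are deliberately not modeled.

```python
# Thresholds copied from the table and the $1.5M conditions in the post.
# None means the post gives no threshold on that side (N/A).
THRESHOLDS = {
    "misalignment_xrisk_given_agi": {"lower": 0.07, "upper": 0.35, "big_lower": 0.03, "big_upper": 0.75},
    "agi_by_2043": {"lower": 0.10, "upper": 0.45, "big_lower": 0.03, "big_upper": 0.75},
    "agi_by_2100": {"lower": 0.30, "upper": None, "big_lower": None, "big_upper": None},
}


def headline_prize(proposition, new_probability):
    """Return the headline prize (in dollars) implied by the stated thresholds.

    Assumption: "below 3% or above 75%" is read as strict, and reaching a
    $500k threshold exactly counts. Intermediate prizes are discretionary
    in the post, so this sketch returns 0 for anything short of a threshold.
    """
    t = THRESHOLDS[proposition]
    if t["big_lower"] is not None and new_probability < t["big_lower"]:
        return 1_500_000
    if t["big_upper"] is not None and new_probability > t["big_upper"]:
        return 1_500_000
    if new_probability <= t["lower"]:
        return 500_000
    if t["upper"] is not None and new_probability >= t["upper"]:
        return 500_000
    return 0
```

For example, under this reading `headline_prize("agi_by_2043", 0.08)` falls in the $500k band, while a move to 0.25 on the same proposition triggers no headline prize and would be left to the discretionary intermediate awards.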

We are also offering:

- A $200k prize for publishing any significant original analysis[4] which we consider the new canonical reference on any one of the above questions, even if it does not move our current position beyond a relevant threshold. Past works that would have qualified for this prize include: Yudkowsky 2008, Superintelligence, Cotra 2020, Carlsmith 2021, and Karnofsky's Most Important Century series. (While the above sources are lengthy, we'd prefer to offer a prize for a brief but persuasive argument.)

- A $200k prize for publishing any analysis which we consider the canonical critique of the current position highlighted above on any of the above questions, even if it does not move our position beyond a relevant threshold. Past works that might have qualified for this prize include: Hanson 2011, Karnofsky 2012, and Garfinkel 2021.

- At a minimum, we will award $50k to the three published analyses that most inform the Future Fund's overall perspective on these issues, and $15k for the next 3-10 most promising contributions to the prize competition. (I.e., we will award a minimum of 6 prizes. If some of the larger prizes are claimed, we may accordingly award fewer of these prizes.)

As a check/balance on our reasonableness as judges, a panel of superforecaster judges will independently review a subset of highly upvoted/nominated contest entries with the aim of identifying any contestant who did not receive a prize, but would have if the superforecasters were running the contest themselves (e.g., an entrant that sufficiently shifted the superforecasters’ credences).

- For the $500k-$1.5M prizes, if the superforecasters think an entrant deserved a prize but we didn’t award one, we will award $200k (or more) for up to one entrant in each category (existential risk conditional on AGI by 2070, AGI by 2043, AGI by 2100), upon recommendation of the superforecaster judge panel.

- For the $15k-200k prizes, if the superforecasters think an entrant deserved a prize but we didn’t award one, we will award additional prizes upon recommendation of the superforecaster judge panel.

The superforecaster judges will be selected by Good Judgment Inc. and will render their verdicts autonomously. While superforecasters have only been demonstrated to have superior prediction track records for shorter-term events, we think of them as a lay jury of smart, calibrated, impartial people.

Our hope is that potential applicants who are confident in the strength of their arguments, but skeptical of our ability to judge impartially, will nonetheless believe that the superforecaster jury will plausibly judge their arguments fairly. After all, entrants could reasonably doubt that people who have spent tens of millions of dollars funding this area would be willing to acknowledge it if that turned out to be a mistake.

Details and fine print

- Only original work published after our prize is announced is eligible to win.

- We do not plan to read everything written with the aim of claiming these prizes. We plan to rely in part on the judgment of other researchers and people we trust when deciding what to seriously engage with. We also do not plan to explain in individual cases why we did or did not engage seriously.

- If you have questions about the prizes, please ask them as comments on this post. We do not plan to respond to individual questions over email.

- All prizes will be awarded at the final discretion of the Future Fund. Our published decisions will be final and not subject to appeal. We also won't be able to explain in individual cases why we did not offer a prize.

- Prizes will be awarded equally to coauthors unless the post indicates some other split. At our discretion, the Future Fund may provide partial credit across different entries if they together trigger a prize condition.

- If a single person does research leading to multiple updates, Future Fund may—at its discretion—award the single largest prize for which the analysis is eligible (rather than the sum of all such prizes).

- We will not offer awards to any analysis that we believe was net negative to publish due to information hazards, even if it moves our probabilities significantly and is otherwise excellent.

- At most one prize will be awarded for each of the largest prize categories ($500k and $1.5M). (If e.g. two works convince us to assign < 3% subjective probability in AGI being developed in the next 20 years, we’ll award the prize to the most convincing piece (or split in case of a tie).)

For the first two weeks after it is announced—until October 7—the rules and conditions of the prize competition may be changed at the discretion of the Future Fund. After that, we reserve the right to clarify the conditions of the prizes wherever they are unclear or have wacky unintended results.

Information hazards

Please be careful not to publish information that would be net harmful to publish. We think people should not publish very concrete proposals for how to build AGI (if they know of them), or things that are too close to that.

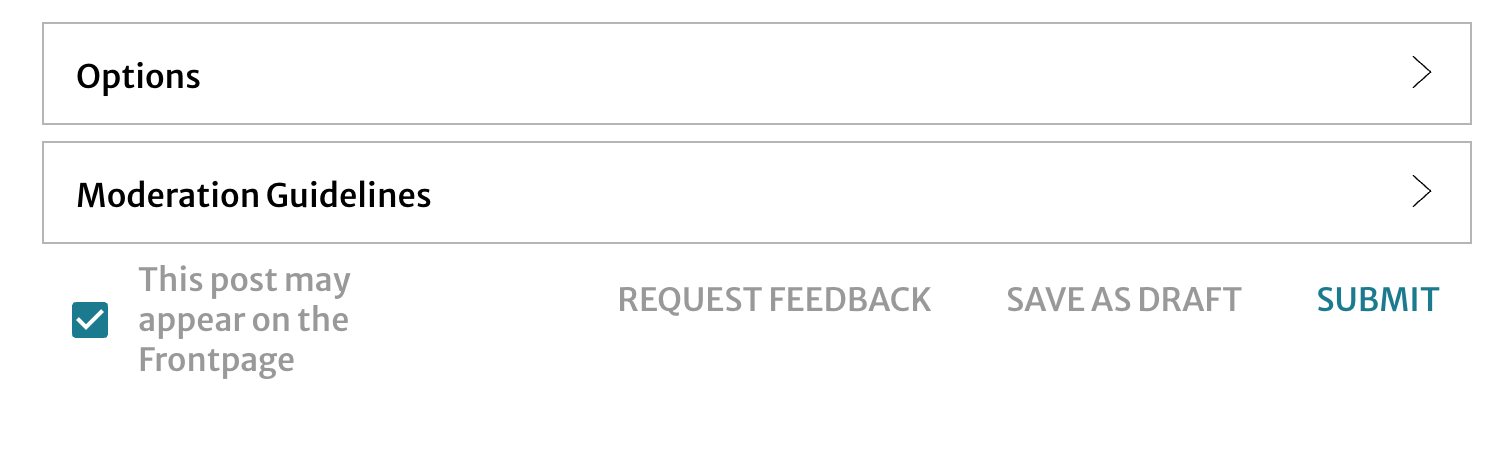

If you are worried publishing your analysis would be net harmful due to information hazards, we encourage you to a) write your draft and then b) ask about this using the “REQUEST FEEDBACK” feature on the Effective Altruism forum or LessWrong pages (appears on the draft post page, just before you would normally publish a post):

The moderators have agreed to help with this.

If you feel strongly that your analysis should not be made public due to information hazards, you may submit your prize entry through this form.

Some clarifications and answers to anticipated questions

What do you mean by AGI?

Imagine a world where cheap AI systems are fully substitutable for human labor. E.g., for any human who can do any job, there is a computer program (not necessarily the same one every time) that can do the same job for $25/hr or less. This includes entirely AI-run companies, with AI managers and AI workers and everything being done by AIs.

- How large of an economic transformation would follow? Our guess is that it would be pretty large (see Aghion et al 2017, this post, and Davidson 2021), but - to the extent it is relevant - we want people competing for this prize to make whatever assumptions seem right to them.

For purposes of our definitions, we’ll count it as AGI being developed if there are AI systems that power a comparably profound transformation (in economic terms or otherwise) as would be achieved in such a world. Some caveats/clarifications worth noticing:

- A comparably large economic transformation could be achieved even if the AI systems couldn’t substitute for literally 100% of jobs, including providing emotional support. E.g., Karnofsky’s notion of PASTA would probably count (though that is an empirical question), and possibly some other things would count as well.

- If weird enough things happened, the metric of GWP might stop being indicative in the way it normally is, so we want to make sure people are thinking about the overall level of weirdness rather than being attached to a specific measure or observation. E.g., causing human extinction or drastically limiting humanity’s future potential may not show up as rapid GDP growth, but automatically counts for the purposes of this definition.

Why are you starting with such large prizes?

We really want to get closer to the truth on these issues quickly. Better answers to these questions could prevent us from wasting hundreds of millions of dollars (or more) and years of effort on our part.

We could start with smaller prizes, but we’re interested in running bold and decisive tests of prizes as a philanthropic mechanism.

A further consideration is that sometimes people argue that all of this futurist speculation about AI is really dumb, and that its errors could be readily explained by experts who can't be bothered to seriously engage with these questions. These prizes will hopefully test whether this theory is true.

Can you say more about why you hold the views that you do on these issues, and what might move you?

I (Nick Beckstead) will answer these questions on my own behalf without speaking for the Future Fund as a whole.

For "Conditional on AGI being developed by 2070, humanity will go extinct or drastically curtail its future potential due to loss of control of AGI." I am pretty sympathetic to the analysis of Joe Carlsmith here. I think Joe's estimates of the relevant probabilities are pretty reasonable (though the bottom line is perhaps somewhat low) and if someone convinced me that the probabilities on the premises in his argument should be much higher or lower I'd probably update. There are a number of reviews of Joe Carlsmith's work that were helpful to varying degrees but would not have won large prizes in this competition.

For assigning odds to AGI being developed in the next 20 years, I am blending a number of intuitive models to arrive at this estimate. They are mostly driven by a few high-level considerations:

- I think computers will eventually be able to do things brains can do. I've believed this for a long time, but if I were going to point to one article as a reference point I'd choose Carlsmith 2020.

- Priors that seem natural to me ("beta-geometric distributions") start us out with non-trivial probability of developing AGI in the next 20 years, before considering more detailed models. I've also believed this for a long time, but I think Davidson 2021's version is the best, and he gives 8% to AGI by 2036 through this method as a central estimate.

- I assign substantial probability to continued hardware progress, algorithmic progress, and other progress that fuels AGI development over the coming decades. I'm less sure this will continue many decades into the future, so I assign somewhat more probability to AGI in sooner decades than later decades.

- Under these conditions, I think we'll pass some limits—e.g. approaching hardware that's getting close to as good as we're ever going to get—and develop AGI if we're ever going to develop it.

- I'm extremely uncertain about the hardware requirements for AGI (at the point where it's actually developed by humans), to a point where my position is roughly "I dunno, log uniform distribution over anything from the amount of compute used by the brain to a few orders of magnitude less than evolution." Cotra 2020—which considers this question much more deeply—has a similar bottom line on this. (Though her updated timelines are shorter.)

- I'm impressed by the progress in deep learning to the point where I don't think we can rule out AGI even in the next 5-10 years, but I'm not impressed enough by any positive argument for such short timelines to move dramatically away from any of the above models.

(I'm heavily citing reports from Open Philanthropy here because a) I think they did great work and b) I'm familiar with it. I also recommend this piece by Holden Karnofsky, which brings a lot of this work—and other work—together.)
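To illustrate what a "beta-geometric" prior looks like in practice, here is a minimal sketch. It treats each calendar year as a trial with an unknown success probability drawn from a Beta(alpha, beta) prior, then updates on the years that have passed without AGI. The parameter values in the usage note are illustrative assumptions, not the inputs used in Davidson 2021, and the function name is hypothetical.

```python
def prob_success_within(n_future, n_failed, alpha=1.0, beta=1.0):
    """P(first success within the next n_future trials | n_failed failures so far).

    Model: per-trial success probability p ~ Beta(alpha, beta), trials are
    independent given p. After n_failed failures the posterior is
    Beta(alpha, beta + n_failed), and each further failure adds 1 to beta.
    """
    p_no_success = 1.0
    for i in range(n_future):
        b = beta + n_failed + i
        # Posterior predictive probability that the next trial also fails.
        p_no_success *= b / (alpha + b)
    return 1.0 - p_no_success
```

With alpha = 1 the product telescopes and the result is n_future / (beta + n_failed + n_future). So, assuming purely for illustration 66 failed yearly trials and a uniform prior (alpha = beta = 1), the chance of success in the next 20 years is 20/87; a more skeptical prior with a larger beta lowers that figure. The point is only that priors of this family leave non-trivial probability on the next few decades.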

In short, you can roughly model me as having a trapezoidal probability density function over developing AGI from now to 2100, with some long tail extending beyond that point. There is about 2x as much weight at the beginning of the distribution as there is at the end of the century. The long tail includes a) insufficient data/hardware/humans not smart enough to solve it yet, b) technological stagnation/hardware stagnation, and c) reasons it's hard that I haven't thought of. The microfoundation of the probability density function could be: a) exponentially increasing inputs to AGI, b) log returns to AGI development on the key inputs, c) pricing in some expected slowdown in the exponentially increasing inputs over time, and d) slow updating toward increased difficulty of the problem as time goes on, but I stand by the distribution more than the microfoundation.
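The trapezoidal shape can be checked against the stated numbers with a short calculation. The sketch below assumes a density that declines linearly from the start year to 2100, with twice the height at the start as at the end, and total mass of 60% by 2100 (the current position in the table). The start year of 2022 and the function name are assumptions made for illustration.

```python
def trapezoid_cdf(year, start=2022.0, end=2100.0, mass_by_end=0.60, ratio=2.0):
    """Cumulative probability of AGI by `year` under a linearly declining density.

    The density is f(t) = a * (ratio - (ratio - 1) * t / span) for t in
    [0, span], so f(0) = ratio * a and f(span) = a. The long tail beyond
    `end` is not modeled; years past `end` return mass_by_end.
    """
    span = end - start
    t = min(max(year - start, 0.0), span)
    # Total mass over [0, span] is a * span * (ratio + 1) / 2.
    a = mass_by_end / (span * (ratio + 1) / 2)
    # Integral of f from 0 to t.
    return a * (ratio * t - (ratio - 1) * t * t / (2 * span))
```

Under these assumptions, `trapezoid_cdf(2043)` comes out at roughly 0.20, which lines up with the 20% current position on AGI by January 1, 2043.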

What do you think could substantially alter your views on these issues?

We don't know. Most of all we'd just like to see good arguments for specific quantitative answers to the stated questions. Some other thoughts:

- We like it when people state cleanly summarizable, deductively valid arguments and carefully investigate the premises leading to the conclusion (analytic philosopher style). See e.g. Carlsmith 2021.

- We also like it when people quantify their subjective probabilities explicitly. See e.g. Superforecasting by Phil Tetlock.

- We like a lot of the features described here by Luke Muehlhauser, though they are not necessary to be persuasive.

- We like it when people represent opposing points of view charitably, and avoid appeals to authority.

- We think it could be pretty persuasive to us if some (potentially small) group of relevant technical experts arrived at and explained quite different conclusions. It would be more likely to be persuasive if they showed signs of comfort thinking in terms of subjective probability and calibration. Ideally they would clearly explain the errors in the best arguments cited in this post.

These are suggestions for how to be more likely to win the prize, but not requirements or guarantees.

Who do we have to convince in order to claim the prize?

Final decisions will be made at the discretion of the Future Fund. We plan to rely in part on the judgment of other researchers and people we trust when deciding what to seriously engage with. Probably, someone winning a large prize looks like them publishing their arguments, those arguments getting a lot of positive attention / being flagged to us by people we trust, us seriously engaging with those arguments (probably including talking to the authors), and then changing our minds.

Are these statistically significant probabilities grounded in detailed published models that are confirmed by strong empirical regularities that you're really confident in?

No. They are what we would consider fair betting odds.

This is a consequence of the conception of subjective probability that we are working with. As stated above in a footnote: "We will pose many of these beliefs in terms of subjective probabilities, which represent betting odds that we consider fair in the sense that we'd be roughly indifferent between betting in favor of the relevant propositions at those odds or betting against them." For more on this conception of probability I recommend The Logic of Decision by Richard Jeffrey or this SEP entry.

Applicants need not agree with or use our same conception of probability, but hopefully these paragraphs help them understand where we are coming from.
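The "fair betting odds" reading can be made concrete with a one-line expected value. In the sketch below (function name and ticket framing are illustrative assumptions), a ticket pays a fixed amount if the proposition is true; a price is fair for someone with a given credence when neither buying nor selling the ticket has positive expected profit.

```python
def expected_profit(price, payout, credence, side="for"):
    """Expected profit from a ticket that pays `payout` if the proposition is true.

    side="for" buys the ticket at `price`; side="against" sells it at `price`.
    """
    ev_buy = credence * payout - price
    return ev_buy if side == "for" else -ev_buy
```

With a credence of 15%, a ticket paying $1 is fairly priced at $0.15: both `expected_profit(0.15, 1.0, 0.15, "for")` and the "against" side are zero. At a price of $0.10 the same person would prefer to bet in favor, and at $0.20 to bet against.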

Why do the prizes only get awarded for large probability changes?

We think that large probability changes would have much clearer consequences for our work, and be much easier to recognize. We also think that aiming for changes of this size is less common and has higher expected upside, so we want to attract attention to it.

Why is the Future Fund judging this prize competition itself?

Our intent in judging the prize ourselves is not to suggest that our judgments should be treated as correct / authoritative by others. Instead, we're focused on our own probabilities because we think that is what will help us to learn as much as possible.

Additional terms and conditions

- Employees of FTX Foundation and contest organizers are not eligible to win prizes.

- Entrants and Winners must be over the age of 18, or have parental consent.

- By entering the contest, entrants agree to the Terms & Conditions.

- All taxes are the responsibility of the winners.

- The legality of accepting the prize in his or her country is the responsibility of the winners. Sponsor may confirm the legality of sending prize money to winners who are residents of countries outside of the United States.

- Winners will be notified in a future blogpost.

- Winners grant to Sponsor the right to use their name and likeness for any purpose arising out of or related to the contest. Winners also grant to Sponsor a non-exclusive royalty-free license to reprint, publish and/or use the entry for any purpose arising out of or related to the contest, including linking to or re-publishing the work.

- Entrants warrant that they are eligible to receive the prize money from any relevant employer or from a contract standpoint.

- Entrants agree that FTX Philanthropy and its affiliates shall not be liable to entrants for any type of damages that arise out of or are related to the contest and/or the prizes.

- By submitting an entry, entrant represents and warrants that, consistent with the terms of the Terms and Conditions: (a) the entry is entrant’s original work; (b) entrant owns any copyright applicable to the entry; (c) the entry does not violate, in whole or in part, any existing copyright, trademark, patent or any other intellectual property right of any other person, organization or entity; (d) entrant has confirmed and is unaware of any contractual obligations entrant has which may be inconsistent with these Terms and Conditions and the rights entrant is required to have in the entry, including but not limited to any prohibitions, obligations or limitations arising from any current or former employment arrangement entrant may have; (e) entrant is not disclosing the confidential, trade secret or proprietary information of any other person or entity, including any obligation entrant may have in connection arising from any current or former employment, without authorization or a license; and (f) entrant has full power and all legal rights to submit an entry in full compliance with these Terms and Conditions.

[1] We will pose many of these beliefs in terms of subjective probabilities, which represent betting odds that we consider fair in the sense that we'd be roughly indifferent between betting in favor of the relevant propositions at those odds or betting against them.

[2] For the sake of definiteness, these are Nick Beckstead’s subjective probabilities, and they don’t necessarily represent the Future Fund team as a whole or its funders.

[3] It might be argued that this makes the prize encourage people to have views different from those presented here. This seems hard to avoid, since we are looking for information that changes our decisions, which requires changing our beliefs. People who hold views similar to ours can, however, win the $200k canonical reference prize.

[4] A slight update/improvement on something that would have won the prize in the past (e.g. this update by Ajeya Cotra) does not automatically qualify due to being better than the existing canonical reference. Roughly speaking, the update would need to be sufficiently large that the new content would be prize-worthy on its own.

Do you believe some statement of this form?

"FTX Foundation will not get submissions that change its mind, but it would have gotten them if only they had [fill in the blank]"

E.g., if only they had…

Even better would be a statement of the form:

If you think one of these statements or some other is true, please tell me what it is! I'd love to hear your pre-mortems, and fix the things I can (when sufficiently compelling and simple) so that we can learn as much as possible from this competition!

I also think predictions of this form will help with our learning, even if we don't have time/energy to implement the changes in question.

I don't have anything great, but the best thing I could come up with was definitely "I feel most stuck because I don't know what your cruxes are".

I started writing a case for why I think AI X-Risk is high, but I really didn't know whether the things I was writing were going to be hitting at your biggest uncertainties. My sense is you probably read most of the same arguments that I have, so our difference in final opinion is probably generated by some other belief that you have that I don't, and I don't really know how to address that preemptively.

I might give it a try anyways, and this doesn't feel like a defeater, but in this space it's the biggest thing that came to mind.

Thanks! The part of the post that was supposed to be most responsive to this on size of AI x-risk was this:

I think explanations of how Joe's probabilities should be different would help. Alternatively, an explanation of why some other set of propositions was relevant (with probabilities attached and mapped to a conclusion) could help.

FWIW, I would prefer a post on "what actually drives your probabilities" over a "what are the reasons that you think will be most convincing to others".

...if they had explained why their views were not moved by the expert reviews OpenPhil has already solicited.

In "AI Timelines: Where the Arguments, and the 'Experts,' Stand," Karnofsky writes:

The footnote text reads, in part:

Many of these reviewers disagree strongly with the reports under review.

Davidson 2021 on semi-informative priors received three reviews.

By my judgment, all three made strong negative assessments, in the sense (among others) that if one agreed with the review, one would not use the report's reasoning to inform decision-making in the manner advocated by Karnofsky (and by Beckstead).

From Hajek and Strasser's review:

...

I included responses to each review, explaining my reactions to it. What kind of additional explanation were you hoping for?

For Hajek&Strasser's and Halpern’s reviews, I don't think "strong negative assessment" is supported by your quotes. The quotes focus on things like 'the reported numbers are too precise' and 'we should use more than a single probability measure' rather than whether the estimate is too high or too low overall or whether we should be worrying more vs less about TAI. I also think the reviews are more positive overall than you imply, e.g. Halpern's review says "This seems to be the most serious attempt to estimate when AGI will be developed that I’ve seen"

...

I attach less than 50% credence to this belief, but probably more than to the existing alternative hypotheses:

Given 6 months or a year for people to submit to the contest rather than 3 months.

I think forming coherent worldviews takes a long time, most people have day jobs or school, and even people who have the flexibility to take weeks or a month off to work on this full-time probably need some warning to arrange this with their work. Also, some ideas take time to mull over, so you benefit from having the work spread over more calendar time even when the total hours spent are the same.

As presented, I think this prize contest is best suited for people who a) basically have the counterarguments in mind/in verbal communication but have never bothered to write them down or b) have a draft argument sitting in a folder somewhere and have never gotten around to publishing it. In that model, the best counterarguments are already "laying there" in somebody's head or computer and just need some incentives for people to make them rigorous.

However, if the best counterarguments are currently confu...

You might already be planning on doing this, but it seems like you increase the chance of getting a winning entry if you advertise this competition in a lot of non-EA spaces. I guess especially technical AI spaces, e.g. labs and universities. Maybe also try advertising outside the US/UK. Given the size of the prize it might be easy to get people to pass on the advertisement among their groups. (Maybe there's a worry about getting flak somehow for this, though. It also increases overhead, since there would be more entries to read, though it sounds like you have some systems set up for that, which is great.)

In the same vein I think trying to lower the barriers to entry having to do with EA culture could be useful - e.g. +1 to someone else here talking about allowing posting places besides EAF/LW/AF, but also maybe trying to have some consulting researchers/judges who find it easier/more natural to engage in non-analytic-philosophy-style arguments.

… if only they had allowed people not to publish on EA Forum, LessWrong, and Alignment Forum :)

Honestly, it seems like a mistake to me to not allow other ways of submission. For example, some people may not want to publicly apply for a prize or be associated with our communities. An additional submission form might help with that.

Related to this, I think some aspects of the post were predictably off-putting to people who aren't already in these communities - examples include the specific citations* used (e.g. Holden's post which uses a silly sounding acronym [PASTA], and Ajeya's report which is in the unusual-to-most-people format of several Google Docs and is super long), and a style of writing that likely comes off as strange to people outside of these communities ("you can roughly model me as"; "all of this AI stuff").

*some of this critique has to do with the state of the literature, not just the selection thereof. But insofar as there is a serious interest here in engaging with folks outside of EA/rationalists/longtermists (not clear to me if this is the case), then either the selections could have been more careful or caveated, or new ones could have been created.

I really think you need to commit to reading everyone's work, even if it's an intern skimming it for 10 minutes as a sifting stage.

The way this is set up now - ideas proposed by unknown people in community are unlikely to be engaged with, and so you won't read them.

Look at the recent Cause Exploration Prizes. Half the winners had essentially no karma/engagement and were not forecasted to win. If Open Philanthropy hadn't committed to reading them all, they could easily have been missed.

Personally, yes I am much less likely to write something and put effort in if I think no one will read it.

Could you put some judges on the panel who are a bit less worried about AI risk than your typical EA would be? EA opinions tend to cluster quite strongly around an area of conceptual space that many non-EAs do not occupy, and it is often hard for people to evaluate views that differ radically from their own. Perhaps one of the superforecasters could be put directly onto the judging panel, pre-screening for someone who is less worried about AI risk.

"FTX Foundation will not get submissions that change its mind, but it would have gotten them if only they had [broadened the scope of the prizes beyond just influencing their probabilities]"

Examples of things someone considering entering the competition would presumably consider out of scope are:

- Making a case that AI misalignment is the wrong level of focus – even if AI risks are high it could be that AI risks and other risks are very heavily weighted towards specific risk factor scenarios, such as a global hot or cold war. This view is apparently expressed by Will (see here).

- Making a case based on tractability – that a focus on AI risk is misguided as the ability to affect such risks is low (not too far away from the views of Yudkowsky here).

- Making the case that we should not put much decision weight on future predictions of risks – e.g. because long-run predictions of future technology are inevitably unreliable (see here), or because modern risk assessment best practice says that probability estimates should only play a limited role in risk assessments (my view expressed here), or for other reasons.

- Making the case that some other x-risk is more pressing, more likely, more tractable, e

...

Ehh, the above is too strong, but:

your reward schedule rewarded smaller shifts in proportion to how much they moved your probabilities (e.g., $X per bit).

E.g., as it is now, if two submissions together move you across a threshold, it would seem as if:

and both seem suboptimal.

e.g., if you get information in one direction from one submission, but also information from another submission in another direction, and they cancel out, neither gets a reward. This is particularly annoying if it makes getting-a-prize-or-not depending on the order of submissions.

e.g., because individual people's marginal utility of money is diminishing, a 10% chance of reaching your threshold and getting $X will be way less valuable to participants than moving your opinion around 10% of the way to a threshold and getting $X/10.
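A minimal sketch of the diminishing-marginal-utility point above, assuming a log-utility participant. The wealth and prize figures are hypothetical and chosen only for illustration; the contest does not specify them.

```python
import math

def expected_log_utility(wealth, outcomes):
    """Expected log-utility of wealth plus a random prize.

    outcomes: list of (probability, prize) pairs whose probabilities sum to 1.
    Log utility is an assumed stand-in for diminishing marginal utility.
    """
    return sum(p * math.log(wealth + prize) for p, prize in outcomes)

wealth = 50_000   # hypothetical baseline wealth of a participant
prize = 500_000   # hypothetical threshold prize ($X)

# Option A: 10% chance of crossing the threshold and winning $X.
lottery = expected_log_utility(wealth, [(0.1, prize), (0.9, 0)])

# Option B: a certain $X/10 for moving the funder 10% of the way.
certain = expected_log_utility(wealth, [(1.0, prize / 10)])

print(f"lottery: {lottery:.4f}, certain: {certain:.4f}")
```

Both options have the same expected dollar value, but under any concave utility function the certain payment comes out ahead, which is the sense in which the threshold prize is "way less valuable to participants".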

e.g., if someone has information which points ...

On the face of it an update 10% of the way towards a threshold should only be about 1% as valuable to decision-makers as an update all the way to the threshold.

(Two intuition pumps for why this is quadratic: a tiny shift in probabilities only affects a tiny fraction of prioritization decisions and only improves them by a tiny amount; or getting 100 updates of the size 1% of the way to a threshold is super unlikely to actually get you to a threshold since many of them are likely to cancel out.)
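The second intuition pump can be sketched numerically. This assumes, purely for illustration, that each small update is independent and equally likely to point in either direction; the function names are hypothetical.

```python
import math

def rms_net_shift(n_updates, step):
    """Root-mean-square net shift after n independent +/-step updates."""
    return step * math.sqrt(n_updates)

def prob_net_at_least(n_updates, k_net):
    """P(#up - #down >= k_net) when each update is a fair coin flip."""
    # ups - (n - ups) >= k_net  <=>  ups >= (n + k_net) / 2
    min_ups = math.ceil((n_updates + k_net) / 2)
    favourable = sum(math.comb(n_updates, u) for u in range(min_ups, n_updates + 1))
    return favourable / 2 ** n_updates

# 100 updates, each 1% of the distance to the threshold.
typical = rms_net_shift(100, 0.01)
all_the_way = prob_net_at_least(100, 100)

print(f"typical net shift: {typical:.2f} of the way to the threshold")
print(f"chance of reaching the threshold: {all_the_way:.2e}")
```

Under these assumptions the typical net movement grows with the square root of the number of updates, so 100 such updates usually net out to roughly 10% of the way rather than 100%.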

However you might well want to pay for information that leaves you better informed even if it doesn't change decisions (in expectation it could change future decisions).

Re. arguments split across multiple posts, perhaps it would be ideal to first decide the total prize pool depending on the value/magnitude of the total updates, and then decide on the share of credit allocation for the updates. I think that would avoid the weirdness about post order or incentivizing either bundling/unbundling considerations, while still paying out appropriately more for very large updates.

Sorry I don't have a link. Here's an example that's a bit more spelled out (but still written too quickly to be careful):

Suppose there are two possible worlds, S and L (e.g. "short timelines" and "long timelines"). You currently assign 50% probability to each. You invest in actions which help with either until your expected marginal returns from investment in either are equal. If the two worlds have the same returns curves for actions on both, then you'll want a portfolio which is split 50/50 across the two (if you're the only investor; otherwise you'll want to push the global portfolio towards that).

Now suppose you update so that S is 1% more likely (51%, with L at 49%).

This changes your estimate of the value of marginal returns on S and on L. You rebalance the portfolio until the marginal returns are equal again -- which has 51% spending on S and 49% spending on L.

So you eliminated the marginal 1% spending on L and shifted it to a marginal 1% spending on S. How much better spent, on average, was the reallocated capital compared to before? Around 1%. So you got a 1% improvement on 1% of your spending.

If you'd made a 10% update you'd get roughly a 10% improvement on 10% of your spending. If you updated all the way to certainty on S you'd get to shift all of your money into S, and it would be a big improvement for each dollar shifted.
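The arithmetic of this example can be written out as a rough sketch. It assumes the simplified model used above (the share of spending moved and the average improvement per dollar moved are both proportional to the size of the update); `value_of_update` is a hypothetical name, not anything from the post.

```python
def value_of_update(update_fraction):
    """Rough gain from rebalancing after an update, in arbitrary units.

    Assumes the share of spending reallocated and the average improvement
    per reallocated dollar are each proportional to the update size.
    """
    reallocated_share = update_fraction
    improvement_per_dollar = update_fraction
    return reallocated_share * improvement_per_dollar

small = value_of_update(0.01)   # 1% improvement on 1% of spending
medium = value_of_update(0.10)  # 10% improvement on 10% of spending
full = value_of_update(1.00)    # update all the way to the threshold

print(f"10% update relative to a full update: {medium / full:.2%}")
print(f"10% update relative to a 1% update: {medium / small:.0f}x")
```

In this toy model a 10% update is worth about 1% of a full update and about 100 times a 1% update, which is the quadratic relationship described earlier in the thread.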

I think that the post should explain briefly, or even just link to, what a “superforecaster” is. And if possible explain how and why this serves an independent check.

The superforecaster panel is imo a credible signal of good faith, but people outside of the community may think “superforecasters” just means something arbitrary and/or weird and/or made up by FTX.

(The post links to Tetlock’s book, but not in the context of explaining the panel)

I would have also suggested a prize that generally confirms your views, but with an argument that you consider superior to your previous beliefs.

This prize is similar to the bias of printing research that claims something new rather than confirming previous research.

That would also resolve any particular bias baked into the process that compels people to convince you that you have to update instead of actually figuring out what they actually think is right.

Sure (with a ton of work), though it would almost entirely consist of pointing to others' evidence and arguments (which I assume Nick would be broadly familiar with but would find less persuasive than I do, so maybe this project also requires imagining all the reasons we might disagree and responding to each of them...).

This is an excellent idea and seems like a good use of money, and the sort of thing that large orgs should do more of.

It looks to me like there is a gap in the space of mind-changing arguments that the prizes cover. The announcement raises the possibility that "a bunch of this AI stuff is basically right, but we should be focusing on entirely different aspects of the problem." But it seems to me that if someone successfully argues for this position, they won't be able to win any of the offered prizes.

Relatedly, if someone argues "AI is as important as you think, but some other cause is even more important than AI risk and you should be allocating more to it", I don't think this would win a prize, but it seems deserving of one.

(But it does seem harder to determine the winning criteria for prizes on those types of arguments.)

After thinking some more, it also occurs to me that it would be easier to change your prioritization by changing your beliefs about expected tractability. For example, shifting P(misalignment x-risk|AGI) from 15% to 1.5% would be very hard, but my intuition is that shifting your subjective {expected total money required to solve AI alignment} by a factor of 10 would be significantly easier, and both have the same effect on the cost-effectiveness of AI work.

On the other hand, total money to solve AI alignment might be the wrong metric. Perhaps you expect it only costs (say) $1 billion, which is well within your budget, but that it costs 20 person-years of senior grantmaker time to allocate the money correctly. In that case, a 10x change in cost-effectiveness matters less than 10x (it still matters somewhat because higher cost-effectiveness means you can afford to spend less time thinking about which grants to make, and vice versa).
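A quick check of the equivalence claimed above, setting aside the grantmaker-time caveat. It assumes a deliberately crude model in which cost-effectiveness is proportional to the risk addressed divided by the money needed; the $1 billion figure is the illustrative one from the comment, and `cost_effectiveness` is a hypothetical helper.

```python
import math

def cost_effectiveness(p_xrisk, cost_to_solve):
    """Risk addressed per dollar, under a crude proportional model."""
    return p_xrisk / cost_to_solve

baseline = cost_effectiveness(0.15, 1e9)

# Option 1: P(misalignment x-risk|AGI) drops from 15% to 1.5%.
lower_risk = cost_effectiveness(0.015, 1e9)

# Option 2: the expected cost of solving alignment rises tenfold.
higher_cost = cost_effectiveness(0.15, 1e10)

print(math.isclose(lower_risk, higher_cost))
print(f"both are {baseline / lower_risk:.0f}x less cost-effective than baseline")
```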

Thanks for the feedback! This is an experiment, and if it goes well we might do more things like it in the future. For now, we thought it was best to start with something that we felt we could communicate and judge relatively cleanly.

tldr: Another way to signal-boost this competition might be through prestige and not just money, by including some well-known people as judges, such as Elon Musk, Vitalik Buterin, or Steven Pinker.

One premise here is that big money prizes can be highly motivating, and can provoke a lot of attention, including from researchers/critics who might not normally take AI alignment very seriously. I agree.

But, if Future Fund really wants maximum excitement, appeal, and publicity (so that the maximum number of smart people work hard to write great stuff), then apart from the monetary prize, it might be helpful to maximize the prestige of the competition, e.g. by including a few 'STEM celebrities' as judges.

For example, this could entail recruiting a few judges like tech billionaires Elon Musk, Jeff Bezos, Sergey Brin, Tim Cook, Ma Huateng, Ding Lei, or Jack Ma, crypto leaders such as Vitalik Buterin or Charles Hoskinson, and/or well-known popular science writers, science fiction writers/directors, science-savvy political leaders, etc. And maybe, for an adversarial perspective, some well-known AI X-risk skeptics such as Steven Pinker, Gary Marcus, etc.

Since these folks are mostly...

TL;DR: We might need to ping pong with you in order to change your mind. We don't know why you believe what you believe.

60% AGI by 2100 seems really low (as well as 15% `P(misalignment x-risk|AGI)`). I'd need to know why you believe it in order to change your mind.

Specifically, I'd be happy to hear where you disagree with AGI ruin scenarios are likely (and disjunctive) by So8res.

Adding: I'm worried that nobody will address FTX's reasons to believe what they believe, and FTX will conclude "well, we put out a $1.5M bounty and nobody found flaws, they only addressed straw arguments that we don't even believe in, this is pretty strong evidence we are correct!"

Please consider replying, FTX!

Do you believe that there is something already published that should have moved our subjective probabilities outside of the ranges noted in the post? If so, I'd love to know what it is! Please use this thread to collect potential examples, and include a link. Some info about why it should have done that (if not obvious) would also be welcome. (Only new posts are eligible for the prizes, though.)

I think considerations like those presented in Daniel Kokotajlo's Fun with +12 OOMs of Compute suggest that you should have ≥50% credence on AGI by 2043.

This is more of a meta-consideration around shared cultural background and norms. Could it just be a case of allowing yourselves to update toward more scary-sounding probabilities? You have all the information already. This video from Rob Miles ("There's No Rule That Says We'll Make It")[transcript copied from YouTube] made me think along these lines. Aside from background culture considerations around human exceptionalism (inspired by religion) and optimism favouring good endings (Hollywood; perhaps also history to date?), I think there is also an inherent conservatism borne by prestigious mega-philanthropy whereby a doom-laden outlook just doesn't fit in.

Optimism seems to tilt one in favour of conjunctive reasoning, and pessimism favours disjunctive reasoning. Are you factoring both in?

$100 to change my mind to FTX's views

If you change my mind to any of:

7%0% to 35%30%0% and 60%I'm not adding the "by 2043" section:

because it is too complicated for me to currently think about clearly so I don't think I'd be a good discussion partner, but I'd appreciate help there too

My current opinion

Is that we're almost certainly doomed (80%? more?), I can't really see a way out before 2100 except for something like civilizational collapse.

My epistemic status

I'm not sure, I'm not FTX.

Pitch: You will be doing a good thing if you change my mind

You will help me decide whether to work on AI Safety, and if I do, I'll have better models to do it with. If I don't, I'll go back to focusing on the other projects I'm up to. I'm a bit isolated (I live in Israel), and talking to people from the international community who can help me not get stuck in my current opinions could really help me.

Technicalities

- How to talk to me? I think the best would be to comment here so our discussion will be online and people can push back, but there are more contact methods in my profile. I don't officially

...

Two questions (although I very probably won't make a submission myself):

How likely do you think it is that anyone will win?

How many hours of work do you expect a winning submission to take? The reports you cite for informing your views seem like they were pretty substantial.

We are very unsure on both counts! There are some Manifold Markets on the first question, though!

I do think articles wouldn't necessarily need to be that long to be convincing to us, and this may be a consequence of Open Philanthropy's thoroughness. Part of our hope for these prizes is that we'll get a wider range of people weighing in on these debates (and I'd expect less length there).

I hope it is okay for me to hijack this thread to talk about something only mildly related. Mostly I was a bit surprised to see the google form link for infohazardous submissions. Please let me know if this comment is not appropriate here.

I understand that this link is only for infohazards being submitted as prizes to this contest, but I do feel (with medium confidence) EA needs better processes for handling infohazards in general. I'm assuming that if you had a better process you would likely also use it or mention it for this prize, and therefore that you do not have much better processes. Please let me know if this assumption is incorrect.

Some things I'm thinking of are:

- Cybersecurity - For stuff that shouldn't touch Google servers (or any servers or digital communication channels for that matter). There is an endless list of actors (starting with US intelligence) who have incentives to explicitly flag and archive AI risk and biorisk stuff in an automated fashion. Such actors could gain access to any of the digital syst...

Strongly endorsed this comment.

If we really take infohazards seriously, we shouldn't just be imagining EAs casually reading draft essays, sharing them, and the ideas gradually percolating out to potential bad actors.

Instead, we should take a fully adversarial, red-team mind-set, and ask, if a large, highly capable geopolitical power wanted to mine EA insights for potential applications of AI technology that could give them an advantage (even at some risk to humanity in general), how would we keep that from happening?

We would be naive to think that intelligence agencies of various major countries that are interested in AI don't have at least a few intelligence analysts reading EA Forum, LessWrong, & Alignment Forum, looking for tips that might be useful -- but that we might consider infohazards.

You wrote, "we think it's really possible that… a bunch of this AI stuff is basically right, but we should be focusing on entirely different aspects of the problem," and that you're interested in "alternative positions that would significantly alter the Future Fund's thinking about the future of AI." But then you laid out specifically what you want to see: data and arguments to change your probability estimates of the timeline for specific events.

This rules out any possibility of winning these contests by arguing that we should be focusing on entirely different aspects of the problem, or of presenting alternative positions that would significantly alter the Future Fund's thinking about the future of AI. It looks like the Future Fund has already settled on one way of thinking about the future of AI, and just wants help tweaking its Gantt chart.

I see AI safety as a monoculture, banging away for decades on methods that still seem hopeless, while dismissing all other approaches with a few paragraphs here and there. I don't know of any approaches being actively explored which I think clear the bar of having a higher expected value than doing nothing.

Part of the reason...

Question just to double-check: are posts no longer going to be evaluated for the AI Worldview Prize, given that the FTX Future Fund team has resigned (https://forum.effectivealtruism.org/posts/xafpj3on76uRDoBja/the-ftx-future-fund-team-has-resigned-1)?

Nick, very excited by this and to see what this prize produces. One thing I would find super useful is to know your probability of a bio x-risk by 2100. Thanks.

Looking forward to seeing the entries. Similar to others, I feel that P(misalignment x-risk|AGI) is high (at least 35%, and likely >75%), so think that a prize for convincing FF of that should be won. Similar for P(AGI will be developed by January 1, 2043) >45%. But then I'm also not sure what would be needed in addition to all the great pieces of writing on this already out there (some mentioned in OP).

I'm hoping that there will be good entries from Eliezer Yudkowsky (on P(misalignment x-risk|AGI) >75%; previous), Ajeya Cotra (on P(AGI will be developed by January 1, 2043) >45%; previous), Daniel Kokotajlo (on P(AGI will be developed by January 1, 2043) >75%?; previous) and possibly Holden Karnofsky (although I'm not sure his credences for these probabilities are much different to FF's current baseline; previous). Also Carlsmith says he's recently (May 2022) updated his probabilities from "~5%" to ">10%" for P(misalignment x-risk) by 2070. This is unconditional, i.e. including p(AGI) by 2070, and his estimate for P(AGI by 2070) is 65%, so that puts him at P(misalignment x-risk|AGI) >15%, so an entry from him (for P(misalignment x-risk|A...
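The conversion from the unconditional to the conditional figure can be checked with a short sketch. It assumes that misalignment x-risk by 2070 can only occur if AGI is developed by 2070, so the unconditional number is the joint probability; `conditional_from_joint` is a hypothetical helper name.

```python
def conditional_from_joint(p_joint, p_condition):
    """P(A | B) = P(A and B) / P(B)."""
    if not 0 < p_condition <= 1:
        raise ValueError("p_condition must be in (0, 1]")
    return p_joint / p_condition

# Figures quoted in the comment: P(misalignment x-risk by 2070) > 10%,
# P(AGI by 2070) = 65%.
lower_bound = conditional_from_joint(0.10, 0.65)

print(f"P(misalignment x-risk | AGI) > {lower_bound:.1%}")
```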

Is this the largest monetary prize in the world for a piece of writing? Is it also the largest in history?

You should fly anyone who wins over 5k to meet with you in person. They have 1 hour to shift your credences by the same amount they already did (in Bayesian terms, not % difference[1]). If they do, you'll give them the amount of money you already did.

I imagine some people arguing in person will be able to convince you better, both because there will be much greater bandwidth and because it allows for facial expressions and understanding the emotions behind an intellectual position, which are really important.

- ^ If you move someone from 90% to 99%, the e...
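One common way to measure a shift "in Bayesian terms" is the change in log-odds, expressed in bits, which also connects to the "$X per bit" idea raised earlier in the thread. The footnote is truncated, so this is only a sketch of that general idea and not necessarily the author's intended measure; the function names are hypothetical.

```python
import math

def log_odds_bits(p):
    """Log-odds of probability p, in bits."""
    return math.log2(p / (1 - p))

def shift_in_bits(p_before, p_after):
    """Evidence, in bits, needed to move from p_before to p_after."""
    return log_odds_bits(p_after) - log_odds_bits(p_before)

# Both moves are 9 percentage points, but they differ a lot in bits.
near_certainty = shift_in_bits(0.90, 0.99)
mid_range = shift_in_bits(0.50, 0.59)

print(f"90% -> 99%: {near_certainty:.2f} bits")
print(f"50% -> 59%: {mid_range:.2f} bits")
```

Moving from 90% to 99% multiplies the odds by 11, so it takes far more evidence than a same-sized percentage-point move near 50%.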

Are timelines-probabilities in this post conditional on no major endogenous slowdowns (due to major policy interventions on AI, major conflict due to AI, pivotal acts, safety-based disinclination, etc.)?

No, they are unconditional.

This is a very exciting development!

In your third footnote, you write:

However, an analysis that reassures you that your current estimates are correct can make your beliefs more resilient, and in tu...

I'm toying with a project to gather reference classes for AGI-induced extinction and AGI takeover. If someone would like to collaborate, please get in touch.

(I'm aware of and giving thought to reference class tennis concerns but still think something like this is neglected.)

Minor nitpick: You describe your subjective probabilities in terms of fair betting odds, but aren't betting odds misleading/confusing, since if AGI kills everyone, there's no payout? Even loans that are forgiven or paid back depending on the outcome could be confusing, because the value of money could drastically change, although you could try to adjust for that like inflation. I'm not sure such an adjustment would be accurate, though.

Maybe you could talk about betting odds as if you're an observer outside this world or otherwise assume away (causal and acausal) influence other than through the payout. Or just don't use betting odds.

Yes, the intention is roughly something like this.

I’m thinking of writing something for this. Most of the arguments I have in mind address the headline problem only partially. Do you mind if I make a series of, say, 5 posts as a single submission?

Worth noting is that money like this is absolutely capable of shifting people's beliefs through motivated reasoning. Specifically, I might be tempted to argue for a probability outside the Future Fund's threshold, and for research I do to be motivated in favor of updating in this direction. Thus, my strategy would be to figure out your beliefs before looking at the contest, then look at the contest to see if you disagree with the Future Fund.

The questions are:

“P(misalignment x-risk|AGI)”: Conditional on AGI being developed by 2070, humanity will go extinct...

I'm guessing this definition is meant to separate misalignment from misuse, but I'm curious whether you are including either/both of these 2 cases as misalignment x-risk:

- AGI is deployed and we get locked into a great outcome by today's standards, but we get a world with <=1% of the value of "humanity's potential". So we sort of have an existential catastrophe, without a discrete cata

...

The "go extinct" condition is a bit fuzzy. It seems like it would be better to express what you want to change your mind about as something more like (forget the term for this) P(go extinct | AGI) / P(go extinct).

I know you've written the question in terms of going extinct because of AGI, but I worry this leads to ways of shifting that value upward that are relatively trivial or uninformative about AI.

For instance, consider a line of argument:

- AGI is quite likely (probably by your own lights) to be developed by 2070.

- If AGI is developed either it will suffer from serious ...

Would you be able to say a little more about why part of your criteria seems to be degree of probability shift ("We will award larger prizes for larger changes to these probabilities, as follows...")? It seems to me that you might get analyses that offer larger changes but are less robust than some analyses that suggest smaller changes. I didn't understand how much of your formal evaluation will look at plausibility, argumentation, and soundness.

(asking as a curiosity not as a critique)

This was gonna be a comment, but it turned into a post about whether large AI forecasting prizes could be suboptimal.

...

I think there are other AI-related problems that are comparable in seriousness to these two, which you may be neglecting (since you don't mention them here). These posts describe a few of them, and this post tried to comprehensively list my worries about AI x-risk.

Interesting idea for a competition, but I don't think that the contest rules as designed and, more specifically, the information hazard policy, are well thought out for any submissions that follow the below line of argumentation when attempting to make the case for longer timelines:

- Scaling current deep learning approaches in both compute and data will not be sufficient to achieve AGI, at least within the timeline specified by the competition

- This is due to some critical component missing in the design of current deep neural networks

- Supposing that this criti

...

Question about how judges would handle multiple versions of essays for this competition. (I think this contest is a great idea; I'm just trying to anticipate some practical issues that might arise.)

EA Forum has an ethos of people offering ideas, getting feedback and criticism, and updating their ideas iteratively. For purposes of this contest, how would the judges treat essays that are developed in multiple versions?

For example, suppose a researcher posts version 1.0 of an essay on EA Forum with the "Future Fund worldview prize" tag. They get a bunch ...

Hi!

I don't think I will participate in this contest, because:

However, after reading about this prize, I have several questions that came up for me as I read it. I thought I would offer them as a good-faith effort to clarify your goals here.

- There are significant risks to human well-being, aside from human extinction, that are plausible in

...

I am unsure what you mean by AGI. You say:

and:

If someone uses AI capabilities to create a synthetic virus (wh...

Are essays submitted before December 23rd at an advantage over essays submitted on December 23rd?

Is it permitted to submit more than one entry if the entries are on different topics?

(Apologies if this has been answered somewhere already.)

Question: In this formulation, what is meant by the "current position"? Just asking to be sure.

It could refer to the specific credences outlined above, but it would seem somewhat strange to say (e.g.) "here is what we regard as the canonical critique of 'AGI will be developed by January 1, 2043 =/= 20%'". So I am inclined to believe that it probably means something else.

I would love to know, since I...

How do we tag the post?

The instructions say to tag the post with "Future Fund worldview prize", but it does not seem possible to do this. Only existing tags can be used for tagging as far as I can tell, and this tag is not in the list of options.

Could you provide a deeper idea of what you mean by "misaligned"?

How do we submit our essay for the contest? Is there an email we send it to or something?

I think it would be nicer if you say your P(Doom|AGI in 2070) instead of P(Doom|AGI by 2070), because the second one implicitly takes into account your timelines. Also, it would be nicer to have the same years: P(Doom | AGI in 2043) and P(Doom | AGI in 2100)