Disclaimer: this is not a project from Alliance to Feed the Earth in Disasters (ALLFED).

Summary

- Global warming increases the risk from climate change. This “has the potential to result in—and to some extent is already resulting in—increased natural disasters, increased water and food insecurity, and widespread species extinction and habitat loss”.

- However, I think global warming also decreases the risk from food shocks caused by abrupt sunlight reduction scenarios (ASRSs), which can be a nuclear winter, volcanic winter, or impact winter[1]. In essence, this is because low temperature is a major driver of the decrease in crop yields that can lead to widespread starvation (see Xia 2022, and this post from Luisa Rodriguez).

- Factoring in both of the above, my best guess is that additional emissions of greenhouse gases (GHGs) are beneficial up to an optimal median global warming in 2100 relative to 1880 of 3.3 ºC, after which the increase in the risk from climate change outweighs the reduction in that from ASRSs. This suggests delaying decarbonisation is good at the margin if one trusts (on top of my assumptions!):

- Metaculus’ community median prediction of 2.41 ºC.

- Climate Action Tracker’s projections of 2.6 to 2.9 ºC for current policies and action.

- Nevertheless, I am not confident the above conclusion is resilient. My sensitivity analysis indicates the optimal median global warming can range from 0.1 to 4.3 ºC. So the takeaway for me is that we do not really know whether additional GHG emissions are good/bad.

- In any case, it looks like the effect of global warming on the risk from ASRSs is a crucial consideration, and therefore it must be investigated, especially because it is very neglected. Another potentially crucial consideration is that an energy system which relies more on renewables, and less on fossil fuels, is less resilient to ASRSs.

- Robustly good actions would be:

- Improving civilisation resilience.

- Prioritising the risk from nuclear war over that from climate change (at the margin).

- Keeping options open by:

- Not massively decreasing/increasing GHG emissions.

- Researching cost-effective ways to decrease/increase GHG emissions.

- Learning more about the risks posed by ASRSs and climate change.

Introduction

In the sense that matters most for effective altruism, climate change refers to large-scale shifts in weather patterns that result from emissions of greenhouse gases such as carbon dioxide and methane largely from fossil fuel consumption. Climate change has the potential to result in—and to some extent is already resulting in—increased natural disasters, increased water and food insecurity, and widespread species extinction and habitat loss.

In What We Owe to the Future (WWOF), William MacAskill argues “decarbonisation [decreasing GHG emissions] is a proof of concept for longtermism”, describing it as a “win-win-win-win-win”. In addition to (supposedly) improving the longterm future:

- “Moving to clean energy has enormous benefits in terms of present-day human health. Burning fossil fuels pollutes the air with small particles that cause lung cancer, heart disease, and respiratory infections”.

- “By making energy cheaper [in the long run], clean energy innovation improves living standards in poorer countries”.

- “By helping keep fossil fuels in the ground, it guards against the risk of unrecovered collapse”.

- “By furthering technological progress, it reduces the risk of longterm stagnation”.

I agree decarbonisation will eventually be beneficial, but I am not sure decreasing GHG emissions is good at the margin now. As I said in my hot takes on counterproductive altruism:

- Mitigating global warming decreases the chances of crossing a tipping point which leads to a moist or runaway greenhouse effect [i.e. severe climate change], but increases the severity of ASRSs [which can be a nuclear winter, volcanic winter, or impact winter, and lead to widespread starvation; see Xia 2022, and this post from Luisa Rodriguez].

- The major driver for the decrease in [crop] yields during an ASRS is the lower temperature, so starting from a higher baseline temperature would be helpful.

- One might argue the severity of ASRSs is only a function of the temperature reduction, not of the final temperature, on the basis that yields are roughly directly proportional to temperature in ºC. However, this is not the case.

- The typical base temperature of cool-season plants is 5 ºC. So, based on the heuristic of growing degree-days, a reduction from 10 ºC to 5 ºC leads to a 100 % reduction in yields, not 50 % as suggested by a direct proportionality between temperature in ºC and yields.
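The growing degree-days argument above can be checked with a minimal sketch. It assumes, as the heuristic does, that yields scale with the temperature excess over the base temperature; the function names `growing_degree_days` and `yield_reduction` are hypothetical and only serve the illustration.

```python
def growing_degree_days(mean_temp_c, base_temp_c=5.0, days=1):
    """Degree-days accumulated over `days` at a constant mean temperature."""
    return max(0.0, mean_temp_c - base_temp_c) * days


def yield_reduction(temp_before_c, temp_after_c, base_temp_c=5.0):
    """Fractional yield loss, assuming yields are proportional to degree-days."""
    before = growing_degree_days(temp_before_c, base_temp_c)
    after = growing_degree_days(temp_after_c, base_temp_c)
    if before == 0.0:
        raise ValueError("no growth at the starting temperature")
    return 1.0 - after / before


# Cooling from 10 ºC to 5 ºC with a base temperature of 5 ºC.
gdd_reduction = yield_reduction(10.0, 5.0)
# Naive direct proportionality between temperature in ºC and yields.
proportional_reduction = 1.0 - 5.0 / 10.0
print(gdd_reduction, proportional_reduction)
```

Under the same heuristic, the same 5 ºC cooling starting from 15 ºC would only halve the degree-days, which is why the baseline temperature matters.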

In this analysis, I estimated the optimal global warming to decrease the reduction in the value of the future due to both climate change and the food shocks caused by ASRSs. Note such warming may well not be optimal from the point of view of maximising gross world product (GWP) in the nearterm (e.g. in 2100).

Methods

I calculated the reduction in the value of the future as a function of the median global warming in 2100 relative to 1880 from the sum between:

- The reduction in the value of the future due to the food shocks caused by ASRSs between 2024 and 2100.

- The reduction in the value of the future due to climate change.

The data and calculations are in this Sheet (see tab “TOC”) and this Colab[2]. You can change the variables scale_ASRS and scale_climate_change to scale the reduction in the value of the future due to ASRSs, and climate change for 2.41 ºC by a constant factor. For instance, setting those variables to 2 would make:

- ASRSs 2 times as bad for any median global warming.

- Climate change 2 times as bad for a median global warming of 2.41 ºC.

I modelled all variables as independent distributions, and ran a Monte Carlo simulation with 100 k samples per variable to get the results. Owing to the independence assumption, my model implicitly considers climate change does not impact the risk from ASRSs via increased risk from nuclear war. I believe this is about right, in agreement with Chapter 12 of John Halstead’s report on climate change and longtermism:

- Most of the indirect risk from climate change flows through unaligned artificial intelligence, engineered pandemics, and unforeseen anthropogenic risks, whose existential risk between 2021 and 2120 is guessed by Toby Ord in The Precipice to be 100, 33.3, and 33.3 times that of nuclear war. Nevertheless, there is significant uncertainty around these estimates[3].

- Conflicts between India and China/Pakistan are the major driver for the risk from climate change, but these 3 countries only hold 7.15 % (= (160 + 350 + 165)/9,440) of the global nuclear warheads according to these data from Our World in Data (OWID).

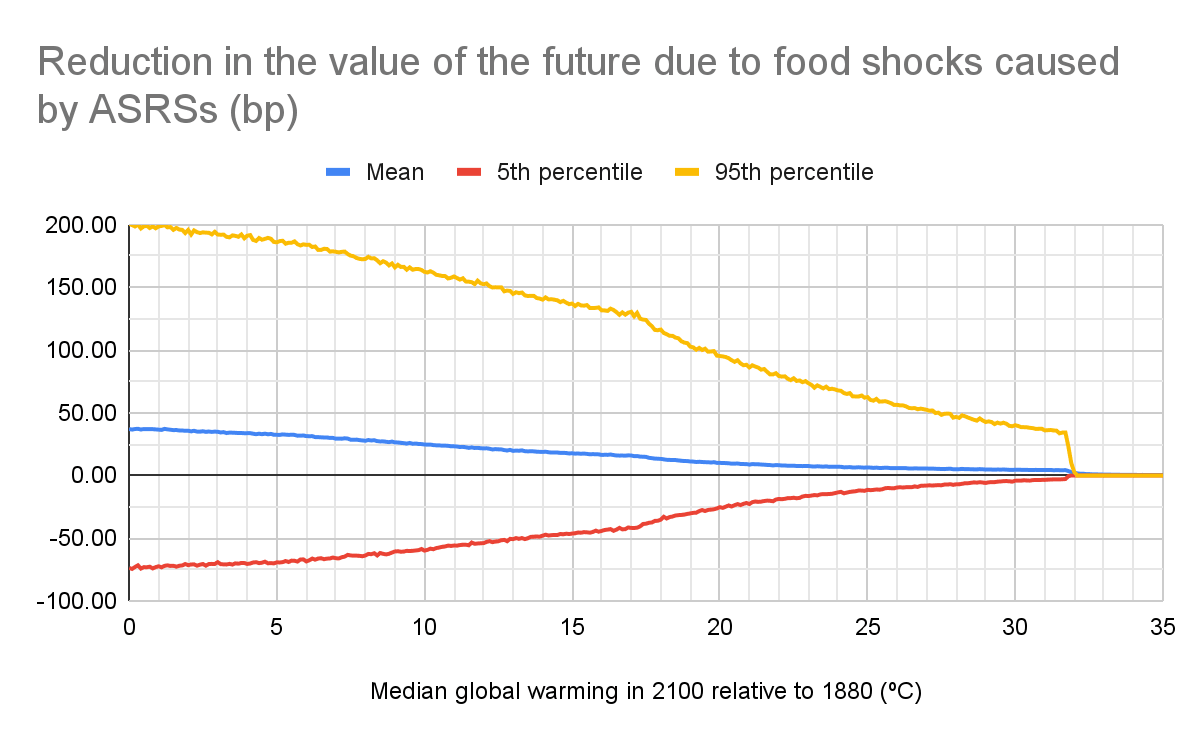

Abrupt sunlight reduction scenarios

I computed the reduction in the value of the future due to the food shocks caused by ASRSs between 2024 and 2100:

- Using this baseline model, for which:

- The mean reduction is 37.2 basis points (bp) (5th to 95th percentile, -73.1 to 199). This is 4.83 (-9.50 to 25.9) times that of Toby given in Table 6.1 of The Precipice for the existential risk from nuclear war[4].

- The actual global warming relative to 1880 is 1 ºC (= 0.32 + 0.68) according to these data from OWID. This refers to the year of 2010 studied in Xia 2022, which I used to model the calorie production without adaptation in the worst year of the ASRSs (see details here).

- Inputting into the baseline model a soot ejected into the stratosphere equal to the maximum between 0 and the difference between the soot ejection in the baseline model and its effective reduction due to global warming beyond that of the baseline model[5]. I estimated this reduction from the product between:

- The soot ejection required to neutralise the median global warming in 2100 relative to 1880 beyond that of the baseline model, linearly interpolating between (see table below):

- 0 Tg[6] for 0 ºC.

- 5, 16, 27, 37, 47 and 150 Tg for the cropland 2 m air temperature reduction given in Figure 1a of Xia 2022.

- 15,000 Tg for the continental temperature reduction of 28 ºC mentioned in Bardeen 2017 for the impact winter of the Cretaceous–Paleogene extinction event (which extinguished the dinosaurs).

- The effective reduction in the soot ejection as a fraction of the above, which I defined as a uniform distribution ranging from 0 to 1. Consequently, for example, for a median global warming beyond that of the baseline model of 2.36 ºC, and an ASRS of 10 Tg:

- The effective reduction in the soot ejection due to global warming would be a uniform distribution ranging from 0 to 5 Tg, which is the soot ejection that results in a maximum temperature reduction of 2.36 ºC. At one extreme, the effective reduction in the soot ejection is null, which means the median global warming has no effect, and therefore the maximum temperature reduction caused by the ASRS is all that matters. At the other extreme, the coldest temperature of the ASRS is all that matters.

- The soot ejection to be inputted into the baseline model would be 10 Tg minus the uniform distribution of 0 to 5 Tg regarding the effective soot reduction. This equals a uniform distribution ranging from 5 to 10 Tg.

My methodology relies on the results of the climate and crop models of Xia 2022 for a single level of global warming (1 ºC), and then adjusts them via an effective soot reduction. Ideally, one should run the climate and crop models for each level of global warming, since the climate response caused by ASRSs depends on the pre-catastrophe global mean temperature. As an example of why this might be relevant, I do not know whether there is a good symmetry between the regional effects of global cooling and warming.

| Soot ejected into the stratosphere (Tg) | Maximum temperature reduction (ºC) |
|---|---|
| 0 | 0 |
| 5 | 2.36 |
| 16 | 4.60 |
| 27 | 6.46 |
| 37 | 8.35 |
| 47 | 8.82 |
| 150 | 15.8 |
| 15,000 | 28 |
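The effective soot reduction described in this section can be sketched as follows. This is a simplified illustration rather than the code in the Colab: it linearly interpolates the table above, samples the effective reduction as a uniform fraction of the neutralising soot ejection, and reproduces the 10 Tg example. The function names and the choice to clamp the interpolation outside the table are my assumptions.

```python
import random
from bisect import bisect_right

# Table above: soot ejected (Tg) and maximum temperature reduction (ºC).
SOOT_TG = [0.0, 5.0, 16.0, 27.0, 37.0, 47.0, 150.0, 15000.0]
MAX_COOLING_C = [0.0, 2.36, 4.60, 6.46, 8.35, 8.82, 15.8, 28.0]


def soot_to_neutralise(extra_warming_c):
    """Soot ejection (Tg) whose maximum cooling equals the extra warming."""
    if extra_warming_c <= MAX_COOLING_C[0]:
        return SOOT_TG[0]
    if extra_warming_c >= MAX_COOLING_C[-1]:
        return SOOT_TG[-1]  # assumption: clamp beyond the last table row
    i = bisect_right(MAX_COOLING_C, extra_warming_c)
    x0, x1 = MAX_COOLING_C[i - 1], MAX_COOLING_C[i]
    y0, y1 = SOOT_TG[i - 1], SOOT_TG[i]
    return y0 + (y1 - y0) * (extra_warming_c - x0) / (x1 - x0)


def effective_soot_samples(soot_tg, extra_warming_c, n_samples, seed=0):
    """Soot to input into the baseline model, never below 0 Tg."""
    rng = random.Random(seed)
    neutralising = soot_to_neutralise(extra_warming_c)
    # The effective reduction is a uniform fraction (0 to 1) of `neutralising`.
    return [max(0.0, soot_tg - rng.random() * neutralising)
            for _ in range(n_samples)]


# Example from the text: 2.36 ºC of extra warming and an ASRS of 10 Tg.
samples = effective_soot_samples(10.0, 2.36, 100_000)
print(min(samples), max(samples), sum(samples) / len(samples))
```

The samples fall between 5 and 10 Tg, matching the uniform distribution described in the example.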

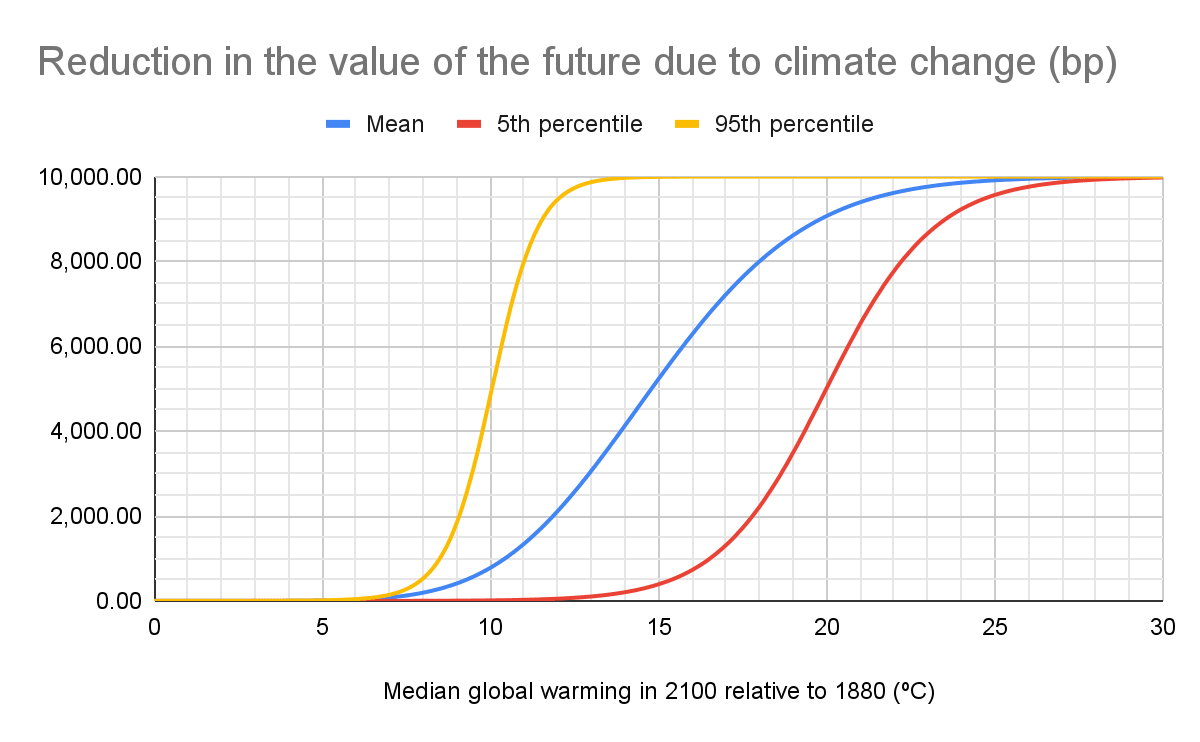
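As a cross-check of the comparison with Toby's estimate in the baseline model, the conversion to the period of 2024 to 2100 under constant annual risk can be sketched as below. It assumes the figure in Table 6.1 of The Precipice is an existential risk from nuclear war of 1 in 1,000 over 100 years, and that the period spans 77 years (both endpoints included); these inputs are my reading, not taken from the Sheet or Colab.

```python
def convert_risk(risk, original_years, new_years):
    """Rescale a cumulative risk to another horizon, assuming constant annual risk."""
    annual_survival = (1.0 - risk) ** (1.0 / original_years)
    return 1.0 - annual_survival ** new_years


BP = 1e-4  # 1 basis point

toby_nuclear_100_years = 1e-3   # assumed value from Table 6.1 of The Precipice
years = 2100 - 2024 + 1         # assumption: both endpoints included
toby_bp = convert_risk(toby_nuclear_100_years, 100, years) / BP

mean_asrs_bp = 37.2             # mean reduction from the baseline model
ratio = mean_asrs_bp / toby_bp
print(round(toby_bp, 2), round(ratio, 2))
```

With these assumptions, the ratio agrees with the 4.83 quoted for the mean.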

Climate change

I obtained the reduction in the value of the future due to climate change from a logistic function (S-curve), which:

- Tends to 1 as the median global warming in 2100 relative to 1880 increases to infinity.

- Equals 0.5 for a median global warming in 2100 relative to 1880 represented by a normal distribution with 5th and 95th percentiles equal to 10 and 20 ºC.

- I would have ideally used a point in the right tail, for which the reduction is higher than the 0.5 of the inflection point, to achieve a better fit (see Sandberg 2021). However, that would make the calculation of the parameters of the logistic function way more computationally costly[7].

- For context, the global mean temperature was about 15 ºC (the mean of the above) higher than the current one 90 and 250 million years ago (see Figures 19 to 21 of Scotese 2021).

- What worries me the most is that, according to Schneider 2019 (FAQ here), “stratocumulus decks become unstable and break up into scattered clouds when CO2 levels rise above 1,200 ppm”. “This instability triggers a surface warming of about 8 K [warming of 8 ºC] globally and 10 K in the subtropics”.

- Has a logistic growth rate defined based on the above, and setting the reduction in the value of the future due to climate change for a median global warming in 2100 relative to 1880 of 2.41 ºC to a lognormal distribution with:

- Mean of 0.368 bp, which I got aggregating 3 forecasts with the geometric mean of odds (as recommended by default by Jaime Sevilla):

- The order of magnitude of John’s best guess for the indirect risk of existential catastrophe due to climate change of 0.1 bp[8] (search for “the indirect risk of” here), which is also John’s upper bound for the “direct extinction risk”.

- 10 % of 80,000 Hours’ upper bound for the contribution of climate change to other existential risks, which is “something like 1 in 10,000 [1 bp]”. The best guess being 10 % of the upper bound feels reasonable, and results in an estimate of 0.1 bp, as estimated by John.

- Probability of climate change being a cause of human extinction by 2300 according to Good Judgment Inc’s climate superforecasting, which is “0.05%” considering the median prediction between 0 and 1 %. I assumed the 5 forecasts (out of 26) above 1 % to be poorly calibrated outliers (which Jaime recommends excluding).

- 95th percentile 100 times as high as the 5th percentile, since 2 orders of magnitude between an optimistic and pessimistic estimate feels reasonable.

Note the reduction in the value of the future due to climate change for an actual global warming of 2.41 ºC would be much lower than the aforementioned 0.368 bp, but a similar median warming allows for higher levels of actual warming, which are the driver for the overall risk. I used 2.41 ºC as the reference median warming in line with this Metaculus’ community prediction (on 11 April 2023).
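The aggregation of the 3 forecasts, and the lognormal distribution built from the result, can be reproduced with a short sketch. It assumes the 3 inputs are 0.1 bp, 0.1 bp and 0.05 %, as listed above, and derives the lognormal parameters from the mean and the ratio of 100 between the 95th and 5th percentiles. The function names are hypothetical.

```python
import math
from statistics import NormalDist

BP = 1e-4  # 1 basis point


def geometric_mean_of_odds(probabilities):
    """Pool probabilities by taking the geometric mean of their odds."""
    log_odds = [math.log(p / (1.0 - p)) for p in probabilities]
    pooled_odds = math.exp(sum(log_odds) / len(log_odds))
    return pooled_odds / (1.0 + pooled_odds)


def lognormal_parameters(mean, ratio_p95_to_p5):
    """mu and sigma of a lognormal given its mean and its 95th/5th percentile ratio."""
    z95 = NormalDist().inv_cdf(0.95)
    sigma = math.log(ratio_p95_to_p5) / (2.0 * z95)
    mu = math.log(mean) - sigma ** 2 / 2.0
    return mu, sigma


# John's best guess, 10 % of 80,000 Hours' upper bound, and the superforecasters' 0.05 %.
forecasts = [0.1 * BP, 0.1 * 1.0 * BP, 0.05e-2]
pooled = geometric_mean_of_odds(forecasts)
mu, sigma = lognormal_parameters(pooled, 100.0)
print(round(pooled / BP, 3), round(sigma, 2))
```

The pooled value is about 0.368 bp, in line with the mean quoted above.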

There is significant uncertainty about the shape of the damage from climate change, but there is consensus that it increases more than linearly with warming[9] before ceiling effects, and therefore relying on an S-curve seems appropriate. However, since my logistic function is always positive:

- There will still be a reduction in the value of the future for a median global warming in 2100 relative to 1880 of zero, which might seem strange. However, the actual warming can still be high even if there is a 50 % chance that it will be negative/positive.

- It implies cooling the Earth to absolute zero only leads to marginal reduction in the value of the future, which is arguably not the case!
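The logistic function described in this section can be sketched with point values. This is a simplification, since the analysis samples distributions rather than fixed numbers: the sketch assumes the inflection point is at the mean of 15 ºC, and that the reduction for the reference median warming of 2.41 ºC is the mean of 0.368 bp. The growth rate follows from ln(1/"reference reduction" - 1)/(T0 - "reference median global warming"); the function names are hypothetical.

```python
import math


def logistic_growth_rate(reference_reduction, reference_warming_c, t0_c):
    """Growth rate making the curve pass through the reference point."""
    return math.log(1.0 / reference_reduction - 1.0) / (t0_c - reference_warming_c)


def reduction_from_climate_change(warming_c, growth_rate, t0_c):
    """Logistic reduction in the value of the future, between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-growth_rate * (warming_c - t0_c)))


T0_C = 15.0                       # assumption: mean of the normal distribution
REFERENCE_WARMING_C = 2.41        # reference median global warming
REFERENCE_REDUCTION = 0.368e-4    # 0.368 bp, used here as a point value

k = logistic_growth_rate(REFERENCE_REDUCTION, REFERENCE_WARMING_C, T0_C)
for warming in (0.0, 2.41, 3.3, 15.0):
    print(warming, reduction_from_climate_change(warming, k, T0_C))
```

The output for a warming of 0 ºC is small but positive, which illustrates the two limitations listed above.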

One factor which makes my logistic function overestimate the risk from climate change is the assumption that it is impossible for climate change to be beneficial. In reality, I think it can be, at least for low levels of median global warming, maybe because of carbon dioxide fertilisation (which I have ignored). Note:

- There is a 1/3 chance of the food shocks caused by ASRSs being beneficial in my baseline model, which assumes a median global warming of 1 ºC.

- The lower estimate of Bressler 2021 for the 2020 mortality cost of carbon is -75.7 % (= -1.71/2.26) of the mean.

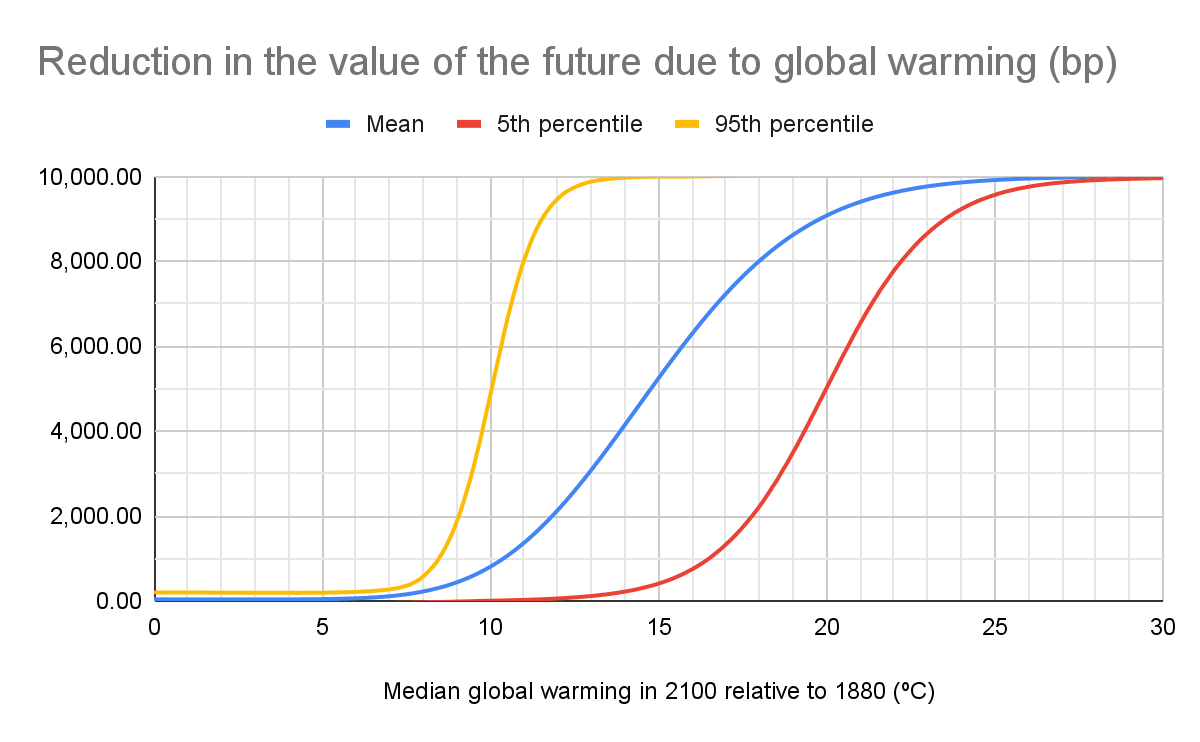

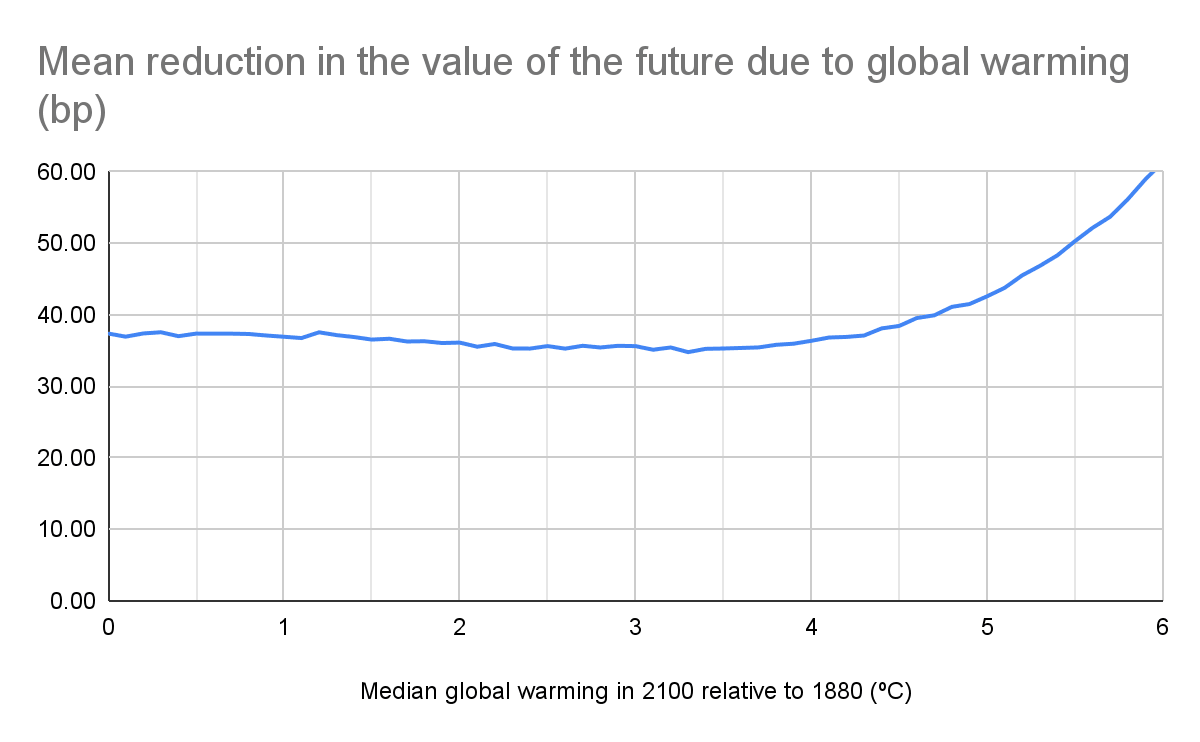

Results

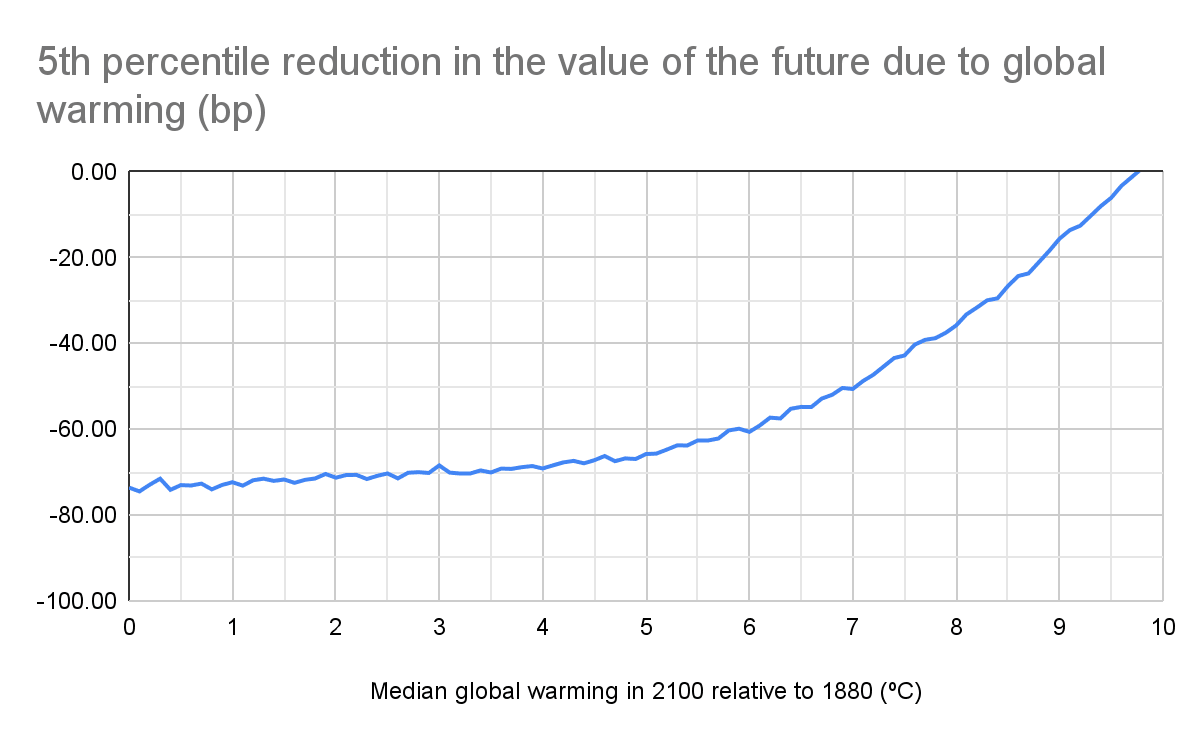

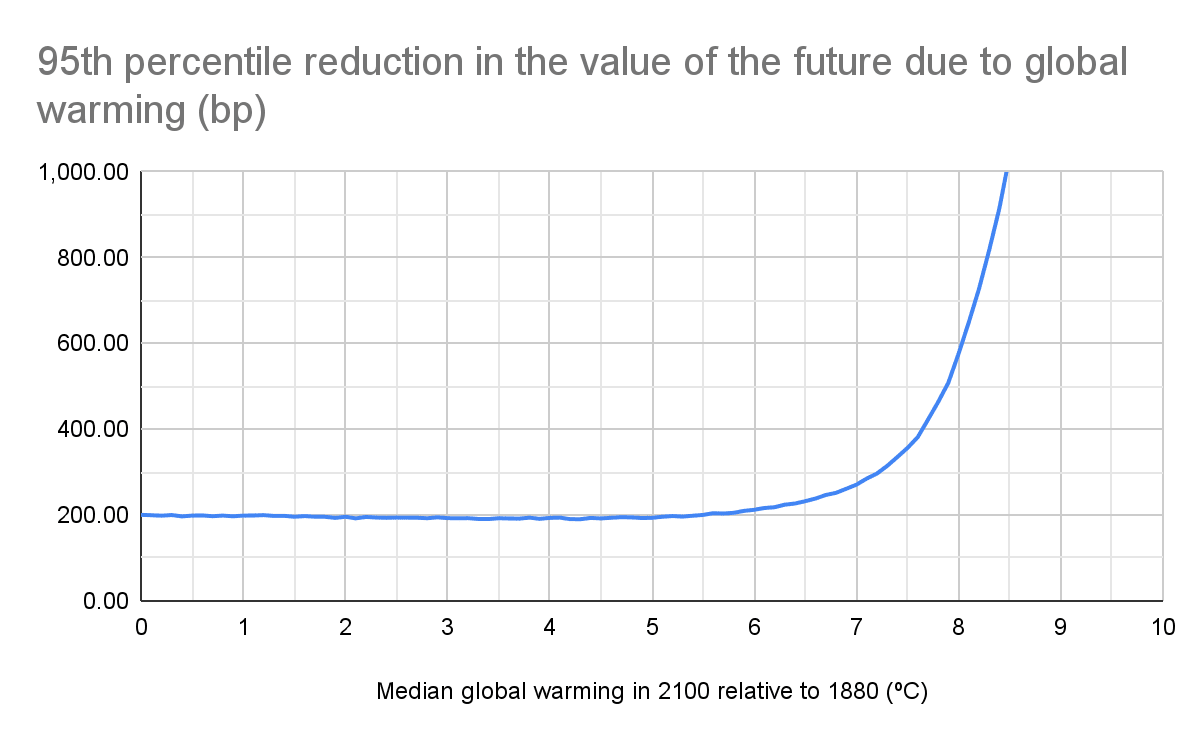

The key results are in the table below, and illustrated in the following figures. After these, I also present a short sensitivity analysis. The results plotted in the figures are in this Sheet (see tab “TOC”).

Key results

In this table, median global warming refers to the one in 2100 relative to 1880.

| Metric | Mean | 5th percentile | 95th percentile |
|---|---|---|---|
| Optimal median global warming (ºC) | 3.3 | 0.1 | 4.3 |
| Reduction in the value of the future for the optimal median global warming (bp) | 34.8 | -74.1 | 191 |

Climate change

Note the above curves refer to a reduction in the longterm future potential, not in the GWP.

Abrupt sunlight reduction scenarios

The sharp variation starting at 31.8 ºC of median global warming in 2100 results from the soot ejected into the stratosphere as a function of the maximum temperature reduction increasing much faster after 150 Tg (which causes a maximum temperature reduction of 15.8 ºC; see last table here). This leads to a sharp increase in the effective reduction in the soot ejection, and therefore the reduction in the value of the future due to food shocks caused by ASRSs quickly approaches 0.

Global warming

The figures below refer to the reduction in the value of the future due to both food shocks caused by ASRSs between 2024 and 2100, and climate change.

Sensitivity analysis

| Change | Mean optimal median global warming in 2100 relative to 1880 (ºC) | 5th percentile (ºC) | 95th percentile (ºC) |
|---|---|---|---|
| None | 3.3 | 0.1 | 4.3 |
| Risk from ASRSs 10 % as high | 2.3 | 0.1 | 2.1 |
| Risk from ASRSs 10 times as high | 4.3 | 0.1 | 5.3 |
| Risk from climate change 10 % as high for 2.41 ºC | 3.3 | 0.1 | 4.3 |
| Risk from climate change 10 times as high for 2.41 ºC | 0.1 | 0.1 | 2.1 |

Discussion

Optimal median global warming and crucial considerations

My best guess is that additional GHG emissions are beneficial up to an optimal median global warming in 2100 relative to 1880 of 3.3 ºC, after which the increase in the risk from climate change outweighs the reduction in that from ASRSs. This suggests delaying decarbonisation is good at the margin if one trusts (on top of my assumptions!):

- Metaculus’ community median prediction of 2.41 ºC.

- Climate Action Tracker’s projections of 2.6 to 2.9 ºC for current policies and action.

Nevertheless, I am not confident the above conclusion is resilient. My sensitivity analysis indicates the optimal median global warming can range from 0.1 to 4.3 ºC, after which the reduction in the value of the future due to climate change starts to be material. The higher bound for the expected optimal median global warming would be lower/higher if the risk from climate change increased faster/slower than the exponential implied by my logistic function (for low levels of median global warming). The takeaway for me is that we do not really know whether additional GHG emissions are good/bad.

Note the cost-effectiveness of decreasing GHG emissions would be null for the optimal median global warming (by definition). The higher the cost-effectiveness bar, the more the median global warming would have to rise above the optimal value for the reduction in GHG emissions to be sufficiently effective.

In any case, it looks like the effect of global warming on the risk from ASRSs is a crucial consideration, and therefore it must be investigated, especially because it is very neglected. It is not mentioned in Kemp 2022, Founders Pledge’s report on climate philanthropy, nor John’s book-length report on climate change and longtermism. I am not sure whether the crucial consideration falls outside of the scope of these pieces, but I believe it should be addressed somewhere.

Another potentially crucial consideration is that an energy system which relies more on renewables, and less on fossil fuels, is less resilient to ASRSs.

- According to Metaculus’ median community predictions, the share of the world’s primary energy coming from fossil fuels will be 33.7 % and 8.89 % in 2052 and 2122, which are much lower than the 82.3 % of 2021.

- This matters because solar radiation and precipitation would decrease during ASRSs, as plotted in Figure 1 of Xia 2022, which means there would be less solar energy, and probably less hydropower.

- Additionally, the global wind patterns might change such that there is less wind overall, or the windy regions move, or become too cold for wind turbines to operate.

- Geothermal and nuclear energy would not be impacted, so I like that these tend to be more supported than renewables (at the margin) by Founders Pledge, but I do not know whether the benefits are enough to outweigh the unclear consequences of mitigating global warming.

Implications

In Chapter 10 of WWOF, William suggests 3 rules of thumb for acting under uncertainty:

- “Take actions that we can be comparatively confident are good”.

- “Try to increase the number of options open to us”.

- “Try to learn more”.

I believe decreasing GHG emissions would be robustly good if the median global warming in 2100 relative to 1880 were much higher than 3.3 ºC (my best guess for the optimal value), but this is far from true. My analysis is nowhere near definitive, and the fact it ignores many factors arguably implies I am underestimating the uncertainty of the matter, in which case the plausible range for the optimal median global warming should be even wider. One can reject this conclusion, and argue that decarbonising faster is good by postulating a strong prior that the optimal median global warming is lower than around 2.4 ºC, but would that really be reasonable? I do not think so, because reality just seems too complex for one to be that confident.

Robustly good actions would be:

- Improving civilisation resilience, which I am comparatively confident is good[10]. For example, via research and development of resilient foods. Solar geoengineering may be a way of getting the best of both worlds too, although it requires careful implementation.

- It can:

- Selectively cool down the regions adversely affected by climate change induced by global warming.

- Quickly be interrupted in the event of an ASRS to counter the decrease in temperature caused by it.

- However:

- If it is stopped because of another global catastrophe like a pandemic, temperature would rapidly increase, thus causing a double catastrophe as discussed in Baum 2013. This “demonstrates the value of integrative, systems-based global catastrophic risk analysis”.

- Schneider 2020 argues it “is not a fail-safe option to prevent global warming because it does not mitigate risks to the climate system that arise from direct effects of greenhouse gases on cloud cover”.

- Further discussion is in Tang 2021, and this episode of the 80,000 Hours podcast with Kelly Wanser, who “founded SilverLining — a nonprofit organization that advocates research into climate interventions, such as seeding or brightening clouds, to ensure that we maintain a safe climate”.

- Not the relevant comparison, but careful solar geoengineering would be better to counter climate change than intentionally causing a nuclear winter!

- Prioritising the risk from nuclear war, which is the major driver for the risk from ASRSs, over that from climate change (at the margin). For instance, lobbying for arsenal limitation looks like a really cost-effective intervention (pragmatic limits are discussed in Pearce 2018).

- Keeping options open by:

- Not massively decreasing/increasing GHG emissions. This does not actually require any action, as the current best guesses for the median global warming in 2100 relative to 1880 fall well within the plausible range for the optimal value.

- Researching cost-effective ways to decrease/increase GHG emissions. I think Founders Pledge’s report on climate philanthropy can be useful for both options. It was written with the goal of decreasing the risk from climate change in mind, but preventing cost-effective reductions of GHG emissions would be a way of cost-effectively increasing them.

- Learning more about the risks posed by ASRSs and climate change. For example, to better quantify the reduction in the value of the future due to:

- The food shocks caused by ASRSs, study the climate and agricultural response to soot ejections into the stratosphere as a function of the initial mean global temperature, using climate and crop models.

- Climate change, explicitly model how a higher mean global temperature would increase the risk from unaligned artificial intelligence, engineered pandemics, and unforeseen anthropogenic risks.

Acknowledgements

Thanks to David Denkenberger, Johannes Ackva, John Halstead, and Alexey Turchin for feedback on the draft[11].

[1] Technically, a nuclear/volcanic/impact winter is a type of climate change too, but the term climate change throughout my text refers to the adverse effects of global warming.

[2] For me, the running time is 3 min.

[3] As mentioned, my estimate for the risk from ASRSs is 4.83 (-9.50 to 25.9) times that of Toby for the existential risk from nuclear war.

[4] Converting Toby’s estimate to the period of 2024 to 2100 assuming constant annual risk.

[5] I computed the global warming beyond that of the baseline model from the maximum between 0 and the difference between the median global warming in 2062 (= (2024 + 2100)/2) relative to 1880 and 1 ºC. 2062 is the year in the middle of the period from 2024 to 2100 for which I studied the risk from ASRSs. 1 ºC is the actual global warming in my baseline model (2010) relative to 1880.

[6] 1 Tg equals 1 million tonnes.

[7] If I had defined the logistic function without using its inflection point, I would have to solve a nonlinear system of 2 equations for each Monte Carlo sample to get the logistic growth rate, and median global warming for which there is a 50 % reduction in the value of the future (T0). Since I defined this a priori, I was able to directly obtain the logistic growth rate from ln(1/“reference reduction in the value of the future” - 1)/(T0 - “reference median global warming”).

[8] From here, John “assume[s] that all of the risk stems from the India v Pakistan and India v China conflicts, and in turn that most of the risk of existential catastrophe stems from AI, biorisk and currently unforeseen technological risks [as stated by Toby Ord in The Precipice]”.

[9] See figure in section “Social cost” of Revesz 2014, which I came across via Founders Pledge’s report on climate philanthropy (search for “non-linearity of climate damage”).

[10] Although there is a 1/3 chance of mitigating the food shocks caused by ASRSs being harmful in my baseline model, which assumes a median global warming of 1 ºC.

[11] Names ordered by descending relevance of contributions.

To complement my initial comment, here are some things that struck me while reviewing, which I think should be considered in a more extensive treatment of this question (the main conclusion I agree with is that this looks like a crucial consideration worthy of study):

(iii) Work that looks, on the face of it, far more rigorous comes to vastly different conclusions on the plausibility of nuclear winter scenarios (https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2017JD027331).

Even if one puts some credence into the Robock group being right, one should heavily discount it given that the only other effort modeling this comes to vastly different conclusions.

An apples to apples comparison here would be comparing the nuclear winter estimate to a climate change scenario that assumes an extreme emissions scenario (say RCP 8.5) and no adaptation at all.

Great feedback, Johannes! Some thoughts below.

Luisa Rodriguez (who is an EA-aligned source) estimated 5.5 billion deaths for a US-Russia nuclear war:

Xia 2022 gets 5 billion people without food at the end of year 2 (the worst) for the most severe nuclear winter of 150 Tg, so the expected death toll will be lower than Luisa's (since 150 Tg is a worst case scenario).

If I assume the risk from ASRSs is 1/4.83 as high, since my estimate for the risk from ASRSs is 4.83 times as high as Toby Ord's estimate for the existential risk from nuclear war (an EA-aligned source), I get an optimal median global warming in 2100 of 2.3 ºC. Trusting this value, and the current predictions for global warming (roughly, 2 to 3 ºC by 2100), decreasing emissions would not be much better/worse than neutral.

Would you agree with the statement below?

It would be good to hear from @Luisa_Rodriguez on this -- my recollection is that she also became a lot more skeptical of the Robock estimates so I am not sure she would still endorse that figure.

For example, after the post you cite, she wrote (emphasis mine):

Given the conjunctive nature of the risk (many unlikely conditions all need to be true, e.g. reading the Reisner et al work), I would not be shocked at all if the risk from nuclear winter were < 1/100 of the estimate of the Robock group.

In any case, my main point is more that if one looked into the nuclear winter literature with the same rigor that John has looked into climate risk, one would not come out anywhere close to the estimates of the Robock group as the median, and it is quite possible that a much larger downward adjustment than a 5x discount is warranted.

I agree with your last statement indeed, I was trying to sketch out that we would need a lot more work to come to a different conclusion.

Agreed. FYI, I am using a baseline soot distribution equal to a lognormal whose 5th and 95th percentiles match the lower and upper bound of the 90 % confidence interval provided by Luisa in that post (highlighted below):

The median of my baseline soot distribution is 30.4 Tg (= (14*66)^0.5), which is 4.15 (= 30.4/7.32) times Metaculus' median prediction for the "next nuclear conflict". It is unclear what "next nuclear conflict" refers to, but it sounds less severe than "global thermonuclear war", which is the term Metaculus uses here, and what I am interested in. I asked for a clarification of the term "next nuclear conflict" in November (in the comments), but I have not heard back.
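For reference, the median above follows from a general property of the lognormal distribution: the median is the geometric mean of any pair of symmetric percentiles. A minimal sketch, taking the bounds of 14 and 66 Tg from the formula above and 7.32 Tg as the Metaculus median quoted in the comment:

```python
import math

p5_tg, p95_tg = 14.0, 66.0     # 5th and 95th percentiles of the soot distribution
metaculus_median_tg = 7.32     # Metaculus' median quoted in the comment

# For a lognormal, ln(median) is the midpoint of ln(p5) and ln(p95).
median_tg = math.sqrt(p5_tg * p95_tg)
ratio = median_tg / metaculus_median_tg
print(round(median_tg, 1), round(ratio, 2))
```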

Agree with Johannes here on the bias in much of the nuclear winter work (and I say that as someone who thinks catastrophic risk from nuclear war is under-appreciated). The political motivations are fairly well-known and easy to spot in the papers.

Thanks for the feedback, Christian!

I find it interesting that, despite concerns about the extent to which Robock's group is truth-seeking, Open Philanthropy granted it 2.98 M$ in 2017, and 3.00 M$ in 2020. This does not mean Robock's estimates are unbiased, but, if they were systematically off by multiple orders of magnitude, Open Philanthropy would presumably not have made those grants.

I do not have a view on this, because I have not looked into the nuclear winter literature (besides very quickly skimming some articles).

I don't think you can make that inference. Like, it is some evidence, but not extremely strong evidence.

Agreed. Carl Shulman, at 1:02 of the 80k podcast, even notes:

https://80000hours.org/podcast/episodes/carl-shulman-common-sense-case-existential-risks/

Rob Wiblin: I see. So because there’s such a clear motivation for even an altruistic person to exaggerate the potential risk from nuclear winter, then people who haven’t looked into it might regard the work as not super credible because it could kind of be a tool for advocacy more than anything.

Carl Shulman: Yeah. And there was some concern of that sort, that people like Carl Sagan, who was both an anti-nuclear and antiwar activist and bringing these things up. So some people, particularly in the military establishment, might have more doubt about when their various choices in the statistical analysis and the projections and assumptions going into the models, are they biased in this way? And so for that reason, I’ve recommended and been supportive of funding, just work to elaborate on this. But then I have additionally especially valued critical work and support for things that would reveal this was wrong if it were, because establishing that kind of credibility seemed very important. And we were talking earlier about how salience and robustness and it being clear in the minds of policymakers and the public is important.

Note that, earlier in the conversation, there is a passage suggesting Shulman influenced the funding decision for the Rutgers team from Open Philanthropy:

"Robert Wiblin: So, a couple years ago you worked at the Gates Foundation and then moved to the kind of GiveWell/Open Phil cluster that you’re helping now."

Notably, Reisner is part of Los Alamos in the military establishment. They build nuclear weapons there. So both Reisner and Robock from Rutgers have their own biases.

Here's a peer-reviewed perspective that shows the flaws in both perspectives on nuclear winter as being too extreme:

https://www.tandfonline.com/doi/pdf/10.1080/25751654.2021.1882772

I recommend the Lawrence Livermore paper on the topic: https://www.osti.gov/biblio/1764313

It seems like a much less biased middle ground, and generally shows that nuclear winter is still really bad, on the order of 1/2 to 1/3 as "bad" as Rutgers tends to say it is.

Here is one attempt to compare apples to apples. According to the Metaculus' community, the probability of global population decreasing by at least 95 % by 2100 is:

In agreement with these, I set the reduction in the value of the future for a median global warming in 2100 relative to 1880 of 2.4 ºC due to:

These resulted in an optimal median global warming in 2100 of 2.3 ºC[1], so Metaculus' predictions suggest decreasing emissions is not much better/worse than neutral. This conclusion is not resilient, but is some evidence that comparing apples to apples does not lead to a median global warming in 2100 much different from the one we are heading towards.

It is unclear to me whether Metaculus' community is overestimating/underestimating the risk of nuclear war relative to that of climate change. However, I think comparing the risk from climate change and nuclear war based on the probability of a reduction of at least 95 % of the global population will tend to underestimate the risk from nuclear war, because:

Note this is likely an overestimate, since the 3rd factor I used to calculate the risk from climate change based on Metaculus' predictions is artificially limited to 1 %.

Thanks for doing this (upvoted!).

This moves me somewhat, though I would still really love to see a serious examination of nuclear winter to get better estimates. Getting to a 5% chance of 95% population loss conditional on nuclear war seems really high, especially given it does not seem to condition on great power war alone.

Agreed!

I have clarified above the 5 % is conditional on a nuclear war causing a population loss of at least 10 % (not just a nuclear war).

Thanks, this makes a lot of sense then!

I have recently noticed Metaculus allows for predictions as low as 0.1 %. I do not know when this was introduced, but, if long ago and forecasters are aware of it, 0.0228 % chance for a 95 % population loss due to climate change may not be an overestimate.

It was less than 1 year ago, I would guess around 6 months ago.

Thanks! In that case, 92.5 % (= 160/173) of the predictions for a population loss of 95 % due to climate change given a 10 % loss due to climate change were made with the 1 % lower limit. So I assume 0.0228 % chance for a 95 % population loss due to climate change is still an overestimate.
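As a quick check of the arithmetic in the comment above, here is a minimal sketch. The two counts (160 and 173) are taken from the comment; the variable names are my own.

```python
# Counts quoted in the comment above; variable names are illustrative.
predictions_with_one_percent_limit = 160
total_predictions = 173

# Share of predictions made while the 1 % lower limit was still in place.
share = predictions_with_one_percent_limit / total_predictions

print(f"{share:.1%}")  # prints 92.5%
```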

I think that last point is really quite important. There hasn't really been any good quantification of climate and xrisk (John's work is good but fwiw I think his standard and type of evidence he requires would mean that most meaningful contributors to xrisk would be lost bc they are low enough probability or not concrete enough to be captured in his analysis)

For reference, I think these are the groups looking into this (you had already mentioned the 1st 2):

The approaches of Mills 2014 and Reisner 2018 are compared in Hess 2021. Wagman 2020 also arrives at a significantly more optimistic conclusion than Robock's group (emphasis mine):

However, it is important to have in mind that an ASRS would also cause disruptions to the energy system, which would tend to make it worse.

Relatedly, I recently came across this nice post by Naval Gazing (via XPT's report, I think). The author's conclusions suggest Toon 2008 (the study whose results are used to model the 150 Tg scenario in Xia 2022) overestimates the soot ejected into the stratosphere by a factor of 191 (= 1.5*2*2*(1 + 2)/2*(2 + 3)/2*(4 + 13)/2):

I have not fact-checked the post, but I encouraged the author to crosspost it to EA Forum, and offered to do it myself.
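For anyone wanting to reproduce the factor of 191 quoted above, here is a minimal sketch. The six terms are copied verbatim from the expression in my comment; I am reading the terms of the form (a + b)/2 as midpoints of ranges, which is an assumption about the notation, and the code does not check the underlying claims of the post.

```python
from math import prod

# Terms copied from the expression above. Those written as (a + b) / 2
# are read here as midpoints of ranges (an assumption on my part).
factors = [
    1.5,
    2,
    2,
    (1 + 2) / 2,
    (2 + 3) / 2,
    (4 + 13) / 2,
]

# The overall overestimation factor is the product of the individual terms.
overestimation_factor = prod(factors)

print(overestimation_factor)  # 191.25, which rounds to the 191 quoted above
```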

I think this would be very valuable to post as its own post, given that a lot of trust is still put into the nuclear winter literature from Robock, Toon et al.

Done!

Just a side note. The study you mention as especially rigorous in 1) iii) (https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2017JD027331) was made at Los Alamos Labs, an organization whose job it is to make sure that the US has a large and working stockpile of nuclear weapons. It is financed by the US military and therefore has a very clear incentive to talk down the dangers of nuclear winter. For this reason, several well-connected people in the nuclear space I talked to have said this study is not to be trusted.

An explanation of why it makes sense to talk down the risk of nuclear winter, if you want to have a working deterrence, is described here: https://www.jhuapl.edu/sites/default/files/2023-05/NuclearWinter-Strategy-Risk-WEB.pdf

Yes, I am aware of this and if this space was closer to my grantmaking, I'd be excited to fund a fully neutral study into these questions.

That said, the extremely obvious bias in the stuff of the Robock and Toon papers should still lead one to heavily discount their work.

As @christian.r who is a nuclear risk expert noted in another thread, the bias of Robock et al is also well-known among experts, yet many EAs still seem to take them quite seriously which I find puzzling and not really justifiable.

Here's hoping that the new set of studies on this funded by FLI (~$4 million) will shed light on the issue within the next few years.

https://futureoflife.org/grant-program/nuclear-war-research/

Yeah, fair enough. I personally view the Robock et al. papers as the "let's assume that everything happens according to the absolute worst case" side of things. From this perspective they can be quite helpful in getting an understanding of what might happen. Not in the sense that it is likely, but in the sense of what is even remotely on the cards.

Yeah, that seems the best use of these estimates.

I am still a bit skeptical, because I don't think it would be surprising if the worst case of what can actually happen is much less bad than what Robock et al. model. I think the search process for that literature was more "worst chain we can imagine and get published", i.e. I don't think it is really inherently bound to anything in the real world (different from, say, things that are credibly modeled by different groups, where the differences are about the plausibility of different parameter estimates).

Yes, since the case for nuclear winter is quite multiplicative, if too many pessimistic assumptions were stacked together, the final result would be super pessimistic. Luísa and Denkenberger 2018 modelled variables as distributions to mitigate this, and did arrive at more optimistic estimates. From Fig. 1 of Toon 2008, the soot ejected into the stratosphere accounting for only the US and Russia is 55.0 Tg (= 28.1 + 26.9). Luísa estimated 55 Tg to be the 92nd percentile assuming "the nuclear winter research comes to the right conclusion":

Denkenberger 2018 estimated 55 Tg to be the 80th percentile:

However, Luísa and Denkenberger 2018 still broadly relied on the nuclear winter literature. Johannes commented he "would not be shocked at all if the risk from nuclear winter would be < 1/100 than the estimate of the Robock group", which would be in agreement with Bean's BOTEC.
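To illustrate why stacking pessimistic assumptions in a multiplicative model gives a very pessimistic result, here is a toy sketch. It is not taken from Luísa's analysis, Denkenberger 2018, or any nuclear winter study: the number of factors (6), the lognormal shape, and the spread (sigma = 0.5) are all hypothetical choices of mine, made only so the comparison can be computed in closed form.

```python
import math
from statistics import NormalDist

# Hypothetical setup (not from any cited study): k independent
# multiplicative factors, each lognormal with median 1 and
# log-standard-deviation sigma.
k = 6
sigma = 0.5
z90 = NormalDist().inv_cdf(0.90)  # standard normal 90th percentile

# "Stacking": set every factor to its own 90th percentile, then multiply.
stacked = math.exp(z90 * sigma) ** k

# The product of k independent lognormals is lognormal with
# log-standard-deviation sigma * sqrt(k), so its 90th percentile is:
p90_of_product = math.exp(z90 * sigma * math.sqrt(k))

# Percentile of the stacked value within the distribution of the product.
stacked_percentile = NormalDist().cdf(
    math.log(stacked) / (sigma * math.sqrt(k))
)

print(f"stacked 90th percentiles: {stacked:.1f}")
print(f"90th percentile of product: {p90_of_product:.1f}")
print(f"percentile reached by stacking: {stacked_percentile:.2%}")
```

Under these assumptions, multiplying six individually "90th percentile" inputs lands far beyond the 90th percentile of the overall result, which is the reason to propagate full distributions instead.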

Quick update. I made a comment with estimates for the probability of the amounts of soot injected into the stratosphere studied in Xia 2022.

While I have some disagreements with the details of the analysis which I hope to write up in a separate comment soon, I think this piece overall is effective altruism at its best -- asking an important question that is outside the mainstream discussion and trying to get traction on it in a very transparent way.

Thanks, Johannes!

Great to have more discussion on this topic. Some thoughts... (1) the effects of climate change are not a probability but are already occurring, so they may not be understood as hierarchically similar to the risk of an ASRS, which has not yet occurred; (2) an ASRS could be more probable in a world exposed to higher tensions related to food shocks occurring as a result of climate change; (3) specifically thinking about the model results, I wonder if any of these models has been able to separate the effect of lower temperatures from those of the lower radiation and lower rainfall associated with the ASRS (otherwise overall effects could be underestimated); (4) I also wonder about the reliability of these models in representing the effect of temperature reductions vs. the effect of radiation reductions (they are probably more accurate in representing temperature reductions, and thus other effects of an ASRS not attenuated by global warming, such as reduced rainfall, radiation and UV, are still important in crop failure).

Thanks for sharing your thoughts, Mariana!

Agreed:

However, I only focussed on the extreme effects of climate change, which are as hypothetical as the risks of ASRSs. I also believe that, even from a neartermist perspective, it is pretty unclear whether climate change is good/bad due to effects on wild animals.

That makes sense, but I do not think it is a major issue:

I think (3) and (4) are great points!

My model does not have an explicit distinction between the effects of temperature, and those of radiation and rainfall. However, the effective soot reduction due to global warming is proportional to a uniform distribution ranging from 0 to 1. The greater the importance of the temperature variation (as opposed to those of radiation and rainfall), the narrower and closer to one the distribution should be. It arguably makes sense to use a wide distribution given our uncertainty. However:

It's great to see a principled and unapologetic criticism of a common EA policy preference.

It would be surprising if all of one's policy preferences before becoming EA ended up still being net good after incorporating EA-associated ideas in moral philosophy like sentientism and longtermism. You could say that becoming EA should make us "rethink" our "priorities" :P

For those interested in more evaluation of the knock-on effects of climate change, I highly recommend Brian Tomasik's essays on evaluating the net impact of climate change on wild animal suffering.

Thanks, Ariel!