This post will be direct because I think directness on important topics is valuable. I sincerely hope that my directness is not read as mockery or disdain towards any group, such as people who care about AI risk or religious people, as that is not at all my intent. Rather, my goal is to create space for discussion about the overlap between religion and EA.

–

A man walks up to you and says “God is coming to earth. I don’t know when exactly, maybe in 100 or 200 years, maybe more, but maybe in 20. We need to be ready, because if we are not ready then when God comes we will all die, or worse, we could have hell on earth. However, if we have prepared adequately then we will experience heaven on earth. Our descendants might even spread out over the galaxy and our civilization could last until the end of time.”

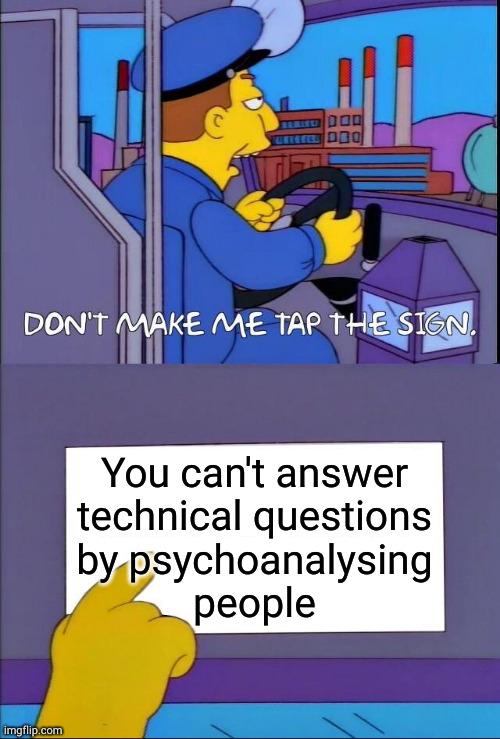

My claim is that the form of this argument is the same as the form of most arguments for large investments in AI alignment research. I would appreciate hearing if I am wrong about this. I realize when it’s presented as above it might seem glib, but I do think it accurately captures the form of the main claims.

Personally, I put very close to zero weight on arguments of this form. This is mostly due to simple base rate reasoning: humanity has seen many claims of this form and so far all of them have been wrong. I definitely would not update much based on surveys of experts or elites within the community making the claim or within adjacent communities. To me that seems pretty circular, and in the case of past claims of this form, I think deferring to such people would have led you astray. Regardless, I understand that other people either pick different reference classes or have inside view arguments they find compelling. My goal here is not to argue about the content of these arguments; it is to highlight these similarities in form, which I believe have not been much discussed here.

I’ve always found it interesting how EA recapitulates religious tendencies. Many of us literally pledge our devotion, we tithe, many of us eat special diets, we attend mass gatherings of believers to discuss our community’s ethical concerns, we have clear elites who produce key texts that we discuss in small groups, etc. Seen this way, maybe it is not so surprising that a segment of us wants to prepare for a messiah. It is fairly common for religious communities to produce ideas of this form.

–

I would like to thank Nathan Young for feedback on this. He is responsible for the parts of the post that you liked and not responsible for the parts that you did not like.

Here are a couple of thoughts on messianic-ness specifically:

I am interpreting you as saying:

"Messianic stories are a human cultural universal, humans just always fall for this messianic crap, so we should be on guard against suspiciously persuasive neo-messianic stories, like that radio astronomy might be on the verge of contacting an advanced alien race, or that we might be on the verge of discovering that we live in a simulation." (Why are we worried about AI and not about those other equally messianic possibilities? Presumably AI is the most plausible messianic story around? Or maybe it's just more tractable since we're designing the AI vs there's nothing we can do about aliens or simulation overlords.)

But per my second bullet point, I don't think that messianic stories are a huge human universal. I would prefer a story where we recognize that Christianity is by far the biggest messianic story out there, and it is probably influencing/causing the perceived abundance of other messianic stories in culture (like all the messianic tropes in literature such as Dune, or when people see political types like Trump or Obama or Elon as "savior figures"). This leads to a different interpretation:

"AI might or might not be a real worry, but it's suspicious that people are ramming it into the Christian-influenced narrative format of the messianic prophecy. Maybe people are misinterpreting the true AI risk in order to fit it into this classic narrative format; I should think twice about anthropomorphizing the danger and instead try to see this as a more abstract technological/economic trend."

This take is interesting to me, as some people (Robin Hanson, slow takeoff people like Paul Christiano) have predicted a more decentralized version of the AI x-risk story where there is a lot of talk about economic doubling times and whether humans will still complement AI economically in the far future, instead of talking about individual superintelligent systems making treacherous turns and being highly agentic. It's plausible to me that the decentralized-AI-capabilities story is underrated because it is more complicated / less viral / less familiar a narrative. These kinds of biases are definitely at work when people, e.g., bizarrely misinterpret AI worry as part of a political fight about "capitalism". It seems like almost any highly technical worry is vulnerable to being outcompeted by a message that's more based around familiar narrative tropes like human conflict and good-vs-evil morality plays.

But ultimately, while interesting to think about, I'm not sure how far this kind of "base-rate tennis" gets us. Maybe we decide to be a little more skeptical of the AI story, or lean a little towards the slow-takeoff camp. But this is a pretty tiny update compared to just learning about different cause areas and forming an inside view based on the actual details of each cause.