Jackson Wagner

Bio

Engineer working on next-gen satellite navigation at Xona Space Systems. I write about effective-altruist and longtermist topics at nukazaria.substack.com, or you can read about videogames like Braid and The Witness at jacksonw.xyz

The Christians in this story who lived relatively normal lives ended up looking wiser than the ones who went all-in on the imminent-return-of-Christ idea. But of course, if Christianity had been true and Christ had in fact returned, maybe the crazy-seeming, all-in Christians would have had huge amounts of impact.

Here is my attempt at thinking up other historical examples of transformative change that went the other way:

-

Muhammad's early followers must have been a bit uncertain whether this guy was really the Final Prophet. Do you quit your day job in Mecca so that you can flee to Medina with a bunch of your fellow cultists? In this case, it probably would've been a good idea: seven years later you'd be helping lead an army of 10,000 holy warriors to capture the city of Mecca. And over the next thirty years, you'd help convert/conquer all the civilizations of the Middle East and North Africa.

-

Less dramatic versions of the above story could probably be told about joining many fast-growing charismatic social movements (like a political movement or revolution). Or, more relevantly to AI, about joining a fast-growing tech startup whose technology might change the world (like early Microsoft, Google, Facebook, etc.).

-

You're a physics professor in 1940s America. One day, a team of G-men knocks on your door and asks you to join a top-secret project to design a seemingly impossible superweapon capable of ending the Nazi regime and stopping the war. Do you quit your day job and move to New Mexico?...

-

You're a "cypherpunk" hanging out on online forums in the mid-2000s. Despite the demoralizing collapse of the dot-com boom and the failure of many of the most promising projects, some of your forum buddies are still excited about the possibilities of creating an "anonymous, distributed electronic cash system", such as the proposal called B-money. Do you quit your day job to work on weird libertarian math problems?...

People who bet everything on transformative change will always look silly in retrospect if the change never comes. But the thing about transformative change is that it does sometimes occur.

(Also, fortunately our world today is quite wealthy -- AI safety researchers are pretty smart folks and will probably be able to earn a living for themselves to pay for retirement, even if all their predictions come up empty.)

The following is way more speculative and wacky than the proven benefits of family planning that you point out above, but I think it's interesting that there is some evidence that changing family norms around marriage / children / etc might have large downstream effects on culture, in a way that potentially suggests "discouraging cousin marriage" as an intervention to increase openness / individualism / societal-level trust: https://forum.effectivealtruism.org/posts/h8iqvzGQJ9HiRTRja/new-cause-radio-ads-against-cousin-marriage-in-lmic

Well, I wrote this script and not the other one, and I think the idea of publishing draft scripts on the Forum just never occurred to RationalAnimations before? (Even though it seemed like a natural step to me, and indeed it has been helpful.) So naturally I will advocate doing it with future scripts. We already have a couple of other ways of getting feedback, including internal feedback and working with relevant organizations (eg for this Charter Cities script we got some comments from people associated with the Charter Cities Institute, etc), but the more the merrier when you are trying to get something finalized before animating it for a large audience.

Yes, I think RationalAnimations is actually planning to do an episode on GiveDirectly sometime soon, which is why I nod towards the idea of interventions like cash transfers and bednets at the very beginning of the script -- the GiveDirectly video will probably come out first, so then in the beginning of the Charter Cities video we'll be able to make some visual allusions and put an on-screen title-card link to the GiveDirectly video.

- Since "Why The West Rules" is pretty big on understanding history through quantifying trends and then trying to understand when/why/how important trends sometimes hit a ceiling or reverse, it might be interesting to ask some abstract questions about how much we can really infer from trend extrapolation, what dangers to watch out for when doing such analysis, etc. Or as Slate Star Codex entertainingly put it, "Does Reality Drive Straight Lines On Graphs, Or Do Straight Lines On Graphs Drive Reality?" How often are historical events overdetermined? (Why The West Rules is already basically about this question as applied to the Industrial Revolution. But I'd be interested in getting his take more broadly -- eg, if the Black Death had never happened, would Europe have been overdue for some kind of pandemic or Malthusian population collapse eventually? And of course, looking forward, is our civilization's exponential acceleration of progress/capability/power overdetermined even if AI somehow turns out to be a dud? Or is it plausible that we'll hit some new ceiling of stagnation?)

- On the idea of an intelligence explosion in particular, it would be fascinating to get Morris's take on some of the prediction / forecasting frameworks that rationalists have been using to understand AI, like Tom Davidson's "compute-centric framework" (the Slate Star Codex popularization that I read has a particularly historical flavor!), or Ajeya Cotra's Bio Anchors report. It would probably be way too onerous to ask Ian Morris to read through these reports before coming on the show, but maybe you could try to extract some key questions that would still make sense even without the full CCF or Bio Anchors framework?

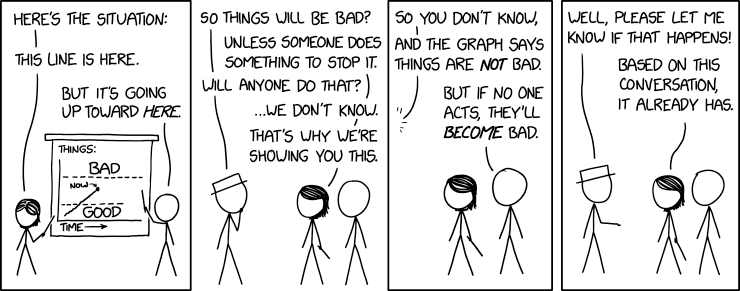

- Not sure exactly how to phrase this question, but I might also ask him how likely he thinks it is that the world will react to the threat of AI in appropriate ways -- will governments recognize the problem and cooperate to try and solve it? (As for instance governments were somewhat able to adapt to nuclear weapons, adopting an uneasy norm that all wars must now be "limited wars" and mostly cooperating on an international regime of nuclear nonproliferation enforcement.) Will public opinion recognize the threat of AI and help push government actions in a good direction, before it's too late? In light of previous historical crises (pandemics both old and recent, world wars, nuclear weapons, climate change, etc), to what extent does Morris think humanity will "rise to the challenge", versus to what extent do you sometimes just run into a problem that is way beyond your state-capacity to solve? TL;DR I would basically like you to ask Ian Morris about this xkcd comic:

- Personally if I had plenty of time, I would also shoot the shit about AI's near-term macro impacts on the economy. (Will the labor market see "technological unemployment" or will the economy run hotter than ever? Which sectors might see lots of innovation, versus which might be difficult for AI to have an impact on? etc.) I don't really expect Morris to have any special expertise here, but I am just personally confused about this and always enjoy hearing people's takes.

- Not AI related, but it would be interesting to get a macro-historical perspective on the current geopolitical outlook on the trajectories of major powers -- China, the USA, Europe, Russia, India, etc. How does he see the relationship between China and the USA evolving over coming decades? What does the Ukraine war mean for Russia's long-term future? Will Europe ever be able to rekindle enough dynamism to catch back up with the USA? And how might these traditional geopolitical concerns affect and/or be affected by the impacts of an ongoing slow-takeoff intelligence explosion? (Again, not really expecting Morris to be an impeccable world-class geopolitical strategist, but he probably knows more than I do and it would be interesting to hear a macro-historical perspective.)

- Not a question to ask Ian Morris, but if you've read "Why The West Rules", you might appreciate this short (and loving) parody I wrote, chronicling a counterfactual Song Dynasty takeoff fueled by uranium ore: "Why The East Rules -- For Now".

Finally, thanks so much for running the 80,000 Hours podcast! I have really appreciated it over the years, and learned a lot.

@MvK and @titotal, here is the new section about political tractability:

"A bigger problem is political feasibility. The whole concept of giving a city the ability to write its own rules is to make reform easier, but in order to get that ball rolling, you first need to find a nation willing to give away lots of their own regulation-writing authority in order to enable your charter city project. This isn’t completely unheard of -- in many ways, charter cities are just a bigger and bolder version of “Special Economic Zones”, where a port might be granted lower tariffs or streamlined permitting for the sake of spurring industrial development. Nevertheless, asking for broad autonomy to create an entire city is a tall order.

Indeed, Paul Romer was originally involved in efforts to create charter cities in Madagascar and Honduras, but later abandoned both projects. Despite being invited by each country’s president, the idea became politically controversial in both nations, and the project in Madagascar fell apart when the president was forced out of office during a 2009 political crisis. In Honduras, a law authorizing charter cities was passed after years of political wrangling, but Paul Romer distanced himself from the result, saying that Honduran corporate special interests had corrupted his original vision."

(With footnotes going to https://nationalpost.com/news/year-in-ideas-professor-touts-special-economic-zones-known-as-charter-cities and https://devpolicy.org/why-charter-cities-have-failed-20190716/ )

I'm still thinking about what from the existing draft could be cut or condensed, if you have any suggestions!

Yes, in response to MvK's comment, I am reworking the script to add a section (in-between "objection: why whole new cities?" and "wider benefits") about political feasibility, where I will talk about how Paul Romer abandoned the idea after delays and failed projects in Honduras and Madagascar. I'll add another comment here when I update this Forum post with the new draft.

Do you have any suggestions as to which parts of the draft could be cut or made shorter? The current post is already getting a little long compared to our ideal video length of 10-15 mins.

As I mention in my reply to MvK above, I agree that charter city efforts shouldn't literally be funded by EA megadonors or ranked as top charities by GiveWell; I just think they are a potentially helpful idea that the EA movement should support when convenient / be friendly towards. Instead, since charter cities double as a "let's all get rich" thing, they can be mostly funded by investors (just like how investors already fund lots of international development projects -- factories, etc).

Also agree that the benefit vs tractability of charter cities faces the tradeoff you describe, where a country with TERRIBLE governance would never even approve a charter city or let it persist for long, and a country with great governance doesn't "need" a charter city because it's already making relatively wise policy decisions.

But this is almost a fully general argument against every type of political/economic reform. Of course political tractability is a problem with political reforms! Nevertheless, there are a lot of countries in a middle zone between terrible and great governance, where reforms (including charter city legislation) might be possible and might also bring significant benefits.

(Personally, since I am most excited about the potential for new types of institutional innovation and the effects of governance competition, I actually think it would be very beneficial to start a few charter cities and do more policy experiments even in developed countries with good institutions -- places like the USA, Sweden, Japan, wherever. For instance, I would love to see a large city in the USA that used a Georgist land tax instead of an income tax to generate revenue, had more liberal regulation of new medical technology but perhaps stricter regulations on food & environmental quality, legalized prediction markets, and so on. But this felt like too much of a niche opinion for an intro video about the charter cities concept, which is usually focused on developing countries.)

Thanks for this feedback! For more context, the tone of the video is intended to be a kind of middle ground between persuasion and EA-Forum-style information, which I'd describe as "introducing people to a cool and intriguing new idea, as food for thought". (I also see this video as "making sure RationalAnimations has enough interesting variety to keep pulling in new viewers, even though many of our upcoming videos are going to be about more-technical AI alignment topics".) So, the video is definitely trying to be informative and somewhat evenhanded rather than a purely persuasive advertisement for charter cities. But we also want to be less hedgy than if we were writing on the Forum or directly responding to stuff like Rethink's report. I am ideally aiming for the same kind of positive-but-not-propaganda, "here's an inspiring new EA project" vibes of something like Vox's "Future Perfect" column on EA topics. (But, like I said, I am a huge fan of charter cities, so it's plausible that I've steered too close to propaganda!)

Some thoughts below, arranged in order from "this will definitely influence how I revise the script" to "this won't fit in the script but I just want to argue about / advocate for charter cities because it's fun":

Political Tractability

I actually had sections in an earlier draft that brought up objections related to SEZs and political tractability, but these ended up getting cut for space. Now I am thinking that I should add back in at least some stuff about tractability -- maybe a couple sentences along the lines of "Of course, if the motivation behind charter cities was to get around the political difficulties of fighting to pass lots of little reforms, consider that it might be even harder to pass one BIG reform to create the city -- especially when creating a charter city requires the host government to voluntarily give up some of its authority to set regulations...", and then mentioning how Romer was involved in those efforts in Madagascar that ended up going nowhere.

(Personally, I think that political tractability is definitely an issue with charter cities, but political tractability is also an issue for lots of promising policy interventions in the developing world, and also for many interventions in the "improving institutional decisionmaking" space (like switching to improved voting systems, legalizing prediction markets, etc). The hope of many charter city advocates is that, once there are a few successful projects to point to, political tractability will increase. Over the coming decade, we'll find out if they're right or not!)

Or are you perhaps thinking of tractability from a different perspective, less about the political difficulty of getting a host government to agree to allow charter cities, more about the intrinsic difficulty of managing city development? I feel like there are lots of historical examples of city development going successfully, and the bigger question mark is how to get the authority to design your own governing institutions and write your own regulations. But I would welcome more thoughts on this.

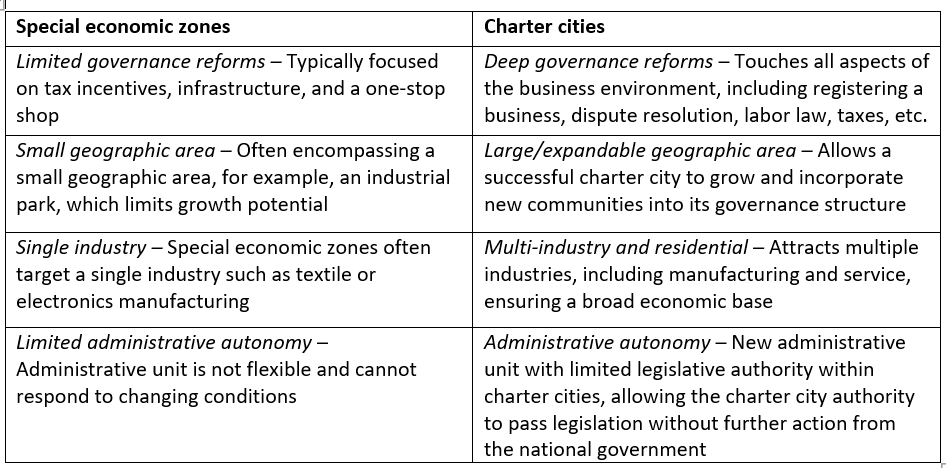

Special Economic Zones

As for Special Economic Zones, I am worried that lots of RationalAnimations viewers won't be familiar with them, so mentioning them either in support ("It might seem crazy to carve out a special area where normal regulations don't apply, but actually lots of developing countries do this all the time in SEZs!") or in opposition ("This idea is just SEZs all over again, and SEZs haven't changed the world." [except for the couple times when they have changed the world, like in Shenzhen]) For what it's worth, here are the differences that charter city advocates point to between an ideal charter city and a model SEZ:

It might be worth mentioning SEZs somehow, if only briefly ("...charter cities are in some ways a variation on the existing concept of special economic zones, but they envision deeper governance reforms and a larger physical area..."). I'll try to think about this more.

High Cost of Building New Cities

"Large development cost" feels like a very legitimate concern from the perspective of OpenPhil considering whether to charitably fund a charter city project themselves, but not as much of a drawback for individuals who want to get excited about the idea and maybe work to make charter cities a reality. In my experience, most charter city concepts are expected to be funded by private investment, and the whole thing would be a moneymaking enterprise (just like how large development projects like Hudson Yards in NYC are funded by private developers). To the extent that I think EA should be helping the charter cities movement, it is by doing high-leverage and public-goods-provision things like spreading awareness of the idea (hence this video), maybe providing support for organizations that try to solve coordination problems between potential charter city stakeholders/developers, etc -- not by spending billions of dollars to build the actual infrastructure for some new-city project. So it makes sense why this was in the Rethink report, but I don't think it would make as much sense in the video.

Uncertainty of Indirect Effects

Rethink says they couldn't find an argument that the indirect effects of charter cities (like what I describe in my "Wider Benefits" section) would be large. I guess I agree with Rethink that these benefits are uncertain, but they are also what I find most inspiring and compelling about charter cities -- for instance, the potential for charter cities to come up with totally new governing institutions that could then inspire many nations around the world. I think a "hits-based approach" is worthwhile here: it'll be hard to figure out how big the spillover effects of charter cities will be without just trying it a couple times, so I feel like somebody should try it (although again, it shouldn't literally be funded by EA megadonors), and we EAs should support them where we can! On a more practical note, RationalAnimations covers a lot of speculative far-future topics (longtermism, AI, theories about "grabby aliens", upcoming videos about whole brain emulation, etc), so I almost feel like the fact that charter cities are a big, risky bet is kind of understood from the context of the channel! One of the worries I had with this script is that even the wildest charter-city projects (like Prospera) might bore viewers with a bunch of in-the-weeds policy details about development economics and libertarianism, when they come to the channel to learn about big melodramatic sci-fi topics like human extinction and plans to colonize the galaxy!

Overall, I guess I am also just pretty convinced by the basic intuition that it's often important/effective to improve long-run economic growth, even if it's hard to do or uncertain. For more info on this, see the post "Growth and the case against randomista development" -- the fact that this is one of the most upvoted Forum posts of all time indicates to me that many people think economic growth interventions are underrated within EA!

Anyways, thanks again for your feedback, and thanks doubly for reading this comment! I will try to work some mention of SEZs and tractability into the draft.

To answer with a sequence of increasingly "systemic" ideas (naturally the following will be tinged by my own political beliefs about what's tractable or desirable):

There are lots of object-level lobbying groups that have strong EA endorsement. This includes organizations advocating for better pandemic preparedness (Guarding Against Pandemics), better climate policy (like CATF and others recommended by Giving Green), or beneficial policies in third-world countries like salt iodization or lead paint elimination.

Some EAs are also sympathetic to the "progress studies" movement and to the modern neoliberal movement connected to the Progressive Policy Institute and the Niskanen Center (which are both tax-deductible nonprofit think-tanks). This often includes enthusiasm for denser ("yimby") housing construction, reforming how science funding and academia work in order to speed up scientific progress (as advocated by New Science), increasing high-skill immigration, and having good monetary policy. All of those cause areas appear on Open Philanthropy's list of "U.S. Policy Focus Areas".

Naturally, there are many ways to advocate for the above causes -- some are more object-level (like fighting to get an individual city to improve its zoning policy), while others are more systemic (like exploring the feasibility of "Georgism", a totally different way of valuing and taxing land which might do a lot to promote efficient land use and encourage fairer, faster economic development).

One big point of hesitancy is that, while some EAs have a general affinity for these cause areas, in many of them I've never heard of any particular standout charities being recommended as super-effective in the EA sense... for example, some EAs might feel that we should do monetary policy via "nominal GDP targeting" rather than inflation-rate targeting, but I've never heard anyone recommend that I donate to some specific NGDP-targeting advocacy organization.

I wish there were more places like Center for Election Science, living purely on the meta level and trying to experiment with different ways of organizing people and designing democratic institutions to produce better outcomes. Personally, I'm excited about Charter Cities Institute and the potential for new cities to experiment with new policies and institutions, ideally putting competitive pressure on existing countries to better serve their citizens. As far as I know, there aren't any big organizations devoted to advocating for adopting prediction markets in more places, or adopting quadratic public goods funding, but I think those are some of the most promising areas for really big systemic change.