Summary

- Satisfaction with the EA community

- Reported satisfaction, from 1 (Very dissatisfied) to 10 (Very satisfied), in December 2023/January 2024 was lower than when we last measured it shortly after the FTX crisis at the end of 2022 (6.77 vs. 6.99, respectively).

- However, December 2023/January 2024 satisfaction ratings were higher than what people recalled their satisfaction being “shortly after the FTX collapse” (and their recalled level of satisfaction was lower than what we measured their satisfaction as being at the end of 2022).

- We think it’s plausible that satisfaction reached a nadir at some point later than December 2022, but may have improved since that point, while still being lower than pre-FTX.

- Reasons for dissatisfaction with EA:

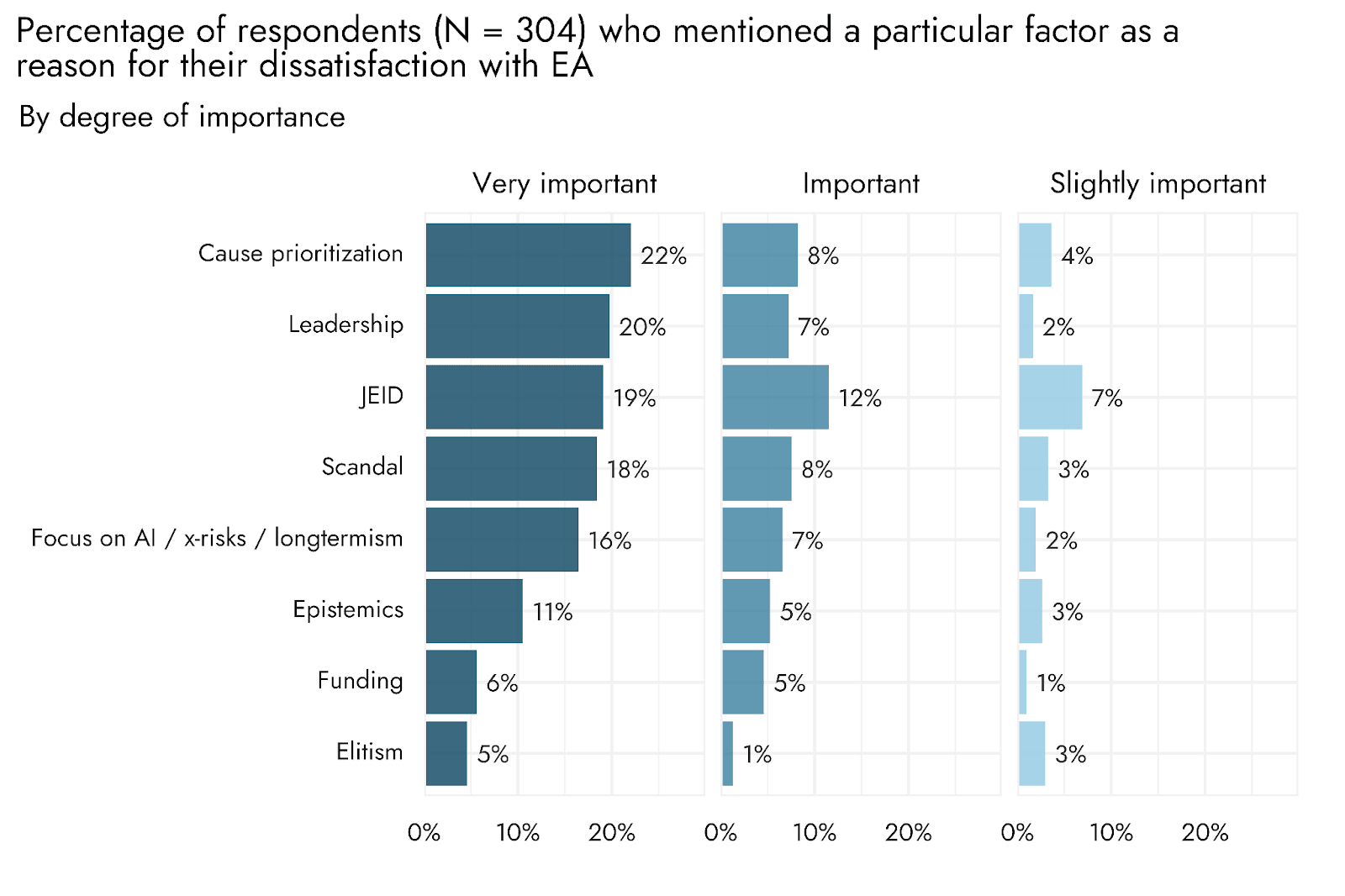

- A number of factors were cited a similar number of times by respondents as Very important reasons for dissatisfaction, among those who provided a reason: Cause prioritization (22%), Leadership (20%), Justice, Equity, Inclusion and Diversity (JEID, 19%), Scandals (18%) and excessive Focus on AI / x-risk / longtermism (16%).

- Including mentions of Important (12%) and Slightly important (7%) factors, JEID was the most commonly mentioned factor overall.

- Changes in engagement over the last year

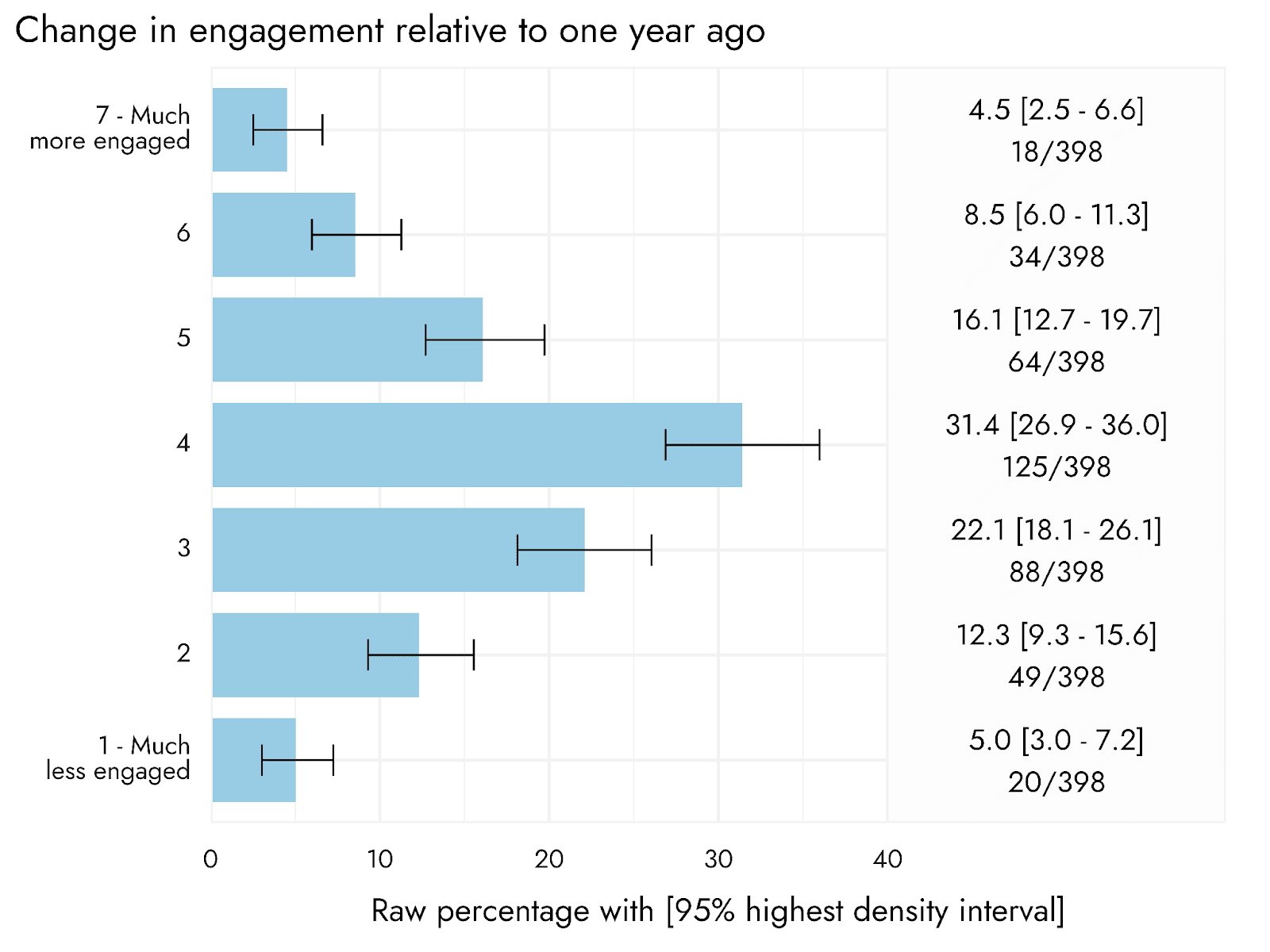

- 39% of respondents reported getting at least slightly less engaged, while 31% reported no change in engagement, and 29% reported increasing engagement.

- Concrete changes in behavior

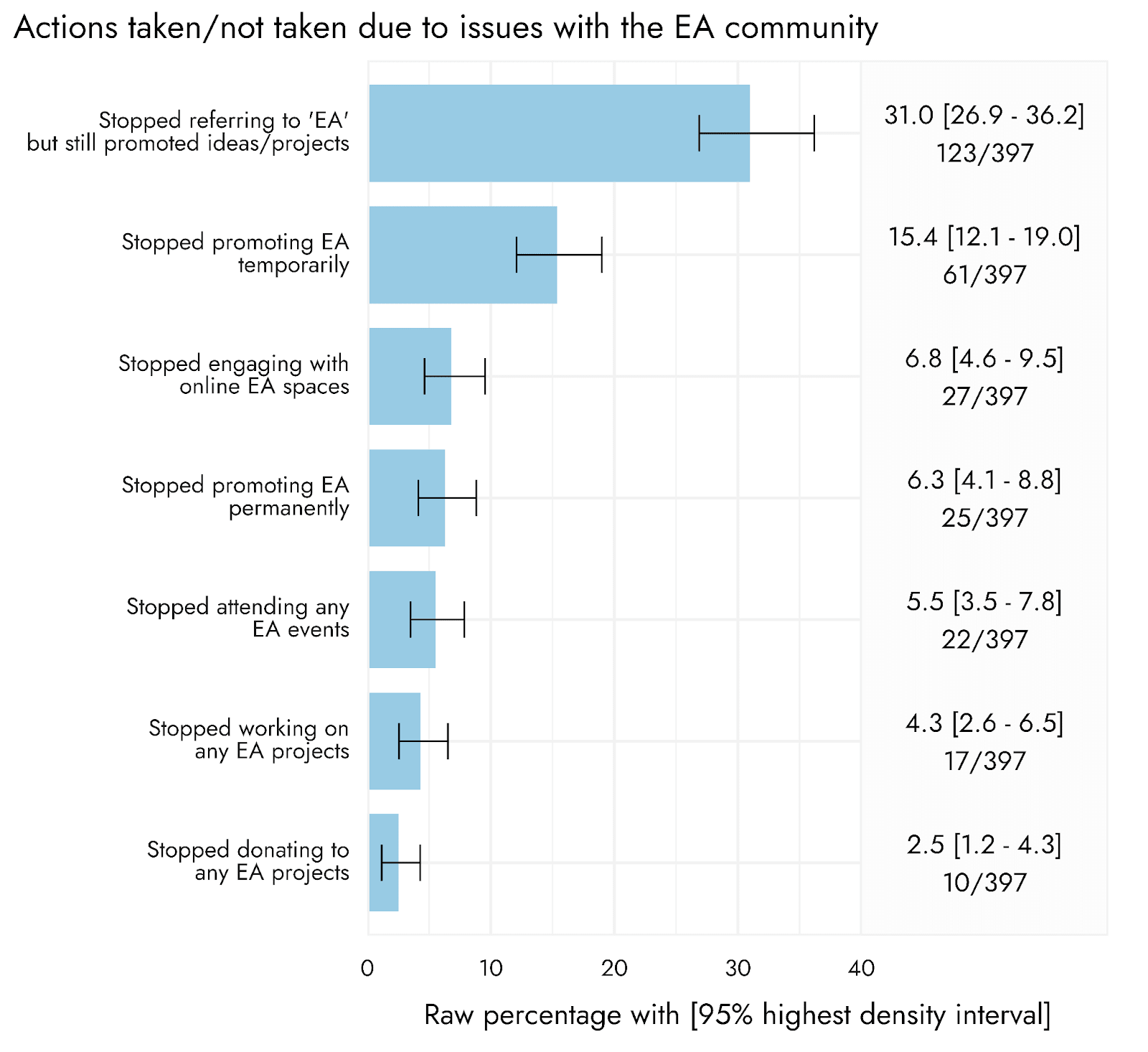

- 31% of respondents reported that they had stopped referring to “EA” while still promoting EA projects or ideas, and 15% that they had temporarily stopped promoting EA. Smaller percentages reported other changes such as ceasing to engage with online EA spaces (6.8%), permanently stopping promoting EA ideas or projects (6.3%), stopping attending EA events (5.5%), stopping working on any EA projects (4.3%) and stopping donating (2.5%).

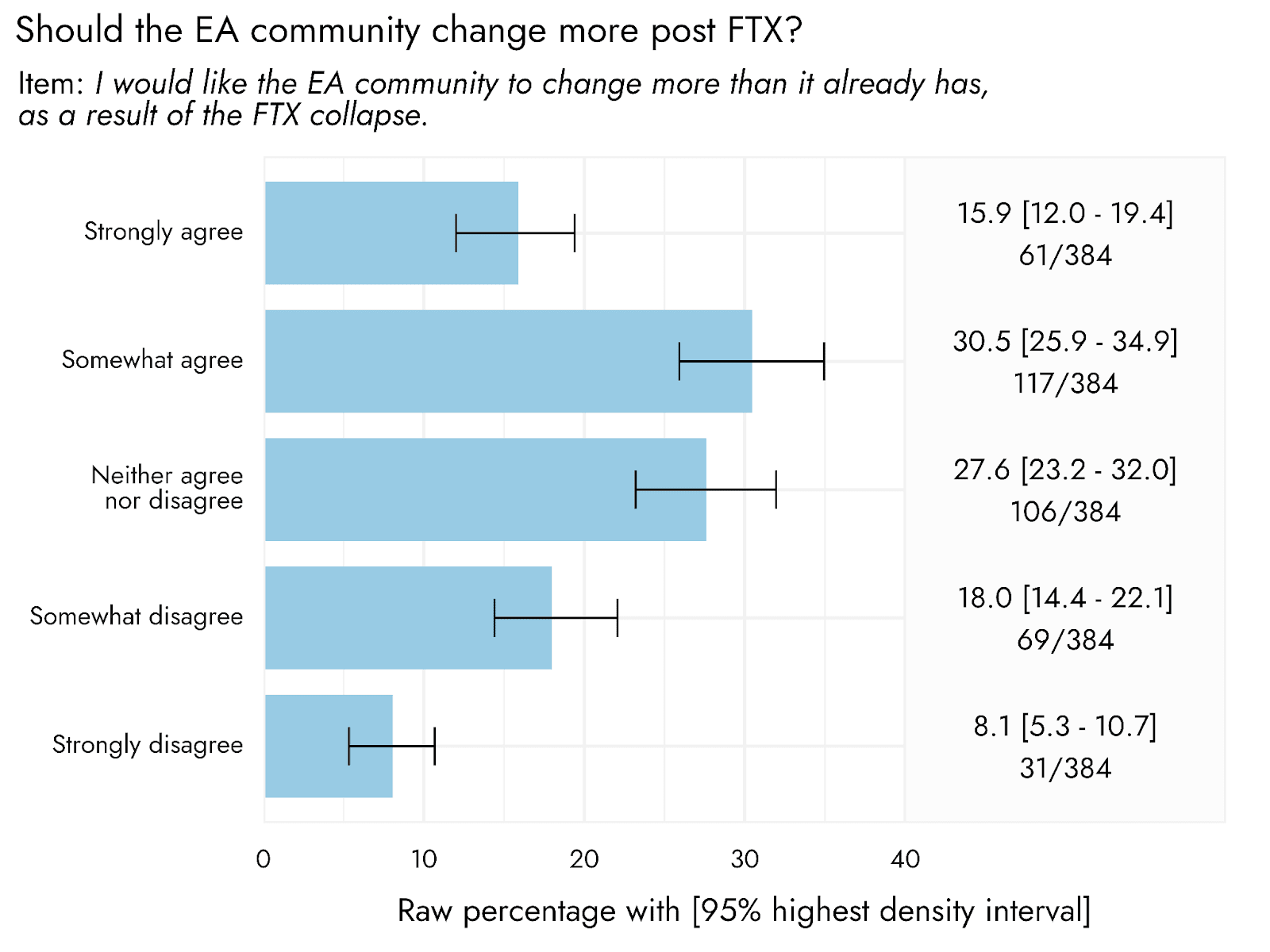

- Desire for more community change as a result of the FTX collapse

- 46% of respondents at least somewhat agreed that they would like to see the EA community change more than it already has, as a result of the FTX collapse, while 26% somewhat or strongly disagreed.

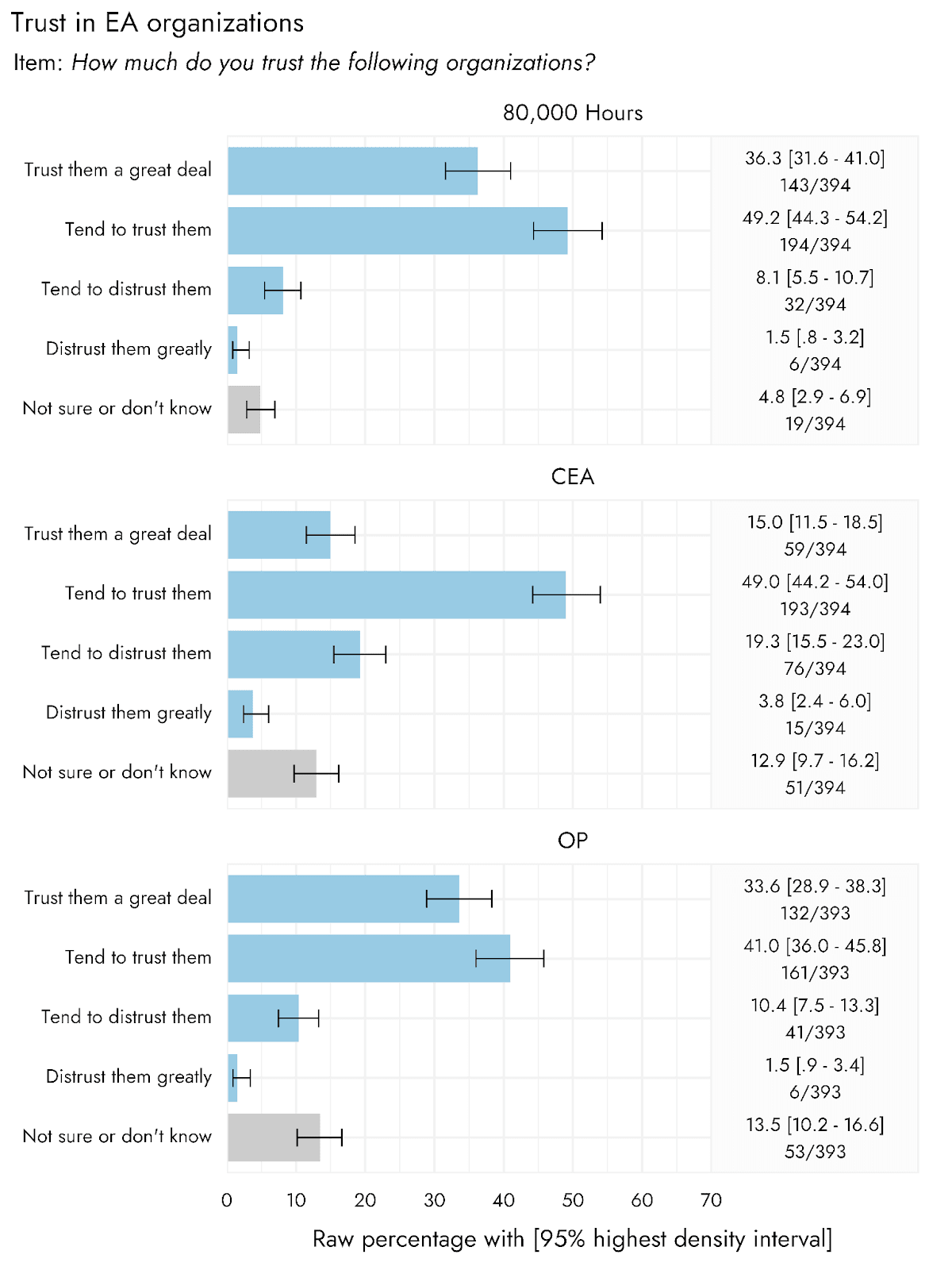

- Trust in EA organizations

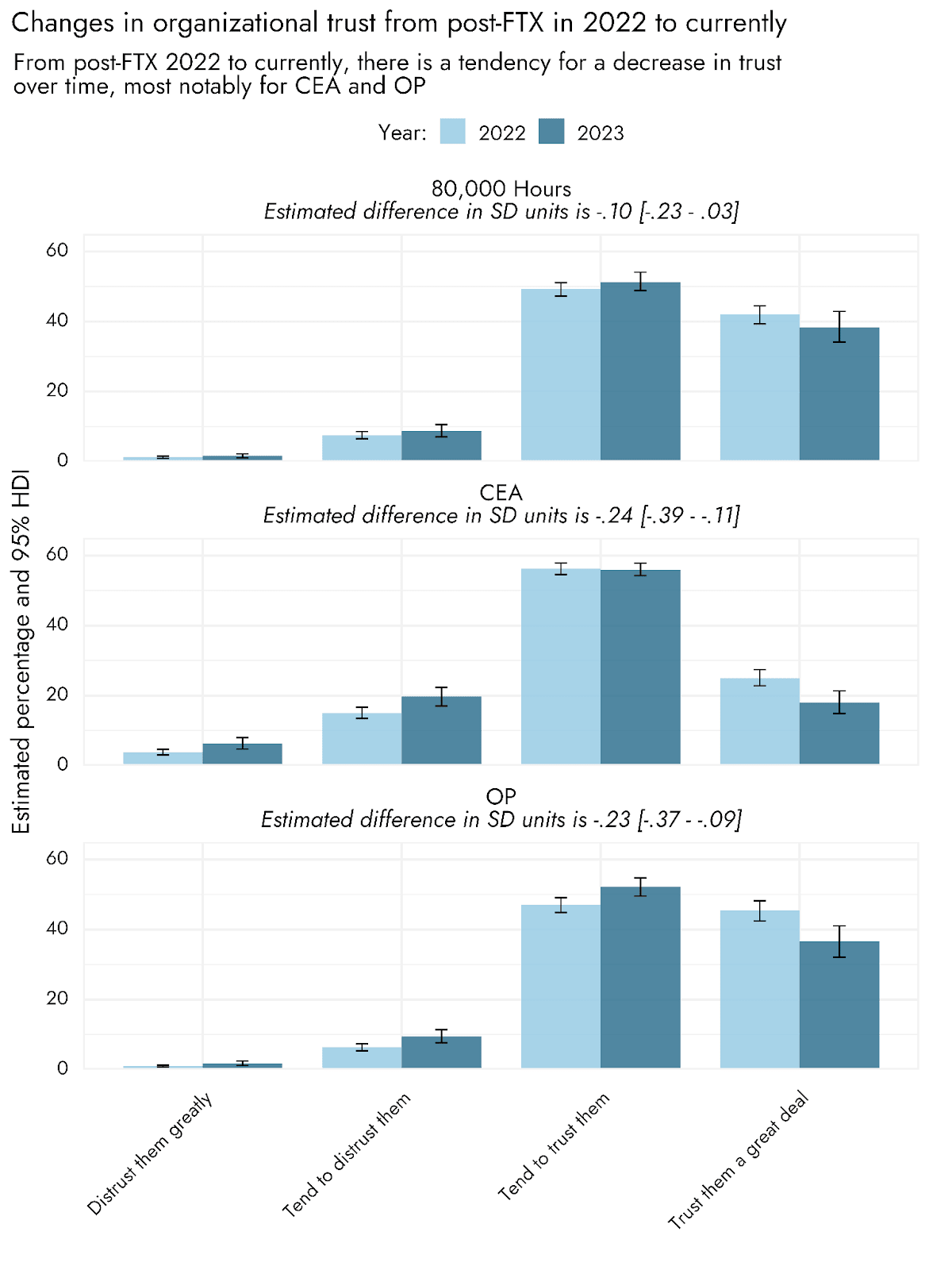

- Reported trust in key EA organizations (Center for Effective Altruism, Open Philanthropy, and 80,000 Hours) was slightly lower than in our December 2022 post-FTX survey, though the change for 80,000 Hours did not reliably exclude no difference.

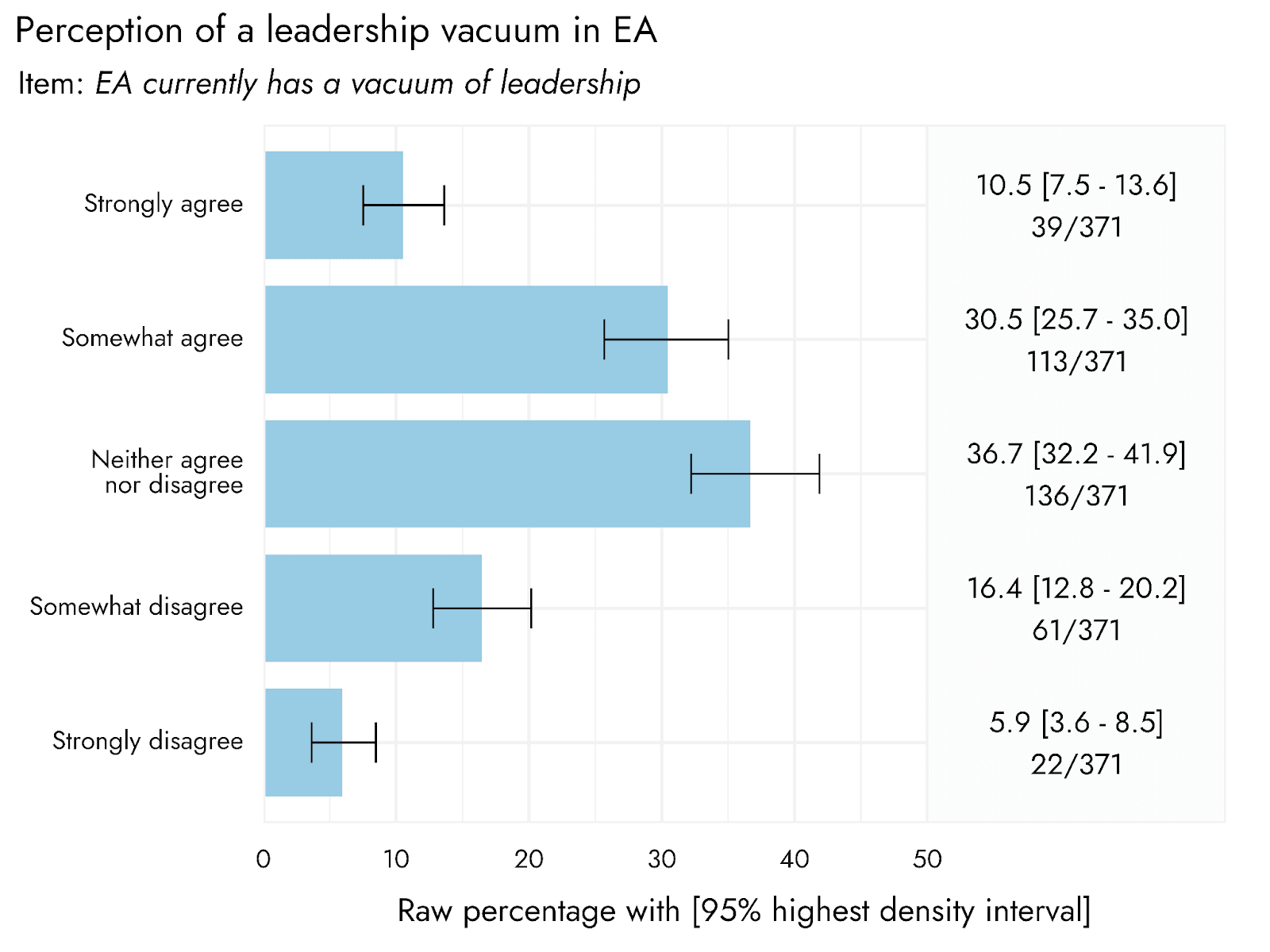

- Perceived leadership vacuum

- 41% of respondents at least somewhat agreed that ‘EA currently has a vacuum of leadership’, while 22% somewhat or strongly disagreed.

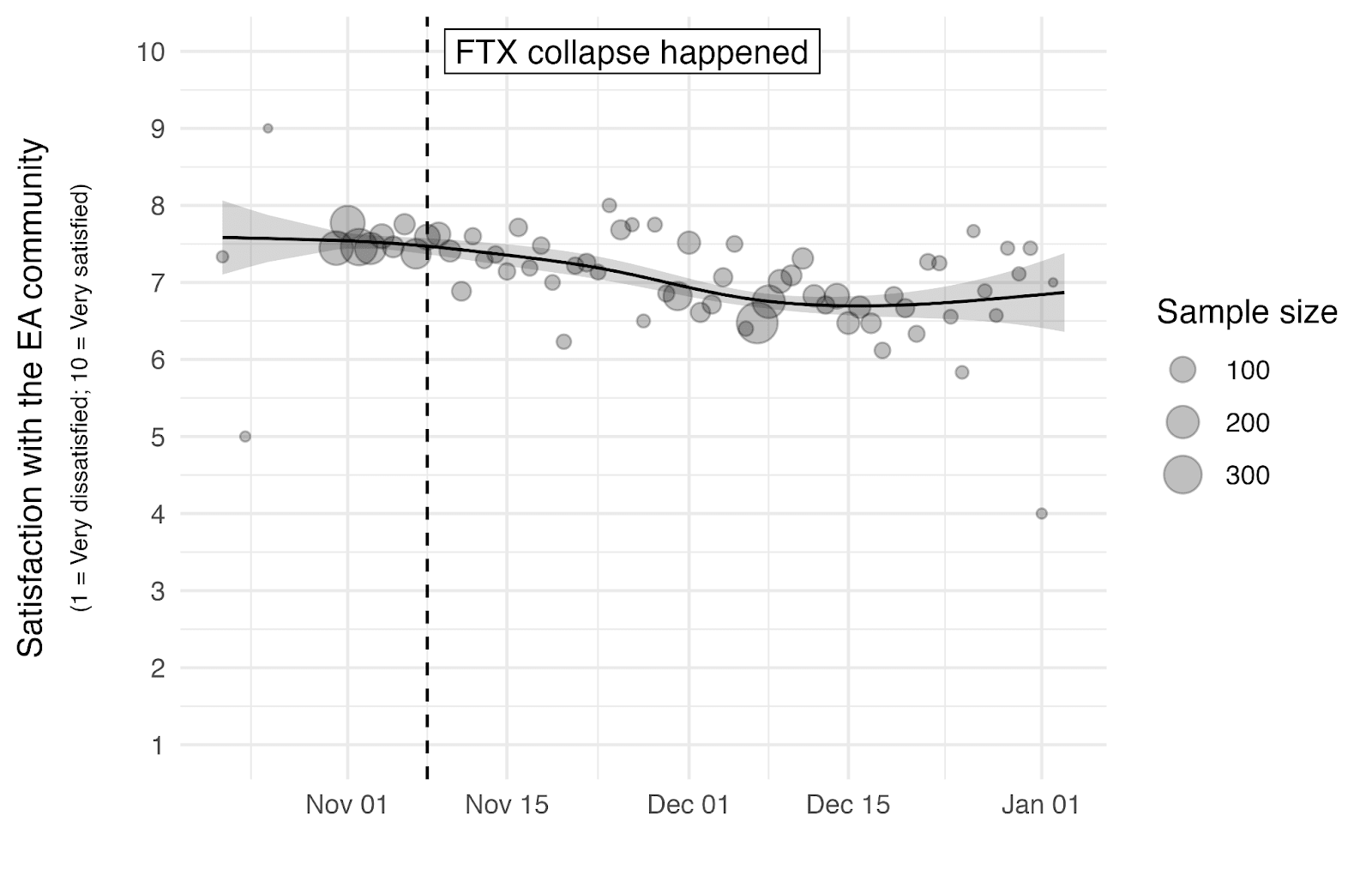

As part of the EA Survey, Rethink Priorities has been tracking community health related metrics, such as satisfaction with the EA community. Since the FTX crisis in 2022, there has been considerable discussion regarding how that crisis, and other events, have impacted the EA community. In the recent aftermath of the FTX crisis, Rethink Priorities fielded a supplemental survey to assess whether and to what extent those events had affected community satisfaction and health. Analyses of the supplemental survey showed relative reductions in satisfaction following FTX, while absolute satisfaction was still generally positive.

In this post, we report findings from a subsequent EA community survey, with data collected between December 11th 2023 and January 3rd 2024.[1]

Community satisfaction over time

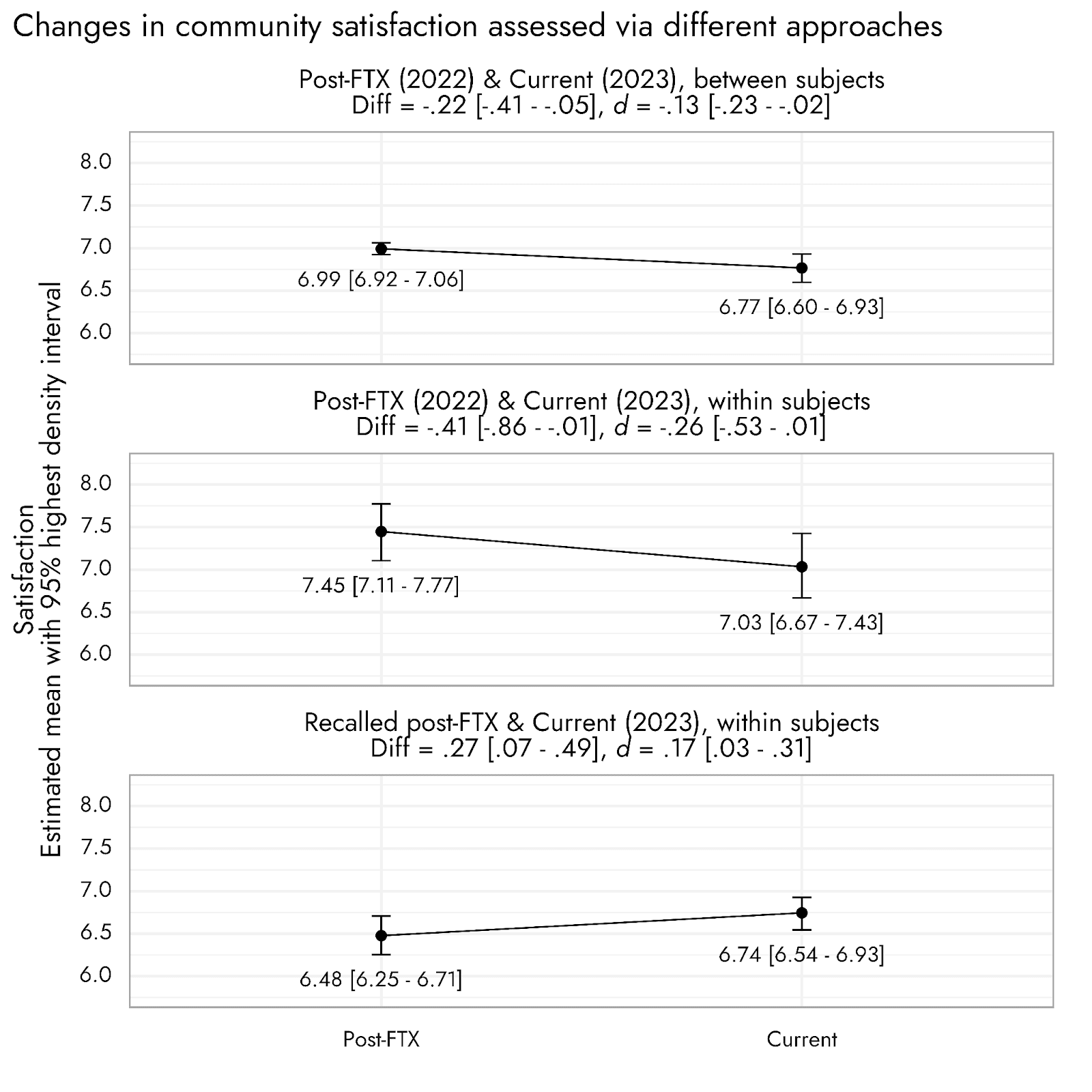

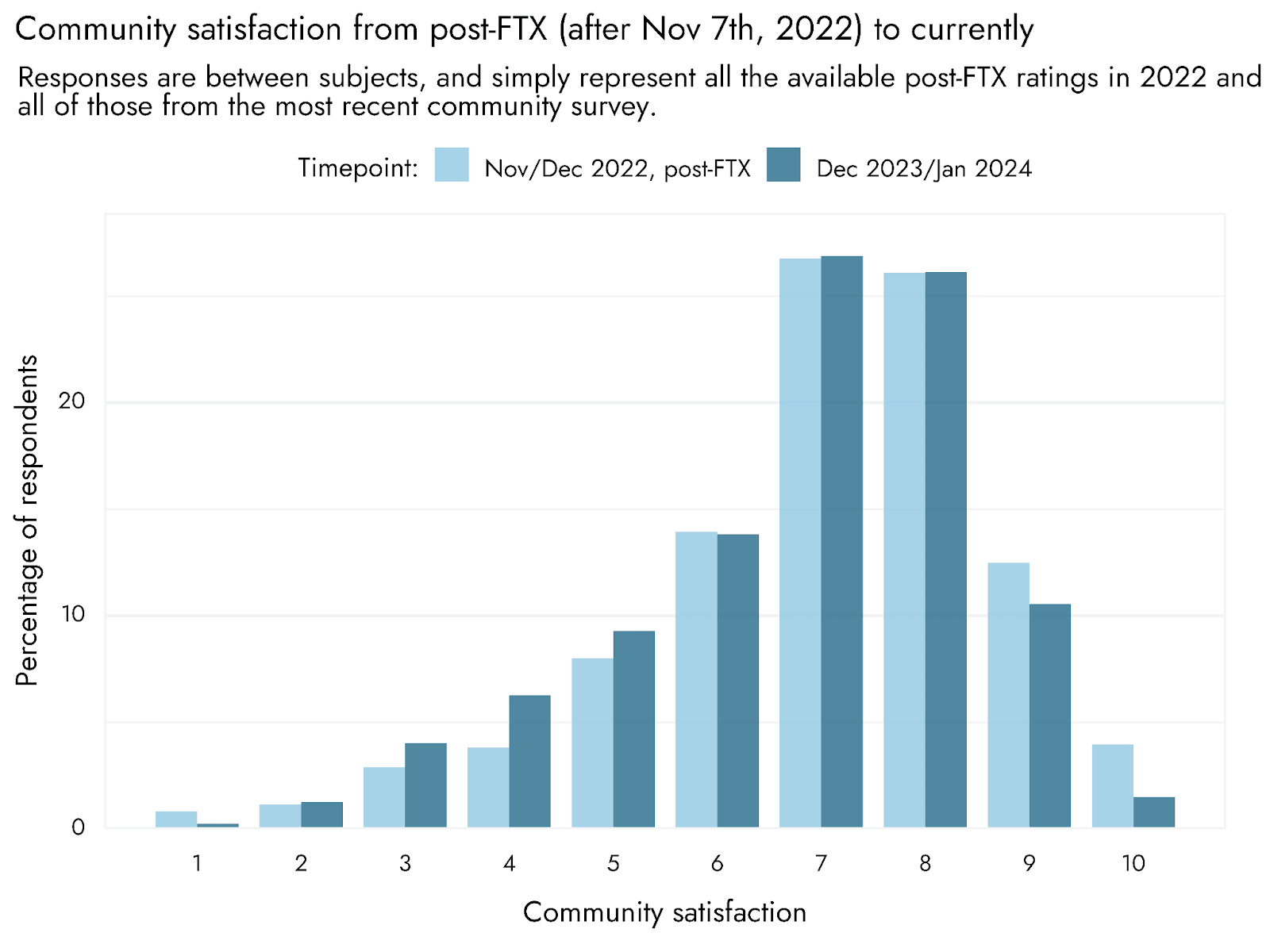

There are multiple ways to assess community satisfaction over time, so as to establish possible changes following the FTX crisis and other subsequent negative events. We have 2022 data pre-FTX and shortly after FTX, as well as the recently-acquired data from 2023-2024, which also includes respondents’ recalled satisfaction following FTX.[2] Satisfaction ratings were provided on a 1-10 scale, with 1 being Very dissatisfied, and 10 being Very satisfied.

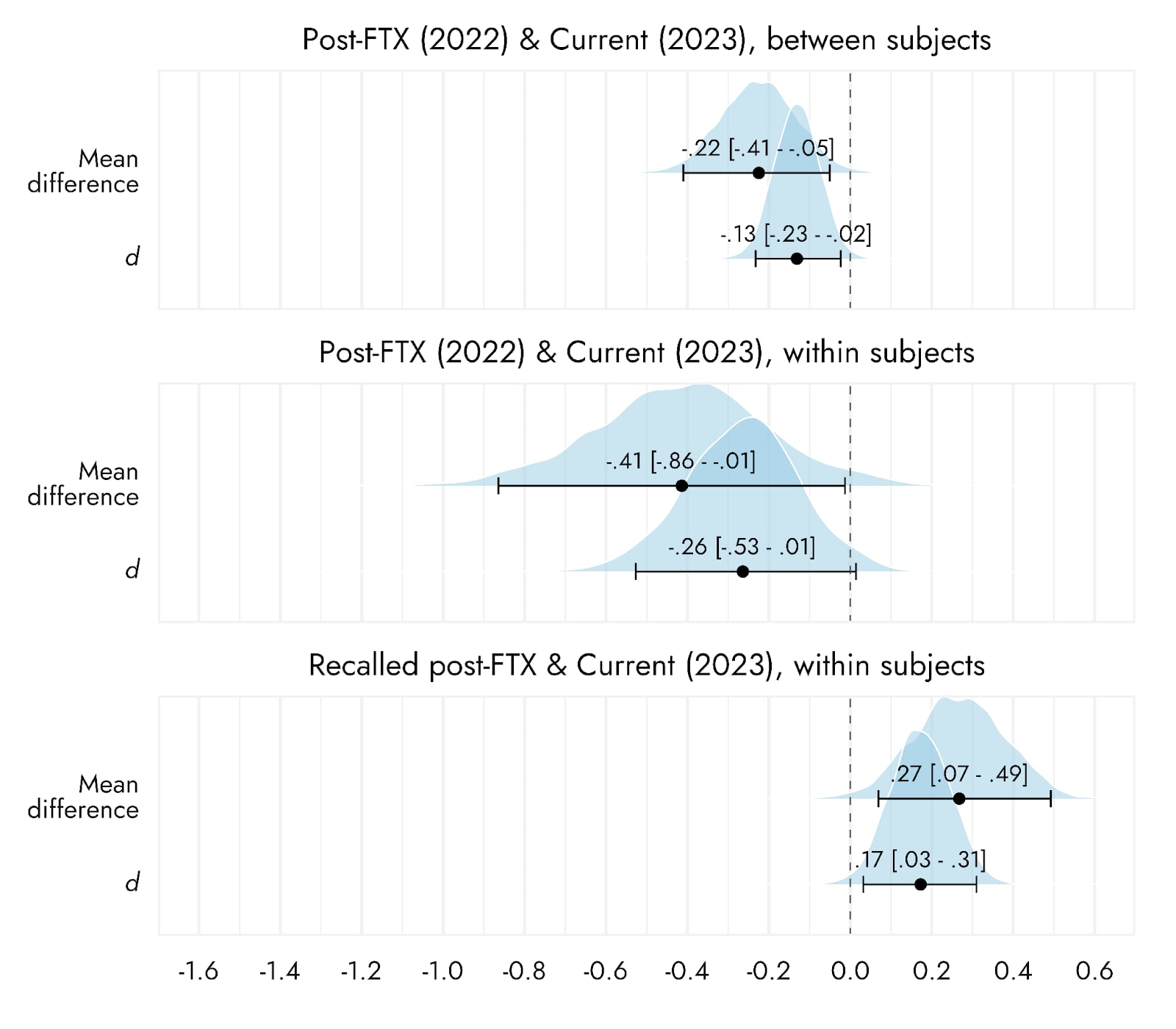

Comparing satisfaction levels of all respondents who completed the survey in 2022 (post-FTX) to all those who completed this followup survey at the end of 2023, we see a decrease in satisfaction (-.22 [-.41 - -.05] on the satisfaction scale, or d = -.13 [-.23 - -.02]). Comparing reported satisfaction only for respondents who we could individually match across both surveys, we likewise observed a decrease (-.41 points [-.86 - -.01], d = -.26 [-.53 - .01]).[3]
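To make the two kinds of numbers above concrete, here is a minimal sketch of a raw mean difference and a standardized difference (Cohen's d) for two independent groups of ratings. It assumes the common pooled-standard-deviation formula; the estimates and intervals in this post may have been produced with a different model, and the ratings below are made up for illustration rather than taken from the survey.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(later, earlier):
    """Standardized mean difference (later minus earlier) using the pooled SD."""
    n1, n2 = len(later), len(earlier)
    pooled_var = (
        (n1 - 1) * variance(later) + (n2 - 1) * variance(earlier)
    ) / (n1 + n2 - 2)
    return (mean(later) - mean(earlier)) / sqrt(pooled_var)


# Hypothetical ratings on the 1-10 satisfaction scale (not survey data).
post_ftx_2022 = [8, 7, 7, 6, 9, 7, 8, 5]
followup_2023 = [7, 7, 6, 6, 8, 7, 7, 5]

raw_diff = mean(followup_2023) - mean(post_ftx_2022)  # difference in scale points
d = cohens_d(followup_2023, post_ftx_2022)            # difference in SD units
```

A negative value of either quantity indicates lower satisfaction in the later group. For respondents matched across surveys, a within-subjects version based on each person's change score would be the closer analogue.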

However, as an additional measure, we also asked respondents to report, to the best of their ability, what they “recall [their] satisfaction with the EA community to have been shortly after the FTX collapse at the end of 2022?”. Interestingly, compared to recalled levels of satisfaction post-FTX, there is a small increase in satisfaction ratings in the followup survey in late 2023 (.27 [.07 - .49] points, d = .17 [.03 - .31]). In addition, we can observe that respondents’ recalled post-FTX satisfaction was lower than their actual, observed satisfaction post-FTX, in the 2022 survey (recorded satisfaction was 7.4 [7.1 - 7.8], whereas recalled was 6.9 [6.5 - 7.3], d = -.37 [-.67 - -.11]).

One possible interpretation of these results is that respondents are simply mistaken about their level of satisfaction post-FTX (retrospectively imagining it to be lower than it was). If so, then even if individuals believe that their satisfaction has increased since immediately after FTX, we might conclude their satisfaction has actually worsened. However, another possibility is that when respondents report their recalled satisfaction “shortly after the FTX collapse”, they may be thinking of a time after FTX when they had learned more details of the FTX scandal, when their satisfaction was near its lowest. Our reasoning is that immediately after the FTX collapse, people would likely not have been aware of the full implications of the scandal, but as more details came out satisfaction may have further decreased. If so, when people recall their level of satisfaction post-FTX, it may be natural to recall their satisfaction after they learned more details about the scandal (when their satisfaction was lower), rather than a time shortly after the collapse when their satisfaction may have been higher. Indeed, our earlier post-FTX survey (below) showed a greater decline in satisfaction happening some weeks after the FTX collapse. If so, then we think a plausible explanation for our pattern of findings is that satisfaction has improved somewhat relative to its lowest point post-FTX, but that it remains below the immediate post-FTX period and considerably lower than the pre-FTX period.

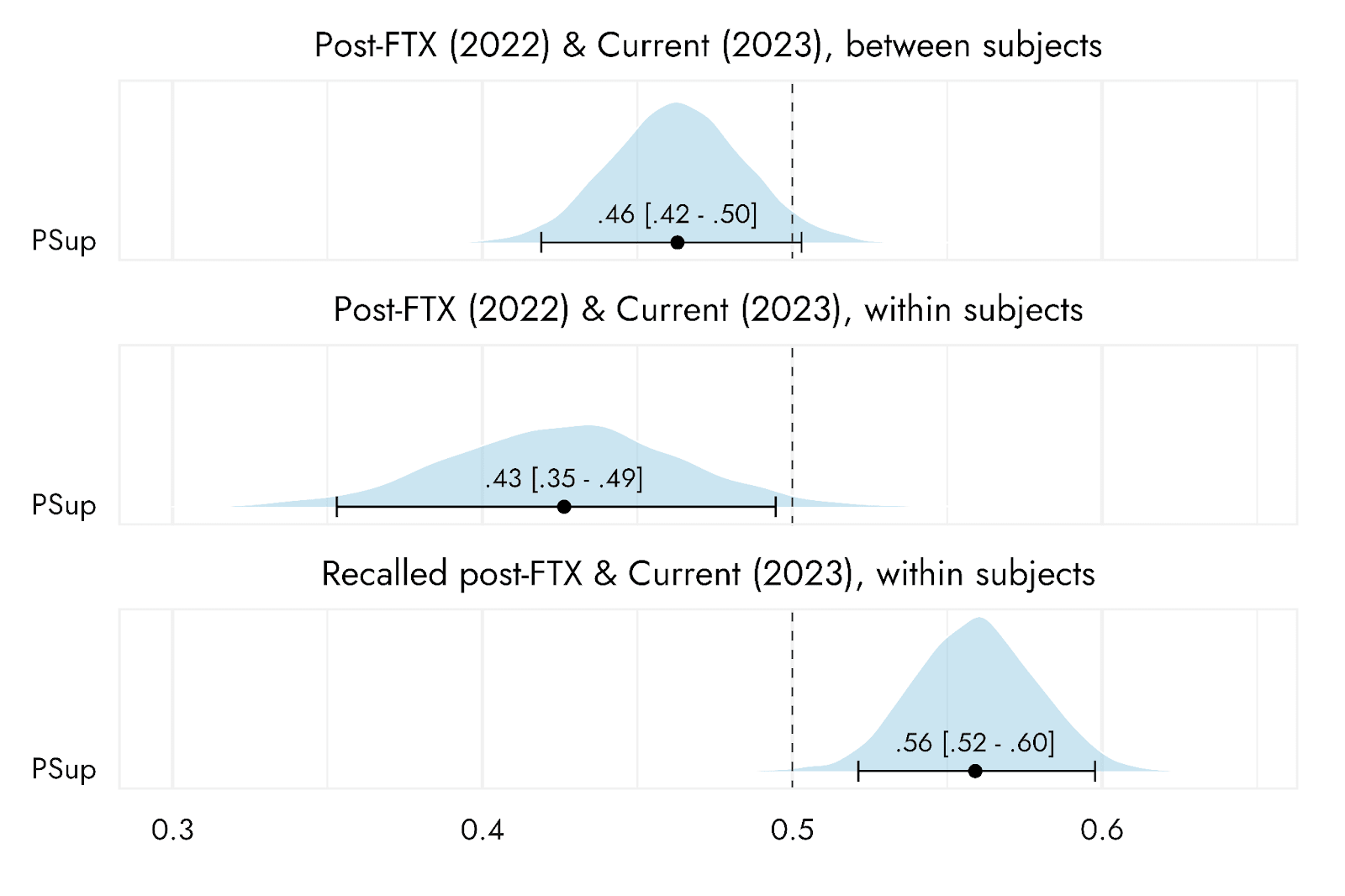

Effect sizes for these different comparisons are presented in the appendix, and the figure below gives a sense of the magnitude of the change between the post-FTX period and the present.

Reasons for dissatisfaction with the EA community

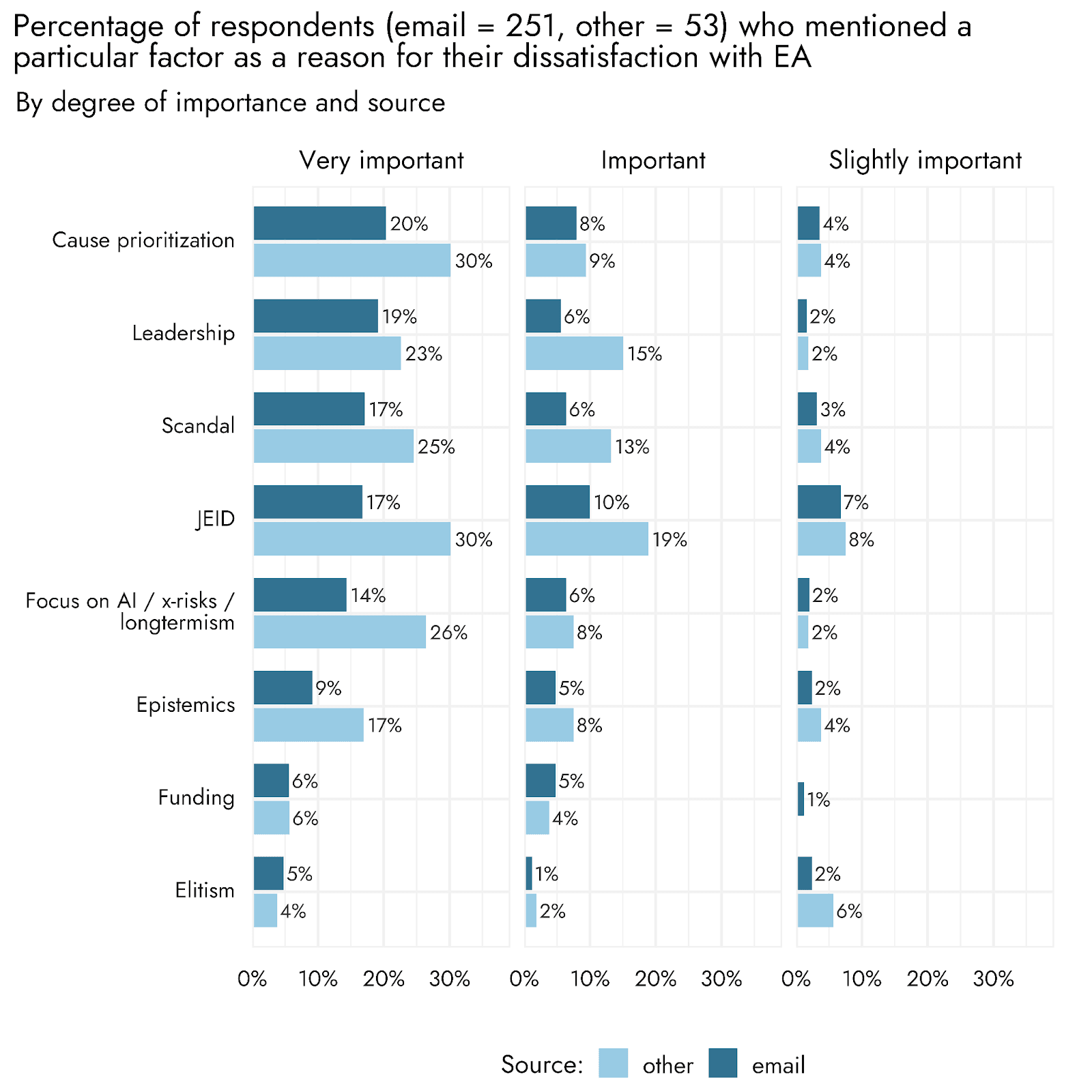

Regardless of their reported satisfaction levels, respondents were also given the opportunity to provide reasons for having low satisfaction with the EA community, separating these into Very important, Important, and Slightly important factors. Of the 398 people taking the survey, 304 (76%) provided a qualitative response. Those who provided responses, as would be expected, had lower levels of satisfaction than those who did not, on average (-1.15 [-1.54 - -.78] points on the 10pt scale). Responses were qualitatively coded with reference to a range of data-driven common factors of interest that arose across multiple responses. Responses could be coded as fitting multiple categories simultaneously. These factors, and their meanings, were:

- Cause prioritization: References to concerns about how causes are prioritized in the EA community, such as general concerns about cause prioritization and specific concerns such as an overemphasis on certain causes (e.g., AI risk) and ideas (e.g., longtermism).

- Leadership: References to issues pertaining to leadership in the EA community, such as concerns about specific individuals, the involvement of EA leadership in controversial situations, lack of accountability and transparency of EA leadership, centralization of power, and poor decision making.

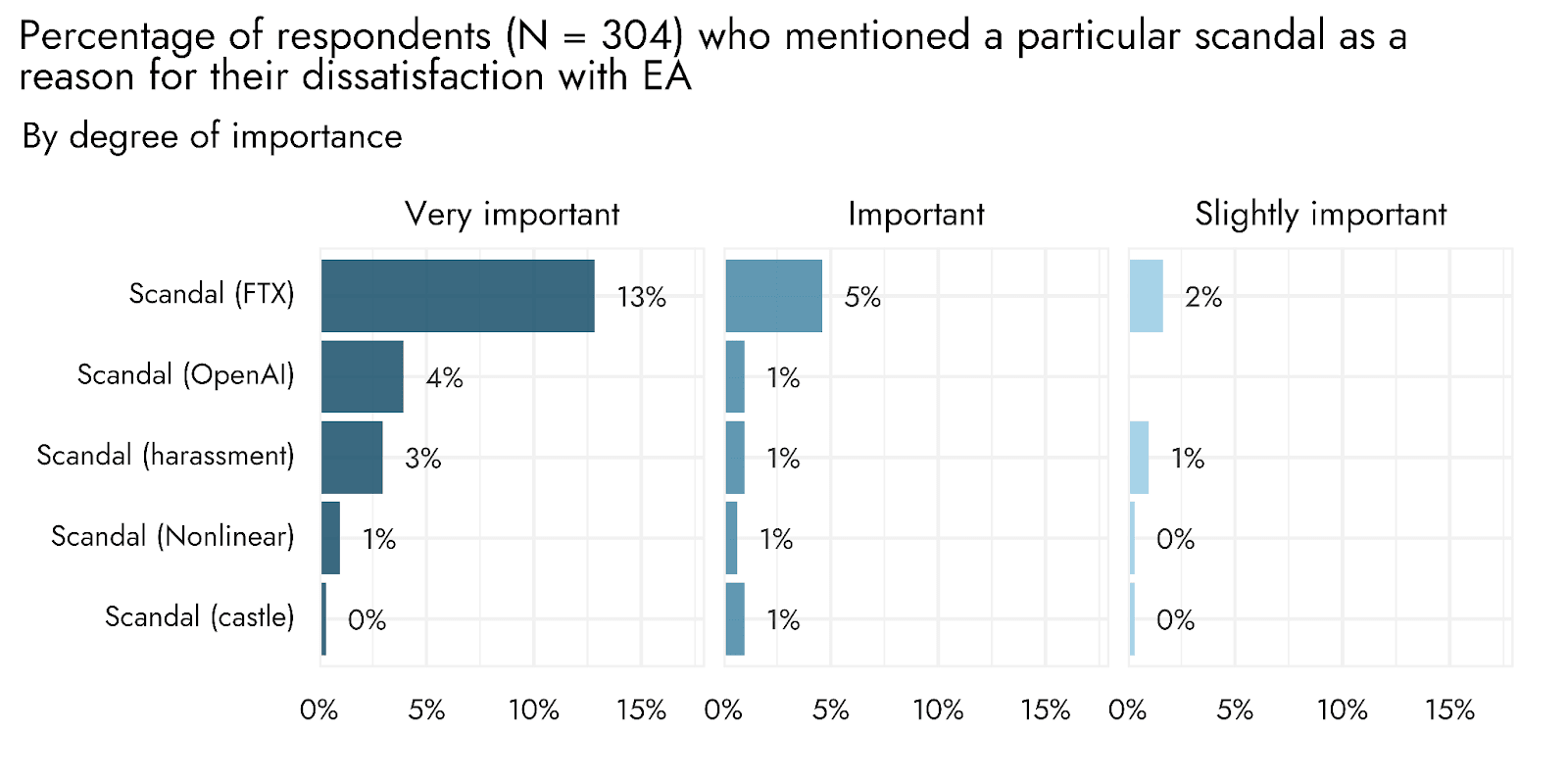

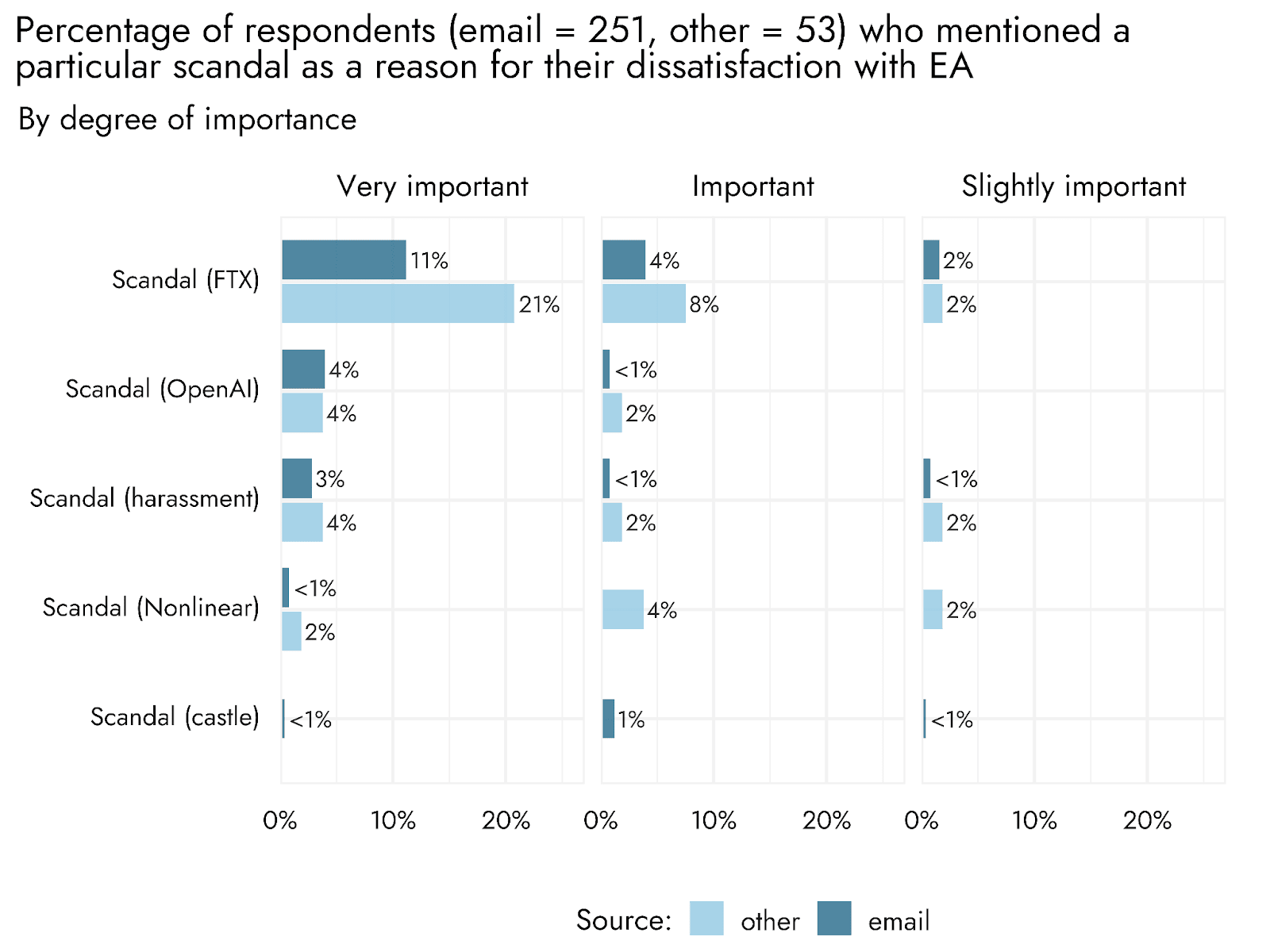

- Scandals: References to a specific scandal in EA (e.g., FTX, sexual harassment, OpenAI board[4]) or a topic indicative of a scandal (e.g., fraud). Notably, some responses highlighted not only the occurrence of the scandal as a relevant factor but also the handling of it, citing issues related to transparency, accountability, communication, and lack of institutional changes.

- Justice, Equity, Inclusion, & Diversity (JEID): includes references to wanting to see more diversity (e.g., gender, age, race, country), as well as more general complaints about racism, sexism or excessive focus on the US/UK or young people, and about community responses to these issues. This also included a small number of comments (1.9% of responses[5]) objecting to too much focus on such issues.

- Focus on AI risks/x-risks/longtermism: Mainly a subset of the cause prioritization category, consisting of specific references to an overemphasis on AI risk and existential risks as a cause area, as well as longtermist thinking in the EA community.

- Epistemics: References to epistemological issues in EA, including group think, overconfidence, unwarranted deference, and failure to consider alternative views or ideas.

- Funding: References to issues related to funding in EA, such as the centralization of funding, post-FTX funding challenges, its impact on cause areas, and funding procedures (e.g., transparency and communication).

- Elitism: References to elitist issues in EA, such as focus on certain schools and educational backgrounds.
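Because a single response can be coded into several categories at several importance levels, the percentages reported for each category need not sum to 100%. A minimal sketch of how such shares could be tallied is below. The data structure (a list of `(category, importance)` pairs per response), the function name `category_shares`, and the example responses are all assumptions made for illustration, not the actual coding pipeline.

```python
from collections import Counter


def category_shares(coded_responses, level=None):
    """Percent of responses mentioning each category, optionally at one importance level."""
    counts = Counter()
    for response in coded_responses:
        # A set ensures each response counts at most once per category.
        mentioned = {cat for cat, lvl in response if level is None or lvl == level}
        counts.update(mentioned)
    n = len(coded_responses)
    return {cat: 100 * c / n for cat, c in counts.items()}


# Hypothetical coded responses (not survey data).
coded = [
    [("JEID", "Very important"), ("Scandals", "Important")],
    [("Cause prioritization", "Very important"), ("Focus on AI", "Very important")],
    [("JEID", "Slightly important")],
    [("Leadership", "Very important"), ("JEID", "Important")],
]

very_important = category_shares(coded, level="Very important")
any_level = category_shares(coded)
```

Note that the denominator here is the number of people who gave a response, which matches how the percentages in this section are described.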

The results show that a number of factors are mentioned roughly equally often as causes of low satisfaction with EA. Concern about Cause prioritization (22%) was the most commonly mentioned as a Very important factor, followed by concerns about Leadership (20%), JEID (19%), Scandals (18%) and excessive Focus on AI / x-risk / longtermism (16%).[6] Counting responses across all levels of importance, JEID was the most commonly cited category (mentioned in 38% of responses), but otherwise the ordering of categories was fairly similar.[7]

These results suggest that reasons for dissatisfaction with EA are not dominated by a single factor. Likewise, this means that the FTX crisis itself was not the dominant factor mentioned as a reason for dissatisfaction with EA, though it was the most commonly mentioned factor within the scandal category, being cited as Very important by 13% of those who provided a qualitative response. Approximately a third as many (4%) referenced OpenAI as Very important, 3% scandals involving harassment, 1% Nonlinear, and less than 1% the ‘castle’ (Wytham Abbey) (see footnote 4). It’s worth noting that these results are probably influenced by recency bias to some extent, with more recent events likely being more salient to respondents.

Changes in EA engagement

We additionally asked respondents to indicate the extent to which, if at all, they had increased or decreased their engagement with EA, relative to a year previously. The most commonly chosen single option was 4, which can be interpreted as no change (31%), although on net respondents indicated a tendency towards lesser rather than greater engagement: 39% of respondents reported being at least slightly less engaged, whereas 29% of respondents reported being at least slightly more engaged (the percentage difference between decreasing vs. increasing engagement was 10.19 [2.60 - 18.36], with 1.36 [1.06 - 1.70] times as many respondents decreasing as increasing).
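As a rough illustration of the two summary quantities above (the percentage-point difference and the ratio between decreasing and increasing engagement), here is a sketch that computes both and attaches a percentile bootstrap interval. The bootstrap is a swapped-in technique chosen for simplicity; the intervals reported in this post may have been derived differently. The response counts are hypothetical.

```python
import random


def engagement_summary(responses):
    """Return (percentage-point difference, ratio) of 'less' vs 'more' responses."""
    n = len(responses)
    less = sum(r == "less" for r in responses)
    more = sum(r == "more" for r in responses)
    diff = 100 * (less - more) / n
    ratio = less / more if more else float("inf")
    return diff, ratio


def bootstrap_interval(responses, index, n_boot=2000, seed=0):
    """95% percentile bootstrap interval for one element of engagement_summary."""
    rng = random.Random(seed)
    stats = sorted(
        engagement_summary(rng.choices(responses, k=len(responses)))[index]
        for _ in range(n_boot)
    )
    return stats[int(0.025 * n_boot)], stats[int(0.975 * n_boot) - 1]


# Hypothetical counts (not survey data): 40 less engaged, 30 unchanged, 30 more engaged.
responses = ["less"] * 40 + ["same"] * 30 + ["more"] * 30

diff, ratio = engagement_summary(responses)
diff_lo, diff_hi = bootstrap_interval(responses, index=0)
ratio_lo, ratio_hi = bootstrap_interval(responses, index=1)
```

An interval for the difference that excludes 0, or an interval for the ratio that excludes 1, would indicate a reliable net shift in one direction.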

Changes in EA-related behaviors

In addition to examining changes in satisfaction, we also examined reported changes in specific behaviors related to effective altruism. This follows requests and suggestions from community members, who highlighted possible changes in behavior after FTX (for example here). We asked respondents whether or not they had ceased to engage in any of several EA-related behaviors, such as stopping working on all EA-related projects, or ceasing to donate to an EA-aligned organization. These results may be skewed in an optimistic direction due to selection effects: those who dramatically reduced their engagement with the community would presumably be much less likely to answer the survey. However, they may provide useful insight into changes among those who remain engaged with the community.

In line with Luke Freeman’s observations about reduced willingness to promote EA in the wake of FTX, the most commonly reported change was 31% of respondents stating they had stopped referring to ‘EA’, while still promoting EA ideas and projects. Another sizable percentage (15%) reported temporarily stopping promotion of EA. However, only 6% reported having stopped promoting EA projects, ideas or actions permanently.

Smaller minorities of respondents also reported that they had stopped engaging with EA spaces (7%) or attending EA events (6%), permanently stopped promoting EA (6%), entirely ceased working on all EA projects (4%), and stopped donating to EA projects (3%) (note that the term ‘any’ in the graph means they no longer give to/attend/work on any projects). While we refer to these as ‘smaller minorities’ given the smaller absolute percentages, this is not to say that such changes are small in terms of the impact such changes might have.

Perception of issues in the EA community

Besides respondents’ satisfaction, and changes in their own behaviors or levels of engagement, we asked respondents for their attitudes towards certain aspects of the EA community, and their perception of possible issues.

Leadership vacuum

Firstly, respondents were asked to rate the extent to which they agreed or disagreed with the statement: “EA currently has a vacuum of leadership”, from Strongly disagree to Strongly agree. The modal response was Neither agree nor disagree, but a sizable percentage of people either somewhat agreed (30%) or strongly agreed (11%), relative to just 16% somewhat disagreeing and 6% strongly disagreeing.

Desire for more community change following FTX

When asked if they would like to see the EA community change more than it already has in the aftermath of the FTX collapse, the modal response was ‘Somewhat agree’ (30%), with a further 16% of people choosing ‘Strongly agree’. In contrast, 18% somewhat disagreed and only 8% strongly disagreed.

Trust in EA-related organizations

For three high-profile EA-related organizations (80,000 Hours, Center for Effective Altruism - CEA, and Open Philanthropy - OP), respondents were asked to indicate their level of trust. For all three, the majority of respondents either tended to trust or trusted them a great deal. However, CEA had notably lower levels of trust than the other two organizations. Treating the trust items as ordinal and excluding ‘Don’t know’ responses, we estimate that trust for CEA is .88 [.69 - 1.07] standard deviation units lower than for 80,000 Hours, and .81 [.62 - 1.00] standard deviation units lower than for Open Philanthropy. No reliable difference in trust between 80,000 Hours and Open Philanthropy was observed (80,000 Hours was .07 [-.11 - .25] higher).

We were also able to assess estimates of trust in these organizations currently, relative to when these questions were first asked in the recent aftermath of the FTX collapse in 2022. There were not a sufficient number of respondents to match, so we have simply compared all the responses from 2022 with all those of this most recent supplemental survey. Results for both OP and CEA evinced a decline in trust, with 80,000 Hours tending in this direction but not reliably excluding an absence of any change over time.

Appendix

Effect sizes for satisfaction over time

Figure A1. Estimated mean differences between time points and corresponding effect sizes (Cohen’s d within subjects)

Figure A1 shows estimates in terms of Cohen’s d. In Figure A2, we supplement estimates of effect size based upon numeric ratings with an ordinal approach, in which we simply assess whether scores tended to increase or decrease at different time points. When scores do not change at all, or as many people increase as decrease (i.e., there is no clear effect), Probability of Superiority (PSup) will be .5. As increasing proportions of respondents have scores that increase from the earlier to the later time point, PSup will approach 1, and conversely it will approach 0 as more scores decrease. A PSup of .56, for example, indicates that about 56% of respondents are expected to have greater satisfaction scores currently, relative to their recalled post-FTX score.
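For paired scores, one simple way to compute a PSup-style statistic is the share of respondents whose later score is higher, with ties counted as half. The sketch below assumes that definition, which gives .5 when nothing changes or when increases and decreases balance out; the estimates in Figure A2 may come from a model-based version. The scores are invented for illustration.

```python
def probability_of_superiority(earlier, later):
    """Share of pairs where the later score is higher, counting ties as half."""
    if len(earlier) != len(later):
        raise ValueError("earlier and later must be paired and of equal length")
    wins = sum(l > e for e, l in zip(earlier, later))
    ties = sum(l == e for e, l in zip(earlier, later))
    return (wins + 0.5 * ties) / len(earlier)


# Hypothetical paired ratings (not survey data): recalled post-FTX vs current.
recalled = [6, 7, 5, 8]
current = [7, 7, 4, 9]

psup = probability_of_superiority(recalled, current)  # 2 increases, 1 tie, 1 decrease
```

Unlike Cohen's d, this statistic ignores how large each change is and only uses its direction, which suits ratings treated as ordinal.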

Figure A2. Probability of superiority (PSup) comparisons at different time points.

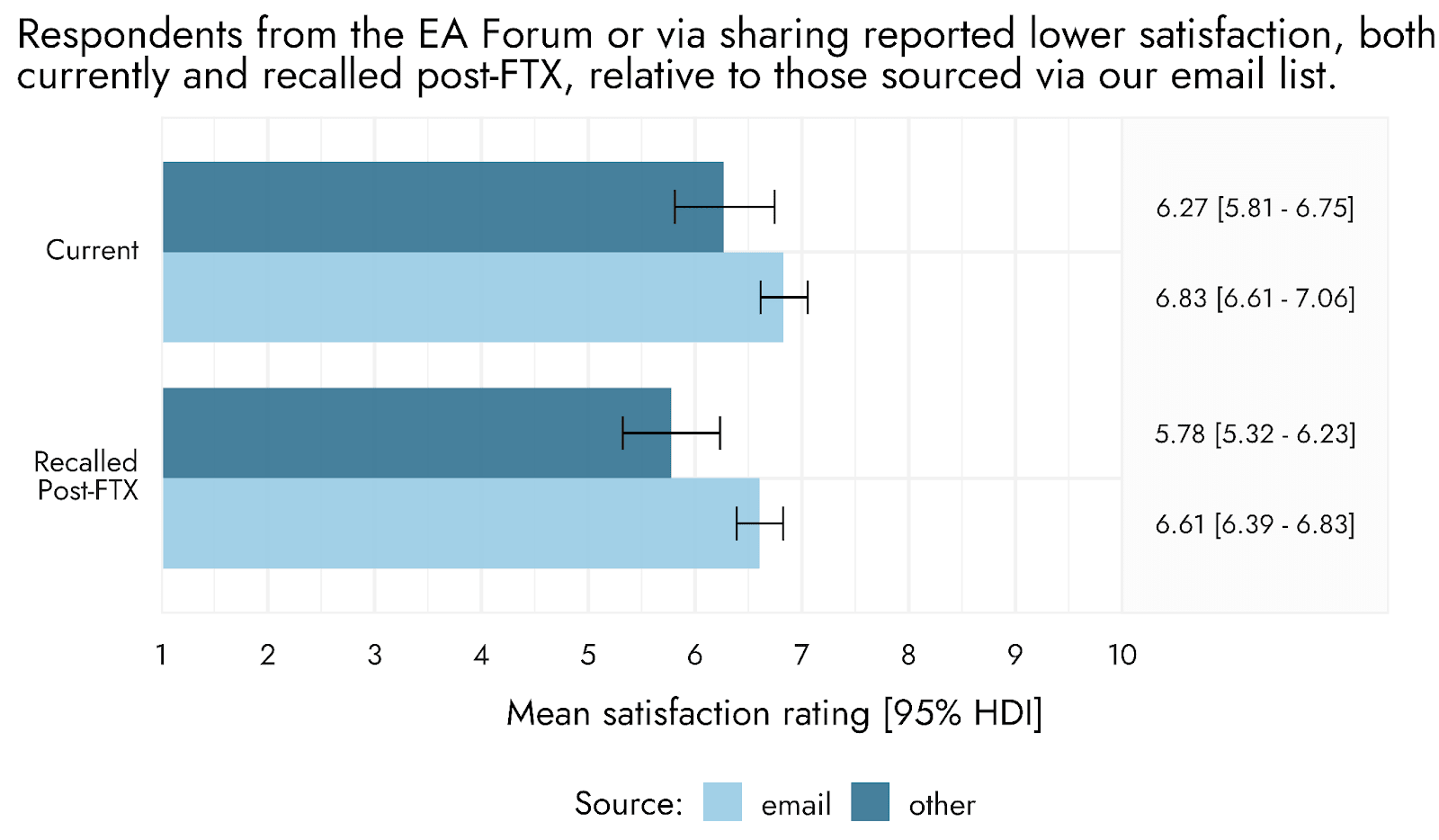

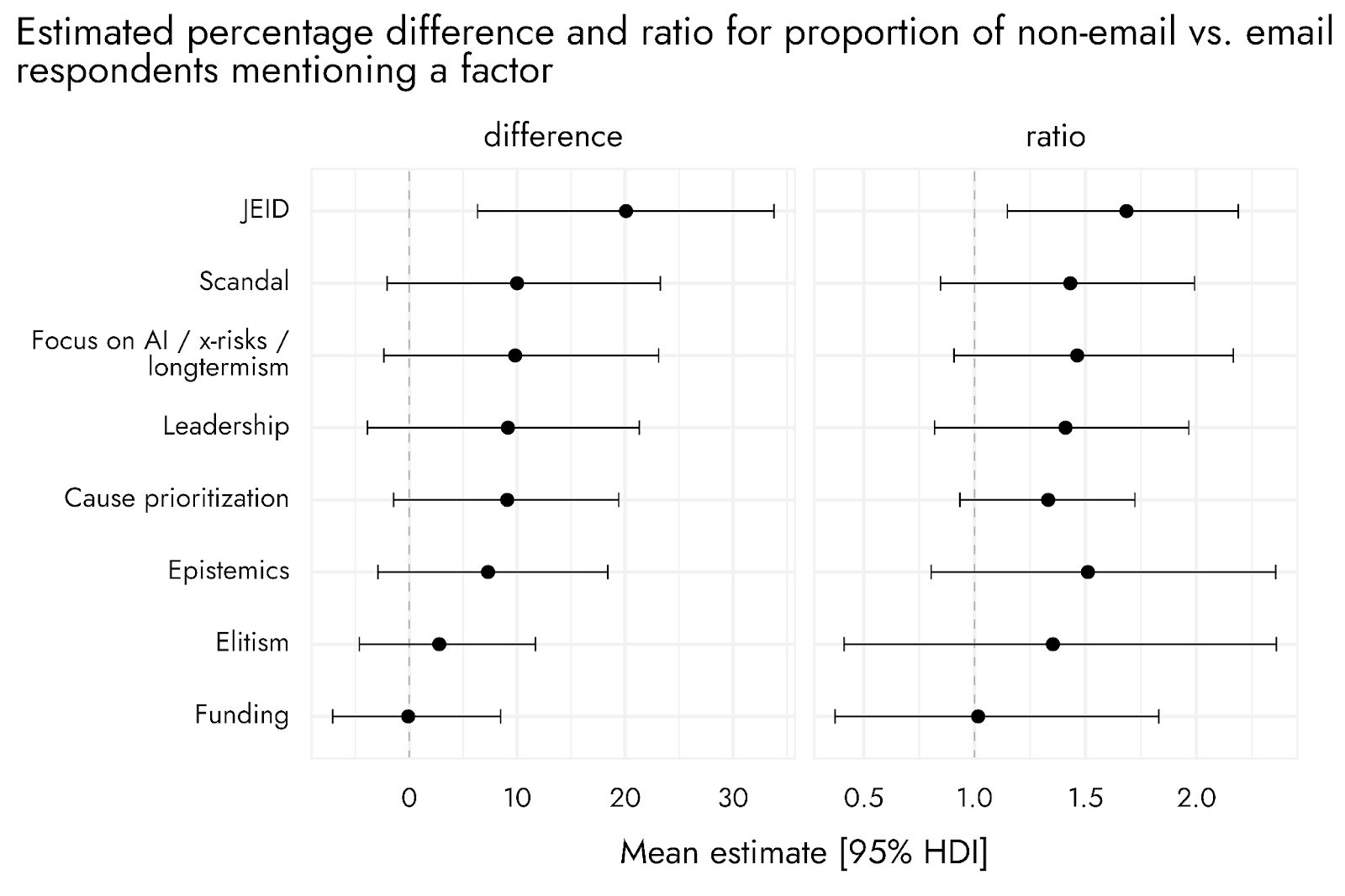

Email vs non-email referrers

We examined differences between respondents who were referred from the email sent to respondents from the previous EA Survey vs those who were referred from another source (primarily the Forum, plus a small number from the ‘sharing’ link respondents were invited to use if sharing the survey with others). A priori, we would expect people who opted in to receive an email about followup surveys to be slightly more satisfied than the average EAS respondent. And we would expect that people referred from the Forum post might be somewhat more concerned about harassment and JEID issues (since the Forum post also mentioned the Harassment survey we were simultaneously distributing for CEA) and to be generally less satisfied.

In line with these hypothesized differences, we do observe that respondents referred by the email were more satisfied on average than other respondents, both now and in terms of their recalled post-FTX satisfaction, though both groups show increases relative to their recalled satisfaction.

Respondents from other sources were also more likely to mention most factors for dissatisfaction, across most categories, particularly related to JEID and FTX.

Acknowledgments

This post was written by David Moss, Jamie Elsey and Willem Sleegers. We would like to thank Peter Wildeford and William McAuliffe for review of and suggestions to the final draft of this post.

- ^

This community survey was not a full-scale EA Survey: we are sensitive to issues of survey fatigue in the community and the efforts to which community members and leaders go in order to spread a full EA survey. This reduces the sample size (n=398) and potentially the representativeness of the supplementary survey, so we should get more informative data in the full EA Survey to be run later this year. This survey was administered through an email which was sent to previous survey-takers who provided consent to receive followup surveys, plus a Forum announcement, which means that recruitment was through a narrower set of channels than the full EA Survey (see the Appendix for some discussion of differences between sources, though this is limited by the small sample size for sources other than email). That said, we observed minimal differences between the composition of the last EA Survey and supplementary survey in terms of engagement and gender.

- ^

For data collected in 2022, we count responses gathered on or before November 7th as being pre-FTX, and responses after November 7th as post-FTX. The FTX crisis, and its fallout, of course spans across time, though we believe that November 7th represents a reasonable inflection point (e.g., it is the date of the collapse of FTX’s FTX token). We recognise other dates or a span of dates could be appropriate (e.g., FTX’s bankruptcy filings on 11th of November), but different date choices do not materially affect the trends in the outcomes we observe in this report.

- ^

It is important to bear in mind the various confounds that might affect these results. The comparison of all respondents who answered the 2022 survey to those who answered this followup survey might be affected by compositional differences between the two samples (i.e. if respondents who answered this followup survey differ from those who answered the earlier main survey). As we noted in our earlier report, individuals who are particularly dissatisfied with EA may be less likely to complete the survey (whether they have completely dropped out of the community or not), although the opposite effect (more dissatisfied respondents are more motivated to complete the survey to express their dissatisfaction) is also plausible.

Similarly, if respondents who we were able to track across both surveys differ from other respondents, then the trend observed within this group of subjects may not reflect the trend observed in the wider community. As noted below, we do observe some signs that these matched respondents differ from the wider sample, with both their post-FTX (2022) and 2023 levels of satisfaction with the community being higher.

- ^

OpenAI board is in reference to the attempted ousting of Sam Altman from OpenAI by the board of directors. Nonlinear refers to allegations of employee mistreatment/poor conduct by Nonlinear or the community response to these. ‘Castle’/Wytham Abbey refers to the purchase of a historic abbey in Oxford as a conference venue for the EA community.

- ^

Though we would not expect this to necessarily reflect the ratio between people on either side of debates about these issues, were people asked explicitly about them.

- ^

The Cause Prioritization and Focus on AI categories were largely, but not entirely, overlapping. The responses within the Cause Prioritization category which did not explicitly refer to too much focus on AI, were focused on insufficient attention being paid to other causes, primarily animals and GHD.

- ^

As these are percentages of those who provided a comment explaining their reasons for low satisfaction, it should not be inferred that these proportions of the whole EA community endorse these concerns.

Great post! Another excellent example of the invaluable work Rethink Priorities does.

My observations and takeaways from this latest survey are:

Many thanks!

All behaviour changes were correlated with each other (except for stopping referring to EA, while still promoting it, which was associated with temporarily stopping promoting EA, but somewhat negatively associated with other changes).

All behaviour changes were associated with lower satisfaction, with most behavioural changes common only among people with satisfaction below the midpoint, and quite rare with satisfaction above the midpoint (again, with the exception of stopping referring to EA, while still promoting it, which was more common across levels).

People who reported a behavioural change were more likely, on the whole, to mention factors as reasons for dissatisfaction. (When interpreting these it's important to account for the fact that people being more/less likely to mention a factor at a particular importance level might be explained by them being less/more likely to mention it at a different importance level, with less difference in terms of their overall propensity to mention it).

Similarly, there was no obvious pattern of particular factors being associated with lower satisfaction. In general, people who mentioned any given factor were less satisfied.

In principle, we could do more to assess whether any particular factors predict particular behavioural changes, controlling for relevant factors, but it might make more sense to wait for the next full iteration of the EA Survey, when we'll have a larger sample size, and can ask people explicitly whether each of these things are factors (rather than relying on people spontaneously mentioning them).

For the other measures, differences are largely as expected, i.e. people who made a behaviour change are more likely to desire more community change, more likely to strongly agree there's a leadership vacuum,[1] and trust was higher among people who had not made a behaviour change.

I still agree with this. Unfortunately, we've been unsuccessful in securing any funding for more analysis of community growth metrics.

I personally don't put too much weight on this question. I worry that it's somewhat leading, and that people who are generally more dissatisfied are more likely to agree with it, but it's unclear that leadership vacuum is really an active concern for people or that it's what's driving people's dissatisfaction.

Thanks so much for this additional data and analysis! Really interesting stuff here. To me, the most interesting things are:

Agreed. I think that people temporarily stopping promoting EA is compatible with people who are still completely on board with EA, deciding that it's strategically unwise to publicly promote it, at a time when there's lots of negative discussion of it in the media. Likewise with still promoting EA, but stopping referring to it as "EA", which also showed high levels across the board.

I think the prevalence of these behaviours points to the importance of more empirical research on the EA brand and how it compares to alternative brands or just referring to individual causes or projects (see our proposal here). I think it's entirely possible that the term "EA" itself has been tarnished and that people do better to promote ideas and projects without explicitly branding them as EA. But there's a real cost to just promoting things piecemeal or using alternative terms (e.g. "have you heard of "high impact careers" / "existential security"?"), rather than referring to a unified established brand. So it's not clear a priori whether this is a positive move.

Agreed. One possible explanation, other than it just being a coincidence of factors, is that the FTX crisis and subsequent revelations dented faith in EA leadership, and made people more receptive to other concerns. (I think historically, much of the community has been extremely deferential to core EA orgs and ~ assumed they know what they're doing come what may).

Certainly it's true that many of the other factors e.g. dissatisfaction with cause prioritisation, diversity, and elitism had been cited for a while. It's also true that even before FTX (though it still holds for 2022), people who had been in the community longer tended to be less satisfied with the community, even though higher engagement was associated with higher satisfaction.[1] While the implications of this for the average satisfaction level of the community depend on how many newer vs older EAs we have at a given time, this is compatible with a story where EAs generally become less satisfied with the community over time.

Note that this is the opposite direction to what you'd see if less satisfied people drop out, leaving more satisfied people remaining in earlier cohorts. That said the linked analyses (for individual years) can't rule out the possibility that earlier cohorts have just always been distinctively less satisfied, which would require a comparison across years.

David - this is a helpful and reasonable comment.

I suspect that many EAs tactically and temporarily suppressed their use of EA language after the FTX debacle, when they knew that EA had suffered a (hopefully transient) setback.

This may actually be quite analogous to the cyclical patterns of outreach and enthusiasm that we see in crypto investing itself. The post-FTX 2022-2023 bear market in crypto was reflected in a lot of 'crypto influencers' just not talking very much about crypto for a year or two, when investor sentiment was very low. Then, as the price action picked up in the last half of 2023 through now, and optimism returned, and the Bitcoin ETFs got approved by the SEC, people started talking about crypto again. So it has gone, with every 4-year-cycle in crypto.

The thing to note here is that in the dark depths of the 'crypto winter' (esp. early 2023), it seemed like confidence and optimism might never return. (Which is, of course, why token prices were so low). But, things did improve, as the short-term sting of the FTX scandal faded.

So, hopefully, things might go with EA itself, as we emerge from this low point in our collective sentiment.

Excellent points, everything you write here makes a lot of sense to me. I really hope you’re able to find funding for the proposal to research the EA brand relative to other alternatives. That seems like a really fundamental issue to understand, and your proposed study could provide a lot of valuable information for a very modest price.

Was there any attempt to deal with the issue that people that left EA were probably far less likely to see and take the survey?

I mean, I can't think of an easy way to do so, but it might be worth noting.

We did note this explicitly:

I don't think there's any feasible way to address this within this, smaller, supplementary survey. Within the main EA Survey we do look for signs of differential attrition.

As I mentioned in point 3 of this comment:

This suggests we could crudely estimate the selection effects of people dropping out of the community and therefore not answering the survey by assuming that there was a similar increase in scores of 1 and 2 as there was for scores of 3-5. My guess is that this would still understate the selection bias (because I’d guess we’re also missing people who would have given ratings in the 3-5 range), but it would at least be a start. I think it would be fair to assume that people who would have given satisfaction ratings of 1 or 2 but didn’t bother to complete the survey are probably also undercounted in the various measures of behavioral change.

This is a neat idea, but I think that's probably putting more weight on the (absence) of small differences at particular levels of the response scale than the smaller sample size of the Extra EA Survey will support. If we look at the CIs for any individual response level, they are relatively wide for the EEAS, and the numbers selecting the lowest response levels were very low anyway.

That makes sense. That said, while it might not be possible to quantify the extent of selection bias at play, I do think the combination of a) favoring simpler explanations and b) the pattern I observed in the data makes a pretty compelling case that dissatisfied people being less likely to take the survey is probably much more of an issue than dissatisfied people being more likely to take the survey to voice their dissatisfaction.

Fantastic work. Would you be able, if you think it is advisable, to produce some sort of "adjusted JEID" score? I am thinking that since EA is mostly white and male, if the community, in its current form, had been more "equally distributed across gender, race, etc", the JEID concerns might have loomed even larger.

Very simplified, something like if 20% of respondents identified as POC, and JEID issues were raised by 15% of respondents, that we could do something like "if EA was 50% POC, the JEID issues would be raised by 37.5% of respondents". This is wrong math, I know, but meant to give an example to point in the direction of what I am thinking about.

And if any readers did not pick up on this already - I am potentially very interested to chip in on any JEID efforts where you think perhaps my privileges (or lay DEI expertise) might be of some use.

Thanks Ulrik!

We can provide the percentages broken down by different groups. I would advise against thinking about this in terms of 'what would the results be if weighted to match non-actual equal demographics' though: (i) if the demographics were different (equal) then presumably concern about demographics would be different [fewer people would be worried about demographic diversity if we had perfect demographic diversity], and (ii) if the demographics were different (equal) then the composition of the different demographic groups within the community would likely be different [if we had a large increase in the proportion of women / decrease in the proportion of men, the people making up those groups would plausibly differ from the current groups].

That said, people who identified as a woman or anything other than a man were more likely to mention JEID as at least somewhat important, and they were also more likely to mention cause prioritization and excessive focus on AI/x-risk/longtermism as a concern. Conversely, men were more likely to refer to scandals, leadership and epistemics.

I would be even more cautious about interpreting the differences based on race due to the low sample size (the total number would be much larger in the full EA Survey), and the fact that the composition of non-white respondents as a group differs from what you would see in a 'perfectly equal demographics' scenario (i.e. more Asian, unequal representation across countries).

I appreciate the effort to make the general consensus around trust towards EA-orgs more legible.

Given the criticism that the three mentioned receive (much of it directed towards CEA), it's interesting (and IMO good) to see that most people emailed are broadly trusting of them.

Does "mainly a subset" mean that a significant majority of responses coded this way were also coded as cause prio?

I'm trying to understand if the cause prio category not being much bigger than this category implies that "general concerns about cause prioritization and specific concerns such as an overemphasis on certain causes . . . and ideas" other than AI/x-risk/longtermism were fairly infrequent.

That's right, as we note here:

Specifically, of those who mention Cause Prioritization, around 68% were also coded as part of the AI/x-risk/longtermism category. That said, a large portion of the remainder mentioned "insufficient attention being paid to other causes, primarily animals and GHD" (which one may or may not think is just another side of the same coin). Conversely, around 8% of comments in the AI/x-risk/longtermism category were not also classified as Cause Prioritization (for example, just expressing annoyance about supporters of certain causes wouldn't count as about Cause Prioritization per se).

So over 2/3rds of Cause Prioritization was explicitly about too much AI/x-risk/longtermism. A large part of the remainder is probably connected, as part of a 'too much x-risk/too little not x-risk' category. The overlap between categories is probably larger than implied by the raw numbers, but we had to rely on what people actually wrote in their comments, without making too many suppositions.

Thanks for doing this! Very interesting.

Would it be possible to stratify the results (particularly the satisfaction and "reasons for dissatisfaction") by engagement level?

Possibly the sample size isn't large enough to do this, but it would be interesting to see, if possible.

Thanks!

For satisfaction, we see the following patterns.

For reasons for dissatisfaction, there are a few systematic differences across engagement levels:

Executive summary: A recent survey found that satisfaction with the EA community has declined since the FTX crisis, though it may have improved from its lowest point. Respondents cited various reasons for dissatisfaction and reported reduced engagement.

Key points:
