I'm pretty happy with how this "Where should I donate, under my values?" Manifold market has been turning out. Of course all the usual caveats pertaining to basically-fake "prediction" markets apply, but given the selection effects of who spends mana on an esoteric market like this, I put a non-trivial weight on the (live) outcomes.

I guess I'd encourage people with a bit more money to donate to do something similar (or I guess defer, if you think I'm right about ethics!), if just as one addition to your portfolio of donation-informing considerations.

Thanks! Let me write them as a loss function in python (ha)

For real though:

Some flavor of hedonic utilitarianism

I guess I should say I have moral uncertainty (which I endorse as a thing) but eh I'm pretty convinced

Longtermism as explicitly defined is true

Don't necessarily endorse the cluster of beliefs that tend to come along for the ride though

"Suffering focused total utilitarian" is the annoying phrase I made up for myself

I think many (most?) self-described total utilitarians give too little consideration/weight to suffering, and I don't think it really matters (if there's a fact of the matter) whether this is because of empirical or moral beliefs

Maybe my most substantive deviation from the default TU package is the following (defended here):

"Under a form of utilitarianism that places happiness and suffering on the same moral axis and allows that the former can be traded off against the latter, one might nevertheless conclude that some instantiations of suffering cannot be offset or justified by even an arbitrarily large amount of wellbeing."

Moral realism for basically all the reasons described by Rawlette on 80k but I don't think this really matters after conditioning on normative ethical beliefs

Nothing besides valenced qualia/hedonic tone has intrinsic value

I think that might literally be it - everything else is contingent!

1) How often (in absolute and relative terms) a given forum topic appears with another given topic

2) Visualizing the popularity of various tags
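For anyone wanting to reproduce item 1 from their own scrape, here is a minimal sketch of counting tag co-occurrence in absolute and relative terms. It assumes each post has already been reduced to a plain list of tag names. That input format and the tag names below are illustrative, not the actual columns of the scrape.

```python
from collections import Counter
from itertools import combinations

def tag_cooccurrence(posts):
    """Count how often each pair of tags appears on the same post.

    `posts` is a list of tag lists (a hypothetical input format).
    Returns per-tag counts, per-pair counts (pairs stored in sorted order),
    and a relative measure: the share of posts with tag a that also have tag b.
    """
    tag_counts = Counter()
    pair_counts = Counter()
    for tags in posts:
        unique = sorted(set(tags))
        tag_counts.update(unique)
        pair_counts.update(combinations(unique, 2))
    relative = {}
    for (a, b), n in pair_counts.items():
        relative[(a, b)] = n / tag_counts[a]
        relative[(b, a)] = n / tag_counts[b]
    return tag_counts, pair_counts, relative

posts = [
    ["AI safety", "Forecasting"],
    ["AI safety", "Longtermism"],
    ["Animal welfare", "Wild animal welfare"],
    ["AI safety", "Longtermism", "Forecasting"],
]
tag_counts, pair_counts, relative = tag_cooccurrence(posts)
print(pair_counts[("AI safety", "Longtermism")])  # 2
print(relative[("Longtermism", "AI safety")])     # 1.0
```

The relative measure is deliberately asymmetric: every Longtermism post here also carries AI safety, but only two of the three AI safety posts carry Longtermism.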

An updated Forum scrape including the full text and attributes of 10k-ish posts as of Christmas, '22

See the data without full text in Google Sheets here

Post explaining version 1.0 from a few months back

From the data in no. 2, a few effortposts that never garnered an accordant amount of attention (qualitatively filtered from posts with (1) long read times, (2) modest positive karma, and (3) not a ton of comments).
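The three quantitative filters in the parenthetical above can be sketched as one pass over the scraped data. The `Post` fields and every threshold value here are hypothetical placeholders rather than the numbers actually used, and the final qualitative filtering by hand isn't something code captures.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    read_time_min: float
    karma: int
    num_comments: int

def underrated_effortposts(posts, min_read_time=20, karma_range=(10, 60), max_comments=10):
    """Keep posts that are long, modestly upvoted, and lightly discussed.

    All thresholds are illustrative guesses.
    """
    lo, hi = karma_range
    candidates = [
        p for p in posts
        if p.read_time_min >= min_read_time
        and lo <= p.karma <= hi
        and p.num_comments <= max_comments
    ]
    # Longest reads first, treating read time as the main proxy for effort.
    return sorted(candidates, key=lambda p: p.read_time_min, reverse=True)

posts = [
    Post("Long, quiet, modestly liked", 35, 25, 3),
    Post("Short and popular", 4, 200, 50),
    Post("Long but heavily debated", 40, 30, 45),
    Post("Medium-long, quiet", 22, 12, 0),
]
for p in underrated_effortposts(posts):
    print(p.title)
```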

A (potential) issue with MacAskill's presentation of moral uncertainty

Not able to write a real post about this atm, though I think it deserves one.

MacAskill makes a similar point in WWOTF, but IMO the best and most decision-relevant quote comes from his second appearance on the 80k podcast:

There are possible views in which you should give more weight to suffering...I think we should take that into account too, but then what happens? You end up with kind of a mix between the two, supposing you were 50/50 between classical utilitarian view and just strict negative utilitarian view. Then I think on the natural way of making the comparison between the two views, you give suffering twice as much weight as you otherwise would.

I don't think the second bolded sentence follows in any objective or natural manner from the first. Rather, this reasoning takes a distinctly total utilitarian meta-level perspective, summing the various signs of utility and then implicitly considering them under total utilitarianism.
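To make the summing step concrete, here is one way to write out the "natural" comparison. It assumes (my reconstruction, not a formalism MacAskill states) that both views measure suffering $S$ in the same units, that classical utilitarianism values a world at $H - S$, and that strict negative utilitarianism values it at $-S$:

$$
\tfrac{1}{2}(H - S) + \tfrac{1}{2}(-S) \;=\; \tfrac{1}{2}H - S \;=\; \tfrac{1}{2}\,(H - 2S)
$$

Up to the positive factor $\tfrac{1}{2}$, this ranks worlds exactly as classical utilitarianism would with suffering counted twice, which is the 2:1 result. The step that does the work is treating the credence-weighted sum itself as the quantity to maximize.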

Even granting that the moral arithmetic is appropriate and correct, it's not at all clear what to do once the 2:1 accounting is complete. MacAskill's suffering-focused twin might have reasoned instead that:

Negative and total utilitarianism are both 50% likely to be true, so we must give twice the normal amount of weight to happiness. However, since any sufficiently severe suffering morally outweighs any amount of happiness, the moral outlook on a world with twice as much wellbeing is the same as before
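The twin's reasoning relies on a lexical ordering, which a short sketch can make explicit. This assumes (my simplification) that a world can be summarized by total happiness, total ordinary suffering, and a count of "severe" suffering episodes above some non-offsettable threshold. Python compares tuples left to right, so any difference in severe suffering settles the comparison before happiness is consulted.

```python
from typing import NamedTuple

class World(NamedTuple):
    happiness: float
    suffering: float       # ordinary, offsettable suffering
    severe_episodes: int   # suffering above a hypothetical non-offsettable threshold

def lexical_key(world, happiness_weight=1.0):
    # Fewer severe episodes always wins; only ties fall through to the
    # ordinary (weighted) hedonic total.
    return (-world.severe_episodes, happiness_weight * world.happiness - world.suffering)

def better(a, b, happiness_weight=1.0):
    """True if world a is ranked strictly above world b."""
    return lexical_key(a, happiness_weight) > lexical_key(b, happiness_weight)

calm = World(happiness=10, suffering=2, severe_episodes=0)
lush = World(happiness=1_000_000, suffering=5, severe_episodes=1)

print(better(calm, lush))                      # True
print(better(calm, lush, happiness_weight=2))  # True: doubling happiness's weight changes nothing
```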

A better proxy for genuine neutrality (and the best one I can think of) might be to simulate bargaining over real-world outcomes from each perspective, which would probably result in at least some proportion of one's resources being deployed as though negative utilitarianism were true (perhaps exactly 50%, though I haven't given this enough thought to make the claim outright).
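One crude way to simulate that bargaining rests on assumptions I'm supplying here rather than anything worked out above. Suppose each view cares only about the share of resources spent its way, both views have a disagreement payoff of zero, and we pick the split that maximizes the credence-weighted (asymmetric) Nash bargaining product. With these linear utilities the optimum lands at each view's credence, so 50/50 uncertainty gives the 50% split floated above.

```python
import math

def nash_split(credence_nu, steps=10_000):
    """Share of resources deployed 'as if' negative utilitarianism were true.

    Maximizes the asymmetric Nash product x**w_nu * (1 - x)**w_tu over a grid
    (via its logarithm), assuming each view's utility is linear in its own share
    and the disagreement point is zero for both. Illustrative assumptions only.
    """
    w_nu, w_tu = credence_nu, 1 - credence_nu
    best_x, best_val = 0.0, -math.inf
    for i in range(1, steps):  # skip the endpoints, where the product is zero
        x = i / steps
        val = w_nu * math.log(x) + w_tu * math.log(1 - x)
        if val > best_val:
            best_x, best_val = x, val
    return best_x

print(nash_split(0.5))  # 0.5
```

Different utility functions or disagreement points would move the answer, which is one reason not to treat "exactly 50%" as settled.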


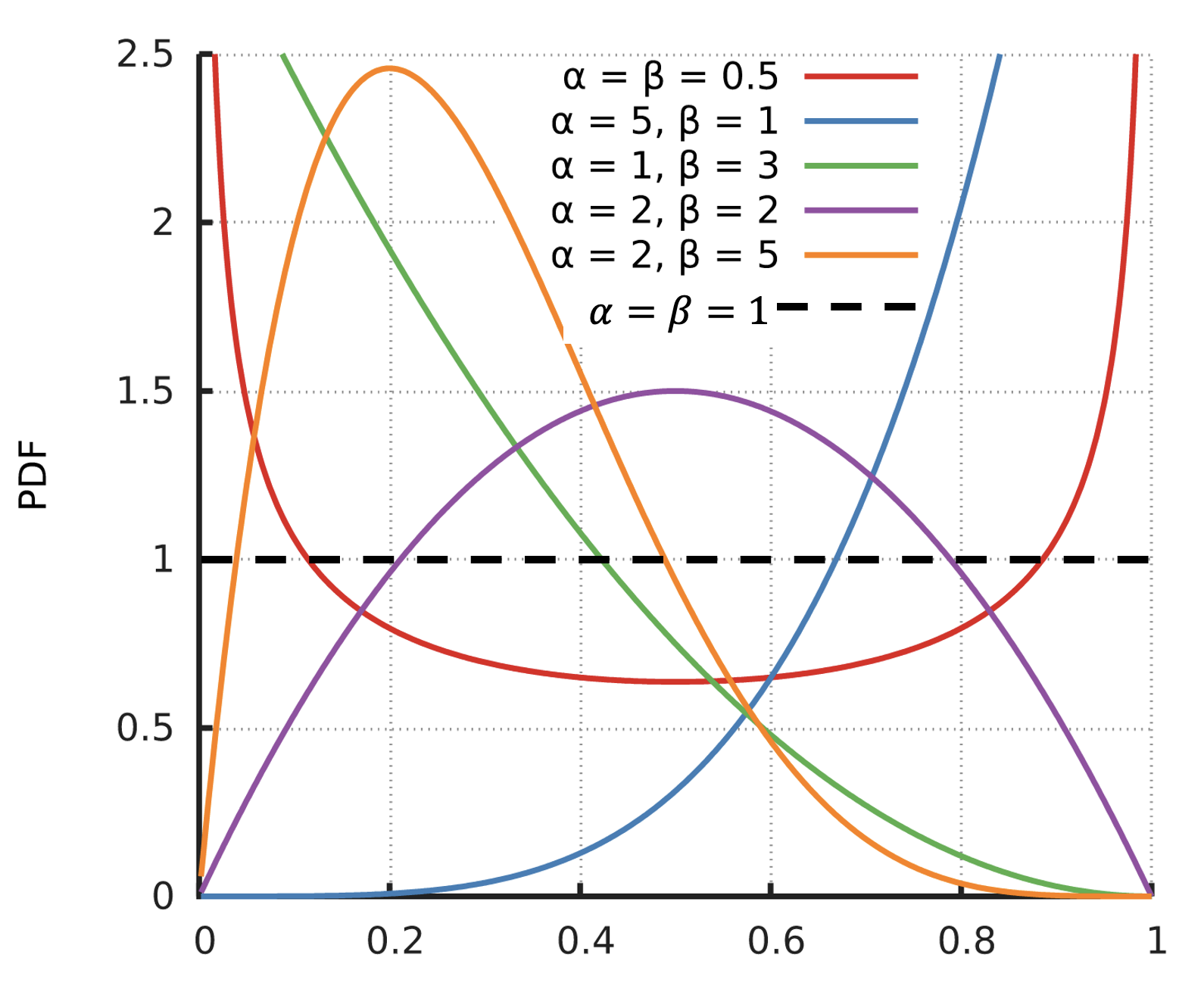

The recent 80k podcast on the contingency of abolition got me wondering what, if anything, the fact of slavery's abolition says about the ex ante probability of abolition, or more generally, what one observation of a binary random variable $X$ says about $p$, as in $X \sim \mathrm{Bernoulli}(p)$.

Turns out there is an answer (!), and it's found starting in paragraph 3 of subsection 1 of section 3 of the Binomial distribution Wikipedia page:

Don't worry, I had no idea what Beta(α,β) was until 20 minutes ago. In the Shortform spirit, I'm gonna skip any actual explanation and just link Wikipedia and paste this image (I added the uniform distribution dotted line because why would they leave that out?)

So...

Cool, so for the $n=1$ case, we get that if you have a prior over the ex ante probability space $[0,1]$ described by one of those curves in the image, you...

0) Start from 'zero empirical information guesstimate' $E[\mathrm{Beta}(\alpha,\beta)] = \frac{\alpha}{\alpha+\beta}$

1a) observe that the thing happens ($x=1$), moving you, Ideal Bayesian Agent, to updated probability $\hat{p}_b = \frac{1+\alpha}{1+\alpha+\beta} > \frac{\alpha}{\alpha+\beta}$ OR

1b) observe that the thing doesn't happen ($x=0$), moving you to updated probability $\hat{p}_b = \frac{\alpha}{1+\alpha+\beta} < \frac{\alpha}{\alpha+\beta}$

In the uniform case (which actually seems kind of reasonable for abolition), you...

0) Start from prior $E[p] = 1/2$

1a) observe that the thing happens, moving you to updated probability $\hat{p}_b = 2/3$ OR

1b) observe that the thing doesn't happen, moving you to updated probability $\hat{p}_b = 1/3$
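The update above is the standard conjugate Beta-Bernoulli rule: after one observation $x$, a $\mathrm{Beta}(\alpha,\beta)$ prior becomes $\mathrm{Beta}(\alpha + x, \beta + 1 - x)$. Exact fractions make the uniform case ($\alpha=\beta=1$) easy to check. The function name is my own.

```python
from fractions import Fraction

def beta_bernoulli_update(alpha, beta, x):
    """Prior and posterior mean of p after one Bernoulli observation x (1 = happened).

    Prior p ~ Beta(alpha, beta); posterior is Beta(alpha + x, beta + 1 - x).
    """
    alpha, beta = Fraction(alpha), Fraction(beta)
    prior_mean = alpha / (alpha + beta)
    posterior_mean = (alpha + x) / (alpha + beta + 1)
    return prior_mean, posterior_mean

print(beta_bernoulli_update(1, 1, 1))  # (Fraction(1, 2), Fraction(2, 3))
print(beta_bernoulli_update(1, 1, 0))  # (Fraction(1, 2), Fraction(1, 3))
```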

In terms of result, yeah it does, but I sorta half-intentionally left that out because I don't actually think Laplace's law of succession (LLS) is true as it often seems to be stated.

Since we have the prior knowledge that we are looking at an experiment for which both success and failure are possible, our estimate is as if we had observed one success and one failure for sure before we even started the experiments.

That justification seems both unconvincing as stated and, if assumed to be true, doesn't depend on that crucial assumption.
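For reference, the general-$n$ version of the uniform-prior calculation: with a $\mathrm{Beta}(1,1)$ prior and $s$ successes in $n$ trials, the posterior is $\mathrm{Beta}(1+s,\, 1+n-s)$, whose mean is the familiar rule of succession:

$$
E[p \mid s \text{ successes in } n \text{ trials}] \;=\; \frac{1+s}{(1+s) + (1+n-s)} \;=\; \frac{s+1}{n+2}
$$

Setting $n = 1$ recovers the uniform-prior numbers above ($2/3$ for $s=1$, $1/3$ for $s=0$).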

Hypothesis: from the perspective of currently living humans and those who will be born in the current <4% growth regime only (i.e., pre-AGI takeoff or, I guess, stagnation), donations currently earmarked for large-scale GHW, GiveWell-type interventions should instead be invested (maybe in tech/AI-correlated securities) with the intent of being deployed for the same general category of beneficiaries in <25 (maybe even <1) years.

The arguments are similar to those for old-school "patient philanthropy," except that the present moment in particular seems exceptionally uncertain with respect to how to help humans, because of AI.

For example, it seems plausible that the most important market the global poor don't have access to is literally the NYSE (i.e., rather than the market for malaria nets), because ~any growth associated with (AGI + no 'doom') will leave the global poor no better off by default (i.e., absent redistribution or immigration reform), unlike, e.g., middle-class Westerners who might own a bit of the S&P 500. A solution could be for, e.g., OpenPhil to invest on their behalf.

(More meta: I worry that segmenting off AI as fundamentally longtermist is leaving a lot of good on the table; e.g. insofar as this isn't currently the case, I think OP's GHW side should look into what kind of AI-associated projects could do a lot of good for humans and animals in the next few decades.)

I'm skeptical of this take. If you think sufficiently transformative + aligned AI is likely in the next <25 years, then from the perspective of currently living humans and those who will be born in the current <4% growth regime, surviving until transformative AI arrives would be a huge priority. Under that view, you should aim to deploy resources as fast as possible to lifesaving interventions rather than sitting on them.

I tried making a shortform -> Twitter bot (i.e., tweet each new top-level ~quick take~) and, long story short, it stopped working and wasn't great to begin with.

I feel like this is the kind of thing someone else might be able to do relatively easily. If so, I and I think much of EA Twitter would appreciate it very much! In case it's helpful for this, a quick takes RSS feed is at https://ea.greaterwrong.com/shortform?format=rss
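Here is a bare-bones starting point for anyone picking this up, using only the Python standard library. It polls the feed above, parses it, and returns items not seen before. I haven't checked the feed's exact fields, so this assumes standard RSS 2.0 `<item>` elements with `<title>`, `<link>`, and `<guid>` (falling back to the link). It deliberately stops short of posting, since that depends on whatever Twitter/X API access you have. The function names and the sample feed are hypothetical.

```python
import urllib.request
import xml.etree.ElementTree as ET

FEED_URL = "https://ea.greaterwrong.com/shortform?format=rss"

def fetch_feed(url=FEED_URL, timeout=30):
    """Download the raw RSS XML as bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()

def new_items(rss_xml, seen_ids):
    """Return (id, title, link) for unseen items, oldest first; adds them to seen_ids.

    Assumes standard RSS 2.0 <channel><item> structure.
    """
    root = ET.fromstring(rss_xml)
    fresh = []
    for item in root.iter("item"):
        link = (item.findtext("link") or "").strip()
        item_id = (item.findtext("guid") or link).strip()
        if item_id and item_id not in seen_ids:
            seen_ids.add(item_id)
            fresh.append((item_id, (item.findtext("title") or "").strip(), link))
    # Feeds usually list newest first; reverse so posts would go out in order.
    return list(reversed(fresh))

# Offline example with a tiny hand-written feed (real use: new_items(fetch_feed(), seen)).
sample = b"""<rss version="2.0"><channel>
<item><title>Newer take</title><link>https://example.org/2</link><guid>2</guid></item>
<item><title>Older take</title><link>https://example.org/1</link><guid>1</guid></item>
</channel></rss>"""
seen = set()
print(new_items(sample, seen))
print(new_items(sample, seen))  # [] on the second poll
```

A real bot would also need to persist `seen` between runs (e.g., to a file) so a restart doesn't re-tweet everything.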

Note: this sounds like it was written by ChatGPT because it basically was (from a recorded ramble)🤷

I believe the Forum could benefit from a Shorterform page, as the current Shortform forum, intended to be a more casual and relaxed alternative to main posts, still seems to maintain high standards. This is likely due to the impressive competence of contributors who often submit detailed and well-thought-out content. While some entries are just a few well-written sentences, others resemble blog posts in length and depth.

As such, I find myself hesitant to adhere to the default filler text in the submission editor when visiting this page. However, if it were more informal and less intimidating in nature, I'd be inclined to post about various topics that might otherwise seem out of place. To clarify, I'm not suggesting we resort to jokes or low-quality "shitposts," but rather encourage genuine sharing of thoughts without excessive analysis.

Perhaps adopting an amusing name like "EA Shorterform" would help create a more laid-back atmosphere for users seeking lighter discussions. By doing so, we may initiate a preference falsification cascade where everyone feels comfortable enough admitting their desire for occasional brevity within conversations. Who knows? Maybe I'll start with posting just one sentence soon!

WWOTF: what did the publisher cut? [answer: nothing]

Contextual note: this post is essentially a null result. It seemed inappropriate both as a top-level post and as an abandoned Google Doc, so I’ve decided to put out the key bits (i.e., everything below) as Shortform. Feel free to comment/message me if you think that was the wrong call!

Actual post

On his recent appearance on the 80,000 Hours Podcast, Will MacAskill noted that Doing Good Better was significantly influenced by the book’s publisher:[1]

Rob Wiblin: ...But in 2014 you wrote Doing Good Better, and that somewhat soft pedals longtermism when you’re introducing effective altruism. So it seems like it was quite a long time before you got fully bought in.

Will MacAskill: Yeah. I should say for 2014, writing Doing Good Better, in some sense, the most accurate book that was fully representing my and colleagues’ EA thought would’ve been broader than the particular focus. And especially for my first book, there was a lot of equivalent of trade — like agreement with the publishers about what gets included. I also wanted to include a lot more on animal issues, but the publishers really didn’t like that, actually. Their thought was you just don’t want to make it too weird.

Rob Wiblin: I see, OK. They want to sell books and they were like, “Keep it fairly mainstream.”

Will MacAskill: Exactly...

I thought it was important to know whether the same was true with respect to What We Owe the Future, so I reached out to Will's team and received the following response from one of his colleagues [emphasis mine]:

Hi Aaron, thanks for sending these questions and considering to make this info publicly available.

However, in contrast to what one might perhaps reasonably expect given what Will said about Doing Good Better, I think there is actually very little of interest that can be said on this topic regarding WWOTF. In particular:

I'm not aware of any material that was cut, or any other significant changes to the content of the book that were made significantly because of the publisher's input. (At least since I joined Forethought in mid-2021; it's possible there was some of this at earlier stages of the project, though I doubt it.) To be clear: The UK publisher's editor read multiple drafts of the book and provided helpful comments, but Will generally changed things in response to these comments if and only if he was actually convinced by them.

(There are things other than the book's content where the publisher exerted more influence – for instance, the publishers asked us for input on the book's cover but made clear that the cover is ultimately their decision. Similarly, the publisher set the price of the book, and this is not something we were involved in at all.)

As Will talks about in more detail here, the book's content would have been different in some ways if it had been written for a different audience – e.g., people already engaged in the EA community as opposed to the general public. But this was done by Will's own choice/design rather than because of publisher intervention. And to be clear, I think this influenced the content in mundane and standard ways that are present in ~all communication efforts – understanding what your audience is, aiming to meet them where they are and delivering your messages in way that is accessible to them (rather than e.g. using overly technical language the audience might not be familiar with).

This post is half object level, half experiment with “semicoherent audio monologue ramble → prose” AI (presumably GPT-3.5/4 based) program audiopen.ai.

In the interest of the latter objective, I’m including 3 mostly-redundant subsections:

A ’final’ mostly-AI written text, edited and slightly expanded just enough so that I endorse it in full (though recognize it’s not amazing or close to optimal)

The raw AI output

The raw transcript

1) Dubious asymmetry argument in WWOTF

In Chapter 9 of his book What We Owe the Future, Will MacAskill argues that the future holds positive expected moral value under a total utilitarian perspective. He posits that people generally use resources to achieve what they want, either for themselves or for others, and thus good outcomes are easily explained as the natural consequence of agents deploying resources for their goals. Conversely, bad outcomes tend to be side effects of pursuing other goals. While malevolence and sociopathy do exist, they are empirically rare.

MacAskill argues that in a future with continued economic growth and no existential risk, we will likely direct more resources towards doing good things due to self-interest and increased impartial altruism. He contrasts this eutopian scenario with an anti-eutopia: the worst possible world, which he argues (compellingly, I think) is less probable because it requires convoluted explanations, as opposed to simple desires like enjoying ice cream. He concludes that the probability of achieving a eutopia outweighs the low likelihood but extreme badness of an anti-eutopia.

However, I believe MacAskill's analysis neglects an important aspect: it considers only these two extremes and not the middle of the distribution, where agents deploy no significant resources in either direction.

When physics operates without agency-driven resource allocation, we have good reason to expect evolution to create conscious beings whose suffering we can attribute to the ease with which animals (or animal-like beings) can lose all future expected genetic reproduction - as MacAskill himself argues elsewhere in the book.

Importantly, though, this non-agentic suffering seems more likely to complement agentic resource deployment than (as one might intuit) to substitute for it. That’s because human or post-human expansion necessarily entails the expansion of concentrated physical energy, and seems likely to entail the expansion of other scarce, pro-biotic resources such as DNA, water, and computation.

Although MacAskill does not explicitly claim that his binary model comparing eutopia and anti-eutopia is sufficient for understanding this complex problem, it seems to me to be implied.

Only upon attempting to draft a blog post revisiting his work did I notice the line “We can make some progress by focusing on just two extreme scenarios: the best or worst possible futures, eutopia and anti-eutopia,” which acknowledges the model's status as just one bit of evidence bearing on the larger question of the future’s value.

In sum, I think MacAskill's analysis would benefit from addressing the morally relevant middle distribution to provide a more accurate representation of the future under total utilitarianism.

2) Utilitarian Utopia, Anti-Utopia and the Neglected Middle

In Chapter 9 of his book, What We Are the Future, Will McCaskill argues that the future holds positive moral value under a total utilitarian perspective. He posits that people generally use resources to achieve what they want - either for themselves or others - and thus good outcomes are often intentional. Conversely, bad outcomes tend to be side effects of pursuing other goals. While malevolence and sociopathy do exist, they are empirically rare.

McCaskill then extrapolates this argument to suggest that in a future with continued economic growth (assuming no existential risk), we will likely direct more resources towards doing good things due to self-interest and increased impartial altruism. He contrasts this utopian scenario with an anti-utopia: the worst possible world which is less probable because it requires convoluted explanations as opposed to simple desires like enjoying ice cream.

He concludes that the probability of achieving a utopia outweighs the low likelihood but extreme negative consequences of an anti-utopia. However, I believe McCaskill's analysis neglects an important aspect: considering not only these two extremes but also the middle distribution where neither significant amounts of resources nor agentic intervention occur.

In such cases where physics operates without agency-driven resource allocation, evolution can create conscious beings like plants and animals who experience suffering without any intentionality behind it. This middle distribution may actually skew negatively since evolution favors reproductive fitness at any cost; as a result, sentient beings could suffer more than they experience happiness during their lives.

I argue that wild animal welfare is net negative overall; if given a choice between having them continue living or ceasing their existence altogether, I would choose nonexistence on moral grounds. Although McCaskill does not explicitly claim his heuristic comparison between utopia and anti-utopia is sufficient for understanding this complex problem, he strongly implies it throughout most of his chapter.

Upon revisiting his work while drafting my response blog post, I noticed a single line acknowledging the limitations of his approach. However, this caveat does not adequately emphasize that his argument should be considered only as a first pass and not definitive proof. In my opinion, McCaskill's analysis would benefit from addressing the morally relevant middle distribution to provide a more accurate representation of the future under total utilitarianism.

-------

3) Original Transcript

Okay, so I'm going to describe where I think I disagree with Will McCaskill in Chapter 9 of his book, What We Are the Future, where he basically makes an argument that the future is positive in expectation, positive moral value under a total utilitarian perspective. And so his argument is basically that people, it's very easy to see that people deploy the resources in order to get what they want, which is either to help themselves and sometimes to help other people, whether it's just their family or more impartial altruism. Basically you can always explain why somebody does something good just because it's good and they want it, which is kind of, I think that's correct and compelling. Whereas when something bad happens, it's generally the side effect of something else. At least, yeah. So while there is malevolence and true sociopathy, those things are in fact empirically quite rare, but if you undergo a painful procedure, like a medical procedure, it's because there's something affirmative that you want and that's a necessary side effect. It's not because you actually sought that out in particular. And all this I find true and correct and compelling. And so then he uses this to basically say that in the future, presumably conditional on continued economic growth, which basically just means no existential risk and humans being around, we'll be employing a lot of resources in the direction of doing things well or doing good. Largely just because people just want good things for themselves and hopefully to some extent because there will be more impartial altruists willing to both trade and to put their own resources in order to help others. And once again, all true, correct, compelling in my opinion. So on the other side, so basically utopia in this sense, utopia basically meaning employing a lot of, the vast majority of resources in the direction of doing good is very likely and very good. 
On the other side, it's how likely and how bad is what he calls anti-utopia, which is basically the worst possible world. And he basically using... I don't need to get into the particulars, but basically I think he presents a compelling argument that in fact it would be worse than the best world is good, at least to the best of our knowledge right now. But it's very unlikely because it's hard to see how that comes about. You actually can invent stories, but they get kind of convoluted. And it's not nearly as simple as, okay, people like ice cream and so they buy ice cream. It's like, you have to explain why so many resources are being deployed in the direction of doing good things and you still end up with a terrible world. Then he basically says, okay, all things considered, the probability of good utopia wins out relative to the badness, but very low probability of anti-utopia. Again, a world full of misery. And where I think he goes wrong is that he neglects the middle of the distribution where the distribution is ranging from... I don't know how to formalize this, but something like percentage or amount of... Yeah, one of those two, percentage or amount of resources being deployed in the direction of on one side of the spectrum causing misery and then the other side of the spectrum causing good things to come about. And so he basically considers the two extreme cases. But I claim that, in fact, the middle of the distribution is super important. And actually when you include that, things look significantly worse because the middle of the distribution is basically like, what does the world look like when you don't have agents essentially deploying resources in the direction of anything? You just have the universe doing its thing. We can set aside the metaphysics or physics technicalities of where that becomes problematic. Anyway, so basically the middle of the distribution is just universe doing its thing, physics operating. 
I think there's the one phenomenon that results from this that we know of to be morally important or we have good reason to believe is morally important is basically evolution creating conscious beings that are not agentic in the sense that I care about now, but basically like plants and animals. And presumably I think you have good reason to believe animals are sentient. And evolution, I claim, creates a lot of suffering. And so you look at the middle of the distribution and it's not merely asymmetrical, but it's asymmetrical in the opposite direction. So I claim that if you don't have anything, if you don't have lots of resources being deployed in any direction, this is a bad world because you can expect evolution to create a lot of suffering. The reason for that is, as he gets into, something like either suffering is intrinsically more important, which I put some weight on that. It's not exactly clear how to distinguish that from the empirical case. And the empirical case is basically it's very easy to lose all your reproductive fitness in the evolutionary world very quickly. It's relatively hard to massively gain a ton. Reproduction is like, even having sex, for example, only increases your relative reproductive success a little bit, whereas you can be killed in an instant. And so this creates an asymmetry where if you buy a functional view of qualia, then it results in there being an asymmetry where animals are just probably going to experience more pain over their lives, by and large, than happiness. And I think this is definitely true. I think wild animal welfare is just net negative. I wish if I could just... If these are the only two options, have there not be any wild animals or have them continue living as they are, I think it would be overwhelmingly morally important to not have them exist anymore. And so tying things back. Yeah, so McCaskill doesn't actually... I don't think he makes a formally incorrect statement. 
He just strongly implies that this case, that his heuristic of comparing the two tails is a pretty good proxy for the best we can do. And that's where I disagree. I think there's actually one line in the chapter where he basically says, we can get a grip on this very hard problem by doing the following. But I only noticed that when I went back to start writing a blog post. And the vast majority of the chapter is basically just the object level argument or evidence presentation. There's no repetition emphasizing that this is a really, I guess, sketchy, for lack of a better word, dubious case. Or first pass, I guess, is a better way of putting it. This is just a first pass, don't put too much weight on this. That's not how it comes across, at least in my opinion, to the typical reader. And yeah, I think that's everything.

I think there’s a case to be made for exploring the wide range of mediocre outcomes the world could become.

Recent history would indicate that things are getting better faster though. I think MacAskill’s bias towards a range of positive future outcomes is justified, but I think you agree too.

Maybe you could turn this into a call for more research into the causes of mediocre value lock-in. Like why have we had periods of growth and collapse, why do some regions regress, what tools can society use to protect against sinusoidal growth rates.

There's a ton there, but one anecdote from yesterday: referred me to this $5 IOS desktop app which (among other more reasonable uses) made me this full quality, fully intra-linked >3600 page PDF of (almost) every file/site linked to by every file/site linked to from Tomasik's homepage (works best with old-timey simpler sites like that)

It wasn't too hard to put together a text doc with (at least some of each of) all 1470ish shortform posts, which you can view or download here.

Pros: (practically) infinite scroll of insight porn

Cons:

Longer posts get cut off at about 300 words

Each post is an ugly block of text

No links to the original post [see doc for more]

Various other disclaimers/notes at the top of the document
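Here is a sketch of the compile step that addresses the first and third cons. It assumes each post has already been scraped into a dict with hypothetical `title`, `url`, and `body` keys. Each post gets a header, a link back to the original, and a separator, plus an optional word cap (`None` keeps the full text).

```python
def compile_posts(posts, max_words=300):
    """Join posts into one plain-text document.

    Each entry gets a title line, a source link, and its body, truncated to
    max_words words (pass None to keep the full text). Note that .split()
    also collapses paragraph breaks; a nicer version would truncate per paragraph.
    """
    sections = []
    for post in posts:
        words = post["body"].split()
        truncated = max_words is not None and len(words) > max_words
        if truncated:
            words = words[:max_words]
        body = " ".join(words) + (" [...]" if truncated else "")
        sections.append(f"{post['title']}\n{post['url']}\n\n{body}")
    # A separator line between posts so they don't run together.
    return "\n\n----------\n\n".join(sections)

posts = [
    {"title": "A", "url": "https://example.org/a", "body": "one two three four"},
    {"title": "B", "url": "https://example.org/b", "body": "short"},
]
print(compile_posts(posts, max_words=2))
```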

I was starting to feel like the eternally doomed protagonist of If You Give a Mouse a Cookie (it'll look presentable if I just do this one more thing), so I'm cutting myself off here to see whether it might be worth me (or someone else) making it better.

Newer Thing (?)

I do think this could be an MVP (minimum viable product) for a much nicer-looking and more readable document, such as:

"this but without the posts cut off and with spacing figured out"

"nice-looking searchable pdf with original media and formatting"

"WWOTF-level-production book and audiobook"

Any of those ^ three options but only for the top 10/100/n posts

So by all means, copy and paste and turn it into something better!

Oh yeah and, if you haven't done so already, I highly recommend going through the top Shortform posts for each of the last four years here

Note: inspired by the FTX+Bostrom fiascos and associated discourse. May (hopefully) develop into longform by explicitly connecting this taxonomy to those recent events (but my base rate of completing actual posts cautions humility)

Event as evidence

The default: normal old Bayesian evidence

The realm of "updates," "priors," and "credences"

Pseudo-definition: Induces [1] a change to or within a model (of whatever the model's user is trying to understand)

Corresponds to models that are (as is often assumed):

Well-defined (i.e. specific, complete, and without latent or hidden information)

Stable except in response to 'surprising' new information

Event as spotlight

Pseudo-definition: Alters how a person views, understands, or interacts with a model, just as a spotlight changes how an audience views what's on stage

In particular, spotlights change the salience of some part of a model

This can take place both/either:

At an individual level (think spotlight before an audience of one); and/or

To a community's shared model (think spotlight before an audience of many)

They can also change which information latent in a model is functionally available to a person or community, just as restricting one's field of vision increases the resolution of whichever part of the image shines through

Example

You're hiking a bit of the Appalachian Trail with two friends, going north, using the following section of a map (the "external model")

An hour in, your mental/internal model probably looks like this:

Event: ~the collapse of a financial institution~ you hear traffic

As evidence, this causes you to change where you think you are: namely, a bit south of the first road you were expecting to cross

As spotlight, this causes the three of you to stare at the same map (external model) as before, but in such a way that your internal models are all very similar, each looking something like this

Really the crop should be shifted down some but I don't feel like redoing it rn

Ok, so things that get posted in the Shortform tab also appear in your (my) shortform post, which can be edited to not have the title "___'s shortform" and also has a real post body that is empty by default, but you can just put stuff in.

There's also the usual "frontpage" checkbox, so I assume an individual's own shortform page can appear alongside normal posts(?).


This is a really interesting idea! What are your values, so I can make an informed decision?


I was inspired to create this market! I would appreciate it if you weighed in. :)

Some shrinsight (shrimpsight?) from the comments:

A few Forum meta things you might find useful or interesting:

Open Philanthropy: Our Approach to Recruiting a Strong Team

Histories of Value Lock-in and Ideology Critique

Why I think strong general AI is coming soon

Anthropics and the Universal Distribution

Range and Forecasting Accuracy

A Pin and a Balloon: Anthropic Fragility Increases Chances of Runaway Global Warming

Strategic considerations for effective wild animal suffering work

Red teaming a model for estimating the value of longtermist interventions - A critique of Tarsney's "The Epistemic Challenge to Longtermism"

Welfare stories: How history should be written, with an example (early history of Guam)

Summary of Evidence, Decision, and Causality

Some AI research areas and their relevance to existential safety

Maximizing impact during consulting: building career capital, direct work and more.

Independent Office of Animal Protection

Investigating how technology-focused academic fields become self-sustaining

Using artificial intelligence (machine vision) to increase the effectiveness of human-wildlife conflict mitigations could benefit WAW

Crucial questions about optimal timing of work and donations

Will we eventually be able to colonize other stars? Notes from a preliminary review

Philanthropists Probably Shouldn't Mission-Hedge AI Progress

Made a podcast feed with EAG talks. Now has both the recent Bay Area and London ones:

Full vids on the CEA YouTube page

So the EA Forum has, like, an ancestor? Is this common knowledge? Lol

Felicifia: not functional anymore but still available to view. Learned about it thanks to a tweet from Jacy.

From Felicifia Is No Longer Accepting New Users:

Update: threw together

Wow, blast from the past!

A (potential) issue with MacAskill's presentation of moral uncertainty

Not able to write a real post about this atm, though I think it deserves one.

MacAskill makes a similar point in WWOTF, but IMO the best and most decision-relevant quote comes from his second appearance on the 80k podcast:

I don't think the second bolded sentence follows in any objective or natural manner from the first. Rather, this reasoning takes a distinctly total utilitarian meta-level perspective, summing the various signs of utility and then implicitly considering them under total utilitarianism.

Even granting that the moral arithmetic is appropriate and correct, it's not at all clear what to do once the 2:1 accounting is complete. MacAskill's suffering-focused twin might have reasoned instead that

A better proxy for genuine neutrality (and the best one I can think of) might be to simulate bargaining over real-world outcomes from each perspective, which would probably result in at least some proportion of one's resources being deployed as though negative utilitarianism were true (perhaps exactly 50%, though I haven't given this enough thought to make the claim outright).
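One hypothetical way to cash out "simulate bargaining" is the Nash bargaining solution. This is a minimal sketch under two loudly flagged assumptions: each perspective's utility is linear in the share of resources it directs, and the disagreement point (no deal) gives each perspective nothing. Under those assumptions the symmetric solution is exactly a 50/50 split, which is at least consistent with the tentative guess above. The `weight_a`/`weight_b` parameters (e.g. weighting views by credence) are an extra assumption of mine, not something the argument commits to.

```python
import math


def nash_bargaining_share(weight_a=1.0, weight_b=1.0, steps=10_000):
    """Share x of resources directed by view A that maximizes the (weighted)
    Nash product x**weight_a * (1 - x)**weight_b.

    Assumptions (hypothetical, for illustration only):
      - each view's utility is linear in the share of resources it directs;
      - the disagreement point gives both views zero utility.
    Uses a simple grid search over the open interval (0, 1).
    """
    best_x, best_val = 0.0, -math.inf
    for i in range(1, steps):  # skip endpoints, where log(0) is undefined
        x = i / steps
        # Maximizing the log of the Nash product is equivalent and numerically nicer.
        val = weight_a * math.log(x) + weight_b * math.log(1 - x)
        if val > best_val:
            best_x, best_val = x, val
    return best_x


print(nash_bargaining_share())      # symmetric bargaining: 0.5
print(nash_bargaining_share(2, 1))  # 2:1 weights: roughly 2/3
```

The closed form for the weighted case is x = weight_a / (weight_a + weight_b); the grid search is there only so the sketch makes no calculus claims the reader has to take on trust.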

Idea/suggestion: an "Evergreen" tag for old (6 months? 1 year? 3 years?) posts (comments?), to indicate that they're still worth reading (to me, ideally for their intended value/arguments rather than as instructive historical/cultural artifacts).

As an example, I'd highlight Log Scales of Pleasure and Pain, which is just about 4 years old now.

I know I could just create a tag, and maybe I will, but want to hear reactions and maybe generate common knowledge.

I think we want someone to push them back into the discussion.

Or you know, have editable wiki versions of them.

The recent 80k podcast on the contingency of abolition got me wondering what, if anything, the fact of slavery's abolition says about the ex ante probability of abolition - or more generally, what one observation of a binary random variable X says about p as in

Turns out there is an answer (!), and it's found starting in paragraph 3 of subsection 1 of section 3 of the Binomial distribution Wikipedia page:

Don't worry, I had no idea what Beta(α,β) was until 20 minutes ago. In the Shortform spirit, I'm gonna skip any actual explanation and just link Wikipedia and paste this image (I added the uniform distribution dotted line because why would they leave that out?)

So...

Cool, so for the n=1 case, we get that if you have a prior over the ex ante probability space [0,1] described by one of those curves in the image, you...

In the uniform case (which actually seems kind of reasonable for abolition), you...
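For anyone who wants to poke at the numbers, here is a minimal Python sketch of the standard conjugate update that Wikipedia section describes: a Beta(α, β) prior on p plus x successes in n trials gives a Beta(α + x, β + n − x) posterior. The function names are hypothetical, and treating "abolition happened" as a single success with n = 1 is the framing from above, not anything the math requires.

```python
from fractions import Fraction


def beta_binomial_update(alpha, beta, successes, trials):
    """Conjugate update: Beta(alpha, beta) prior on p, plus `successes` out of
    `trials` Bernoulli observations, gives Beta(alpha + s, beta + n - s)."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return alpha + successes, beta + (trials - successes)


def beta_mean(alpha, beta):
    """Mean of Beta(alpha, beta), i.e. alpha / (alpha + beta), as an exact Fraction."""
    alpha, beta = Fraction(alpha), Fraction(beta)
    return alpha / (alpha + beta)


# n = 1: one observed "success" (abolition happened).
uniform_post = beta_binomial_update(1, 1, successes=1, trials=1)  # Beta(2, 1)
jeffreys_post = beta_binomial_update(Fraction(1, 2), Fraction(1, 2), 1, 1)  # Beta(3/2, 1/2)

print(beta_mean(*uniform_post))   # 2/3
print(beta_mean(*jeffreys_post))  # 3/4
```

In the uniform case the posterior density is 2p, so the posterior puts probability 3/4 on p > 1/2; the Beta(1/2, 1/2) line is the Jeffreys prior, included just to show how much the answer moves with the choice of curve.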

The uniform prior case just generalizes to Laplace's Law of Succession, right?

In terms of result, yeah it does, but I sorta half-intentionally left that out because I don't actually think LLS is true as it seems to often be stated. Why the strikethrough: after writing the shortform, I get that e.g., "if we know nothing more about them" and "in the absence of additional information" mean "conditional on a uniform prior," but I didn't get that before. And Wikipedia's explanation of the rule,

seems both unconvincing as stated and, if assumed to be true, doesn't depend on that crucial assumption
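To make the "conditional on a uniform prior" point concrete, here is a small Python check (function names hypothetical) that Laplace's rule, (s + 1)/(n + 2), is exactly the posterior mean of p under a Beta(1, 1) prior, and so inherits that prior rather than following from "no information" on its own.

```python
from fractions import Fraction


def rule_of_succession(successes, trials):
    """Laplace's rule of succession: P(next trial succeeds) = (s + 1) / (n + 2)."""
    return Fraction(successes + 1, trials + 2)


def uniform_prior_posterior_mean(successes, trials):
    """Posterior mean of p given a Beta(1, 1) (uniform) prior and s successes in
    n trials: the posterior is Beta(1 + s, 1 + n - s), with mean (1 + s) / (2 + n)."""
    a, b = 1 + successes, 1 + (trials - successes)
    return Fraction(a, a + b)


# The two agree for every (s, n); swap in a different Beta prior and they don't.
for n in range(6):
    for s in range(n + 1):
        assert rule_of_succession(s, n) == uniform_prior_posterior_mean(s, n)

print(rule_of_succession(1, 1))  # 2/3, the n = 1 abolition case
```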

The last line contains a typo, right?

Fixed, thanks!

Hypothesis: from the perspective of currently living humans and those who will be born in the current <4% growth regime only (i.e., pre-AGI takeoff or, I guess, stagnation), donations currently earmarked for large-scale GHW, GiveWell-type interventions should instead be invested (maybe in tech/AI-correlated securities), with the intent of deploying them for the same general category of beneficiaries in <25 (maybe even <1) years.

The arguments are similar to those for old-school "patient philanthropy," except that right now in particular seems exceptionally uncertain with respect to how to help humans, because of AI.

For example, it seems plausible that the most important market the global poor don't have access to is literally the NYSE (i.e., rather than the market for malaria nets), because ~any growth associated with (AGI + no 'doom') will leave the global poor no better off by default (i.e., absent redistribution or immigration reform), unlike, e.g., middle-class Westerners who might own a bit of the S&P 500. A solution could be for, e.g., OpenPhil to invest on their behalf.

(More meta: I worry that segmenting off AI as fundamentally longtermist is leaving a lot of good on the table; e.g. insofar as this isn't currently the case, I think OP's GHW side should look into what kind of AI-associated projects could do a lot of good for humans and animals in the next few decades.)
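The core patient-philanthropy arithmetic behind the hypothesis can be sketched in a few lines of Python. This is a minimal illustration, not a model of the AI-specific argument: both rates are hypothetical inputs, and "cost growth" lumps together everything that makes a unit of good more expensive to buy later (including beneficiaries who don't survive the wait).

```python
def invest_then_give_multiplier(annual_return, annual_cost_growth, years):
    """Good done by investing a dollar for `years` and then giving it, relative
    to giving it today.

    Hypothetical inputs:
      annual_return      -- return on the invested money (e.g. 0.07 for 7%)
      annual_cost_growth -- growth rate in the cost of producing one unit of good
    A result > 1 favors investing; < 1 favors giving now.
    """
    return ((1 + annual_return) / (1 + annual_cost_growth)) ** years


print(invest_then_give_multiplier(0.07, 0.03, 10))  # > 1: investing wins
print(invest_then_give_multiplier(0.03, 0.07, 10))  # < 1: giving now wins
```

In these terms, the hypothesis is a bet that returns on AI-correlated assets will outpace the growth in cost per unit of good for the same beneficiaries; the sketch says nothing about whether that bet is right.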

I'm skeptical of this take. If you think sufficiently transformative + aligned AI is likely in the next <25 years, then from the perspective of currently living humans and those who will be born in the current <4% growth regime, surviving until transformative AI arrives would be a huge priority. Under that view, you should aim to deploy resources as fast as possible to lifesaving interventions rather than sitting on them.

I tried making a shortform -> Twitter bot (i.e., tweet each new top-level ~quick take~) and long story short it stopped working and wasn't great to begin with.

I feel like this is the kind of thing someone else might be able to do relatively easily. If so, I and I think much of EA Twitter would appreciate it very much! In case it's helpful for this, a quick takes RSS feed is at https://ea.greaterwrong.com/shortform?format=rss

I would be interested in following this bot if it were made. Thanks for trying!
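For anyone picking this up, here is a minimal Python sketch of the feed-reading half of such a bot, using only the standard library. It assumes the feed URL above serves ordinary RSS 2.0 (`<item>` elements with `<title>`, `<link>`, and optionally `<guid>`). The actual posting step is deliberately left out, since it depends on whatever Twitter/X API access you have, and all function names here are hypothetical.

```python
import urllib.request
import xml.etree.ElementTree as ET

FEED_URL = "https://ea.greaterwrong.com/shortform?format=rss"


def fetch_feed(url=FEED_URL):
    """Download the raw RSS XML (needs network access)."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read()


def parse_items(rss_xml):
    """Return (guid, title, link) tuples from an RSS 2.0 document.
    Assumes standard <item> elements; falls back to the link if <guid> is missing."""
    root = ET.fromstring(rss_xml)
    items = []
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        guid = (item.findtext("guid") or link).strip()
        items.append((guid, title, link))
    return items


def new_posts(items, seen):
    """Keep only items whose guid isn't in `seen`, and record them as seen."""
    fresh = [it for it in items if it[0] not in seen]
    seen.update(guid for guid, _, _ in fresh)
    return fresh


def tweet_text(title, link, limit=280):
    """Compose 'title link', truncating the title so the whole thing fits `limit`.
    Naive character count: Twitter/X actually counts links as a fixed length."""
    room = limit - len(link) - 1
    if len(title) > room:
        title = title[: max(room - 1, 0)] + "…"
    return f"{title} {link}"


def run_once(seen):
    """One polling pass: fetch, parse, and return tweet texts for unseen posts."""
    items = parse_items(fetch_feed())
    return [tweet_text(title, link) for _, title, link in new_posts(items, seen)]
```

One design note: persist `seen` between runs (e.g. in a small file), otherwise a restart will re-tweet the whole backlog, which may be part of why a bot like this "wasn't great to begin with."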

Note: this sounds like it was written by ChatGPT because it basically was (from a recorded ramble) 🤷

I believe the Forum could benefit from a Shorterform page, as the current Shortform forum, intended to be a more casual and relaxed alternative to main posts, still seems to maintain high standards. This is likely due to the impressive competence of contributors who often submit detailed and well-thought-out content. While some entries are just a few well-written sentences, others resemble blog posts in length and depth.

As such, I find myself hesitant to adhere to the default filler text in the submission editor when visiting this page. However, if it were more informal and less intimidating in nature, I'd be inclined to post about various topics that might otherwise seem out of place. To clarify, I'm not suggesting we resort to jokes or low-quality "shitposts," but rather encourage genuine sharing of thoughts without excessive analysis.

Perhaps adopting an amusing name like "EA Shorterform" would help create a more laid-back atmosphere for users seeking lighter discussions. By doing so, we may initiate a preference falsification cascade where everyone feels comfortable enough admitting their desire for occasional brevity within conversations. Who knows? Maybe I'll start with posting just one sentence soon!

WWOTF: what did the publisher cut? [answer: nothing]

Contextual note: this post is essentially a null result. It seemed inappropriate both as a top-level post and as an abandoned Google Doc, so I’ve decided to put out the key bits (i.e., everything below) as Shortform. Feel free to comment/message me if you think that was the wrong call!

Actual post

On his recent appearance on the 80,000 Hours Podcast, Will MacAskill noted that Doing Good Better was significantly influenced by the book’s publisher:[1]

I thought it was important to know whether the same was true with respect to What We Owe the Future, so I reached out to Will's team and received the following response from one of his colleagues [emphasis mine]:

Quote starts at 39:47

This post is half object level, half experiment with “semicoherent audio monologue ramble → prose” AI (presumably GPT-3.5/4 based) program audiopen.ai.

In the interest of the latter objective, I’m including 3 mostly-redundant subsections:

1) Dubious asymmetry argument in WWOTF

In Chapter 9 of his book, What We Owe the Future, Will MacAskill argues that the future holds positive moral value under a total utilitarian perspective. He posits that people generally use resources to achieve what they want - either for themselves or for others - and thus good outcomes are easily explained as the natural consequence of agents deploying resources for their goals. Conversely, bad outcomes tend to be side effects of pursuing other goals. While malevolence and sociopathy do exist, they are empirically rare.

MacAskill argues that in a future with continued economic growth and no existential risk, we will likely direct more resources towards doing good things due to self-interest and increased impartial altruism. He contrasts this eutopian scenario with an anti-eutopia: the worst possible world, which he argues (compellingly, I think) is less probable because it requires convoluted explanations, as opposed to simple desires like enjoying ice cream. He concludes that the probability of achieving a eutopia outweighs the low likelihood but extreme negative consequences of an anti-eutopia.

However, I believe MacAskill's analysis neglects an important aspect: considering not only these two extremes but also the middle of the distribution, where neither significant resource deployment nor agentic intervention occurs.

When physics operates without agency-driven resource allocation, we have good reason to expect evolution to create conscious beings whose suffering we can attribute to the ease with which animals (or animal-like beings) can lose all future expected genetic reproduction - as MacAskill himself argues elsewhere in the book.

Importantly, though, this non-agentic suffering seems more likely to complement agentic resource deployment, not substitute for it as one might intuit. That’s because human or post-human expansion necessarily entails the expansion of concentrated physical energy, and seems likely to entail the expansion of other scarce, pro-biotic resources such as DNA, water, and computation.

Although MacAskill does not explicitly claim his binary model comparing eutopia and anti-eutopia is sufficient for understanding this complex problem, it seems to me to be implied.

Only upon attempting to draft a blog post revisiting his work did I notice the line “We can make some progress by focusing on just two extreme scenarios: the best or worst possible futures, eutopia and anti-eutopia,” which acknowledges this model's status as just one bit of evidence on the larger question of the future’s value.

In sum, I think MacAskill's analysis would benefit from addressing the morally relevant middle distribution to provide a more accurate representation of the future under total utilitarianism.

2) Utilitarian Utopia, Anti-Utopia and the Neglected Middle

In Chapter 9 of his book, What We Owe the Future, Will MacAskill argues that the future holds positive moral value under a total utilitarian perspective. He posits that people generally use resources to achieve what they want - either for themselves or others - and thus good outcomes are often intentional. Conversely, bad outcomes tend to be side effects of pursuing other goals. While malevolence and sociopathy do exist, they are empirically rare.

MacAskill then extrapolates this argument to suggest that in a future with continued economic growth (assuming no existential risk), we will likely direct more resources towards doing good things due to self-interest and increased impartial altruism. He contrasts this utopian scenario with an anti-utopia: the worst possible world, which is less probable because it requires convoluted explanations, as opposed to simple desires like enjoying ice cream.

He concludes that the probability of achieving a utopia outweighs the low likelihood but extreme negative consequences of an anti-utopia. However, I believe MacAskill's analysis neglects an important aspect: considering not only these two extremes but also the middle of the distribution, where neither significant resource deployment nor agentic intervention occurs.

In such cases, where physics operates without agency-driven resource allocation, evolution can create conscious beings such as animals, who experience suffering without any intentionality behind it. This middle of the distribution may actually skew negative, since evolution favors reproductive fitness at any cost; as a result, sentient beings could suffer more than they experience happiness during their lives.

I argue that wild animal welfare is net negative overall; given a choice between having wild animals continue living as they are or ceasing to exist altogether, I would choose nonexistence on moral grounds. Although MacAskill does not explicitly claim his heuristic comparison between utopia and anti-utopia is sufficient for understanding this complex problem, he strongly implies it throughout most of his chapter.

Upon revisiting his work while drafting my response blog post, I noticed a single line acknowledging the limitations of his approach. However, this caveat does not adequately emphasize that his argument should be considered only as a first pass and not definitive proof. In my opinion, MacAskill's analysis would benefit from addressing the morally relevant middle of the distribution to provide a more accurate representation of the future under total utilitarianism.

-------

3) Original Transcript

Okay, so I'm going to describe where I think I disagree with Will McCaskill in Chapter 9 of his book, What We Are the Future, where he basically makes an argument that the future is positive in expectation, positive moral value under a total utilitarian perspective. And so his argument is basically that people, it's very easy to see that people deploy the resources in order to get what they want, which is either to help themselves and sometimes to help other people, whether it's just their family or more impartial altruism. Basically you can always explain why somebody does something good just because it's good and they want it, which is kind of, I think that's correct and compelling. Whereas when something bad happens, it's generally the side effect of something else. At least, yeah. So while there is malevolence and true sociopathy, those things are in fact empirically quite rare, but if you undergo a painful procedure, like a medical procedure, it's because there's something affirmative that you want and that's a necessary side effect. It's not because you actually sought that out in particular. And all this I find true and correct and compelling. And so then he uses this to basically say that in the future, presumably conditional on continued economic growth, which basically just means no existential risk and humans being around, we'll be employing a lot of resources in the direction of doing things well or doing good. Largely just because people just want good things for themselves and hopefully to some extent because there will be more impartial altruists willing to both trade and to put their own resources in order to help others. And once again, all true, correct, compelling in my opinion. So on the other side, so basically utopia in this sense, utopia basically meaning employing a lot of, the vast majority of resources in the direction of doing good is very likely and very good. 
On the other side, it's how likely and how bad is what he calls anti-utopia, which is basically the worst possible world. And he basically using... I don't need to get into the particulars, but basically I think he presents a compelling argument that in fact it would be worse than the best world is good, at least to the best of our knowledge right now. But it's very unlikely because it's hard to see how that comes about. You actually can invent stories, but they get kind of convoluted. And it's not nearly as simple as, okay, people like ice cream and so they buy ice cream. It's like, you have to explain why so many resources are being deployed in the direction of doing good things and you still end up with a terrible world. Then he basically says, okay, all things considered, the probability of good utopia wins out relative to the badness, but very low probability of anti-utopia. Again, a world full of misery. And where I think he goes wrong is that he neglects the middle of the distribution where the distribution is ranging from... I don't know how to formalize this, but something like percentage or amount of... Yeah, one of those two, percentage or amount of resources being deployed in the direction of on one side of the spectrum causing misery and then the other side of the spectrum causing good things to come about. And so he basically considers the two extreme cases. But I claim that, in fact, the middle of the distribution is super important. And actually when you include that, things look significantly worse because the middle of the distribution is basically like, what does the world look like when you don't have agents essentially deploying resources in the direction of anything? You just have the universe doing its thing. We can set aside the metaphysics or physics technicalities of where that becomes problematic. Anyway, so basically the middle of the distribution is just universe doing its thing, physics operating. 
I think there's the one phenomenon that results from this that we know of to be morally important or we have good reason to believe is morally important is basically evolution creating conscious beings that are not agentic in the sense that I care about now, but basically like plants and animals. And presumably I think you have good reason to believe animals are sentient. And evolution, I claim, creates a lot of suffering. And so you look at the middle of the distribution and it's not merely asymmetrical, but it's asymmetrical in the opposite direction. So I claim that if you don't have anything, if you don't have lots of resources being deployed in any direction, this is a bad world because you can expect evolution to create a lot of suffering. The reason for that is, as he gets into, something like either suffering is intrinsically more important, which I put some weight on that. It's not exactly clear how to distinguish that from the empirical case. And the empirical case is basically it's very easy to lose all your reproductive fitness in the evolutionary world very quickly. It's relatively hard to massively gain a ton. Reproduction is like, even having sex, for example, only increases your relative reproductive success a little bit, whereas you can be killed in an instant. And so this creates an asymmetry where if you buy a functional view of qualia, then it results in there being an asymmetry where animals are just probably going to experience more pain over their lives, by and large, than happiness. And I think this is definitely true. I think wild animal welfare is just net negative. I wish if I could just... If these are the only two options, have there not be any wild animals or have them continue living as they are, I think it would be overwhelmingly morally important to not have them exist anymore. And so tying things back. Yeah, so McCaskill doesn't actually... I don't think he makes a formally incorrect statement. 
He just strongly implies that this case, that his heuristic of comparing the two tails is a pretty good proxy for the best we can do. And that's where I disagree. I think there's actually one line in the chapter where he basically says, we can get a grip on this very hard problem by doing the following. But I only noticed that when I went back to start writing a blog post. And the vast majority of the chapter is basically just the object level argument or evidence presentation. There's no repetition emphasizing that this is a really, I guess, sketchy, for lack of a better word, dubious case. Or first pass, I guess, is a better way of putting it. This is just a first pass, don't put too much weight on this. That's not how it comes across, at least in my opinion, to the typical reader. And yeah, I think that's everything.

I think there’s a case to be made for exploring the wide range of mediocre outcomes the world could become.

Recent history would indicate that things are getting better faster though. I think MacAskill’s bias towards a range of positive future outcomes is justified, but I think you agree too.

Maybe you could turn this into a call for more research into the causes of mediocre value lock-in. Like why have we had periods of growth and collapse, why do some regions regress, what tools can society use to protect against sinusoidal growth rates.

A resource that might be useful: https://tinyapps.org/

There's a ton there, but one anecdote from yesterday: it referred me to this $5 iOS desktop app, which (among other more reasonable uses) made me this full-quality, fully intra-linked >3600-page PDF of (almost) every file/site linked to by every file/site linked to from Tomasik's homepage (works best with old-timey, simpler sites like that).

New Thing

Last week I complained about not being able to see all the top shortform posts in one list. Thanks to Lorenzo for pointing me to the next best option:

It wasn't too hard to put together a text doc with (at least some of each of) all 1470ish shortform posts, which you can view or download here.

I was starting to feel like the eternally doomed protagonist of If You Give a Mouse a Cookie (it'll look presentable if I just do this one more thing), so I'm cutting myself off here to see whether it might be worth me (or someone else) making it better.

Newer Thing (?)

Oh yeah and, if you haven't done so already, I highly recommend going through the top Shortform posts for each of the last four years here

Infinitely easier said than done, of course, but some Shortform feedback/requests

For 2.a the closest I found is https://forum.effectivealtruism.org/allPosts?sortedBy=topAdjusted&timeframe=yearly&filter=all, you can see the inflation-adjusted top posts and shortforms by year.

For 1 it's probably best to post in the EA Forum feature suggestion thread

Late but thanks on both, and commented there!

Events as evidence vs. spotlights

Note: inspired by the FTX+Bostrom fiascos and associated discourse. May (hopefully) develop into longform by explicitly connecting this taxonomy to those recent events (but my base rate of completing actual posts cautions humility)

Event as evidence

Event as spotlight

Example

the collapse of a financial institution

you hear traffic

Or fails to induce

Ok so things that get posted in the Shortform tab also appear in your (my) shortform post, which can be edited to not have the title "___'s shortform" and also has a real post body that is empty by default, but you can just put stuff in.

There's also the usual "frontpage" checkbox, so I assume an individual's own shortform page can appear alongside normal posts(?).

The link is: [Draft] Used to be called "Aaron Bergman's shortform" (or smth)

I assume only I can see this but gonna log out and check

Effective Altruism Georgetown will be interviewing Rob Wiblin for our inaugural podcast episode this Friday! What should we ask him?