Aaron Bergman

Bio


I graduated from Georgetown University in December 2021 with degrees in economics and mathematics and a minor in philosophy. There, I founded and helped to lead Georgetown Effective Altruism. Over the last few years, I've interned at the Department of the Interior, the Federal Deposit Insurance Corporation, and Nonlinear, a newish longtermist EA org.

I'm now doing research thanks to an EA Funds grant, trying to answer hard, important EA-relevant questions. My first big project (in addition to everything listed here) was helping to produce this team Red Teaming post.

Blog: aaronbergman.net

How others can help me

- Suggest action-relevant, tractable research ideas for me to pursue

- Give me honest, constructive feedback on any of my work

- Introduce me to someone I might like to know :)

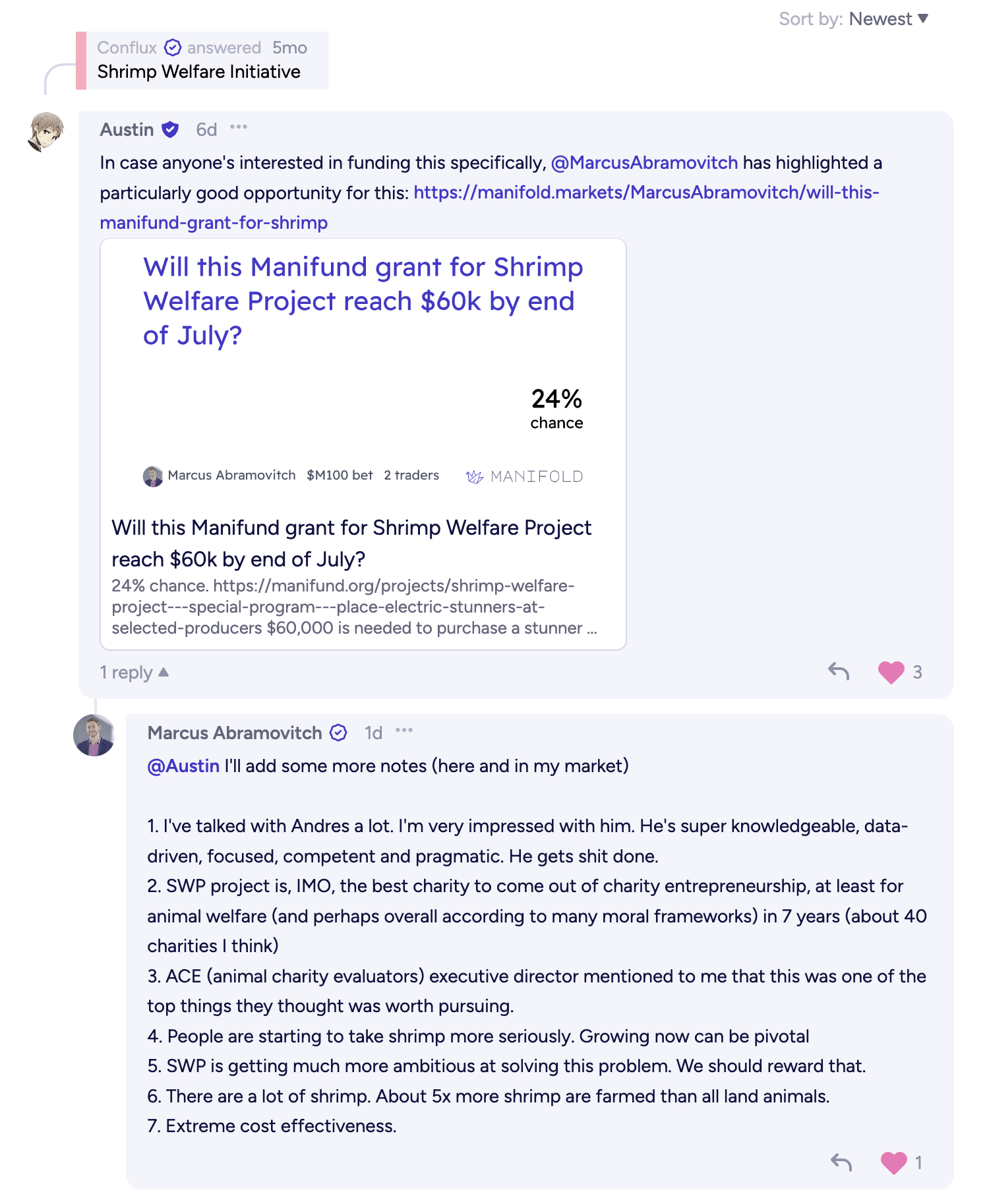

- Convince me of a better marginal use of small-dollar donations than giving to the Fish Welfare Initiative, from the perspective of a suffering-focused hedonic utilitarian.

- Offer me a job if you think I'd be a good fit

- Send me recommended books, podcasts, or blog posts that there's a >25% chance a pretty-online, into-EA-since-2017 person like me hasn't consumed

- Rough rule of thumb: "at least as good/interesting/useful as a random 80k podcast episode"

How I can help others

- Open to research/writing collaboration :)

- Would be excited to work on impactful data science/analysis/visualization projects

- Can help with writing and/or editing

- Discuss topics I might have some knowledge of

- like: math, economics, philosophy (esp. philosophy of mind and ethics), psychopharmacology (hobby interest), helping to run a university EA group, data science, interning at government agencies

Comments

Idea/suggestion: an "Evergreen" tag for old (6 months? 1 year? 3 years?) posts (and comments?) to indicate that they're still worth reading (ideally, to me, for their intended value/arguments rather than as instructive historical/cultural artifacts)

As an example, I'd highlight Log Scales of Pleasure and Pain, which is just about 4 years old now.

I know I could just create a tag, and maybe I will, but I want to hear reactions and maybe generate common knowledge.

Thanks! Let me write them as a loss function in Python (ha)

For real though:

- Some flavor of hedonic utilitarianism

- I guess I should say I have moral uncertainty (which I endorse as a thing), but, eh, I'm pretty convinced

- Longtermism as explicitly defined is true

- Don't necessarily endorse the cluster of beliefs that tend to come along for the ride though

- "Suffering focused total utilitarian" is the annoying phrase I made up for myself

- I think many (most?) self-described total utilitarians give too little consideration/weight to suffering, and I don't think it really matters (if there's a fact of the matter) whether this is because of empirical or moral beliefs

- Maybe my most substantive deviation from the default TU package is the following (defended here):

- "Under a form of utilitarianism that places happiness and suffering on the same moral axis and allows that the former can be traded off against the latter, one might nevertheless conclude that some instantiations of suffering cannot be offset or justified by even an arbitrarily large amount of wellbeing."

- Moral realism, for basically all the reasons described by Rawlette on 80k, but I don't think this really matters after conditioning on normative ethical beliefs

- Nothing besides valenced qualia/hedonic tone has intrinsic value

I think that might literally be it - everything else is contingent!

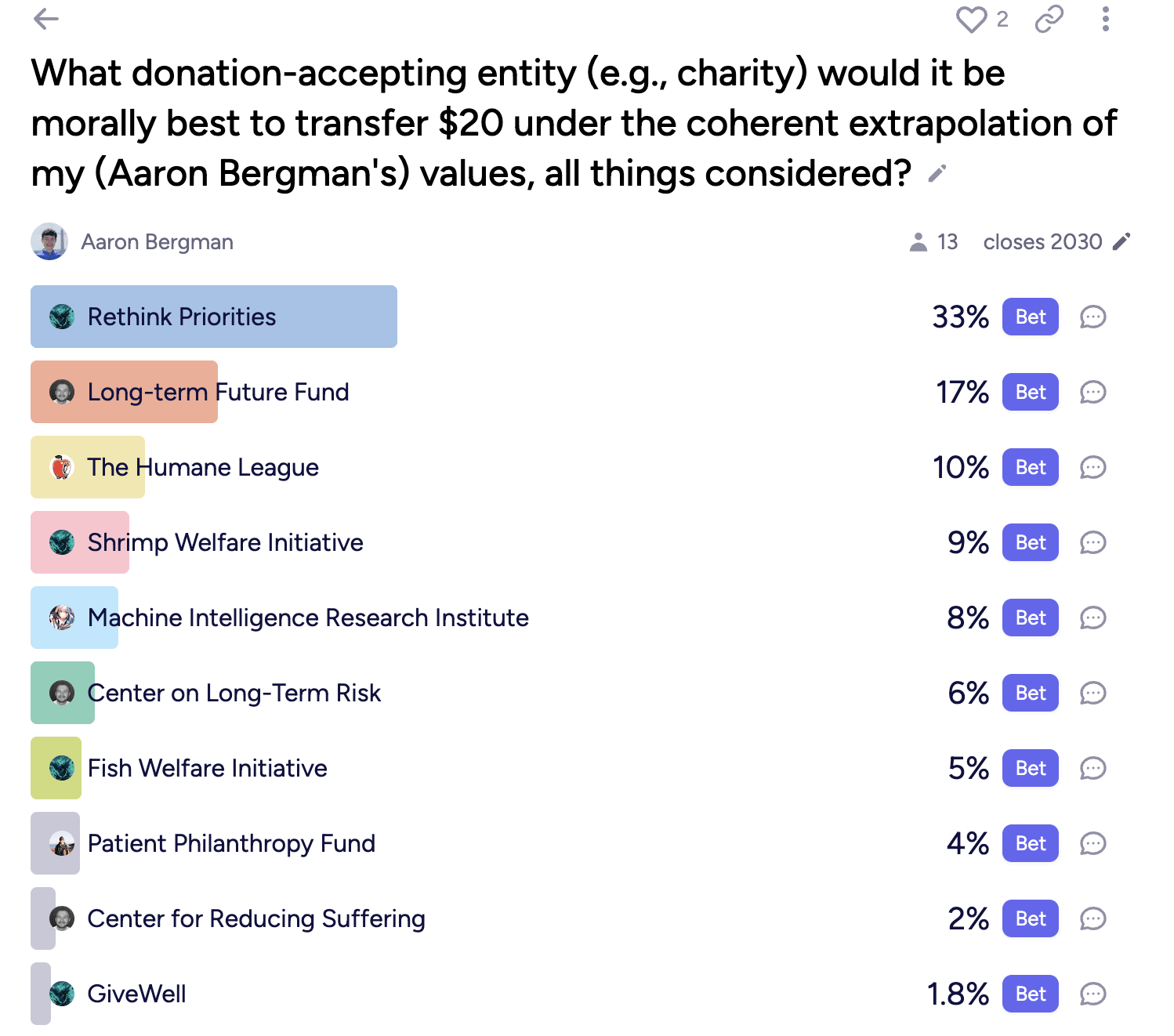

I'm pretty happy with how this "Where should I donate, under my values?" Manifold market has been turning out. Of course, all the usual caveats pertaining to basically-fake "prediction" markets apply, but given the selection effects of who spends mana on an esoteric market like this, I put non-trivial weight on the (live) outcomes.

I guess I'd encourage people with a bit more money to donate to do something similar (or I guess defer, if you think I'm right about ethics!), even if just as one addition to your portfolio of donation-informing considerations.

Just chiming in to say I have a similar situation, although less extreme. Was vegan for 4 years and eventually concluded it wasn’t sustainable or realistic for me. Main animal products I buy are grass fed beef, grass fed whey protein, eggs from brands that at least go to decent lengths to make themselves seem non-horrible (3rd party humane certified, outdoor access) and a bit of conventional dairy (cheese, butter). I’d be lying if I said I’ve never bought anything “worse” than those, though.

I’ve definitely thought about this and short answer: depends on who “we” is.

A particular made-up case I was imagining is “New Zealand is fine, everywhere else totally destroyed,” because I think it targets the general class of situation most in need of action (I can justify this on its own terms, but I’ll leave it for now).

In that world, there’s a lot of information that doesn’t get lost: everything stored in the laptops and servers/datacenters of New Zealand, everything in all its university libraries, etc. (One big caveat, and the reason I abandoned the website, is that I lost confidence that info physically encoded in, e.g., a cloud server in NZ would be de facto accessible without the large share of the internet’s infrastructure that is physically located elsewhere.)

That is a gigantic amount of info, and it seems pretty clearly to satisfy the “general info to rebuild society” thing. FWIW, I think this holds even if only a medium-sized city were to remain intact. I’m not certain it holds if what remains is, say, a single town in Northern Canada, and it probably doesn’t for a tiny fishing village, but in the latter case it’s hard to know what a tractable intervention would be.

But what does get lost? Anything niche enough not to be downloaded on a random NZer’s computer or held as a physical book in a library. Not everything I put in the archive, to be sure, but probably most of it.

Also, 21GB of the type of info I think you’re getting at is in the “non EA info for the post apocalypse folder” because why not! :)

What are some questions you hope someone’s gonna ask that seem relatively unlikely to get asked organically?

Bonus: what are the answers to those questions?