Should we break up Google DeepMind?

Regulators should review the 2014 DeepMind acquisition. When Google bought DeepMind in 2014, no regulator, not the FTC, not the EC's DG COMP, nor the CMA, scrutinized the impact. Why? AI startups have high value but low revenues. And so they avoid regulation (and tax, see below). Buying start-ups with low revenues flies under the thresholds of EU merger regulation[1] or the CMA's 'turnover test' (despite it being a 'relevant enterprise' under the National Security and Investment Act). In 2020, the FTC ordered Big Tech to provide info on M&A from 2010-2019 that it didn't report (UK regulators should urgently do so as well given that their retrospective powers might only be 10 years).[2]

Regulators should also review the 2023 Google-DeepMind internal merger. DeepMind and Google Brain are key players in AI. In 2023, they merged into Google DeepMind. This compromises independence, reduces competition for AI talent and resources, and limits alternatives for collaboration partners.

Though they are both part of Google, regulators can scrutinize this, regardless of corporate structure. For instance, UK regulators have intervened in M&A of enterprises already under common ownership—especially in Tech (cf UK regulators ordered FB to sell GIPHY).

And so, regulators should consider breaking up Google DeepMind as per recent proposals:

- A new paper 'Unscrambling the eggs: breaking up consummated mergers and dominant firms' by economists at Imperial cites Google DeepMind as a firm that could be unmerged. [3]

- A new Brookings paper also argues that if other means to ensure fair markets fail, then as a last resort, foundation model firms may need to be broken up on the basis of functions, akin to how we broke up AT&T.[4]

- Relatedly, some top economists agree that we should designate Google Search as a 'platform utility' and break it apart from any participant on that platform; most agree that we should explore this further to weigh costs and benefits.[5]

- Indeed, the EU accuses Google of abusing dominance in ad tech and may force it to sell parts of its firm.[6]

- Kustomer, a firm of a similar size to DeepMind bought by Facebook, recently spun out again and shows this is possible.

- Finally, DeepMind itself has in the past tried to break away from Google.[7]

Since DeepMind's AI improves all Google products, regulators should work cross-departmentally to scrutinize both mergers above on the following grounds:

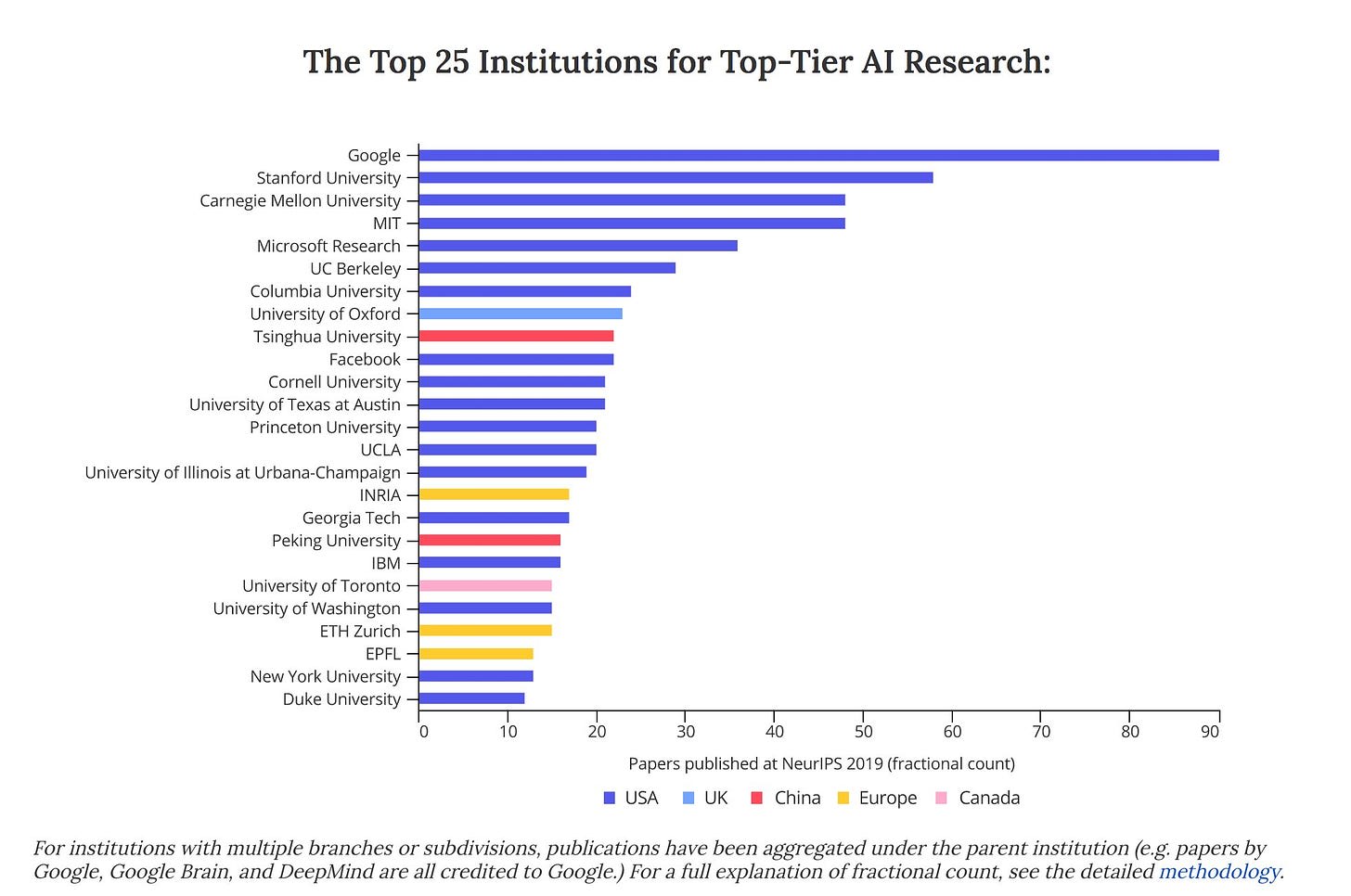

- Market dominance: Google dominates the field of AI, surpassing all universities in terms of high-quality publications:

- Tax avoidance: Despite billions in UK profits yearly, Google is only taxed $60M.[9] DeepMind is only taxed ~$1M per year on average.[10],[11] We should tax them more fairly. DeepMind's recent revenue jump is due to creative accounting: it has few revenue streams, and almost all of them are based on how much Google arbitrarily pays for internal services. Indeed, Google just waived $1.5B in DeepMind's 'startup debt'[12],[13] despite DeepMind's CEO boasting that they have a unique opportunity as part of Google, with its dozens of billion-user products into which they can immediately ship their advances,[14] and that they save Google hundreds of millions in energy costs.[15] About 85% of the innovations causing the recent AI boom came from Google DeepMind.[16] DeepMind also holds 560 patents,[17] and this IP is very hard to value and tax. Such a bad precedent might either cause more tax avoidance by OpenAI, Microsoft AI, Anthropic, Palantir, and A16z as they set up UK offices, or give Google an unfair edge over these smaller firms.

- Public interest concerns: DeepMind's AI improves YouTube's algorithm and thus DeepMind indirectly polarizes voters.[18] Regulators should investigate plurality concerns.

- Consumer harm: Google has violated data privacy commitments and consumer protection laws in the past. Regulators have investigated Google for tracking users and perhaps breaching EU law,[19] [20] and misleading users about location data collection.[21] DeepMind, too, got NHS data from 1.6M patients,[22] conditional on strictly controlled access and not connecting it to Google services.[23] But then DeepMind violated the agreement by putting mammograms on the cloud.

- National security grounds: since Google is a key US defense contractor, the UK could break up DeepMind under the new UK National Security and Investment Act[24] (though the initial acquisition might not qualify as the act only applies retrospectively since 2020).[25] Regulators have also stopped Nvidia from buying the chip designer Arm on national security grounds since the chip industry is critical for international security: the chip export ban on China is 'the most aggressive US foreign policy of the last 20 years'.[26] Regulators must work with other departments (e.g. defense) to investigate international security implications of Arm's future IPO (e.g. foreign ownership etc.).

A Google DeepMind merger probe would raise questions about its future in and with the UK. DeepMind contributes 80% to the UK's 8% of citations in top AI papers.[8]

How far should AI firms be integrated into the state, or even nationalized, as a matter of industrial or security policy, as it works on ever more sensitive and powerful AI?

The West competes with China for AI supremacy, which may translate to military dominance. Google DeepMind is a key contributor to AI research, which also benefits science (cf. AlphaFold). Would breaking up Google help or hurt these AI efforts?

Smith[27] considers relevant historical cases:

- Bell Labs was the corporate lab responsible for many major mid-20th-century innovations (e.g. radio astronomy, transistors, lasers, solar cells, information theory, Unix, C++, etc.) that led to ten Nobels. It was funded by Bell's telecom monopoly profits. But after the US broke up Bell, Bell Labs died, and with it came a decline in innovations from the lab, which had been freely licensed at low prices due to a deal with the government. And so, US telecom innovation fell behind China's state-supported giant Huawei. Similarly, breaking up Google might lead to cheaper ads, at the expense of US leadership in the key technology of our day.

- Intel's dominance in the chip market led to complacency and neglect of emerging markets in low-power chips, foundry manufacturing, and GPUs for AI. And so, Intel's position has declined, and it relies on firms vulnerable to geopolitics (e.g. TSMC). Google might sink into a similar inertia: its profits come mostly from ads, unlike competitors like Amazon and Microsoft who have diversified into cloud computing etc. Google needs to pursue its AI efforts, which have so far been academic, more aggressively, while Microsoft aggressively develops its partner OpenAI. If we break off Google's ad business, we might incentivize it to invest more in other business models, and that includes prioritizing AI R&D.

- Microsoft. After threatening to break up Microsoft, the US eventually settled by limiting the firm's profits from its operating system monopoly. Despite initial struggles in search etc., Microsoft later recovered by investing in R&D, became a key player in cloud computing, and helped develop OpenAI. Thus, breaking up Google may be worse for addressing anti-competitive practices in ads, or AI, than simply ordering Google to cease these practices or capping its profits from its monopoly. This would improve the ad market while allowing Google to continue operating and developing new tech.

I suspect the primary reasons you want to break up DeepMind from Google are to:

Perhaps that goes without saying, but I think it's worth explicitly mentioning. In a world without AI risk, I don't believe you would be citing various consumer harms to argue for a break up.

The traditional argument for breaking up companies and preventing mergers is to reduce the company's market power, increasing consumer surplus. In this case, the implicit reason for breaking up DeepMind is to decrease its competitiveness, thus reducing consumer surplus.

I think it's perfectly fine to argue for this, I just really want us to be explicit about it.

Huh, fwiw I thought this proposal would increase AI risk, since it would increase competitive dynamics (and generally make coordinating on slowing down harder). I at least didn't read this post as x-risk motivated (though I admit I was confused what its primary motivation was).

I read it as aiming to reduce AI risk by increasing the cost of scaling.

I also don't see how breaking DeepMind off from Google would increase competitive dynamics. Google, Microsoft, Amazon and other big tech partners are likely to be pushing their subsidiaries to race even faster since they are likely to have much less conscientiousness about AI risk than the companies building AI. Coordination between DeepMind and e.g. OpenAI seems much easier than coordination between Google and Microsoft.

Less than a year ago DeepMind and Google Brain were two separate companies (both making cutting-edge contributions to AI development). My guess is if you broke off DeepMind from Google you would now just pretty quickly get competition between DeepMind and Google Brain (and more broadly just make the situation around slowing things down a more multilateral situation).

But more concretely, anti-trust action makes all kinds of coordination harder. After an anti-trust action that destroyed billions of dollars in economic value, the ability to get people in the same room and even consider coordinating goes down a lot, since that action itself might invite further anti-trust action.

AI labs tend to partner with Big Tech for money, data, compute, scale etc. (e.g. Google DeepMind, Microsoft/OpenAI, and Amazon/Anthropic). Presumably to compete better? If they're already competing hard now, then it seems unlikely that they'll coordinate much on slowing down in the future.

Also, it seems like a function of timelines: antitrust advocates argue that breaking up firms / preventing mergers would slow industry down in the short-run but speed up in the long-run by increasing competition, but if competition is usually already healthy, as libertarians often argue, then antitrust interventions might slow down industries in the long-run.

I also think that it's far from given that the option which would minimise consumer harm from monopoly would also minimise pressure to race.

An AI research institute spun off by the regulator under pressure to generate business models to stay viable is plausibly a lot more inclined to 'race' than an AI research institute swimming in ad money which can earn its keep by incrementally improving search, ads and phone UX and generating good PR with its more abstract research along the way. Monopolies are often complacent about exploiting their research findings, and Google's corporate culture has historically not been particularly compatible with launching the sort of military or enterprise tooling that represents the most obviously risky use of 'AI'.

There are of course arguments the other way (Google has a lot more money and data than putative spinouts) but people need to predict what a divested DeepMind would do before concluding breaking up Google is a safety win.

I only said we should look into this more and have reviewed the pros and cons from different angles (e.g. not only consumer harms). As you say, the standard argument is that breaking up monopolists like Google increases consumer surplus and this might also apply here.

But I'm not sure how far, in the short and long run, this increases or decreases AI risks and race dynamics, whether within the West or between countries. This approach might be more elegant than Pausing AI, which definitely reduces consumer surplus.

Since this is tagged "Existential risk": What does this have to do with existential risk? Or is it not supposed to be about existential risk, not even indirectly? As far as I can tell, the article does not talk about existential risk. I could make my own guesses and association of this topic with existential risk, but I would prefer if this is spelled out.

I broadly think it's cool to be raising novel (to me) possibilities like this, and I think you've done a good job of illustrating that it's not obviously out of line with existing practice. Thanks for writing it!

Minor formatting / typographical things: I think the image is misplaced from where the text refers to it. Also, weirdly, a lot of the single quotation marks in the text are duplicated?

Do you have a call to action here? Are you expecting that someone reading this on the forum has any ability to make it more (or less) likely to happen?

AI policy folks and research economists could engage with the arguments and the cited literature.

Grassroots folks like Pause AI sympathizers could put pressure on politicians and regulators to investigate this more (some claims, like the tax avoidance stuff, seem most robustly correct and good).

At least from an AI risk perspective, it's not at all clear to me that this would improve things as it would lead to a further dispersion of this knowledge outward.

Executive summary: Regulators should review Google's acquisition of DeepMind in 2014 and their recent internal merger in 2023, and consider breaking up Google DeepMind due to concerns about market dominance, tax avoidance, public interest, consumer harm, and national security.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.