Every time I come across an old post on the EA Forum, I wonder whether its karma score is low because people did not get any value from it, or whether people really liked it and it only got a lower score because fewer people were around to upvote it at the time. Fortunately, you can send queries to the EA Forum API to get the data needed to answer this question. In this post I describe how karma and post controversy (measured by the number of votes a post got and the balance between its upvotes and downvotes; see below for details) developed over time on the EA Forum, and I provide a list of the best-rated posts relative to the amount of activity on the forum. Before you read on, take a moment to think about the following questions:

- What is your best guess for the most well received post, relative to the amount of activity in the forum at the time it was posted?

- Do you think the controversy of posts in the EA Forum has decreased or increased over time or stayed the same?

Karma

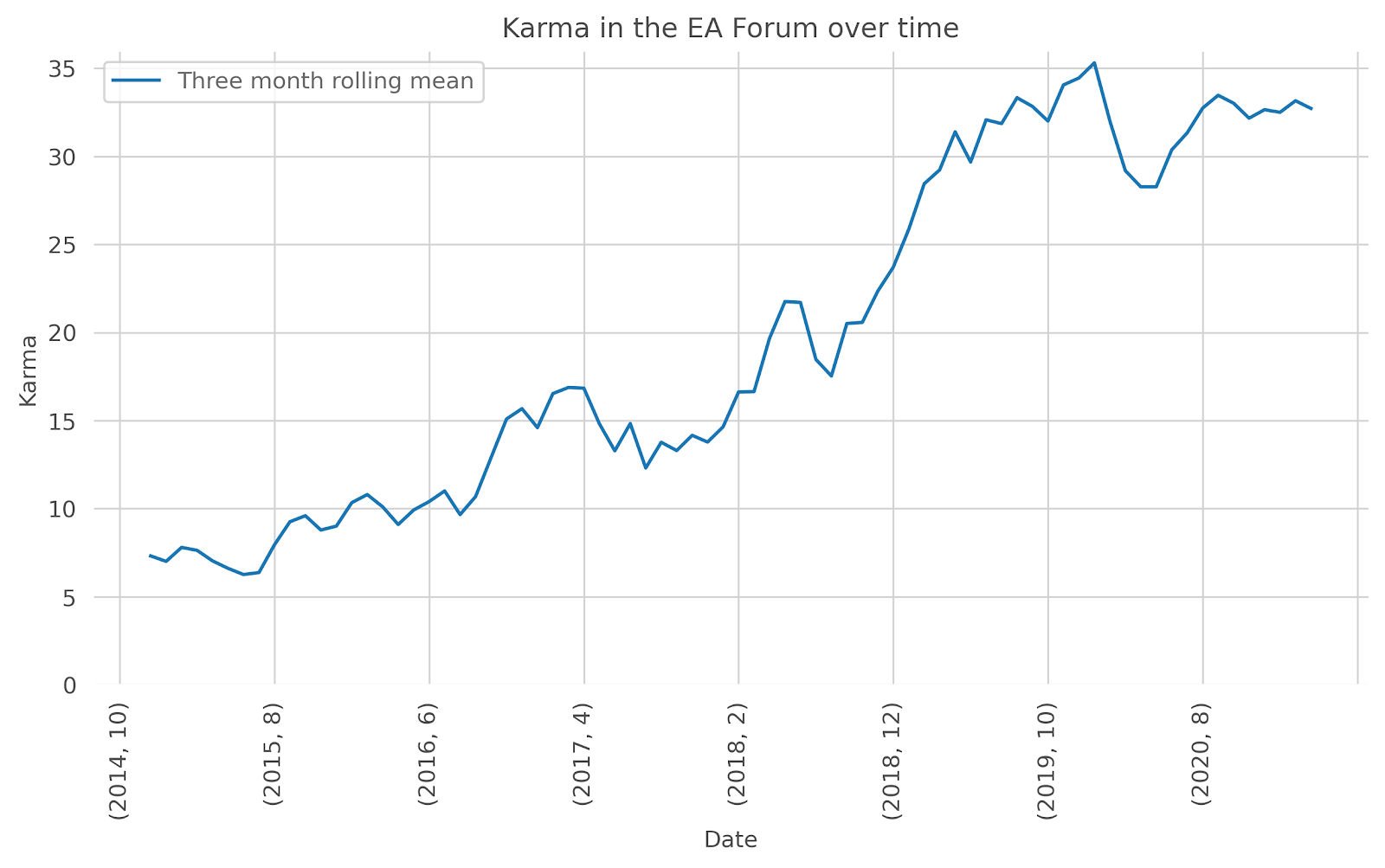

I got the metadata of the posts by sending a query through GraphiQL. Unfortunately, the query apparently maxes out at 5000 posts, which also seems to be roughly the total number of posts on the forum. It is therefore likely that some random posts were excluded from this analysis, but the overall trend should be right. Still, I would be interested in how to circumvent this limit for future analyses. After converting the query result into a usable format, I calculated the average monthly karma of posts (you can find the data and code used here). Plotting the average monthly karma shows a steady increase over time. Interestingly, it seems to have stagnated somewhat over the last two years or so. This might partly be explained by the growing number of posts: 2020 saw more than three times as many posts as 2018.
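A minimal sketch of the monthly averaging step, assuming the query result has already been converted into a list of dicts. The field names `postedAt` (an ISO-8601 timestamp) and `baseScore` (the post's karma) are assumptions for illustration, not necessarily the exact shape of the response used for this analysis.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def monthly_mean_karma(posts):
    """Return {"YYYY-MM": mean karma} for a list of post dicts.

    Assumes each dict has a "postedAt" ISO-8601 string and a numeric
    "baseScore" (hypothetical field names for this sketch).
    """
    karma_by_month = defaultdict(list)
    for post in posts:
        # Older Python versions reject a trailing "Z", so rewrite it as an explicit UTC offset.
        posted = datetime.fromisoformat(post["postedAt"].replace("Z", "+00:00"))
        karma_by_month[posted.strftime("%Y-%m")].append(post["baseScore"])
    # Sort by month so the result can be plotted directly as a time series.
    return {month: mean(scores) for month, scores in sorted(karma_by_month.items())}


posts = [
    {"postedAt": "2018-12-03T10:00:00.000Z", "baseScore": 10},
    {"postedAt": "2018-12-20T08:30:00.000Z", "baseScore": 30},
    {"postedAt": "2019-01-05T12:00:00.000Z", "baseScore": 7},
]
print(monthly_mean_karma(posts))
```

Grouping by a "YYYY-MM" string keeps months sortable and makes them easy to use as plot labels.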

We can use this mean monthly karma to scale every post by dividing its karma by the mean karma of the month in which it was published. This lets us determine which posts received the most praise relative to how active the forum was when they were published. Here are the top 15 posts on the EA Forum from 2014 to March 2021 according to this metric:

- 15. Why I'm concerned about Giving Green

- 14. EA Hotel with free accommodation and board for two years

- 13. Six Ways To Get Along With People Who Are Totally Wrong*

- 12. EA Diversity: Unpacking Pandora's Box

- 11. The Privilege of Earning To Give

- 10. The case of the missing cause prioritisation research

- 9. Problem areas beyond 80,000 Hours' current priorities

- 8. Introducing Probably Good: A New Career Guidance Organization

- 7. Lessons from my time in Effective Altruism

- 6. Reducing long-term risks from malevolent actors

- 5. EAF’s ballot initiative doubled Zurich’s development aid

- 4. After one year of applying for EA jobs: It is really, really hard to get hired by an EA organisation

- 3. My mistakes on the path to impact

- 2. Growth and the case against randomista development

- 1. Effective Altruism is a Question (not an ideology)
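The scaling and ranking step described before the list can be sketched as follows. This is a minimal illustration, assuming post dicts with hypothetical `title`, `postedAt` (ISO-8601, so its first seven characters are "YYYY-MM") and `baseScore` fields; it is not the code from the linked repository.

```python
from collections import defaultdict
from statistics import mean


def relative_karma_ranking(posts, top_n=15):
    """Rank posts by karma divided by the mean karma of their publication month.

    Field names "title", "postedAt" and "baseScore" are hypothetical.
    """
    karma_by_month = defaultdict(list)
    for post in posts:
        karma_by_month[post["postedAt"][:7]].append(post["baseScore"])
    month_mean = {month: mean(scores) for month, scores in karma_by_month.items()}

    scored = []
    for post in posts:
        baseline = month_mean[post["postedAt"][:7]]
        # Skip months whose mean karma is zero or negative; the ratio is meaningless there.
        if baseline > 0:
            scored.append((post["title"], post["baseScore"] / baseline))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


posts = [
    {"title": "A", "postedAt": "2018-12-03T10:00:00.000Z", "baseScore": 10},
    {"title": "B", "postedAt": "2018-12-20T08:30:00.000Z", "baseScore": 30},
    {"title": "C", "postedAt": "2019-01-05T12:00:00.000Z", "baseScore": 7},
]
print(relative_karma_ranking(posts))  # [('B', 1.5), ('C', 1.0), ('A', 0.5)]
```

A post with karma 30 in a month averaging 20 scores 1.5, so it outranks a post that merely matches its month's average, even if that post's raw karma is similar.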

At first glance this list looks quite similar to the one you get by simply sorting the forum by top posts. However, the post that was best received relative to forum activity at the time is from 2014, and posts 11, 12 and 13 are all from 2015. Still, most of the best-received posts are from 2020 and 2021. This is especially interesting because the earlier figure showed that average post karma stagnated over the same period. Apparently, as the forum keeps growing, we are getting both more really good posts and more really unremarkable ones.

Controversy

Since I had already extracted all the data, I thought it would also be interesting to look at how controversial posts on the EA Forum are. I calculated controversy the same way Reddit does:

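$$
\text{controversy}(u, d) =
\begin{cases}
0 & \text{if } u = 0 \text{ or } d = 0,\\
(u + d)^{d/u} & \text{if } u > d,\\
(u + d)^{u/d} & \text{else,}
\end{cases}
$$

where $u$ is the number of upvotes and $d$ the number of downvotes.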

This means a post is more controversial when a) it has many votes overall and b) those votes are split more evenly between upvotes and downvotes.
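A minimal Python sketch of the Reddit-style controversy score described above. It takes up- and downvote counts as plain integers; how those counts are obtained from the forum data is not shown in the post and is left out here.

```python
def controversy(ups: int, downs: int) -> float:
    """Reddit-style controversy: total votes raised to the (smaller / larger) vote ratio."""
    # Posts with only upvotes or only downvotes are defined as not controversial at all.
    if ups <= 0 or downs <= 0:
        return 0.0
    magnitude = ups + downs
    # The balance is always <= 1 and reaches 1 only for an exact up/down split.
    balance = downs / ups if ups > downs else ups / downs
    return magnitude ** balance


print(round(controversy(50, 10), 2))  # 2.27
print(controversy(10, 10))            # 20.0
```

Because the exponent is a ratio below 1, a lopsided vote (50 up, 1 down) scores barely above 1, while a perfectly split vote scores the full vote count.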

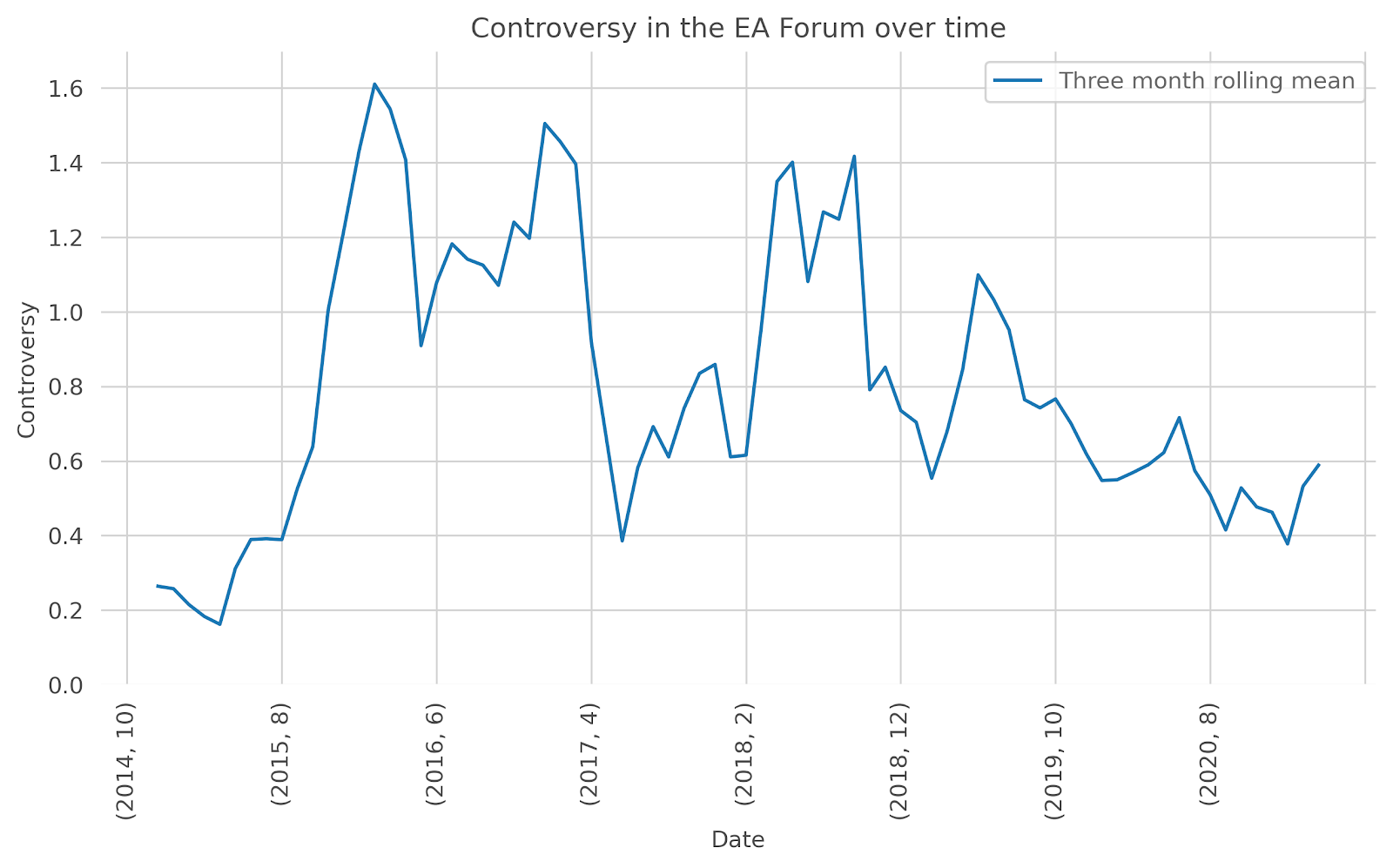

The controversy score on the EA Forum is quite low, as most posts are either well received or don’t get many votes at all. The results show several distinct spikes in the controversy of the average post in 2016 and 2018. After that, controversy decreased continuously. As I only really started engaging with EA in 2018, I do not have a good overview of what might have caused these spikes. Maybe people who have been around for longer can shed some light on this.

This analysis also gave me a list of the most controversial posts on the EA Forum. I do not link them here directly, as those posts already created a lot of discussion and it might be counterproductive to restart it. However, they might be valuable to some, and you can PM me if you want the list (or look in the repository I linked earlier). Many of those posts deal with sensitive issues like criticizing organizations, norms or individual people, which are hard to handle in a way that only few people will find disagreeable. Before looking at the list, I would have expected common partisan topics to be the most controversial. I am not sure what to make of this. Maybe it is just natural for any community that critique is hard to swallow, or maybe those critiques were not written carefully enough (I have not read most of them yet). The latter seems plausible, since several of the best-received posts on the forum criticize ideas and institutions as well.

Overall, I think we can gain some interesting insights by taking a deeper look at the forum's metadata. The apparent decrease in controversy over time seems especially relevant to me, and I am curious whether this trend will continue.

Thanks to Magdalena Wache, Max Räuker and Ekaterina Illin for providing feedback on this post.

Thanks for this, pretty interesting analysis.

The other thing going on here is that the karma system got an overhaul when forum 2.0 launched in late 2018, giving some users 2x voting power and also introducing strong upvotes. Before that, one vote was one karma. I don't remember exactly when the new system came in, but I'd guess this is the cause of the sharp rise on your graph around December 2018. AFAIK, old votes were never re-weighted, which is why if you go back through comments on old posts you'll see a lot of things with e.g. +13 karma and 13 total votes, a pattern I don't recall ever seeing since.

Partly as a result, most of the karma that old posts have will have come from people going back and upvoting them after the new system was implemented. For example, from memory, my post on your list was around +10 for most of its life and has drifted to its current +59 over the past couple of years.

This jumps out to me because I'm pretty sure that post was not a particularly high-engagement post even at the time it was written, but it's the second-highest 2015 post on your list. I think this is because it's been linked back to a fair amount and so can partially benefit from the karma inflation.

(None of which is meant to take away from the work you've done here, just providing some possibly-helpful context.)
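The "+13 karma and 13 total votes" pattern mentioned in this comment can be checked mechanically. A rough heuristic sketch, assuming hypothetical `title`, `baseScore` and `voteCount` fields: if karma equals the vote count, every vote was worth exactly +1, which fits the old one-vote-one-karma system. Under weighted voting this can still happen by chance when only low-weight users vote, so the sketch ignores posts with very few votes.

```python
def flag_unweighted_vote_pattern(posts, min_votes=5):
    """Return titles whose karma equals their vote count (hypothetical field names).

    Heuristic only: karma == votes means every vote counted as exactly +1,
    which is expected under the pre-overhaul system but unusual afterwards.
    """
    return [
        post["title"]
        for post in posts
        if post["voteCount"] >= min_votes and post["baseScore"] == post["voteCount"]
    ]


posts = [
    {"title": "old post", "voteCount": 13, "baseScore": 13},
    {"title": "new post", "voteCount": 13, "baseScore": 41},
    {"title": "tiny post", "voteCount": 2, "baseScore": 2},
]
print(flag_unweighted_vote_pattern(posts))  # ['old post']
```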

For what it's worth, I generally downvote a post only when I think "This post should not have been written in the first place", and relatedly I will often upvote posts I disagree with.

If that's typical, then the "controversial" posts you found may be "the most meta-level controversial" rather than "the most object-level controversial", if you know what I mean.

That's still interesting though.

The EA Forum could perhaps fairly easily collect some data on this by showing a prompt after a random subset of upvotes and downvotes across the user population, asking the voter for the reason behind their vote. Obviously this would need to be balanced against causing too much friction for users.

This is really cool, thank you! :-)

One thought: it is much easier now than it used to be to look at highly upvoted posts. On the old forum, popular old posts simply fell by the wayside; now you can sort by them. We also now have the favourites section, which encourages people to read highly upvoted posts they haven't read yet.

So I think highly popular posts now look more popular than they really are compared to the past even according to your metric.

Also, I'm proud to say I guessed the most well received forum post according to your metric correctly!

You can query by year, and then aggregate the years. From a past project, in nodejs:
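Independently of that snippet, here is a minimal Python sketch of the same idea: split the date range into calendar years, fetch each year with its own query so that no single request hits the 5000-post cap, then merge the results. `fetch_window(after, before)` is a hypothetical stand-in for whatever function sends the GraphQL query for one date range; its name and the date-filter arguments it would pass are assumptions, not the forum API's documented interface.

```python
from datetime import date


def yearly_windows(first_year, last_year):
    """Yield (after, before) ISO date strings, one pair per calendar year."""
    for year in range(first_year, last_year + 1):
        yield date(year, 1, 1).isoformat(), date(year + 1, 1, 1).isoformat()


def fetch_all_posts(fetch_window, first_year, last_year):
    """Call the hypothetical fetch_window(after, before) once per year and merge.

    Posts are keyed by their "_id" (an assumed field) so that a post returned
    by two overlapping windows is only kept once.
    """
    merged = {}
    for after, before in yearly_windows(first_year, last_year):
        for post in fetch_window(after, before):
            merged[post["_id"]] = post
    return list(merged.values())


def fake_fetch(after, before):
    # Offline stand-in for a real API call: one post per year plus a duplicate.
    return [{"_id": after[:4]}, {"_id": "dup"}]


print(sorted(p["_id"] for p in fetch_all_posts(fake_fetch, 2014, 2016)))
```

If a single year still exceeds the cap, the same approach works with monthly windows instead.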

Neat, thank you!

Thanks for this.

Without having the data, it seems the controversy graph could be driven substantially by posts which get exactly zero downvotes.

Almost all posts get at least one vote (magnitude >= 1), and balance>=0, so magnitude^balance >=1. Since the controversy graph goes below 1, I assume you are including the handling which sets controversy to zero if there are zero downvotes, per the Reddit code you linked to.

e.g. if a post has 50 upvotes:

0 downvotes --> controversy 0 (not 1.00)

1 downvote --> controversy 1.08

2 downvotes --> controversy 1.17

10 downvotes --> controversy 2.27

so a lot of the action is in whether a post gets 0 downvotes or at least 1, and we know a lot of posts get 0 downvotes because the graph is often below 1.

If this is a major contributor, the spikes would look different if you run the same calculation without the handling (or, equivalently, with the override being to 1 instead of 0). This discontinuity also makes me suspect that Reddit uses this calculation for ordering only, not as a cardinal measure -- or that zero downvotes is an edge case on Reddit!
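The sensitivity described here can be made explicit by turning the zero-downvote override into a parameter. A minimal sketch: `zero_value=0.0` reproduces the special case from the Reddit formula, while `zero_value=1.0` matches what magnitude ** balance gives when exactly one side has no votes, since the balance is then 0. The parameter name is hypothetical.

```python
def controversy(ups: int, downs: int, zero_value: float = 0.0) -> float:
    """Reddit-style controversy with a configurable value for one-sided votes."""
    if ups <= 0 or downs <= 0:
        # 0.0 mirrors Reddit's special case; 1.0 mirrors the formula without it.
        return zero_value
    magnitude = ups + downs
    balance = downs / ups if ups > downs else ups / downs
    return magnitude ** balance


# Reproduce the 50-upvote example under both conventions.
for downs in (0, 1, 2, 10):
    print(downs, round(controversy(50, downs), 2), round(controversy(50, downs, zero_value=1.0), 2))
# prints rows: 0 0.0 1.0 / 1 1.08 1.08 / 2 1.17 1.17 / 10 2.27 2.27
```

Only the zero-downvote row differs, which is why a month with many unanimous posts can pull the average below 1 under the default convention.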

That's a valid point. Here's the controversy graph if you exclude all posts that don't have any downvotes:

The overall trend seems similar, though. And it makes me even more curious about what happened in 2018 that sparked so much controversy^^
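Computing the monthly averages with and without the zero-downvote posts can be sketched as below. This assumes hypothetical post dicts with `postedAt` (ISO-8601), `upvotes` and `downvotes` fields; the post does not say how the up- and downvote counts were obtained.

```python
from collections import defaultdict
from statistics import mean


def controversy(ups: int, downs: int) -> float:
    """Reddit-style controversy score (0 when either side has no votes)."""
    if ups <= 0 or downs <= 0:
        return 0.0
    balance = downs / ups if ups > downs else ups / downs
    return (ups + downs) ** balance


def monthly_mean_controversy(posts, exclude_zero_downvotes=False):
    """Average controversy per "YYYY-MM", optionally dropping posts with no downvotes."""
    by_month = defaultdict(list)
    for post in posts:
        if exclude_zero_downvotes and post["downvotes"] == 0:
            continue
        by_month[post["postedAt"][:7]].append(controversy(post["upvotes"], post["downvotes"]))
    # Months where every post was excluded simply do not appear in the result.
    return {month: mean(values) for month, values in sorted(by_month.items())}


posts = [
    {"postedAt": "2018-12-03T10:00:00.000Z", "upvotes": 50, "downvotes": 0},
    {"postedAt": "2018-12-20T08:30:00.000Z", "upvotes": 50, "downvotes": 10},
    {"postedAt": "2019-01-05T12:00:00.000Z", "upvotes": 5, "downvotes": 0},
]
print(monthly_mean_controversy(posts))
print(monthly_mean_controversy(posts, exclude_zero_downvotes=True))
```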

First of all, thanks for posting this. It's interesting to see some analysis on this topic; I actually thought about it just yesterday when looking at an old post. I don't know whether you have already tried this, or whether it is even possible, but I think an interesting metric would be something like "number of people who upvoted (or downvoted?) divided by number of unique people who viewed the article". I doubt that would perfectly fix the underrepresentation of votes on old posts (for example, if there are any chronological-snobbery or "old news = boring news" biases).

Is it possible to see how many unique users have viewed an article?

You can ask the API for "viewCount". However, it seems to always return "null". Not sure if this means that you aren't allowed to query for this or if the problem is just me not getting the queries right^^

Yep, it's an admin-only property. Sorry for the confusion!

Just out of curiosity: Why is this admin-only?

Worried about goodharting effects. I expect authors and others would start using number of views as a quality signal and start optimizing towards more views. But I, having access to that signal, am confident it really very much isn't a good quality signal, and if LW and the EA Forum had a gradient that would incrementally just push towards more of the posts that get a lot of views, this would really destroy a lot of the value of a lot of posts.

Makes sense. Thanks for the detailed answer.

Thanks for writing this. The link to the Github repository returns a 404 error. Maybe it's not set to public?

That seems to have been the case. Sorry about that. Does it work now?

Yes, thanks!

Great. Thanks :)

Not sure how much it matters, but if you weight vote balances by forum activity during month of publication, you aren't controlling for votes outside month of publication. This means that older posts that have received a second wind of upvotes will be ranked higher.
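Controlling for votes cast outside the month of publication would require per-vote timestamps, which the analysis above does not use and which may not be publicly available. Assuming such a hypothetical list of timestamps existed, a minimal sketch of counting only the votes cast shortly after publication:

```python
from datetime import datetime, timedelta


def votes_within_window(posted_at, vote_times, days=30):
    """Count votes cast within `days` after publication.

    vote_times is a hypothetical list of per-vote datetimes; the analysis
    above only works with current karma totals.
    """
    cutoff = posted_at + timedelta(days=days)
    return sum(1 for voted_at in vote_times if posted_at <= voted_at < cutoff)


posted = datetime(2015, 3, 1)
votes = [datetime(2015, 3, 2), datetime(2015, 3, 20), datetime(2019, 6, 1)]
print(votes_within_window(posted, votes))  # 2
```

With weighted voting, summing each vote's karma value inside the window would be the closer analogue of karma; a plain count keeps the sketch simple.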

I expect that the variance has fluctuated over time. If so, something like the number of standard deviations away from the monthly mean would be significantly more informative than simply scaling by the mean.
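A minimal sketch of the suggested alternative: a per-month z-score, i.e. how many standard deviations a post's karma lies above or below its month's mean. It assumes hypothetical `title`, `postedAt` and `baseScore` fields and uses the population standard deviation; a month with a single post (or identical scores) has no spread, so its z-score is left undefined.

```python
from collections import defaultdict
from statistics import mean, pstdev


def monthly_z_scores(posts):
    """Return [(title, z)] where z = (karma - month mean) / month std dev."""
    by_month = defaultdict(list)
    for post in posts:
        by_month[post["postedAt"][:7]].append(post["baseScore"])
    stats = {month: (mean(s), pstdev(s)) for month, s in by_month.items()}

    result = []
    for post in posts:
        mu, sigma = stats[post["postedAt"][:7]]
        # Zero spread means the z-score is undefined; report None instead of dividing by zero.
        z = (post["baseScore"] - mu) / sigma if sigma > 0 else None
        result.append((post["title"], z))
    return result


posts = [
    {"title": "A", "postedAt": "2018-12-03T10:00:00.000Z", "baseScore": 10},
    {"title": "B", "postedAt": "2018-12-20T08:30:00.000Z", "baseScore": 30},
    {"title": "C", "postedAt": "2019-01-05T12:00:00.000Z", "baseScore": 7},
]
print(monthly_z_scores(posts))  # [('A', -1.0), ('B', 1.0), ('C', None)]
```

Unlike the ratio to the mean, this score shrinks a post's advantage in months where karma was widely spread and enlarges it in months where most posts scored alike.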

FWIW, I also expect that the point made in this comment (https://forum.effectivealtruism.org/posts/ngdvhPQ2NaLHCpG9T/getting-a-feel-for-changes-of-karma-and-controversy-in-the?commentId=fcEudfKQDzgZsnhhW) is valid, and therefore it may be ~impossible to understand reception at the time (unless the timing of votes is preserved).