How should we expect AI to unfold over the coming decades? In this article, I explain and defend a compute-based framework for thinking about AI automation. This framework makes the following claims, which I defend throughout the article:

- The most salient impact of AI will be its ability to automate labor, which is likely to trigger a productivity explosion later this century, greatly altering the course of history.

- The availability of useful compute is the most important factor that determines progress in AI, a trend which will likely continue into the foreseeable future.

- AI performance is likely to become relatively predictable on most important, general measures of performance, at least when predicting over short time horizons.

While none of these ideas are new, my goal is to provide a single article that articulates and defends the framework as a cohesive whole. In doing so, I present the perspective that Epoch researchers find most illuminating about the future of AI. Using this framework, I will justify a value of 50% for the probability of Transformative AI (TAI)[1] arriving before 2043.

Summary

The post is structured as follows.

In part one, I will argue that what matters most is when AI will be able to automate a wide variety of tasks in the economy. The importance of this milestone is substantiated by simple models of the economy that predict AI could greatly accelerate the world economic growth rate, dramatically changing our world.

In part two, I will argue that availability of data is less important than compute for explaining progress in AI, and that compute may even play an important role driving algorithmic progress.

In part three, I will argue against a commonly held view that AI progress is inherently unpredictable, providing reasons to think that AI capabilities may be anticipated in advance.

Finally, in part four, I will conclude by using the framework to build a probability distribution over the date of arrival for transformative AI.[1]

Part 1: Widespread automation from AI

When discussing AI timelines, it is often taken for granted that the relevant milestone is the development of Artificial General Intelligence (AGI), or a software system that can do or learn “everything that a human can do.” However, this definition is vague. For instance, it's unclear whether the system needs to surpass all humans, some upper decile, or the median human.

Perhaps more importantly, it’s not immediately obvious why we should care about the arrival of a single software system with certain properties. Plausibly, a set of narrow software programs could drastically change the world before the arrival of any monolithic AGI system (Drexler, 2019). In general, it seems more useful to characterize AI timelines in terms of the impacts AI will have on the world. But, that still leaves open the question of what impacts we should expect AI to have and how we can measure those impacts.

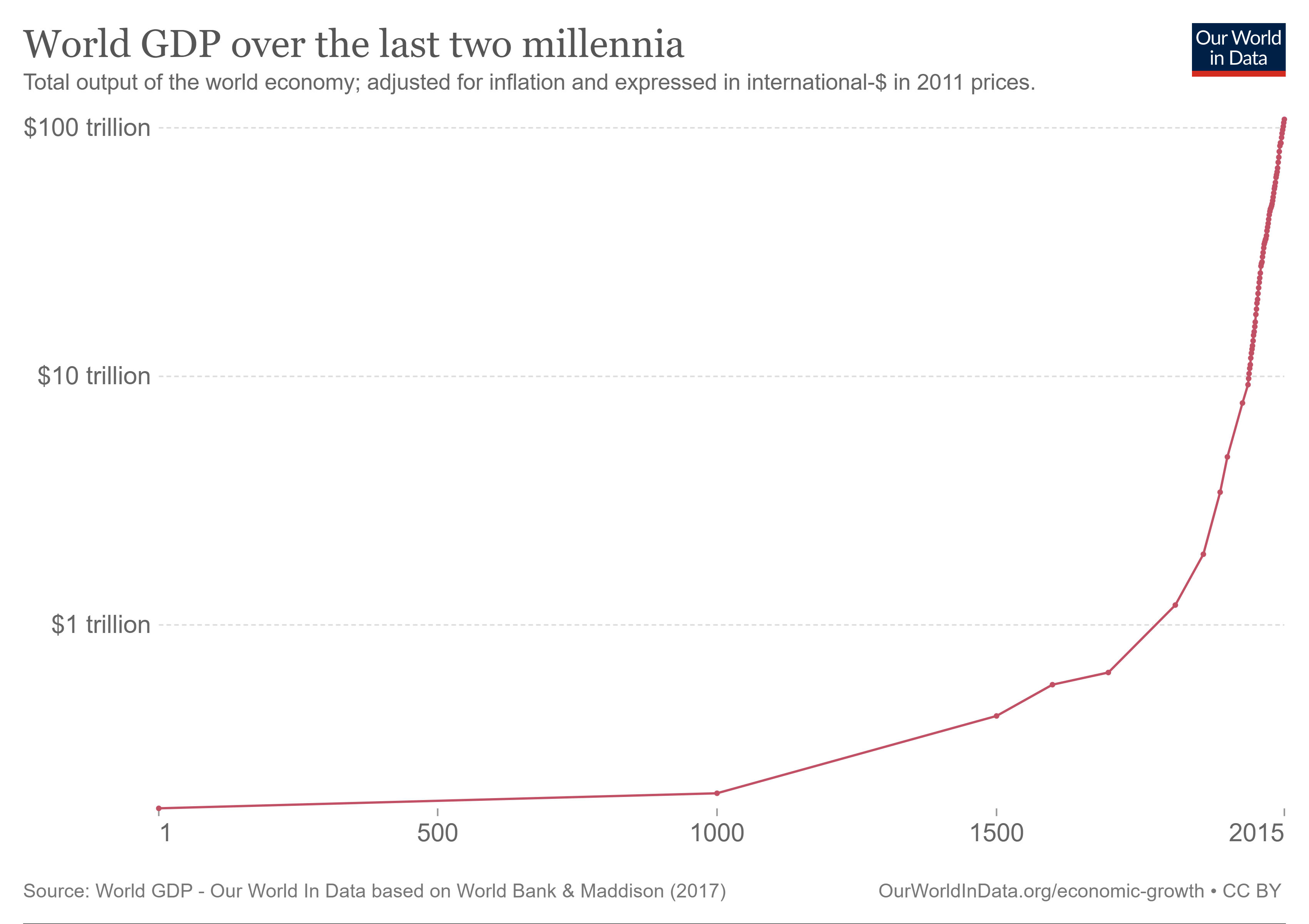

As a starting point, it seems that automating labor is likely to be the driving force behind developing AI, providing huge and direct financial incentives for AI companies to develop the technology. The productivity explosion hypothesis says that if AI can automate the majority of important tasks in the economy, then a dramatic economic expansion will follow, increasing the rate of technological, scientific, and economic growth by at least an order of magnitude above its current rate (Davidson, 2021).

A productivity explosion is a robust implication of simple models of economic growth, which helps explain why the topic is so important to study. What's striking is that the productivity explosion thesis appears to follow naturally from some standard assumptions made in the field of economic growth theory, combined with the assumption that AI can substitute for human workers. I will illustrate this idea in the following section.[2]

But first, it is worth contrasting this general automation story with alternative visions of the future of AI. A widely influential scenario is the hard takeoff scenario as described by Eliezer Yudkowsky and Nick Bostrom (Yudkowsky 2013, Bostrom 2014). In this scenario, the impacts of AI take the form of a localized takeoff in which a single AI system becomes vastly more powerful than the rest of the world combined; moreover, this takeoff is often predicted to happen so quickly that the broader world either does not notice it occurring until it's too late, or the AI cannot otherwise be stopped. This scenario is inherently risky because the AI could afterwards irrevocably impose its will on the world, which could be catastrophic if the AI is misaligned with human values.

However, Epoch researchers tend to be skeptical of this specific scenario. Many credible arguments have been given against the hard takeoff scenario (e.g., Christiano 2018, Grace 2018). We don't think these objections rule out the possibility, but they make us think that a hard takeoff is not the default outcome (<20% probability). Instead of re-hashing the debate, I'll provide just a few points that we think are important to better understand our views.

Perhaps the most salient reason to expect a hard takeoff comes from the notion of recursive self-improvement, in which an AI can make improvements to itself, causing it to become even better at self-improvement, and so on (Good 1965). However, while the idea of accelerating change resulting from AIs improving AIs seems likely to us, we don't think there are strong reasons to believe that this recursive improvement will be unusually localized in space. Rather than a single AI agent improving itself, we think this acceleration will probably be more diffuse, and take the form of AIs accelerating R&D in a general sense. We can call this more general phenomenon recursive technological improvement, to distinguish it from the narrow case of recursive self-improvement, in which a single AI "fooms" upwards in capabilities, very quickly outstripping the rest of the world combined (e.g. in a matter of weeks).

There are many reasons for thinking that AI impacts will be diffuse rather than highly concentrated. Perhaps most significantly, if the bottleneck to AI progress is mostly compute rather than raw innovative talent, it seems much harder for initially-powerless AI systems to explode upwards in intelligence without having already taken over the semiconductor industry. Later, in part two, I will support this premise by arguing that there are some prima facie reasons to think that compute is the most important driver of AI progress.

Simple models of explosive growth

In the absence of technological innovation, we might reasonably expect output to scale proportionally to increases in inputs. For example, if you were to double the number of workers, double the number of tools, buildings, and roads that the workers rely on, and so on, then outputs would double as well. That's what it would mean for returns to scale to be constant.

However, doubling the number of workers also increases the rate of idea production, and thus innovation. This fact is important because of a key insight — often attributed to Paul Romer — that ideas are non-rivalrous. The non-rivalry of ideas means that one person can use an idea without impinging on someone else's use of that idea. For example, your use of the chain rule doesn't prevent other people from using the chain rule. This means that doubling the inputs to production should be expected to more than double output, as workers adopt innovations created by others. Surprisingly, this effect is very large even under realistic assumptions in which new ideas get much harder to find over time (Erdil and Besiroglu 2023 [link forthcoming]).
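The difference between constant and increasing returns can be made concrete with a minimal sketch. It assumes a Cobb-Douglas production function, which is a common textbook choice and not something specified above; the capital share `alpha` and the 20% rise in the technology term `tfp` are purely illustrative values.

```python
def output(tfp, capital, labor, alpha=0.3):
    """Cobb-Douglas output: tfp * capital^alpha * labor^(1 - alpha).

    The functional form and alpha are illustrative assumptions.
    """
    return tfp * capital**alpha * labor ** (1 - alpha)


base = output(tfp=1.0, capital=100.0, labor=100.0)

# Doubling capital and labor with technology held fixed: constant returns.
doubled_inputs = output(tfp=1.0, capital=200.0, labor=200.0)

# Doubling inputs when the extra workers also raise the shared stock of
# ideas (here by a hypothetical 20%): increasing returns.
doubled_with_ideas = output(tfp=1.2, capital=200.0, labor=200.0)

ratio_constant = doubled_inputs / base        # about 2.0
ratio_increasing = doubled_with_ideas / base  # about 2.4

print(f"fixed ideas: x{ratio_constant:.2f}, growing ideas: x{ratio_increasing:.2f}")
```

Because ideas are non-rivalrous, the technology term applies to every worker at once, which is why any growth in it pushes the output ratio above the input ratio.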

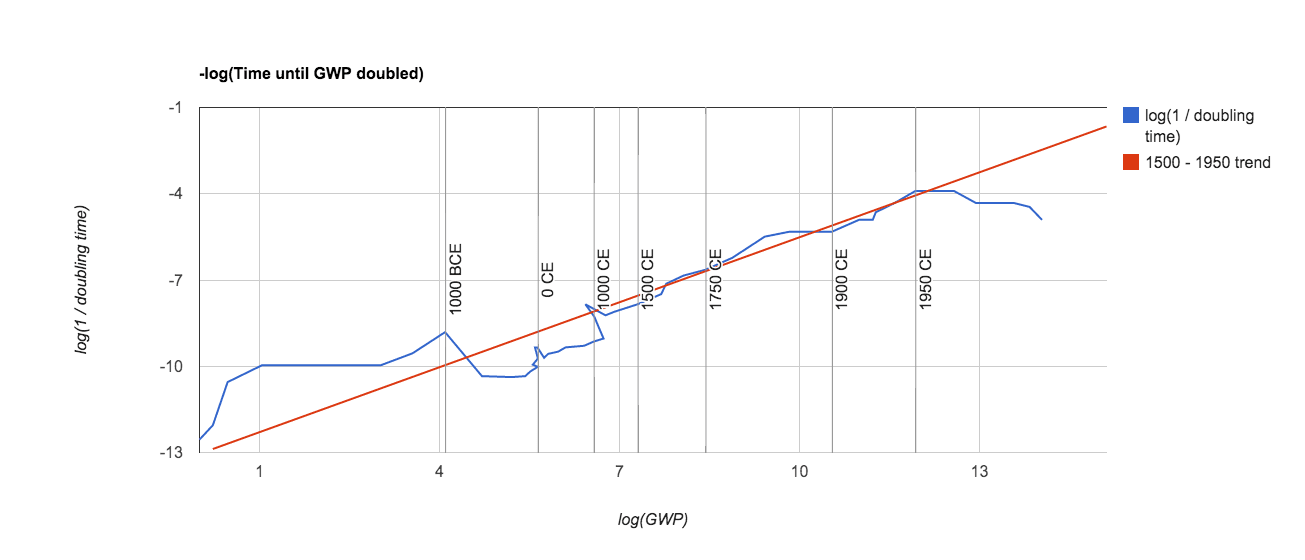

Given increasing returns to scale and the fact that inputs can accumulate and be continually reinvested over time, the semi-endogenous model of economic growth says that we should see a productivity explosion as a consequence of population growth in the long run, tending towards higher levels of economic growth over time.

This model appears credible because it offers a simple explanation for the accelerated growth in human history, while also providing a neat explanation for why this acceleration appeared to slow down sometime in the mid-20th century.

The slowdown we observed around the mid-20th century follows straightforwardly from the semi-endogenous model once the demographic transition is taken into account. The transition decoupled population growth from economic growth, resulting in declining fertility and, ultimately, declining rates of economic growth.

By contrast, since computing hardware manufacturing is not bound by the same constraints as human population growth, an AI workforce can expand very quickly — much faster than the time it takes to raise human children. Perhaps more importantly, software can be copied very cheaply. The 'population' of AI workers can therefore expand drastically, and innovate in the process, improving the performance of AIs at the same time their population expands.

It therefore seems likely that, unless we coordinate to deliberately slow AI-driven growth, the introduction of AIs that can substitute for human labor could drastically increase the growth rate of the economy, at least before physical bottlenecks prevent further acceleration, which may only happen at an extremely high level of growth by current standards.

Strikingly, we can rescue the conclusion of explosive growth even if we dispense with the assumption of technological innovation. Consider that Carlsmith (2020) estimated that the human brain uses roughly FLOP per second. If it became possible to design computer software that was as economically useful as the human brain per unit of FLOP, that would suggest we could expand the population of human-equivalent workers as quickly as we can manufacture new computing hardware. Assuming the current price of compute stays constant, at roughly FLOP/$, re-investing perhaps 45% of economic output into new computing hardware causes economic growth to exceed 30% in this simple model. Obviously, if FLOP prices decrease over time, this conclusion becomes even stronger.

Of course, knowing that advanced AI can eventually trigger explosive growth doesn't tell us much about when we should expect that to happen. To predict when this productivity explosion will happen, we'll need to first discuss the drivers of AI progress.

Part 2: A compute-centered theory of AI automation

There appear to be three main inputs to performance in the current AI paradigm of deep learning: algorithmic progress, compute, and data. Leaving aside algorithmic progress for now, I'll present a prima facie case that compute will ultimately be most important for explaining progress in the foreseeable future.

At the least, there seem to be strong reasons to think that growth in compute played a key role in sustaining AI progress in the past. Almost all of the most impressive AI systems in the last decade, such as AlphaGo Zero and GPT-4, were trained using an amount of compute that would have been prohibitively expensive in, say, 1980. Historically, many AI researchers believed that creating general AI would be more about coming up with the right theories of intelligence, but over and over again, researchers eventually found that impressive results only came after the price of computing fell far enough that simple, "blind" techniques began working (Sutton 2019).

In the last year, we’ve seen predictions that the total amount of data available on the internet could constrain AI progress in the near future. However, researchers at Epoch believe there are a number of strong reasons to doubt these predictions.

Most importantly, according to recent research on scaling laws, if compute is allocated optimally during training runs, we should expect parameter counts and training data to grow at roughly the same rate (Hoffmann et al. 2022). Since training compute is proportional to the number of parameters times the number of training tokens, this implies that data requirements will grow at roughly half the growth rate of compute. As a result, you should generally expect compute to be a greater bottleneck relative to data.
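As a rough sketch of this relationship, the snippet below splits a compute budget between parameters and tokens. It assumes the common approximation that training compute is about 6 × parameters × tokens, and a fixed ratio of roughly 20 tokens per parameter; both constants are assumptions added for illustration, and only the proportionality is taken from the text above.

```python
import math


def compute_optimal_allocation(compute_flop, tokens_per_param=20.0):
    """Split a compute budget into (parameters, training tokens).

    Assumes C = 6 * N * D and D = tokens_per_param * N, so that
    N = sqrt(C / (6 * tokens_per_param)). Both constants are
    illustrative assumptions.
    """
    params = math.sqrt(compute_flop / (6.0 * tokens_per_param))
    tokens = tokens_per_param * params
    return params, tokens


small_params, small_tokens = compute_optimal_allocation(1e24)
large_params, large_tokens = compute_optimal_allocation(1e26)

# A 100x increase in compute requires only about a 10x increase in data.
data_ratio = large_tokens / small_tokens
print(f"compute x100 -> data x{data_ratio:.1f}")
```

In log terms, data grows at half the rate of compute: two orders of magnitude more compute call for one order of magnitude more tokens.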

More detailed investigations have confirmed this finding, with Villalobos et al. 2022 estimating that there is enough low-quality internet data to sustain current trends in dataset size growth until at least 2030 and possibly until 2050. [3]

It is also possible that we can generate nearly unlimited synthetic data, borrowing compute to generate any necessary data (Huang et al. 2022, Eldan and Li 2023). Another possibility is that training on multi-modal data, such as video and images, could provide the necessary “synergy,” where performance on one modality is improved by training on the other and vice versa (Aghajanyan et al. 2023). This would allow models to be trained at much larger scales without being bottlenecked by limited access to textual data. This possibility is lent credibility by research showing that training on multiple modalities may be more beneficial for learning concepts than training on text alone, above a certain scale.

Broadly speaking, researchers at Epoch currently think that the total availability of data will not constrain general AI progress in the foreseeable future. Nonetheless, the idea of a general data bottleneck is important to distinguish from the idea that AI will be bottlenecked by data on particular tasks. While we don't seem likely to run out of internet data in the medium-term future, it seems relatively more likely that widespread automation will be bottlenecked by data on a subset of essential tasks that require training AI on scarce, high-quality data sources.

If we become bottlenecked by essential but hard-to-obtain data sources, then AI progress will be constrained until these bottlenecks are lifted, delaying the impacts of AI. Nonetheless, the possibility of a very long delay, such as one lasting 30 years or more, appears less plausible in light of recent developments in language models.

For context, in the foundation models paradigm, models are trained in two stages, consisting of a pre-training stage in which the model is trained on a vast, diverse corpus, and a fine-tuning stage in which the model is trained on a narrower task, leveraging its knowledge from the pre-training data (Bommasani et al. 2021). The downstream performance of foundation models seems to be well-described by a scaling law for transfer, as a function of pre-training data size, fine-tuning data size, and the transfer gap (Hernandez et al. 2021, Mikami et al. 2021). The transfer gap is defined as the maximum possible benefit of pre-training.

If the transfer gap from one task to another is small, then compute is usually more important for achieving high performance than fine-tuning data. That’s because, if the transfer gap is small, better performance can be efficiently achieved by simply training longer on the pre-training distribution with more capacity, and transferring more knowledge to the fine-tuning task. Empirically, it seems that the transfer gap between natural language tasks is relatively small since large foundation models like GPT-4 can obtain state-of-the-art performance on a wide variety of tasks despite minimal task-specific fine-tuning (OpenAI 2023).

Of course, the transfer gap between non-language tasks may be larger than what we’ve observed for language tasks. In particular, the transfer gap from simulation to reality in robotics may be large and hard to close, which many roboticists have claimed before (e.g., Weng 2019). However, there appear to be relatively few attempts to precisely quantify the size of the transfer gap in robotics, and how it's changing over time for various tasks (Paull 2020). Until we better understand the transfer gap between robotic tasks, it will be hard to make confident statements about what tasks might be limited more by data than compute.

That said, given the recent impressive results in language models, it is likely that the transfer gap between intellectual tasks is not prohibitively large. Therefore, at least for information-based labor, compute rather than data will be the more important bottleneck when automating tasks. Since nearly 40% of jobs in the United States can be performed entirely remotely, and these jobs are responsible for a disproportionate share of US GDP (Neiman et al. 2020), automating only purely intellectual tasks would plausibly increase growth in output many-fold, having a huge effect on the world.

What about algorithmic progress?

The above discussion paints an incomplete picture of AI progress, as it neglects the role of algorithmic progress. Algorithmic progress lowers the amount of training compute necessary to achieve a certain level of performance over time and, in at least the last decade, it’s been very rapid. For example, Erdil and Besiroglu 2022 estimated that the training compute required to reach a fixed level of performance on ImageNet has been cutting in half roughly every nine months, albeit with wide uncertainty over that value.
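To give a sense of scale, here is a minimal sketch of what a nine-month halving time implies if it is extrapolated at a constant rate. The constant-rate assumption is a simplification, and the estimate itself carries wide uncertainty.

```python
def compute_reduction_factor(years, halving_time_months=9.0):
    """Factor by which required training compute falls after `years`,
    assuming it halves every `halving_time_months` at a constant rate."""
    return 2.0 ** (12.0 * years / halving_time_months)


for years in (0.75, 3.0, 6.0):
    factor = compute_reduction_factor(years)
    print(f"after {years} years: {factor:.0f}x less compute for the same performance")
```

Under these assumptions, three years of algorithmic progress is worth a 16-fold increase in training compute.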

In fact, algorithmic progress has been found to be roughly as important as compute for explaining progress across a variety of different domains, such as Mixed-Integer Linear Programming, SAT solvers, and chess engines (Koch et al. 2022, Grace 2013). This is an interesting coincidence that can help shed light on the source of algorithmic progress. From a theoretical perspective, there appear to be at least three main explanations of where algorithmic progress ultimately comes from:

- Theoretical insights, which can be quickly adopted to improve performance.

- Insights whose adoption is enabled by scale, which only occurs after there's sufficient hardware progress. This could be because some algorithms don't work well on slower hardware, and only start working well once they're scaled up to a sufficient level, after which they can be widely adopted.

- Experimentation in new algorithms. For example, it could be that efficiently testing out all the reasonable choices for new potential algorithms requires a lot of compute.

Among these theories, (1) wouldn't help to explain the coincidence in rates mentioned earlier. However, as noted by, e.g., Hanson 2013, theories (2) and (3) have no problem explaining the coincidence since, in both cases, what ultimately drove progress in algorithms was progress in hardware. In that case, it appears that we once again have a prima facie case that compute is the most important factor for explaining progress.

Nonetheless, since this conclusion is speculative, I recommend we adopt it only tentatively. In general, there are still many remaining uncertainties about what drives algorithmic progress and even the rate at which it is occurring.

One important factor affecting our ability to measure algorithmic progress is the degree to which algorithmic progress on one task generalizes to other tasks. So far, much of our data on algorithmic progress in machine learning has been on ImageNet. However, there seem to be two ways of making algorithms more efficient on ImageNet. The first way is to invent more efficient learning algorithms that apply to general tasks. The second method is to develop task-specific methods that only narrowly produce progress on ImageNet.

We care more about the rate of general algorithmic progress, which in theory will be overestimated by measuring the rate of algorithmic progress on any specific narrow task. This consideration highlights one reason to think that estimates overstate algorithmic progress in a general sense.

Part 3: Predictability of AI performance

Even if compute is the ultimate driver of progress in machine learning, with algorithmic progress following in lockstep, in order to forecast when to expect tasks to be automated, we first need some way of mapping training compute to performance.

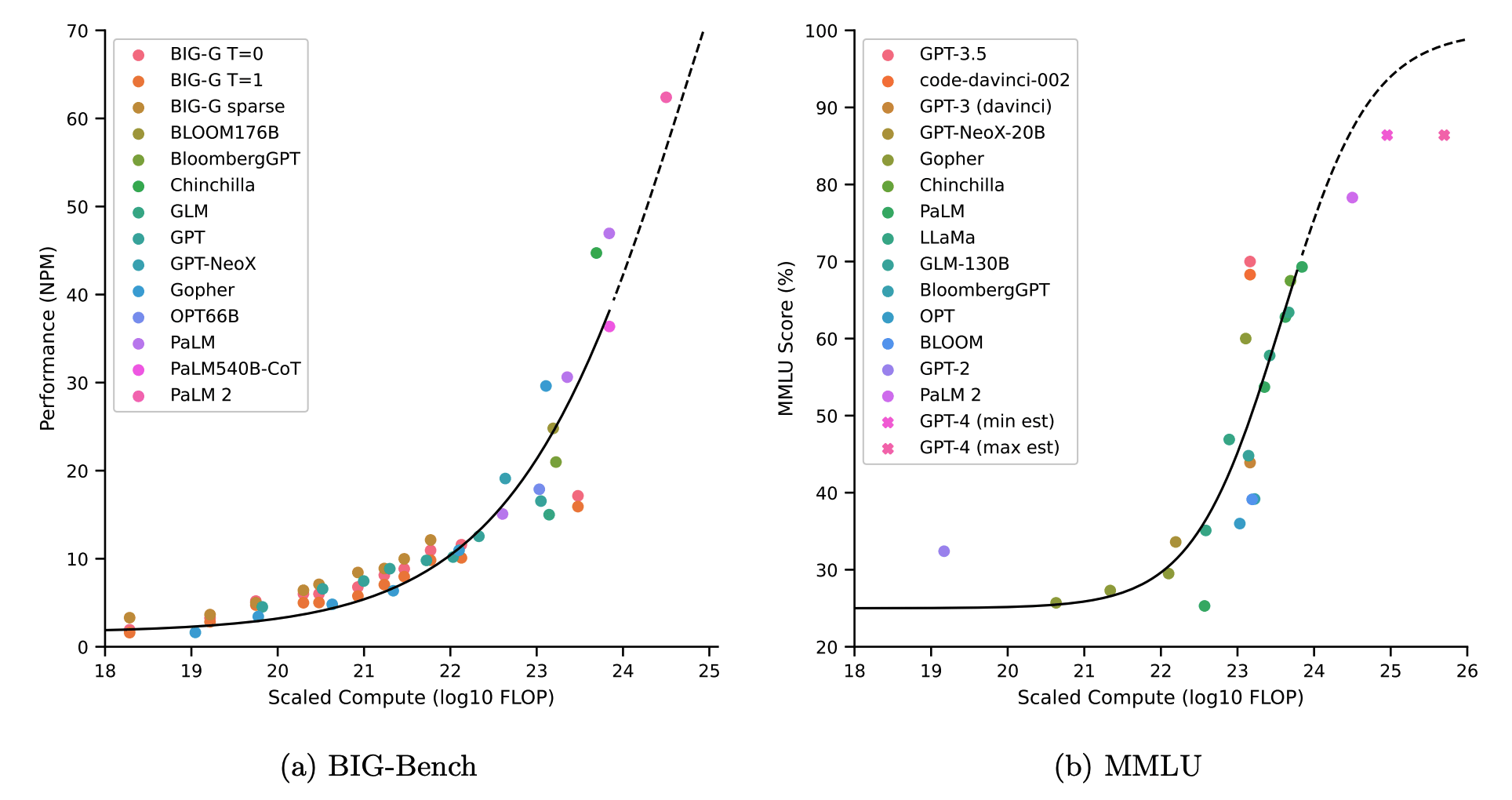

Fortunately, there are some reasons to think that such a method can be developed, and that claims that AI performance is inherently unpredictable as a function of scale are overstated. Recently, Owen 2023 [link forthcoming] found that for most tasks he considered, AI performance can be adequately retrodicted by taking into account upstream loss as predicted by neural scaling laws. This approach was unique because it leveraged more information than prior research, which mostly tried to retrodict performance as a function of model scale, leaving aside the role of training data size.

Perhaps the most significant barrier standing in the way of predicting model performance is the existence of emergent abilities, defined as abilities that suddenly appear at certain model scales and thus cannot be predicted by extrapolating performance from lower scales (Wei et al. 2022). There is currently an active debate about the prevalence and significance of emergent abilities, with some declaring that supposedly emergent abilities are mostly a mirage (Schaeffer et al. 2023).

Overgeneralizing a bit, at Epoch we are relatively more optimistic that AI performance is predictable, or at least will become fairly predictable in the near-term future. While this is a complex debate relying on a number of underexplored lines of research, we have identified some preliminary reasons for optimism.

Why predicting AI performance may be tractable

Many examples of emergence appear to result from the fact that performance on the task is inherently discontinuous. For example, there is a discrete difference between being unable to predict the next number in the sequence produced by the middle-square algorithm, and being able to predict the next number perfectly (which involves learning the algorithm). As a result, it is not surprising that models might learn this task suddenly at some scale.

However, most tasks that we care about automating, such as scientific reasoning, do not take this form. In particular, it seems plausible that for most important intellectual tasks, there is a smooth spectrum between "being able to reason about the subject at all" and "being able to reason about the subject perfectly." This model predicts that emergence will mostly occur on tasks that won't lead to sudden jumps in our ability to automate labor.

Moreover, economic value typically does not consist of doing only one thing well, but rather doing many complementary things well. It appears that emergence is most common on narrow tasks rather than general tasks, which makes sense if we view performance on general tasks as an average performance over a collection of narrow subtasks.

For example, Owen 2023 [link forthcoming] found that performance appeared to increase smoothly on both an average of BIG-bench and MMLU tasks as a function of scale — both of which included a broad variety of tasks requiring complex reasoning abilities to solve. These facts highlight that we may see very smooth increases in average performance over the collection of all tasks in the economy as a function of scale.
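The averaging argument can be illustrated with a toy simulation. Everything in it is hypothetical: each narrow task is modeled as a steep logistic curve that switches on at its own compute threshold, and the thresholds are spread evenly across six orders of magnitude. It is not based on BIG-bench or MMLU data.

```python
import math


def narrow_task_accuracy(log_compute, threshold, sharpness=50.0):
    """Toy 'emergent' task: accuracy jumps from ~0 to ~1 near `threshold`."""
    return 1.0 / (1.0 + math.exp(-sharpness * (log_compute - threshold)))


def general_accuracy(log_compute, thresholds):
    """Performance on a general task, modeled as the mean over narrow subtasks."""
    total = sum(narrow_task_accuracy(log_compute, t) for t in thresholds)
    return total / len(thresholds)


def largest_jump(values):
    """Largest increase between consecutive points on the curve."""
    return max(later - earlier for earlier, later in zip(values, values[1:]))


# log10(training FLOP) from 20 to 26 in steps of 0.1 (hypothetical range).
scales = [20.0 + 0.1 * i for i in range(61)]
# 101 narrow subtasks with evenly spread emergence thresholds.
thresholds = [20.5 + 0.05 * i for i in range(101)]

single_task = [narrow_task_accuracy(s, 23.0) for s in scales]
average_task = [general_accuracy(s, thresholds) for s in scales]

print(f"largest jump, single narrow task: {largest_jump(single_task):.2f}")
print(f"largest jump, average of subtasks: {largest_jump(average_task):.2f}")
```

Each subtask is individually abrupt, yet the average moves by only a small amount per step, since only a few subtasks switch on at any given scale.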

It is also important to note that additional information beyond training inputs can be incorporated to predict model performance. For example, Jason Wei points out that people have yet to fully explore using surrogate metrics to predict performance on primary metrics. [4]

Predicting performance via a theoretical model

Of course, even if AI performance is, in principle, predictable as a function of scale, we lack data on how AIs are currently improving on the vast majority of tasks in the economy, hindering our ability to predict when AI will be widely deployed. While we hope this data will eventually become available, for now, if we want to predict important AI capabilities, we are forced to think about this problem from a more theoretical point of view.

The "Direct Approach" is my name for a theoretical model that attempts to shed some light on the problem of predicting transformative AI. It seeks to complement other models, mainly Ajeya Cotra's biological anchors approach.

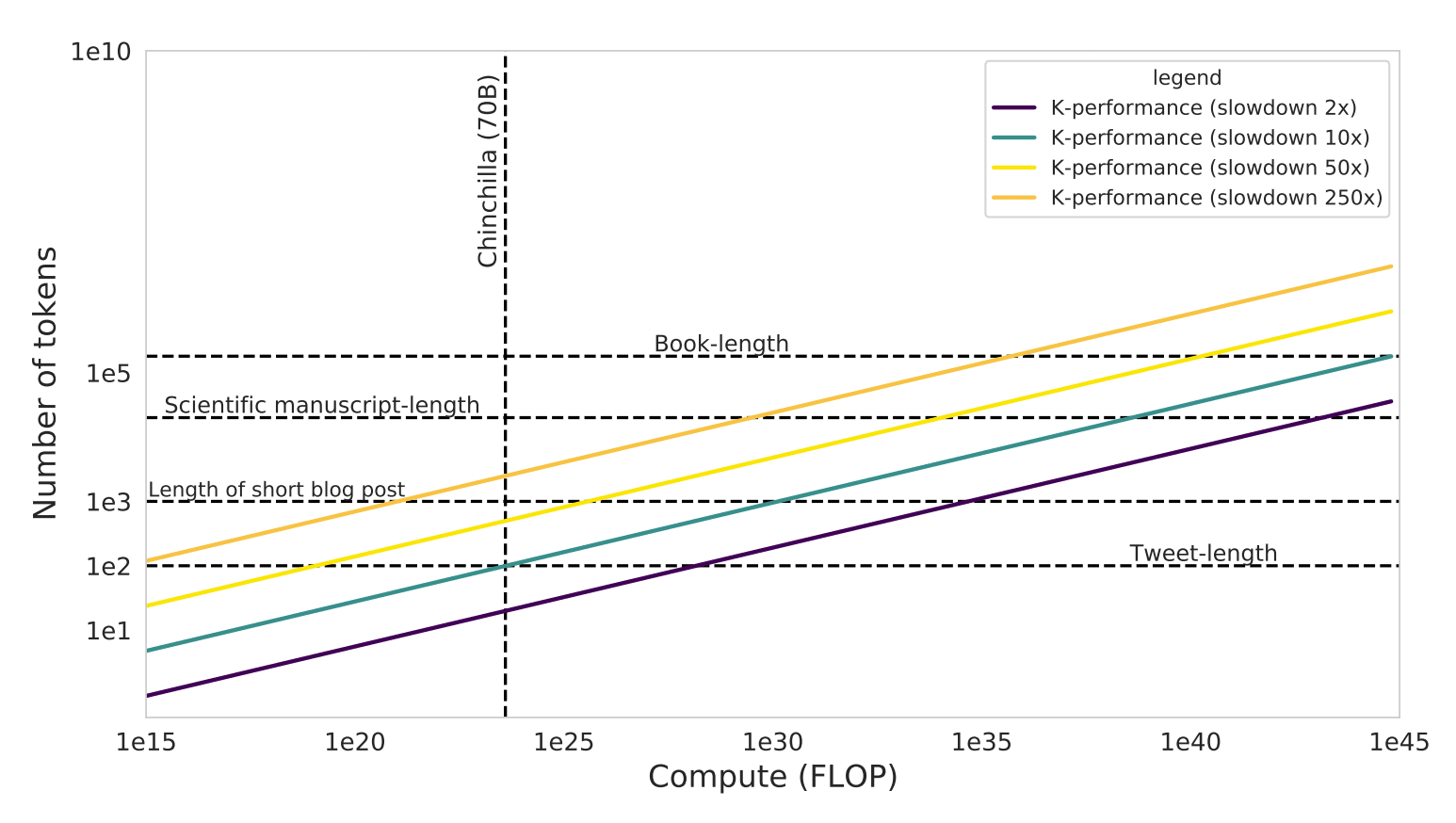

The name comes from the fact that it attempts to forecast advanced AI by interpreting empirical scaling laws directly. The following is a sketch of the primary assumptions and results of the model, although we painted a more comprehensive picture in a recent blog post (here's the full report).

In my view, it seems that the key difficulty in automating intellectual labor is getting a machine to think reliably over long sequences. By “think reliably,” I mean “come up with logical and coherent reasons that cohesively incorporate context and arrive at a true and relevant conclusion.” By “long sequences,” I mean sufficiently long horizons over which the hardest and most significant parts of intellectual tasks are normally carried out.

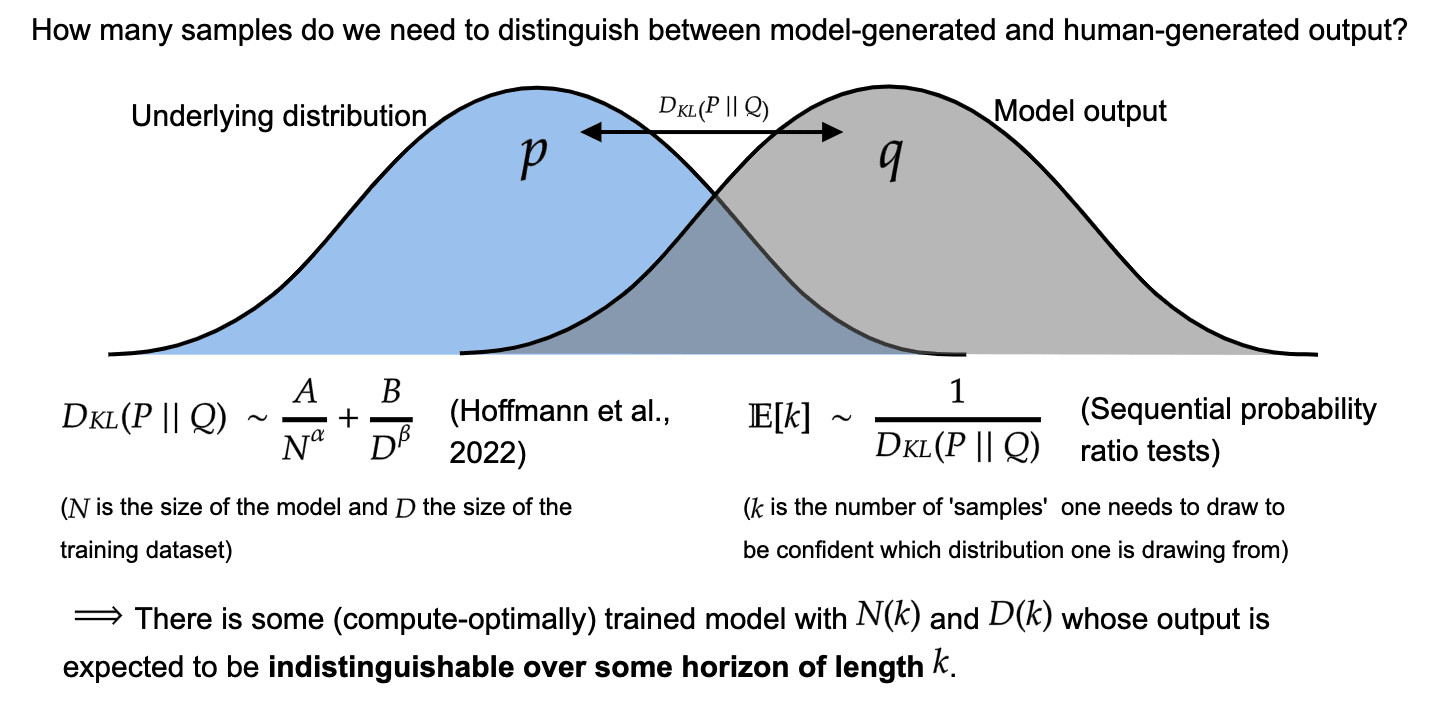

While this definition is not precise, the idea can be made more amenable to theoretical analysis by introducing a simple framework for interpreting the upstream loss of models on their training distribution.

The fundamental assumption underlying this framework is the idea that indistinguishability implies competence. For example, if an AI is able to produce mathematics papers that are indistinguishable from human-written mathematics papers, then the AI must be at least as competent as human mathematicians. Otherwise, there would be some way of distinguishing the AI’s outputs from the human outputs.

With some further mathematical assumptions, we can use scaling laws to calculate how many tokens it will take, on average, to distinguish between model-generated outputs and outputs in the training distribution, up to some desired level of confidence. [5]

Given the assumption that indistinguishability implies competence, this method permits a way of upper bounding the training compute required to train a model to reach competence over long horizon lengths, i.e., think reliably over long sequences. The model yields an upper bound rather than a direct estimate, since ways of creating transformative AI other than emulating human behavior using deep learning may be employed in the future.

These results can be summarized in the following plot, which shows how distinguishability evolves as a function of compute, given 2022 algorithms. The two essential parameters are (1) the horizon length over which you think language models must be capable of performing reliable high-quality reasoning before they can automate intellectual labor, and (2) the ability of humans to discriminate between outputs relative to an ideal predictor (called the "slowdown" parameter), which sets a bound on how good humans are at picking out "correct" results. Very roughly, lower estimates of the slowdown correspond to thinking that model outputs must be indistinguishable according to a more intelligent judge in order for the model to substitute for real humans, with slowdown=1 being an ideal Bayesian judge.
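The logic of the calculation can be sketched as follows. This is a simplified illustration rather than the actual Direct Approach computation. It assumes that the reducible loss from the parametric scaling law fitted in Hoffmann et al. 2022 approximates the per-token KL divergence between the training distribution and the model, and that a judge accumulates evidence at that rate until reaching a chosen confidence. The function names and the 90% confidence level are hypothetical.

```python
import math

# Parametric fit L(N, D) = E + A / N^alpha + B / D^beta reported in
# Hoffmann et al. 2022 (loss in nats per token). Only the reducible
# part, A / N^alpha + B / D^beta, is used here.
A, B, ALPHA, BETA = 406.4, 410.7, 0.34, 0.28


def reducible_loss(params, tokens):
    """Reducible loss, treated here as the per-token KL divergence
    between the data distribution and the model (an assumption)."""
    return A / params**ALPHA + B / tokens**BETA


def tokens_to_distinguish(params, tokens, confidence=0.9, slowdown=1.0):
    """Approximate number of tokens a judge needs to tell model output
    from real data at the given confidence.

    slowdown=1 stands for an ideal Bayesian judge; a human judge is
    modeled as needing `slowdown` times as many tokens.
    """
    log_odds = math.log(confidence / (1.0 - confidence))
    return slowdown * log_odds / reducible_loss(params, tokens)


# Hypothetical comparison: a 70B-parameter model trained on 1.4T tokens,
# versus a model with 10x the parameters and 10x the data.
smaller = tokens_to_distinguish(70e9, 1.4e12)
larger = tokens_to_distinguish(700e9, 14e12)
print(f"horizon grows by a factor of {larger / smaller:.2f}")
```

As reducible loss falls with scale, the horizon over which outputs remain indistinguishable grows, and a larger slowdown stretches that horizon proportionally.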

Since the Direct Approach relies on Hoffmann et al. 2022, which trained on general internet data, it might not apply to more complex distributions. That said, in practice, power law scaling has been found to be ubiquitous across a wide variety of data distributions, and even across architectures (Henighan et al. 2020, Tay et al. 2022). While the coefficients in these power laws are often quite different, the scaling exponents appear very similar across data distributions (Henighan et al. 2020). Therefore, although one's estimate using the Direct Approach may systematically be biased in light of the simplicity of data used in the Hoffmann et al. 2022 study, this bias can plausibly be corrected by simply keeping the bias in mind (for example, by adding 2 OOMs of compute to one's estimate).

After taking into account this effect, I personally think the Direct Approach provides significant evidence that we can probably train a model to be roughly indistinguishable from a human over sequences exceeding the average scientific manuscript using fewer than ~ FLOP, using 2022 algorithms. Notably, this quantity is much lower than the evolutionary anchor of ~ FLOP found in the Bio Anchors report; and it's still technically a (soft) upper bound on the true amount of training compute required to match human performance across a wide range of tasks.

Part 4: Modeling AI timelines

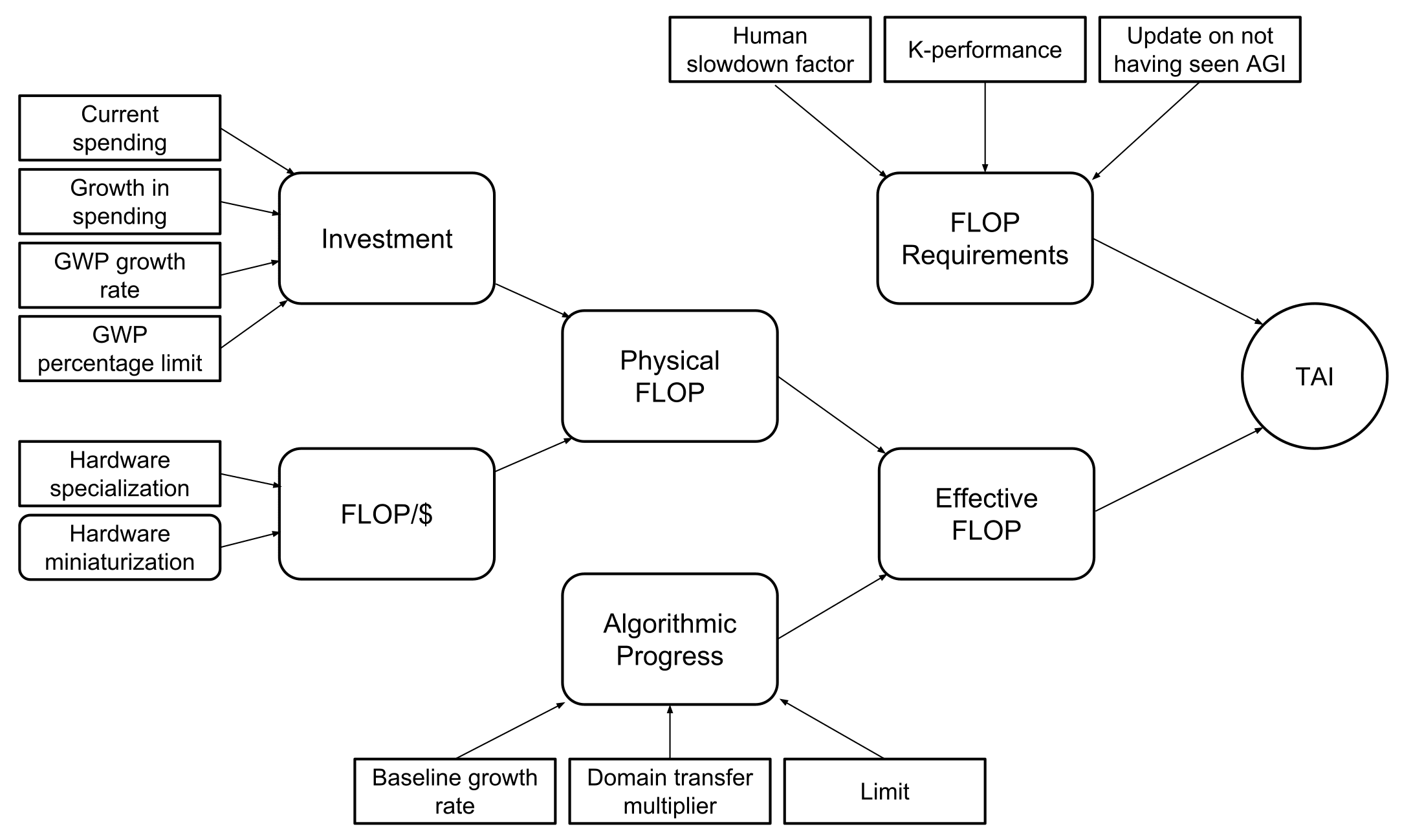

If compute is the central driving force behind AI, and transformative AI (TAI) comes out of something looking like our current paradigm of deep learning, there appear to be a small set of natural parameters that can be used to estimate the arrival of TAI. [1] These parameters are:

- The total training compute required to train TAI

- The average rate of growth in spending on the largest training runs, which plausibly hits a maximum value at some significant fraction of GWP

- The average rate of increase in price-performance for computing hardware

- The average rate of growth in algorithmic progress

Epoch has built an interactive model that allows you to plug in your own estimates over these parameters into this model, and obtain a distribution over the arrival of TAI.

The interactive model provides some default estimates for each of the parameters. We employ the Direct Approach to estimate a distribution over training compute requirements, although the distribution given by Biological Anchors can easily be used in its place. We use historical data to estimate the remaining parameter values. In our experience, given reasonable parameter values, the model typically yields medium-length timelines, in the range of 15-45 years from now.
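To show how these four parameters combine, here is a deliberately simplified, deterministic sketch. It is not Epoch's interactive model, which works with distributions rather than point values. The function name, its arguments, and every number in the usage example are hypothetical placeholders, not estimates.

```python
def first_year_reaching(required_flop, start_year, spend_usd, flop_per_usd,
                        spend_growth, hardware_growth, algo_growth,
                        spend_cap_usd, max_years=200):
    """Return the first year in which the largest training run reaches
    `required_flop` of effective compute, or None if it never does
    within `max_years`.

    Effective compute = spending * price-performance * algorithmic multiplier.
    Spending grows each year until it hits `spend_cap_usd`, standing in
    for a maximum fraction of GWP.
    """
    algo_multiplier = 1.0
    for offset in range(max_years + 1):
        effective_flop = spend_usd * flop_per_usd * algo_multiplier
        if effective_flop >= required_flop:
            return start_year + offset
        spend_usd = min(spend_usd * (1.0 + spend_growth), spend_cap_usd)
        flop_per_usd *= 1.0 + hardware_growth
        algo_multiplier *= 1.0 + algo_growth
    return None


# Purely illustrative inputs; substitute your own estimates.
year = first_year_reaching(
    required_flop=1e32, start_year=2023, spend_usd=1e8, flop_per_usd=1e17,
    spend_growth=1.0, hardware_growth=0.3, algo_growth=0.6,
    spend_cap_usd=1e12,
)
print(year)
```

The cap on spending matters: once it binds, further progress relies only on hardware price-performance and algorithmic improvements, so timelines lengthen sharply if the requirement is not met before then.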

Nonetheless, this model is conservative for two key reasons. The first is that the Direct Approach only provides an upper bound on the training compute required to train TAI, rather than a direct estimate. The second reason is that this model assumes that the rate of growth in spending, average progress in price-performance, and growth in algorithmic progress will continue at roughly the same rates as in the past. However, if AI expands economic output, it could accelerate the growth rate of each of these parameters.

Addressing how the gradual automation of tasks can speed up the trends so far would take a more careful analysis than I can present in this article. To get an intuition of how strong the effect is, we can study the model developed by Davidson (2023). Under the default parameter choice, full automation is reached around 2040, or 17 years from now. If we disable the effects of partial automation, [6] this outcome is achieved in 2053, or 30 years from now.

Against very short timelines

The compute-centric framework also provides some evidence against very short timelines (<4 years). One simple argument involves looking at the stock of current compute, and seeing whether transformative growth is possible if we immediately received the software for AGI at the compute-efficiency matching the human brain. Note that this is a bold assumption unless you think AGI is imminent, yet even in this case, transformative growth is doubtful in the very short term.

To justify this claim, consider that NVIDIA's H100 80 GB GPUs currently cost about $30k. NVIDIA dominates the AI-relevant hardware market, and its data center revenue is currently on the order of $15 billion per year. Given the ~21.3% compound annual growth rate in its revenue since 2012, plus rapid hardware progress in the meantime, there is likely less than $75 billion worth of AI hardware in the world right now on par with the H100 80 GB. Since the H100 80 GB GPU can deliver at most ~4000 TFLOP/s, all the AI hardware in the world together can produce at most about 10^22 FLOP/s ($75 billion / $30k = 2.5 million GPUs, each at ~4 × 10^15 FLOP/s).

The median estimate of the compute for the human brain from Carlsmith (2020) is ~10^15 FLOP/s, which means that if we suddenly got the software for AGI with a compute-efficiency matching the human brain, we could at most sustain about 10 million human-equivalent workers given the current stock of hardware.

Even if you are inclined to bump up this estimate by 1-2 orders of magnitude given facts like (1) an AGI could be as productive as a 99th percentile worker, and (2) AGI would never need to sleep and could work at full-productivity throughout the entire day, the potential AGI workforce deployed with current resources would still be smaller than the current human labor force, which is estimated at around 3 billion.
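The arithmetic behind this argument can be sketched in a few lines. This is only a back-of-the-envelope check: the inputs are the round figures quoted in the text (a $75 billion hardware stock, $30k per H100, ~4000 TFLOP/s per GPU, Carlsmith's central estimate of 10^15 FLOP/s for the brain, and a labor force of about 3 billion), and the function and variable names are made up for illustration.

```python
# Back-of-the-envelope check of the hardware-stock argument.
# All inputs are the round figures quoted in the text, not measurements.

H100_PRICE_USD = 30_000        # approximate price of one H100 80 GB
HARDWARE_STOCK_USD = 75e9      # upper bound on H100-class hardware in the world
H100_PEAK_FLOPS = 4_000e12     # ~4000 TFLOP/s peak per GPU
BRAIN_FLOPS = 1e15             # Carlsmith (2020) central estimate, in FLOP/s
HUMAN_LABOR_FORCE = 3e9        # approximate size of the human labor force


def human_equivalent_workers(stock_usd, price_usd, gpu_flops, brain_flops):
    """Return (GPU count, total FLOP/s, brain-equivalents) for a hardware stock."""
    n_gpus = stock_usd / price_usd
    total_flops = n_gpus * gpu_flops
    return n_gpus, total_flops, total_flops / brain_flops


n_gpus, total_flops, workers = human_equivalent_workers(
    HARDWARE_STOCK_USD, H100_PRICE_USD, H100_PEAK_FLOPS, BRAIN_FLOPS
)
print(f"H100-equivalents:  {n_gpus:.2e}")
print(f"Total compute:     {total_flops:.2e} FLOP/s")
print(f"Brain-equivalents: {workers:.2e}")

# Apply the 0, 1, and 2 order-of-magnitude productivity bumps discussed above.
for oom_bump in (0, 1, 2):
    adjusted = workers * 10**oom_bump
    share = adjusted / HUMAN_LABOR_FORCE
    print(f"+{oom_bump} OOM: {adjusted:.1e} workers ({share:.1%} of the labor force)")
```

Even with the full two-order-of-magnitude bump, the implied workforce stays below the size of the human labor force, which is the point the paragraph above makes.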

Moreover, nearly all technologies experience a lag between when they're first developed and when they become adopted by billions of people. Although technology adoption lags have fallen greatly over time, the evidence from recent technologies indicates that the lag for AI will likely be more than a year.

Therefore, it appears that even if we assume that the software for AGI is right around the corner, as of 2023, computer hardware manufacturing must be scaled up by at least an order of magnitude, and civilization will require a significant period of time before AI can be fully adopted. Given that a typical semiconductor fab takes approximately three years to build, it doesn't seem likely that explosive growth (>30% GWP growth) will happen within the next 4 years.

Note that I do think very near-term transformative growth is plausible if the software for superintelligence is imminent.[7] However, since current AI systems are not close to being superintelligent, it seems that we would need a sudden increase in the rate of AI progress in order for superintelligence to be imminent. The most likely cause of such a sudden acceleration seems to be that pre-superintelligent systems could accelerate technological progress. But, as I have just argued above, a rapid general acceleration of technological progress from pre-superintelligent AI seems very unlikely in the next few years.

My personal AI Timelines

As of May 2023, I think very short (<4 years) TAI timelines are effectively ruled out (~1%) under current evidence, given the massive changes that would be required to rapidly deploy a giant AI workforce, the fact that AGI doesn't appear imminent, and the arguments against hard takeoff, which I think are strong. I am also inclined to cut some probability away from short timelines given the lack of impressive progress in general-purpose robotics so far, which seems like an important consideration given that the majority of labor in the world currently requires a physical component. That said, assuming no substantial delays or large disasters such as war in the meantime, I believe that TAI will probably arrive within 15 years.[8] My view here is informed by my distribution over TAI training requirements, which is centered somewhere around 10^32 FLOP using 2023 algorithms with a standard deviation of ~3 OOMs.[9]

However, my unconditional view is somewhat different. After considering all potential delays (including regulation, which I think is likely to be substantial) and model uncertainty, my overall median TAI timeline is more like 20 years from now, with a long tail extending many decades into the future.

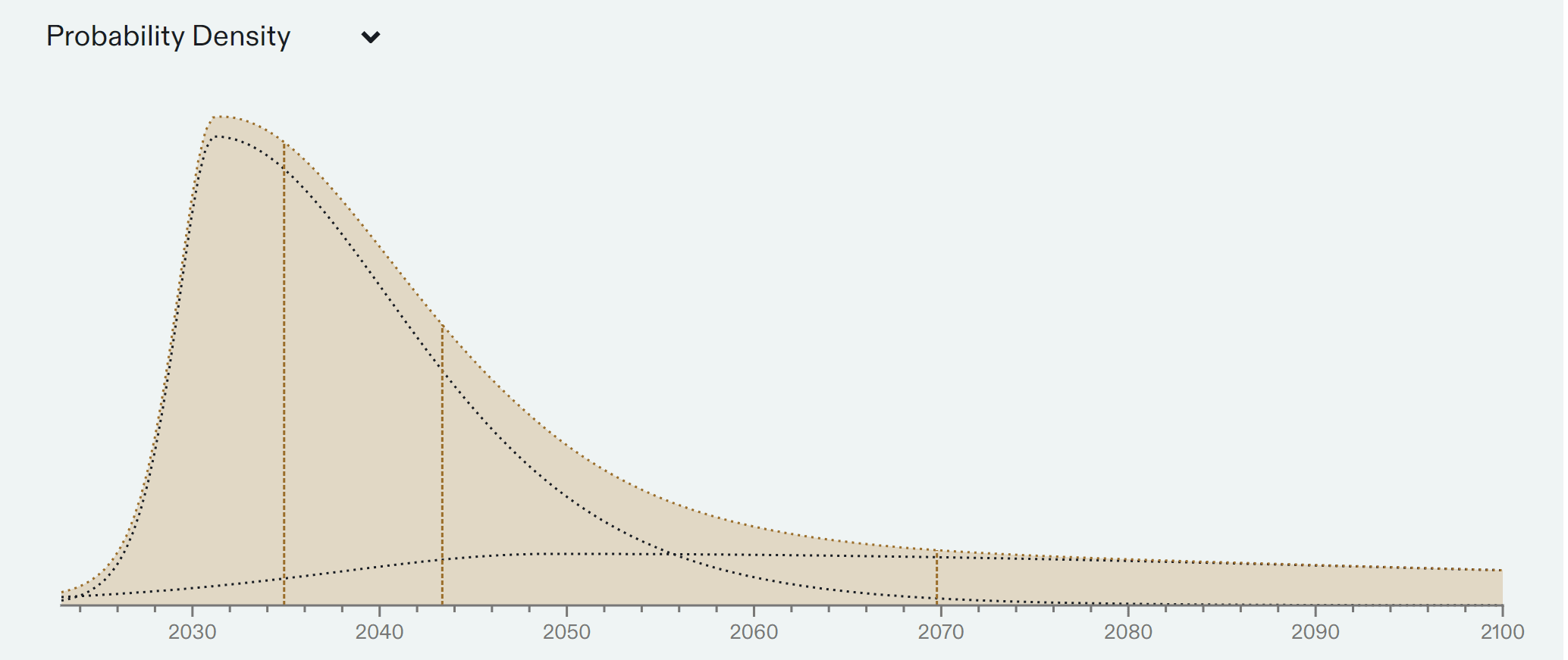

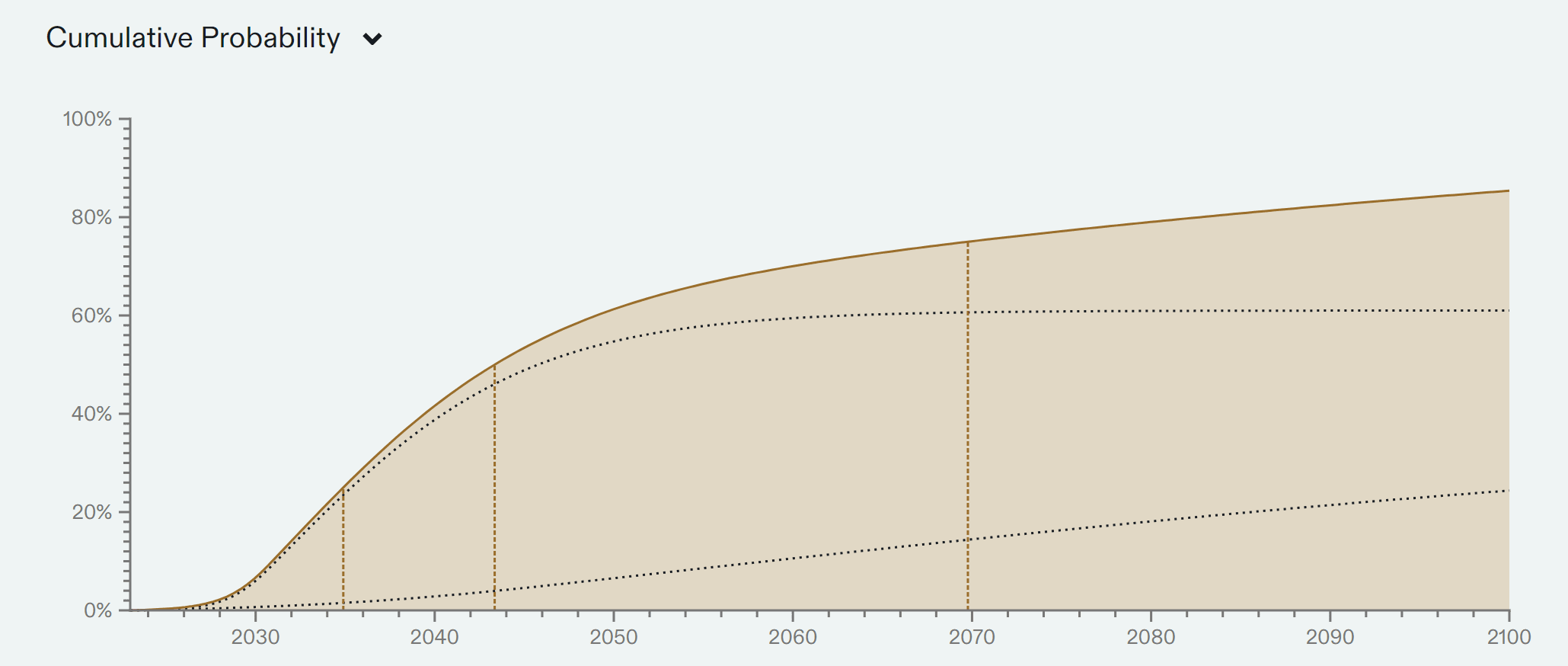

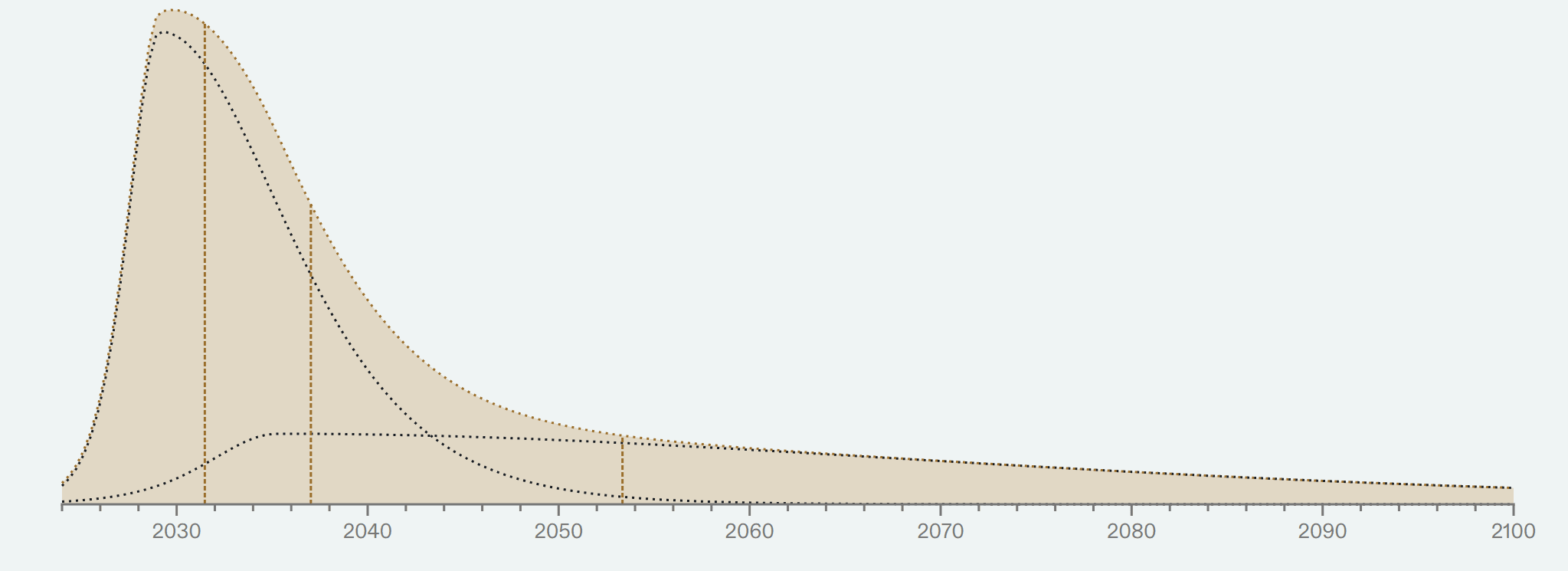

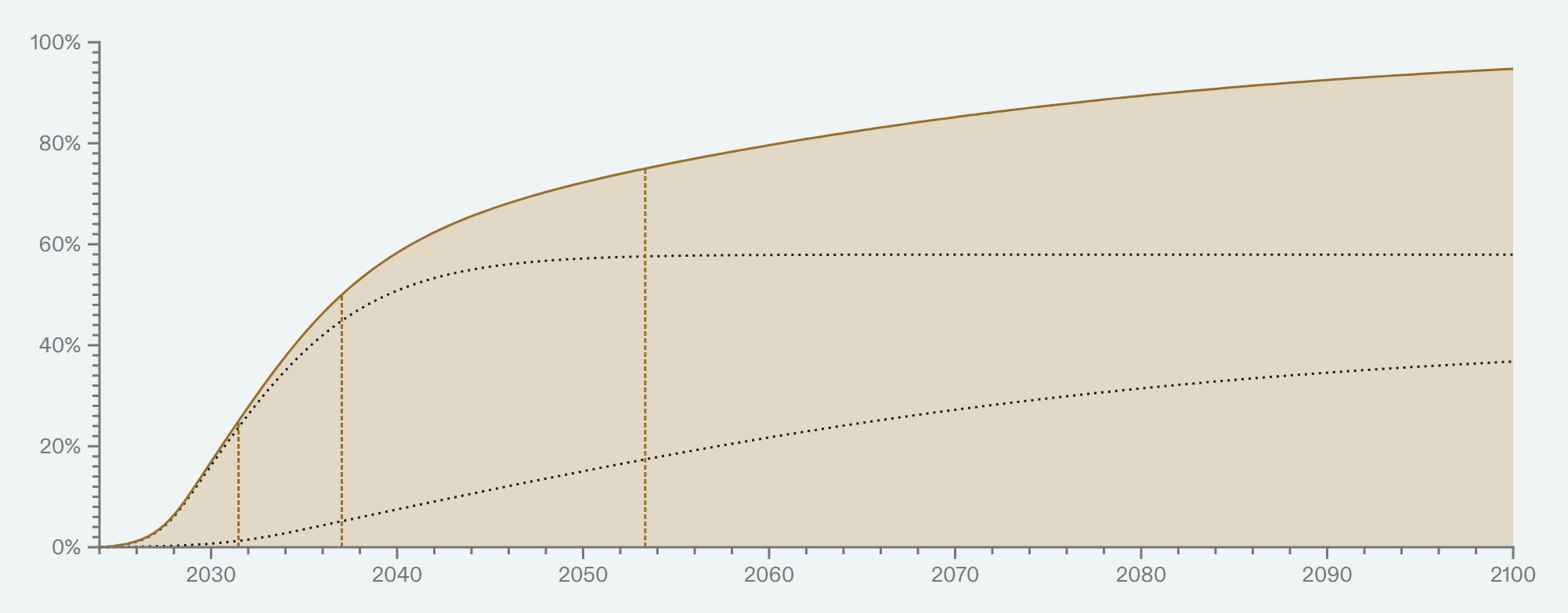

To sum up what I think all this evidence points to, I plotted a probability distribution to represent my beliefs over the arrival of TAI below. Note the fairly large difference between the median (2043) and the mode (2031). [10]

Given this plot, we can look at various years and find my unconditional probability of TAI arriving before that year. See footnote [11]. For reference, there is a 50% chance that TAI will arrive before 2043 in this distribution.

Conclusion

Assuming these arguments hold, it seems likely that transformative AI will be developed within the next several decades. While in this essay, I have mostly discussed the potential for AI to accelerate economic growth, other downstream consequences from AI, such as value misalignment and human disempowerment, are worth additional consideration, to put it mildly (Christiano 2014, Ngo et al. 2022).

I thank Tamay Besiroglu, Jaime Sevilla, David Owen, Ben Cottier, Anson Ho, David Atkinson, Eduardo Infante-Roldan and the rest of the Epoch team for their support and suggestions throughout this article. Adam Papineau provided copy-writing.

- ^

Following Karnofsky 2016 and Cotra 2020, we will define TAI in terms of potential transformative consequences from advanced AI — primarily accelerated economic growth — but with two more conditions that could help cover alternative plausible scenarios in which AI has extreme and widespread consequences.

Definition of TAI: let's define the year of TAI as the first year following 2022 during which any of these milestones are achieved:

1. Gross world product (GWP) exceeds 130% of its previous yearly peak value

2. World primary energy consumption exceeds 130% of its previous yearly peak value

3. Fewer than one billion biological humans remain alive on Earth

The intention of the first condition is highlighted throughout this article, as I describe the thesis that the most salient effect of AI will be its ability to automate labor, triggering a productivity explosion.

The second milestone is included in order to cover scenarios in which the world is rapidly transformed by AI but this change is not reflected in GDP statistics -- for example, if GDP is systematically and drastically mismeasured. The third milestone covers scenarios in which AI severely adversely impacts the world, even though it did not cause a productivity explosion. More specifically, I want to include the hard takeoff scenario considered by Eliezer Yudkowsky and Nick Bostrom, as it's hard to argue that AI is not "transformative" in this case, even if AI does not cause GWP or energy consumption to expand dramatically.

- ^

For a more in-depth discussion regarding the productivity explosion hypothesis, I recommend reading Davidson 2021, or Trammell and Korinek 2020.

- ^

This conclusion was somewhat conservative since it assumed that we will run out of data after the number of tokens seen during training exceeds our total stock of textual internet data. However, while current large language models are rarely trained over more than one epoch (i.e., one cycle through the full training dataset), there don't appear to be any strong reasons why models can't instead be trained over more than one epoch, which was standard practice for language models before about 2020 (Komatsuzaki 2019).

While some have reported substantial performance degradation while training over multiple epochs (Hernandez et al. 2022), other research teams have not (Taylor et al. 2022). Since we do not at the moment see any strong theoretical reason to believe that training over multiple epochs is harmful, we suspect that performance degradation from training on repeated data is not an intractable issue. However, it may be true that there is only a slight benefit from training over multiple epochs, which would make this somewhat of a moot point anyway (Xue 2023).

- ^

To help explain the utility of surrogate metrics, here's a potential example. It might be that while accuracy on multi-step reasoning tasks improves suddenly as a function of scale, performance on single-step reasoning improves more predictably. Since multi-stage reasoning can plausibly be decomposed into a series of independent single-step reasoning steps, we'd expect a priori that performance on multi-step reasoning might appear to "emerge" somewhat suddenly at some scale as a consequence of the mathematics of successive independent trials. If that's true, researchers could measure progress on single-step reasoning and use that information to forecast when reliable multi-step reasoning will appear.
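To make the "successive independent trials" point concrete, here is a minimal sketch. It assumes that a multi-step task succeeds only if every step succeeds and that the steps are independent, so that multi-step accuracy is the single-step accuracy raised to the number of steps. The function name and the choice of 10 steps are illustrative, not taken from any benchmark.

```python
def multi_step_accuracy(single_step_accuracy: float, n_steps: int) -> float:
    """Probability of getting all n_steps right, assuming independent steps."""
    return single_step_accuracy ** n_steps


# Single-step accuracy improves smoothly, but 10-step accuracy stays low
# for a long time and then rises sharply as single-step accuracy nears 1.
for p in (0.80, 0.90, 0.95, 0.99, 0.999):
    print(f"single-step {p:.3f} -> 10-step {multi_step_accuracy(p, 10):.3f}")
```

Under these assumptions, a forecaster who tracks the smooth single-step curve can anticipate the scale at which the multi-step curve will cross a reliability threshold.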

- ^

The central idea is that given model scaling data, we can estimate how the reducible loss falls with scale, which we can interpret as the KL-divergence of the training distribution from the model. Loosely speaking, since KL-divergence tells us the discrimination information between two distributions, we can use it to determine how many samples, on average, are required to distinguish the model from the true distribution.
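As a rough illustration of the link between KL-divergence and the number of samples needed to tell two distributions apart, the sketch below uses a standard sequential-testing heuristic: each sample drawn from the true distribution contributes, on average, one KL-divergence's worth of log-evidence against the model. This is a simplified stand-in under that assumption and not necessarily the exact procedure used in the Direct Approach. The function names and the likelihood-ratio threshold of 100 are hypothetical.

```python
import math


def kl_divergence(p, q):
    """KL(P || Q) in nats for discrete distributions given as probability lists.

    Assumes q[i] > 0 wherever p[i] > 0.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def samples_to_distinguish(p, q, likelihood_ratio=100.0):
    """Approximate number of samples from P needed to favor P over Q
    by the given likelihood ratio, using expected log-evidence per sample."""
    return math.log(likelihood_ratio) / kl_divergence(p, q)


true_dist = [0.5, 0.5]
weak_model = [0.6, 0.4]      # far from the true distribution
strong_model = [0.51, 0.49]  # close to the true distribution

print(samples_to_distinguish(true_dist, weak_model))
print(samples_to_distinguish(true_dist, strong_model))
```

The closer the model is to the true distribution, the smaller the KL-divergence and the more samples are needed, which is why a falling reducible loss can be read as a growing horizon over which the model's output is hard to distinguish from the real thing.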

- ^

This is achieved by setting the training FLOP gap parameter equal to 1 in the takeoff speeds model.

- ^

We will follow Bostrom 2003 in defining a superintelligence as "any intellect that vastly outperforms the best human brains in practically every field, including scientific creativity, general wisdom, and social skills."

- ^

Here are my personal TAI timelines conditional on no coordinated delays, no substantial regulation, no great power wars or other large exogenous catastrophes, no global economic depression, and that my basic beliefs about reality are more-or-less correct (e.g. I'm not living in a simulation or experiencing a psychotic break).

- ^

I'll define transformative FLOP requirements as the size of the largest training run in the year prior to the year of TAI (definition given in footnote 1) assuming that no action is taken to delay TAI, such as substantial regulation.

- ^

Note that this distribution was changed slightly in light of some criticism in the comments. I think the previous plot put an unrealistically low credence on TAI arriving before 2030.

- ^

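| Year | P(TAI < Year) |
|------|---------------|
| 2027 | 1% |
| 2030 | 7% |
| 2035 | 26% |
| 2040 | 42% |
| 2043 | 50% |
| 2045 | 54% |
| 2050 | 61% |
| 2060 | 67% |
| 2070 | 75% |
| 2080 | 79% |
| 2090 | 82% |
| 2100 | 85% |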

I haven't read all of your posts that carefully yet, so I might be misunderstanding something, but generally, it seems to me like this approach has some "upper bound" modeling assumptions that are then used as an all-things-considered distribution.

My biggest disagreement is that I think that your distribution over FLOPs [1] required for TAI (pulled from here), is too large.

My reading is that this was generated assuming that we would train TAI primarily via human imitation, which seems really inefficient. There are so many other strategies for training powerful AI systems, and I expect people to transition to using something better than imitation. For example, see the fairly obvious techniques discussed here.

GPT-4 required ~25 log(FLOP)s, and eyeballing it, the mode of this distribution seems to be about ~35 log(FLOP)s, so that is +10 OOMs as the median over GPT-4. The gap between GPT-3 and GPT-4 is ~2 OOMs, so this would imply a median of 5 GPT-sized jumps until TAI. Personally, I think that 2 GPT jumps / +4 OOMs is a pretty reasonable mode for TAI (e.g. the difference between GPT-2 and GPT-4).

In the 'against very short timelines' section, it seems like your argument mostly routes through it being computationally difficult to simulate the entire economy with human level AIs, because of inference costs. I agree with this, but think that AIs won't stay human level for very long, because of AI-driven algorithmic improvements. In 4 year timelines worlds, I don't expect the economy to be very significantly automated before the point of no return, I instead expect it to look more like faster and faster algorithmic advances. Instead of deploying 10 million human workers dispersed over the entire economy, I think this would look more like deploying 10 million more AGI researchers, and then getting compounding returns on algorithmic progress from there.

I generally don't see this argument. Automating the entire economy != automating ML research. It remains quite plausible to me that we reach superintelligence before the economy is 100% automated.

I'm assuming that this is something like 2023-effective flops (i.e. baking in algorithmic progress, let me know if I'm wrong about this).

I disagree. I'm not using this distribution as an all-things-considered view. My own distribution over the training FLOP for transformative AI is centered around ~10^32 FLOP using 2023 algorithms, with a standard deviation of about 3 OOM.

I think this makes sense after applying a few OOM adjustment downwards to the original distribution, taking into account the fact that it's an upper bound.

I agree this is confusing because of the timelines model I linked to in the post, but the timelines model I linked to is not actually presenting my own view. I contrasted that model with my all-things-considered view at the end of this post, noting that the model seemed conservative in some respects, but aggressive in other respects (since it doesn't take into account exogenous delays, or model uncertainty).

I'm talking about effective flop, not physical FLOP. Plausibly the difference in effective FLOP between GPT-3 and GPT-4 is more like 3 OOMs rather than 2 OOMs. With that in mind, my median of 10^32 2023 FLOP for training transformative AI doesn't seem obviously too conservative to me. It would indicate that we're about 2.3 more jumps the size of GPT-3 --> GPT-4 away from transformative AI, and my intuition seems quite in line with that.
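The "number of GPT-sized jumps" comparison in this exchange is simple arithmetic on orders of magnitude. The sketch below reproduces both figures. It assumes GPT-4 sits at roughly 10^25 FLOP, which is the figure used in the earlier comment rather than something stated in this reply, and the function name is made up for illustration.

```python
def jumps_to_target(target_log10_flop, current_log10_flop, jump_size_ooms):
    """Number of jumps of a given size (in OOMs) between two compute levels."""
    return (target_log10_flop - current_log10_flop) / jump_size_ooms


GPT4_LOG10_FLOP = 25  # assumption, taken from the earlier comment

# Earlier comment: mode of ~10^35 FLOP, with GPT-3 -> GPT-4 counted as 2 OOMs.
print(jumps_to_target(35, GPT4_LOG10_FLOP, 2))

# This reply: median of 10^32 effective FLOP, with GPT-3 -> GPT-4 counted as 3 OOMs.
print(jumps_to_target(32, GPT4_LOG10_FLOP, 3))
```

Most of the gap between the two commenters comes from the choice of target and the size assigned to one jump, not from any disagreement about the arithmetic.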

Thanks for the numbers!

For comparison, takeoffspeeds.com has an aggressive monte-carlo (with a median of 10^31 training FLOP) that yields a median of 2033.7 for 100% automation — and a p(TAI < 2030) of ~28%. That 28% is pretty radically different from your 2%. Do you know your biggest disagreements with that model?

The 1 OOM difference in training FLOP presumably doesn't explain that much. (Although maybe it's more, because takeoffspeeds.com talks about "AGI" and you talk about "TAI". On the other hand, maybe your bar for "transformative" is lower than 100% automation.)

Some related responses to stuff in your post:

You argued that AI labor would be small in comparison to all of human labor, if we got really good software in the next 4 years. But if we had recently gotten such insane gains in ML-capabilities, people would want to vastly increase investment in ML-research (and hardware production) relative to everything else in the world. Normally, labor spent on ML research would lag behind, because it takes a long time to teach a large number of humans the requisite skills. But for each skill, you'd only need to figure out how to teach AI about it once, and then all 10 million AIs would be able to do it. (There would certainly be some lag, here, too. Your post says "lag for AI will likely be more than a year", which I'm sympathetic to, but there's time for that.)

When I google "total number of ml researchers", the largest number I see is 300k and I think the real answer is <100k. So I don't think a huge acceleration in AI-relevant technological progress before 2030 is out of the question.

(I think it's plausible we should actually be thinking about the best ML researchers rather than just counting up the total number. But I don't think it'd be crazy for AIs to meet that bar in the hypothetical you paint. Given the parallelizability of AI, it's both the case that (i) it's worth spending much more effort on teaching skills to AIs, and (ii) that it's possible for AIs to spend much more effective time on learning.)

Mostly not ML research.

Also, if the AIs are bottlenecked by motor skills, humans can do that part. When automating small parts of the total economy (like ML research or hardware production), there's room to get more humans into those industries to do all the necessary physical tasks. (And at the point when AI cognitive output is large compared to the entire human workforce, you can get a big boost in total world output by having humans switch into just doing manual labor, directed by AIs.)

I can see how stuff like regulation would feature in many worlds, but it seems high variance and like it should allow for a significant probability of ~no delay.

Also, my intuition is that 2% is small enough in the relevant context that model uncertainty should push it up rather than down.

I think my biggest disagreement with the takeoff speeds model is just that it's conditional on things like: no coordinated delays, regulation, or exogenous events like war, and doesn't take into account model uncertainty. My other big argument here is that I just think robots aren't very impressive right now, and it's hard to see them going from being unimpressive to extremely impressive in just a few short years. 2030 is very soon. Imagining even a ~4 year delay due to all of these factors produces a very different distribution.

Also, as you note, "takeoffspeeds.com talks about 'AGI' and you talk about 'TAI'". I think transformative AI is a lower bar than 100% automation. The model itself says they added "an extra OOM to account for TAI being a lower bar than full automation (AGI)." Notably, if you put in 10^33 2022 FLOP into the takeoff model (and keep in mind that I was talking about 2023 FLOP), it produces a median year of >30% GWP growth of about 2032, which isn't too far from what I said in the post:

I added about four years to this 2032 timeline due to robots, which I think is reasonable even given your considerations about how we don't have to automate everything -- we just need to automate the bottlenecks to producing more semiconductor fabs. But you could be right that I'm still being too conservative.

Cool, I thought that was most of the explanation for the difference in the median. But I thought it shouldn't be enough to explain the 14x difference between 28% and 2% by 2030, because I think there should be a ≥20% chance that there are no significant coordinated delays, regulation, or relevant exogenous events if AI goes wild in the next 7 years. (And that model uncertainty should work to increase rather than decrease the probability, here.)

If you think robotics would definitely be necessary, then I can see how that would be significant.

But I think it's possible that we get a software-only singularity. Or more broadly, simultaneously having (i) AI improving algorithms (...improving AIs), (ii) a large fraction of the world's fab-capacity redirected to AI chips, and (iii) AIs helping with late-stage hardware stuff like chip-design. (I agree that it takes a long time to build new fabs.) This would simultaneously explain why robotics aren't necessary (before we have crazy good AI) and decrease the probability of regulatory delays, since the AIs would just need to be deployed inside a few companies. (I can see how regulation would by-default slow down some kinds of broad deployment, but it seems super unclear whether there will be regulation put in place to slow down R&D and internal deployment.)

Update: I changed the probability distribution in the post slightly in line with your criticism. The new distribution is almost exactly the same, except that I think it portrays a more realistic picture of short timelines. The p(TAI < 2030) is now 5% [eta: now 7%], rather than 2%.

That's reasonable. I think I probably should have put more like 3-6% credence before 2030. I should note that it's a bit difficult to tune the Metaculus distributions to produce exactly what you want, and the distribution shouldn't be seen as an exact representation of my beliefs.

I don't understand this. Why would there be a 2x speedup in algorithmic progress?

Sorry, that was very poor wording. I meant that 2023 FLOP is probably about equal to 2 2022 FLOP, due to continued algorithmic progress. I'll reword the comment you replied to.

Nice, gotcha.

Incidentally, as its central estimate for algorithmic improvement, the takeoff speeds model uses AI and Efficiency's ~1.7x per year, and then halves it to ~1.3x per year (because today's algorithmic progress might not generalize to TAI). If you're at 2x per year, then you should maybe increase the "returns to software" from 1.25 to ~3.5, which would cut the model's timelines by something like 3 years. (More on longer timelines, less on shorter timelines.)
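One way to read "halves it" here is as halving the growth rate in log space, since the square root of 1.7 is about 1.3. That reading is my interpretation of the numbers in the comment, not something the takeoff speeds model documentation is quoted as saying. The short sketch below checks it and shows the implied doubling times. The function names are illustrative.

```python
import math


def halve_log_growth(annual_multiplier: float) -> float:
    """Halve an annual growth rate in log space (equivalent to a square root)."""
    return math.sqrt(annual_multiplier)


def doubling_time_years(annual_multiplier: float) -> float:
    """Years needed to double at a constant annual multiplier."""
    return math.log(2) / math.log(annual_multiplier)


original = 1.7
halved = halve_log_growth(original)

print(f"halved multiplier: {halved:.3f}x per year")
print(f"doubling time at {original}x: {doubling_time_years(original):.2f} years")
print(f"doubling time at {halved:.3f}x: {doubling_time_years(halved):.2f} years")
```

Halving the rate in log space doubles the doubling time, so the adjustment is larger than the move from 1.7 to 1.3 might suggest at first glance.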

I like this post a lot but I will disobey Rapoport's rules and dive straight into criticism.

I think this is a poor way to describe a reasonable underlying point. Heavier-than-air flying machines were pursued for centuries, but airplanes appeared almost instantly (on a historic scale) after the development of engines with sufficient power density. Nonetheless, it would be confusing to say "flying is more about engine power than the right theories of flight". Both are required. Indeed, although the Wright brothers were enabled by the arrival of powerful engines, they beat out other would-be inventors (Ader, Maxim, and Langley) who emphasized engine power over flight theory. So a better version of your claim has to be something like "compute quantity drives algorithmic ability; if we independently vary compute (e.g., imagine an exogenous shock) then algorithms follow along", which (I think) is what you are arguing further in the post.

But this also doesn't seem right. As you observe, algorithmic progress has been comparable to compute progress (both within and outside of AI). You list three "main explanations" for where algorithmic progress ultimately comes from and observe that only two of them explain the similar rates of progress in algorithms and compute. But both of these draw a causal path from compute to algorithms without considering the (to-me-very-natural) explanation that some third thing is driving them both at a similar rate. There are a lot of options for this third thing! Researcher-to-researcher communication timescales, the growth rate of the economy, the individual learning rate of humans, new tech adoption speed, etc. It's plausible to me that compute and algorithms are currently improving more or less as fast as they can, given their human intermediaries through one or all of these mechanisms.

The causal structure is key here, because the whole idea is to try and figure out when economic growth rates change, and the distinction I'm trying to draw becomes important exactly around the time that you are interested in: when the AI itself is substantially contributing to its own improvement. Because then those contributions could be flowing through at least three broad intermediaries: algorithms (the AI is writing its own code better), compute (the AI improves silicon lithography), or the wider economy (the AI creates useful products that generate money which can be poured into more compute and human researchers).

Humans have been automating mechanical tasks for many centuries, and information-processing tasks for many decades. Moore's law, the growth rate of the thing (compute) that you argue drives everything else, has been stated explicitly for almost 58 years (and was presumably applicable for at least a few decades before that). Why are you drawing a distinction between all the information processing that happened in the past and "AI", which you seem to be taking as a basket of things that have mostly not had a chance to be applied yet (so no data)?

This list is missing the crucial parameters that would translate the others into what we agree is most notable: economic growth. I think this needs to be discussed much more in section 4 for it to be a useful summary/invitation to the models you mention.

I agree. A better phrasing could have emphasized that, although both theory and compute are required, in practice, the compute part seems to be the crucial bottleneck. The 'theories' that drive deep learning are famously pretty shallow, and most progress seems to come from tinkering, scaling, and writing code to be more efficient. I'm not aware of any major algorithmic contribution that would not have been possible if it were not for some fundamental analysis from deep learning theory (though perhaps these happen all the time and I'm just not sufficiently familiar to know).

I think the alternative theory of a common cause is somewhat plausible, but I don't see any particular reason to believe in it. If there were a common factor that caused progress in computer hardware and algorithms to proceed at a similar rate, why wouldn't other technologies that shared that cause grow at similar rates?

Hardware progress has been incredibly fast over the last 70 years -- indeed, many people say that the speed of computers is by far the most salient difference between the world in 1973 and 2023. And yet algorithmic progress has apparently been similarly rapid, which seems hard to square with a theory of a general factor that causes innovation to proceed at similar rates. Surely there are such bottlenecks that slow down progress in both places, but the question is what explains the coincidence in rates.

I expect innovation in AI in the future will take a different form than innovation in the past.

When innovating in the past, people generally found a narrow tool or method that improved efficiency in one narrow domain, without being able to substitute for human labor across a wide variety of domains. Occasionally, people stumbled upon general purpose technologies that were unusually useful across a variety of situations, although by-and-large these technologies are quite narrow compared to human resourcefulness. By contrast, I think it's far more plausible that ML foundation models allow us to create models that can substitute for human labor across nearly all domains at once, once a sufficient scale is reached. This would happen because sufficiently capable foundation models can be cheaply fine-tuned to provide a competent worker for nearly any task.

This is essentially the theory that there's something like "general intelligence" which causes human labor to be so useful across a very large variety of situations compared to physical capital. This is also related to transfer learning and task-specific bottlenecks that I talked about in Part 2. Given that human population growth seems to have caused a "productivity explosion" in the past (relative to pre-agricultural rates of growth), it seems highly probable that AI could do something similar in the future if it can substitute for human labor.

That said, I'm sympathetic to the model that says future AI innovation will happen without much transfer to other domains, more similar to innovation in the past. This is one reason (among many) that my final probability distribution in the post has a long tail extending many decades into the future.

I'm also a little surprised you think that modeling when we will have systems using similar compute as the human brain is very helpful for modeling when economic growth rates will change. (Like, for sure someone should be doing it, but I'm surprised you're concentrating on it so much.) As you note, the history of automation is one of smooth adoption. And, as I think Eliezer said (roughly), there don't seem to be many cases where new tech was predicted based on when some low-level metric would exceed the analogous metric in a biological system. The key threshold for recursive feedback loops (*especially* compute-driven ones) is how well they perform on the relevant tasks, not all tasks. And the way in which machines perform tasks usually looks very different than how biological systems do it (birds vs. airplanes, etc.).

If you think that compute is the key bottleneck/driver, then I would expect you to be strongly interested in what the automation of the semiconductor industry would look like.

In this post, when I mentioned human brain FLOP, it was mainly used as a quick estimate of AGI inference costs. However, different methodologies produce similar results (generally within 2 OOMs). A standard formula to estimate compute costs is 6*N per forward pass, where N is the number of parameters. Currently, the largest language models are estimated to have between 100 billion and 1 trillion parameters, which would work out to 6e11 to 6e12 FLOP per forward pass.

The chinchilla scaling law suggests that inference costs will grow at about half the rate of training compute costs. If we take the estimate of 10^32 training FLOP for TAI (in 2023 algorithms) that I gave in the post, which was itself partly based on the Direct Approach, then we'd expect inference costs to grow to something like 1e15-1e16 per forward pass, although I expect subsequent algorithmic progress will bring this figure down, depending on how much algorithmic progress translates into data efficiency vs. parameter efficiency. A remaining uncertainty here is how a single forward pass for a TAI model will compare to one second of inference for humans, although I'm inclined to think that they'll be fairly similar.
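The scaling argument here can be sketched numerically. The sketch below assumes the common approximation that training compute is about 6·N·D for N parameters and D tokens, together with the Chinchilla rule of thumb of roughly 20 tokens per parameter. Both are assumptions I am supplying rather than figures from this comment. The per-pass multiplier of 6 follows the comment's own convention; a forward-only convention of about 2 FLOP per parameter per token is also widely used and is shown for comparison.

```python
import math

TRAIN_FLOP_PER_PARAM_TOKEN = 6  # C ~ 6 * N * D (common approximation, assumed)
TOKENS_PER_PARAM = 20           # Chinchilla rule of thumb (assumed)


def compute_optimal_params(train_flop: float) -> float:
    """Parameter count N solving C = 6 * N * (20 * N)."""
    return math.sqrt(train_flop / (TRAIN_FLOP_PER_PARAM_TOKEN * TOKENS_PER_PARAM))


def flop_per_pass(n_params: float, flop_per_param: float = 6) -> float:
    """FLOP per pass over one token, for a chosen per-parameter multiplier."""
    return flop_per_param * n_params


tai_train_flop = 1e32  # the median quoted in the post, in 2023 algorithms
n_params = compute_optimal_params(tai_train_flop)

print(f"parameters:              {n_params:.2e}")
print(f"FLOP/pass (6 per param): {flop_per_pass(n_params, 6):.2e}")
print(f"FLOP/pass (2 per param): {flop_per_pass(n_params, 2):.2e}")

# N scales with the square root of C, so 100x more training compute
# implies only about 10x more compute per pass.
print(compute_optimal_params(1e34) / compute_optimal_params(1e32))
```

Under either multiplier, the result lands in the 1e15 to 1e16 range mentioned above, and the square-root relationship is what "half the rate" means in log terms.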

From Birds, Brains, Planes, and AI:

I listed this example in my comment, it was incorrect by an order of magnitude, and it was a retrodiction. "I didn't look up the data on Google beforehand" does not make it a prediction.

Yeah sorry, I didn't mean to say this directly contradicted anything you said. It just felt like a good reference that might be helpful to you or other people reading the thread. (In retrospect, I should have said that and/or linked it in response to the mention in your top-level comment instead.)

(Also, personally, I do care about how much effort and selection is required to find good retrodictions like this, so in my book "I didn't look up the data on Google beforehand" is relevant info. But it would have been way more impressive if someone had been able to pull that off in 1890, and I agree this shouldn't be confused for that.)

Re "it was incorrect by an order of magnitude": that seems fine to me. If we could get that sort of precision for predicting TAI, that would be awesome and outperform any other prediction method I know about.

Excellent post.

I want to highlight something that I missed on the first read but nagged me on the second read.

You define transformative AGI as:

1. Gross world product (GWP) exceeds 130% of its previous yearly peak value

2. World primary energy consumption exceeds 130% of its previous yearly peak value

3. Fewer than one billion biological humans remain alive on Earth

You predict when transformative AGI will arrive by building a model that predicts when we'll have enough compute to train an AGI.

But I feel like there's a giant missing link - what are the odds that training an AGI causes 1, 2, or 3?

It feels not only plausible but quite likely to me that the first AGI will be very expensive and very uneven (superhuman in some respects and subhuman in others). An expensive, uneven AGI may take years or decades to self-improve to the point that GWP or energy consumption rises by 30% in a year.

It feels like you are implicitly ascribing 100% probability to this key step.

This is one reason (among others) that I think your probabilities are wildly high. Looking forward to setting up our bet. :)

Thanks for the comment.

I think you're right that my post neglected to discuss these considerations. On the other hand, my bottom-line probability distribution at the end of the post deliberately has a long tail to take into account delays such as high cost, regulation, fine-tuning, safety evaluation, and so on. For these reasons, I don't think I'm being too aggressive.

Regarding the point about high cost in particular: it seems unlikely to me that TAI will have a prohibitively high inference cost. As you know, Joseph Carlsmith estimated brain FLOP with a central estimate of 10^15 FLOP/s. This is orders of magnitude higher than the cost of LLMs today, and it would still be comparable to prevailing human wages at current hardware prices. In addition, there are more considerations that push me towards TAI being cheap:

I'm aware that you have some counterarguments to these points in your own paper, but I haven't finished reading it yet.

I thought it would be useful to append my personal TAI timelines conditional on no coordinated delays, no substantial regulation, no great power wars or other large exogenous catastrophes, no global economic depression, and that my basic beliefs about reality are more-or-less correct (e.g. I'm not living in a simulation or experiencing a psychotic break).

As you can see, this results in substantially different timelines. I think these results might be more useful for gauging how close I think we are to developing the technologies for radically transforming our world. I've added these plots in a footnote.

Does this model take into consideration potential architectural advances of the size of transformers? (Or of other, non-architectural, advances of this size?)

Interesting and useful report, thanks for compiling it. I'm going to push back against the "Against very short timelines" section, staying mostly[1] within your framework.

I think that the most salient definition of TAI is your 3rd: "Fewer than one billion biological humans remain alive on Earth". This is because, as you say, the "downstream consequences from [T]AI, such as value misalignment and human disempowerment, are worth additional consideration, to put it mildly".

As Thomas Larsen and Lukas Finnveden mention in their comments, the automation of AI research by AI is what we need to look out for. So it's not inference on the scale of the global human labour market that we should be looking at when estimating global available compute, but rather training of the largest foundation model. If we take the total of 10^22 FLOP/s estimated to be available, and use that for training over ~4 months (10^7 seconds), we get a training run of 10^29 FLOP. That is 10,000x, or 4 OOM, greater than GPT-4, or approximately the difference between GPT-2 and GPT-4. This is within credible estimates[2] of what is needed for AGI.

Even if we adjust downward an OOM to factor in a single actor likely not being able to buy up more than 10% of the global supply of GPUs before pushing the price up beyond what they can afford (say $10B[3]), we still have a training run of 10^28 FLOP being possible this year[4]. At this level, how confident would you be that the resulting model can't be used to dramatically speed up AI/ML research to the point of making AGI imminent?

In fact, if we factor in algorithmic progress going in lockstep with compute increases (which as you say is plausible[5]), then we get another 3 OOMs for free[6]. So, we have 10^31 FLOP equivalent being basically available now to anyone rich enough.
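The arithmetic in the three paragraphs above can be sketched as follows. This is only a rough check, assuming 100% hardware utilization and taking GPT-4 as roughly 10^25 FLOP (the order of magnitude implied by "10,000x"). The function and variable names are illustrative and do not come from the post.

```python
import math

GLOBAL_FLOP_PER_S = 1e22   # total available compute, as estimated in the post
RUN_SECONDS = 1e7          # ~4 months of training
GPT4_FLOP = 1e25           # assumption: order-of-magnitude figure for GPT-4
ALGO_OOMS = 3              # the "3 OOMs for free" from algorithmic progress


def training_flop(flop_per_s, seconds, share=1.0):
    """Total FLOP from running `share` of the hardware for `seconds`."""
    return flop_per_s * share * seconds


# Whole global stock used for one run.
full_run = training_flop(GLOBAL_FLOP_PER_S, RUN_SECONDS)

# A single actor holding 10% of the global stock.
single_actor_run = training_flop(GLOBAL_FLOP_PER_S, RUN_SECONDS, share=0.1)

# Same run expressed in "effective" FLOP after the assumed algorithmic gain.
effective_run = single_actor_run * 10 ** ALGO_OOMS

for label, flop in [
    ("full global stock", full_run),
    ("10% share", single_actor_run),
    ("10% share + algorithms", effective_run),
]:
    ooms_over_gpt4 = math.log10(flop / GPT4_FLOP)
    print(f"{label}: ~10^{math.log10(flop):.0f} FLOP, "
          f"{ooms_over_gpt4:.0f} OOM above GPT-4")
```

Changing `share` or `RUN_SECONDS` shows how sensitive the headline figure is to the two assumptions the reply below disputes.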

Overall I think this means we are in fact in an emergency situation.[7]

The only departure really is the estimated FLOPs needed for TAI/AGI, which I take from the AGISF article linked in footnote 2 below.

See footnote [9] of the linked article.

Note the estimates of ~$50-$75B worth of compute being available[8]. And the fact that large trillion-dollar companies like Microsoft and Google, and large governments like the US and China can easily afford to spend $10B on this.

I will also note that Google's private TPU clusters are not included in the compute estimates, and they may well already be training Gemini with 10^26 to 10^27 FLOP using TPUv5s. Note further that Gemini is multimodal, and may include robotics, considered to be a potential bottleneck in the OP.

Epoch estimates 2.5x/year increase in algorithmic efficiency, exceeding the growth in compute efficiency. GPU price performance is given as 1.3x/year. But we can adjust down due to the narrow/general distinction you mention.

This would be more or less immediately unlocked by (use of) the powerful new AI trained with 10^28 FLOP.

In fact, the 10^28 estimate is 1 OOM worse (higher) than I estimated there ("100x the compute used for GPT-4" being available now). 10^31 is 4 OOM worse!

These estimates are conservative - upper bounds in terms of GPUs sold; the 1 OOM downward adjustment can also be used to offset this fact.

Thanks. My section on very short timelines focused mainly on deployment, rather than training. I actually think it's at least somewhat likely that ~AGI will be trained in the next 4 years, but I expect a lot of intermediate steps between the start of that training run and when we see >30% world GDP growth within one year.

My guess is that the pre-training stage of such a training run will take place over 3-9 months, followed by 6-12 months of fine-tuning and safety evaluation. Then, deployment itself likely adds another 1-3 years to the timeline even without regulation, as new hardware would need to be built or repurposed to support the massive amounts of inference required for explosive growth, and people need time to adjust their workflows to incorporate AIs. Even if an AGI training run started right now, I would still bet against it increasing world GDP growth to >30% in 2023, 2024, 2025, and 2026. The additional considerations of regulation and the lack of general-purpose robotics push my credence in very short timelines to very low levels, although my probability distribution begins to sharply increase after 2026 until hitting a peak in ~2032.

I think the 10^22 FLOP/s figure is really conservative. It made some strong assumptions like:

My guess is that a single actor (even the US Government) could collect at most 30% of existing hardware at the moment, and I would guess that anyone who did this would not immediately spend all of their hardware on a single training run. They would probably first try a more gradual scale-up to avoid wasting a lot of money on a botched ultra-expensive training run. Moreover, almost all commercial actors are going to want to allocate a large fraction -- probably the majority -- of their compute to inference simultaneously with training.

My central estimate of the amount of FLOP that any single actor can get their hands on right now is closer to 10^20 FLOP/s, and maybe 10^21 FLOP/s for the US government if for some reason we suddenly treated that as a global priority.

If I had to guess, I'd predict that the US government could do a 10^27 FLOP training run by the end of next year, with some chance of a 10^28 training run within the next 4 years. A 10^29 FLOP training run within 4 years doesn't seem plausible to me even with US government-levels of spending, simply because we need to greatly expand GPU production before that becomes a realistic option.
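To see why the size of the run matters so much here, one can invert the calculation and ask what sustained throughput each target would need. The sketch below assumes 30-day months, perfect utilization, and the 3-9 month pre-training window mentioned earlier in this reply. The helper name `required_flop_per_s` is hypothetical.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: 30-day months

# Throughput figures quoted in this thread (FLOP/s).
SINGLE_ACTOR = 1e20
US_GOVERNMENT = 1e21
GLOBAL_STOCK = 1e22


def required_flop_per_s(total_flop, months):
    """Sustained FLOP/s needed to finish `total_flop` in `months`."""
    return total_flop / (months * SECONDS_PER_MONTH)


for target in (1e27, 1e28, 1e29):
    fast = required_flop_per_s(target, months=3)
    slow = required_flop_per_s(target, months=9)
    print(f"{target:.0e} FLOP run: {slow:.1e} to {fast:.1e} FLOP/s")
```

Under these assumptions even a nine-month 10^29 FLOP run needs more throughput than the 10^21 FLOP/s guessed for the US government, while a nine-month 10^27 FLOP run fits under the 10^20 FLOP/s single-actor figure.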

I don't think that's realistic in a very short period of time. In the post, I suggested that algorithmic progress either comes from insights that are enabled by scale, or algorithmic experimentation. If progress were caused by algorithmic experimentation, then there's no mechanism by which it would scale with training runs independent of a simultaneous scale-up of experiments.

It also seems unlikely that we'd get 3 OOMs for free if progress is caused by insights that are enabled by scale. I suspect that if the US government were to dump 10^28 FLOP on a single training run in the next 4 years, they would use a tried-and-tested algorithm rather than trying something completely different to see if it works on a much greater scale than anything before. Like I said above, it seems more likely to me that actors will gradually scale up their compute budgets over the next few years to avoid wasting a lot of money on a single botched training run (though by "gradual" I mean something closer to 1 OOM per year than 0.2 OOMs per year).

Thanks. From an x-risk perspective, I don't think deployment or >30% GDP growth are that important or necessary to be concerned with. A 10^29 FLOP training run is an x-risk itself in terms of takeover risk from inner misalignment during training, fine-tuning or evals (lab leak risk).

In terms of physical takeover, repurposing via hacking is one vector a rogue AI may use.

Regulation - hopefully this will slow things down, but for the sake of argument (i.e. in order to argue for regulation) it's best to not incorporate it into this analysis. The worry is what could happen if we don't have urgent global regulation!

General-purpose robotics - what do you make of Google's recent progress (e.g. robots learning all the classic soccer tactics and skills from scratch)? And the possibility of Gemini being able to control robots? [mentioned in my footnote 4 above]

Yes, although as mentioned there are private sources of compute too (e.g. Google's TPUs). But I guess those don't amount to 10^22 FLOP.

What does the NSA have access to already?

Epoch says on your trends page: "4.2x/year growth rate in the training compute of milestone systems". Do you expect this trend to break in less than 4 years? AMD are bringing out new AI GPUs (MI300X) to compete with NVidia later this year. There might be a temporary shortage now, but is it really likely to last for years?

Following the Epoch trends, given 2x10^25 FLOP in 2022 (GPT-4), that's 2.6x10^28 in 2027, a factor of 4 away from 10^29. Factor in algorithmic improvements and that's 10^29 or even 10^30. To me it seems that 10^29 in 4 years (2027) is well within reach even for business as usual.
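The extrapolation above is a simple compound-growth calculation. A minimal sketch, assuming the 4.2x/year trend holds unchanged for five years from the 2x10^25 FLOP starting point (the function name is illustrative):

```python
def extrapolate(base_flop, growth_per_year, years):
    """Compound a training-compute figure forward at a fixed yearly factor."""
    return base_flop * growth_per_year ** years


BASE_FLOP = 2e25        # GPT-4, as quoted in the comment
GROWTH = 4.2            # Epoch's yearly growth factor for milestone systems
TARGET_FLOP = 1e29

projected_2027 = extrapolate(BASE_FLOP, GROWTH, years=2027 - 2022)
gap_factor = TARGET_FLOP / projected_2027

print(f"projected 2027 run: {projected_2027:.2e} FLOP")
print(f"remaining factor to 10^29: {gap_factor:.1f}x")
```

The result depends entirely on the trend continuing, which is the point under dispute in the reply further down.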

As I say in my footnote 6 above: algorithmic progress would be more or less immediately unlocked by (use of) the powerful new AI trained with 10^28 FLOP.

1 OOM per year (as per Epoch trends, inc. algorithmic improvement) is 10^29 in 2027. I think another factor to consider is that given we are within grasping distance of AGI, people (who are naive to the x-risk) will be throwing everything they've got at crossing the line as fast as they can, so we should maybe expect an acceleration because of this.

I'm not convinced that AI lab leaks are significant sources of x-risks, but I can understand your frustration with my predictions if you disagree with that. In the post, I mentioned that I disagree with hard takeoff models of AI, which might explain our disagreement.

I'm not sure about that. It seems like you might still want to factor in the effects of regulation into your analysis even if you're arguing for regulation. But even so, I'm not trying to make an argument for regulation in this post. I'm just trying to predict the future.

I think this result is very interesting, but my impression is that the result is generally in line with the slow progress we've seen over the last 10 years. I'll be more impressed when I start seeing results that work well in a diverse array of settings, across multiple different types of tasks, with no guarantees about the environment, at speeds comparable to human workers, and with high reliability. I currently don't expect results like that until the end of the 2020s.

I wouldn't be very surprised if the 4.2x/year trend continued for another 4 years, although I expect it to slow down some time before 2030, especially for the largest training run. If it became obvious that the trend was not slowing down, I would very likely update towards shorter timelines. Indeed, I believe the trend from 2010-2015 was a bit faster than from 2015-2022, and from 2017-2023 the largest training run (according to Epoch data) went from ~3*10^23 FLOP to ~2*10^25 FLOP, which was only an increase of about 0.33 OOMs per year.
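The two growth rates being compared can be put on the same scale by converting both to OOMs per year. This is a rough sketch: the year span is an assumption, and taking 2017-2023 as six full years gives a figure slightly below the ~0.33 quoted above, which corresponds to a somewhat shorter span.

```python
import math


def ooms_per_year(start_flop, end_flop, years):
    """Average growth in orders of magnitude per year."""
    return math.log10(end_flop / start_flop) / years


# Largest training run, 2017 to 2023 (figures from the comment).
largest_run_rate = ooms_per_year(3e23, 2e25, years=2023 - 2017)

# Epoch's 4.2x/year milestone-system trend, in the same units.
milestone_rate = math.log10(4.2)

print(f"largest run: {largest_run_rate:.2f} OOM/year")
print(f"milestone trend: {milestone_rate:.2f} OOM/year")
```

Either way, the largest-run series grows at roughly half the rate of the milestone-system trend.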

But I was talking about physical FLOP in the comment above. My median for the amount of FLOP required to train TAI is closer to 10^32 FLOP using 2023 algorithms, which was defined in the post in a specific way. Given this median, I agree there is a small chance (perhaps 15%) that TAI can be trained at 10^29 2023 FLOP, which means I think there's a non-negligible chance that TAI could be trained in 2027. However, I expect the actual explosive growth part to happen at least a year later, for reasons I outlined above.

Let's see what happens with the Gemini release.

Overall, I think Epoch should be taking more of a security mindset approach with regard to x-risk in its projections, i.e. not just being conservative in a classic academic prestige-seeking sense, but looking at ways that uncontrollable AGI could be built soon (or at least sooner) that break past trends and safe assumptions, and raising the alarm where appropriate.

I can only speak for myself here, not Epoch, but I don't believe in using the security mindset when making predictions. I also dispute the suggestion that I'm trying to be conservative in a classic academic prestige-seeking sense. My predictions are simply based on what I think is actually true, to the best of my abilities.

Fair enough. I do say "projection" rather than prediction though - meaning that I think a range of possible futures should be looked at (keeping in mind a goal of working to minimise existential risk), rather than just trying to predict what's most likely to happen or "on the mainline".

I always feel kind of uneasy about how the term "algorithmic progress" is used. If you find an algorithm with better asymptotics, then apparent progress depends explicitly on the problem size. MILP seems like a nice benchmark because it's NP-hard in general, but then again most(?) improvements are exploiting structure of special classes of problems. Is that general progress?

I definitely agree with the last sentence, but I'm still not sure how to think about this. I have the impression that, typically, some generalizable method makes a problem feasible, at which point focused attention on applying related methods to that problem drives solving it towards being economical. I suppose for this framework we'd still care more about the generalizable methods, because those trigger the starting gun for each automatable task?

I don't understand where the $75 billion is coming from. It looks like you are dividing the $15 billion by the 21.3%, but that doesn't make sense as the 21.3% is revenue growth. Surely market share needs to come into it somewhere?

The $75 billion figure is an upper bound for how many AI GPUs could have been sold over the last ~10 years (since the start of the deep learning revolution), adjusted by hardware efficiency. There are multiple ways to estimate how many AI GPUs have been sold over the last 10 years, but I think any reasonable estimate of this figure will give you something lower than $75 billion, making the figure conservative.

To give a little bit more rigor, let's take the number of $4.28 billion from NVIDIA's most recent quarterly data center revenue and assume that this revenue number has grown at 4.95% every quarter for the last 10 years, to match the 21.3% yearly growth number in the post (note: this is conservative, since the large majority of the data center revenue has been in the last few years). For hardware progress, let's also assume that FLOP/$ has been increasing at a rate of 7% per quarter, which would mean a doubling time of 2.5 years, which is roughly equal to the trend before hardware acceleration for deep learning.

Combining these facts, we can start with $4.28B / (1.0495 + 0.07)^40 = $0.0468B as an estimate of NVIDIA's AI quarterly revenue 10 years ago adjusted for hardware efficiency, and sum over the next 40 quarters of growth to get $56.37 billion. NVIDIA is responsible for about 80% of the market share, at least according to most sources, so let's just say that the true amount is $56.36B × (1/0.8) = $70.45B.
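The individual steps of this estimate can be checked directly. The sketch below reads the starting-value expression as $4.28B divided by (1.0495 + 0.07)^40 and takes the 40-quarter sum from the comment as given rather than recomputing it. Variable names are illustrative.

```python
import math

LATEST_QUARTER_REVENUE_B = 4.28   # NVIDIA data center revenue, $B
YEARLY_GROWTH = 1.213             # 21.3% yearly revenue growth
HARDWARE_GAIN_PER_QUARTER = 0.07  # FLOP/$ improvement per quarter
QUARTERS = 40                     # 10 years
SUM_FROM_COMMENT_B = 56.36        # taken as given, not recomputed here
NVIDIA_MARKET_SHARE = 0.8

# 21.3% per year expressed as a quarterly growth factor.
quarterly_growth = YEARLY_GROWTH ** 0.25

# Doubling time implied by a 7% quarterly gain in FLOP/$.
doubling_years = math.log(2) / math.log(1 + HARDWARE_GAIN_PER_QUARTER) / 4

# Efficiency-adjusted quarterly revenue 10 years ago.
start_revenue_b = LATEST_QUARTER_REVENUE_B / (
    1.0495 + HARDWARE_GAIN_PER_QUARTER
) ** QUARTERS

# Scale NVIDIA's total up to the whole market.
market_total_b = SUM_FROM_COMMENT_B / NVIDIA_MARKET_SHARE

print(f"quarterly growth factor: {quarterly_growth:.4f}")
print(f"FLOP/$ doubling time: {doubling_years:.2f} years")
print(f"starting revenue: ${start_revenue_b:.4f}B")
print(f"market total: ${market_total_b:.2f}B")
```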