I like Open Phil's worldview diversification. But I don't think their current roster of worldviews does a good job of justifying their current practice. In this post, I'll suggest a reconceptualization that may seem radical in theory but is conservative in practice. Something along these lines strikes me as necessary to justify giving substantial support to paradigmatic Global Health & Development charities in the face of competition from both Longtermist/x-risk and Animal Welfare causes.

Current Orthodoxy

I take it that Open Philanthropy's current "cause buckets" or candidate worldviews are typically conceived of as follows:

- neartermist - incl. animal welfare

- neartermist - human-only

- longtermism / x-risk

We're told that how to weigh these cause areas against each other "hinge[s] on very debatable, uncertain questions." (True enough!) But my impression is that EAs often take the relevant questions to be something like, should we be speciesist? and should we only care about present beings? Neither of which strikes me as especially uncertain (though I know others disagree).

The Problem

I worry that the "human-only neartermist" bucket lacks adequate philosophical foundations. I think Global Health & Development charities are great and worth supporting (not just for speciesist presentists), so I hope to suggest a firmer grounding. Here's a rough attempt to capture my guiding thought in one paragraph:

Insofar as the GHD bucket is really motivated by something like sticking close to common sense, "neartermism" turns out to be the wrong label for this. Neartermism may mandate prioritizing aggregate shrimp over poor people; common sense certainly does not. When the two come apart, we should give more weight to the possibility that (as-yet-unidentified) good principles support the common-sense worldview. So we should be especially cautious of completely dismissing commonsense priorities in a worldview-diversified portfolio (even as we give significant weight and support to a range of theoretically well-supported counterintuitive cause areas).

A couple of more concrete intuitions that guide my thinking here: (1) fetal anesthesia as a cause area intuitively belongs with 'animal welfare' rather than 'global health & development', even though fetuses are human. (2) It's a mistake to conceive of global health & development as purely neartermist: the strongest case for it stems from positive, reliable flow-through effects.

A Proposed Solution

I suggest that we instead conceive of (1) Animal Welfare, (2) Global Health & Development, and (3) Longtermist / x-risk causes as respectively justified by the following three "cause buckets":

- Pure suffering reduction

- Reliable global capacity growth

- High-impact long-shots

In terms of the underlying worldview differences, I think the key questions are something like:

(i) How confident should we be in our explicit expected value estimates? How strongly should we discount highly speculative endeavors, relative to "commonsense" do-gooding?

(ii) How does the total (intrinsic + instrumental) value of improving human lives & capacities compare to the total (intrinsic) value of pure suffering reduction?

[Aside: I think it's much more reasonable to be uncertain about these (largely empirical) questions than about the (largely moral) questions that underpin the orthodox breakdown of EA worldviews.]

Hopefully it's clear how these play out: greater confidence in EEV lends itself to supporting longshots to reduce x-risk or otherwise seek to improve the long-term future in a highly targeted, deliberate way. Less confidence here may support more generic methods of global capacity-building, such as improving health and (were there any promising interventions in this area) education. Only if you're both dubious of longshots and doubt that there's all that much instrumental value to human lives do you end up seeing "pure suffering reduction" as the top priority.[1] But insofar as you're open to pure suffering reduction, there's no grounds for being speciesist about it.

Implications

- Global health & development is actually philosophically defensible, and shouldn't necessarily be swamped by either x-risk reduction or animal welfare. But this requires recognizing that the case for GHD depends on a strong prior on which positive "flow-through" effects are assumed to strongly correlate with traditional neartermist metrics like QALYs. Research into the prospects for improved tracking and prediction of potential flow-through effects should be a priority.

- In cases where the correlation transparently breaks down (e.g. elder care, end-of-life care, fetal anesthesia, dream hedonic quality, wireheading, etc.), humanitarian causes should instead need to meet the higher bar for pure suffering reduction - they shouldn't be prioritized above animal welfare out of pure speciesism.[2]

- If we can identify other broad, reliable means to boosting global capacity (maybe fertility / population growth?),[3] then these should trade off against Global Health & Development (rather than against x-risk reduction or other longshots).

[1] It's sometimes suggested that an animal welfare focus also has the potential for positive flow-through effects, primarily through improving human values (maybe especially important if AI ends up implementing a "coherent extrapolation" of human values). I think that's an interesting idea, but it sounds much more speculative to me than the obvious sort of capacity-building you get from having an extra healthy worker in the world.

[2] This involves some revisions from ordinary moral assumptions, but I think a healthy balance: neither the unreflective dogmatism of the ineffective altruist, nor the extremism of the "redirect all GHD funding to animals" crowd.

[3] Other possibilities may include scientific research, economic growth, improving institutional decision-making, etc. It's not clear exactly where to draw the line for what counts as "speculative" as opposed to "reliably" good, so I could see a case for a further split between "moderate" vs "extreme" speculativeness. (Pandemic preparedness seems a solid intermediate cause area, for example - far more robust, but lower EV, than AI risk work.)

It doesn't seem conservative in practice? Like Vasco, I'd be surprised if aiming for reliable global capacity growth would look like the current GHD portfolio. For example:

I'd guess most proponents of GHD would find (1) and (2) particularly bad.

I also think it misses the worldview bucket that's the main reason why many people fund global health and (some aspects of) development: intrinsic value attached to saving [human] lives. Potential positive flowthrough effects are a bonus on top of that, in most cases.

From an EA-ish hedonic utilitarianism perspective this dates right back to Singer's essay about saving a drowning child. Taking that thought experiment in a different direction, I don't think many people - EA or otherwise - would conclude that the decision on whether to save the child or not should primarily be a tradeoff between the future capacity of the child and the amount of aquatic suffering a corpse to feed upon could alleviate.

I think they'd say the imperative to save the child's life wasn't in danger of being swamped by the welfare impact on a very large number of aquatic animals or contingent on that child's future impact, and I suspect as prominent an anti-speciesist as Singer would agree.

(Placing a significantly lower or zero weight on the estimated suffering experienced by a battery chicken or farmed shrimp is a sufficient but not necessary condition to favour lifesaving over animal suffering reduction campaigns. Though personally I do, and actually think the more compelling ethical arguments for prioritising farm animal welfare are deontological ones about human obligations to stop causing suffering)

I didn't say your proposal was "bad", I said it wasn't "conservative".

My point is just that, if GHD were to reorient around "reliable global capacity growth", it would look very different, to the point where I think your proposal is better described as "stop GHD work, and instead do reliable global capacity growth work", rather than the current framing of "let's reconceptualize the existing bucket of work".

Thanks for these ideas, this is an interesting perspective.

I'm a little uncertain about one of your baseline assumptions here.

"We're told that how to weigh these cause areas against each other "hinge[s] on very debatable, uncertain questions." (True enough!) But my impression is that EAs often take the relevant questions to be something like, should we be speciesist? and should we only care about present beings? Neither of which strikes me as especially uncertain (though I know others disagree)."

I think I disagree with this framing and/or perhaps there might be a bit of unintentional strawmanning here? Can you point out the EAs or EA arguments (perhaps on the forum) that distinguish between the strength of these worldviews that are explicitly speciesist? Or only care about present beings?

Personally I'm focused largely on GHD (while deeply respecting other worldviews) not because I'm speciesist, but because I currently think the experience of being human might be many orders of magnitude more valuable than that of any other animal (I reject hedonism), and also that even assuming hedonism I'm not yet convinced by Rethink Priorities' amazing research which places the moral weights of...

Hi Nick, I'm reacting especially to the influential post, Open Phil Should Allocate Most Neartermist Funding to Animal Welfare, which seems to me to frame the issues in the ways I describe here as "orthodox". (But fair point that many supporters of GHD would reject that framing! I'm with you on that; I'm just suggesting that we need to do a better job of elucidating an alternative framing of the crucial questions.)

Thanks, yeah, this could be another crucial question: whether there are distinctive goods, intrinsic to (typical) human lives, that are just vastly more important than relieving suffering. I have some sympathy for this view, too. But it faces the challenge that most people would prioritize reducing their own suffering over gaining more "distinctive goods" (they wouldn't want to extend their life further if half the time would be spent in terrible suffering, for example). So either you have to claim that most people are making a prudential error here (and really they should care less about their own suffering, relative to distinctive huma...

Nice post, Richard!

I think it would be a little bit of a surprising and suspicious convergence if the best interventions to improve human health (e.g. GiveWell's top charities) were also the best to reliably improve global capacity. Some areas which look pretty good to me on this worldview:

Maybe this is a nitpick, but I wonder whether it would be better to say "global human health and development/wellbeing" instead of "global health and development/wellbeing" whenever animals are not being considered. I estimated that the scale of the annual welfare of all farmed animals is 4.64 times that of all humans, and that of all wild animals 50.8 M times that of all humans.

Fwiw, I think Greg's essay is one of the most overweighted in forum history (as in, not necessarily overrated, but people put way too much weight on its argument). It's a highly speculative argument with no real-world grounding, and in practice we know of many well-evidenced socially beneficial causes that do seem convergently beneficial in other areas: one of the best climate change charities seems to be among the best air pollution charities; deworming seems to be beneficial for education (even if the magnitude might have been overstated); cultivated meat could be a major factor in preventing climate change (subject to it being created by non-fossil-fuel-powered processes).

Each of these side effects has asterisks by it, and yet I find it highly plausible that an asterisked side-effect of a well-evidenced cause could actually turn out to be a much better intervention than essentially evidence-free work done on the very long term - especially when the latter is ...

I don't think these examples illustrate that "bewaring of suspicious convergence" is wrong.

For the two examples I can evaluate (the climate ones), there are co-benefits, but there isn't full convergence with regards to optimality.

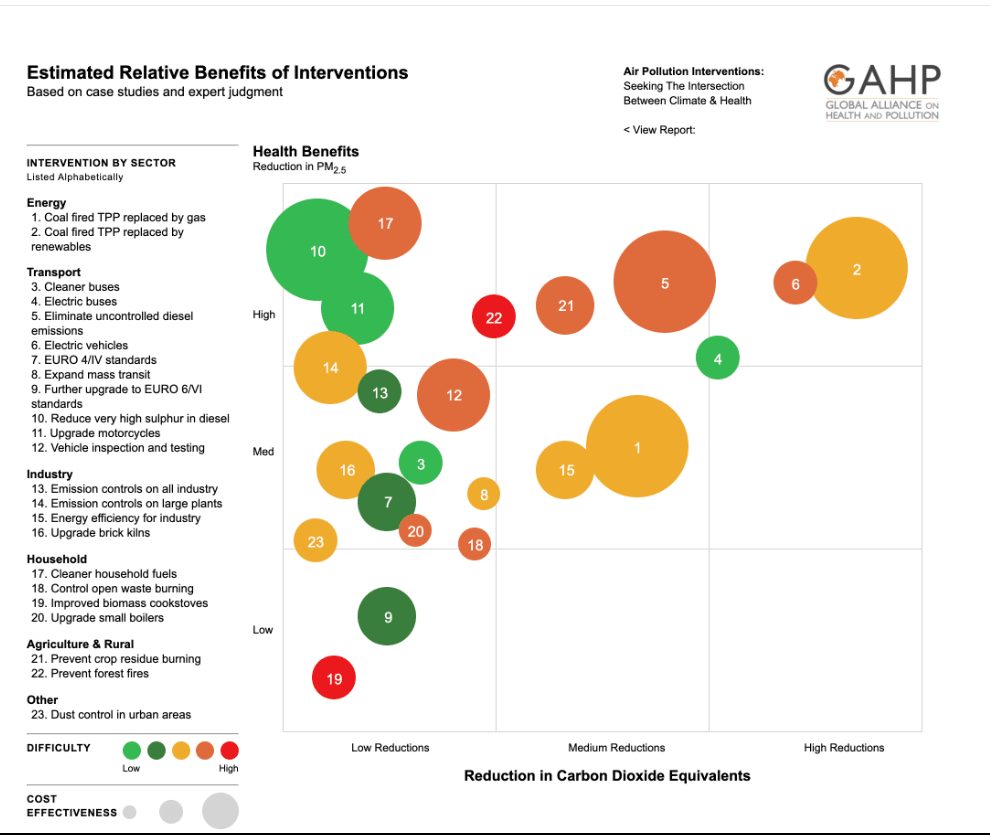

On air pollution, the most effective intervention for climate are not the most effective intervention for air pollution even though decarbonization is good for both.

See e.g. here (where the best intervention for air pollution would be one that has low climate benefits, reducing sulfur in diesel; and I think if that chart were fully scope-sensitive and not made with the intention to showcase co-benefits, the distinctions would probably be larger, e.g. moving from coal to gas is a 15x improvement on air pollution while only a 2x on emissions):

And the reason is that different target metrics (carbon emissions, reduced air pollution mortality) are correlated, but do not map onto each other perfectly and optimizing for one does not maximize the other.
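As a minimal sketch of this point, the Python snippet below uses entirely hypothetical scores (invented for illustration, not taken from the chart or any real analysis) for four made-up interventions. Both metrics broadly rise together across the set, yet the top intervention on one metric is not the top intervention on the other.

```python
# Hypothetical, illustrative scores only: (climate_benefit, air_pollution_benefit).
# The intervention names and numbers are assumptions, not real estimates.
interventions = {
    "intervention_a": (10.0, 6.0),
    "intervention_b": (7.0, 9.0),
    "intervention_c": (3.0, 2.0),
    "intervention_d": (1.0, 1.0),
}


def best_by(metric_index):
    """Return the name of the intervention scoring highest on one metric."""
    return max(interventions, key=lambda name: interventions[name][metric_index])


best_for_climate = best_by(0)  # optimize the climate metric only
best_for_air = best_by(1)      # optimize the air pollution metric only

print("Best for climate:", best_for_climate)
print("Best for air pollution:", best_for_air)
```

With these assumed numbers, the two optimizations pick different interventions even though anything that scores well on one metric also tends to score reasonably on the other, which is the sense in which co-benefits fall short of full convergence.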

Same thing with alternative proteins, where a strategy focused on reducing animal suffering would likely (depending on moral weights) prioritize APs for chicken, whereas a climate-focused strategy would c...

To clarify, how are we defining "capacity" here? Even assuming economic productivity has something to do with it, it doesn't follow that saving a life in a high-income country increases it. For example, retired and disabled persons generally don't contribute much economic productivity. At the bottom of the economic spectrum in developed countries, many people outside of those categories consume significantly more economic productivity than they produce. If one is going to go down this path (which I do not myself support!), I think one has to bite the bullet and emphasize saving the lives of economically productive members of the high-income country (or kids/young adults who one expects to become economically productive).

I agree that the "longtermism"/"near-termism" is a bad description of the true splits in EA. However I think that your proposed replacements could end up imposing one worldview on a movement that is usually a broad tent.

You might not think speciesism is justified, but there are plenty of philosophers who disagree. If someone cares about saving human lives, without caring overmuch if they go on to be productive, should they be shunned from the movement?

I think the advantage of a label like "Global health and development" is that it doesn't require a super specific worldview: you make your own assumptions about what you value, then you can decide for yourself whether GHD works as a cause area for you, based on the evidence presented.

If I were picking categories, I'd simply be more specific with categories, and then further subdivide them into "speculative" and "grounded" based on their level of weirdness.

Grounded GHD would be malaria nets, speculative GHD would be, like, charter cities or something.

Grounded non-human suffering reduction would be reducing factory farming, speculative non-human suffering reduction would look at shrimp suffering.

Grounded X-risk/catastrophe reduction would be pandemic prevention, speculative x-risk/catastrophe reduction would be malevolent hyper-powered AI attacks.

To clarify: I'm definitely not recommending "shunning" anyone. I agree it makes perfect sense to continue to refer to particular cause areas (e.g. "global health & development") by their descriptive names, and anyone may choose to support them for whatever reasons.

I'm specifically addressing the question of how Open Philanthropy (or other big funders) should think about "Worldview Diversification" for purposes of having separate funding "buckets" for different clusters of EA cause areas.

This task does require taking some sort of stand on what "worldviews" are sufficiently warranted to be worth funding, with real money that could have otherwise been used elsewhere.

Especially for a dominant funder like OP, I think there is great value in legibly communicating its honest beliefs. Based on what it has been funding in GH&D, at least historically, it places great value on saving lives as ~an end unto itself, not as a means of improving long-term human capacity. My understanding is that its usual evaluation metrics in GH&D have reflected that (and historic heavy dependence on GiveWell is clearly based on that). Coming up with some sort of alternative rationale that isn't the actual rationale doesn't feel honest, transparent, or . . . well, open.

In the end, Open Phil recommends grants out of Dustin and Cari's large bucket of money. If their donors want to spend X% on saving human lives, it isn't OP's obligation to backsolve a philosophical rationale for that preference.

I'm suggesting that they should change their honest beliefs. They're at liberty to burn their money too, if they want. But the rest of us are free to try to convince them that they could do better. This is my attempt.

(Minor edits.)

Some possibilities to consider in >100 years that could undermine the reliability of any long-term positive effects from helping the global poor:

- Marginal human labour will (quite probably, but not with overwhelming probability?) have no, very little or even negative productive value due to work being fully or nearly fully automated. The bottlenecks to production will just be bottlenecks for automation, e.g. compute, natural resources, energy, technology, physical limits and the preferences of agents that decide how automation is used. Humans will compete over the little share of useful work they can do, and may even push to do more work that will in fact be counterproductive and better off automated. What we do today doesn't really affect long-run productive capacity with substantial probability (or may even be negative for it). This may be especially true for people and their descendants who are less likely to advance the technological frontier in the next 100 years, like the global poor.

- Biological humans (and our largely biological or more biological-like descendants) may compete over resources with artificial moral patients who can generate personal welfare valu...

Thanks for this! I agree that apart from speciesism, there isn't a good reason to prioritize GHD over animal welfare if targeting suffering reduction (or just directly helping others).

Would you mind expanding further on the goals of the "reliable global capacity growth" cause bucket? It seems to me that several traditionally longtermist / uncategorized cause areas could fit into this bucket, such as:

Under your categorization, would these be included in GHD?

It also seems that some traditionally GHD charities would fall into the "suffering reduction" bucket, since their impact is focused on directly helping others:

Under your categorization, would these be included in animal welfare?

Also, would you recommend that GHD charity evaluators more explicitly change their optimization target from metrics which measure directly helping others / suffering reduction (QALYs, WELLBYs) to "global capacity growth" metrics? What might these metrics look like?

As a quick comment, I think something else that distinguishes GHD and animal welfare is that the global non-EA GHD community seems to me the most mature and "EA-like" of any of the non-EA analogues in other fields. It's probably the one that requires the most modest departure from conventional wisdom to justify it.

Thanks for this! I would be curious to know what you think about the tension there seems to be between allocating resources to Global health & development (or even prioritizing it over Animal Welfare) and rejecting speciesism given The Meat eater problem.

Quoting this paragraph and bolding the bit that I want to discuss:

I think the intuition here is that sometimes we should trust the output of common sense, even if the process to get there seems wrong. In general this is a pretty good and often underappreciated heuristic, but I think that's really only because in many cases the output of common sense will have been subject to some kind of alternative pressure towards accuracy, as in the seemingly-excessive traditional process to prepare manioc that in fact destroyed cyanide in it, despite the...

This just depends on what you think those EEVs are. Long-serving EAs tend to lean towards thinking that targeted efforts towards the far future have higher payoff, but that also has a strong selection effect. I know many smart people with totalising consequentialist sympathies who are sceptical enough of the far future that they prefer to donate to GHD causes. None of them are at all active in the EA movement, and I don't think that's coincidence.

Why do you think that the worldviews need strong philosophical justification? It seems like this may leave out the vast majority of worldviews.

The massive error bars around how animal well-being/suffering compares to that of humans mean it's an unreliable approach to reducing suffering.

Global development is a prerequisite for a lot of animal welfare work. People struggling to survive don't have time to care about the wellbeing of their food.

Another relevant post not already shared here, especially for life-saving interventions: Assessing the case for population growth as a priority.

I have a piece I'm writing with some similar notes to this, may I send it to you when I'm done?

Relatedly, I have just published a post on Helping animals or saving human lives in high income countries seems better than saving human lives in low income countries?.

Love the post, don't love the names given.

I think "capacity growth" is a bit too vague, something like "tractable, common-sense global interventions" seems better.

I also think "moonshots" is a bit derogatory, something like "speculative, high-uncertainty causes" seems better.

Suppose someone were to convince you that the interventions GiveWell pursues are not the best way to improve "global capacity", and that a better way would be to pursue more controversial/speculative causes like population growth or long-run economic growth or whatever. I just don't see EA reorienting GHW-worldview spending toward controversial causes like this, ever. The controversial stuff will always have to compete with animal welfare and AI x-risk. If your worldview categorization does not always make the GHW worldview center on non-controversial stuff, it is practically meaningless. This is why I was so drawn to this post - I think you correctly point out that "improving the lives of current humans" is not really what GHW is about!

The non-controversial stuff doesn't have to be anti-malaria efforts or anything that GiveWell currently pursues; I agree with you there that we shouldn't dogmatically accept these current causes. But you should really be defining your GHW worldview such that it always centers on non-controversial stuff. Is this kind of arbitrary? You bet! As you state in this post, there are at least some reasons to stay away from weird causes, so it might not be totally arbitrary. But honestly it doesn't matter whether it's arbitrary or not; some donors are just really uncomfortable about pursuing philosophical weirdness, and GHW should be for them.

This is an interesting and thoughtful post.

One query: to me, the choice to label GHD as "reliable human capacity growth" conflicts with the idea you state of GHD sticking to more common-sense/empirically-grounded ideas of doing good.

Isn't the capacity growth argument presuming a belief in the importance of long-run effects/longtermism? A common-sense view on this to me feels closer to a time discounting argument (the future is too uncertain so we help people as best we can within a timeframe that we can have some reasonable level of confidence that we affect).

It seems like about half the country disagrees with that intuition?

I'd be curious if you have any thoughts on how your proposed refactoring from [neartermist human-only / neartermist incl. AW / longtermist] -> [pure suffering reduction / reliable global capacity growth / moonshots] might change, in broad strokes (i.e. direction & OOM change), current

Or maybe these are not the right questions to ask / I'm looking at the wrong things, since you seem to be mainly aiming ...

I like the general framing & your identification of the need for more defensibility in these definitions. As someone more inclined toward GHD, I don't do so because I see it as a more likely way of ensuring flow-through value of future lives, but I still do place moral weight on future lives.

My take tends more toward (with the requisite uncertainty) not focusing on longtermist causes because I think they might be completely intractable, and as such we're better off focusing on suffering reduction in the present and the near-term future (~100 year...

I really like this post, but I think the concept of buckets is a mistake. It implies that a cause has a discrete impact and "scores zero" on the other 2 dimensions, while in reality some causes might do well on 2 dimensions (or at least non-zero).

I also think over time, the community has moved more towards doing vs. donating, which has brought in a lot of practical constraints. For individuals this could be:

And also for the community:

- "which causes can we convince outsiders to...

Would you be up for spelling out the problem of "lacks adequate philosophical foundations"?

What criteria need to be satisfied for the foundations to be adequate, to your mind?

Do they e.g. include consequentialism and a strong form of impartiality?

(Minor edits.)

To help carve out the space where GiveWell recommendations could fit, considering flow-through effects:

Assuming some kind of total utilitarianism or similar, if you don't give far greater intrinsic value to the interests of humans over nonhuman animals in practice, you'd need to consider flow-through effects over multiple centuries or interactions with upcoming technologies (and speculate about those) to make much difference to the value of GiveWell recommendations and for them to beat animal welfare interventions. For the average life we could save soon, ...