[EDIT (2024-03-15): I changed the original title from "There are no massive differences in impact between individuals" to "Critique of the notion that impact follows a power-law distribution". More explanation in this footnote[1].]

[EDIT (2024-03-27): Since publishing this essay, I have somewhat updated my views on the topic. I continue to endorse the majority of what is written below, though I would no longer phrase some of the conclusions as strongly/decisively as before. I have decided to leave the text largely as originally published, except for a modification in the Summary (clearly marked). For a more detailed account of my belief updates, alongside links to comments and external resources that prompted and informed my reconsiderations, see the Appendix.]

"It is very easy to overestimate the importance of our own achievements in comparison with what we owe others."

- attributed to Dietrich Bonhoeffer, quoted in Tomasik 2014(2017)

Summary

- In this essay, I argue that it is not always useful to think about social impact from an individualist standpoint.

- [This bullet point was edited on 2024-03-27: the original text is in strikethrough, followed by the version I now endorse; see Appendix for more details]

~~I claim that there are no massive differences in impact between individual interventions, individual organisations, and individual people, because impact is dispersed across~~ I argue that the claim that there are massive differences in impact between individual interventions, individual organisations, and individual people is complicated and possibly problematic, because impact is dispersed across:

- all the actors that contribute to the outcomes before any individual action is taken,

- all the actors that contribute to the outcomes after any individual action is taken, and

- all the actors that shape the taking of any individual action in the first place.

- I raise some concerns around adverse effects of thinking about impact as an attribute that follows a power law distribution and that can be apportioned to individual agents:

- Such a narrative discourages actions and strategies that I consider highly important, including efforts to maintain and strengthen healthy communities;

- Such a narrative may encourage disregard for common-sense virtues and moral rules;

- Such a narrative may negatively affect attitudes and behaviours among elites (who aim for extremely high impact) as well as common people (who see no path to having any meaningful impact); and

- Such a narrative may disrupt basic notions of moral equality and encourage a differential valuation of human lives in accordance with the impact potential an individual supposedly holds.

- I then reflect on whether it is sensible and useful to apportion impact to individual people and interventions in the first place, and I offer a few alternative perspectives to guide our efforts to do good effectively.

- At the beginning, I give some background on the origin of this essay, and at the end, I list a number of caveats, disclaimers, and uncertainties to paint a fuller picture of my own thinking on the topic. I highly welcome any feedback in response to the essay, and would also be happy to have a longer conversation about any or all of the ideas presented - please do not hesitate to reach out in case you would like to engage in greater depth than a mere Forum comment :)!

Context

I have developed and refined the ideas in the following paragraphs since at least May 2022 - my first notes specifically on the topic were taken after I listened to Will MacAskill talk about “high-impact opportunities” at the opening session of my first EA Global, London 2022. My thoughts on the topic were mainly sparked by interactions with the effective altruism community (EA), either in direct conversations or through things that I read and listened to over the last few years. However, I have encountered these arguments outside EA as well, among activists, political strategists, and “regular folks” (colleagues, friends, family). My journal contains many scattered notes attesting to my discomfort and frustration with the (in my view, misguided) belief that a few individuals can (and should) have massive amounts of influence and impact by acting strategically. This text is an attempt to pull these notes together, giving a clear structure to the opposition I feel and turning it into a coherent argument that can be shared with and critiqued by others.

Impact follows a power law distribution: The argument as I understand it

“[T]he cost-effectiveness distributions of the most effective interventions and policies in education, health and climate change, are close to power-laws [...] the top intervention is 2 or almost 3 orders of magnitude (i.e. a factor 100 and almost 1000) more cost-effective than the least effective intervention.” - Stijn, 2021

“If you’re trying to tackle a problem, it’s vital to look for the very best solutions in an area, rather than those that are just ‘good.’ This contrasts with the common attitude that what matters is trying to ‘make a difference,’ or that ‘every little bit helps.’ If some solutions achieve 100 times more per year of effort, then it really matters that we try to find those that make the most difference to the problem.” - Todd, 2021(2023)

My understanding of the narrative that I disagree with and seek to tackle in this essay is as follows. First, the basic case for the impact maximisation imperative/goal (which I am broadly sympathetic to, though with some reservations on points e-g):

- a) There are many problems in the world - poverty and ill-health, mistreatment of non-humans, war and violence, natural catastrophes, and risks related to the climate crisis, pandemics, weapons of mass destruction, and rapid technological advancements, to name just a few.

- b) It would be better (by the standards of a wide range of different ethical beliefs and worldviews[2]) if there were fewer problems, or less severe problems.

- c) There are things people like us could do to reduce the severity of many of these problems,

- d) but we cannot solve all of them immediately.

- e) If possible, it is better to focus our energies on the actions that will lead to the greatest reduction in problem severity across the range of problems we care about.

- f) We have the tools and information to roughly estimate the severity of different problems, the success of past actions in addressing these problems, and the success chances of future actions.

- g) Conclusion: We should use tools and information at our disposal to figure out which actions, or sets of actions / strategies, are most promising when it comes to reducing a large share of the problems our world faces, and we should then take those actions.

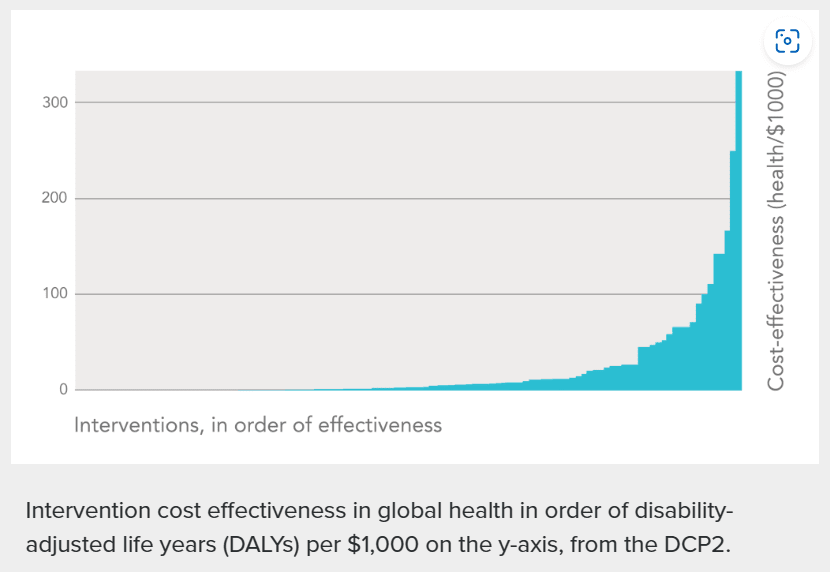

In addition to this, people in effective altruism, or in the impact maximisation space more broadly, have latched on to the idea that there are huge potential differences in the value (success in reducing global problem severity) of different approaches to doing good. This is often described as a “power law distribution of impact”, illustrated with something like Figure 1, and driven home by numerical claims such as “the top intervention is 2 or almost 3 orders of magnitude (i.e. a factor 100 and almost 1000) more cost-effective than the least effective intervention” (Stijn, 2021).

Figure 1: Screenshot taken from Todd, 2021 (2023), “The Best Solutions Are Far More Effective Than Others”, 80,000 Hours.

As far as I can tell, the “power law claim” first emerged explicitly in the field of global health and development, based on a study by Ord (2013). It has been re-examined and somewhat moderated (Todd 2023), but the core message - some interventions are orders of magnitude more promising than others - was retained and even extended to other domains: from education, social programmes, and CO2 emissions reduction policies to efforts to change meat consumption habits or voter turnout (Todd 2021(2023)). Judging from anecdotal/observational evidence, the idea also seems common when people talk about approaches for reducing existential and global catastrophic risks, whether they are focusing on artificial intelligence, bioengineering and bioterrorism, nuclear weapons, or other related risks. Likewise, I have encountered the notion of widely differing impact potential in discussions around political/policy work more generally.

This seems off…

There are numerous value-laden and controversial assumptions in the basic case for impact maximisation that I laid out above, but I will not tackle these directly here (suffice it to say that I broadly agree with claims a-d, and have reservations about e-g, though I do think they have some validity). Instead, I focus on the specific claim that there are huge (orders of magnitude) differences in the value of different attempts to do good.

I agree that there is a massive difference between actions/strategies that are net-negative, neutral, and net-positive; in other words, I do agree that it is really important to figure out whether an action or strategy actually contributes to solving a problem at all, and whether it may cause unintended negative effects. However, I do not agree that there are vast differences in value among those actions and strategies that have crossed the bar of having a significant positive impact on the world; and I do not agree that some individuals are orders of magnitude more important for making the world better than everyone else.

In addition, I believe that an excessive focus on “securing high impact opportunities” is unhelpful to our collective effort of making the world a better place. I am slightly confused about whether that latter criticism is better framed as an empirical disagreement (about the consequences of employing the “power law distribution of impact” narrative), a normative concern (about the values and ideas that are conveyed by that narrative), or a conceptual issue (about the appropriateness and usefulness of the perspective as a model for understanding the world). I have tried to tease out the specific points I take issue with, and I’m curious to hear in comments and feedback whether the write-up that follows seems coherent or whether you think I’m going in circles and should have chosen a different categorisation.

Empirical problem: Impact results from numerous inputs and thus cannot be attributed straightforwardly to any one action

“No one changes the world alone, and no one doesn't change it at all.” - Hank Green (2017)

For most conceivable outcomes, it seems misleading to suggest that they are the result of one action, one individual, or one organisation. Impact does not seem to be a property that can sensibly be assigned to an individual. If an individual (or organisation) takes an action, there are a number of reasons why I think that the subsequent consequences/impact can't be attributed solely to that one individual:

- The action's consequences will depend a lot on how other people act, and on other features of the environment. Impact is thus the result of collective (often long-term) efforts, diffused in an unspecifiable manner across numerous individuals.

For instance, the discovery of the polio vaccine by itself doesn't do much good. The impact it has depends on how the vaccine is distributed and administered, which depends on numerous state and private actors. It also depends on popular attitudes towards the vaccine (willingness/eagerness to make use of it), which in turn depend on probably even more actors and environmental factors. I have great respect for and gratitude to the people who first produced the polio vaccine, but I don't think it makes sense to take the number of lives saved/improved and stuff all of that into their individual impact scorecard. If we must attribute quantified individual credit to the inventors of the vaccine (and I will argue below that we probably shouldn’t try to in the first place), it will have to be much smaller than the total good that resulted from the vaccine, as that total volume of impact would need to be distributed across all those actors influencing the trajectory of the vaccine’s administration (and across the people who influenced the developers themselves and their enabling environment; see the second and third points below).

- Whether or not an action can be taken in the first place depends on the environment and on the actions of other people.

For instance, most people will probably put the US president into the category “high-impact individual.” And there are certainly many impactful things the president can do that are not accessible to most other people. But for achieving most goals that can be said to really matter - a better healthcare system that actually improves wellbeing in the country; effective technology policy that actually reduces risks and/or advances life-improving technological developments; a sufficient response to the climate crisis; etc. - presidents themselves will tell you how incredibly constrained they are in bringing about these outcomes. The impact a president can have through sensible policies is determined by the actions of many other individuals (domestically and internationally), and it is also determined by the culture he or she operates in (the ideas that are considered normal, palatable, or even just conceivable). Yes, this individual can have an impact through their actions, but only in conjunction with the actions of many others. If we tried to account for all individuals that form part of the president’s enabling infrastructure (again, I will argue below that we probably can’t and should not try), I am sceptical that the individualised impact that remains with the president’s actions truly is orders of magnitude higher than that of many other people.

- An individual’s decision to take an action is influenced by numerous factors, all of which partake in the impact that results from the decision.

The individual taking an action is not a blank slate. She herself is influenced by numerous factors, including other people. Arguably, the impact from an individual's action can also be attributed to those people who influenced the individual into taking the action in the first place. The number of influencing factors is usually huge: from parents and others contributing to the individual's education/socialisation, to friends and colleagues, to people who are strategically trying to influence the individual, all the way to the culture, artists and storytellers who shape the ideas and intuitions that determine what seems sensible and normatively desirable to the individual.

All of this leads me to doubt whether impact can be empirically attributed to individuals at all, which I will try to flesh out in more detail below. Importantly for this section, it also leads me to suspect that, if an individualised impact attribution were brute-forced in spite of the empirical and conceptual difficulties, the eventual impact left with any one individual would not be a massive outlier (orders of magnitude apart from everybody else), because impact would be dispersed across a) all the actors that contribute to the outcomes before and after any individual action is taken, and across b) all the actors that shape the taking of any individual action in the first place.

Adverse effects: Does this idea/perspective encourage elitism, anti-democratic sentiment, and a differential valuation of human lives?

One of the concerns I have with the narrative that impact follows a power law distribution is that I’ve seen this idea used as a (strong) argument to discourage individuals from taking actions that I think should be considered highly valuable. More specifically, I have the impression that talk about “high impact opportunities” often (though not necessarily) goes hand-in-hand with a rather naive consequentialist attitude that pays attention mainly to direct, and often easily measurable, effects (discussed, among others, in Mandefro 2023). For instance, jobs that I would consider vital for a resilient society and an effective collective effort to make the world better - thoughtful teachers and social workers, caring doctors and nurses, responsive and diligent civil servants - are often discounted by those people who claim that individuals can and should aim to achieve orders of magnitude more positive impact than the average person (e.g., Lewis 2015). Likewise, a focus on maximising the impact of individual actions can easily lead to the discounting of acts that are meaningful only if performed persistently by a large group of people - casting a vote, participating in protests, signing petitions, engaging in dinner table conversations, and so on. If I am right that impact does not actually follow a power law distribution and that the jobs and acts just mentioned are actually much closer in importance to the “highest possible life paths” than suggested, this type of discouragement based on misleading claims seems at least mildly counterproductive for the goal of making the world better.

Relatedly, I am concerned that the belief in high impact differentials, and a concomitant neglect of the (indirect, long-running) impact of small actions, can lead individuals to disregard common-sense virtues and rules of behaviour if they stand in the way of a perceived “high impact opportunity.” While I certainly wouldn’t argue that common-sense morality is a sure guide to always doing “the right thing,” I do think that adopting a general disposition to (try to) act virtuously and to consistently - though not blindly - abide by certain behavioural heuristics (e.g., respect all people around you; don’t be disingenuous; be truth-seeking; etc.) is warranted. If the pursuit of maximum impact leads to a complete[3] sidelining of considerations around how to be a reliable, trustworthy, and constructive member of your communities, I think you’re paying a cost that’s higher than you should be willing to accept.[4]

"There is nothing more corrupting, nothing more destructive of the noblest and finest feelings of our nature, than the exercise of unlimited power."

Putting debates around the actual distribution of impact to the side, I am worried about normative side-effects that seem concerning even if the underlying narrative is an apt description of the world. I fully acknowledge that the side-effects described in the next few paragraphs are not strictly implied by the claim that there can be magnitudes of difference in the impact two individuals have. But I find myself with an intuition and a plausible-sounding narrative that links claims around “power law distributions of impact” to the normative concerns I’m about to discuss. If there is anything to that intuition and such a link does exist in practice (even if only weakly), then I would argue that this gives good reason to pause and reconsider the use and propagation of the “power law” narrative.

I am concerned that the idea of huge impact differentials between actions reinforces the belief that a few vanguard individuals can fix the world - or fix some of the major problems we see in the world. I am concerned that this vanguardism weakens demands for measures that would strengthen communities, improve democratic culture and practice, and enhance our collective action capabilities, because strong communities, democratic participation, and effective collective action seem less vital if we can hope for a small group of elites to contribute the vast majority of inputs towards “making the world better.”

I am also concerned that a belief in a small-ish group of individual saviours could lead to hubris (Effectiviology n.d.), an unwarranted sense of confidence and control (Fast et al. 2009), lust for power (Weidner & Purohit 2009; more polemically: Walton 2022), or burnout among the supposed highest-impact individuals, and to perceived disempowerment, helpless indifference, or angry resistance among those who seem to be left out of that select group (Harrison 2023). All of this seems quite corrosive to societal decision-making processes, both at an individual level (people’s cognitive abilities to make sound decisions are hampered by the emotions listed before) and at the collective level (people are less willing and able to work together constructively if they are arrogant, worn-out, hopeless, mindlessly angry, and/or deeply distrustful of their fellow citizens). It also just seems quite undesirable to live in a world full of self-absorbed elites on the one hand, and disillusioned common people on the other.

“You’re probably among the 0.1% most highly productive individuals [...] You’re probably worth the same as three average EAs”

- Anonymous effective altruist (conversation I overheard at an EA community event)

Lastly, I am concerned about implicit, or sometimes quite explicit, judgments about the value of individuals when comparing them based on impact metrics (illustrated, for instance, by Lipshitz 2018, full book pdf). I think that there is a danger of switching from “some actions are more important than others” to “some people - the ones best-able to perform high-impact actions - are more important and thus more valuable than others.” I think that this is a problem because it undermines very basic community principles around solidarity and mutual respect, which I believe are vital for individual flourishing as well as sustainable collective problem-solving capabilities. This seems to be a thorny problem whenever impact is measured and attributed to individuals, but I imagine it will be worse the larger we believe potential differences in impact to be.

Conceptual problem: If we want to actually see the emergence of a better world, individualised impact calculations are not the best path to action

“Do your little bit of good where you are; it is those little bits of good put together that overwhelm the world.” - Archbishop Desmond Tutu

While examining the empirical validity of claims around the supposed “power law distribution of social impact,” I grew increasingly sceptical about the goal of attributing impact scores to individual actions and individual people in the first place. As I was trying to argue that the results of any given action need to be distributed across a wide collection of contributing and enabling actions, I kept wanting to write (or shout at my screen): “But isn’t this a futile and misleading exercise? Impact simply isn’t concentrated in one individual. How can we even speak about the impact of one individual person in isolation, when their actions and their actions’ effects can only ever be observed in an interactive environment? Isn’t it fundamentally wrong-headed to think about achieving positive change in the world from an isolated, individualised standpoint?”[5]

The seemingly plausible (?) possibility of adverse effects - that an emphasis on high impact differentials may discourage bottom-up and collective action; that it may neglect and thus weaken the social fabric which we need as a backbone to and prime enabler of a thriving world - reinforces my sense that an emphasis on individual impact is not the best ingredient for an effective and sustainable movement strategy to make the world better.

As an alternative, I believe we - people trying to address pressing global problems - would do better to conceive of impact as the product of a large set of actions and people, not a quantity that can be apportioned sensibly to any one individual. We would probably benefit from a good deal of humility about the influence of any one action and from a steadfast (and simplicity-resistant) awareness of the complex environment we operate in. While analyses about the marginal benefits of two specific competing actions, roughly holding all other factors equal, may well retain their uses in some situations (see below), we might reconsider whether less atomised perspectives are better suited to guiding decision-making in other situations.

For instance, when finding myself in possession of consequential information about a topic of public interest, maybe I should ask myself “What would I want the majority of other people to do in such a situation? Would I want them to keep their secret information to maximise their own level of influence within a certain sphere? Or would I want them to share the information publicly?” Or maybe, in deciding how to treat people around me, I should wonder which actions and attitudes make for a constructive collaborator, a reliable friend, or a respectful colleague, rather than calculating how best I can use my connections and “relationships” to catapult myself into the (possibly imaginary) category of super-high-impact-individuals - not (just) because there may be an intrinsic moral value in virtuous behaviour but also and especially because such virtuous behaviour may be one of the essential building blocks to achieving our optimal collective impact potential. And maybe, when choosing a job or making a career move, it is enough to ask “Which kinds of contribution do we as a community really need, which of those do we currently lack, and which am I well-placed to deliver?” rather than seeking to answer “Which position do I need to reach in order to be in the 99th percentile of an imaginary and narrowly-conceptualised global impact distribution?”[6]

Caveats and disclaimers

I wrote this essay primarily as a reflection on my own thoughts. As I outlined the caveats and uncertainties below, I did not necessarily have an audience in mind and some of the paragraphs that follow may sound a bit aimless / redundant. But I think, or hope, that these meta-considerations may give readers additional insights into my thinking on the topic, allowing them to engage more fruitfully with my thinking and with my preceding arguments.

I can’t claim credit: People, including self-identified effective altruists, have discussed all of this already

Many of the claims I make above have been articulated by other people, quite possibly in more eloquent and straightforward terms. In this essay’s spirit of not apportioning individual credit where individual credit is not due, I felt compelled to add this subsection to acknowledge and reference at least some of the many writers who have expressed these ideas before me (and who have influenced my thinking on the topic).

Many people have highlighted how complex dynamics make impact evaluations exceedingly difficult (to name just a few: Justis 2022, EA Forum; Karnofsky 2011(2016), GiveWell blog; Wiblin 2016, talk at an EA Global conference; Reddel 2023, EA Forum; Smith 2019, former GiveWell analyst; Kozyreva et al. 2019, “Interpreting Uncertainty: A Brief History of Not Knowing”; Griffes 2017, EA Forum), and some have challenged the notion that there are huge differences in impact between interventions or organisations (Tomasik 2017, EA Forum “classic repost”).

Several authors have warned of adverse effects from the quest for super-high-impact opportunities, describing how individualised impact models can often account for our inability to pursue collective action effectively (Srinivasan 2015; Chappell 2022; Jenn 2023, “2. Non-Profits Shouldn’t Be Islands"; Remmelt 2021, “5. We view individuals as independent”; Lowe 2023; Lowe 2016).

Lastly, the students of ethics among you will probably have noticed that my description of alternative decision frameworks draws heavily on established ethical doctrines. In my example of what to do when acquiring secret information that could either be used for private influence or released to the public, my question is clearly reminiscent of Kant’s Categorical Imperative (see Britannica n.d.)[7] and of rule-consequentialist arguments (e.g., Burch-Brown 2014). In my discussions around virtuous behaviour, I present a drastically shortened reproduction of the theory of “virtue consequentialism” (Bradley 2005), which has been endorsed in spirit though not in name by several “non-naive” consequentialists in the effective altruism space (e.g., Chappell 2022; Schubert and Caviola 2021; Oakley 2015, talk at an EA Global conference; Bykvist 2024, EA Stockholm's Nordic Talks Series) as well as by proponents of common-sense morality and advocates for notions of basic human dignity across the social impact ecosystem (European Commission 2022; Jenn 2023). And in calling on people to pursue the more humble quest of finding one’s place in a collective project rather than striving to become an individual of outstanding impact, I employ notions familiar from satisficing theory (Slote and Pettit 1984; Ben-Haim 2021) and the literature on collective rationality (Finkel et al. 1989 (pdf); Gilbert 2006 (pdf); Byron 2008).

I don’t mean to throw the baby out with the bathwater: Looking for actions with an exceptionally high impact is not always entirely misguided

While this post clearly advocates for a reconsideration of the dominance of individualised impact evaluations within debates around how to make the world better, I do not want to denigrate considerations about individual contexts altogether. I think that it is probably often good and non-harmful to evaluate different actions with an eye to the impact each one is likely to have. I also think it can be sensible to rank some life and career paths in accordance with how much impact they may roughly afford (differentiating, for instance, between the net-negative role of a snake-oil salesperson, the probably neutral role of someone working a “bullshit job”[8], and the range of societally beneficial roles that lie beyond). But the crucial point I am trying to make in this essay is that this should not be the only lens to apply, that we should not forget about indirect effects and cumulative dynamics which individualised evaluations seem poorly equipped to capture, and that we should not feel overly confident in how granular our impact attributions can be, even if we put our best minds and methods to the task.

Remaining uncertainties and confusion

As mentioned before, I have spent quite some time thinking, reading and talking about the arguments in this essay. I think that I made decent progress on streamlining my ideas into a relatively coherent account. Even so, I remain uncertain about my final stance and its practical implications:

- What is the crux between me and the people who buy into the narrative of power law distributions in impact?

- To the extent that disagreements with my arguments persist after people have read and sincerely engaged with what I said here: How much should that shift my own confidence in my views, if at all?

- I seem to[9] disagree fundamentally with one of effective altruism’s core propositions. What does or should that imply for my engagement with the community?

- Is it dishonest if I apply to EA-ish positions, events, or funding programmes without alerting the applications committee very explicitly about that disagreement?

- Is the disagreement a significant barrier to effective collaborations between myself and EA-minded people or organisations?

- Conversely, is there some particular added imperative for me to engage in EA discussions, events, and initiatives, for the sake of raising intellectual diversity in these spaces and/or for the sake of exposing myself to cognitively challenging environments?

- How much of my reasoning is a result of post hoc rationalisation of intuitions and cultural biases that I hold? If my thoughts on this were prompted by a cultural bias / unsubstantiated intuition, but I now cannot find major flaws in the reasoning I developed upon reflection, should I still be sceptical about my current views and conclusions?

- Is every argument emerging from motivated/prejudiced reasoning poor or dubious? Or can an argument be sound, even if it was developed with the conclusion already in mind?

- How different is this process from “the normal” belief formation process? How often do we form beliefs around important questions without having an intuition for the conclusion in mind a priori?

Acknowledgements

In the spirit of the essay, it would seem appropriate that I acknowledge and give credit to all the people who influenced my thinking and writing on this topic. But this list would be ridiculously long, burdensome to write, and time-consuming to get permission for (from everyone I would name). I’ll thus refrain from naming anyone. Let it be known that I have benefitted from many, many people’s input to write this essay, whether they engaged with me directly on the topic, provided reading material to feed my thinking and help me dispel my confusion, shaped my epistemics and philosophy through conversations on other topics, or influenced my values and beliefs in yet more indirect ways. I am indebted to them all, and any good that this essay may accomplish is not credited to myself alone but must also be added to their impact scorecards (if keeping score is insisted upon in the first place).

Also worth noting: credit for the cover image goes to Ian Stauffer (photo taken from Unsplash).

Appendix: Shifts in my thinking since publishing this post

Update 2024-03-27: Since publishing this essay, I have somewhat updated my views on the topic. I continue to endorse the majority of what is written above, though I would no longer phrase some of the conclusions as strongly/decisively as before. I have decided to leave the text largely as originally published, but inserted a modification in the Summary. This Appendix contains a more detailed account of how my thinking has evolved since publishing the essay.

Differences in perspectives on impact assessment/modeling are a pretty big deal for thinking about the issues I address in the essay

- I remain at least a little confused about what the perspectives are and how they each are justified/motivated

- I would like to have a clear overview of different perspectives and my view on their respective value (logically/epistemologically and practically)

- Some resources that describe different perspectives on impact evaluation:

- “Five Mistakes in Moral Mathematics” (Parfit, book chapter, 1986, link to pdf)

- “Shapley values: Better than counterfactuals” (NunoSempere, EA Forum, 2019) [Thanks to Alex Semendinger for linking to this source in a comment and for sparking an interesting short exchange with Dawn Drescher and Sam_Coggins in the respective comment thread]

- “Triple counting impact in EA” (Joey, EA Forum, 2018)

- “The counterfactual impact of agents acting in concert” ([anonymous], EA Forum, 2018)

- “The inefficacy objection to consequentialism and the problem with the expected consequences response” (Bryant Budolfson, journal article, 2018)

- “Collective Moral Obligations: ‘We-Reasoning’ and the Perspective of the Deliberating Agent” (Schwenkenbecher, journal article, 2019)

- “How you can help, without making a difference” and “Collective harm and the inefficacy problem” (Nefsky, journal articles, 2016 and 2019 respectively)

- “Contract Ethics and the Problem of Collective Action” (Stolt, PhD thesis, 2016)

- Also: many comments to this post have been helpful in pushing me to think more deeply about this topic and highlighting some interesting possible lenses and relevant questions; thanks to all of them!

- If I were to write the essay again, this would probably be one of the central themes (alongside adverse effects, which I continue to consider important)
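To make the contrast between two of the perspectives listed above (naive counterfactual credit and Shapley values) a little more concrete, here is a minimal sketch in Python. Everything in it is a hypothetical assumption for illustration only: a toy project that saves 1,000 lives, a lead called Alec plus three helpers (rather than a realistic number of contributors), two made-up success rules, and function names that do not come from any of the sources above.

```python
from itertools import permutations

# Hypothetical toy numbers, chosen only for illustration.
LIVES_SAVED = 1000
PLAYERS = ["Alec", "H1", "H2", "H3"]
HELPERS = {"H1", "H2", "H3"}


def shapley_values(players, value):
    """Exact Shapley values: average each player's marginal contribution
    over every order in which the team could have been assembled."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            joined = coalition | {p}
            totals[p] += value(joined) - value(coalition)
            coalition = joined
    return {p: t / len(orderings) for p, t in totals.items()}


def counterfactual_values(players, value):
    """Naive counterfactual credit: total value minus the value
    the rest of the team would achieve without that player."""
    everyone = frozenset(players)
    return {p: value(everyone) - value(everyone - {p}) for p in players}


def needs_everyone(coalition):
    """Hypothetical rule 1: success requires Alec and all three helpers."""
    return LIVES_SAVED if coalition == frozenset(PLAYERS) else 0


def needs_alec_and_two_helpers(coalition):
    """Hypothetical rule 2: Alec is essential, but any two helpers suffice."""
    enough_helpers = len(coalition & HELPERS) >= 2
    return LIVES_SAVED if "Alec" in coalition and enough_helpers else 0


if __name__ == "__main__":
    for game in (needs_everyone, needs_alec_and_two_helpers):
        cf = counterfactual_values(PLAYERS, game)
        sv = shapley_values(PLAYERS, game)
        print(game.__name__)
        print("  counterfactual:", cf, "sum =", sum(cf.values()))
        print("  shapley:", {p: round(v, 1) for p, v in sv.items()},
              "sum =", round(sum(sv.values()), 1))
```

Under these toy assumptions, when everyone is required, each person's counterfactual credit is the full 1,000 lives (summing to 4,000, the "multiple counting" problem), while Shapley values give each person 250. When any two helpers suffice, counterfactual credit gives Alec 1,000 and each helper 0, while Shapley values give Alec 500 and each helper about 166.7. Neither number settles which perspective is right; the sketch only shows how differently they apportion the same outcome.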

On the empirical validity of the power law distribution of social impact

- I’ve tentatively updated to the view that for some types of decision situations, counterfactual impact (at least to the extent that we can reasonably measure it) follows a power law distribution. [Thanks to comments by Jeff Kaufman, Denis, Larks, Oscar Delaney and Brad West, whose specific examples and pointed questions prompted and aided my reconsideration of this point]

- I think that considerations around supporting and enabling actions flatten the distribution significantly compared to a more naive evaluation, but that it may well often remain power-law distributed. [Thanks to Owen Cotton-Barratt for spelling out a similar thought in comments.]

- I think that considerations around uncertainty flatten the distribution of expected impact, possibly rendering it non-power-law distributed in many cases, but probably not in all. At least, there will still be cases where the expected impact of a solidly good action is clearly orders of magnitude smaller than the impact of one of the best actions (e.g., donations to AMF, which can simply be scaled up to be orders of magnitude higher). [Thanks to Owen Cotton-Barratt, Denis and Jeff Kaufman for spelling out a similar thought in comments.]

- I retain the view that considerations around enabling and supporting actions are important and under-appreciated in most EA writing and conversations I have encountered. In part, as stated above, this is because they affect our view of counterfactual impact differences. But more strongly, I value these considerations a) because they may help ameliorate or guard against the adverse effects I mention; and b) because they open up avenues for considering alternative models of impact, which I think may be highly valuable complements to and sometimes appropriate substitutes for counterfactual reasoning.

On conceptual issues

- I remain confused

- The last few days have spurred a lot of further thinking on this topic, and I’m grateful for all the comments that have contributed to that. I think I now have a better-considered take on the topic than before, which I consider progress. But I’m still relatively far from a good grasp of the topic overall and of my views in particular. The quest for clarity will continue.

- I (tentatively?) continue to think that purely individualised perspectives on impact lack something relevant:

- They make it hard to recognize/conceptualize the importance of engaging in collective projects where one person’s individual, expected, and measurable counterfactual impact may be low (or unclear, because expectations depend too much on subjective plausibility judgements regarding the long-running or higher-order effects of different actions), but the expected impact of the collective project can be vast.

- They make it easy to dismiss and discount the thousands (sometimes millions) of people whose supportive and enabling services we all depend on to have any meaningful impact. These people may be replaceable from a counterfactual point of view, but they are not irrelevant - if we lived in a world without communities of people performing these supportive roles, we would be screwed. This seems an important fact to keep in mind when looking at the world and at efforts to make it better.

Practical consequences for my thinking & actions

- I will talk about the topic with a few more people, I will read, and I will attempt to further reflect and puzzle out my thinking on this topic

- I will consider applying counterfactual reasoning more seriously as one of the perspectives in my repertoire. Upon reflection, I can’t deny that I’ve been doing that throughout the last few years already, though more cautiously/humbly and with less force on my decisions than I’ve seen among other EAs. I intend to retain that caution and readiness to switch to other perspectives to inform my decisions. But I think it would be useful to be more explicit about the ways in which I do use counterfactual impact evaluations when making decisions.

- The value of writing up, sharing, and discussing my thoughts on thorny questions has increased in my mind. I did consider this valuable before, but the experience with this post (and its many constructive comments) has demonstrated that it is probably more valuable than I previously thought.

- ^

Update 2024-03-15: I was alerted to possible problems with the title by this comment, questioning whether the original title ("There are no massive differences in impact between individuals") matched the actual content of the post. Following a bit of discussion on that comment (see comment thread), I have come to the conclusion that the original title was indeed suboptimal. Quoting from a comment I left below:

"I can see how my chosen title may be flawed because a) it leaves out large parts of what the post is about (adverse effects, conceptual debate); and b) it is stronger than my actual claim"

I hope the revised title does a better job communicating what this post is about. (And I hope I'm not violating some unwritten norm against changing the title of a post a day after publication?)

- ^

This holds for a variety of values a person may hold, encompassing at least the following set of intrinsic goals: wellbeing, absence of (extreme) suffering, flourishing, rights-fulfilment, justice, species survival.

- ^

As a friend who graciously reviewed this essay has rightly pointed out, virtues and behavioural heuristics are not absolute. In many real-life situations, the attempt to act virtuously will require context-specific evaluations to figure out which action is actually most in line with the person one wants to be. That means that even very common-sensical and usually acceptable heuristics such as “Thou shalt not lie” cannot be adopted as general and universally applicable rules; there will be situations when lying is the best choice one has. I think the crucial point is to pay serious attention to questions of virtue and the cumulative consequences of this or that principle, rather than to live dogmatically by fixed rules.

- ^

To tie this in with a real-life example that will be familiar to many readers on this Forum: Similar notions around naive utilitarianism and “the importance of integrity, honesty, and the respect of common-sense moral constraints” (MacAskill 2022) have been discussed at length in the aftermath of the FTX debacle, where a self-avowed utilitarian from within the effective altruism community committed major financial fraud, incurring massive costs for customers and clients; see, for instance, Samuel 2022, evhub 2022, and Karnofsky 2022.

- ^

I imagine some readers will respond with sympathy to my claims around the difficulties of empirical measurement, but will reject the conclusion that difficulty equates to futility. Readers may claim that it is possible to build models that reduce complexity to such an extent that all of the intervening variables mentioned above are neutralised. Certainly, building such models is possible; from everything I’ve seen, it is the common practice in quantitative impact models, estimations, and assessments. But can it be done without completely losing touch with reality? Can there be models that are sufficiently simple to allow for individualised impact estimates, but which remain sufficiently true to the underlying reality to yield meaningful results about the world? I am yet to see an impact evaluation that achieves this feat, apportioning individual impact estimates in an epistemically trustworthy manner that doesn’t simply gloss over complex dynamics because they are too hard to conceptualise (let alone measure).

- ^

If you found the previous few sentences confusing and/or somewhat incomplete, let me tell you: I have already advanced miles from the muddled and incoherent state of mind that I started with around a week ago (when I began reflecting on this essay). I can see how my thinking and writing on this point does not yet approach a fully developed argument for or against a specific conceptual framework. But it seems to me (and maybe I’m just trapped in my own brain and unable to imagine what my text will sound like to others) that I have at least reached a stage where my words are able to gesture at a comprehensible concern, or where they may even be able to inspire alternative ways of looking at things.

- ^

I very intentionally write “reminiscent of.” By no means do I mean to suggest that I fully understand, let alone pretend to reproduce or even elaborate on, Kantian ethics.

- ^

Neutral for the world as a whole; I think Graeber argues convincingly that working a bullshit job is usually quite harmful to the worker herself.

- ^

I am not at all sure how many people in the wider EA community would partially or fully agree with the points I make in this essay. I’d be curious to hear more on that through comments or private messages in response to this post. That said, in spite of some cautionary and sympathetic statements by people at the core of the EA community (Todd 2023, Karnofsky 2022), it seems clear that my post is in tension with the claim that there are enormous differences between interventions to make the world better and that individuals should seek to maximise for the highest-impact options they can achieve. That claim is at the core of most official self-descriptions of effective altruists and core effective altruist organisations (e.g.: Centre for Effective Altruism, see Prioritization bullet point).

I think it might be helpful to look at a simple case, one of the best cases for the claim that your altruistic options differ in expected impact by orders of magnitude, and see if we agree there? Consider two people, both in "the probably neutral role of someone working a 'bullshit job'". Both donate a portion of their income to GiveWell's top charities: one $100k/y and the other $1k/y. Would you agree that the altruistic impact of the first is, ex-ante, 100x that of the second?

This is a good question. If we assume everything else is equal (neither got the money by causing harm, both were influenced by roughly the same number of actors to be able and willing to donate their money), then I think I agree that the altruistic impact of the first is 100x that of the second.

I am not entirely sure what that implies for my own thinking on the topic. On the face of it, it clearly contradicts the conclusion in my Empirical problem section. But it does so without, as far as I can tell, addressing the subpoints I mention in that section. Does that mean the subpoints are not relevant to the empirical claim I make? They seem relevant to me, and that seems clear in examples other than the one you presented. I'm confused, and I imagine I'll need at least a few more days to figure out how the example you gave changes my thinking.

Update: I am currently working on a Dialogue post with JWS to discuss their responses to the essay above and my reflections since publishing it. I imagine/hope that this will help streamline my thinking on some of the issues raised in comments (as well as some of the uncertainties I had while writing the essay). For that reason, I'll hold of...

I'm from a middle-income country, so when I first seriously engaged with EA, I remember feeling really sad and left out when I realised that my earnings, an order of magnitude lower than those of high-income-country (HIC) folks, proportionately reduced my giving impact.

It's also why the original title of your post – the post itself is fantastic; I resonate with a lot of the points you bring up – didn't quite land with me, so I appreciate the title change and your consideration in thinking through Jeff's example.

I find myself sympathetic to a lot of what you write, while being in disagreement with some of your top-level conclusions (in some cases as-written; in some cases more a disagreement with the vibe of what's being said).

To elaborate:

- I think that you're primarily pointing at a bunch of problems that can come from people inhabiting the "power-law distribution" perspective on impact and pursuing the tails

- I think that these are real and important problems, and I think that they are sometimes underappreciated in EA circles, and sometimes things would be better if people less inhabited this mentality

- Structurally, these problems give us some reason to reduce emphasis on the claim, but they don't (by themselves) cast doubt on the claim

- You have one argument casting doubt on the claim (what you call the "empirical problem" of the difficulty of impact attribution)

- I basically agree that this is an issue which muddies the waters, and somewhat levels the distribution of impact compared to a more naive analysis

- However, I think that after you sort through this kind of consideration you would be able to recover some version of the power law claim basically intact

- To my mind the stronger reason for sc...

Perhaps we could promote the questions:

and not the question:

Similar reframes might acknowledge that some efforts help facilitate large benefits, while also acknowledging all do-gooding efforts are ultimately co-dependent, not simply additive*? I like the aims of both of you, including here and here, to capture both insights.

(*I'm sceptical of the simplification that "some people are doing far more than others". Building on Owen's example, any impact of 'heavy lifting' theoretical physicists seems unavoidably co-dependent on people birthing and raising them, food and medical systems keeping them alive, research systems making their research doable/credible/usable, people not misusing their research to make atomic weapons, etc. This echoes the points made in the 'conceptual problem' part of the post)

Thanks for writing this! "EA is too focused on individual impact" is a common critique, but most versions of it fall flat for me. This is a very clear, thorough case for it, probably the best version of the argument I've read.

I agree most strongly with the dangers of internalizing the "heavy-tailed impact" perspective in the wrong way, e.g. thinking "the top people have the most impact -> I'm not sure I'm one of the top people -> I won't have any meaningful impact -> I might as well give up." (To be clear, steps 2, 3, and 4 are all errors: if there's a decent chance you're one of the top, that's still potentially worth going for. And even if not--most people aren't--that doesn't mean your impact is negligible, and certainly doesn't mean doing nothing is better!)

I mostly disagree with the post though, for some of the same reasons as other commenters. The empirical case for heavy-tailed impact is persuasive to me, and while measuring impact reliably seems practically very hard / intractable in most cases, I don't think it's in principle impossible (e.g. counterfactual reasoning and Shapley values).

I'm also wary of arguments that have the form "even if X is true, believing / ...

I don't see Shapley values mentioned anywhere in your post. I think you've made a mistake in attributing the values of things multiple people have worked on, and these would help you fix that mistake.

I'm not sure about the factual/epistemic aspects of it, but there is at least some element here that seems at least somewhat accurate.

It has always struck me as a bit odd to glorify an individual for accomplishing X or donating Y, when they are only able to do that because of the support they have received from others. To be trivially simplistic: could I have done any of the so-called impressive things that I have done without support from a wide array of sources (stable childhood home, accessible public schools of decent quality, rule of law, guidance from mentors and friends, etc.)? This seems especially true in the context of EA, in which so many of us are so incredibly privileged and fortunate (even if we are only comparing within our own countries). So many people in EA come from wealthy families[1], attended prestigious schools, and earn far more than the median income for their country.

I sometimes look at people that I view as successful within a particular scope and I wonder "if my parents could have afforded tutors for me would I have ended up more like him?" or "if someone had introduced me to [topic] at age 13 would I have ended up a computer engineer?" or "If my family had lived in and ...

I think there are several different activities that people call "impact attribution", and they differ in important ways that can lead to problems like the ones outlined in this post. For example:

I think the fact that any action relies enormously on context, and on other people's previous actions, and so on, is a strong challenge to the second point, but I'd argue it's the first point that should actually influence my decision-making. If other people have already done a lot of work towards a goal, but I have the opportunity to take an action that changes whether their work succeeds or fails, then for sure I shouldn't get moral credit for the entire project, but when asking questions like "should I take this action or some other?" or "what kinds of costs should I be willing to bear to ensure this happens?", I should be using the full difference between success and failure as my benchmark. (That said, if "failure" means "someone else has to take this action instead" rather than "it's as if none of the work was done", the benchmark should be comparing with that instead, so you need to ensure you are comparing the most realistic alternative scenarios you can.)

I wonder if the purpose for which we are assessing impact might be relevant here. As Joseph's comment implies, sometimes people rely on assessments of impact to "glorify" certain individuals. I think some of your critiques have particular force when someone is using impact to do something of that nature. The issues you describe cause many impact assessments to be biased significantly upward, and I think it is almost always better to err on the side of humility when heaping glory on high-status individuals.

At the same time, there are a number of reasons I might be trying to assess impact for which your critiques seem less relevant. For instance, if I'm deciding what career to pursue, the idea that "the impact from an individual's action can also be attributed to those people who influenced the individual into taking the action in the first place" isn't really relevant to the decisionmaking process. Likewise, if I were trying to decide whether to spend resources influencing someone else's career decision, I know that the prior and current influence of the person's parents, teachers, peers, (possibly) religious community, etc. would play a huge role in the outcome. But I don't see why it would be wise to decide whether to spend those resources on that task only after re-allocating most of the impact from the possible better career choice to those other influences.

Wow, Sarah, what a wonderful essay!

(don't feel obliged to read or reply to this long and convoluted comment, just sharing as I've been pondering this since our discussion)

As I said when we spoke, there are some ideas I don’t agree with, but here you have made a very clear and compelling case, which is highly valuable and thought-provoking.

Let me first say that I agree with a lot of what you write, and my only objections to the parts I agree with would be that those who do not agree maybe do very simplistic analyses. For example, anyone who thinks tha...

Regarding the impact attribution point-

You simply need to try to evaluate the world that would have transpired if not for a specific agent(s) actions. In the case of your vaccine creation and distribution, let's take the individual or team that created the initial vaccine and the companies (and their employees) that manufacture and distribute the vaccines.

If the individual or team did not create the initial vaccine, it likely would have been discovered later. On the other hand, if the manufacturers and distributors did not go into that manufacturin...

I think counterfactual analysis as a guide to making decisions is sometimes (!) a useful approach (especially if it is done with appropriate epistemic humility in light of the empirical difficulties).

But, tentatively, I don't think that it is a valid method for calculating the impact an individual has had (or can be expected to have, if you calculate ex ante). I struggle a bit to put my thinking on this into words, but here's an attempt: If I say "Alec [random individual] has saved 1,000 lives", I think what I mean is "1,000 people now live because of Alec alone". But if Alec was only able to save those lives with the help of twenty other people, and the 1,000 people would now be dead were it not for those twenty helpers, then it seems wrong to me to claim that the 1,000 survivors are alive only because of Alec, even if Alec played a vital role in the endeavour and if it would have been impossible to replace Alec by some other random individual. And just because any one of the twenty people was easily replaceable, I don't think that they all suddenly count for nothing/very little in the impact evaluation; the fact seems to remain that Alec would not have been able to have a...

Thanks for writing this! I’ve long been suspicious of this idea but haven’t got round to investigating the claim itself, and my skepticism of it, fully, so I super appreciate you kicking off this discussion.

I also identify with ‘do I disagree with this empirically or am I just uneasy with the vibes/frame, and how do I tease those apart?’

For people who broadly agree with the idea that Sarah is critiquing: what do you think is the best defence of it, arguing from first principles and data as much as possible?

I have a couple of other queries/scepticisms about the...

I agree with most of what you write and share similar analyses. Because I still think that there is a lot of value in the EA community, I currently keep supporting it and engaging in it. But I also see the imperative to bring further perspectives into the community. This can be quite straining in my experience, so I kind of 'choose my battles' given my capacities to contribute to alleviating ideological biases in the EA community.

So thanks for your post and for putting in the work to keep these discussions going as well!

Could you point me to the discussion of meat consumption in this source? I can't seem to find it. Thanks!

Wow great essay Sarah, very thought-provoking and relevant I thought.

I have lots of things to say, I will split them into separate comments in case you want to reply to specific parts (but feel free to reply to none of it, especially given I see you have a dialogue coming soon). Or we can just discuss it all on our next call :) But I thought I would write them down while I remember.

I found the ideas in the post/comments clarifying and appreciate the considered, collaborative and humble spirit with which the post and most, if not all, comments were written. In alignment with the post's ideas, I hope this doesn't come across as over-attribution of impacts to individuals! I just appreciate the words people added here, the environment supporting them, and the people that caringly facilitated both.

This might be a bit cute but I reckon the 1970 song 'Strangers' by 'The Kinks' illustrates some of the points in the post/comments quite nicely (explained by the songwriter here)

Great post, Sarah! I strongly upvoted it.

I think the possibility of quite harmful outcomes will tend to be associated with that of quite beneficial outcomes, so the tails will partially cancel out, which contributes towards mitigating impac...

I gave this a downvote for the clickbait title which from the outline doesn't seem to match the actual argument. Apologies if this seems unfair, titles like this are standard in journalism, but I hope this doesn't become standard in EA as it might affect our epistemics. This is not a comment on the quality of the post itself.