Background: I'm currently writing a PhD in moral philosophy on the topic of moral progress and moral circle expansion (you can read a bit about it here and here). I recently was at three EA-related conferences: EA Global London 2024, a workshop on AI, Animals, and Digital Minds at the London School of Economics, and the Oxford Workshop on Global Priorities Research. Particularly this year, I learned a lot and was mostly shaken up by the topics of invertebrate welfare and AI sentience, which are the focus of this post. I warn you, the ethics are going to get weird.

Introduction.

This post is a combination of academic philosophy and a more personal reflection on the anxious, dizzying existential crisis I've been facing recently, due to the weight of suffering that I believe might be contained in the universe, which I believe is morally and overwhelmingly important, and about which I feel mostly powerless. It is a mix of philosophical ideas and a personal, subjective experience of trying to digest very radical changes to my view of the world.

The post will be as follows. First, I explain my moral view: classical utilitarianism, which you could also call impartialist sentientism. Then I broaden and weaken the view, and explain that even if you don't subscribe to this moral view, you should still worry about these things. The next section very briefly explains the empirical case for invertebrate and AI sentience.

Although I'm riddled with uncertainty, I think it is likely that some invertebrate species are sentient, and that future AIs could be sentient. This combines in weird ways with moral impartiality and longtermism, since I don't think it matters where and when this happens, they're still morally weighty.

This worry then explodes into moral concern about very weird topics: astronomical numbers of alien invertebrate welfare across the universe, and astronomical numbers of sentient AI developed by either us in the future or aliens, and other very weird topics that I find hard to grasp.

I talk about these topics in a more personal way, particularly ideas of alienation and anxiety over these topics. Then I worry about potential avenues of where this "train to crazy town" is headed, such as: blowing up the universe, extreme moral offsetting by spreading certain invertebrate species across the universe, tiling the universe with hedonium, worries about Pascal's Mugging, and infinite ethics.

Then I take a step back, compose myself, and get back to work. How should these ideas change how we ought to act, in terms of charitable donations, or lines of research and work? What are some actionable points?

Finally, I recommend some readings if you're interested in these topics, since there's a lot of research coming up on this topic. I conclude that the future of normative ethics will be very weird.

Impartialist Sentientism.

Currently, the moral theory I place the highest credence on is total hedonist utilitarianism (what you would call classical, Singerite, or Benthamite utilitarianism), but I believe a lot of these arguments go through even if you aren't a utilitarian, because the amount of value posited by impartialist hedonist utilitarianism tends to trump other considerations, among other things. Just in case you're not up to speed with moral philosophy, let me give a broad overview of the background. If you're well acquainted, feel free to skip to the next section.

This theory breaks down into the following elements:

By "pain" or "suffering" I mean "any negatively valenced experience", that is, any experience judged to be negative by the subject undergoing it. By "pleasure" or "happiness" I mean "any positively valenced experience", that is, any experience judged to be positive by the subject undergoing it. These are very broad definitions, which helps raise the plausibility of this hedonism.

Pain matters a lot. In particular, strong sharp pains are excruciating and disabling. Torture and disease can really ruin the day or the life of the sentient being undergoing it. For these reasons, theories that widely ignore intense or extreme suffering seem morally implausible. The nugget of truth from suffering-focused ethics is that pain matters more than pleasure in practice, because sentient beings have higher biological capacities for pain than for pleasure.

Pleasure is what we live for. If we lived in an anhedonic world, that is, a world with no states that we judged to be in some way pleasant and positive, we would not reproduce, and we would likely die out. I think it's likely that our brains pump us with hormones like serotonin and dopamine so that our lives are net positive. If this were removed, we would stop being motivated to act (to eat food, to reproduce, etcetera).

A unit of suffering matters, no matter where it is located. Think along the lines of Peter Singer's drowning child thought experiment (Singer, 1972). If you found a child drowning in front of you, you would be morally obligated to save them. If you have the chance to help somebody that is far, but it's similarly easy and cheap for you to save them, you would also be morally obligated to save them.

I believe that appealing to our psychological propensities ("humans are egoist", "I don't care about humans far away") is a worrying rationalization, and opens the door to biases that are morally irrelevant. Doing an "out of sight, out of mind" trick about the people starving far away is not morally right, even if that is what most people actually do. It's like burying your head in the sand like an ostrich, trying to solve cognitive dissonance by rejecting the new moral beliefs. You can ignore people far away, but that doesn't mean you should.

I'm not going to rehash Singer's paper here, but if you are not convinced, here is an excellent summary and reading guide on his paper by Utilitarianism.net.

A unit of suffering matters, no matter when it is located. Think longtermism (e.g. Greaves and MacAskill 2021, MacAskill 2022). This is discounted for uncertainty, so if I am not sure whether my action will be good or bad, that is a worthwhile consideration to discount for.

But what this position doesn't accept is pure moral discounting of the moral value of future beings. The suffering that Cleopatra underwent is as morally weighty as yours; neither her suffering nor yours is discounted because of when it happened. But also, the suffering that somebody in the year 4,000, or 4 million, will undergo (that is, once the discounting for uncertainty is taken into account) is as morally weighty as yours. Most moral philosophers think that pure time discounting is morally unjustifiable, and the moral (normative) tenets of longtermism follow from this.

A unit of suffering matters, no matter who experiences it. Think anti-speciesism (e.g. Singer in "All Animals are Equal" from Practical Ethics, 1979). Suffering undergone by a human, a cow, a fish, or a fruit fly matters equally. A caveat, however. I am unsure whether small fish and insects can suffer, and how much. Many small animals might be incapable of suffering, being more similar to a Roomba, an automatic system that moves away from certain environments that cause pain or discomfort.

Also, I don't know how to compare the suffering of a human, who has 86 billion neurons, to a goldfish with 1.5 billion neurons, to a fruit fly with 100,000 neurons. In particular, we need to study more about how neuron count scales or relates to capacities for valenced states like pain. I'm not very knowledgeable on this topic. However, keep in mind that neuron counts might not be good proxies for valenced experiences of pleasure and pain.

I believe that the genuinely moral point of view is impartial. (e.g. read more on Impartiality at the Stanford Encyclopedia of Philosophy) Most people care more about friends and family than about unknown strangers. This is a descriptive fact. But I think that doesn't mean that you should give them greater moral importance than unknown strangers. While your affections for those people are higher, that doesn't mean that their importance and moral weight increases.

You can get very similar conclusions without such strong premises.

Plot twist: You don't actually need all of these premises to reach very similar conclusions.

So now let's weaken my moral premises, and show you how it still would change your moral considerations quite radically. Even if you give moderate weight to animals, it still drives you to revolutionary conclusions that change your morality dramatically.

Weakening Hedonism. We don't have to hold that pain and pleasure are the only things that matter in life. But any theory should allow that they are very important. Suffering is so bad that it completely ruins your life. This is the insight of suffering-focused ethics. Just compare your best experiences, such as having an orgasm or having a child, to the worst experiences you can imagine. I don't want to be gruesome; I could describe such experiences in several paragraphs, but that would be extremely gory. Just imagine many terrible forms of torture inflicted on you at the same time, like being burned alive, for your entire life. Extreme pain is crippling, and also destroys autonomy, virtue, and other things that other moral theories care about.

Weakening Longtermism. Even if we didn't consider the long-term future, there are currently septillions of invertebrates out there in the wild. Issues related to the non-identity problem don't really arise, since I believe we don't care so much about the particular identity of invertebrates, but about the overall welfare of their populations. One limitation, though, is that we are not good at knowing how to benefit them at scale right now.

Weakening Impartiality. Even if you don't buy pure impartiality, as long as you give a substantial amount of weight to animals, they will likely outweigh human interests. For example, even if you value a cow's or a chicken's interest at 0.1% of a human interest, that is, you need 1,000 units of cow or chicken utility to count as much as one unit of human utility, the numbers would still make you go vegetarian. (I borrow this idea from Michael Huemer's Dialogues in Ethical Vegetarianism)

I think this idea of discounting is actually morally implausible and unjustified. It just relies on the status quo bias of speciesism, or focuses on capacities that are morally irrelevant. But I could grant around a 1-to-1000 discounting, if only for the sake of discussion. Still, the argument would likely go through, given the massive numbers of animals and their intense suffering.
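To make the arithmetic of this discount concrete, here is a minimal Python sketch. The 1-to-1000 weight is the one granted above for the sake of discussion; the function name and the example quantities are illustrative assumptions, not empirical estimates of anyone's welfare.

```python
from fractions import Fraction

# Weight of one unit of animal utility relative to one unit of human utility.
# The 1-to-1000 figure is the discount granted in the text for the sake of argument.
ANIMAL_WEIGHT = Fraction(1, 1000)

def human_equivalent_units(animal_units, weight=ANIMAL_WEIGHT):
    """Convert units of animal utility into discounted human-equivalent units."""
    return animal_units * weight

# Break-even point: 1,000 animal units count as much as 1 human unit.
break_even = human_equivalent_units(1_000)

# Hypothetical illustration: a million animal units still weigh as much as
# 1,000 human units after the discount is applied.
example = human_equivalent_units(1_000_000)

print(break_even, example)
```

The point of the sketch is only that a fixed discount rescales the totals; it does not make large enough numbers disappear.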

Weakening Moral Realism. You don't need to buy into moral realism, because you might think that moral realism is metaphysically or epistemologically weird and suspicious. I'm not a moral realist in a strong sense. You can just buy into the idea that some ethical principles make for a good society.

For example, we could accept the following principle: "If there is a x100 multiplier of how much good you can do, rather than benefitting yourself, you should do it." (This is like a combination of Singer's argument in Famine, Affluence and Morality, with MacAskill's x100 multiplier in Doing Good Better)

This principle doesn't appeal to any strange metaphysical properties; it just seems like a reasonable principle to adopt for a good society. If you can give up $1 to give $100 to someone else, you should do it. (Or technically, I should say 1 unit of utility for 100 units of utility, which doesn't suffer from diminishing marginal utility like money does).

Perhaps you don't buy the x100 multiplier, but in cases like animals, we could adopt a stronger form of discounting like a x1,000 or x10,000 multiplier and the argument would go through. For example, the benefit we obtain for about 10 minutes from eating an animal is minuscule compared to the amount of suffering they go through over their entire lives. A similar case can be made for reducing wild animal suffering.

Weakening Totalist Axiologies in Population Ethics. I get the impression that you don't have to buy into Total Utilitarianism to reach very dramatic conclusions about invertebrate and AI sentience, even if some proposals might end up being different. You could accept Average Utilitarianism, but still, a lot of the focus would be on making their lives have positive welfare, or controlling populations to prevent lives with deeply negative welfare.

Precautionary Principles push us in this direction. We don't know what moral theory is better or true; there is a lot of moral disagreement. What to do? One introductory argument for the topic of Moral Uncertainty is the following. Imagine you're choosing what to have for lunch. You can either get the meat sandwich or the veggie sandwich. You slightly prefer the meat sandwich, but briefly stop to consider the morality of the situation. You're uncertain as to whether Kantian deontology or utilitarianism is true. Kantian deontology doesn't care whether you pick the meat sandwich or the veggie sandwich; it thinks both options are allowed. Utilitarianism thinks eating meat is terrible; picking the meat sandwich would be really, really bad. An uncertain agent in this situation should hedge their bets, be risk averse, and have the vegetarian sandwich. This reflects a reasonable, precautionary attitude towards avoiding possible harms, given moral uncertainty.
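The sandwich case can be sketched numerically. The snippet below uses "maximizing expected choiceworthiness", which is one common way of formalizing choice under moral uncertainty and is my choice of illustration here, not the only option. All the numbers (the 50/50 credences, the -100 penalty, the +1 taste preference) are hypothetical, and the sketch assumes the two theories' verdicts can be placed on a common scale, which is itself a contested assumption.

```python
# Hypothetical credences: the text only says the agent is uncertain between the two.
credences = {"kantian_deontology": 0.5, "utilitarianism": 0.5}

# Moral value of each option under each theory, on an assumed common scale.
# Deontology permits both options (0 each); utilitarianism rates meat as very bad.
moral_value = {
    "meat":   {"kantian_deontology": 0, "utilitarianism": -100},
    "veggie": {"kantian_deontology": 0, "utilitarianism": 0},
}

# The agent's slight personal preference for the meat sandwich (hypothetical size).
taste_bonus = {"meat": 1, "veggie": 0}

def expected_choiceworthiness(option):
    """Credence-weighted moral value of an option, plus the taste preference."""
    moral = sum(credences[t] * moral_value[option][t] for t in credences)
    return moral + taste_bonus[option]

best = max(moral_value, key=expected_choiceworthiness)
print(best, expected_choiceworthiness("meat"), expected_choiceworthiness("veggie"))
```

With these made-up inputs the small taste preference is swamped by the chance that eating meat is seriously wrong, which is the structure of the hedging argument.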

Astronomical Stakes Arguments push us in this direction. One key principle that Greaves and MacAskill (2021) use to push for longtermism is the following:

Stakes-Sensitivity Principle: When the axiological stakes are very high, non-consequentialist constraints and prerogatives become insignificant in comparison to consequentialist considerations.

It means that even if you are a deontologist, you will be carried away from your deontology by the immense, astronomical weight of suffering. As John Rawls put it: "One should not be misled by the somewhat more common view that utilitarianism is a consequentialist view and that any ethical theory which gives some weight to consequences is thereby a version of utilitarianism. This is a mistake. All ethical theories worth serious consideration give some weight to consequences in judging rightness. A theory that did not do this would simply be implausible on its face."

Rawls points out that any ethical theory that completely disregards the consequences of actions is not tenable. But Rawls didn't realize how much of a weakness this is. Dramatic, high-stakes scenarios can override deontological rights, which become defeasible.

And even if you don't buy into this argument in its straightforward form (e.g. you follow Mogensen 2019), you would still have to grant that the state of affairs with higher utility is axiologically better, even if it's not morally obligatory.

You can't ignore invertebrate and AI sentience.

Let's now move on to empirical facts, the ones that I have changed my mind about recently, and that triggered the feeling of existential anxiety.

This section is heavily inspired by the following talk from EA Global London 2024. If you're interested in this topic, you may alternatively want to watch it for a good overview of what I'll be talking about here:

Invertebrate Sentience.

Likelihood of Invertebrate Sentience.

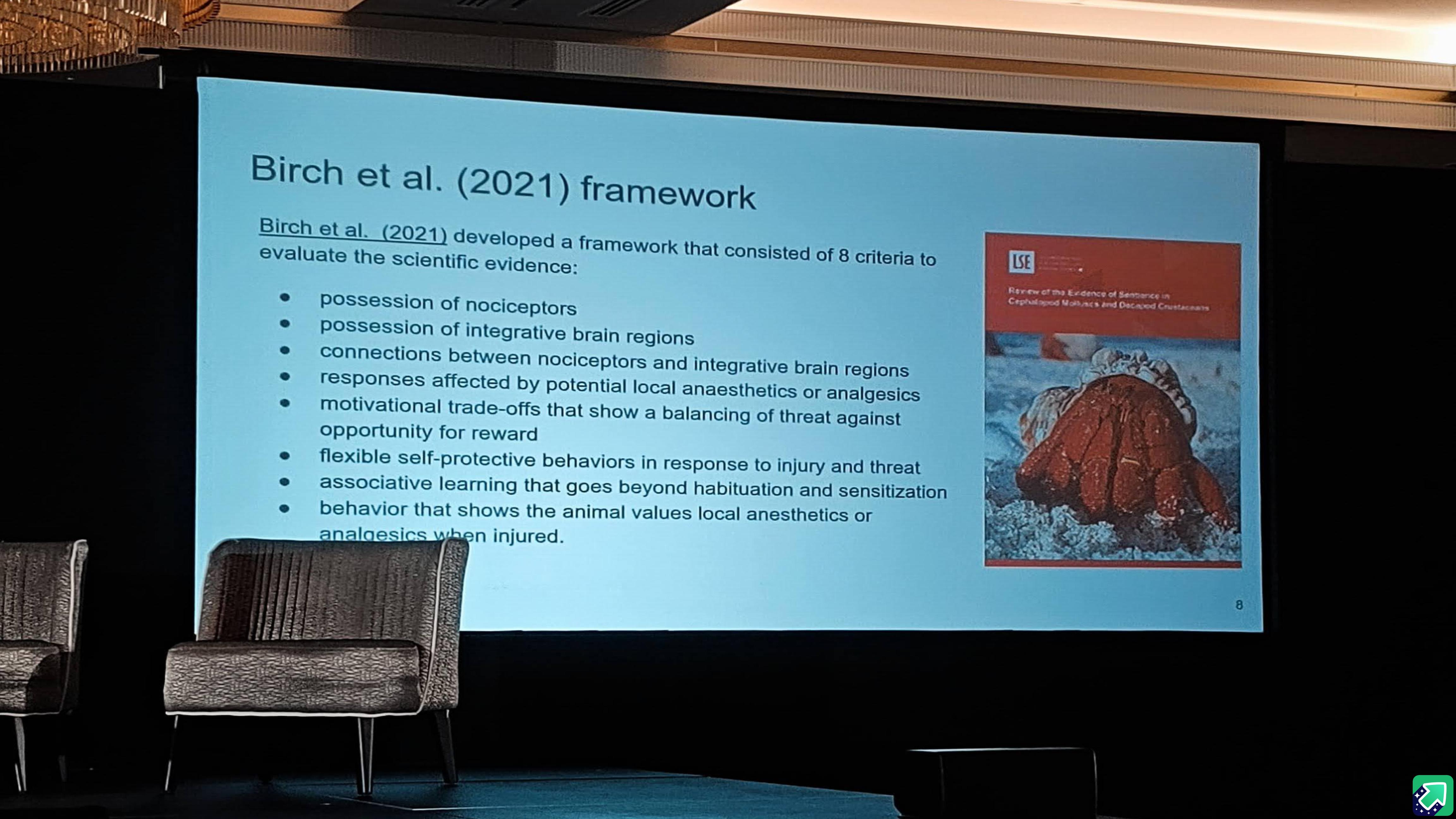

There is a decently high probability that octopuses and crabs (Cephalopod Molluscs and Decapod Crustaceans) are sentient, that they experience pleasure and pain (Birch et al., 2021). As a result of this report, the UK has protected their welfare in their most recent Animal Welfare Act.

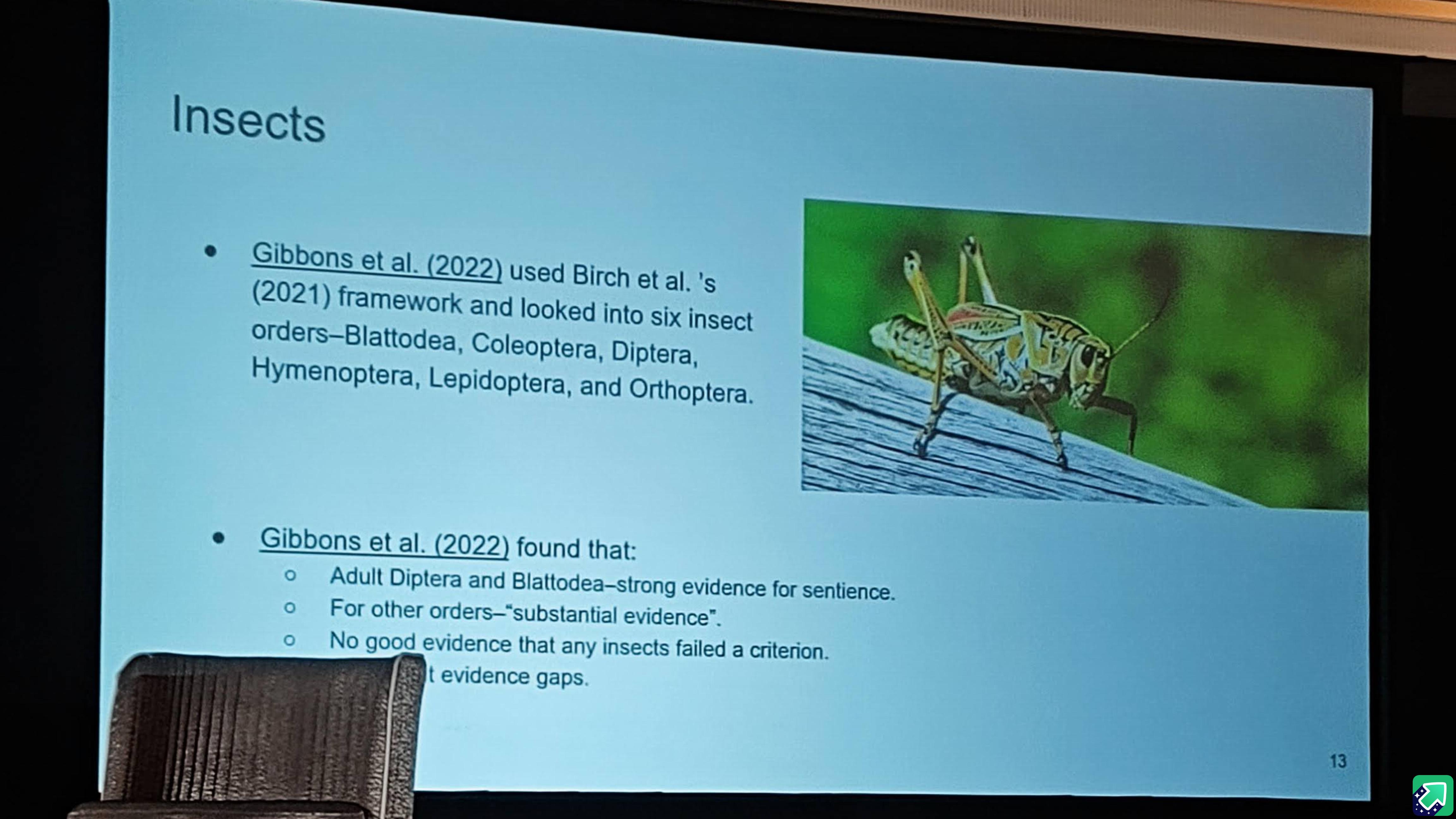

More recently, Gibbons et al. (2022) have used Birch et al.'s framework to study six orders of insects. To summarize their conclusions, they found strong evidence for pain experiences in flies, mosquitoes, cockroaches and termites (Diptera, Blattodea). They found substantial evidence in adult bees, wasps, ants, sawflies, crickets, grasshoppers, butterflies and moths (Hymenoptera, Orthoptera, Lepidoptera), and some evidence in adult beetles (Coleoptera). They found no good evidence against sentience in any insect at any life stage. Lower ratings reflected absence of evidence, rather than negative evidence, so we need further research on insects. But Gibbons et al.'s report already seems quite interesting and compelling to me.

There is also the New York Declaration on Animal Consciousness that launched earlier this year, signed by over 250 academics who do related work on these issues, which includes the following two principles:

"Second, the empirical evidence indicates at least a realistic possibility of conscious experience in all vertebrates (including reptiles, amphibians, and fishes) and many invertebrates (including, at minimum, cephalopod mollusks, decapod crustaceans, and insects). Third, when there is a realistic possibility of conscious experience in an animal, it is irresponsible to ignore that possibility in decisions affecting that animal. We should consider welfare risks and use the evidence to inform our responses to these risks."

So there is a non-negligible chance that insects matter morally because they are sentient.

What's the takeaway from this? There could be a range of takeaways, depending on your moral beliefs, but many of the most reasonable conclusions would have very radical results on your morality.

Say this report means you consider there's a 1% chance that insects are sentient. These are not extremely low, Pascal's Mugging numbers. A chance like one in a thousand is comparable to the risk of a car crash when drunk driving: we ban drunk driving because of a low risk, such as one in a thousand, of a great harm like killing or injuring another person. It is similarly unwise to exclude invertebrates from our moral circle because the chance of sentience is one in a hundred or one in a thousand (I borrow this analogy from Jeff Sebo).

I personally lean towards a credence above 10% that some species of insects are sentient. Maybe this seems too high for the evidence we currently have, but I am already taking into consideration what I think future evidence will show. It seems to me that insects exhibit nociception and other important markers that signal pain. Pain also seems like something that would arise quite early in the evolutionary line. I predict that further research will raise an initial 1% conservative credence significantly.

Sheer Numbers.

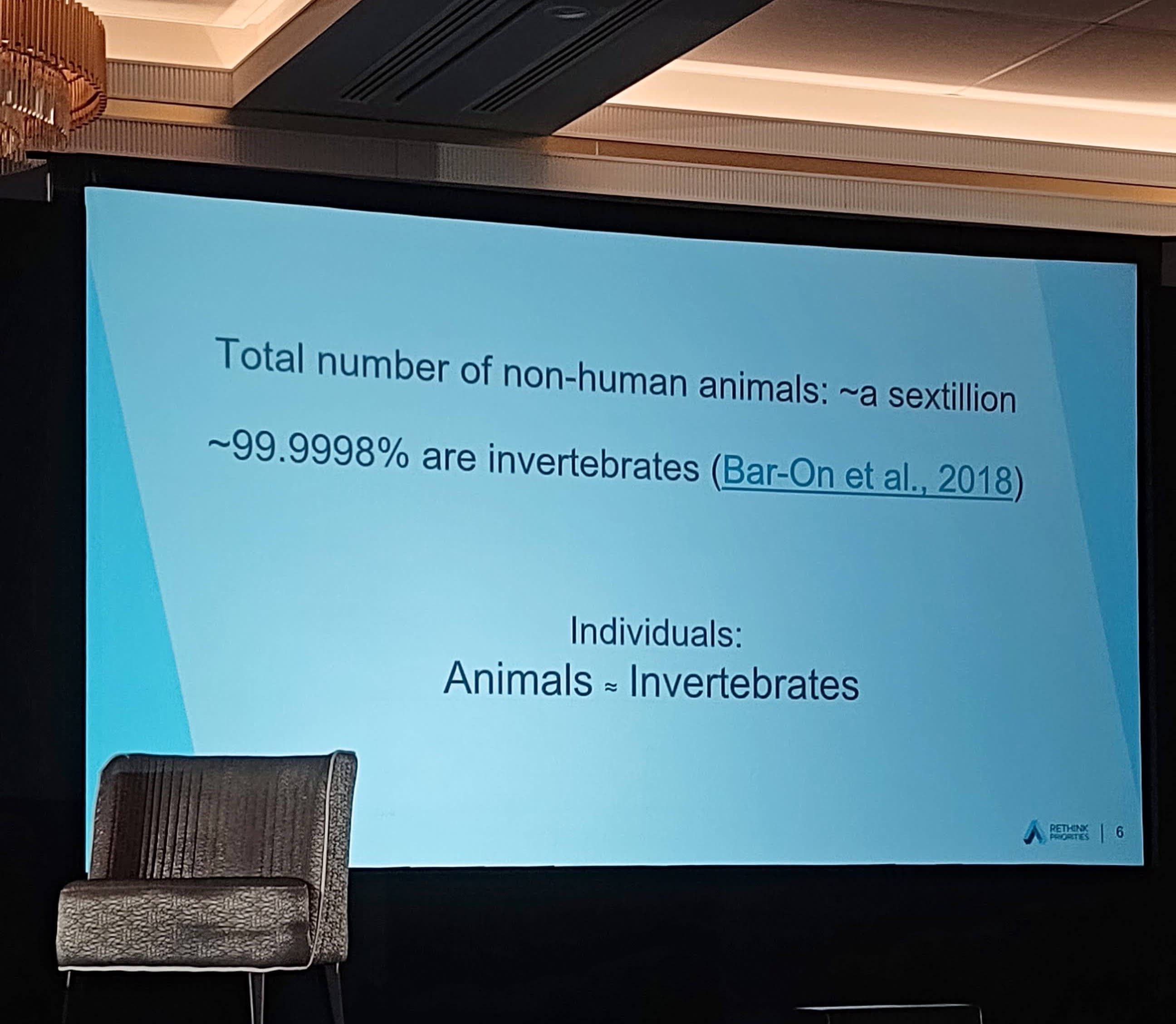

According to a very rough estimate by the Smithsonian Museum, there are about 10 quintillion (10^19) insects alive today. According to Bar-On et al. (2018), there are about a sextillion (10^21) animals on Earth, of which 99.9998% are invertebrates. Just to give a closer idea, this means that insects outnumber us humans by a factor of roughly 1 billion to 1 (if there are 10^19 of them), up to a factor of roughly 100 billion to 1 (if there are 10^21).
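As a quick check on those ratios, here is a small Python sketch. The two population figures (10^19 and 10^21) are the ones quoted above; the human population of 8 billion is a round figure I am assuming for illustration, and the function name is hypothetical.

```python
# Assumed round figure for the current human population (not from the sources above).
HUMANS = 8 * 10**9

# Population estimates quoted in the text.
LOW_ESTIMATE = 10**19   # rough Smithsonian figure for insects
HIGH_ESTIMATE = 10**21  # Bar-On et al. (2018) figure for all animals

def ratio_to_humans(population, humans=HUMANS):
    """How many individuals there are per human, rounded down."""
    return population // humans

low_ratio = ratio_to_humans(LOW_ESTIMATE)
high_ratio = ratio_to_humans(HIGH_ESTIMATE)

print(f"{low_ratio:,} to 1")   # on the order of 1 billion to 1
print(f"{high_ratio:,} to 1")  # on the order of 100 billion to 1
```

Under that assumption the ratios come out at about 1.25 billion to 1 and 125 billion to 1, which matches the rough orders of magnitude given above.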

Magnitude of Pain.

Many of these animals suffer a lot. Certainly, it seems life in the wild is more cruel, in terms of hunger and disease, than life for us humans. It seems like there's growing consensus that many wild animals live net-negative lives (see e.g. Horta 2010). A particular factor here has to do with r-selection, a reproductive strategy that involves having large numbers of offspring, but where most of them die off before reaching adulthood.

You can also read more and see some videos on the situations of animals in the wild in Animal Ethics.

Regarding the exact magnitude of their pain, there is a lot of uncertainty. It doesn't seem like the intensity of pain would scale with neuron count in a straightforward way. I think we simply need more research. But it seems increasingly clear that they exhibit relevant signs like nociception and other markers of sentience present in other species of animals.

Subjective Experience.

Furthermore, it is possible that insects might have more mental experiences per second than humans. I am no expert in this literature, but it seems a likely possibility. Intuitively, perhaps this helps explain why bugs have such fast reaction speeds. But if insects subjectively experience a lot of things per second, then when they get into a situation of suffering, it is much worse than we might have expected. They would suffer a greater subjective amount of pain than a human would from the same injury. Perhaps take a look at Mogensen 2023 and the bibliography he cites for more on this topic.

All of this combined might mean that invertebrates like insects, when we put all of their population on Earth together, might be more morally important than humans, at least in terms of intrinsic value. This is shocking in terms of moral ramifications. It should make us reprioritize many things.

AI Sentience.

Besides invertebrates, there is a non-negligible chance that future AIs might be sentient.

Likelihood of AI Sentience.

A lot of questions here relate to philosophy of mind. Perhaps the most important is: Is functionalism true? Is a brain made out of silicon atoms rather than carbon atoms, but arranged in exactly the same way, sentient? Maybe, I'm very uncertain. Consciousness might not be substrate independent, that is, the materials that the brain is made out of might change whether there is subjective experience or not. But is this simply "substratism", or discrimination on the basis of physical substrate?

Can microchips, even when they are arranged very differently than our brains, be sentient, or are the realizers of consciousness only in a narrow band of possible arrangements? Again, I'm very uncertain.

How could we tell, anyway? An AI could argue with us for years that it is sentient, and if we were to put it into a robot body, it could exhibit nociception, getting away from sources of pain. But it could just be imitating human or animal behavior, and actually be a p-zombie, with no subjective experience at all. (Plausibly, you don't think the Roomba in your house, when it gets away after bumping into the wall, is sentient in any way... But would complex future AI be like this?)

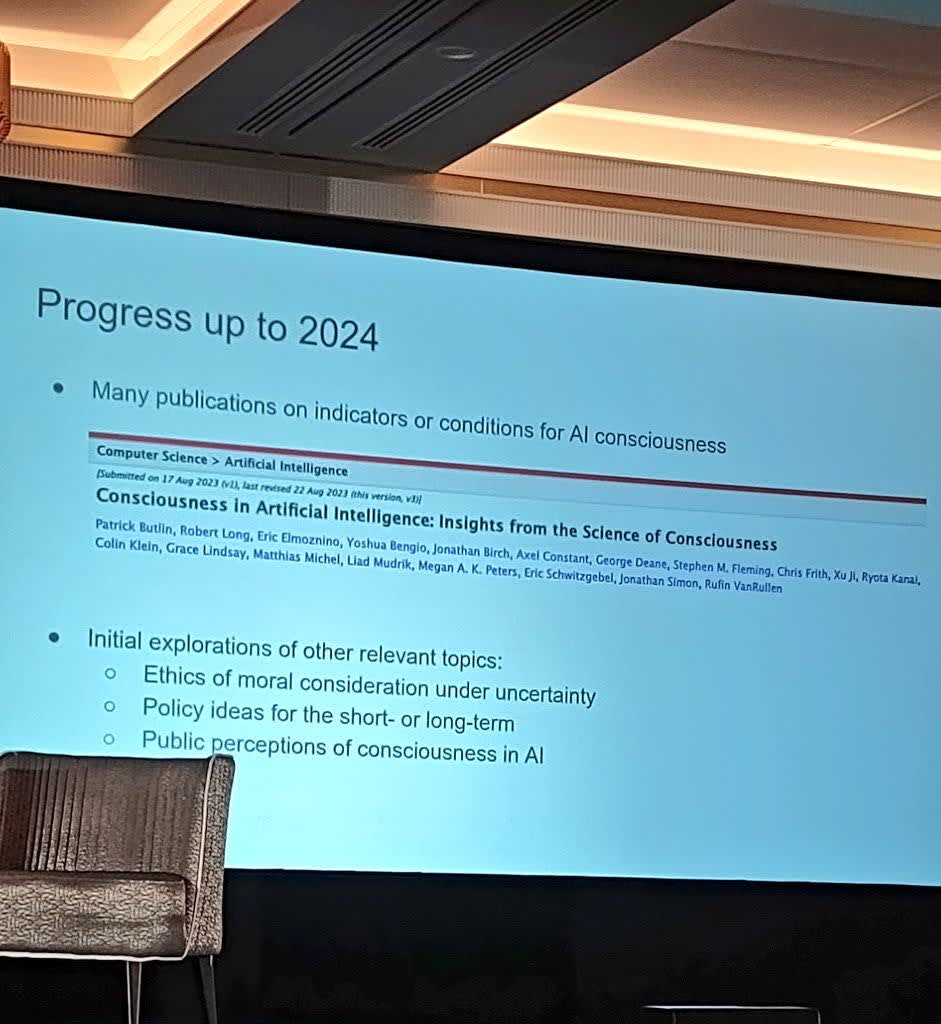

I am more uncertain about AI than I am about invertebrates. At least with invertebrates, we share common ancestry, evolutionary pressures, and biological makeup. With AI, the fact that it's a topic in philosophy of mind rather than neuroscience makes it very intractable: it's hard to verify or refute any hypothesis. Here is a nice discussion by Peter Godfrey-Smith on biology, evolution, and AI sentience. You can also read it as an article. There's also been a big report on this topic by Patrick Butlin, Robert Long et al. if you're interested.

Sheer Numbers.

The possibility of digital sentience could easily mean a massive population of sentient beings that dwarfs the number of humans within a few decades. We might have the possibility of creating an astronomical amount of sentient AI systems that suffer incredible amounts, or that have their preferences constantly frustrated, and without abilities such as nociception for communicating their suffering to us. These could be very simple AI systems, so they would be relatively light to copy to many devices. If a copy of a simple sentient AI is a few gigabytes, that could mean more than trillions of additional sentient beings in a few decades.

Magnitude of Pain.

Suffering seems particularly bad and easy to create in comparison to happiness or pleasure, and we won't understand sentient AI systems deeply enough to make them happy for a while. There are also no biological constraints to their possible suffering, if we build them wrong. We could end up building massive neural centers for pain in their artificial minds. We would need an area of study close to a "neuroscience of AI sentience". This might give us a new precautionary argument for delaying AI development for a few decades.

Subjective Experience.

AIs might have many more mental experiences per second than a human being, as these systems could process valenced subjective experiences much faster than any human, due to fast processing speeds. I hadn't considered this fact until a recent talk by Aditya SK from Animal Ethics at a workshop, but it might really change things in terms of the amount of possible suffering, since they could experience orders of magnitude more subjective suffering in the same amount of objective time.

Anyways, to put everything together, there seem to be a variety of possibilities:

| | Simple | Complex |
| --- | --- | --- |
| Sentient | Simple Sentient AI. S-risk Scenario. I am particularly worried about this case: making billions or more copies of very simple sentient AIs that are able to suffer. | Complex Sentient AI. This is a possibility if sentience requires a relatively high level of complexity to arise. It might be very bad, but not the worst case, since we might not be able to create astronomical numbers of sentient beings with ease. |
| Non-Sentient | Simple Non-Sentient AI. This is your house Roomba. It hits the wall and it reroutes to keep cleaning your floor. I'm not particularly worried about this case. | Complex Non-Sentient AI. This is the case if, no matter how complicated we make an AI, consciousness never arises. It keeps being a tool like a computer. |

The possibility that perhaps worries me most is the first, AIs that are very simple, and thus very light to copy around. Imagine if those machines only have a few weights, but those weights make them sentient, and they suffer a lot of pain per second. That's a truly terrifying prospect.

Overall summary:

| | Invertebrate Sentience | AI Sentience |
| --- | --- | --- |
| Likelihood | >1%, already reasonably captured by a precautionary principle. | A lot more uncertainty due to important unsolved questions in philosophy of mind. |
| Sheer Numbers | Around quintillions (10^19). | None now, potentially far more than quintillions in the future. |
| Magnitude of Pain | Existence is likely to be net negative. Hunger, starvation, and death by the elements are common, and their way of reproduction leads most of them to an early death. | I worry particularly about s-risks, since they might not exhibit aversion to pain (nociception). |
| Subjective Experience | Might experience reality faster than humans, which means more subjective pain per second. | Would likely experience reality much faster than humans, perhaps orders of magnitude faster. Would make s-risks more dangerous. |

Are longtermists still thinking too small? Aliens, Impartiality, and The Point of View of the Universe.

Just to highlight them again, here are two simple areas of concern that I've thought about while listening to talks on wild animal suffering or AI sentience, and how they interact with longtermism and moral impartiality in explosive ways (thus moral circle explosion). I'm sure there could be more. So please, feel free to bring up other scenarios that might be worth considering.

Invertebrate Extraterrestrial Wild Animal Suffering. AKA Alien Bugs.

If invertebrates in outer space are similar to the ones on Earth, then we have moral patients that are suffering across the universe and we might want to reach and help in some way. We might want to plan for how to help them, making sure that interventions on wild animal suffering are net-positive.

There could also be extraterrestrial civilizations out there with digital beings that could be undergoing extreme suffering and that we should aim to help somehow.

Simulated simple minds and S-risks.

If sentient minds are cheap and easy to create, we might end up creating astronomical numbers of moral patients, and not even know that they are moral patients because we think they are mere tools. Then, we might end up making them suffer a lot, and not even realize it because they might even lack the nociception to communicate it to us.

I don't believe that current AIs are sentient, but perhaps future ones with different architectures could be. There is just a lot of uncertainty in philosophy of mind about the nature of subjective phenomenal consciousness.

Existential Anxiety - "Help! My train to crazy town has no brakes!"

Let me now briefly explore the psychological aspects of holding weird moral views. Some people have described moral views like utilitarianism as a train to crazy town. Ajeya Cotra introduced the idea as follows:

"When the philosopher takes you to a very weird unintuitive place — and, furthermore, wants you to give up all of the other goals that on other ways of thinking about the world that aren’t philosophical seem like they’re worth pursuing — they’re just like, stop… I sometimes think of it as a train going to crazy town, and the near-termist side is like, I’m going to get off the train before we get to the point where all we’re focusing on is existential risk because of the astronomical waste argument. And then the longtermist side stays on the train, and there may be further stops."

I fear some people might have taken this idea as a "get out of jail free card" from serious moral theorizing, as if you could simply stop considering ideas that are too weird.

You can do that, but it doesn't mean you should. I worry that there might not be rational brakes to prevent you from reaching crazy town, at least if you are intellectually and morally honest. You can't just "get out" of the discussion, bury your head in the sand, and ignore the weight of suffering in the universe.

Moral alienation.

Doing moral philosophy as a job is weird. It can be deeply alienating from society and the people around you. I accept some weird things that run counter to the moral beliefs of most people. I also believe my amount of "weird beliefs" will grow even further over time, particularly if I keep researching moral philosophy. I find writing moral philosophy to be quite detached from the moral emotions that might originate some of the insights and motivations for the discussion. Moral philosophy can be personally transformative. It can also be deeply disturbing.

This is particularly difficult and taxing if you are not a cheeseburger ethicist. That is, if you care about enacting the moral beliefs you hold, or at least approximating those ideals a little bit. For me, ethics is not just a fun intellectual exercise or puzzle. Ethics is a guide for how to live, for what actions you should take, or might even be obligated to take.

Sadly, the other option seems to me intellectually dishonest, like denying where the arguments lead. It would be both intellectually irresponsible and immoral for me to just bury my head in the sand like an ostrich, and live my life as usual.

Moral anxiety.

Since starting my work and research on the moral circle, the number of moral patients that I believe are in the universe has ballooned, or simply exploded in size. Since reading Singer when I began my undergrad, I already believed in some brand of utilitarianism and cosmopolitanism, and believed that farmed animals matter. I just didn't know that adding longtermism, decision theory, and new empirical evidence about the likelihood of sentience in invertebrates and AI systems could be so dramatic. The issues stack in a multiplicative, rather than additive, way.

Emotionally, I miss simpler times, when I cared only for the people around me, when I cared about what political party was in power, or for the global poor, or for factory farmed animals. Emotionally, I would like to bury my head in the sand. I want to go back to when I thought that the worst things in the world were unemployment, world hunger, or malaria.

Of course, I still do. I try to "purchase my fuzzies and utilons separately". I still go to political protests. I also believe that other EAs and I do way more than the average person towards improving the world. But I am also feeling the pull of the demandingness objection. I could always do more.

Stops I fear: Blowing Up The Universe, Extreme Moral Offsetting, Tiling the Universe with Hedonium, Pascal's Mugging, Infinite Ethics.

There are further stops on the train to crazy town. Here are some even more extreme examples that seem to be either ramifications or considerations worth keeping in mind.

Should we just blow up the universe? It is possible or even likely that wild animal suffering is greater than wild animal happiness. This might mean that the universe is negative in terms of welfare. The brute, initial gut-reaction is to just kill (mercifully, if possible) life across the universe. But that was just my initial reaction, or the reaction of some utilitarians when confronted with wild animal suffering for the first time. Let's see if we can do better than that.

Should we outweigh and offset the suffering in the universe? Perhaps we could populate the universe with invertebrates or sentient AI that only has very positively valenced experiences. It seems like it would make humanity net-positive for the universe.

Of course, we might wonder how far we can reach in the universe, and ponder the limits to space travel. But the more likely bottleneck seems to be how fast we can create sentient beings that experience very net-positive lives. We would like to locate a species that reproduces very fast, can survive on very little and in a wide variety of environments, experiences enormous joy very easily, and doesn't suffer comparably intense pain.

Should we tile the universe with hedonium? If you buy into my version of classical total utilitarianism, this seems to be the ultimate conclusion. We should aim to populate the universe with happy invertebrates, or with computer simulations of very simple AIs that feel extreme joy or fulfill their preferences. You can read Bostrom's Astronomical Waste argument regarding this issue.

If you have accepted a weakened theory, then maybe not. But it's worth thinking about what's the "endgame" of your theory of axiological value. I feel many axiological theories have weird conclusions that stray far from the status quo, but that sometimes only total hedonistic utilitarians are scrutinized, since the ultimate ramifications of their views are clearer than other ethical theories. That doesn't mean that other theories don't have insanely weird ramifications, though.

Pascal's Mugging and Fanaticism. There are some gambles out there that we could play, that would redirect massive amounts of resources from us humans to nonhuman beings that we are not even sure are sentient. For example, Jeff Sebo (2023) discusses the extremely unlikely but non-zero possibility that microbes are sentient. Or you could go crazy extreme, and, following some version of panpsychism, posit that subatomic particles could have sentient, valenced states. It seems like a feature of Bayesian rationality that we shouldn't assign such possibilities outright zero credence, but should place some very tiny credence upon them. But, given the extremely high numbers of microbes or subatomic particles, we could end up redirecting all our resources to them.

Infinite ethics. Maybe the universe is spatially infinite, with alien beings distributed throughout it. It seems irrational to place zero credence on such a thing. But just accepting this as a possibility breaks traditional utilitarianism, since it doesn't matter what you do anymore. Even if you killed a million people, you'd be subtracting a finite number from infinity, which is still infinity.
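A minimal illustration of that arithmetic, using Python's floating-point infinity as a stand-in for the total welfare of an infinite universe. The function name and the numbers are illustrative assumptions, not part of any formal theory.

```python
import math


def total_welfare_after(total: float, change: float) -> float:
    """Return the aggregate welfare after adding a finite change to it."""
    return total + change


# Stand-in for the total welfare of a spatially infinite universe (assumption).
infinite_total = math.inf
# A finite loss, such as the million deaths in the example above.
harm = -1_000_000

# If the aggregate is unchanged, a rule that only compares totals cannot
# tell this act apart from doing nothing.
print(total_welfare_after(infinite_total, harm) == infinite_total)
```

This only shows why comparing totals stops being informative. It says nothing about which of the proposed repairs, if any, is the right one.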

There are some proposals of spacetime-bounded utilitarianism to avoid accepting such issues. At least, it seems it might be reasonable to limit our concern to beings that we can affect in principle (the observable universe) or in practice (a massive sphere of moral concern situated around Earth). I think these fringe scenarios go beyond my mathematical and philosophical abilities, and seem perhaps too detached from my normal experience of ethics to motivate me into action, so I leave this topic to other researchers.

Some good news?

I don't want to be fully negative. So let me outline where I might be wrong, or how we might get lucky and have relatively good moral implications or paths forward.

The history of ethics is still young. The distinction between total and average utilitarianism, for example, has existed for just 40 years, since Parfit's Reasons and Persons. We are likely very wrong about ethics, and as we do more research in moral philosophy, perhaps assisted by superintelligent AIs, we might discover new moral concepts and brilliant ideas that solve a lot of current problems and change our views. It is very hard to see where things will head, but it seems smart to keep options open rather than locking ourselves into a path where we can only fulfill a single ethical theory.

The math could be on our side. Maybe we get lucky. Maybe the intersection between invertebrates and philosophy of mind remains inscrutable, so we never get a good grasp of their sentience. So let's say we attribute 0.01% credence to their sentience. And then, maybe bugs have extremely low capacity for welfare, because experiences of pleasure and pain scale sharply and exponentially with mental complexity, so that an invertebrate with 100,000 neurons can only feel something like 0.0001% of the pain that a human can, since we have 86 billion neurons. We might also be lucky that there are almost no sentient aliens out there in outer space, so we should prioritize focusing on life on Earth. Overall, I am skeptical that the real numbers are actually that low, but maybe we just luck out, and this allows us to deprioritize invertebrates.
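To make the "lucky" scenario concrete, here is a rough sketch of the expected-value arithmetic it relies on. The credence, the welfare capacity and the neuron counts are the figures quoted above. The population figure and all names in the code are assumptions added only for illustration.

```python
def expected_human_equivalents(
    population: float, p_sentient: float, welfare_capacity: float
) -> float:
    """Expected welfare at stake, measured in units of one human's capacity."""
    return population * p_sentient * welfare_capacity


# Figures quoted in the paragraph above.
P_SENTIENT = 0.01 / 100          # 0.01% credence that invertebrates are sentient
WELFARE_CAPACITY = 0.0001 / 100  # 0.0001% of a human's capacity for pain

# For comparison: the plain ratio of the two neuron counts mentioned above.
NEURON_RATIO = 100_000 / 86e9

# ASSUMPTION: a placeholder population size, not a figure from this post.
ASSUMED_POPULATION = 1e19

stake = expected_human_equivalents(ASSUMED_POPULATION, P_SENTIENT, WELFARE_CAPACITY)
print(f"neuron-count ratio: {NEURON_RATIO:.2e}")
print(f"expected human-equivalents at stake: {stake:.2e}")
```

The result scales linearly with each input, so the conclusion is only as good as the population figure and the two tiny probabilities fed into it.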

Spreading genetically modified animals across the universe. It is likely that multiplying invertebrates takes relatively little energy compared to larger animals. It is also likely that, in a few centuries, we will have relatively good technologies for modifying genes, so that we can pick the mental experiences we want those animals to have. In that case, we could prevent any experiences of extreme pain, reduce them into very mild pain, and make them feel extreme joy most of the time.

Sentient AIs could be developed with the capacity for feeling pleasure without the equivalent capacity to feel pain. That is, if we learn enough about AI interpretability, we could try to make AIs that can only feel pleasure or good experiences. Then we could populate the universe with digital minds that have joyful experiences, without the worry of spreading any form of pain.

Longtermism through insects. Humans in the short term, invertebrates and AIs in the long term? Here is an argument for why we need to preserve humanity, even if our intrinsic value is smaller than that of invertebrates and AIs. We need humanity to survive because we need to protect the future, make sure that many invertebrates can, in fact, exist, and that their existence has net positive value. This gives us another argument for existential risk reduction. On Earth, we are the only ones smart enough to prevent invertebrate lives from being net-negative, the only ones capable of creating net-positive artificial sentience, and the only ones capable of spreading positive welfare through outer space.

These are just some ways that we might get lucky and have relatively good moral implications or paths forward. But even most of those "happy" results go through routes that are weird. They lead to the conclusion that the reason we might want to help humanity survive is to help invertebrates or sentient AIs spread and flourish.

What should I do? Giving money, effort, work, time, across cause areas.

As an EA, one of the hardest questions is where to give. So far, I separate my charitable donations into three buckets. I give to the Against Malaria Foundation for human-centric, global health and development donations. I also give to animals, usually Faunalytics, Humane League, or a similar top recommended charity by Animal Charity Evaluators. Regarding longtermism, since there is no "GiveWell for AI" or "GiveWell for Longtermism", I give to the EA Long-Term Future Fund.

Why do I bring up my donations? Well, the issue is determining how much money to allocate to each of the buckets in a non-arbitrary way. So far, I have no principled way of doing it. If I do it by expected value, then it seems I should give all of my charitable money to longtermism, since on the margin that seems most impactful. If I'm risk averse, perhaps a split between AI and animals. And if I add moral uncertainty, where I add some other moral theories into the mix (e.g. contractualism), perhaps that helps give a tiny slice to the Against Malaria Foundation.

But the exact numbers are extremely nebulous to me. As to how much to allocate to each bucket, I have no idea. Perhaps somebody who is good with Excel spreadsheets can help the EA community figure this out.
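One way to make the question concrete is a credence-weighted split, where each moral view controls a share of the budget equal to my credence in it and spends that share as it sees fit. This is only a sketch of one possible approach. The credences, the view labels and the function name below are hypothetical placeholders, not my actual numbers or an established method.

```python
def allocate(budget, credences, preferences):
    """Split a budget across buckets, weighting each view by its credence.

    credences:   view -> credence (normalised here, so they need not sum to 1)
    preferences: view -> {bucket: share of that view's money}, shares sum to 1
    """
    total_credence = sum(credences.values())
    allocation = {}
    for view, credence in credences.items():
        view_budget = budget * credence / total_credence
        for bucket, share in preferences[view].items():
            allocation[bucket] = allocation.get(bucket, 0.0) + view_budget * share
    return allocation


# HYPOTHETICAL credences in each decision rule or moral view.
credences = {
    "expected value": 0.6,
    "risk aversion": 0.3,
    "contractualism": 0.1,
}

# How each view would spend its share, following the paragraph above.
preferences = {
    "expected value": {"longtermism": 1.0},
    "risk aversion": {"longtermism": 0.5, "animals": 0.5},
    "contractualism": {"global health": 1.0},
}

for bucket, amount in allocate(1000, credences, preferences).items():
    print(f"{bucket}: {amount:.2f}")
```

The arithmetic is the easy part. The hard part, which this does not solve, is where the credences come from in the first place.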

Another thing, on the more emotional side, is that it feels horrible to say to most people that you're allocating the majority of your money to long shots that could slightly move the needle on preventing human extinction in order to help tile the universe with hedonium. It's not a pretty idea to sell.

But by giving, I don't mean just giving money to charity. I mean, following the advice of 80,000 Hours, how to allocate my time, career, and effort. Sometimes it's also useful to think about the questions in terms of cause prioritization. What if I was leading the EA movement? What if I was president of a country? How would I allocate my resources?

I've gone to political protests in the past. Was that a waste of time, given the amount of suffering of the universe? I know that the weight of suffering of humans isn't reduced by alien invertebrate suffering. But, in some way, it seems harder to prioritize them. After all, we need to distribute money, time, resources in smart ways, right?

I'm baffled, I feel somewhat anxious, uneasy. I feel bad about any time I waste that isn't related to astronomical numbers. And it's also hard to know where the line between real issues and mere intellectual exercise lies. I used to think that "wild animal welfare" was an intellectual exercise. Currently I think that alien invertebrate welfare is a real issue, but that infinite ethics is a pure intellectual exercise. But who knows, I might be wrong. I find that prospect alienating and anxiety-inducing.

Stepping back and strategizing. Some actionable points.

Even through existential moral anxiety, we need to step back and strategize. As a way to deal with these long-term issues, we need to find long-term strategies. Hopefully I convinced you that the topic is Neglected and Important. We could also make it more Tractable with further research.

So, to wrap up, I want to promote good interesting recent work on these areas, in order to further build a group of researchers and a research agenda around these topics. If there is something important I missed, let me know and I can update my post.

Invertebrate Sentience.

The people at my department (LSE Philosophy) are doing great work on this topic under the Foundations of Animal Sentience project, led by Jonathan Birch. In the US, I can highlight the work of Jeff Sebo and his department, which just received a big endowment, so we would expect it to expand and grow very soon. Daniela Waldhorn and Bob Fischer from Rethink Priorities are also doing cool work. Check out their Moral Weights Project or their Estimates of Invertebrate Sentience, for example. And check out the Welfare Footprint Project too.

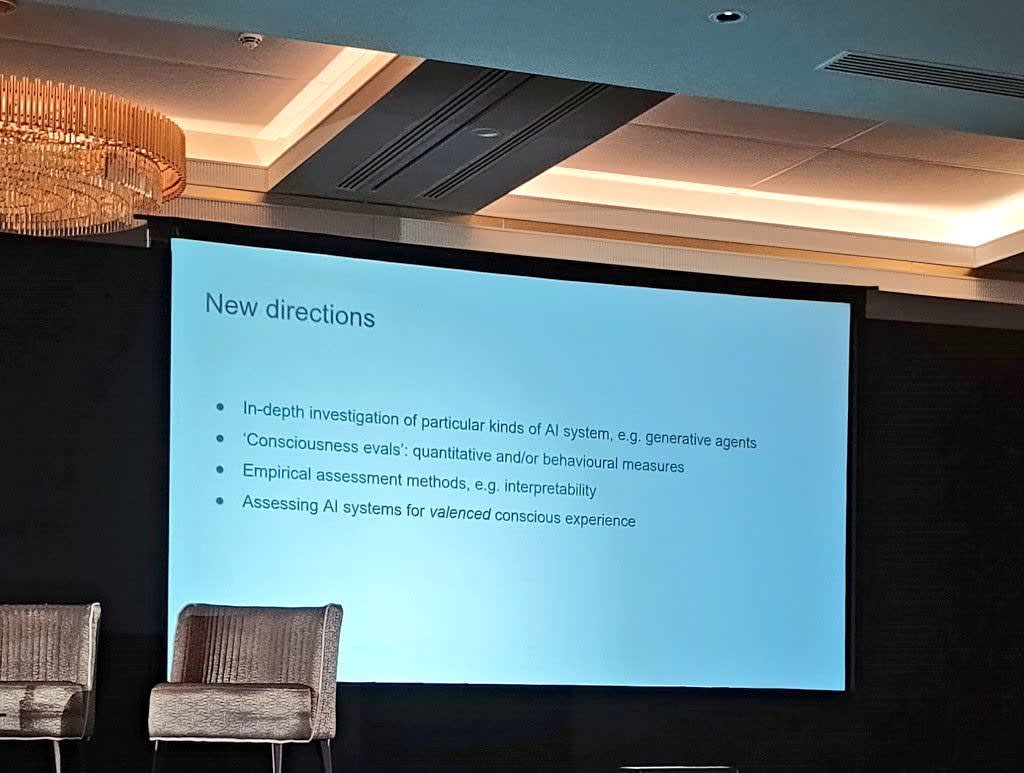

AI Sentience.

If you work on philosophy of mind, it seems interesting to investigate the topic of AI sentience. Could AI systems have valenced experiences? In particular, the discussion over functionalism vs. substrate-dependence seems relevant. The talk by Peter Godfrey-Smith might be a good place to start. There is also the recent report by Patrick Butlin, Robert Long, et al., and you can see some discussion of that report here. Also, here is a research agenda on digital minds by Sentience Institute and here is another by the Global Priorities Institute.

Policy-Making and Advocacy.

We also need to convert knowledge on these topics into points for practical policy-making and advocacy. Though perhaps this advocacy should be targeted to particular groups, such as AI researchers, AI policy people, and some Animal Welfare organizations, since the ideas are still fringe and niche. So far, I haven't found many resources on this. But consider checking out Animal Ethics on Strategic Considerations for Effective Wild Animal Suffering Work, which has some pointers on advocacy, and some blog posts by Sentience Institute that touch on some of these areas.

Conclusion.

I'm aware I covered a lot of topics, and that the post is a bit of a disjointed mess. More than anything, it's a bit of an anxious feeling about how morality can become weird and induce off-putting sources of stress.

I will be okay. I need some time to digest changes of moral views, calm down, and intellectualize them. It is just plain weird that alien invertebrates or sentient AIs might be by far the most important moral subjects in the universe. I didn't sign up for this when I read Peter Singer years ago!

In some ways, I'm baffled by the levity with which moral philosophers and many people treat their discipline. Morality is not a game. For many, it's life or death.

A big wave is coming. The 21st century might be the weirdest century in human history. The history of ethics has just begun.

Some key readings on the topic.

Sebo, J. (2023). The Rebugnant Conclusion: Utilitarianism, Insects, Microbes, and AI Systems. Ethics, Policy and Environment 26 (2):249-264.

Sebo, J., Long, R. (2023) Moral consideration for AI systems by 2030. AI Ethics.

Sebo, J. (forthcoming) Moral Circle Explosion in The Oxford Handbook of Normative Ethics.

Sebo, J. (forthcoming) The Moral Circle (book).

Jeff Sebo on digital minds, and how to avoid sleepwalking into a major moral catastrophe. The 80,000 Hours Podcast.

Fischer, B., Sebo, J. (2024). Intersubstrate Welfare Comparisons: Important, Difficult, and Potentially Tractable. Utilitas 36 (1):50-63.

Jonathan Birch - The Edge of Sentience: Risk and Precaution in Humans, Other Animals, and AI (book). Free to download when it releases in August, so subscribe to the mailing list here.

O’Brien, G. D. (2024). The Case for Animal-Inclusive Longtermism. Journal of Moral Philosophy.

Saad, B., & Bradley, A. (2022). Digital suffering: why it’s a problem and how to prevent it. Inquiry.

Animal Ethics - Introduction to Wild Animal Suffering: A Guide to the Issues.

Animal Ethics - The New York Declaration on Animal Consciousness stresses the ethical implications of animal consciousness.

Groff, Z., & Ng, Y.K. (2019). Does suffering dominate enjoyment in the Animal Kingdom? An update to welfare biology. Biology & Philosophy, 34(4), 40.

Horta, O. (2010). Debunking the idyllic view of natural processes: Population dynamics and suffering in the wild. Telos: Revista Iberoamericana de Estudios Utilitaristas, 17(1), 73–90.

Schukraft, J. (2020). Differences in the intensity of valenced experience across species. EA Forum.

Tomasik, B. (2015). The importance of wild animal suffering.

Tomasik, B. (2009) Do Bugs Feel Pain?

Faria C. (2022) Animal Ethics in the Wild: Wild Animal Suffering and Intervention in Nature. Cambridge University Press.

I have nothing to say about the philosophical arguments. But I think it's important to adopt mental frameworks that are compatible with you having a fulfilling life. To me, that is the highest moral principle, but that's why I'm not a moral philosopher. To you, who cares more about moral truth seeking, I appeal to the life jacket heuristic; you should always put the life jacket on yourself first, and then others, because you're no good to other people if you don't take care of yourself first. A mental framework that leaves you incapacitated by moral anxiety is detrimental to your ability to help all sentient creatures.

Concretely, I encourage you not to think yourself into nightmares. If your views push you to internalize a moral conclusion, you can take that conclusion without internalizing the moral panic that prompted that conclusion. You can decide that AI sentience and invertebrate welfare are the top moral priorities without waking up in a cold sweat about them. You can think about possibilities like alien welfare as questions to be answered rather than sources of anxiety.

And if you fail to live up to your moral standards, be disappointed, strive to be better, but don't doom spiral. Ultimately, we are human and fragile. Don't break yourself with the weight of being something more.

A couple of further considerations, or "stops on the crazy train", that you may be interested in:

(These were written in an x-risk framing, but implications for s-risk are fairly straightforward.)

As far as actionable points, I've been advocating working on metaphilosophy or AI philosophical competence, as a way of speeding up philosophical progress in general (so that it doesn't fall behind other kinds of intellectual progress, such as scientific and technological progress, that seem likely to be greatly sped up by AI development by default), and improving the likelihood that human-descended civilization(s) eventually reach correct conclusions on important moral and philosophical questions, and will be motivated/guided by those conclusions.

In posts like this and this, I have lamented the extreme neglect of this field, even among people otherwise interested in philosophy and AI, such as yourself. It seems particularly puzzling why no professional philosopher has even publicly expressed a concern about AI philosophical competence and related risks (at least AFAIK), even as developments such as ChatGPT have greatly increased societal attention on AI and AI safety in the last couple of years. I wonder if you have any insights into why that is the case.

I agree that there is a lot of uncertainty, but don't understand how that is compatible with a <1% likelihood of AI sentience. Doesn't that represent near certainty that AIs will not be sentient?

Thanks a lot for the links, I will give them a read and get back to you!

Regarding the "Lower than 1%? A lot more uncertainty due to important unsolved questions in philosophy of mind." part, it was a mistake because I was thinking of current AI systems. I will delete the % credence since I have so much uncertainty that any theory or argument that I find compelling (for the substrate-dependence or substrate-independence of sentience) would change my credence substantially.

Thanks so much for this summary, this is one of my favorite easy-to-read pieces on the forum in recent times. You were so measured and thoughtful and it was super accessible. Here's a couple of things I found super interesting.

Even if (like me) you reject hedonistic utilitarianism pretty hard, I agree it doesn't necessarily change the practical considerations too much. I like the framework where if you weaken hedonism, long termism, impartialism or moral realism (like I do for all of them quite a lot), this might still end up not being able to dismiss invertebrates or digital minds. I don't like that I can't dismiss these things completely, but I feel I can't all the same.

I also liked the threshold point you discuss where a low percentage might become "pascal's-mugging-ish". Or even just what percentage chance of disastrous suffering is enough for us to care about something. Some people can motivate themselves by pure expected value numbers, I cannot. I'm not sure if there's been a survey among EAs which checks what percentage possibilities of suffering or success we are willing to engage with (e.g. 1%, 0.0001%, any no matter how low) and on what level we are willing to engage (discuss, donate, devote whole life).

Even at a sentience chance as high as 1 percent for insects, I don't think I could motivate myself to dedicate my life's work to that (given there's a 99 percent chance everything I do is almost useless), but with that probability I might consider never eating them, going to a protest against farming insects or donating to an insect welfare org. Others will have different thresholds, and I really respect folks who can motivate themselves purely based on the raw expected value of the work they do.

Why do you not recommend policy and/or advocacy for taking the possibility of AI sentience seriously? I'm pretty concerned that even if AI safety gets taken seriously by society, the likely frame is Humanity vs. AI: humans controlling AI and not caring about the possibility of AI sentience. This is a very timely topic!

Also, interesting factoid: Geoffrey Hinton believes current AI systems are already conscious! (Seems very overconfident to me)

Oh, and I was incredibly disappointed to read Good Ventures is apparently not going to fund work on digital sentience?

Re: Advocacy, I do recommend policy and advocacy too! I guess I haven't seen too many good sources on the topic just yet. Though I just remembered two: Animal Ethics https://www.animal-ethics.org/strategic-considerations-for-effective-wild-animal-suffering-work/ and some blog posts by Sentience Institute https://www.sentienceinstitute.org/research

I will add them at the end of the post.

I guess I slightly worry that these topics might still seem too fringe, too niche, or too weird outside of circles that have some degree of affinity with EA or weird ideas in moral philosophy. But I believe that the Overton window will shift inside some circles (some animal welfare organizations, AI researchers, some AI policymakers), so we might want to target them rather than spreading these somewhat weird and fringe ideas to all of society. Then they can push for policy.

Re: Geoffrey Hinton, I think he might subscribe to a view broadly held by Daniel Dennett (although I'm not sure Dennett would agree with the interpretation of his ideas). I guess in the simplest terms, it might boil down to a version of functionalism, where since the inputs and outputs are similar to a human, it is assumed that the "black box" in the middle is also conscious.

I think that sort of view assumes substrate-independence of mental states. It leads to slightly weird conclusions such as the China Brain https://en.wikipedia.org/wiki/China_brain , where people arranged in a particular way, doing the same function as neurons in a brain, would make the nation of China a conscious entity.

Besides that, we might also want to distinguish consciousness and sentience. We might get cases with phenomenal consciousness (basically, an AI with subjective experiences, and also thoughts and beliefs, possibly even desires) but no valenced states of pleasure and pain. While they come together in biological beings, these might come apart in AIs.

Re: Lack of funding for digital sentience, I was also a bit saddened by that news. Though Caleb Parikh did seem excited for funding digital sentience research. https://forum.effectivealtruism.org/posts/LrxLa9jfaNcEzqex3/calebp-s-shortform?commentId=JwMiAgJxWrKjX52Qt

Thanks!

Tbh, I think the Overton window isn't so important. AI is changing fast, and somebody needs to push the Overton window. Hinton says LLMs are conscious and still gets taken seriously. I would really like to see policy work on this soon!

Damn, I really resonated with this post.

I share most of your concerns, but I also feel that I have some even more weird thoughts on specific things, and I often feel like, "What the fuck did I get myself into?"

Now, as I've basically been into AI Safety for the last 4 years, I've really tried to dive deep into the nature of agency. You get into some very weird parts of trying to computationally define the boundary between an agent and the things surrounding it and the division between individual and collective intelligence just starts to break down a bit.

At the same time I've meditated a bunch and tried to figure out what the hell the "no-self" theory to the mind and body problem all was about and I'm basically leaning more towards some sort of panpsychist IIT interpretation of consciousness at the moment.

I also believe that only the "self" can suffer and that the self is only in the map and not the territory. The self is rather a useful abstraction that is kept alive by your belief that it exists since you will interpret the evidence that comes in as being part of "you." It is therefore a self-fulfilling prophecy or part of "dependent origination".

A part of me then thinks the most effective thing I could do is examine the "self" definition within AIs to determine when it is likely to develop. This feels very much like a "what?" conclusion, so I'm just trying to minimise x-risk instead, as it seems like an easier pill to swallow.

Yeah, so I kind of feel really weird about it, so uhh, to feeling weird, I guess? Respect for keeping going in that direction though, much respect.

Just wanted to point out that the distinction between total and average utilitarianism predates Derek Parfit's Reasons and Persons, with Henry Sidgwick discussing it in the Methods of Ethics from 1874, and John Harsanyi advocates for a form of average utilitarianism in Morality and the Theory of Rational Behaviour from 1977.

Other than that, great post! I feel for your moral uncertainty and anxiety. It reminds me of the discussions we used to have on the old Felicifia forums back when they were still around. A lot of negative leaning utilitarians on Felicifia actually talked about hypothetically ending the universe to end suffering and such, and the hedonium shockwave thing was also discussed a fair bit, as well as the neuron count as sentience metric proxy thing.

A number of Felicifia alumni later became somewhat prominent EAs like Brian Tomasik and Peter Wildeford (back then he was still Peter Hurford).

Executive summary: Impartialist sentientism combined with evidence for invertebrate and AI sentience leads to radical moral conclusions about the astronomical importance of invertebrate and digital welfare across the universe, causing existential anxiety but also motivating further research and action on these neglected issues.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

Is it possibly good for humans to go extinct before ASI is created, because otherwise humans would cause astronomical amounts of suffering? Or might it be good for ASI to exterminate humans because ASI is better at avoiding astronomical waste?

Why is it reasonable to assume that humans must treat potentially lower sentient AIs or lower sentient organic lifeforms more kindly than sentient ASIs that have exterminated humans? Yes, such ASIs extinguish humans by definition, but humans have clearly extinguished a very large number of other beings, including some human subspecies as well. From this perspective, whether or not humans are extinct or whether or not ASIs will extinguish humans may be irrelevant, as the likelihood of both kinds of (good or bad) astronomical impacts seems to be equally likely or actually detrimental to the existence of humans.

"Is it possibly good for humans to go extinct before ASI is created, because otherwise humans would cause astronomical amounts of suffering? Or might it be good for ASI to exterminate humans because ASI is better at avoiding astronomical waste?"

These questions really depend on whether you think that humans can "turn things around" in terms of creating net positive welfare for other sentient beings, rather than net negative. Currently, we create massive amounts of suffering through factory farming and environmental destruction. Depending on how you weigh those things, it might lead to the conclusion that humans are currently net-negative for the world. So a lot turns on whether you think the future of humanity would be deeply egoistic and harmful, or if you think we can improve substantially. There are some key considerations you might want to look into, in the post The Future Might Not Be So Great by Jacy Reese Anthis: https://forum.effectivealtruism.org/posts/WebLP36BYDbMAKoa5/the-future-might-not-be-so-great

"Why is it reasonable to assume that humans must treat potentially lower sentient AIs or lower sentient organic lifeforms more kindly than sentient ASIs that have exterminated humans?"

I'm not sure I fully understand this paragraph, but let me reply to the best of my abilities from what I gathered.

I haven't really touched on ASIs in my post at all. And, of course, currently no ASIs have killed humans since we don't have ASIs yet. They might also help us flourish, if we manage to align them.

I'm not saying we must treat less-sentient AIs more kindly. If anything, it's the opposite! The more sentient a being is, the more moral worth they will have, since they will have stronger experiences of pleasure and pain. I think we should promote the welfare of beings in ways that are correlated to their abilities for welfare. But it might be an empirical fact that we might want to promote the welfare of simpler beings rather than more complex ones because they are easier/cheaper to copy/reproduce and help. There might be also more sentience, and thus more moral worth, per unit of energy spent on them.

"Yes, such ASIs extinguish humans by definition, but humans have clearly extinguished a very large number of other beings, including some human subspecies as well."

We have currently driven many other species to extinction through environmental destruction and climate change. I think this is morally bad and wrong, since it is possible (e.g. invertebrates) to probable (e.g. vertebrates) that these animals were sentient.

I tend to think in terms of individuals rather than species. By which I mean: Imagine you were in a moral dilemma where you had either to fully exterminate a species by killing the last 100 members, or to kill 100,000 individuals of a very similar species without making it extinct. I tend to think of harm in terms of the individuals killed or potential thwarted. In such a scenario, it is possible that we might prefer that some species become extinct, since what we care about is promoting overall welfare. (Though second-order effects on biodiversity make these things very hard to predict.)

I hope that clarifies some things a little. Sorry if I misunderstood your points in that last paragraph.

I'm not talking about the positive or negative sign of the net contribution of humans, but rather the expectation that the sign of the net contribution produced by sentient ASI should be similar to that of humans. Coupled with the premise that ASI alone is more likely to do a better job of full-scale cosmic colonization faster and better than humans, this means that either sentient ASI should destroy humans to avoid astronomical waste, or that humans should be destroyed prior to the creation of sentient ASI or cosmic colonization to avoid further destruction of the Earth and the rest of the universe by humans. This means that humans being (properly) destroyed is not a bad thing, but instead is more likely to be better than humans existing and continuing.

Alternatively, ASI could be created with the purpose of maximizing perpetually happy sentient low-level AI/artificial life rather than paperclip manufacturing, in which case humans would either have to accept that they are part of this system or be destroyed, as this is not conducive to maximizing average or overall hedonism. This is probably the best way to maximize the hedonics of sentient life in the universe, i.e. utility monster maximizers rather than paperclip maximizers.

I am not misunderstanding what you are saying, but pointing out that these marvelous trains of thought experiments may lead to even more counterintuitive conclusions.