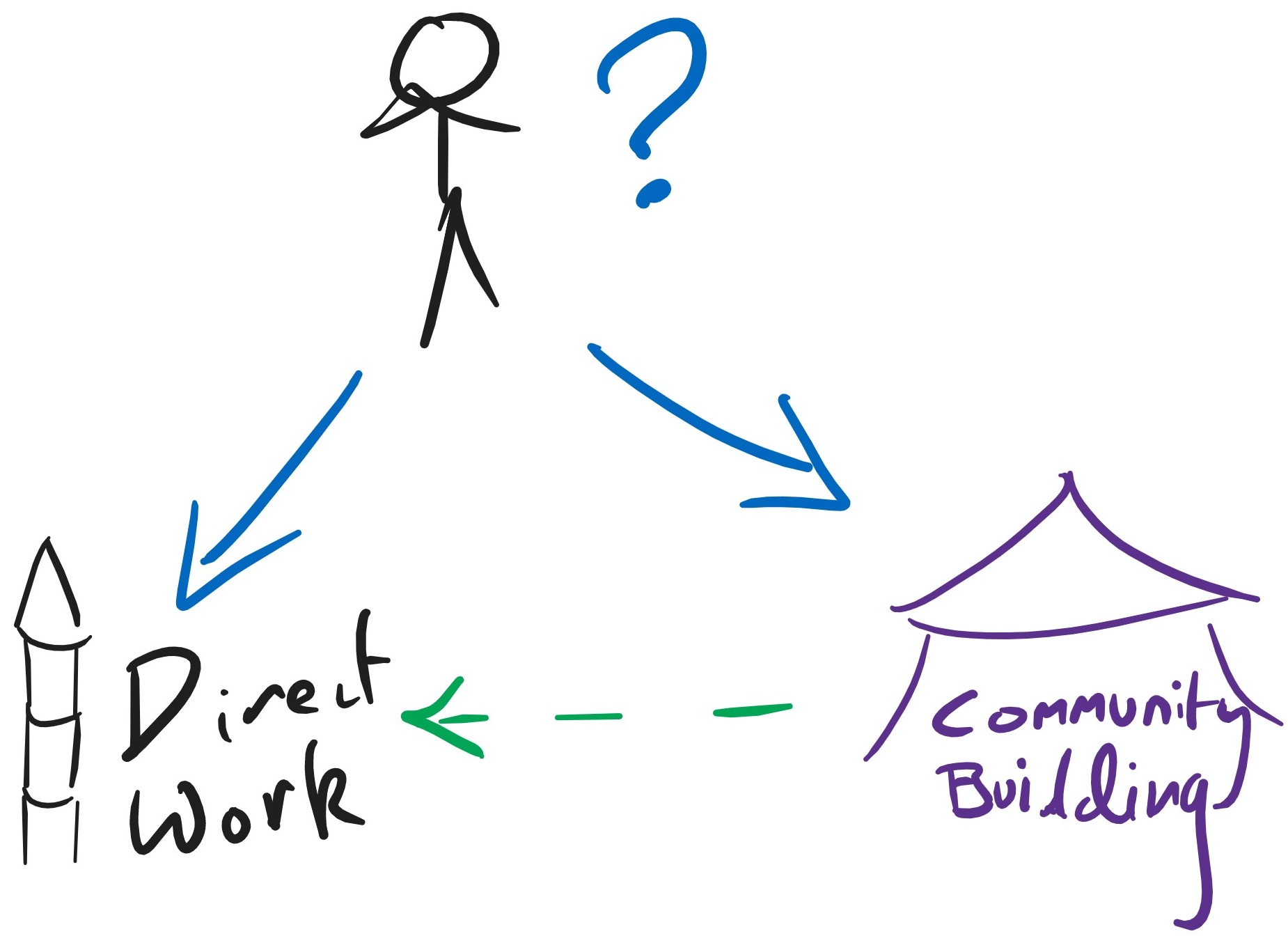

Here's a cartoon picture I think people sometimes have:

I think this is misguided and can be harmful.

The appeal of the picture is that specialization generally brings big benefits; since there are two large buckets of work, it's natural to think they should be pursued by specialists working in specialist orgs.

The reason that the specialization argument doesn't just go through is that the things we're trying to build communities for are complicated. I think community-building work is often a lot better when it's high context on what's being done and what's needed in direct work.[1] In some cases this could shift it from negative to positive; in others it merely provides a significant boost. By default in the "separate camps" model, the community-building camp simply isn't high enough context on the direct work camp to achieve that.

Rather than separate camps, I think that it's better to think of there being one camp, which is oriented around "direct work". A good amount of community-building work goes on there, but it's all pretty well integrated with the direct work (often with heavy overlap of the people involved).

At an individual level, I think it's often correct for people to multi-class between direct work and community building. Once you have expertise in direct work and what's needed, there's low-hanging fruit in leveraging that expertise for community building (e.g. via giving talks or mentoring junior people). Or if you're mostly focused on community building, I think you'll often benefit from spending a fraction of your time really trying to get to the bottom of direct work agendas, and exactly why people are pursuing different strategies. One way to get solid grounding for this is to actually spend a fraction of your time aiming to do high-value direct work, but that's certainly not the only approach: reading content and (especially) talking to people engaged in direct work about their bottlenecks also helps. As a very rough rule of thumb, I think it's good if people doing community-building work spend at least 20% of their time obsessing over the details of what's needed in direct work.[2]

Clarifications & caveats to these claims:

- 20% time doesn't need to mean a day every week. It could be ten weeks a year, or one year every five. (It probably shouldn't be stretched longer than that, because knowledge will go stale.)

- I mean to refer to people trying to build communities aimed at increasing direct work. I think it's fine to have separate specialist people/orgs for things like:

    - Education: e.g. if you had an org aimed at getting more people to intuitively understand scope-sensitivity

    - Building communities that aren't aimed at direct work: e.g. if you want to build a community of AI researchers who read science fiction together and think about the future of AI

- It's fine to have professional facilitators who are helping the community-building work without detailed takes on object-level priorities, but they shouldn't be the ones making the calls about what kind of community-building work needs to happen

- The whole 20% figure is me plucking a number that feels reasonable out of the air. If someone wants to argue that it should be 12% or 30% I'm like "that's plausible". If someone wants to argue that it should be 2% or 80% I'm going to be pretty sceptical.

- I think a lot of people who are working professionally in community building understand all of this intuitively. But it doesn't seem to me to be uniformly understood/discussed, so I thought it was worth sharing the model.

Corollaries of this view:

- People who want to do community building work should be particularly interested in doing so in environments which give them high access to people doing direct work

- People doing direct work should be excited to look for high-leverage ways to apply their knowledge to help community building, like:

    - Spending time uploading their models to community builders (so long as they can find ones they're excited to work with)

    - Meeting directly with promising people, perhaps referred to them by community builders, even when it isn't immediately helpful to their goals

- More people should consider moving between community building and direct work roles through their careers

    - (For especially technical direct work roles it may be relatively hard to move into them later, but there are plenty of roles which aren't like this)

[1] A straightforward case is that it's often very valuable for people who might get involved to talk to someone with sophisticated models of what's being done in different areas, so that they have a chance to interrogate these models.

[2] And probably more than that early in their careers; cf. "Community Builders Spend Too Much Time Community Building".

I used to agree more with the thrust of this post than I do, and now I think this is somewhat overstated.

[Below written super fast, and while a bit sleep deprived]

An overly crude summary of my current picture: if you do community-building via spoken interactions, it's somewhere between "helpful" and "necessary" to have a substantially deeper understanding of the relevant direct work than the people you're trying to build community with, and also to be the kind of person they find impressive, worth listening to, and admirable. Additionally, being interested in direct work is correlated with a bunch of positive qualities that help with community-building (like being intellectually curious and having interesting and informed things to say on many topics). But in my experience (which seems to differ from e.g. Oliver's), not a ton of it is actually needed for many kinds of extremely valuable community building. And I think people who emphasize the value of keeping up with direct work sometimes conflate the value of e.g. knowing about new directions in AI safety research with the broader value of becoming a more informed person and gaining the intellectual benefits of practice engaging with object-level rather than social problems.

Earlier on in my role at Open Phil, I found it very useful to spend a lot of time thinking through cause prioritization, getting a basic lay of the land on specific causes, thinking through what problems and potential interventions seemed most important and becoming emotionally bought-in on spending my time and effort on them. Additionally, I think the process of thinking through who you trust, and why, and doing early audits that can form the foundation for trust, is challenging but very helpful for doing EA CB work well. And I'm wholly in favor of that, and would guess that most people that don't do this kind of upfront investment are making an important mistake.

But on the current margin, the time I spend keeping up with e.g. new directions in AI safety research feels substantially less important than time spent implementing my core projects, and it's almost never directly decision-relevant (though there are some exceptions: e.g. I could imagine information that would update me a lot about AI timelines, as such information historically has, and this would flow through to making different decisions in concrete ways). Examining what's going on there, it seems like most decisions I make as a community-building grantmaker are too crude to be affected much by additional intra-cause information at that level of granularity, and when I think about lots of other community-building-related decisions, the same seems true.

For example, if I ask a bunch of AI safety researchers what kinds of people they would like to join their teams, they often give pretty similar versions of "very smart, hardworking people who grok our goals and are extremely gifted in a field like math or CS". And I'm like "wow, that's very intuitive, and it has been true for years without changing". In my experience, subtle differences between alignment agendas don't translate into enough difference in people's views about what kinds of recruitment are good for digging into them to have been a good use of my time. This is especially true given that the places where informed, intelligent people with various important-to-me markers of trustworthiness disagree are places where I find it particularly difficult for an outsider to gain much justified confidence.

Another test case: I spent a few years focusing heavily on Open Phil's biosecurity strategy, and I formed a lot of my own pretty nuanced and intricate views about it. I've never dived as deep on AI. But I notice that I didn't find my own views about biosecurity that helpful for many broader community-building tradeoffs and questions, compared to the counterfactual of trusting the people in the space who seemed most trustworthy to me (which I think I could have identified using a bunch of proxies that didn't involve forming my own models of biosecurity) and catching up with or interviewing them every six months about what it seems helpful to know (which is more similar to what I do with AI). Idk, this feels more like 5-10% of my time, though maybe I absorb additional context via osmosis from social proximity to people doing direct work, and maybe this is helpful in ways that aren't apparent to me.

A lot of what Claire says rings true to me.

Just to focus on my experience:

It could be that I'm underweighting the long-term benefits of being generally better informed or non-directed exploration & learning.

From my experience running a team, I think encouraging staff to spend 20% of time on object-level work would be a big cost. Focus and momentum are really important for productivity. In a larger team, it's a challenge even to have just 30-60% of your time free for pushing forward your top priority (lower end for senior staff), so putting 20% of time into a side-project means losing 33-66% of your "actually pushing your top priority forward" time, which would really slow down the org. The benefits would need to be pretty huge.
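The 33-66% figure follows from a simple ratio. A minimal sketch, assuming (as the comment seems to) that the 20% side-project time comes entirely out of the time otherwise free for pushing the top priority, and writing $f$ (a label introduced here, not in the original) for that free fraction of total time:

$$
\text{share of top-priority time lost} = \frac{0.2}{f}, \qquad \frac{0.2}{0.6} = \frac{1}{3} \approx 33\%, \qquad \frac{0.2}{0.3} = \frac{2}{3} \approx 66.7\%
$$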

I'd be more excited about:

In my experience hiring, it's great when someone (e.g. an advisor, researcher) has experience of one of the object level areas, but it's also great when someone has experience getting people interested in EA, or in community building skills like marketing, giving talks, writing, operations etc. It's not obvious to me it would be better to prioritise hiring people with object-level experience a lot more vs. these other types of experience.

Salient points of agreement:

OTOH my gut impression (may well be wrong) is that if 80k doubled its knowledge of object-level priorities (without taking up any time to do so) that would probably increase its impact by something like 30%. So from this perspective spending just 3-5% of time on keeping up with stuff feels like it's maybe a bit of an under-investment (although maybe that's correct if you're doing it for a few years and then spending time in a position which gives you space to go deeper).

One nuance: activity which looks like "let people know there's this community and its basic principles, in order that the people who would be a natural fit get to hear about it" feels to me like it belongs in the education rather than the community-building bucket. Because if you're aiming for intermediate variables like broad understanding rather than a particular shape of a community, then it's less important to have nuanced takes on what the community should look like. So for that type of work I'm less keen to defend anything like 20% (although I'm still often into people who are going to do that spending a bunch of time earlier in their careers going deep on some of the object-level).

That's useful.

1) Just to clarify, I don't think 80k staff should only spend 3-5% of time keeping up on object level things in total. That's just the allocation to meetings and conferences.

In practice, many staff have to learn about object level stuff as part of their job (e.g. if writing a problem profile, interviewing a podcast guest, figuring out what to do one-on-one) – I'm pro learning that's integrated into your mainline job.

I also think people could spend some of their ten percent time learning about object level stuff and that would be good.

So a bunch probably end up at 20%+, though usually the majority of the knowledge is accumulated indirectly.

2) A +30% gain was actually less than I might have expected you to say. Spending, say, 10% of time to get a 30% gain sounds like only a so-so use of attention to me. My personal take would be that 80k managers should focus on things with either bigger bottom-line gains (e.g. how to triple their programme as quickly as possible) or higher ROIs than that.

I thought the worry might be that we'd miss out on tail people, and so end up with, say, 90% less impact in the long term, or something like that.

3) Hmm seems like most of what 80k and I have done is actually education rather than community-building on this breakdown.

Re. 2), I think the relevant figure will vary by activity. 30% is a not-super-well-considered figure chosen for 80k, and I think I was skewing conservative ... really I'm something like "more than +20% per doubling, less than +100%". Losing 90% of the impact would be more imaginable if we couldn't just point outliery people to different intros, and would be a stretch even then.

Thanks, really appreciated this (strong upvoted for the granularity of data).

To be very explicit: I mostly trust your judgement about these tradeoffs for yourself. I do think you probably get a good amount from social osmosis (such that if I knew you didn't talk socially a bunch to people doing direct work I'd be more worried that the 5-10% figure was too low); I almost want to include some conversion factor from social time to deliberate time.

If you were going to get worthwhile benefits from more investment in understanding object-level things, I think the ways this would seem most plausible to me are:

Overall I'm not sure if I should be altering my "20%" claim to add more nuance about degree of seniority (more senior means more investment is important) and career stage (earlier means more investment is good). I think that something like that is probably more correct but "20%" still feels like a good gesture as a default.

(I also think that you just have access to particularly good direct work people, which means that you probably get some of the benefits of sync about what they need in more time-efficient ways than may be available to many people, so I'm a little suspicious of trying to hold up the Claire Zabel model as one that will generalize broadly.)

(Weakly-held view)

It seems like my job for the past 4 years (building software for the EA community, for most of that, the EA Forum) has been pretty much a "Community Building" track job.

You might argue that I should view myself as primarily working on a specific cause area, and that I should have spent 20% of my time working on it (for me it would be AI alignment), but that would be pretty non-obvious. And in any case, I would still look radically different from someone primarily focused on doing alignment research directly.

You might call this a quibble and say you could fit this into a "one-camp" model, but I think there's a pretty big problem for the model if the response is "there's one camp, just with two radically different camps within the one camp".

I don't really disagree with the directional push of this post: that a substantial fraction of community builders should dramatically increase the amount they try to learn about the relatively in-the-weeds details of object-level causes.

Clearly as a purely factual matter, there is a community-building track, albeit one that doesn't currently have a ton of roles - the title is an overstatement.

My point is that it's not separate. People doing community building can (and should) talk a bunch to people focused on direct work. And we should see some of people moving backwards and forwards between community building and more direct work.

I think if we take a snapshot in 2022 it looks a bit more like there's a community-building track. So arguably my title is aspirational. But I think the presence or absence of a "track" (that people make career decisions based on) is a fact spanning years/decades, and my best guess is that (for the kind of reasons articulated here) we'll see more integration of these areas, and the title will be revealed as true with time.

Overall: playing a bit fast and loose, blurring aspirations with current reporting. But I think it's more misleading to say "there is a separate community-building track" than to say there isn't. (The more epistemically virtuous thing to say would be that it's unclear if there is, and I hope there isn't.)

BTW I agree that the title is flawed, but don't have something I feel comparably good about overall. But if you have a suggestion I like I'll change it.

(Maybe I should just change "track" in the title to "camp"? Feels borderline to me.)

Could change "there is no" to "against the" or "let's not have a"?

Thanks, changed to "let's not have a ..."

I guess you want to say that most community building needs to be comprehensively informed by knowledge of direct work, not that each person who works in (what can reasonably be called) community building needs to have that knowledge.

Maybe something like "Most community building should be shot through by direct work" - or something more distantly related to that.

Though maybe you feel that still presents direct work and community-building as more separate than ideal. I might not fully buy the one camp model.

I do think that still makes them sound more separate than ideal. While I think many people should be specializing towards community building or direct work, I think that specializing in community building should typically involve a good amount of time paying close attention to direct work, and that specializing in direct work should in many cases involve a good amount of time looking to leverage that knowledge to inform community building.

To gesture at (part of) this intuition I think that some of the best content we have for community building includes The Precipice, HPMoR, and Cold Takes. In all cases these were written by people who went deep on object-level. I don't think this is a coincidence, and while I don't think all community-building content needs that level of expertise to produce well, I think that if we were trying to just use material written by specialized community builders (as one might imagine would be more efficient, since presumably they'll know best how to reach the relevant audiences, etc.) we'd be in much worse shape.

Yeah, I get that. I guess it's not exactly inconsistent with the "shot through" formulation, but it's probably a matter of taste how to frame it so that the emphasis comes out right.

After reflecting further and talking to people I changed "track" in the title to "camp"; I think this more accurately conveys the point I'm making.

Yes. I see some parallels between this discussion and the discussion about the importance of researchers being teachers and vice versa in academia. I see the logic of that a bit but also think that in academia, it's often applied dogmatically and in a way that underrates the benefits of specialisation. Thus while I agree that it can be good to combine community-building and object-level work, I think that that heuristic needs to be applied with some care and on a case-by-case basis.

Fwiw, my role is similar to yours, and granted, LessWrong has a much stronger focus on Alignment, but I currently feel that a very good candidate for the #1 reason I will fail to steer LW to massive impact is that I'm not and haven't been an Alignment researcher (and perhaps Oli hasn't been either, but he's a lot more engaged with the field than I am).

My first-pass response is that this is mostly covered by:

(Perhaps I should have called out building infrastructure as an important type of this.)

Now, I do think it's important that the infrastructure is pointed towards the things we need for the eventual communities of people doing direct work. This could come about via you spending enough time obsessing over the details of what's needed for that (I don't actually have enough resolution on whether you're doing enough obsessing over details for this, but plausibly you are), or via you taking a bunch of the direction (i.e. what software is actually needed) from people who are more engaged with that.

So I'm quite happy with there being specialized roles within the one camp. I don't think there should be two radically different camps within the one camp. (Where the defining feature of "two camps" is that people overwhelmingly spend time talking to people in their camp not the other camp.)

My hot-take for the EA Forum team (and for most of CEA in-general) is that it would probably increase its impact on the world a bunch if people on the team participated more in object-level discussions and tried to combine their models of community-building more with their models of direct-work.

I've tried pretty hard to stay engaged with the AI Alignment literature and the broader strategic landscape during my work on LessWrong, and I think that turned out to be really important for how I thought about LW strategy.

I indeed think it isn't really possible for the EA Forum team to not be making calls about what kind of community-building work needs to happen. I don't think anyone else at CEA really has the context to think about the impact of various features on the EA Forum, and the team is inevitably going to have to make a lot of decisions that will have a big influence on the community, in a way that makes it hard to defer.

I would find it helpful to have more precision about what it means to "participate more in object level discussion".

For example: did you think that I/the forum was more impactful after I spent a week doing ELK? If the answer is "no," is that because I need to be at the level of winning an ELK prize to see returns in my community building work? Or is it about the amount of time spent rather than my skill level (e.g. I would need to have spent a month rather than a week in order to see a return)?

Definitely in-expectation I would expect the week doing ELK to have had pretty good effects on your community-building, though I don't think the payoff is particularly guaranteed, so my guess would be "Yes".

Things like engaging with ELK, thinking through Eliezer's List O' Doom, and thinking through some of the basics of biorisk all seem quite valuable to me, and my takes on those issues are very deeply entangled with a lot of community-building decisions I make, so I expect similar effects for you.

Thanks! I spend a fair amount of time reading technical papers, including the things you mentioned, mostly because I spend a lot of time on airplanes and this is a vaguely productive thing I can do on an airplane, but honestly this just mostly results in me being better able to make TikToks about obscure theorems.

Maybe my confusion is: when you say "participate in object level discussions" you mean less "be able to find the flaw in the proof of some theorem" and more "be able to state what's holding us back from having more/better theorems"? That seems more compelling to me.

[Speaking for myself not Oliver ...]

I guess that a week doing ELK would help on this -- probably not a big boost, but the type of thing that adds up over a few years.

I expect that for this purpose you'd get more out of spending half a week doing ELK and half a week talking to people about models of whether/why ELK helps anything, what makes for good progress on ELK, what makes for someone who's likely to do decently well at ELK.

(Or a week on each, but wanting to comment about allocation of a certain amount of time rather than increasing the total.)

Cool, yeah that split makes sense to me. I had originally assumed that "talking to people about models of whether ELK helps anything" would fall into a "community building track," but upon rereading your post more closely I don't think that was the intended interpretation.[1]

FWIW the "only one track" model doesn't perfectly map to my intuition here. E.g. the founders of DoorDash spent time using their own app as delivery drivers, and that experience was probably quite useful for them, but I still think it's fair to describe them as being on the "create a delivery app" track rather than the "be a delivery driver" track.

I read you as making an analogous suggestion for EA community builders, and I would describe that as being "super customer focused" or something, rather than having only one "track".

You say "obsessing over the details of what's needed in direct work," and talking to experts definitely seems like an activity that falls in that category.

>It's fine to have professional facilitators who are helping the community-building work without detailed takes on object-level priorities, but they shouldn't be the ones making the calls about what kind of community-building work needs to happen

I think this could be worth calling out more directly and emphatically. I think a large fraction (idk, between 25 and 70%) of people who do community-building work aren't trying to make calls about what kinds of community-building work need to happen.

I guess I think there's a continuum of how much people are making those calls. There are often a bunch of micro-level decisions that people are making which are ideally informed by models of what it's aiming for. If someone is specializing in vegan catering for EA events then I think it's great if they don't have models of what it's all in service of, because it's pretty easy for the relevant information to be passed to them anyway. But I think most (maybe >90%) roles that people centrally think of as community building have significant elements of making these choices.

I guess I'm now thinking my claim should be more like "the fraction should vary with how high-level the choices you're making are" and provide some examples of reasonable points along that spectrum?

Noticing that the (25%, 70%) figure is sufficiently different from what I would have said that we must be understanding some of the terms differently.

My clause there is intended to include cases like: software engineers (but not the people choosing what features to implement); caterers; lawyers ... basically if a professional could do a great job as a service without being value aligned, then I don't think it's making calls about what kind of community building needs to happen.

I don't mean to include the people choosing features to implement on the forum (after someone else has decided that we should invest in the forum), people choosing what marketing campaigns to run (after someone else has decided that we should run marketing campaigns), people deciding how to run an intro fellowship week to week (after someone else told them to), etc. I do think in this category maybe I'd be happy dipping under 20%, but wouldn't be very happy dipping under 10%. (If it's low figures like this it's less likely that they'll be literally trying to do direct work with that time vs just trying to keep up with its priorities.)

Do you think we have a substantive disagreement?

I appreciate this post's high info to length ratio, and I'd be excited about more EAs taking actions along the lines of your corollaries at the end.

On another note, I find it interesting that for the amount of time community builders should be spending skilling up for direct work / doing direct work / skilling up in general:

I'm saying "at least ~20%"; I'm certainly happy with some people with much higher ratios.

My impression is that Emma's post is mostly talking about student organizers. I think ">50%" seems like a very reasonable default there. I think it would be a bit too costly to apply to later career professionals (though it isn't totally crazy especially for "community building leadership" roles).

Largely agree with Owen's post here. I would just add one point.

There's a danger of developing a cadre of specialist or semi-professional 'community-builders' in EA, which is that they could easily become a recruitment bottleneck, a selection filter, a radicalized activist subculture, and a locus of ideological control and misguided norm-enforcement -- just as Human Resources (HR) departments have often become in corporations and academia.

For example, in many companies that become politically radicalized by younger workers, the HR department tends to be in the vanguard of radicalization -- partly because already-radicalized workers know that HR is where the power is (to recruit, select, promote, and discipline other workers, and to enforce certain ideological norms), and partly because highly rational systematizers don't tend to be very interested in joining the people-centered HR world.

My nightmare scenario is that a smallish clique within EA could take over the 'community-building function', and nudge the community-building in certain politicized directions that are contrary to the nonpartisan principles of EA. This is unlikely to go in the direction of making EA more Republican, conservative, or religious than it is -- it seems much more likely that semi-professional community-builders in EA could push EA recruitment, selection, and outreach in a politically Democratic, liberal, progressive, or woke direction. This is not idle speculation. We have seen this happen again and again in corporations, universities, non-profits, foundations, churches, community groups, and other organizations.

This is captured in Robert Conquest's 'Second Law of Politics': "Any organization not explicitly right-wing sooner or later becomes left-wing". Not that EA should be explicitly right-wing. Rather, it should be staunchly, fiercely EA -- which is to say, non-partisan, and highly skeptical about allowing any organizational subcultures to develop that would be vulnerable to a woke/progressive takeover.

Of course, it would be great for more EAs to cultivate skills in public outreach, recruitment, marketing, communication, media, conference hosting, podcasting, youtubing, networking, and specific forms of community building. I'm just giving a personal caveat that we should be quite wary of creating an HR-style cadre of specialist 'community-builders' -- because that would be very delectable and low-hanging fruit for political activists to try to take over.

I think 20% might be a decent steady state, but at the start of their involvement I'd like to see new aspiring community builders do something like six months of intensive object-level work/research.