Key takeaways:

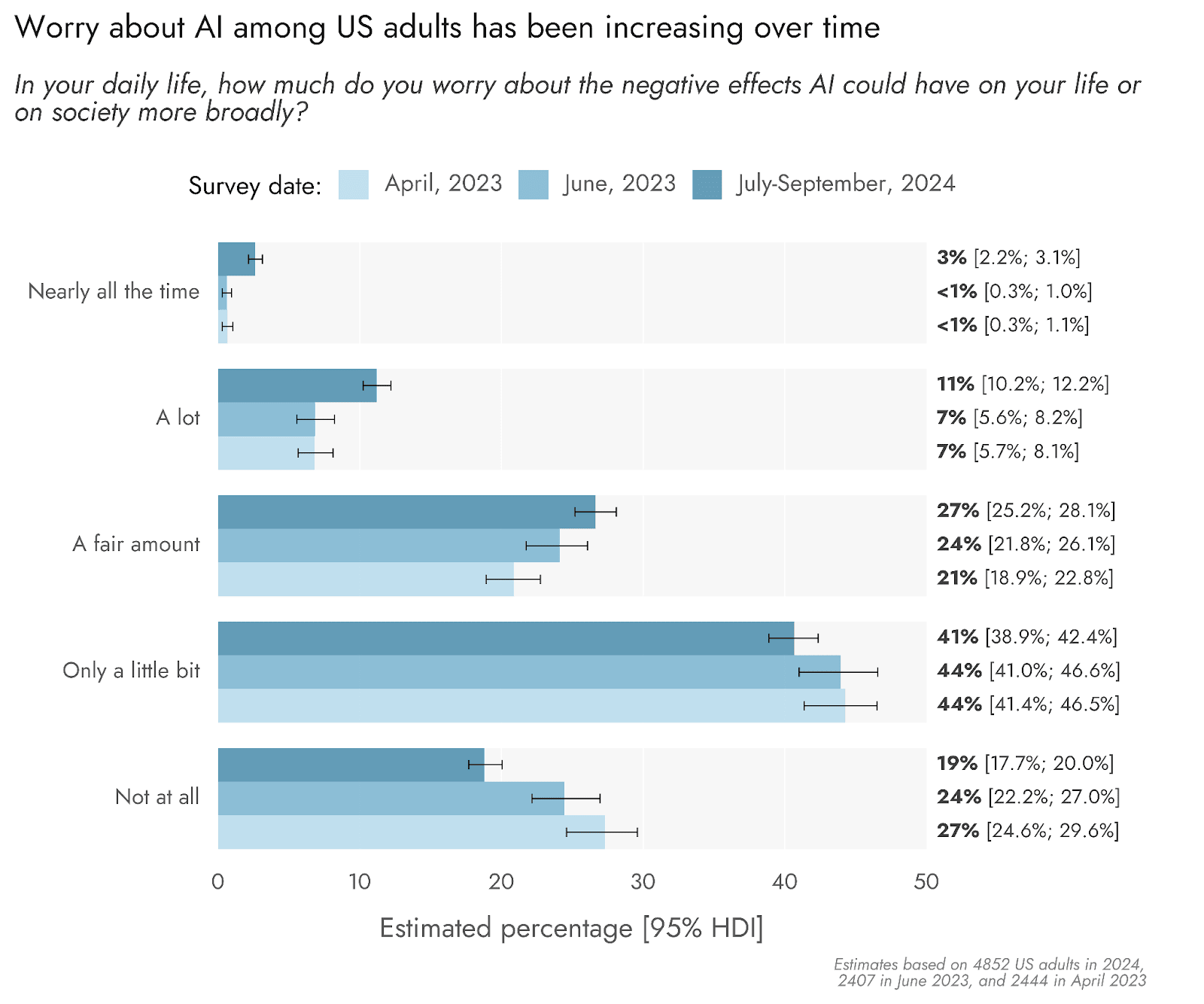

- Worry about the negative effects of AI has increased since April and June 2023.

- Most US adults still report that, in their daily lives, they worry about AI "Not at all" (19%) or "Only a little bit" (41%), rather than "A fair amount" (27%), "A lot" (11%), or "Nearly all the time" (3%).

- US adults tended to agree more with outlooks focusing on serious danger from AI, or on ethical risks associated with it, than with the idea that we should be accelerating AI.

The results presented here are based upon the responses of 4,890 US adults[1], who completed the Pulse survey between July and September 2024. The full report can be accessed on our website or as a PDF, which includes more detailed methodological and background information.

Attitudes towards Artificial Intelligence

AI worry

Respondents were asked to indicate the extent to which they worried about the impact AI might have on their lives and society more broadly. Because we asked this same question previously - in April and in June of 2023 - we were able to assess how concerns about AI have changed over time. Relative to 2023, we estimate that fewer members of the US public do not worry at all, and that larger percentages worry a lot or nearly all the time.

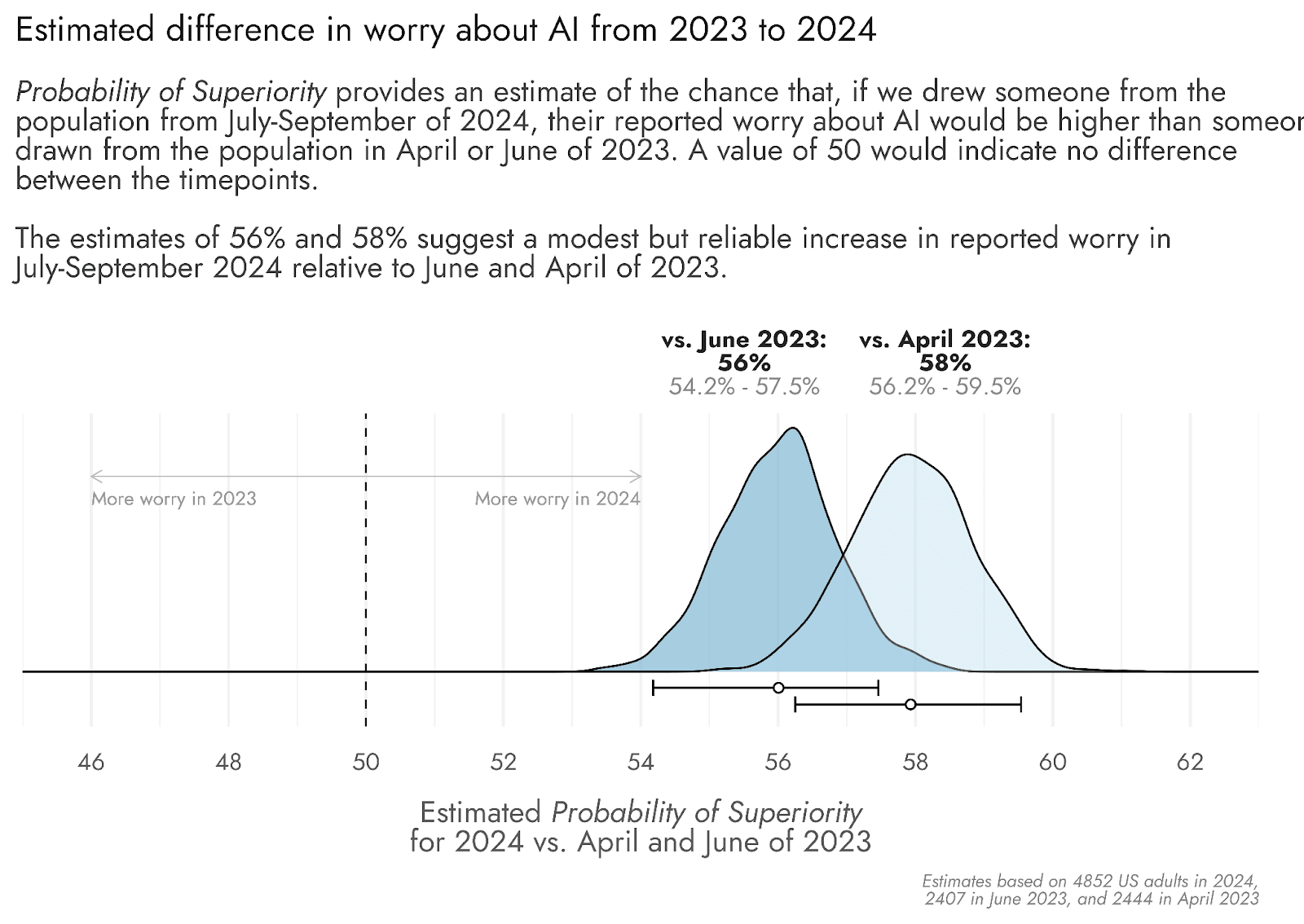

This impression from the estimated percentages of people picking each option is supported by the probability of superiority over time, comparing our current (July-September, 2024) responses with those of April and June 2023. Estimates of 56% and 58% relative to June and April respectively suggest a modest but reliable increase in reported worry over time. The increasing awareness and use of AI tools such as ChatGPT, as well as media coverage regarding possible downsides and risks, may have made concerns over the negative effects of AI more salient.
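For readers unfamiliar with the measure, probability of superiority can be read as the chance that a randomly chosen response from one group is higher than a randomly chosen response from another group, with ties counted as half. The sketch below is a minimal, unweighted illustration using made-up responses on a 1-5 worry scale. The function name and the data are hypothetical, and the estimates reported in this post are not produced by this code; see the full report for the actual methodology.

```python
from itertools import product


def probability_of_superiority(a, b):
    """Return P(a > b) + 0.5 * P(a == b) over all cross-group pairs."""
    wins = 0
    ties = 0
    for x, y in product(a, b):
        if x > y:
            wins += 1
        elif x == y:
            ties += 1
    return (wins + 0.5 * ties) / (len(a) * len(b))


# Hypothetical toy responses (1 = Not at all ... 5 = Nearly all the time).
wave_2023 = [1, 1, 2, 2, 3]
wave_2024 = [1, 2, 2, 3, 4]

ps = probability_of_superiority(wave_2024, wave_2023)
print(f"{ps:.2f}")  # 0.66
```

A value of 0.5 would indicate no difference between the two waves, so values such as 56% and 58% correspond to a modest shift towards more worry in the later wave.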

Stances on costs and benefits of AI

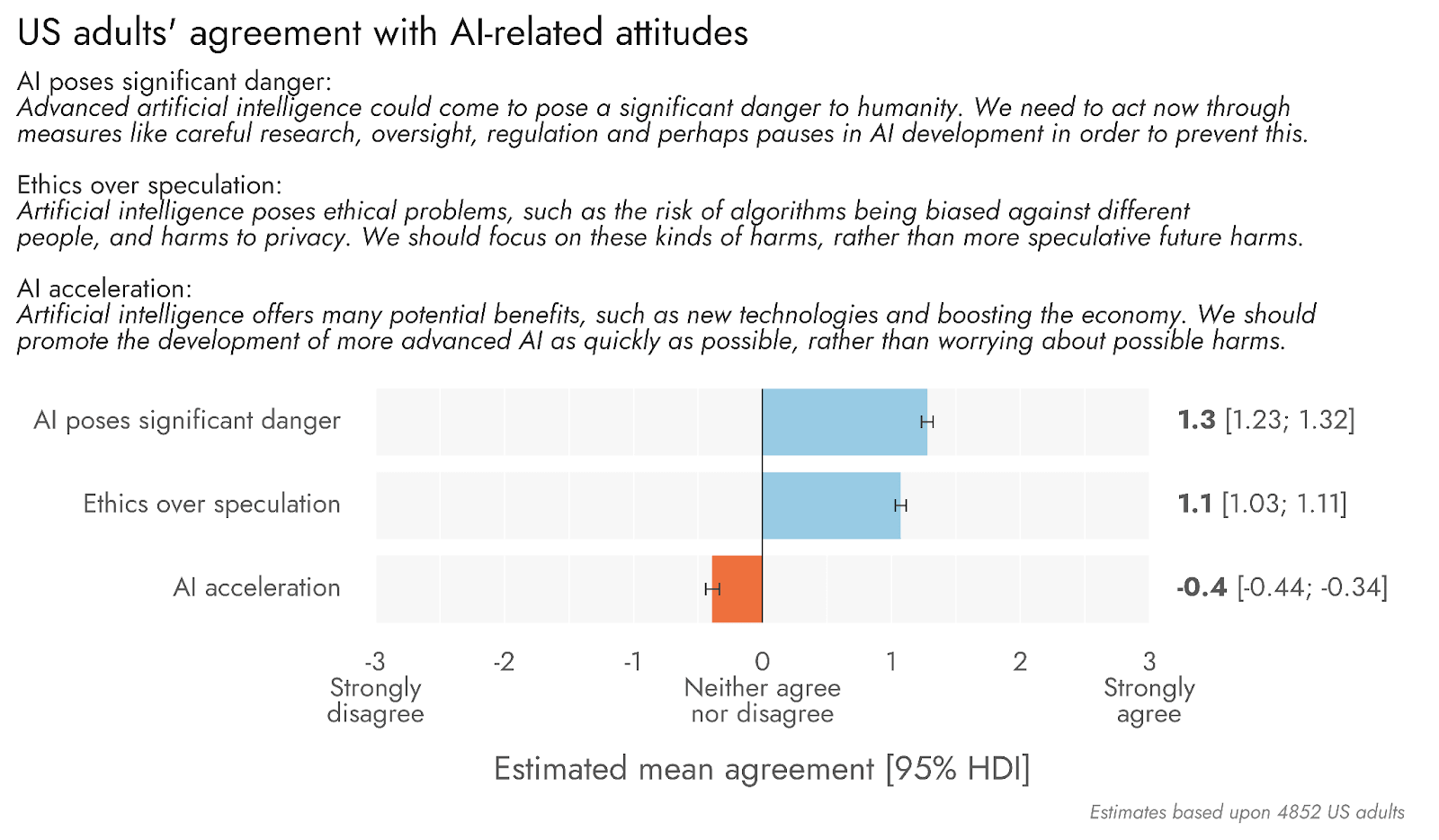

Besides worry about AI, respondents were asked the extent to which they agreed or disagreed with several AI-related stances. These stances reflect three dominant perspectives regarding possible costs and benefits of AI: the AI risk perspective (which focuses on far-reaching catastrophic or civilization-level risks such as an AI takeover), AI ethics (which focuses more on possible harms from factors such as biases in algorithms), and AI acceleration (the idea that we should be ramping up AI capabilities as fast as possible to derive their benefits). The exact wording for each perspective is presented with the figure below.

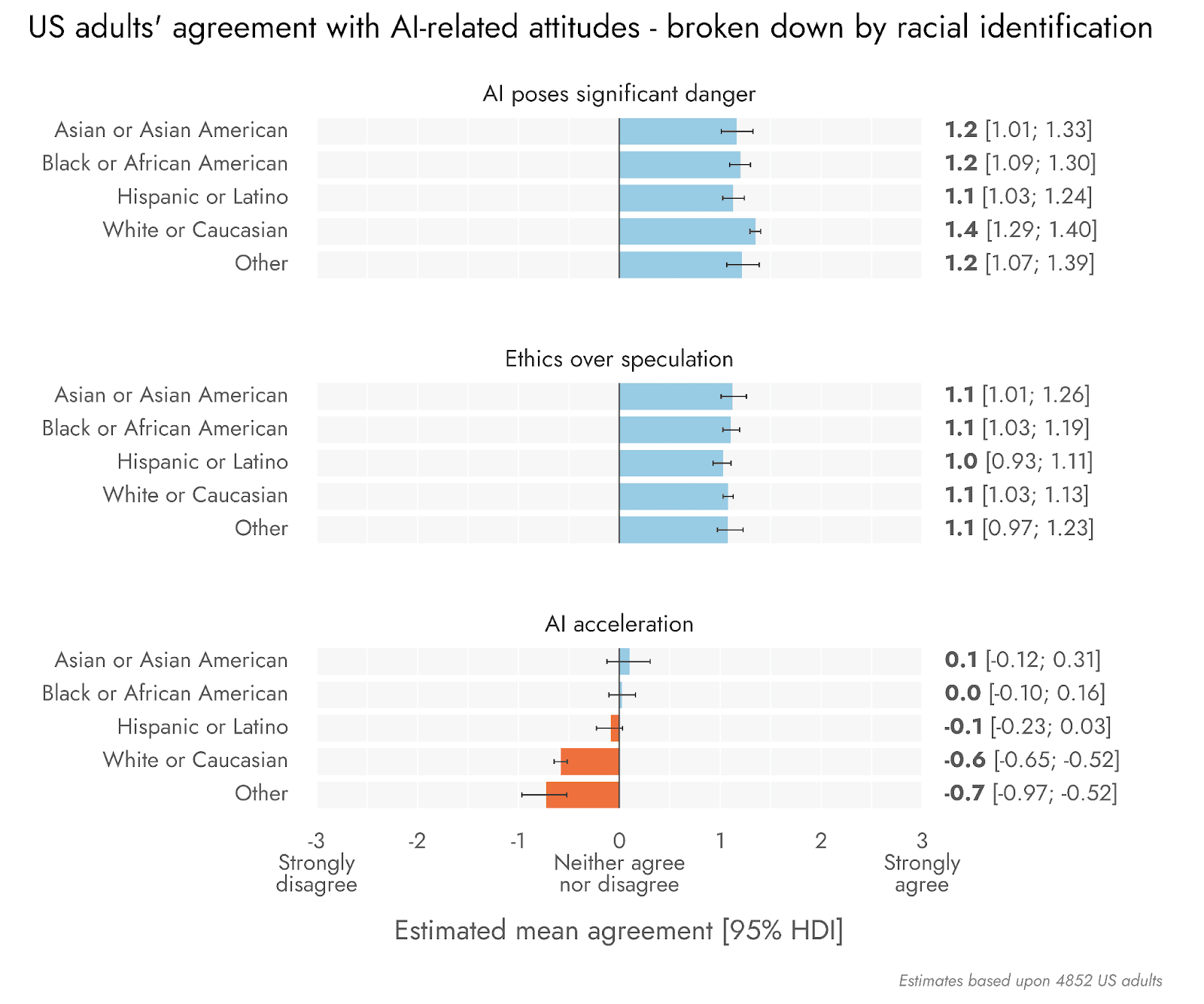

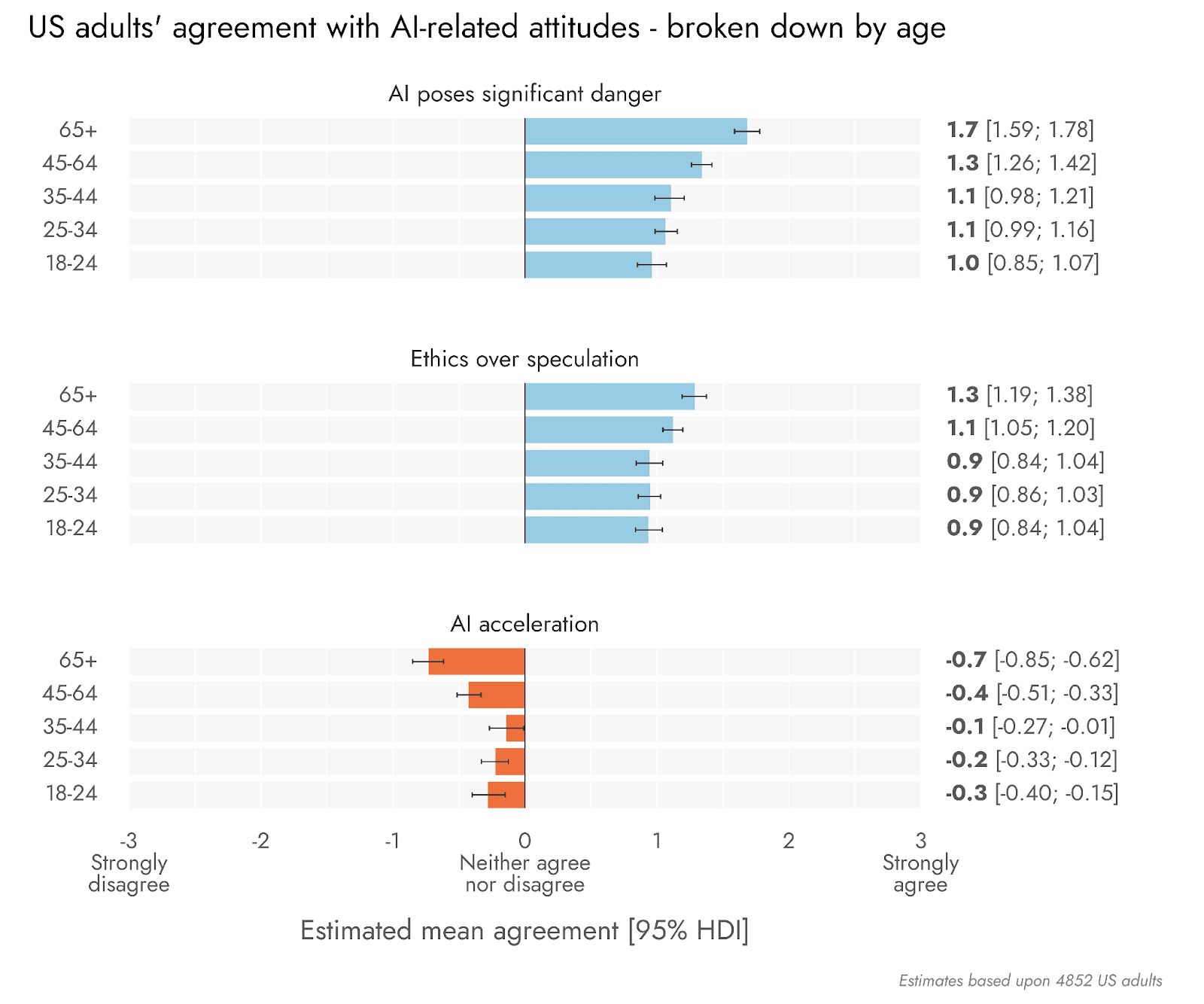

We estimate that the US public would agree - and among the stances, agree most strongly - with the idea that advanced AI could come to pose a significant danger to humanity, necessitating research, regulation, or pauses in AI development (the AI risk stance). However, the public also agreed that ethical risks - such as biased algorithms and privacy harms - should be a focus above more speculative harms (the AI ethics stance). In contrast, we estimate that the US public tends to disagree with accelerating the development of AI as quickly as possible to secure its benefits, as opposed to worrying over harms (the AI acceleration stance).

Although the content of each of these statements might suggest that all three are in tension with one another, we observed a significant positive correlation between AI risk and AI ethics (Spearman’s rank correlation = .49), and significant negative correlations between each of these items and AI acceleration (-.18 with AI ethics, and -.34 with AI risk). Hence, although the AI ethics statement emphasized the importance of focusing on ethical concerns over speculative harms (which might include the kinds of threats referred to in the AI risk stance), agreement with AI ethics did not preclude, and was even positively associated with, endorsement of AI risk. Agreement with AI risk and AI ethics might therefore reflect a general appreciation of potential harms from AI development.
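As a rough illustration of the statistic used above, Spearman's rank correlation can be computed by converting each set of ratings to ranks (giving tied ratings their average rank) and then taking the Pearson correlation of those ranks. The sketch below assumes unweighted data; the helper names and the toy ratings are hypothetical and are not taken from the survey.

```python
def average_ranks(values):
    """Rank values from 1..n, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical agreement ratings from five respondents.
risk_ratings = [5, 4, 4, 2, 1]
ethics_ratings = [4, 5, 3, 2, 2]
rho = spearman(risk_ratings, ethics_ratings)
```

Positive values mean respondents who agree more with one statement also tend to agree more with the other; negative values mean the opposite.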

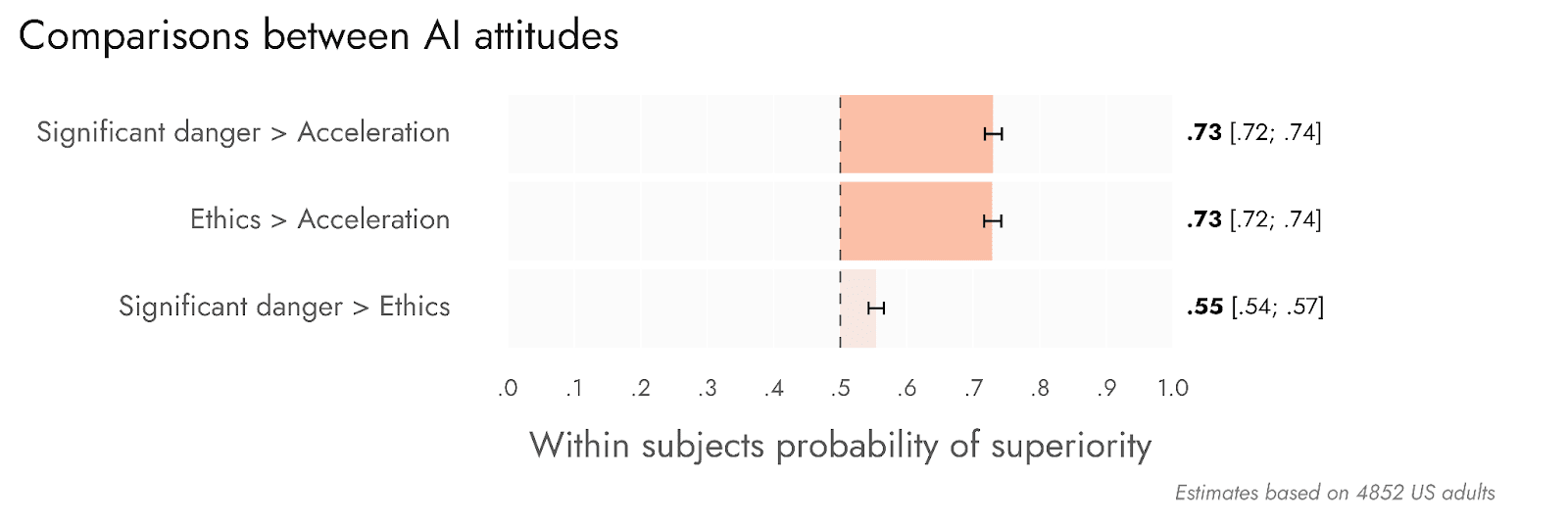

Based on within-subjects Probability of Superiority comparisons, we would expect approximately 73% of the US public to express greater agreement with AI risk and AI ethics than with AI acceleration.
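A within-subjects comparison differs from a between-groups one in that each respondent's rating of one statement is compared with their own rating of another. A minimal sketch, assuming unweighted paired ratings and counting ties as half, is shown below; the function name and data are hypothetical, and the reported estimate comes from the survey analysis rather than from this code.

```python
def within_subjects_ps(a, b):
    """Share of respondents rating a above b, counting ties as half."""
    if len(a) != len(b):
        raise ValueError("paired ratings must have the same length")
    score = 0.0
    for x, y in zip(a, b):
        if x > y:
            score += 1.0
        elif x == y:
            score += 0.5
    return score / len(a)


# Hypothetical paired ratings: each position is one respondent.
ai_risk = [5, 4, 4, 3, 2]
ai_acceleration = [2, 4, 1, 3, 5]

ps_paired = within_subjects_ps(ai_risk, ai_acceleration)
print(ps_paired)  # 0.6
```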

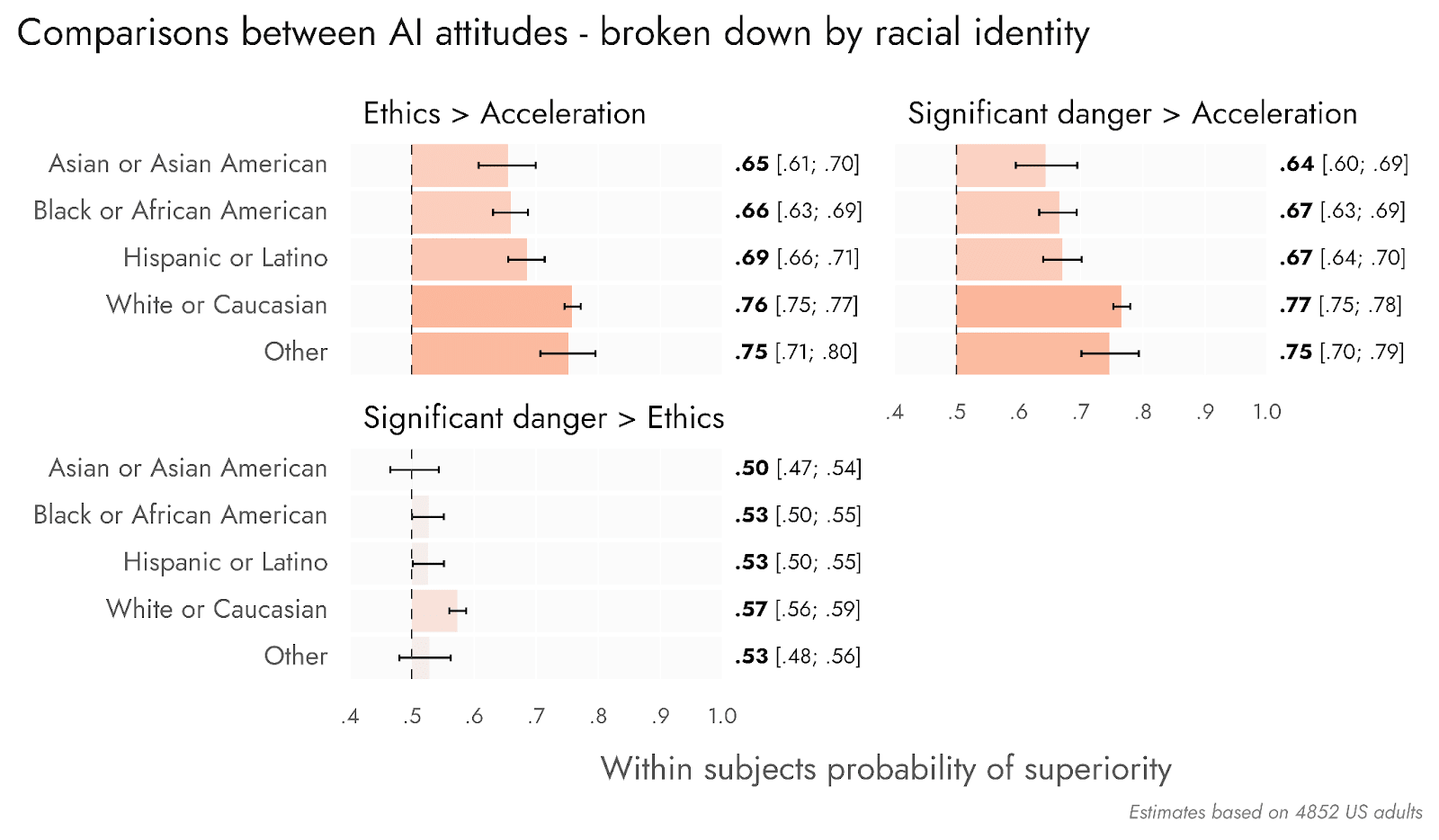

It might be suggested that communities plausibly at greater risk of facing algorithmic bias (for example, racial or ethnic minorities) would show greater endorsement of AI ethics. To the contrary, ratings of the importance of AI ethics were quite constant across different racial identities. However, the mean ratings and estimates of Probability of Superiority in each racial subgroup suggest that only White or Caucasian adults reliably rated AI risk more highly than AI ethics. Respondents of all racial identities were on average much more in agreement with each of these risk-oriented statements than with AI acceleration, although White or Caucasian respondents were more negative about AI acceleration than were Asian or Asian American, Black or African American, and Hispanic or Latino adults.

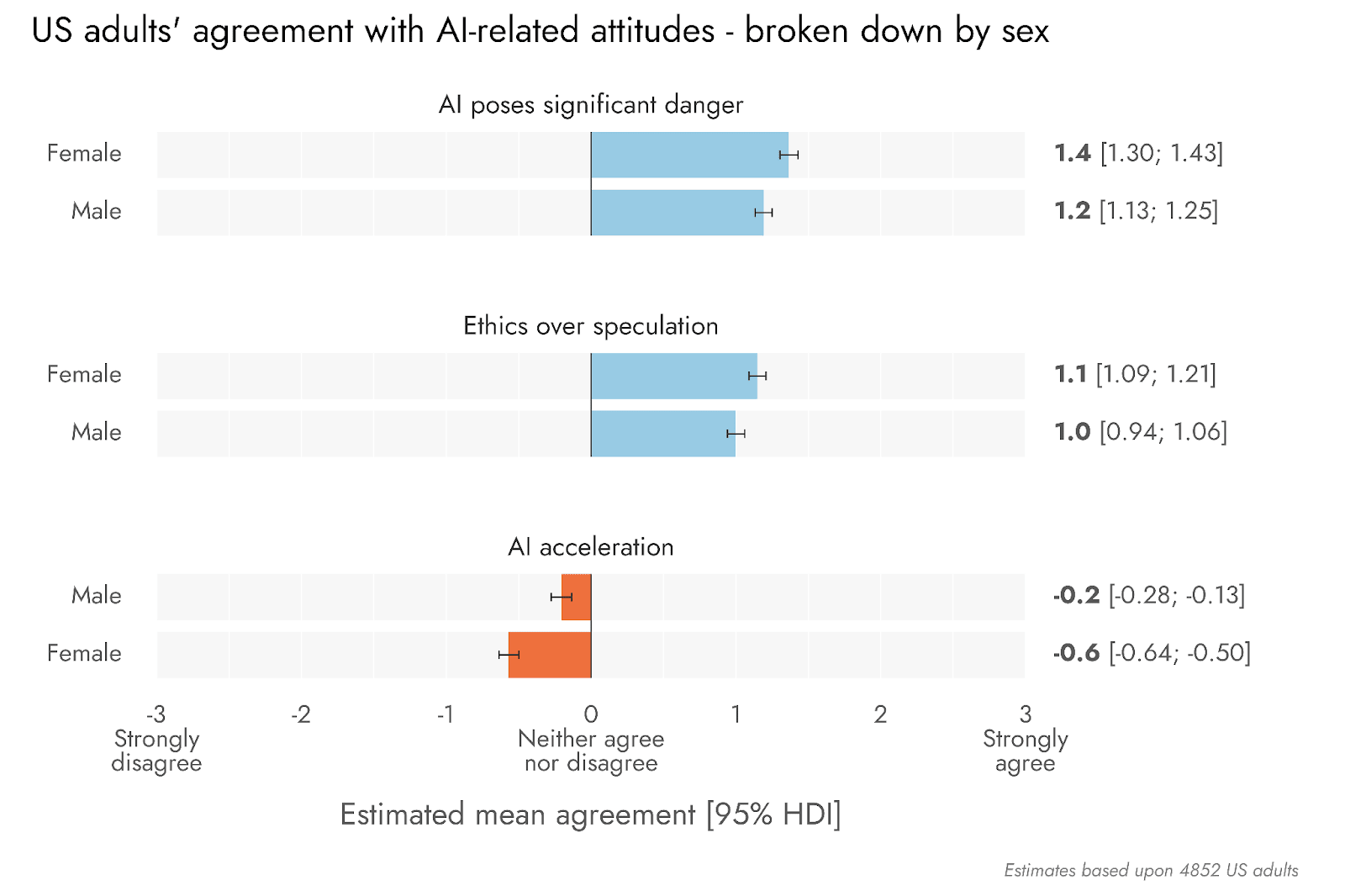

Female adults had more negative attitudes towards AI acceleration, and more positive attitudes towards each of the risk-oriented statements, than did males.

Rethink Priorities is a think-and-do tank dedicated to informing decisions made by high-impact organizations and funders across various cause areas. We invite you to explore our research database and stay updated on new work by subscribing to our newsletter.

[1] The exact sample size for each question may vary due to missing data or branching of the sample to see alternative questions.

Good to see Pulse is back!

Thanks David!

I'd be curious to know what are the reasons behind the growing fear of AI. Is it because of people like Elon Musk or Stephen Hawking expressing concerns about it? Is it because they've used it before and are scared by how "human" it appears? Influence from Hollywood?

Thanks for following up; it would be quite interesting to know.

We didn't directly examine why worry is increasing across these surveys. I agree that would be an interesting thing to examine in additional work.

That said, when we asked people why they agreed or disagreed with the CAIS statement, people who agreed mentioned a variety of factors, including "tech experts" expressing concerns, the fact that they had seen Terminator etc., and directly observing characteristics of AI (e.g. that it seemed to be learning faster than we would be able to handle). In the CAIS statement writeup, we only examined the reasons why people disagreed (the responses from people who agreed tended to be more homogeneous, because many people were just saying ~ it's a serious threat), but we could potentially do further analysis of why they agreed. We'd also be interested to explore this in future work.

It's also perhaps worth noting that we originally wanted to run Pulse monthly, which would have allowed us to track changes in response to specific events (e.g. the releases of new LLM versions). Now that we're running it quarterly (due to changes in the funding situation), that will be less feasible.