Main Findings

It’s widely understood that the Longtermist ecosystem is quite racially homogeneous. This analysis seeks to quantify and elaborate on that observation so that various stakeholders can make more informed decisions.

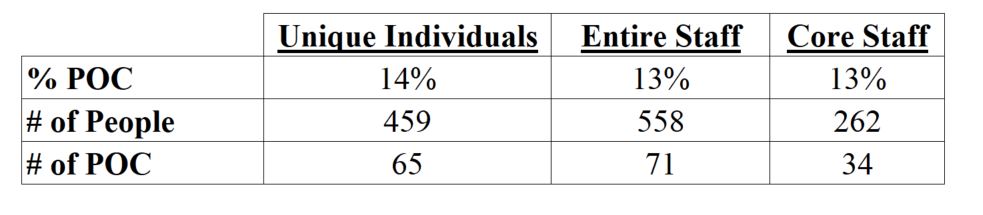

My review of 459 unique individuals listed on the team pages of 21 Longtermist organizations produced the following findings:

- People of Color (POC) make up 13-14% of staff at the 21 Longtermist organizations in my sample. This is less diverse, and in many cases much less diverse, than the communities these organizations tend to draw from (such as top US Computer Science programs, where POC often make up a majority of students).

- At about half the organizations in my sample (10 out of 21), the core staff is entirely white. At every organization except Open Philanthropy’s AI Fellowship program, POC account for <30% of staff.

- Open Philanthropy’s AI Fellowship program was an extreme outlier, with 9 out of 15 fellows (60%) being POC. This fellowship accounted for just 6% of the core staff in my sample, but 26% of the POC. One of the program’s explicit goals is to improve diversity in the ecosystem, suggesting this sort of intentional effort can successfully bring talented POC into the Longtermist community.

- Organizations/programs that are based at universities are much more racially diverse than other types of Longtermist organizations (typically independent charities). At “University” organizations, POC make up 20% of core staff positions, compared to only 3% at “Non-University” organizations.

- POC appear to be particularly underrepresented in the most influential roles at Longtermist organizations. Only 4% of leadership positions are held by POC, whereas POC account for 19-25% of support staff.

- Collectively, these findings suggest that the Longtermist ecosystem is significantly underrepresenting and underutilizing POC. At the same time, Open Philanthropy’s AI Fellowship program suggests improvements are possible if prioritized. Potential strategies for improving diversity are discussed below.

Methodology

Sample

To conduct this analysis, I looked at the “team” pages on the websites of 21 Longtermist organizations/programs, which encompassed 459 unique individuals filling 558 positions (some people hold roles at multiple organizations). This data was collected in late February 2020.

I selected the organizations by compiling a list of prominent groups working in the ecosystem, supplementing it with grantees of the Long-Term Future Fund, and making some adjustments based on feedback from people working in the Longtermist ecosystem (none of whom suggested adding other organizations). This process required some judgment calls. For example, I included CFAR because, while it is not explicitly Longtermist, it has received grants from the Long-Term Future Fund and has trained many people in the Longtermist space. Similarly, I included the Long-Term Future Fund itself because, despite being a volunteer-run effort rather than an organization, its role as a funder makes it particularly influential.

In almost every case, I included all team members listed. The only exception was Open Philanthropy, where I included the leadership and people whose job titles/descriptions explicitly suggest they work full-time on Longtermist causes. I did this because Open Philanthropy plays a critical part in the Longtermist ecosystem, but has a scope that exceeds Longtermism. This methodology excluded some Open Philanthropy employees, including some POC, who work part-time on Longtermist causes. (CEA and 80,000 Hours also do work besides Longtermism, but I included all of their employees as it was not clear which of them do Longtermist work.)

Racial Classification

For each person in my sample, I tried to determine whether they are white or a person of color (POC) based on the pictures displayed on the relevant team page (in some cases, I performed a quick Google search to try to find additional information). I included people I believed to be multiracial in the POC category. This is admittedly an imperfect approach, and I expect I misclassified some people. I sincerely apologize to anyone I misclassified. I believe it is unlikely that this imprecision materially changes the findings of this analysis, as there generally didn’t seem to be much ambiguity.

Note: While there are of course many dimensions of diversity, for purposes of brevity my use of that term throughout this analysis will refer to racial diversity unless otherwise stated.

Role Classification

Different organizations have different criteria for listing people on their team pages. Some organizations use loose criteria, and include interns, volunteers, and even past employees.

Using job descriptions listed on the team pages, I distinguished between different types of team members in two ways. First, I tried to determine whether or not each person was part of an organization’s “core staff”. I excluded from the core staff designation roles such as board members, freelancers, interns, and research affiliates. If someone was listed on a team page in any capacity, I included them in the “all staff” category.

I also tried to categorize the type of work each person did, distinguishing between “Leadership” roles (e.g. CEO, COO, board members), “Professional Staff” roles (e.g. analysts and researchers), “Support Staff” roles (e.g. operations and administration), and “Associate” roles (e.g. alumni and students).

As an example of how these classifications play out, a board member would be classified as a leadership role, but would not be considered a member of an organization’s “core staff”.

Shortcomings

My methodology has several shortcomings, notably its reliance on subjective judgments about people’s race, about who constitutes an organization’s “core team”, and about which people hold which types of roles.

To mitigate these shortcomings, I have tried to provide multiple perspectives when possible. For instance, looking only at core team members relies on my assessment of who falls into that category. By contrast, looking at everyone listed on an organization’s team page does not require that assessment, but places equal weight on people who are essential to an organization’s work and those who are somewhat tangential. Each approach has its weaknesses, but looking at both perspectives helps mitigate those weaknesses. In the same vein, I supplement the overall numbers I provide (which effectively weight organizations by their staff sizes) with the average and median figures across organizations (which give organizations equal weight).
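To make the difference between the overall figure and the per-organization average and median concrete, here is a minimal Python sketch. The organization names and counts are hypothetical, invented purely for illustration, and are not drawn from this sample; the point is only that the three summaries can diverge when organizations differ in size.

```python
from statistics import mean, median

# Hypothetical organizations: name -> (POC on staff, total staff).
# These numbers are invented for illustration, not taken from the sample.
orgs = {
    "Org A": (0, 12),
    "Org B": (1, 8),
    "Org C": (6, 24),
    "Org D": (9, 15),
}


def pooled_share(data: dict[str, tuple[int, int]]) -> float:
    """Total POC divided by total staff, so larger orgs carry more weight."""
    poc = sum(p for p, _ in data.values())
    staff = sum(n for _, n in data.values())
    return poc / staff


def per_org_shares(data: dict[str, tuple[int, int]]) -> list[float]:
    """POC share at each organization, giving every org equal weight."""
    return [p / n for p, n in data.values()]


pooled = pooled_share(orgs)
shares = per_org_shares(orgs)
print(f"pooled: {pooled:.1%}, mean: {mean(shares):.1%}, median: {median(shares):.1%}")
```

In this invented example the pooled share comes out higher than the unweighted mean because the larger hypothetical organizations also have higher shares, which is why reporting both kinds of summary gives a fuller picture.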

Other weaknesses are harder to mitigate. My analysis assumes that the team pages it draws on are (reasonably) accurate. It also reflects these team pages as they stood in February 2020, as it was not practical to continuously update my sample. In some cases teams have shifted since then (e.g. I’m aware that 80,000 Hours has added a POC to its core team, and that the Long-Term Future Fund has replaced the sole POC on its management committee).

Staff Demographics

Of the 459 unique individuals in this sample, 65 (14%) are POC. POC make up a slightly lower percentage of total roles (13%) and core staff positions (13%).

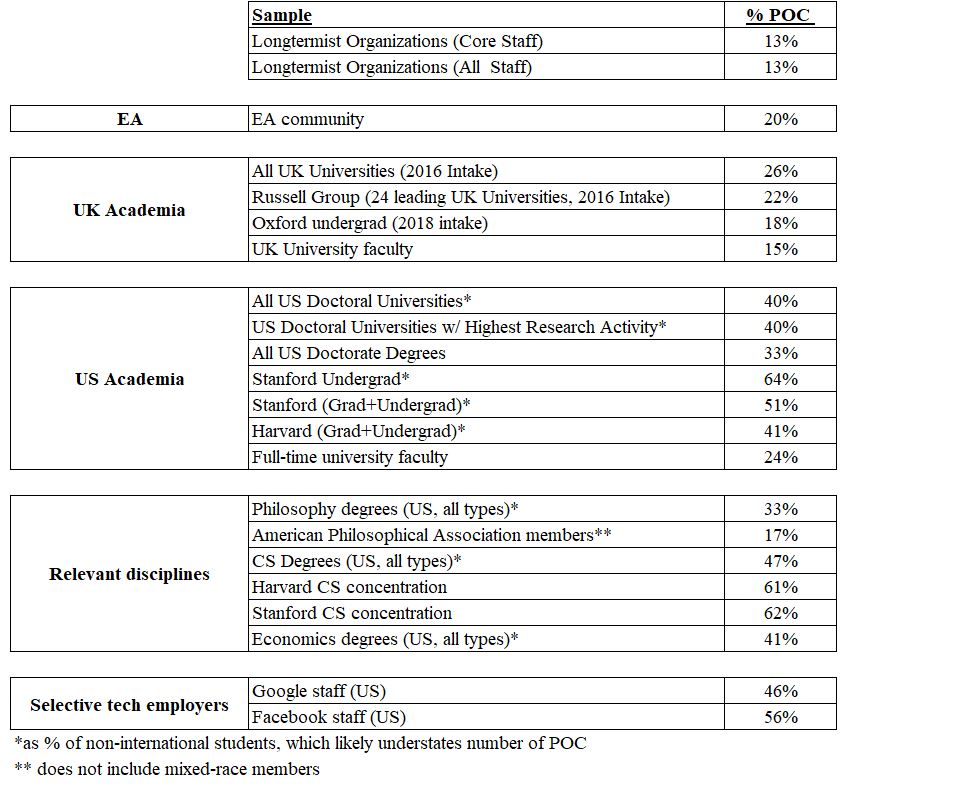

It’s not obvious which reference point(s) should be used to contextualize these figures. The following table lists a variety of possibilities.

Sources: EA Community, UK students inc. Oxford, UK faculty, US Doctoral Universities (overall and high research), All US Doctorates and APA members, Stanford undergrad, Stanford, Harvard, US Faculty, Philosophy degrees, CS Degrees, Harvard CS, Stanford CS, Economics, Google, Facebook.

Longtermist organizations appear less diverse than even the most homogeneous of these benchmarks: UK university faculty (15%), the membership of the American Philosophical Association (17%), Oxford’s undergraduate population (18%), and the EA community (19.5%).

It’s plausible that Longtermist diversity is very roughly on par with diversity at top UK philosophy departments (while I could not find demographic data for the latter, it seems reasonable to expect that philosophy programs are less diverse than university populations as a whole in the UK, as is the case in the US). But while UK philosophers are certainly well represented at Longtermist organizations, it seems a stretch to argue that they’re the appropriate benchmark for the ecosystem as a whole. Roughly a quarter of the organizations in my sample have an explicit focus on AI and are based in the Bay Area; for these organizations one might reasonably expect half the staff or more to be POC. Moreover, there’s significant variation in job function both within and across organizations in my sample, which argues for considering a wider range of disciplines as a reference point.

The significant gap in racial diversity between the US and the UK (which collectively are the home of all the organizations in my sample) admittedly complicates the benchmarking process; later, I take a closer look at the relationship between geography and diversity.

Demographic differences across organizations

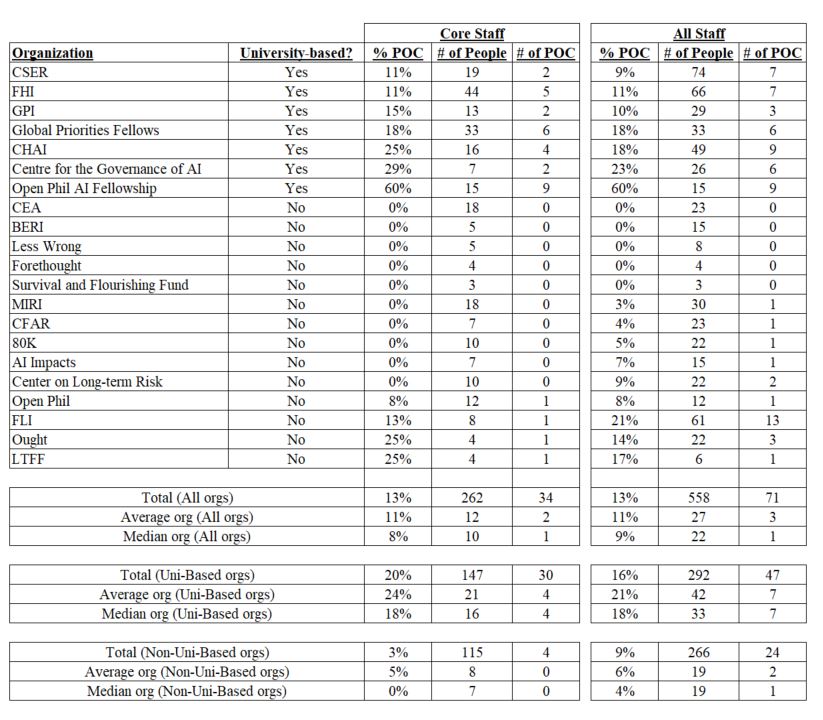

Racial diversity varies significantly across the 21 Longtermist organizations in this sample.

At about half the organizations, core staff is entirely white. At some of the most diverse organizations, a quarter of core staff are POC. The Open Phil AI Fellowship is a huge outlier, with 60% of fellows being POC (twice the share at any other organization).

There appears to be a strong pattern whereby university-based research centers/programs have much more diverse teams than other types of Longtermist organizations (which are generally standalone registered charities). At “University” organizations, POC make up 20% of core staff positions, compared to only 3% at “Non-University” organizations.

This pattern is quite consistent across organizations. None of the 14 “Non-University” organizations has more than one POC on its core staff, and 10 (71%) have none. By contrast, each of the 7 University organizations has multiple POC on its core staff, and POC make up 24% of the core staff at the average University organization.

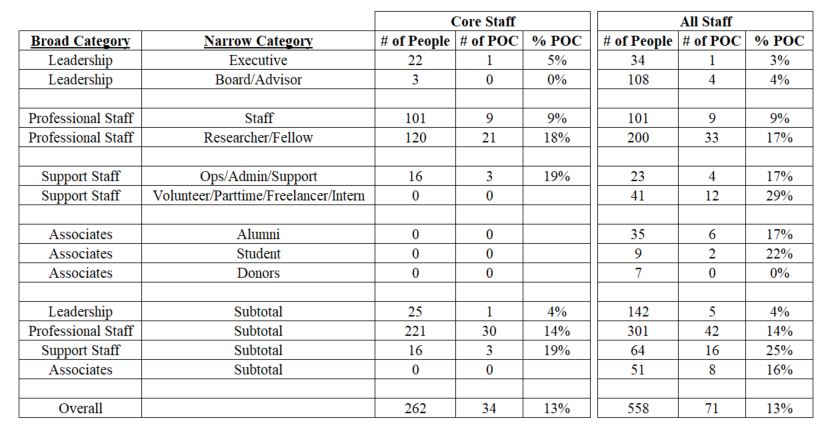

Demographic differences by role

Overall, POC account for ~13% of all roles at the Longtermist organizations in my sample. Certain types of roles, however, have very different levels of diversity. There appears to be a strong pattern in which the more influential a role is within an organization, the less likely it is to be held by a POC.

Only 4% of leadership roles are held by POC. Diversity among professional staff (14%) is in line with overall levels. But for “Support staff” roles (operations, administration, freelancers, part-timers, interns, and volunteers), POC account for 25% of positions.

Interestingly, POC make up 17% of staff alumni. Since this rate is higher than the representation of POC across all roles (13%) and much higher than the representation at organizations excluding the Open Phil AI Fellowship (10%), this raises the question of whether POC are more likely to leave Longtermist organizations than their white counterparts, and if so, why.

Note: Within the “Professional Staff” category, differences in diversity between “Staff” and “Researcher/Fellow” roles are in large part a function of the Open Philanthropy AI Fellowship’s high percentage of POC. Excluding that program, POC make up 11% of Researcher/Fellow roles.

Demographic differences by geography

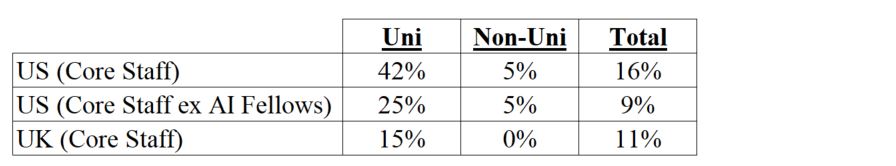

As discussed above, the difference in diversity between US and UK hiring pools complicates the assessment of diversity in the Longtermist ecosystem. And since these two countries house a different mix of University-based and Non-University-based organizations in my sample, it is important to tease apart this confounding effect. The following table does just that (looking only at Core Staff since non-Core Staff numbers include many research affiliates who are not located where the organizations are).

As expected, US-based organizations have a higher percentage of POC than UK-based organizations. A large part of this is because of the outsized influence of the AI Fellowship program. Excluding those fellows, UK organizations are actually more diverse than US organizations overall, but only because UK organizations are more likely to be based at universities. Making an apples-to-apples comparison (e.g. university-based organizations in the US vs. UK), we again see US organizations are more diverse as expected given their respective hiring pools.
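The apples-to-apples comparison described above is a stratified comparison, and the reversal it describes is an instance of what statisticians call Simpson's paradox. The minimal Python sketch below uses invented core-staff counts (not the figures from this sample) to show how one country can look more diverse in aggregate while being less diverse within every organization type, simply because more of its staff work at university-based organizations.

```python
# Hypothetical core-staff counts keyed by (country, org type) -> (POC, total).
# The counts are invented; only the rough within-group rates loosely echo
# percentages mentioned in the text.
counts = {
    ("US", "University"): (3, 12),
    ("US", "Non-University"): (5, 100),
    ("UK", "University"): (9, 60),
    ("UK", "Non-University"): (0, 40),
}
ORG_TYPES = ("University", "Non-University")


def stratum_share(country: str, org_type: str) -> float:
    """POC share within one country and one organization type."""
    poc, total = counts[(country, org_type)]
    return poc / total


def country_share(country: str) -> float:
    """POC share for a whole country, pooling both organization types."""
    rows = [v for (c, _), v in counts.items() if c == country]
    return sum(p for p, _ in rows) / sum(n for _, n in rows)


for org_type in ORG_TYPES:
    us, uk = stratum_share("US", org_type), stratum_share("UK", org_type)
    print(f"{org_type}: US {us:.0%} vs UK {uk:.0%}")
print(f"Pooled: US {country_share('US'):.1%} vs UK {country_share('UK'):.1%}")
```

With these made-up counts the US is ahead within both organization types, yet the UK is ahead once the groups are pooled, because most of the hypothetical UK staff sit in the higher-diversity university stratum.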

There are just two University-based organizations in the US: the AI Fellowship and CHAI. Together, they have a similar percentage of POC (42%) to enrollment at US Doctoral Universities with the highest research activity (40%), though that is largely driven by the high rate of diversity within the AI Fellowship. Representation at CHAI alone (25%) is 38% below the same benchmark. This shortfall is roughly similar to what we find at University-based organizations in the UK, where POC’s 15% representation is 31% below the 22% POC enrollment rate at leading UK universities.
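The “X% below” figures in this paragraph are relative gaps (how far the observed share falls short, as a fraction of the benchmark), not percentage-point differences. Here is a minimal Python sketch of that calculation, using the rounded percentages quoted in the text as inputs; because the inputs are rounded, results can differ slightly from the post's figures (the UK comparison, for instance, comes out near 32% rather than 31%).

```python
def relative_shortfall(observed_pct: float, benchmark_pct: float) -> float:
    """Fraction by which an observed share falls below a benchmark share."""
    if benchmark_pct <= 0:
        raise ValueError("benchmark_pct must be positive")
    return 1 - observed_pct / benchmark_pct


# Rounded inputs quoted in the text (percent of POC).
chai = relative_shortfall(25, 40)    # CHAI vs. US doctoral universities, highest research activity
uk_uni = relative_shortfall(15, 22)  # UK university-based orgs vs. leading UK universities

print(f"CHAI: {chai:.1%} below benchmark, {40 - 25} percentage points")
print(f"UK university orgs: {uk_uni:.1%} below benchmark, {22 - 15} percentage points")
```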

At non-University-based organizations, POC are extremely rare (5%) in the US, but are completely absent in the UK in my sample. (As mentioned in the methodology section, I’m aware of one POC who was hired by a UK organization after my sample was collected, which would bring the aggregate rate of POC at non-university-based UK organizations to 2%.) One could argue that the lack of representation in the US is actually worse than in the UK, given the US’s more diverse hiring pool (especially when one considers that many of the US-based organizations are focused on AI and likely frequently hire from relatively diverse CS programs).

Suggestions for improving racial diversity

- Proactively seek out diverse candidates. Steps could include surveying networks to identify talented POC, encouraging POC to apply for open positions or start their own organizations, inviting POC to board roles, and advertising job openings in communities with strong POC representation (e.g. the EA Global meetup for POC).

- Survey POC about their experiences in the Longtermist community. This survey (which should permit anonymous answers) could capture valuable information on whether and why POC may feel excluded, and ideas for improving diversity.

- Look for lessons from the Open Philanthropy AI Fellowship program. This program, which explicitly sought to increase diversity, had more than twice as many POC (in percentage terms) as any other organization in my sample. There are likely aspects of its operations, including how it sources and selects candidates, that could help other organizations become more diverse. Scaling this program could also help improve the broader ecosystem’s diversity over time. That said, it’s important to recognize that the AI Fellowship program is not exceptionally diverse; its diversity is simply in line with that of Computer Science programs at top US schools.

- Promote formal diversity and inclusion programs. My analysis doesn’t provide a definitive explanation of why being based at a university appears to have such a sizable effect on an organization’s diversity. But it seems reasonable to assume that the formal policies universities typically have in place may play an important role. Wider adoption of such policies could improve diversity throughout the Longtermist ecosystem.

- Shift funding, on the margins, to more diverse organizations. Some donors may want to support university-based Longtermist organizations, which generally have much more racial diversity than other organizations. Similarly, donors may want to support US-based organizations rather than their less-diverse UK counterparts. Funders who value diversity should communicate this priority to the organizations they support or are considering supporting, particularly large funders who can influence organizational direction.

- Consider POC who are already working with Longtermist organizations for core staff positions. Nearly 30% of volunteers, interns, freelancers, and contractors (past or current) are POC. Adding top performers from this cohort to core staff positions would be a simple way to improve diversity.

- Draw advice from people and organizations that excel in diversity. As mentioned above, the Open Philanthropy AI Fellowship likely has lessons that can be applied throughout the Longtermist ecosystem, and the same can be said for people and organizations from outside that ecosystem. Research into best practices (e.g. here and here) can also help this effort.

- Provide mentorship opportunities for POC. WANBAM provides mentorship for women and non-binary members of the EA community. An analogous program for POC could provide important support and training, while also signaling that improving racial diversity is a priority. Mentorship that could help POC take on leadership positions could be particularly valuable. I reached out to WANBAM’s founder, Kathryn Mecrow-Flynn, who would be willing to help a mentorship program for POC get started: “I would love suggestions [for] women of color who would like to mentor with us going forward. I would additionally welcome a mentorship program for women of color and people of color who are interested in pursuing high impact career paths and I would personally support and lend resources and lessons learned to such a program. You can reach me at <EAMentorshipprogram@gmail.com>.”

Conclusion

This analysis has found that POC are significantly underrepresented at Longtermist organizations and projects relative to the populations they typically draw from. At many organizations, and at most of the organizations that are not based at a university, core staff is entirely white. Where POC are employed, it is disproportionately in support roles and very rarely in leadership roles.

I believe this situation undermines the efficacy of Longtermist organizations, which depends on the talent they employ. Their high degree of racial homogeneity suggests these organizations have been missing out on talented employees and alternative perspectives, and may continue to struggle to attract strong POC candidates in the absence of changes (see here for related discussion).

I also believe this lack of racial diversity undermines the legitimacy of Longtermist efforts. If you were a policy maker in the Global South, how credible would you view “global priorities research” from an ecosystem that’s as white as South Dakota? If you were an AI strategist in China, and knew that Asians outnumbered whites in many top CS programs in the US, would you be skeptical about an overwhelmingly white ecosystem producing research on topics like “distributing the benefits of AI for the common good”?

To be clear, I don’t think the racial homogeneity of Longtermist organizations is in any way due to explicit racism or discrimination on the part of those organizations or their employees. Rather, as CEA’s stance on diversity and inclusion states, “Without conscious effort, groups tend to maintain a similar demographic makeup over time. Counteracting that tendency toward narrowness and homogeneity takes attention and effort.”

My analysis also found that when attention and effort are applied, they can lead to positive outcomes. The example of Open Philanthropy’s AI Fellows clearly shows that intentionally prioritizing diversity works. On a similar note, the efforts of CEA’s Community Health Team have paid off in terms of improving speaker diversity at EA Global. I hope this analysis helps motivate further attention and effort toward improving diversity, and ultimately efficacy, in the Longtermist ecosystem.

I'm a POC, and I've been recruited by multiple AI-focused longtermist organizations (in both leadership and research capacities) but did not join for personal reasons. I've participated in online longtermist discussions since the 1990s, and AFAICT participants in those discussions have always skewed white. Specifically I don't know anyone else of Asian descent (like myself) who was a frequent participant in longtermist discussions even as of 10 years ago. This has not been a problem or issue for me personally – I guess different groups participate at different rates because they tend to have different philosophies and interests, and I've never faced any racism or discrimination in longtermist spaces or had my ideas taken less seriously for not being white. I'm actually more worried about organizations setting hiring goals for themselves that assume that everyone does have the same philosophies and interests, potentially leading to pathological policies down the line.

Thanks for sharing your experience! It’s valuable to get the perspective of someone who’s been involved in the Longtermist community for so long, and I’m glad you haven’t felt excluded during that time.

As a donor I would like to make clear that I do not place any value on diversity. Furthermore, given the enormous stakes I think it would be a mistake even for donors and organisations who do value diversity as a terminal value to dedicate resources to this instead of focusing on their core mission.

Nor do I think there are likely to be significant instrumental benefits. You suggest the biggest issue is missing out on talent and viewpoints:

Yet each of these organisations is very small; likely they are all missing out on a vast number of good candidates, so focusing on one narrow aspect of this seems like privileging the hypothesis. If we are concerned about incorporating a variety of perspectives, this is an issue that can be addressed directly, by hiring people with different academic backgrounds, different world views and different ideologies. It is a well known fact that conservatives are massively under-represented in EA organisations for example, despite the immediate policy relevance. In contrast, hiring someone because of their race, and hoping this will mean they have specific views, seems like a very oblique way of doing so.

Furthermore, there are significant disadvantages to attempting to socially engineer a racial breakdown. Most obvious is the pressure to achieve racist hiring goals over competence, leading to token minorities on the teams. There is evidence that technology investors are biased against white entrepreneurs (see here for example) - indeed I personally experienced being told that we couldn't hire the best candidate because they were a white male and we had hit our diversity quota - and I would not like to see the same happening here.

I very strongly disagree.

At the very least, consider the instrumental benefits from avoiding the PR-risk of the community adopting your far-out view that we ought not value diversity at all. This seems like a legitimate risk for EA, as evidenced by your comment having more upvotes than the author of this thread.

However, my sense is that, despite problems with diversity in EA, this has been recognized, and the majority view is actually that diversity is important and needs to be improved (see for instance CEA's stance on diversity).

Also supporting this view, most of the respondents in 80K’s recent anonymous survey on diversity said they valued demographic diversity. The people who didn’t mention this explicitly generally talked about other types of diversity (e.g. epistemic and political) instead. And nobody expressed Larks’ view that they “do not place any value on diversity.” I agree with Hauke that this perspective carries PR risk, and in my opinion seems especially extreme in a community that politically skews ~20:1 left vs. right.

I assume Larks means 'racial diversity' in the context of this thread (and based on their comment, which talks about increasing diverse viewpoints through other means).

To clarify, my comment about EA's political skew wasn't meant to suggest Larks doesn't care about viewpoint diversity. Rather, I was pointing out that the position of not caring about racial diversity is more extreme in a heavily left leaning community than it would be in a heavily right leaning community.

Gotcha. I actually meant to reply to Hauke (who thought the poster was talking about diversity of any kind, rather than racial diversity).

A few points…

First, I’d very much like to see EA and/or Longtermist organizations hire people with “different academic backgrounds, different world views and different ideologies.” But I don’t think that would eliminate the need for improving diversity on other dimensions like race or gender, which can provide a different set of perspectives and experiences (see, for example, “when I find myself to be the only person of my group in the room I want to leave”) than could be captured by, for example, hiring more white males who studied art history.

Second, I’m not advocating for quotas, which I have a lot of concerns about. I’d prefer to look at interventions that could encourage talented minorities to apply. My prior is that there are headwinds that (on the margins) discourage minority applicants. As multiple respondents to 80K’s recent survey on diversity noted, there’s “a snowball effect there, where once you have a sufficiently non-diverse group it’s hard to make it more diverse.” If that effect is real, claims like “we hired a white male because he was the best candidate” become less meaningful since there might have been better minority candidates who didn’t apply in the first place.

Third, some methods of attracting more minority applicants are extremely low cost. For example, I saw one recent job posting from a Longtermist organization that didn’t include the kind of language one often sees in job descriptions, along the lines of “We’re an equal opportunity employer and welcome applications from all backgrounds.” It’s basically costless to include that language, so I doubt any minorities see it and think “I’m going to apply because this organization cares about diversity.” But it’s precisely because this language is costless that not including it signals that an organization doesn’t care about diversity, which discourages minorities from applying (especially if they see that the organization’s existing team is very homogeneous).

In that case you probably shouldn't argue that an opinion being held by an ideological minority makes it especially dangerous:

Diversity doesn't bring any value if you then crush all disagreement!

I don’t think placing no value on diversity is a PR risk simply because it’s a view held by an ideological minority. Few people, either in the general population or the EA community, think mental health is the top global priority. But I don’t think EA incurs any PR risk from community members who prioritize this cause. And I also believe there are numerous ways EA could add different academic backgrounds, worldviews, etc. that wouldn’t entail any material PR risk.

I want to be very explicit that I don’t think EA should seek to suppress ideas simply because they are an extreme view and/or carry PR risks (which is not to say those risks don’t exist, or that EAs should pretend they don’t exist). That’s one of the reasons why I haven’t been downvoting any comments in this thread even if I strongly disagree with them: I think it’s valuable for people to be able to express a wide range of views without discouragement.

I’m sceptical; diversity programs often don't work (Google spent $300m+ on diversity programs and didn't move the needle) and in many cases reduce diversity.

The HBR study you cite actually says the evidence shows that some types of programs do effectively improve diversity, but many companies employ outdated methods that can be counterproductive.

The rest of the article has some good examples and data on which sort of programs work, and would probably be a good reference for anyone looking to design an effective diversity program.

Agreed - though many of the more successful diversity efforts are really just efforts to make companies nicer and more collaborative places to work (e.g. cross-functional teams, mentoring). My personal preference is to focus on making companies welcoming to all rather than specifically targeting racial minorities.

I'm also a little sceptical of the huge gains the HBR article suggests - do diversity task forces really increase the number of Asian men in management by a third? It suggests looking at Google as an example of "a company that's made big bets on [diversity] accountability... We should know in a few years if that moves the needle for them" - it didn't.

Agreed. This makes those sorts of policies all the more attractive in my opinion, since improving diversity is just one of the benefits.

I’m also skeptical that particular programs will lead to huge gains. But I don’t think it’s fair to say that Google’s efforts to improve diversity haven’t worked. The article you cited on that was from 2017. Looking at updated numbers from Google’s site, the mix of new hires (which are less sticky than total employees) does seem to have shifted between 2014 (when Google began its initiatives) and 2018 (the most recent data available). These aren’t enormous gains, but new hires do seem to have become notably more diverse. I certainly wouldn’t look at this data and say that Google’s efforts didn’t move the needle.

Women: 30.7% in 2014 vs 33.2% in 2018 (2.5 pp diff, 8% Pct Change)

Asian+: 37.9% in 2014 vs 43.9% in 2018 (6 pp diff, 16% Pct Change)

Black+: 3.5% in 2014 vs 4.8% in 2018 (1.3 pp diff, 37% Pct Change)

Latinx+: 5.9% in 2014 vs 6.8% in 2018 (0.9 pp diff, 15% Pct Change)

Native American+: 0.9% in 2014 vs 1.1% in 2018 (0.2 pp diff, 22% Pct Change)

White+: 59.3% in 2014 vs 48.5% in 2018 (-10.8 pp diff, -18% Pct Change)
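The “diff” values in this list are percentage-point differences, while the “Pct Change” values are relative changes. A short Python sketch that recomputes both from the 2014 and 2018 shares listed above; the input figures come straight from the list, and rounding the relative change to whole percentages is an assumption about how the listed values were derived.

```python
# Google new-hire shares (%) for 2014 and 2018, as listed above.
new_hires = {
    "Women": (30.7, 33.2),
    "Asian+": (37.9, 43.9),
    "Black+": (3.5, 4.8),
    "Latinx+": (5.9, 6.8),
    "Native American+": (0.9, 1.1),
    "White+": (59.3, 48.5),
}


def changes(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point difference, relative percent change)."""
    diff_pp = after - before
    pct_change = 100 * diff_pp / before
    return diff_pp, pct_change


for group, (y2014, y2018) in new_hires.items():
    pp, pct = changes(y2014, y2018)
    print(f"{group}: {pp:+.1f} pp, {pct:+.0f}% relative change")
```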

As another resource on effective D&I practices, HBR just published a new piece on “Diversity and Inclusion Efforts that Really Work.” It summarizes a detailed report on this topic, which “offers concrete, research-based evidence about strategies that are effective for reducing discrimination and bias and increasing diversity within workplace organizations [and] is intended to provide practical strategies for managers, human resources professionals, and employees who are interested in making their workplaces more inclusive and equitable.”

Thanks for adding that resource, Anon.

I'm generally against evaluating diversity programs by how much diversity they create. It's definitely a relevant metric, but we don't evaluate AMF by how many bednets they hand out, but by the impact of these bednets.

Thanks for doing this analysis! My project plans for 2020 (at CEA) include more efforts to analyze and address the impacts of diversity efforts in EA.

I'd be interested in being in touch with the author if they're open to it, and with others who have ideas, questions, relevant analysis, plans, concerns, etc.

I'm hopeful that EAs, like the author and commenters here, can thoughtfully identify or develop effective diversity efforts. I think we can take wise actions that avoid common pitfalls, so that EA is strong and flexible enough as a field to be a good "home base" for highly altruistic, highly analytical people from many backgrounds. I'm looking forward to continued collaboration with y'all, if you'd like to be in touch: sky@centreea.org.

Thanks Sky! I’ll be in touch over email.

Thanks for writing this. Another reference point: YC founders are ~16% black or Hispanic.

(I'm not sure if this is the best reference class, I was just curious in the comparison because the population of people who start YC companies seems somewhat similar to the population who join longtermist organizations.)

Thanks Ben! That’s an interesting reference point. I don’t think there are any perfect reference points, so it’s helpful to see a variety of them.

By way of comparison, 1.8% of my sample was black (.7%) or Hispanic (1.1%).

Thanks so much for doing this work! I'm pretty excited about making progress on this. If others want to collaborate, please ping jungwon@ought.org.

Thanks for your post!

Unless I am missing something about your numbers, I think the figures you have from the EA Survey might be incorrect. The 2019 EA Survey was 13% non-white (which is within 0.1 percentage points of the figure you find for longtermist orgs).

It seems possible that, although you've linked to the 2019 survey, you were looking at the figures for the 2018 Survey. On the face of it, this looks like EA is 78% white (and so, you might think, 22% non-white), but those figures don't account for people who answered the question but who declined to specify a race. Once that is accounted for the non-white figures are roughly 13% for 2018 as well.

I think the discrepancy is related to mixed race people, a cohort I’m including in my POC figures. Since the 2019 survey question allowed for multiple responses, I calculated the percentage of POC by adding all the responses other than white, rather than taking 1 - % of white respondents (which results in the 13% you mention).

Thinking more about this in response to your question, it'd probably be more accurate to adjust my number by dividing by the sum of total responses (107%). That would bring my 21% figure down to 19%, still well above the figure for longtermist organizations. But I think the best way of looking at this would be to go directly to the survey data and calculate the percentage of respondents who did not describe themselves as entirely white. If anyone with access to the survey data can crunch this number, I'd be happy to edit my post accordingly.
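To make the difference between these counting methods concrete, here is a minimal sketch using a small set of made-up multi-select responses (illustrative only, not EA Survey data; the function names are hypothetical). It compares "1 minus the share selecting white", summing every non-white selection, the "divide by total responses" adjustment, and the share of respondents whose answer is anything other than exactly "white".

```python
from fractions import Fraction

# Hypothetical multi-select race responses (illustrative only, not EA Survey data).
responses = [
    {"white"}, {"white"}, {"white"}, {"white"}, {"white"}, {"white"},
    {"white", "asian"},   # mixed white/non-white
    {"asian"},
    {"asian", "black"},   # mixed, two non-white categories
    {"hispanic"},
]

def share_not_selecting_white(rows):
    """1 - share selecting 'white': counts mixed white/non-white people as white."""
    return 1 - Fraction(sum("white" in r for r in rows), len(rows))

def summed_nonwhite_share(rows):
    """Add up every non-white selection: double-counts people who pick 2+ non-white categories."""
    return Fraction(sum(len(r - {"white"}) for r in rows), len(rows))

def normalized_nonwhite_share(rows):
    """Divide non-white selections by total selections (the 'divide by 107%' style adjustment)."""
    return Fraction(sum(len(r - {"white"}) for r in rows), sum(len(r) for r in rows))

def share_not_entirely_white(rows):
    """Share of respondents whose answer is anything other than exactly {'white'}."""
    return Fraction(sum(r != {"white"} for r in rows), len(rows))

for f in (share_not_selecting_white, summed_nonwhite_share,
          normalized_nonwhite_share, share_not_entirely_white):
    print(f"{f.__name__}: {float(f(responses)):.1%}")
```

On this toy data the four methods give 30.0%, 50.0%, 41.7%, and 40.0% respectively, which shows why only the last one directly answers "what share of respondents are not entirely white".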

Yeah, as you note, this won't work given multiple responses across more than 2 categories.

I can confirm that if you look at the raw data, our sample was 13.1% non-mixed non-white, 6.4% mixed, and 80.5% non-mixed white. That said, it seems somewhat risky to compare this to numbers "based on the pictures displayed on the relevant team page", since it seems like this will inevitably under-count mixed race people who appear white.

Thanks for sharing the survey data! I’ll update the post with those numbers.

This is a fair point. For what it's worth, I classified a handful of people who very well could be white as POC since it looked like they could possibly be mixed race. But these people probably accounted for something like 1% of my sample, far short of the 6.4% mixed race share of EA survey respondents. So it's plausible that because of this issue diversity at longtermist organizations is pretty close to diversity in the EA community (though that's not exactly a high bar).

On the other hand, I’d also note Asians are by far the largest category of POC in both my sample and the EA Community, so presumably a large share of the mixed white/non-white population is part white and part Asian. It seems reasonable to assume that ~1/2 of this group would have last names that suggest Asian heritage, but there weren’t many (any?) people in my sample with such names who looked white. This might indicate that my sample had fewer mixed race people than the EA Survey, which would make the issue you're raising less of a problem.

Interestingly, the EA survey data also has a surprisingly high (at least to me) number of mixed race respondents relative to the number of non-mixed POC. In the survey, 33% of people who aren’t non-mixed white are mixed race. For comparison, this figure is 15% at Stanford and 13% at Harvard. So I think the measurement issue you’re pointing out is much less of a problem for benchmarks other than the EA community.
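The 33% figure can be checked directly from the raw survey shares quoted above (13.1% non-mixed non-white, 6.4% mixed, 80.5% non-mixed white). This is just a quick arithmetic check; the variable names are illustrative.

```python
# Shares reported from the 2019 EA Survey raw data (percent of respondents).
non_mixed_nonwhite = 13.1
mixed = 6.4
non_mixed_white = 80.5

# Everyone who is not non-mixed white, i.e. not entirely white.
not_entirely_white = non_mixed_nonwhite + mixed
mixed_share_of_poc = mixed / not_entirely_white

print(f"{not_entirely_white:.1f}% not entirely white; "
      f"{mixed_share_of_poc:.0%} of them mixed race")
```

This prints "19.5% not entirely white; 33% of them mixed race", matching the 33% above.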

One note: DeepMind is outside the set of typical EA orgs, but is very relevant from a longtermist perspective. It fares quite a bit better on this measure in terms of leadership: e.g., everyone above me in the hierarchy is non-white.

Thanks for pointing this out, good to know!

DeepMind was on my original list of organizations to include, but doesn’t have a team page on its website. In an earlier draft I mentioned that I would have otherwise included DeepMind, but one of the people I got feedback from (who I consider very knowledgeable about the Longtermist ecosystem) said they didn’t think it should be counted as a Longtermist organization so I removed that comment. And the same is true for OpenAI, FYI.

This is a great point.

Considering the reverse sharpens the point further:

"If a Chinese think tank, funded by a founder of Tencent (or Huawei, etc.), convened a consortium of AI practitioners & policy wonks (almost all of whom were racially & nationally Chinese), and this consortium produced recommendations on how to distribute the benefits of AI for the common good, what would the EA community think of that work?"

It is totally to be expected for a Chinese think tank to be primarily made of Chinese people, and I would both hope and expect the EA community would engage rationally and logically with their arguments and positions, rather than dismissing or discounting them because of their race.

How do you think cohorts like the self-identified conservatives in western democracies or the US intelligence community would view ideas coming from that hypothetical think tank? I’m pretty sure there’d be some skepticism, and that that skepticism would make it harder for the think tank to accomplish its goals. (I'm not arguing that they should be skeptical; I'm arguing that they would be skeptical.)

I agree we should expect a Chinese think tank to be largely staffed with Chinese people because of the talent pool it would be drawing from. I’ve provided a variety of possible reference classes for the Longtermist community; do you have views on what the appropriate benchmark should be?

I suggest this is a bad example; I imagine they'd be sceptical but more because of the involvement of a Chinese state actor (see e.g. concerns over Chinese government influence over Huawei) than because of their race.

I would also hope for this!

It feels fraught though – Chinese leadership seems to have a very different view of what constitutes the good, and a very different vision for the future.

Whether we assume conflict theory or mistake theory is also relevant here.

Thanks Milan!

I'd also add that this concern applies in a domestic context as well. Efforts to influence US policy will require broad coalitions, including the 23 congressional districts that are majority black. The representatives of those districts (among others) may well be skeptical of ideas coming from a community where just 3 of the 459 people in my sample (0.7%) are black (as far as I can tell). And if you exclude Morgan Freeman (who is on the Future of Life Institute's scientific advisory board but isn't exactly an active member of the Longtermist ecosystem), black representation is under half of a percent.

I think percentages are misleading. In terms of influencing demographic X, what matters isn't so much how many people of demographic X there are in these organisations, but how well-respected they are.

Daniel Dewey and I run this program! Please reach out if you’re hiring for an EA org or running a fellowship program and want to bounce ideas off us. We would be delighted to chat.

I also want to clarify that the Open Phil AI Fellowship is a scholarship program for PhD students, so the students are not employees or staff.

Hi there,

Thank you for the data. 80,000 Hours agrees that improving diversity is important. We made a statement to this effect in our 2017 annual review, and have given updates in 2018 and 2019.

To update on our figures, we’ll now soon have 13 core full-time staff, and 2 will be POC (15%). We made job offers to both before Feb, though we’re still working on visas and one only recently started, which is why the meet the team page is out of date.

Thanks Ben! Great to see 80K making progress on this front! And while I haven't crunched the numbers, my impression is that 80K's podcast has also been featuring a significantly more diverse set of guests than when the podcast first started; this also seems like a very positive development.

Given the nature of your work, 80K seems uniquely positioned to influence the makeup of the Longtermist ecosystem as a whole. Do you track the demographic characteristics of your pipeline: people you coach, people who apply for coaching, people who report plan changes due to your work, etc.? If not, is this something you’d ever consider?

Yes we do track these, and have a brief note about it in the 2019 annual review.

Glad this is something you're tracking. For reference, here's the relevant section of the annual review.

Thank you so much for this article! I especially liked the part about "Suggestions for improving racial diversity." As someone who is founding a new organization, I am looking for ideas about what I can do on the front-end to set us up for success from a DEI perspective, and I love that you had a list of concrete bullets!