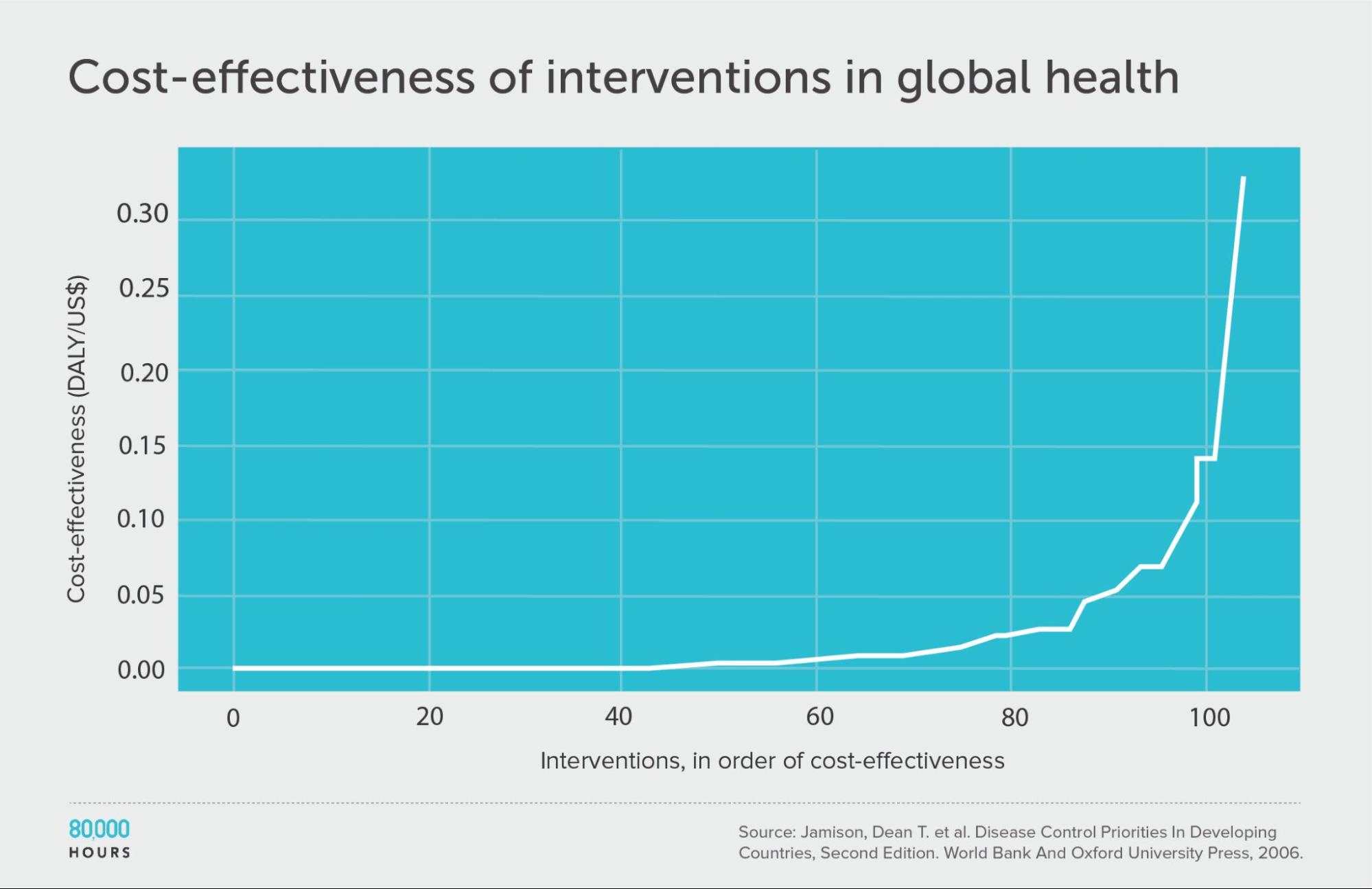

This chart is so right. The local charity environment in Cameroon is probably helping far fewer people than you imagine. We ran an effectiveness contest whose results align with this research perfectly.

In 2021 we created an EA group in Cameroon. We had multiple seminars covering the basics of Effective Altruism. By the end of 2022, the group got so excited that we created a charity.

“We” are a group of humanitarian/development workers in Cameroon, all currently employed in this field. Some basic EA principles resonated strongly with us, such as the feeling that some activities and projects don’t really help much, and that somewhere, sometimes, there is “real impact”.

So we created this charity to help steer organizations towards real impact, and help them become “more effective”. We tried a couple of things:

- We offered consultancy services to local charities, starting for free.
- We started a contest to find the best projects in Cameroon.

The first did not work (see footnote [1]).

The contest, however, is worth sharing. It confirmed this global analysis: some things simply work far better than others, and some organizations are dedicated to things that aren't very useful. We wish there were a nicer way of saying it.

We had 21 submissions in the first year. We designed a simple way to evaluate and compare projects: we divided them into three categories (health, human rights, and economic) and took all organizations’ reports at face value. Based on their own data, there was a huge gap between the top and bottom performers. We then ran field surveys to verify the claimed results of the top six, which gave us our three winners, with only one organization really meeting expectations.

Main finding:

There was no correlation between experience and effect or grant size and effect, it is as if organizations don't get more effective with experience and professionalism. If anything the correlation is negative. We think this is because organizations get more effective at capturing donor funding not at providing a better service. The only really valuable feedback they get comes from donors deciding whether or not to fund them. So organizations focus on, and implement, projects based on what donors appear to want, which may sometimes be connected to the most meaningful effects on the people they serve, but not necessarily.

Details:

First, two organizations simply applied for funding instead of presenting project results. This happens; it is a reminder that, in the end, it is all about donor funding, and that sometimes people don’t read the call.

The general tendency was that organizations follow donor trends and work to teach people things they probably already know:

- Multiple menstrual health projects amounted to a tiny economic transfer (free pads covering 2 or 3 months) plus some lessons on things girls either already knew or were very likely to find out soon.

- “Child Protection” is another hot term, particularly in humanitarian contexts, but it was not very clear what people were being taught or how that helped anyone.

- Sexual and reproductive health was also very common, but products are available and cheap, and the information is unlikely to be very new to Cameroonian girls and women today. HIV rates in the target areas aren't as high as in other countries, and when we ran the numbers, it was unlikely that even one infection was averted by these projects.

- “Inclusion” of persons with disabilities in the health sector was a beautiful project with multiple complex activities, but it had no visible effects on people, with or without disabilities. It mostly involved training health workers, but it is important to understand that these health workers were not denying services to people with disabilities at baseline. At most, the project taught them to be a bit more sensitive to these patients, and it wasn’t clear how the many activities increased access to services. Had it included an element of direct subsidies, it might have performed better, but the massive training budget alongside a financial transfer would still have kept the project's cost-effectiveness very low.

- Another project on “strengthening community capacity and participation in local development” did not clearly do anything.

- Several gender-based violence projects were submitted, but there wasn’t really any data on prevented violence. We used available research as a proxy and compared them on cost per output (people reached), but even with this “assumed impact” they were not very cost-effective. They are clearly educating people about gender-based violence; it is just not clear how that reduces it.
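To illustrate the kind of back-of-envelope check behind “when we ran the numbers” for the sexual and reproductive health projects, here is a minimal Python sketch. It assumes a simple multiplicative model (people reached × annual HIV incidence × duration × share of participants who change behaviour because of the project × risk reduction from that change). The function name and every input value are hypothetical placeholders, not the figures behind the contest evaluation.

```python
def expected_infections_averted(people_reached: int,
                                annual_incidence: float,
                                years: float,
                                share_changing_behaviour: float,
                                risk_reduction: float) -> float:
    """Back-of-envelope estimate of HIV infections averted by an awareness project.

    Hypothetical model: infections expected among participants over the period,
    scaled by the share who change behaviour *because of* the project and by
    how much that change lowers their risk.
    """
    expected_infections = people_reached * annual_incidence * years
    return expected_infections * share_changing_behaviour * risk_reduction


# Illustrative placeholder inputs only (not data from the contest).
averted = expected_infections_averted(
    people_reached=500,
    annual_incidence=0.002,        # assumed: 0.2% of participants infected per year
    years=1,
    share_changing_behaviour=0.2,  # assumed: most already know the information
    risk_reduction=0.5,            # assumed effect of the behaviour change
)
print(f"Expected infections averted: {averted:.2f}")  # → Expected infections averted: 0.10
```

With inputs in this range the expected value stays well below one infection, which is the shape of the argument in the bullet above; a real estimate would need local incidence data and evidence on how much behaviour actually changes.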

A couple of projects tried to do many things at once. They were holistic but did not do anything in a cost-effective way. One project gave sanitary pads, rice, oil, and soap to people. It also offered spiritual guidance, medical assistance, COVID-19 awareness raising, sexual and reproductive health advice, and training on growing mushrooms. It must have been nice to implement, but it does not perform well if the metric is saving lives, nor if the metric is economic improvement.

We had a climate change project that did little to improve the health of anyone in the community. We had to translate CO2 sequestration from tree planting into health outcomes for people in Cameroon, and it did not produce anything visible. This was not entirely fair, since we could have compared multiple environmental projects in Cameroon, but we only had one, and as a health project it wasn’t a good one. We think it was a very nice experience for the participating children.

In human rights, the best projects were those providing documentation. First, they were focused on one thing. Second, lacking documents is a real risk in Cameroon: it exposes people to extortion and sexual abuse, and for people in remote areas, renewing documents can be difficult. People in conflict areas are more likely to lose them while fleeing, and they are the most exposed to abuse because they are treated as suspects and because soldiers tend to be more violent in those locations. It was a tiny economic transfer, but a well-targeted one. When the project was focused, it was the most cost-effective thing to do in this field. We realize that more intangible things are harder to measure, so we may be wrong.

In economic empowerment, we really did not have much to work with: only two finalists had livelihoods projects. Both qualified; one had the results it claimed (participants reported improved income), and the other did not (participants did not remember participating).

A mental health project seemed OK, but when we interviewed participants and gave them basic mental health screening questionnaires, they still screened positive for depression and anxiety.

We may be biased towards small cheap projects, but the only one we really liked was the sickle cell cash transfer project for which we started fundraising (see this post).

We hope this is useful, and we would love to see similar analyses from other developing countries.

[1] We realized after a year that this was not helping us get anyone to be more effective. There was little organic demand from organizations to improve their capacity to see the final effects of their work and steer activities towards what works, even though we were working for free. We got a paid consultancy from a UN agency in our first year (which would have been a big accomplishment for any NGO), but we started drifting further and further from our goal. We realized this was becoming pointless, but it was a good experience, and the pay will help us run for a while.

Thank you so, so much for this report. I'm stunned and encouraged that you managed to form a group of development people who gather around effective altruism ideas in Cameroon. I'm not the worst at enthusiastic pitching, but I've had close to zero interest while talking to development people here in Uganda. Everything in this post rings deeply true with my experience.

This observation hit hard, but it makes sense:

"There was no correlation between experience and effect or grant size and effect, it is as if organizations don't get more effective with experience and professionalism. If anything the correlation is negative. We think this is because organizations get more effective at capturing donor funding not at providing a better service."

Imagine a field of work where experience and professionalism could sometimes make you less effective, because greater availability of money is associated with less effective interventions. Maybe more work needs to be done on this? I've seen that play out with a number of NGOs here: they start off with one clear goal that is probably effective, if not optimal (like refugee mental health, recycling, or post-war reconciliation), but then start chasing the money, adding random, less and less effective programs until they are doing everything and nothing.

I believe that doing one thing well should be a fundamental norm in the NGO/aid world. But it's not.

Similar to your observations, the trend/vibe for NGO interventions here seems to center around these three buckets at the moment, although the winds are often changing.

And boy is it refreshing to see a fantastic GHD post on here haha. Super keen to link up with your group as well and share more experience - great work!

I’m generally with you on “doing one thing well”, but I also note that there are efficiencies in combining programs, which charities seem extremely reluctant to pursue on their own, even when the synergies are obvious. It’s plausible to me that merger is the best way to get these economies of scale. Or else some kind of outsourced service provider that could create synergies outside the individual charities’ operations, similar to how most smaller e-tailers don’t deliver their own merchandise.

When I visited some GiveDirectly beneficiaries in Kenya, I was struck by the story of a guy who had gotten some horticultural training from PLAN more than a decade earlier, but had been unable to put it to use for lack of funds (until GiveDirectly came around). Yet I doubt that either GiveDirectly or PLAN would have the slightest interest in aligning their programs to get the increased impact that, in this case, happened by accident. I think about this anecdote a lot.

Yep, I agree with this; in a minority of situations it might be plausible to merge orgs. I doubt mergers would usually achieve more efficiency, though I imagine it has worked well in some cases, and I would be interested to hear of an example. The GiveWell examples are combinations of interventions, not merged organisations, and make a lot of sense.

One thing I've been dubious about along these lines is that some big orgs seem to be moving into cost-effective interventions they might not be expert at rolling out, in order to access GiveWell funding. For example, One Acre Fund, an org with a great mission to maximize crop yields for subsistence farming, was funded by GiveWell to test a chlorination program in Rwanda. I don't love this; my instinct is that I would rather see a dedicated, experienced chlorination org scale up to take this on.

That said, I was super impressed that One Acre Fund and GiveWell decided to stop this trial early because it didn't seem to be working, and that One Acre Fund effectively returned some of the money. Kudos for this high-integrity approach to effective aid; it's super rare to see.

Thanks a lot for writing this up and sharing your evaluations and thinking!

I think there is lots of value in on-the-ground investigations and am glad for the data you collected to shine more light on the Cameroonian experience. That said, reading the post I wasn't quite sure what to make of some of your claims and take-aways, and I'm a little concerned that your conclusions may be misrepresenting part of the situation. Could you share a bit more about your methodology for evaluating the cost-effectiveness of different organisations in Cameroon? What questions did these orgs answer when they entered your competition? What metrics and data sources did you rely on when evaluating their claims and efforts through your own research?

Most centrally, I would be interested to know: 1) Did you find no evidence of effects or did you find evidence for no effect[1]?; and 2) Which time horizon did you look at when measuring effects, and are you concerned that a limited time horizon might miss essential outcomes?

If you find the time, I'd be super grateful for some added information and your thoughts on the above!

[1] The two are not necessarily the same, and there's a danger of misrepresentation and misleading policy advice when equating them uncritically. This has been discussed in the field of evidence-based health and medicine, but I think it also applies to observational studies on development interventions like the ones you analyse: Ranganathan, Pramesh, & Buyse (2015), “Common pitfalls in statistical analysis: ‘No evidence of effect’ versus ‘evidence of no effect’”; Vounzoulaki (2020), “‘No evidence of effect’ versus ‘evidence of no effect’: how do they differ?”; Tarnow-Mordi & Healy (1999), “Distinguishing between ‘no evidence of effect’ and ‘evidence of no effect’ in randomised controlled trials and other comparisons”.

Great points, Sarah.

Thanks for your work, EffectiveHelp - Cameroon. I think it would be great if you could share the data underlying your analysis.

Our energetic writing may have carried us away. We had very limited tools and information. It would be more accurate to say the first: no evidence of effects.

In fact, we did not have the tools or data to look rigorously into all projects and their intended and unintended effects.

We had two layers:

In the first layer, we assume projects do exactly what organizations claim, and simply estimate a plausible output per dollar (or rather, in this case, per CFA franc). If they have usable output or outcome data, we use that; if not, we may use research on an equivalent program (e.g., the GBV example). Within each category, it is then easy to compare which project delivers its output more cheaply, still with some working assumptions.

At that stage, the differences between organizations were huge, and we had some budget to collect data on the six most promising projects (thanks, EA Infrastructure Fund). In this second layer, we simply tried to confirm the claimed effect by interviewing beneficiaries of the assistance. In two cases the claimed effect wasn't visible at the time of data collection, and that settled two of our finalists: economic, because in the other project most beneficiaries did not remember participating, and health, because in the other project participants appeared to be worse off than before the project. In the human rights category, there were two very similar projects as finalists, and one had slightly stronger effects.
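The two layers described above can be sketched as a small screening pipeline. This is a minimal Python illustration under stated assumptions, not the contest's actual scoring: each project's claimed (or proxy) output reduces to a single number; the shortlist keeps the two cheapest projects per category (a hypothetical reading of “the possible best 6” across three categories); and the field survey is reduced to a yes/no “effect confirmed” flag. The class, function, and field names and all figures are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Submission:
    """One contest entry (hypothetical fields)."""
    name: str
    category: str            # "health", "human rights" or "economic"
    outputs: float           # outputs claimed by the organization, or a research proxy
    cost_fcfa: float         # project cost in CFA francs
    verified: bool = False   # layer 2: effect confirmed by interviewing beneficiaries

    def cost_per_output(self) -> float:
        return self.cost_fcfa / self.outputs


def layer_one(submissions, per_category=2):
    """Take claims at face value and keep the cheapest entries per category."""
    by_category = defaultdict(list)
    for s in submissions:
        by_category[s.category].append(s)
    return {
        category: sorted(group, key=Submission.cost_per_output)[:per_category]
        for category, group in by_category.items()
    }


def layer_two(shortlist):
    """Among shortlisted entries whose effects were confirmed in the field,
    pick the cheapest per output in each category."""
    finalists = {}
    for category, group in shortlist.items():
        confirmed = [s for s in group if s.verified]
        if confirmed:
            finalists[category] = min(confirmed, key=Submission.cost_per_output)
    return finalists


if __name__ == "__main__":
    # Made-up entries for illustration only.
    entries = [
        Submission("A", "health", outputs=200, cost_fcfa=1_000_000),
        Submission("B", "health", outputs=50, cost_fcfa=2_000_000),
        Submission("C", "health", outputs=10, cost_fcfa=5_000_000),
        Submission("D", "economic", outputs=30, cost_fcfa=900_000),
        Submission("E", "economic", outputs=20, cost_fcfa=3_000_000),
    ]
    shortlist = layer_one(entries)
    print({c: [s.name for s in g] for c, g in shortlist.items()})
    # → {'health': ['A', 'B'], 'economic': ['D', 'E']}

    # Hypothetical survey result: A's claimed effect was not visible.
    for s in entries:
        s.verified = s.name in {"B", "D"}
    print({c: s.name for c, s in layer_two(shortlist).items()})
    # → {'health': 'B', 'economic': 'D'}
```

The sketch mirrors the point in the reply: the cheapest entry on paper (A here) can drop out once its claims are checked in the field.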

We were clearly biased toward small budgets, so the overall winner had a big advantage: it was literally an intervention for one family. We think the result may still be accurate, and it is plausible that there are great opportunities to do good at small scale in developing countries, particularly through cash.

We also had limitations in comparing across sectors, but the three finalists got more or less the same: a badge, a framed award, some feedback they can use with potential donors, and a subscription to an online newsletter on funding opportunities. We recognized that the human rights final position was tighter, so we added the runner-up to the newsletter. We decided to do more for the winner because we thought it was the only project meeting cost-effectiveness expectations and we can't find much better in Cameroon, but that was outside the contest.

Going back to your question: if I had to guess, I would say these projects likely have effects that we did not get to see. I am unsure whether these effects are achieved cost-effectively, because they are buried in so much else.

Thanks for explaining! In this case, I think I come away far less convinced by your conclusions (and the confidence of your language) than you seem to. I (truly!) find what you did admirable given the resources you seem to have had at your disposal and the difficult data situation you faced. And I think many of the observations you describe (e.g., about how orgs responded to your call; about donor incentives) are insightful and well worth discussing. But I also think that the output would be significantly more valuable had you added more nuance and caution to your findings, as well as a more detailed description of the underlying data & analysis methods.

But, as said before, I still appreciate the work you did and also the honesty in your answer here!

In my eyes, this is an unusually strong effort to judge these projects. It's obviously far from perfect, but it is a better effort than most NGOs or competitions would make.

My experience with Latin American NGOs and governments at J-PAL corroborates this: most programs lack cost-effectiveness.

The trend of projects attempting to address multiple complex issues simultaneously is particularly concerning. When I reviewed funding applications targeting child labor, deforestation, and wage improvement, most NGOs claimed they could tackle all three. This approach is fundamentally flawed: making significant progress on even one of these issues is challenging enough. IMO, focusing on a single, well-defined problem is more likely to yield measurable impact than diluting efforts across multiple fronts.

Could it be that their reasoning is this: These three problems are connected in some way, therefore, by tackling some root cause, all these problems can be solved at once?

This line of reasoning is something I've anecdotally also heard from several NGOs and activists.

That matches what I've seen. In this case, though, they were tackling multiple issues to increase their chances of funding, not because they identified a common root cause.

We agree. Small, cheaper projects that focus on one thing tend to work better. We understand that big, ambitious projects can have more potential impact and that "impact" is difficult to measure. E.g., if a peace-building project can truly achieve peace, nothing beats that; it's just that most peace-building projects are workshops with people who have no say in whether there will be war or peace.

We prefer to avoid the word "impact" entirely and to talk of effects (intended, visible), although this misses some of the biggest opportunities and lots of indirect benefits.

At least a few of the projects could have benefited from being broken down into smaller projects and considered separately in each category. We went down that road at the beginning, but then we thought it wasn't entirely fair to the other participants. Because many organizations did not fully understand how they were going to be evaluated, giving one of them three chances would have been an unfair advantage.

I think at least one of these projects could have been a finalist in the economic category had it been broken down this way. But if the applying organization considered it a human rights project, then we had to compare it on effects in that category (which we framed as the likelihood of protecting people from abuse; this was the hardest category, as there is less EA work on this subject), and there it did not do much while spending a lot of money. In any case, this is only relevant for the contest. We tried to run a fair one, but that wasn't the ultimate objective: the contest mattered to us only as a way to identify cost-effective projects we would like to support further, and it was clear this wasn't one of them.

This is great! I think it's extremely important and underrated (dare I say 'neglected'?) work to identify and shift resources towards more effective charities in smaller contexts, even if those charities are unlikely to be the most globally effective.

Are you able to share more of your analysis or data? I'm curious about the proportion of charities in the categories you identify above, and what numerical/categorical values, if any, you assigned.

There is a level of confidentiality because organizations shared this in good faith and not for public dissemination.

Moreover, any numerical values were very speculative and just for the purpose of comparison. We can only confidently share a broad analysis like the one in this post.

I love this analysis. We need more frontline investigations like this!

Thank you very much, this is appreciated. Honestly, we did not know we were doing anything very useful :D The reaction to this post is very encouraging.

Thank you for this amazing write-up. I had suspected that interventions at the level of charities would not work as well as interventions at the level of funders. If funders require effectiveness, charities will care; but, except for some very newly started orgs, I've felt that institutional inertia stops them from caring about the mission, and instead they just Goodhart the things funders want or the institution has focused on. This makes me update even further away from Local Priorities Research and towards Contextualisation work, even in countries where there must be some cost-effective intervention to do. Perhaps a new cause area of "Funder Sophistication" or something is needed to drive top-down changes in attitude? Anyhow, thank you for your work and for this write-up!

Similarly to the other commenters, I just want to say thank you for what you are doing and for writing this up! This is great stuff.

Thank you, I appreciate this.

Thank you for your post! Nice to see fellow humanitarian/development workers taking the initiative to run their own research activities on local charities/projects (we are trying to do something similar but related to coordination).

Your research seems to support the notion that orgs will often prioritize trendy topics "usually" favored by funders over actual field needs.

For local NGOs at least, sadly, I'm not sure how much choice they have but to follow the current funding streams (right now climate is huge, plus a few others).

The idea of starting an NGO to do something because you genuinely thought it might be the most important thing is usually a high-income-country perspective.

Hey Nick, I agree with you on the choice aspect of it, you usually need to go where the funding (or the funding priority) is, if you are a smaller NGO. Now, the starting point for NGOs, from my point of view, is often because the founders thought it might help to solve the problem in that specific community (which might be the most important thing to them). Even if it's not aligned with current funding trends, I think the initial motivation is often rooted in addressing local/community needs (later shifting towards funding priorities mainly for sustainability - would be fun to run a small research on this).

Very interesting! I like this post, especially the specific breakdown of why many interventions might not be very cost-effective.

Thanks for doing the yeoman's work of building a chapter, gathering applications, reviewing them thoroughly with local context, and sharing your findings here! I'm sorry to hear that you found most of the applications to be of low quality, and I hope that, in time, excellent locally organized charities emerge.

A couple questions that would help me get a fuller picture:

Hi,