Note: A linkpost from my blog. Epistemic status: A confused physicist trying to grapple with philosophy, and losing.

Effective Altruism is about doing as much good as possible with a given amount of resources, using reason and evidence. This sounds very appealing, but there’s an age-old problem: what counts as “the most good”? Despite the large focus on careful reasoning and rigorous evidence within Effective Altruism, I speculate that many people decide what is “the most good” based largely on moral intuitions.[1]

I should also point out that these moral dilemmas don’t just plague Effective Altruists or those who subscribe to utilitarianism. These thorny moral issues apply to everyone who wants to help others or “do good”. As Richard Chappell neatly puts it, these are puzzles for everyone. If you want to cop out, you could reject any philosophy where you try to rank how bad or good things are, but this seems extremely unappealing. Surely if we’re given the choice between saving ten people from a terrible disease like malaria and ten people from hiccups, we should be able to easily decide that one is worse than the other? Anything else seems very unhelpful to the world around us, where we allow grave suffering to continue because we don’t think comparing “bads” is possible.

To press on, let’s take one concrete example of a moral dilemma: How important is extending lives relative to improving lives? Put more simply, given limited resources, should we focus on averting deaths from easily preventable diseases or increasing people’s quality of life?

This is not a question that one can easily answer with randomised controlled trials, meta-analyses and other traditional forms of evidence! Despite this, it might strongly affect what you dedicate your life to working on, or the causes you choose to support. Happier Lives Institute have done some great research looking at this exact question and no surprises - your view on this moral question matters a lot. When looking at charities that might help people alive today, they find that it matters a lot whether you prioritise the youngest people (deprivationism), older children over infants (TRIA), or the view that death isn’t necessarily bad, but that living a better life is what matters most (Epicureanism). For context, the graph below shows the relative cost-effectiveness of various charities under different philosophical assumptions, using the metric WELLBYs, which take into account the subjective experiences of people.

So, we have a problem. If this is a question that could affect what classifies as “the most good”, and I think it’s definitely up there, then how do we proceed? Do we just do some thought experiments, weigh up our intuitions against other beliefs we hold (using reflective equilibrium potentially), incorporate moral uncertainty, and go from there? For a movement based on doing “the most good”, this seems very unsatisfying! But sadly, I think this problem rears its head in several important places. To quote Michael Plant (a philosopher from the University of Oxford and director of Happier Lives Institute):

“Well, all disagreements in philosophy ultimately come down to intuitions, not just those in population ethics!”

To note, I think this is very different from empirical disagreements about doing “the most good”. For example, Effective Altruism (EA) is pretty good at using data and evidence to get to the bottom of how to do a certain kind of good. One great example is GiveWell, who have an extensive research process, drawing mostly on high-quality randomised controlled trials (see this spreadsheet for the cost-effectiveness of the Against Malaria Foundation) to find the most effective ways to help people alive today. EA tries to apply this empirical problem solving to most things (e.g. Animal Charity Evaluators, Giving Green for climate donors, etc.) but I think it breaks down for some of the most important questions.

In an article I wrote for Giving What We Can a while back, I tried to sketch out some different levels of research (see graphic below). Roughly, I would define the categories as:

- Worldview research: Research into what or who matters most in the world.

- Example: How much should we value humans relative to animals? Additionally, what specific characteristics do we find morally relevant?

- Cause prioritisation: Exploring, understanding, and comparing the value of different cause areas; and considering and estimating the relative value of additional resources devoted to each in a cause-neutral way.

- Example: How important, neglected, and tractable is improving global poverty compared to voting reform?

- Intervention prioritisation: Using evidence to determine the best interventions within a certain cause.

- Example: Is policy advocacy best done via directly lobbying policymakers, encouraging the public to apply political pressure, or by releasing high-quality research?

- Charity evaluation: Recommending charities based on the effectiveness of their programmes.

- Example: Is Against Malaria Foundation or GiveDirectly a more effective way to help people alive now?

In essence, depending on the kind of question you ask, I think an empirical approach can work really well, or it can pretty much fail to give you a concrete answer.

Below I’ll go through some of the, in my opinion, hard-to-answer moral dilemmas that could significantly affect what people consider to be the most valuable thing to work on.

1. The moral value of animals

One clear moral quandary for me is the moral value of different animals, both relative to each other and to humans. I think this question is very important, and could be the deciding factor on whether you work on, for example, global poverty or animal welfare. To illustrate this, Vasco Grilo estimates that under certain assumptions, working on animal welfare could be 10,000 times more effective than giving to GiveWell top charities, which is probably one of the best ways to help people alive today. However, this estimate is somewhat crazily high as the author uses a moral weight for chickens being twice as important as humans, which is more than most people would say! However, even valuing a chicken’s life 1000x less than a human’s life, you still get the fact that working on animal welfare might be 10x better than most global development charities in reducing suffering.
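As a rough sanity check on that last figure, here is a minimal sketch that assumes the cost-effectiveness ratio scales linearly with the moral weight given to a chicken. That linear scaling and the function name `scaled_multiplier` are illustrative assumptions, not necessarily how the original estimate was built.

```python
from fractions import Fraction

def scaled_multiplier(base_multiplier, base_weight, new_weight):
    """Rescale an effectiveness multiplier to a different moral weight.

    Assumes (hypothetically) that the multiplier is proportional to
    the moral weight assigned to the animal.
    """
    return base_multiplier * new_weight / base_weight

# Figures quoted in the text: ~10,000x at a chicken weight of 2 (relative to a human).
base_multiplier = 10_000
base_weight = Fraction(2)

# A chicken's life valued at 1/1000th of a human's.
sceptical_weight = Fraction(1, 1000)

multiplier = scaled_multiplier(base_multiplier, base_weight, sceptical_weight)
print(float(multiplier))  # 5.0
```

Under this naive scaling the multiplier comes out at around 5x, which is the same order of magnitude as the roughly 10x mentioned above.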

This seems quite wild to me. Personally, I think it’s very plausible that a chicken’s life is over 1/1000th as morally relevant as a human life. Given that we have pretty good evidence that farmed animals have the capacity to feel pain, sadness and form complex social bonds, our treatment of animals seems like a grave injustice. In this case, ending factory farming seems really important. Animal welfare looks even more pressing if you value the lives of the vast numbers of fish, shrimp or insects who are suffering. Just looking at fish, there are up to 2.3 trillion individual fish caught in the wild, excluding the billions of farmed fish.

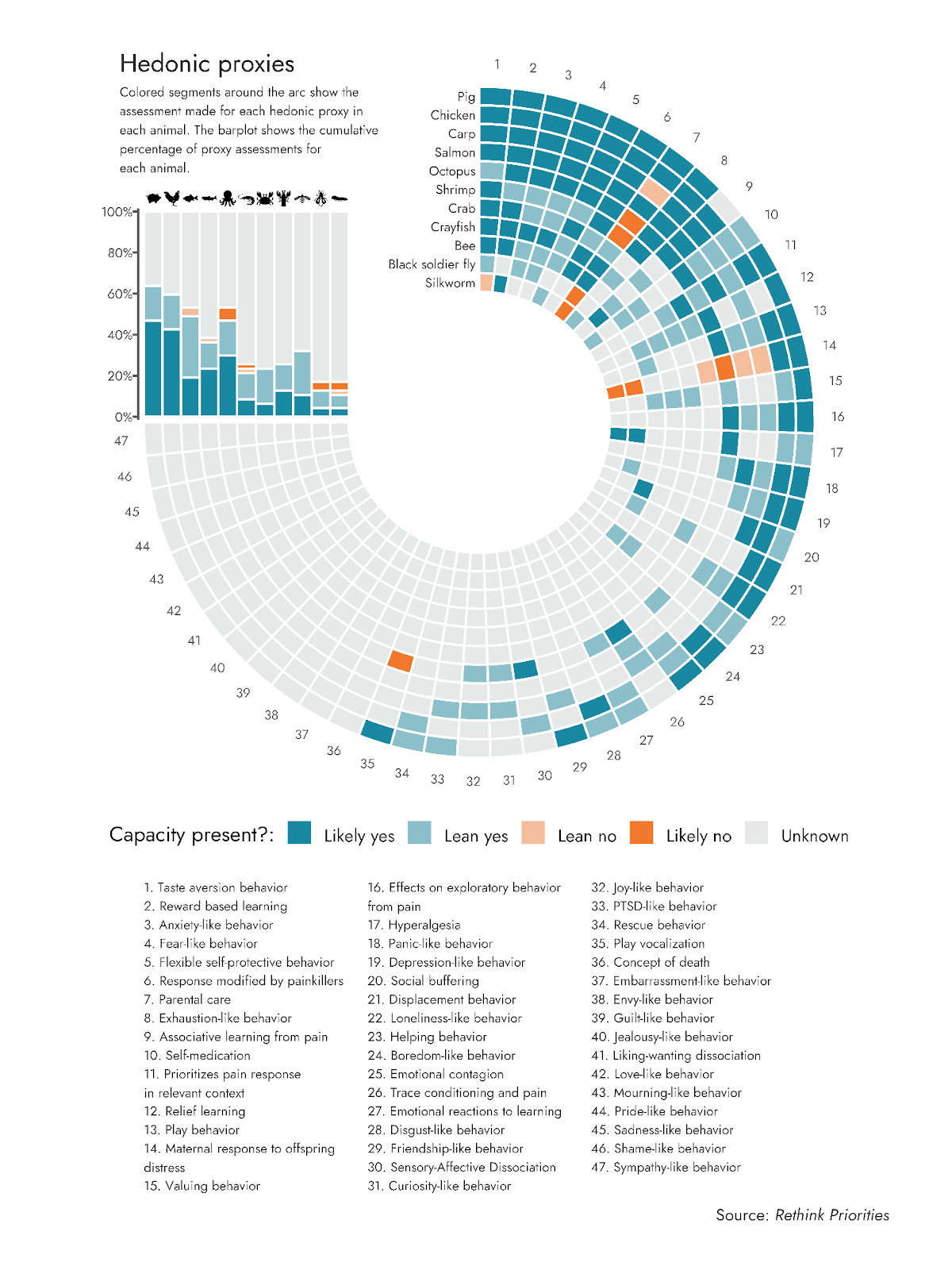

Rethink Priorities are doing some incredibly informative research on this very topic of understanding the cognitive and hedonic (i.e. relevant to their ability to feel pain or pleasure) abilities of different animals, which can be seen below. In short, there is already quite good scientific evidence that animals we farm in huge numbers have very many morally relevant capacities, whether that’s taking actions to reduce pain or exhibiting depression-like behaviour. Even research on insects shows that there is a high possibility that they can experience pain, despite us giving them almost no moral consideration.

However, a lot of people might disagree with me (largely based on moral intuitions) on the relative importance of a chicken’s life vs a human life. So, can we get a more empirical answer on the moral value of these different animals?

We can definitely give it a good go, using neuron counts, encephalisation quotients, intensity of animal experiences and other components that lead to moral weights (i.e. the relative moral worthiness of various animals, based on a range of factors).[2] However, moral weights might never paint a perfect picture of the relative importance of different animals, and how they compare to humans. Understanding human consciousness is hard enough, let alone the consciousness of beings we understand much less (see here for more discussion). Overall I think most humans, including some EA do-gooders, may not be convinced by speculative-seeming estimates of the moral value of various animals, or simply not have the time or will to read extensively on the subject, and just revert to their own intuitions.

So this is one unresolved moral quandary that might determine whether you should focus on human issues vs animal issues. Another important moral question might be:

2. Population ethics (and person-affecting views)

Population ethics is the study of the ethical problems arising when our actions affect who is born and how many people are born in the future (thanks Wikipedia). I’m told this is a particularly thorny branch of moral philosophy that most academic philosophers don’t even tackle until PhD-level! Despite this, I’ll attempt to address at least one fundamental open question in this field, around person-affecting views.

Person-affecting views claim that an act can only be bad if it is bad for someone. Out of this falls the neutrality principle, which claims (Greaves, 2017):

“Adding an extra person to the world, if it is done in such a way as to leave the well-being levels of others unaffected, does not make a state of affairs either better or worse”

What does this actually mean? We can borrow a slogan from Jan Narveson, who says “We are in favour of making people happy, but neutral about making happy people”. To clarify further, this view prioritises improving the lives of existing people, but places no positive moral value on creating additional humans, even if they go on to have flourishing happy lives.

Why does this matter? Rejecting person-affecting views, and the principle of neutrality, is required for (strong) longtermism.[3] For those unfamiliar with longtermism, it's the view that protecting the interests of future generations should be a key moral priority of our time. One of the key claims of longtermism is that future people matter morally, despite them not yet being born. However, this is incompatible with person-affecting views, which place no moral value on the interests of future generations and by default, creating additional future people. Therefore, this seems like an important moral question to resolve, given the rise of longtermism within popular culture.

Sadly, there seem to be no definitive answers on whether or not one should hold person-affecting views. There are some convincing arguments for person-affecting views, and some equally convincing counterarguments.[4] A disclaimer here is that I’m not a philosopher, so maybe there are bulletproof moral arguments why you should reject or endorse person-affecting views. However, I’m sceptical that they are as convincing as empirical arguments, leading again to many people relying on moral intuitions (and/or deferring to certain trusted individuals) to decide on who matters the most morally. Michael Dickens puts it much more strongly than I might, saying that:

“Most disagreements between professional philosophers on population ethics come down to disagreements about intuition…But none of them ultimately have any justification beyond their intuition.”

I think (and I’ve been informed!) that there’s a lot more to philosophy debates than just intuitions (e.g. finding edge cases where things break down, interrogating certain intuitions, discounting them if they seem like a bias, etc.) but I still think there’s a grain of truth in here.

Ultimately, even if you’re sympathetic to person-affecting views, there might be other good reasons to focus on traditionally longtermist issues, such as reducing existential risks to humanity (e.g. so we don’t potentially go extinct in the next century, see here and here).

With population ethics done and dusted (wait, did I even mention the Total vs Average debate?), we move on to our next moral quandary...

3. Suffering-focused ethics

How important is reducing suffering relative to increasing happiness? If given the offer, would you trade one hour of the worst suffering for one hour of the best pleasure? If not, at how many hours of the best pleasure would you make this trade? Many people I’ve spoken to wouldn’t take the initial trade, and only take it when the pay-offs get very large (e.g. 10,000 hours of the best pleasure for one hour of the worst suffering).

This might be because we find it extremely hard to conceptualise what the best pleasure could feel like. The worst suffering feels easier to extrapolate, usually based on previous physical pain we’ve suffered. Still, there doesn’t seem to be a “right” answer to this question, leading people to diverge quite wildly on very fundamental moral questions.

In one experimental study asking survey participants this very question, the authors find that people weigh suffering more than happiness. Tangibly, survey participants said that more happy than unhappy people were needed for a population to be net positive. The diagram below shows that people, on average, would need a population with approximately 61% of all individuals being happy (and 39% unhappy) for the population to be net positive.
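One way to read the 61% figure is as an implied exchange rate between suffering and happiness. The sketch below is a back-of-the-envelope calculation, not the study's own analysis. It assumes each happy person counts as +1, each unhappy person counts as -w, and that a population is net positive when the sum is above zero. The break-even point is then p = w(1 - p), so w = p / (1 - p). The function name is hypothetical.

```python
def implied_suffering_weight(happy_share):
    """Weight on one unhappy person relative to one happy person.

    Assumes a simple linear sum: happy_share * 1 - unhappy_share * w = 0
    at the break-even point, so w = happy_share / unhappy_share.
    """
    unhappy_share = 1 - happy_share
    return happy_share / unhappy_share

# Break-even share of happy people reported in the text.
weight = implied_suffering_weight(0.61)
print(round(weight, 2))  # 1.56
```

On these assumptions, respondents on average treat one unit of unhappiness as weighing roughly one and a half times as much as one unit of happiness.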

To reiterate how subscribing to suffering-focused ethics might influence your moral and career priorities, I think it would lead people to try to reduce risks of astronomical suffering (s-risks) or ending factory farming (as animals often suffer terribly).

4. Decision Theory / Expected value

This one is slightly cheating, as it’s not a moral intuition. Instead it refers to an epistemic intuition (i.e. relating to our understanding of knowledge) about how we should deal with tiny probabilities of enormous value. In short, how do we deal with situations that offer huge moral rewards, like hundreds of trillions of beings leading flourishing lives, but only offer a 0.00000001% chance of this being reality?

This is similar to the wager offered in Pascal’s mugging, a thought experiment where a mugger offers 1,000 quadrillion happy days of life in return for Pascal, who is being mugged, handing over their wallet. Even though Pascal thinks there is only a 1 in 10 quadrillion chance of the mugger actually following through, this trade looks pretty good in expectation: a 1 in 10 quadrillion probability multiplied by 1,000 quadrillion happy days = 100 happy days of life.[5]
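The arithmetic can be checked in a few lines. This is just a sketch of the expected value calculation using the numbers from the thought experiment as stated above. Exact fractions are used so that the tiny probability does not get lost to floating-point rounding.

```python
from fractions import Fraction

QUADRILLION = 10**15

def expected_value(probability, payoff):
    """Expected payoff of a gamble with a single possible prize."""
    return probability * payoff

# Pascal's credence that the mugger follows through: 1 in 10 quadrillion.
p_follow_through = Fraction(1, 10 * QUADRILLION)

# The mugger's offer: 1,000 quadrillion happy days of life.
happy_days_offered = 1_000 * QUADRILLION

ev_days = expected_value(p_follow_through, happy_days_offered)
print(ev_days)  # 100
```

So a strict expected value maximiser would hand over the wallet whenever it is worth less to them than 100 happy days of life.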

This is discussed in a great paper by Nick Beckstead and Teruji Thomas, aptly titled “A paradox for tiny probabilities and enormous values”. They make some important claims:

- Reckless theories recommend risking arbitrarily great gains at arbitrarily long odds (1 in 10 quadrillion) for the sake of enormous potential (1,000 quadrillion happy days of life).

- Timid theories permit passing up arbitrarily great gains to prevent a tiny increase in risk. For example, if we had a 10% chance of winning $100 or a 9% chance of winning $100,000,000, one would hope we pick the latter option! But timid theories are risk-averse, so they want to maximise the probability of winning something, rather than maximising expected returns.

- The third way to resolve this issue is rejecting transitivity, which means saying that in this case, if A=B and B=C, it is not necessarily true that A=C. This seems quite weird, but Lukas Gloor makes some good points about how population ethics might be different to other ethical domains, such that comparing different population states is not very straightforward.
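To make the contrast concrete, the two gambles from the timid-theories example can be compared under two decision rules. This is only an illustrative sketch: the "maximise the chance of winning something" rule is a caricature that matches the description above, not the formal definition of timidity used in the paper, and the names are hypothetical.

```python
from fractions import Fraction
from typing import NamedTuple

class Gamble(NamedTuple):
    name: str
    win_probability: Fraction
    prize_dollars: int

def expected_value(gamble):
    """Probability of winning multiplied by the prize."""
    return gamble.win_probability * gamble.prize_dollars

def pick_by_expected_value(gambles):
    """Rule 1: choose the gamble with the highest expected return."""
    return max(gambles, key=expected_value)

def pick_by_win_probability(gambles):
    """Rule 2 (caricature of timidity): choose the likeliest win."""
    return max(gambles, key=lambda g: g.win_probability)

small_prize = Gamble("10% chance of $100", Fraction(10, 100), 100)
large_prize = Gamble("9% chance of $100,000,000", Fraction(9, 100), 100_000_000)
gambles = [small_prize, large_prize]

print(expected_value(small_prize))            # 10
print(expected_value(large_prize))            # 9000000
print(pick_by_expected_value(gambles).name)   # 9% chance of $100,000,000
print(pick_by_win_probability(gambles).name)  # 10% chance of $100
```

The second rule gives up an expected $9,000,000 for an expected $10, purely to gain one extra percentage point of winning anything at all.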

To greatly condense this issue, I recommend reading this summary of the above paper. The author, Tomi Francis, sums up the three ways to square this paradox and concludes:

“None of these options looks good. But then nobody said decision theory was going to be easy.”

Paint me a picture

These were just a few examples of moral issues that I think could significantly impact someone’s cause prioritisation, or otherwise what issue they think is the most important to work on. The (nonexhaustive) list below highlights some other potential moral quandaries I left out for the sake of brevity:

- To what extent should we prioritise helping the people who are the worst off (also known as prioritarianism)?

- What things do we actually define as good? Is it happiness, having our preferences met, or some combination of intangible things like creativity, relationships, achievements and more? See here for more discussion on this topic.

- How important is welfare vs equality and justice?

- To what degree can we reliably influence the trajectory of the long-term future? (This is not a moral quandary, but an epistemic one.)

- How much confidence do you place on different moral philosophies e.g. classical utilitarianism vs virtue ethics vs deontology vs negative utilitarianism? In other words, what would your moral parliament look like?

- How much do you value saving a life relative to doubling income for a year? See GiveWell’s thoughts on moral weights here.

- Are you a moral realist or anti-realist? In other words, do you believe there are objective moral truths?

All in all, I think there’s probably quite a few thorny moral and epistemic questions that make us value very different things, without the ability to confidently settle on a single correct answer. This means that a group of dedicated do-gooders, such as Effective Altruists, searching for the single best cause to support, can often end up tackling a wide range of issues. As a very naive example, I demonstrate this in the flowchart below, where even just tackling two of these questions can lead to some pretty big divergence in priorities.

Now this flowchart is massively simplified (it only addresses 2 thorny questions rather than 20!), and ignores any discussion of neglectedness and tractability of these issues, but I think it’s still illustrative.[6] It highlights how one can take different yet reasonable views on various moral and epistemic quandaries, and end up focusing on anything from farm animal welfare to promoting space colonisation. It also shows that some causes may look good from a variety of intuitions, or that similar answers can lead to a range of cause areas (where epistemic considerations may then dominate, e.g. how much do you value a priori reasoning vs empirical evidence).

For a nonsensical (and unserious) example of what it might look like to do this for a few more challenging questions - see below!


Where do we go from here?

I think this is probably one in a spate of recent articles promoting greater moral pluralism (within Effective Altruism), in that we should diversify our altruistic efforts across a range of potentially very important issues, rather than going all in on one issue.

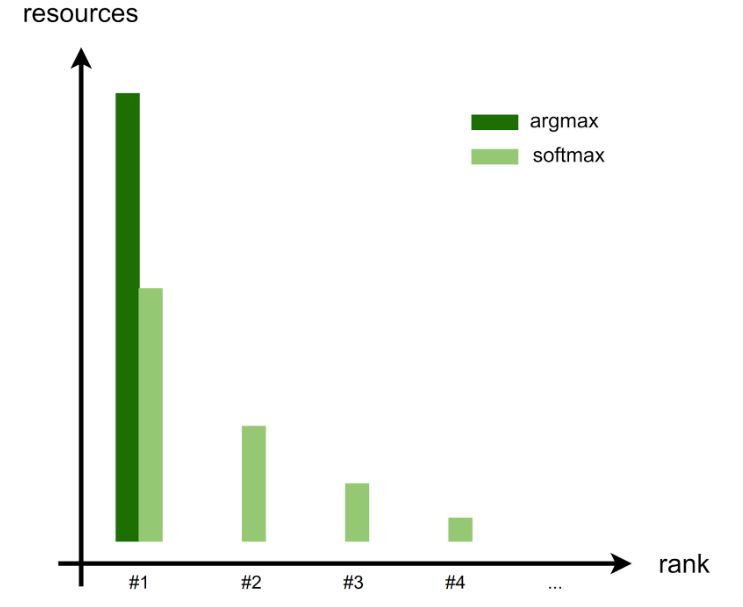

Jan Kulveit and Gavin make a similar point when saying we can move towards ‘softmaxing’ rather than going all in on one issue, as seen in their image below.
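For readers unfamiliar with the term, here is a minimal sketch of the difference between maximising and softmaxing when splitting resources across causes. It uses the standard softmax function. The cause names, scores and temperature are made up for illustration and are not taken from Kulveit and Gavin's post.

```python
import math

def argmax_allocation(scores):
    """Put 100% of resources into the single highest-scoring option."""
    best = scores.index(max(scores))
    return [1.0 if i == best else 0.0 for i in range(len(scores))]

def softmax_allocation(scores, temperature=1.0):
    """Spread resources in proportion to exp(score / temperature).

    Higher temperatures give a more even split; as the temperature
    approaches zero the result approaches the argmax allocation.
    """
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical estimates of how good each cause is (arbitrary units).
causes = ["cause A", "cause B", "cause C"]
scores = [1.0, 0.8, 0.5]

hard = argmax_allocation(scores)
soft = softmax_allocation(scores, temperature=0.5)

for cause, h, s in zip(causes, hard, soft):
    print(f"{cause}: argmax {h:.0%}, softmax {s:.0%}")
```

With the softmax rule the best-looking cause still gets the largest share, but a small error in the scores only shifts the split slightly instead of flipping the whole allocation.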

Holden Karnofsky also talks about why maximising can be perilous, especially when you’re not 100% certain you’re maximising for the right thing. This leads me to the conclusion that being humble in your beliefs about what is “the most good” is a core component of actually doing good. Given the likelihood that we’ve erred on the wrong side of at least one of many difficult moral issues, it feels quite unlikely that we can be very confident we’re actually working on “the most important thing”. Open Philanthropy puts this approach into practice using worldview diversification, which I highly recommend checking out if you don’t already know about it.

So one thing we can do is think hard about moral uncertainty, and different approaches to integrating conflicting moral worldviews. I’m certainly no expert so I feel like it’s time for me to go down a rabbit hole, and I’ll probably check out these resources which look interesting:

- Parliamentary Approach to Moral Uncertainty - Toby Newberry & Toby Ord

- A talk about the book: Moral Uncertainty - by Krister Bykvist, Toby Ord and William MacAskill

- Alasdair MacIntyre, with an opposing view, seems to argue that making moral trade-offs is very hard, due to existing wedded beliefs.

- A bunch of the interesting content under moral uncertainty on the EA Forum

Another thing we can do is pursue causes that look promising from a range of different worldviews. For example, Will MacAskill calls clean energy innovation a win-win-win-win-win-win, for the benefits it has on: Saving lives from air pollution, reducing risks from climate change, boosting positive technological progress, reducing energy poverty in poorer countries, preserving coal for future generations (if we have serious societal collapse) and reducing the chance of authoritarian dictators relying on natural resources to grow huge wealth without listening to the public (okay this one is a bit more tenuous).

I’m not sure if there are other issues that can provide a win-win-win-win-win-win, but that sure seems worth exploring.

[1] There’s probably also a good dose of deferring thrown in there too.

[2] If you want to read on why neuron counts aren’t a useful proxy for moral weights, I recommend reading this work by Rethink Priorities.

[3] See discussions around strong vs weak longtermism here.

[4]

[5] See some more background in expected value calculations here.

[6] For example, there are plenty more epistemic considerations to cause selection here (e.g. a priori reasoning vs empirical data).

EDIT: Maybe what you mean is that differences in empirical beliefs are not as important, perhaps because we don't disagree so much on empirical beliefs in a way that significantly influences prioritization? That seems plausible to me.

Thanks for writing this! I agree that moral (or normative) intuitions are pretty decisive, although I'd say empirical features of the world and our beliefs about them are similarly important, so I'm not sure I agree with "mostly moral intuition". For example, if chickens weren't farmed so much (in absolute and relative numbers), we wouldn't be prioritizing chicken welfare, and, more generally, if people didn't farm animals in large numbers, we wouldn't prioritize farm animal welfare. If AGI seemed impossible or much farther off, or other extinction risks seemed more likely, we would give less weight to AI risks relative to other extinction risks. Between the very broad "direct" EA causes (say global health and poverty, animal welfare, x-risks (or extinction risks and s-risks, separately)), what an individual EA prioritizes seems to be mostly based on moral intuition, but that we're prioritizing these specific causes at all (rather than say homelessness or climate change), and the prioritization of specific interventions or sub-causes depends a lot on empirical beliefs.

Also, a nitpick on person-affecting views:

The principle of neutrality is compatible with concern for future people and longtermism (including strong longtermism) if you reject the independence of irrelevant alternatives or transitivity, which most person-affecting views do (although not all are concerned with future people). You can hold wide person-affecting views, so that it's better for a better off person to be born than a worse off person, and we should ensure better off people come to exist than people who would be worse off, even if we should be indifferent to whether any (or how many) such additional people exist at all. There's also the possibility that people alive today could live for millions of years.

Asymmetric person-affecting views, like Meacham's to which you link, reject neutrality, because bad lives should be prevented, and are also compatible with (strong) longtermism. They might recommend ensuring future moral patients are as well off as possible or reducing s-risks. See also Thomas, 2019 for asymmetric views that allow offsetting bad lives with good lives, but not outweighing bad lives with good lives, and section 6 for practical implications.

Finally, with respect to the procreation asymmetry in particular, I think Meacham's approach offers some useful insights into how to build person-affecting views, but I think he doesn't really offer much defense of the asymmetry (or harm-minimization) itself and instead basically takes it for granted, if I recall correctly. I would recommend actualist accounts and Frick's account. Some links and discussion in my comment here.

Thanks for the thoughtful reply Michael! I think I was thinking more along what you said in your edit: empirical beliefs are very important, but we (or EAs at least) don't really disagree on them e.g. objectively there are billions of chickens killed for food each year. Furthermore, we can actually resolve empirical disagreements with research etc such that if we do hold differing empirical views, we can find out who is actually right (or closer to the truth). On the other hand, with moral questions, it feels like you can't actually resolve a lot of these in any meaningful way to find one correct answer. As a result, roughly holding empirical beliefs constant, moral beliefs seem to be a crux that decides what you prioritise.

I also agree with your point that differing moral intuitions probably lead to different views on worldview prioritisation (e.g. animal welfare vs global poverty) rather than intervention prioritisation (although this is also true for things like StrongMinds vs AMF).

Also appreciate the correction on person-affecting views (I feel like I tried to read a bunch of stuff on the Forum about this, including a lot from you, but still get a bit muddled up!). Will read some of the links you sent and amend the main post.

Thank you for this. I would only caution that we should be vigilant when it comes to poor reasoning disguised as “intuition”. Most EAs would reject out of hand someone’s intuition that people of a certain nationality or ethnic group or gender matter less, even if they claim that it’s about suffering intensity as opposed to xenophobia, racism or sexism. Yet intuitions about the moral weight of nonhuman animals that often seem to have been plucked out of thin air, without any justification provided, seem to get a pass, especially when they’re from “high-status” individuals.

It's a pleasure to see you here, Sir.

Thanks for the post!

Adding on to what Michael said (and disagreeing a bit): when it comes to what people prioritize, I think this post might implicitly underrate the importance of disagreeing beliefs about the reality of the world relative to the importance of moral disagreements. (I think Carl Shulman makes a related point in this podcast and possibly elsewhere.) In particular, I think a good number of people see empirical beliefs as some of their key cruxes for working on what they do (e.g.), and others might disagree with those beliefs.

Potentially a more minor point: I also think people use some terminology in this space in different ways, which might cause additional confusion. For instance, you define "worldview research" as "Research into what or who matters most in the world," and write "Disagreements here are mostly based on moral intuitions, and quite hard to resolve" (in the chart). This usage is fairly common (e.g.). But in other places, "worldview" might be used to describe empirical reality (e.g.).

Thank you for this thoughtful post! I am just about to start a PhD in philosophy on the psychology and metaethics of well-being, so I am fairly familiar with the research literature on this topic in particular. I totally agree with you that foundational issues should be investigated more deeply in EA circles. To me, it is baffling that there is so little discussion of meta-ethics and the grounds for central propositions to EA.

You are right that many philosophers, including some who write about method, think that ethics is about weighing intuitions in reflective equilibrium. However, I think that it is seriously misleading to state this as if it is an undisputed truth (like you do with the quote from Michael Plant). In the 2020 Philpapers survey, I think about half of respondents thought intuitions-based philosophy was the most important. However, the most cited authors that do contemporary work in philosophical methodology, e.g. Timothy Williamson and Herman Cappelen, don't think intuitions play important roles at all, at least not if intuitions are thought to be distinct from beliefs.

I think that all the ideas you mention concerning how to move forward look very promising. I would just add "explore meta-ethics", and in particular "non-intuitionism in ethics". I think that there are several active research programs that might help us determine what matters, without relying on intuitions in a non-critical way. I would especially recommend Peter Railton's project. I have also written about this and I am going to write about it in my PhD project. I would be happy to talk about it anytime!

Over time, I've come to see the top questions as:

In one of your charts you jokingly ask, "What even is philosophy?" but I'm genuinely confused why this line of thinking doesn't lead a lot more people to view metaphilosophy as a top priority, either in the technical sense of solving the problems of what philosophy is and what constitutes philosophical progress, or in the sociopolitical sense of how best to structure society for making philosophical progress. (I can't seem to find anyone else who often talks about this, even among the many philosophers in EA.)

Cluster thinking could provide value. Not quite the same as moral uncertainty, in that cluster thinking has broader applicability, but the same type of "weighted" judgement. I disagree with moral uncertainty as a personal philosophy, given the role I suspect that self-servingness plays in personal moral judgements. However, cluster thinking applied in limited decision-making contexts appeals to me.

A neglected area of exploration in EA is selfishness, and self-servingness along with that. Both influence worldview, sometimes on the fly, and are not necessarily vulnerable to introspection. I suppose a controversy that could start early is whether all altruistic behavior has intended selfish benefits in addition to altruistic benefits. Solving the riddle of self-servingness would be a win-win-win-win-win-win.

Self-servingness has signs that include:

but without prior knowledge useful to identify those signs, I have not gotten any further than detecting self-servingness with simple heuristics (for example, as present when defending one's vices).