James Herbert

Bio

I'm currently a co-director at EA Netherlands (with Marieke de Visscher). We're working to build and strengthen the EA community here.

Before this, I worked as a consultant on urban socioeconomic development projects and programmes funded by the EU. Before that, I studied liberal arts (in the UK) and then philosophy (in the Netherlands).

Hit me up if you wanna find out about the Dutch EA community! :)

Posts 4

Comments 34

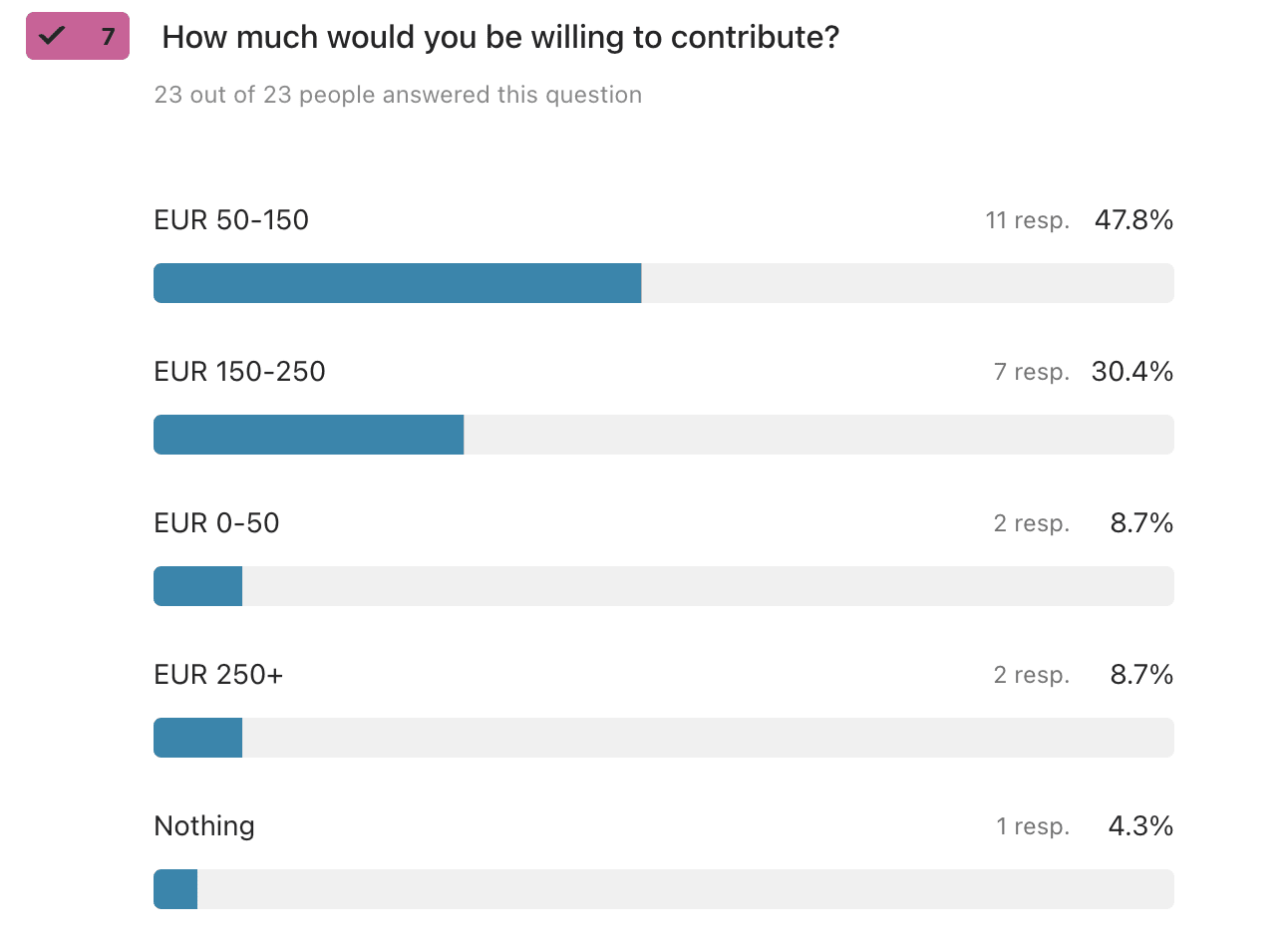

Yes, good point! We haven't done this yet (apart from expecting participants to cover their own travel costs, which is easy in NL with its good public transport), BUT for our 'EA professionals' retreat last autumn we did ask people to indicate how much they'd be willing to pay. I've included the results below.

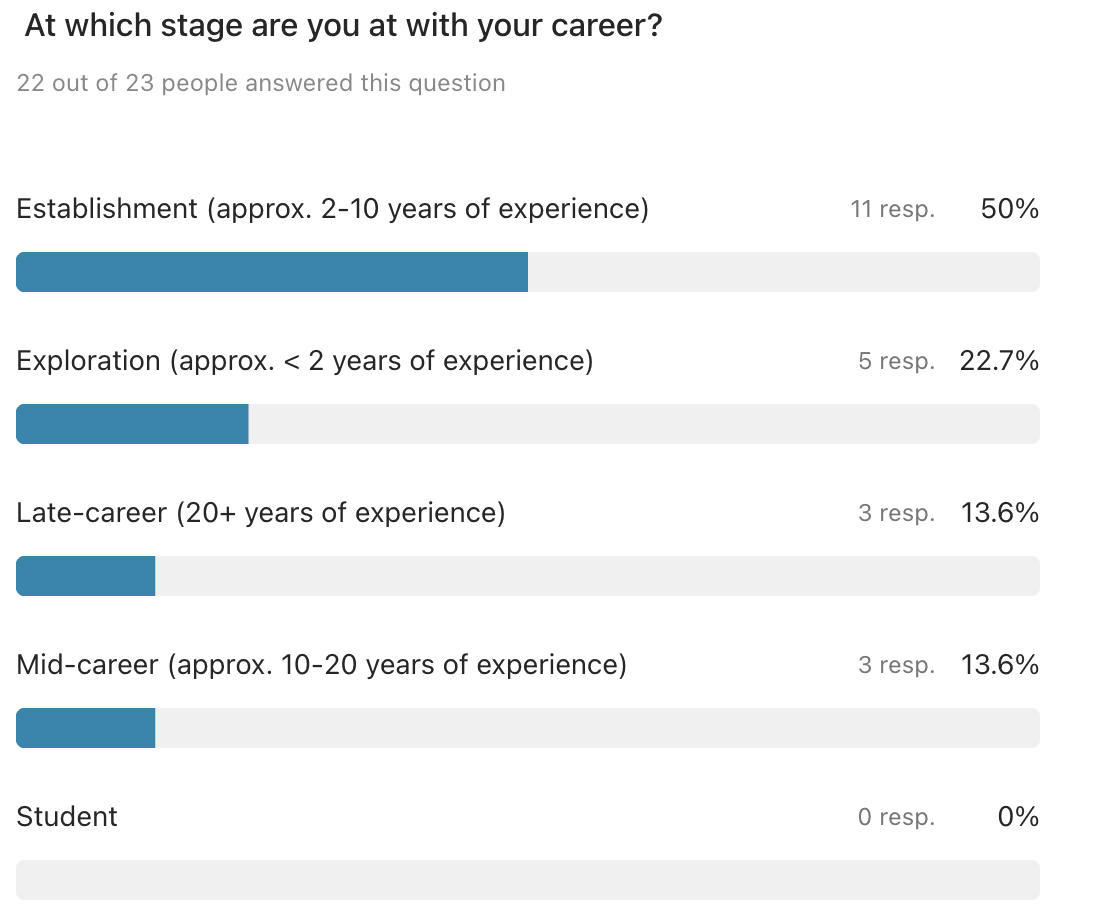

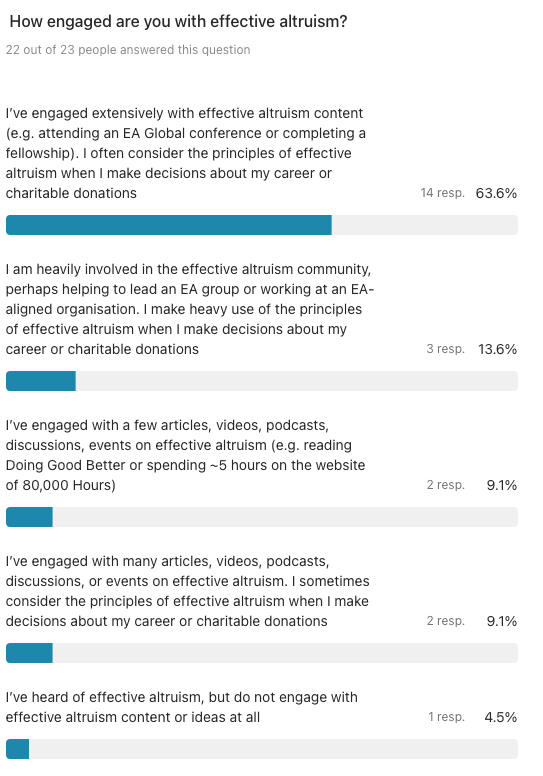

And to give an idea of the respondents, here's some more data:

I asked ChatGPT to analyse the text's sentiment.

What is the text's sentiment re effective altruism?

The sentiment towards effective altruism (EA) in the provided text is primarily skeptical and critical. The text associates effective altruism with the AI safety movement, describing them as intertwined. It suggests that EA has been recruiting ambitious young people on elite college campuses, but questions the movement's focus, methods, and associations.

Here are a few points from the text that highlight the skeptical sentiment toward effective altruism:

- The text mentions that EA initially focused on global poverty but then shifted its focus to rogue AI, with the implication that this shift may not be entirely grounded in current, practical concerns. This suggests a lack of consistency in the movement's goals.

- The text notes that extreme practitioners within EA began promoting an idea called “longtermism,” which is portrayed as prioritizing the lives of hypothetical future beings over present-day suffering. This is presented as a rather abstract and potentially disconnected concern.

- The text mentions that EA is associated with controversial figures and ideas, including a scandal involving one of its largest donors, Bankman-Fried, and a backlash against Nick Bostrom, an influential figure in EA, for controversial past writings. This suggests that the movement is connected to individuals and ideas that have faced criticism.

- The phrase “EA Kool-Aid” is used in the text, a colloquial term that implies that EA might be likened to a cult where followers are expected to accept beliefs without critical examination.

Overall, the sentiment toward effective altruism in the text is that it is a movement with questionable focus, controversial associations, and methods that may be seen as cult-like or ungrounded.

What is the text's sentiment re the field of AI safety?

The sentiment towards the field of AI safety in the provided text is mixed, with a tinge of skepticism. While the text acknowledges the importance and legitimacy of AI safety as a field, it appears to criticize its association with effective altruism and the extreme scenarios some proponents focus on.

Here are some points from the text that illustrate the sentiment towards AI safety:

- The text acknowledges that AI safety is a “legitimate field”, indicating that there is recognition of its relevance and importance.

- The description of the “superintelligent AI uprising” being an “extremely improbable scenario” reflects skepticism towards certain extreme concerns within the field. It suggests that there may be an exaggerated focus on unlikely outcomes.

- The association of AI safety with effective altruism in the text might imply that some aspects of AI safety are criticized for being too abstract or speculative, as effective altruism is portrayed with skepticism, particularly when it shifted focus to long-term, more abstract concerns like rogue AI.

- The mention of AI safety researchers not taking themselves too seriously suggests that there may be an underlying criticism or questioning of the magnitude of certain claims or concerns within the field.

In summary, the text portrays AI safety as an important and legitimate field, but questions the focus on highly speculative scenarios and criticizes its close association with the effective altruism movement, which is portrayed skeptically. The text also seems to suggest a need for a more grounded and realistic approach within the AI safety community.

Do a sentiment analysis of the text

The text provided can be broken down into various elements in terms of sentiment:

Neutral-Positive sentiment towards Paul Edwards and Steve Luby: The text introduces Paul Edwards and Steve Luby in a neutral light and showcases their dedication and positive intentions in educating young minds about potential threats to humanity.

Neutral sentiment regarding Artificial Intelligence (AI) risks: The text discusses AI risks and how the concept has gained traction over time. It doesn't take a side; rather, it presents the evolving perception of AI.

Positive sentiment towards efforts to counter rogue AI: The text illustrates a positive sentiment towards various organizations, philanthropists, and labs (such as DeepMind, OpenAI, and Anthropic) that are taking steps to prevent AI-related catastrophes by promoting AI safety and developing benign AI systems.

Negative sentiment towards the AI safety movement by critics: The text highlights criticism of the AI safety movement as unscientific and potentially distracting from other important issues. The movement is presented as potentially exaggerative and is likened to a religion by critics.

Positive sentiment towards student involvement in AI safety: The article shows positive sentiment towards the involvement of students, especially Gabriel Mukobi, in AI safety, and how they are becoming increasingly aware and motivated to act against the potential existential risks associated with AI.

Negative sentiment regarding controversial figures and ideas associated with the AI safety movement: The text shows a negative sentiment towards certain figures and ideas associated with the AI safety movement, including Effective Altruism (EA) and past controversial statements by Nick Bostrom.

Neutral-Positive sentiment towards the increasing acknowledgment of AI safety: Towards the end, the article discusses how AI safety is becoming a topic of growing interest and concern among academia and industry. This is presented in a neutral to positive light, indicating the recognition of AI safety as an area that requires attention.

Mixed sentiment among educators: The article ends with mixed sentiments among educators like Luby, who seems to be in agreement with the concerns, and Edwards, who acknowledges AI safety but still sees other issues such as climate change as more immediate threats.

In summary, the text provides a balanced view, containing neutral, positive, and negative sentiments regarding the topic of AI safety and its growing prominence in academia and the tech industry. There is a general positive sentiment towards proactive measures and student involvement, but also a significant negative sentiment related to the criticisms and controversial aspects of the AI safety movement.

Thanks for the thoughtful reply!

Distinguishing between approaches at the level of the individual

I think it gets a little tricky at the level of the individual. But with your specific example, I'd classify an E2G individual on the basis of what they give to. If they give to HLI or GPI I'd say they're field building. If they give to CEA I'd say they're doing movement support and network development.

If they just give to AMF or whatever, i.e., an org doing 'direct work', I'd say they aren't strictly speaking contributing to the specific social change EA is aiming for, viz., increasing the extent to which people use reason and evidence when trying to do good. And so I wouldn't use the classification system I've laid out in this post to describe them.[1]

But that isn't to say they aren't an EA. I wouldn't say you need to be pushing for the increased use of evidence and reason when doing good to be an EA; you just need to be adopting the approach yourself.

What does running an intro fellowship count as?

Based on little more than vibes, I'd describe running an intro fellowship as movement support rather than field building. This is because it isn't directly pushing EA forward as a research field, nor is it providing a professional level of training for future researchers. It also fits quite well into this system for measuring the progress of social (protest) movements (see page 60).

Why I think movement support and promoting the uptake of practices is currently more valuable than networking

Yes, networking is certainly important, and I get a lot of value from it too. But when I'm talking with other EAs I often find myself saying or thinking, "Have you thought about asking someone who isn't an EA for their opinion on this?", and to me that's an indicator that we spend too much time talking to each other. I also think there are lots of people who would benefit the EA movement who are not currently part of it, particularly people beyond the anglosphere and Europe.

Given these two beliefs, I think we should use more of our limited 'social change budget' on getting more people involved. This would also grow the pie, allowing us to do more of all the other stuff. To be more specific, I think we should massively scale up the intro fellowship. In 2021 about 60 people completed it in NL; in 2022 it was around 400. I think we should aim for thousands.

Re promoting the uptake of practices, personally, I've updated positively on this after seeing how often CE recommends interventions of this type (and the subsequent successes of its incubatees, e.g., LEEP).

Thanks again for your comment!

[1] For what it's worth, I'd describe them as field building in the field of global health and development (with the social change they're aiming for being something like: "improve the health and wellbeing of populations, particularly in low and middle-income countries").

Hey David, your observation that 'the dominant factors just seem to be those operating at much larger scale, rather than smaller, more targeted interventions' is a recurring theme is very interesting!

To check I understand, are you saying that things like websites, podcasts, and other scalable things seem to be having much more impact than things such as 1-1s? I'm asking because we at EA Netherlands sometimes wonder how much we ought to be investing in our website vs smaller and more targeted interventions.

Thanks for publishing this! Very helpful.

One quick thought: those retreats seem extraordinarily expensive. EA Netherlands has organised four retreats since February last year. I haven't checked the most recent one but, of the first three, the most expensive had a cost per person of EUR 260 (not including the cost of EA Netherlands spending time organising it). Granted, they were short retreats (arrive late on the Friday, leave Sunday afternoon before the evening meal), but it would be interesting to see how they compare to the events you looked at on the outcome measures you specified.

For example, our 'raw' average number of connections across all four retreats is 4.6. That's approximately 35% fewer than at the EAGx events you looked at, and 30% fewer than at the retreats you looked at, but our most expensive retreat cost approximately 80% less per person than the average CEP retreat.
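To make the comparison above concrete, here's a rough back-of-the-envelope sketch that works backwards from our figures and the stated percentage gaps to the comparator values they imply. It assumes the percentages are exact, which they aren't (they're approximations), so the outputs are only ballpark figures; the real numbers are in the report itself. The helper name `implied_baseline` is hypothetical, introduced just for this illustration.

```python
def implied_baseline(ours: float, percent_less: float) -> float:
    """Return X such that `ours` is `percent_less`% below X (hypothetical helper)."""
    return ours / (1 - percent_less / 100)


# Figures from the comment above (approximate).
avg_connections = 4.6  # our 'raw' average connections across all four retreats
max_cost_eur = 260     # cost per person of our most expensive retreat (of the first three)

# Implied comparator figures, assuming the stated percentage gaps are exact.
eagx_connections = implied_baseline(avg_connections, 35)
cep_retreat_connections = implied_baseline(avg_connections, 30)
cep_retreat_cost_eur = implied_baseline(max_cost_eur, 80)

print(f"Implied EAGx connections:        ~{eagx_connections:.1f}")
print(f"Implied CEP retreat connections: ~{cep_retreat_connections:.1f}")
print(f"Implied CEP retreat cost/person: ~EUR {cep_retreat_cost_eur:.0f}")
```

On these rough numbers, our retreats deliver roughly two-thirds of the connections at around a fifth of the cost per person, which is why I think the comparison is worth looking at more carefully.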

Thanks so much for this! Could we get country-by-country breakdowns? Privately would be fine. For example, I'd love to see how the results for the Netherlands have changed over time. I'd also like to see how different countries compare to one another on various metrics.