
Metaculus is building a team dedicated to AI forecasting

The speed, sophistication, and impact of AI development are among the most astonishing and significant developments of our lifetimes. AI promises both enormous risks and enormous opportunities for society. Join our AI forecasting team and help humanity navigate this crucial period.

Open roles include:

Machine Learning Engineer - AI Forecasting

You’ll improve the organization and searchability of our AI analyses, keep the AI-related data and thinking we rely on up to date, comprehensive, and well organized, and use modeling to deliver forecasts on a large set of questions about the trajectory of AI.

Research Analyst - AI Forecasting

You’ll engage deeply with ideas about the future of AI and its potential impacts, and share insights with the AI research and forecasting communities and with key decision makers. You’ll use crowd forecasting to help generate these insights, writing forecasting questions that are informative and revealing, facilitating forecasting tournaments, and coordinating with Pro Forecasters.

Quantitative Research Analyst - AI Forecasting

You’ll use quantitative modeling to improve our ability to anticipate the future of AI and its impact on the world, strengthen our AI-related decision-making capabilities, and enable quantitative evaluation of ideas about the dynamics governing AI progress.

You can learn about our other high-impact, open positions here.

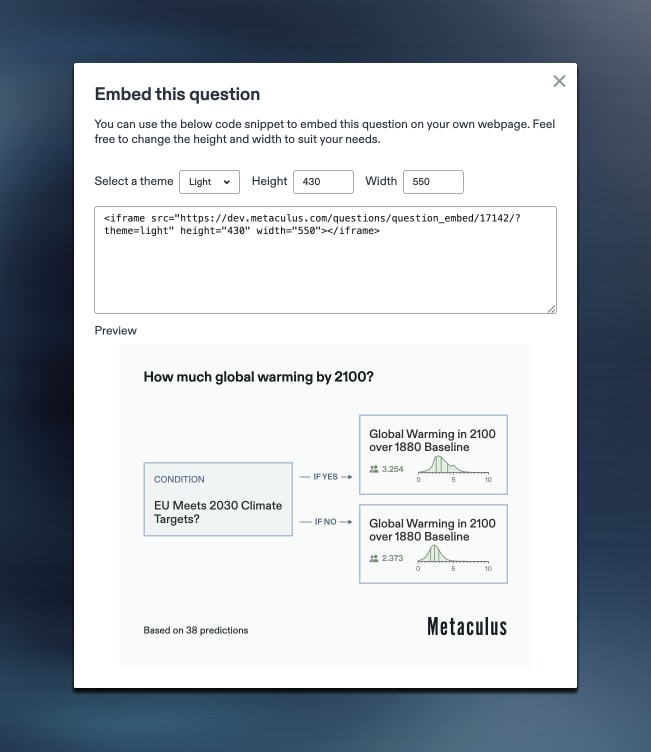

Embed Interactive Metaculus Forecasts on Your Website or Blog

Now you can share interactive Metaculus forecasts for all question types, including Question Groups and Conditional Pairs.

Just click the 'Embed' button at the top of a question page, customize the plot as needed, and copy the iframe HTML.

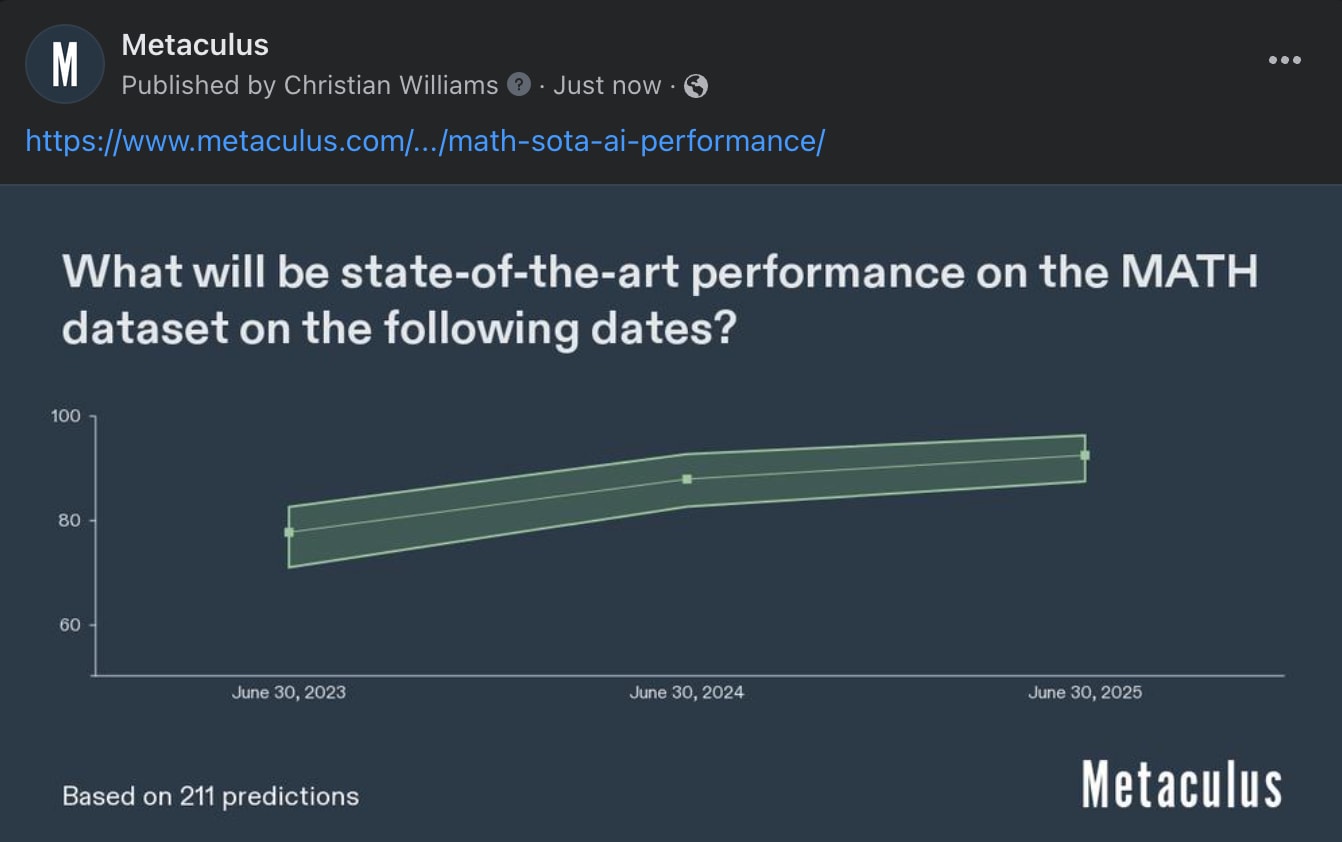

Improved Forecast Previews

Metaculus has also made it easier to share forecast preview images for more question types, on platforms like Twitter, Substack, Slack, and Facebook.

Just paste the question URL to generate a preview of the forecast plot on any platform that supports them.

To learn more about embedding forecasts & preview images, click here.

Metaculus is excited to announce the winners of the inaugural Keep Virginia Safe Tournament! This first-of-its-kind collaboration with the University of Virginia (UVA) Biocomplexity Institute and the Virginia Department of Health (VDH) delivered forecasting and modeling resources to public health professionals and public policy experts as they navigated critical decisions on COVID-19.

Congratulations to the top 3 prize winners!

"On behalf of the Virginia Department of Health, I’d like to offer our congratulations to Sergio, 2e10e122, mattvdm, and all the forecasters who participated in the Keep Virginia Safe Forecasting tournament! Because of your work, Virginia was better able to navigate the Delta and Omicron waves and prepare for the long-term impacts of COVID-19, helping us keep Virginians safe during a critical period."

—Justin Crow, Foresight & Analytics Coordinator for the Virginia Department of Health

Thank you to the forecasting community! Your predictions were integrated into VDH planning sessions and were shared with local health department staff, statewide epidemiologists, and even with the Virginia Governor’s office.

For more details on the tournament outcomes, visit the project summary.

Our successful partnership with UVA and VDH continues with the Keep Virginia Safe II Tournament, where Metaculus forecasts keep providing valuable information. Join to help protect Virginians and compete for $20,000 in prizes.

Find more information about the Keep Virginia Safe Tournament, including the complete leaderboard, here.

Join Metaculus for Forecast Friday, April 28th at 12pm ET

Are you interested in how top forecasters predict the future? Curious how other people are reacting to the forecasts in the main feed?

Join us April 28th at 12pm ET/GMT-4 for Forecast Friday!

Click here to go to Gather Town. Then take the Metaculus portal.

This Friday

Sylvain Chevalier, a seasoned forecaster and Product Manager for Metaculus, will present at this week's Forecast Friday session.

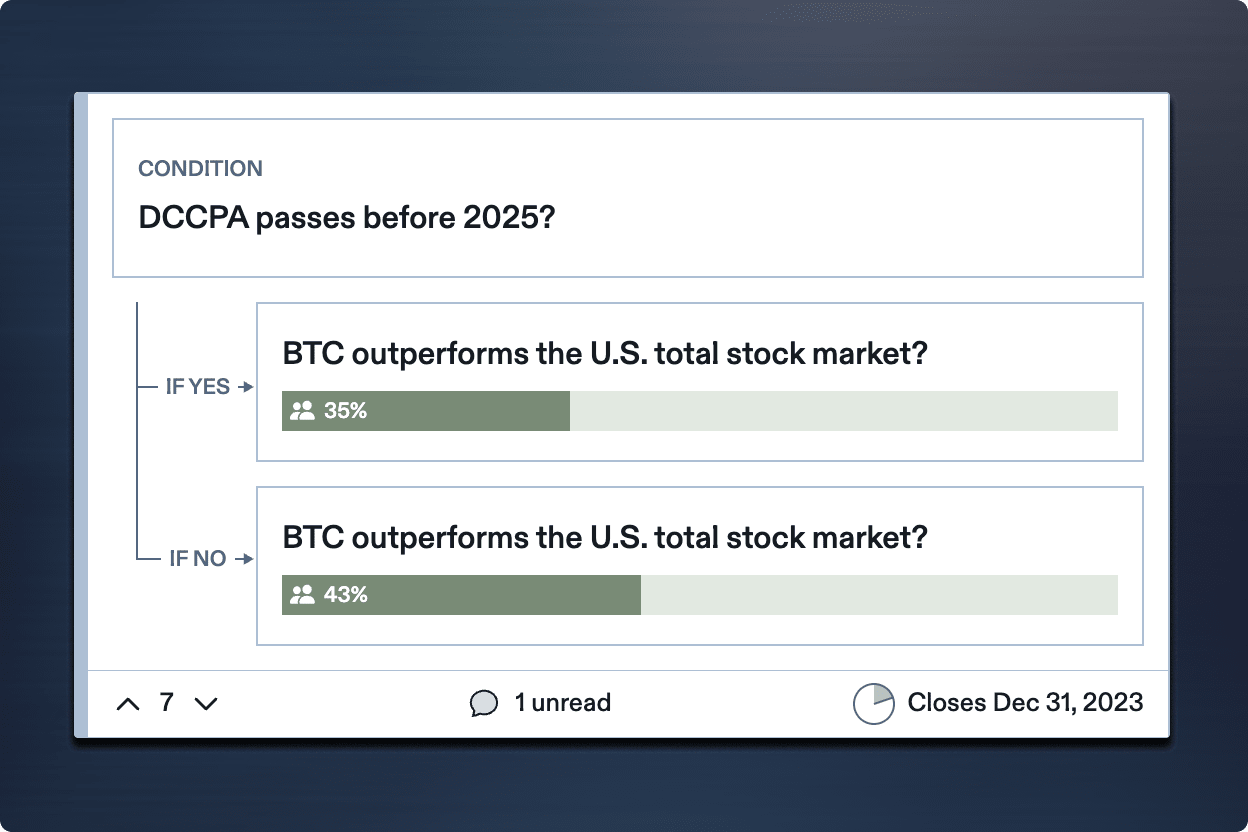

Now You Can Submit Conditional Pair Questions on Metaculus

Curious to learn the likelihood of an event given the outcome of another? Now you can create conditional pairs of binary questions and invite the forecasting community to share their predictions to clarify the relationships between events.

Not yet familiar with Metaculus's conditional pairs? Read the guide here or watch this introductory video to get up to speed.
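As a rough illustration of why conditional pairs are informative, assume a pair is read as two forecasts, P(A | B) and P(A | not B), alongside a forecast for the condition B itself. The law of total probability then recovers the unconditional forecast for A that those numbers imply, and the gap between the two conditionals shows how strongly A depends on B. The function names below are hypothetical illustrations, not part of any Metaculus tool or API.

```python
def implied_marginal(p_a_given_b: float, p_a_given_not_b: float, p_b: float) -> float:
    """Hypothetical helper: law of total probability,
    P(A) = P(A | B) * P(B) + P(A | not B) * (1 - P(B))."""
    for p in (p_a_given_b, p_a_given_not_b, p_b):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_a_given_b * p_b + p_a_given_not_b * (1.0 - p_b)


def conditional_gap(p_a_given_b: float, p_a_given_not_b: float) -> float:
    """Hypothetical helper: how much the outcome of B shifts the forecast for A.
    A gap near 0 suggests A is roughly independent of B."""
    return p_a_given_b - p_a_given_not_b


if __name__ == "__main__":
    # Illustrative numbers only: P(B) = 0.4, P(A | B) = 0.7, P(A | not B) = 0.2
    print(round(implied_marginal(0.7, 0.2, 0.4), 2))  # prints 0.4
    print(round(conditional_gap(0.7, 0.2), 2))        # prints 0.5
```

If the community forecast on the standalone question for A differs noticeably from the implied value, that inconsistency may be worth a closer look.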

🔎 Discover More Questions Relevant to You With Metaculus's New Filter & Sort Tools

Where do you disagree with other forecasters? Which community predictions have shifted the most? And what was that nanotech forecast you meant to update? Metaculus has introduced new filter & sort tools that provide more control over the forecast feed so you can find the questions that matter to you.

Learn more

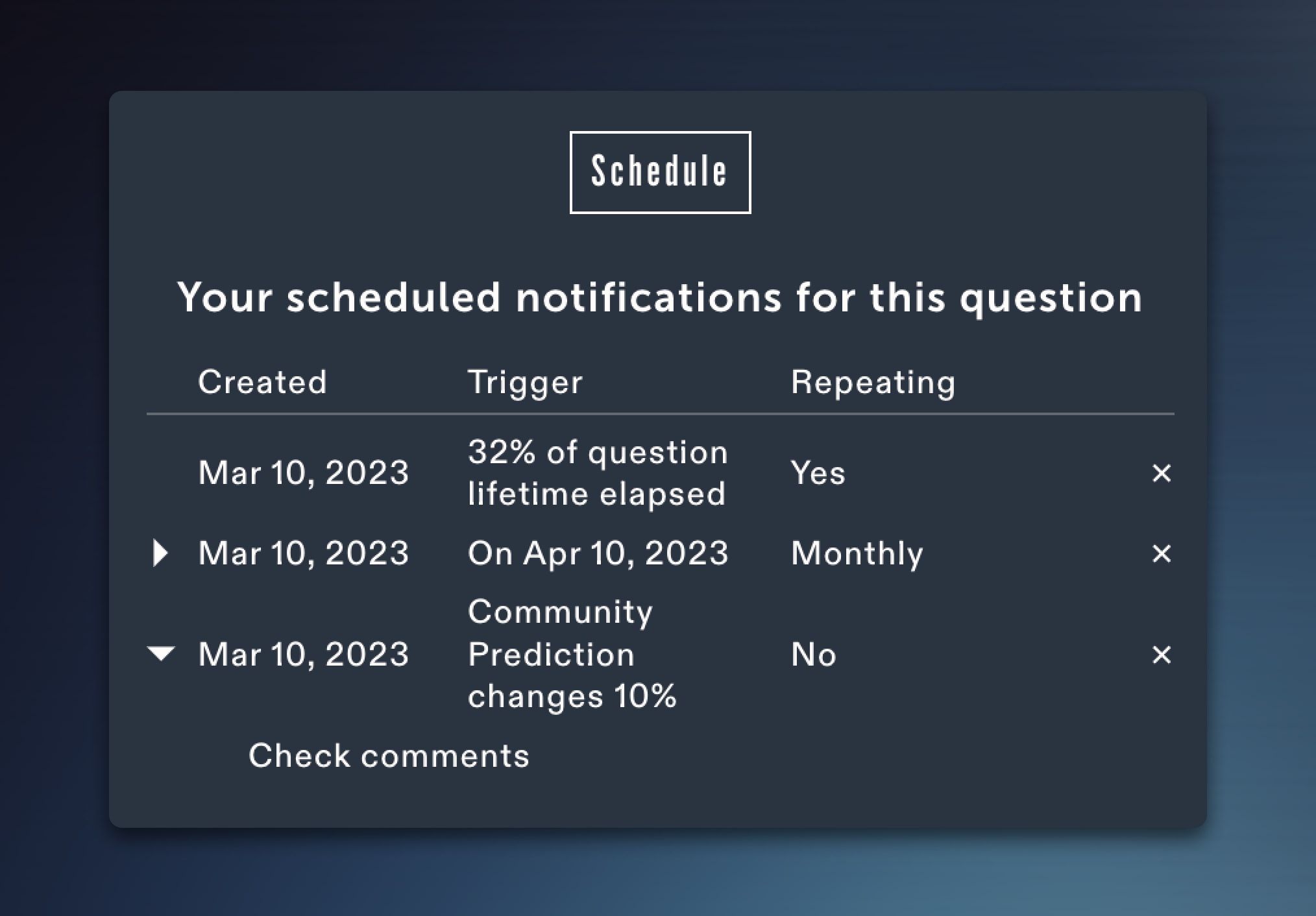

🕛 New Metaculus Feature: Now You Can Set Custom Forecast Notifications

Metaculus has upgraded its forecast notifications to make it easier to follow the questions you care about so you can keep your predictions fresh and stay up to date as stories develop. Set a single notification that alerts you whenever the Metaculus community's prediction shifts, when new comments are made, when a question nears its close date, or at regular intervals over a question's life. Learn more about forecast notifications and how you can use them here.

🔬 Metaculus Officially Launches API

Metaculus has officially launched the Metaculus API, providing access to a rich, quantitative database of aggregate forecasts on 7000+ questions. Start exploring, analyzing, and building here.

Metaculus is conducting its first user survey in nearly three years. If you have read analyses, consumed forecasts, or made predictions on Metaculus, we want to hear from you! Your feedback helps us better meet the needs of the forecasting community and is incredibly important to us.

Take the short survey here — we truly appreciate it! (We'll be sure to share what we learn.)