This is part 3 in a 5-part series entitled Conscious AI and Public Perception, encompassing the sections of a paper by the same title. This paper explores the intersection of two questions: Will future advanced AI systems be conscious? and Will future human society believe advanced AI systems to be conscious? Assuming binary (yes/no) responses to the above questions gives rise to four possible future scenarios—true positive, false positive, true negative, and false negative. We explore the specific risks & implications involved in each scenario with the aim of distilling recommendations for research & policy which are efficacious under different assumptions.

Read the rest of the series below:

- Introduction and Background: Key concepts, frameworks, and the case for caring about AI consciousness

- AI consciousness and public perceptions: four futures

- Current status of each axis (this post)

- Recommended interventions and clearing the record on the case for conscious AI

- Executive Summary (posting later this week)

This paper was written as part of the Supervised Program for Alignment Research in Spring 2024. We are posting it on the EA Forum as part of AI Welfare Debate Week as a way to get feedback before official publication.

Having characterised the major scenarios & risks associated with conscious AI, we now shift gears towards assessing our current situation. This section addresses the two fundamental axes of our 2D framework with a view to the present & near-term future. We begin with a discussion of the empirical axis (§4.1), then turn to the epistemic axis (§4.2).

4.1 Empirical axis

The upshot as concerns the empirical axis is as follows:

- Current AIs are unlikely to be conscious.

- It is difficult to determine the likelihood of future conscious AI.

- We currently lack robust means of assessing AI consciousness.

4.1.1 Current AIs are [probably] not conscious

In general, experts do not believe that any current AI systems are conscious. As previously mentioned (§2.12), some experts do not even believe that it is possible for AIs to be conscious at all (e.g. because they are not living things, & only living things can be conscious). Yet even those who are sympathetic to substrate neutrality tend to doubt that current AIs (especially LLMs) are conscious (Long; Chalmers). This is because they appear to lack the architectural features described in our best theories of consciousness (e.g. information integration or a global workspace).

4.1.2 Sources of uncertainty regarding AI consciousness

The current state of expert opinion might optimistically be termed a consensus. Even so, this agreement is highly unstable at best, for two reasons. First, it is almost certain to fracture as AI continues to advance. Second, there remains much uncertainty regarding several important aspects of AI consciousness:

- We don’t really understand what consciousness is. We lack a general theory of how consciousness arises from non-conscious matter & processes (e.g. neurochemical reactions); that is, we do not have a solution to the “hard problem” of consciousness [Chalmers]. Although there exist different proposals (e.g. global workspace theory, integrated information theory, etc.; Ferrante et al 2023; Hildt 2022), none commands broad consensus among experts.

- We don’t have good ways of empirically testing for consciousness in non-humans. Partly as a result of the foregoing doubts regarding the very possibility of nonbiological consciousness, there currently exists no agreed-upon standard for testing for consciousness in AI (Dung 2023a; Chalmers 2023). These issues are discussed at length in the sections to follow.

- We don’t really understand how current AI systems (i.e., deep learning models) work. Overarching “grand theories” of consciousness do not tend to enable specific predictions about which things are conscious (Dung 2023a). In any case, the physical mechanisms that give rise to consciousness in living things may not be the same as those that give rise to consciousness in machines (ibid). Properly diagnosing consciousness will likely require both “top-down” & “bottom-up” work. Among other things, this will require a deeper understanding of how various types of AI systems work.

- “Risk of sudden synergy”. It’s hard to forecast future developments in AI consciousness because progress may not be linear. It is possible that a convergence of lines of research may lead to abrupt & exponential progress (Metzinger 2021a).

As it stands, we are in a state of radical uncertainty about future conscious AI (Metzinger 2022). Continued progress in (i) consciousness research, (ii) consciousness evaluations, & (iii) interpretability[1] may go some way towards mitigating this uncertainty. In the subsequent sections, we focus on (ii), touching on outstanding obstacles to reliable empirical tests of consciousness.

The current state of consciousness evals

The primary method of assessing consciousness in humans is through introspection & verbal report. However, this method is problematic in two respects: it is both over-exclusive & over-inclusive.

- Over-exclusive: Introspection & verbal report cannot be used to ascertain consciousness in beings that are incapable of language, such as infants, individuals with various linguistic disabilities, or animals.

- Over-inclusive: The verbal reports of AIs such as current LLMs cannot be trusted as evidence of their consciousness, given that (1) their responses can be influenced by leading questions (Berkowitz 2022), and (2) the texts they have been trained on include verbal reports of subjective experience produced by humans (Labossiere 2017; Chalmers 2023; Birch & Wilson 2023; Long & Rodriguez 2023).

To be sure, dedicated tests of machine consciousness have a long history[2]. Despite this, there remains no widely accepted test of AI consciousness. Part of the reason is that, as previously mentioned (§2.12), there is still disagreement over whether it is possible for AI to be conscious in the first place. But this is not the only obstacle. Even if researchers agreed that a machine could, in principle, be conscious, the question of how to detect & diagnose consciousness would still remain[3]. In fact, many philosophers argue that consciousness is simply not the kind of thing that is amenable to empirical study (Jackson 1982; Robinson 1982; Levine 1983): that no amount of empirical information could conclusively establish whether or not a thing actually is conscious. In response to this concern, one might adopt a precautionary approach (§2.321). Rather than conditioning AIs’ moral status on our knowing beyond the shadow of a doubt that they are conscious, or even on our having a strong credence that they are (Chan 2011; Dung 2023a), we might settle for a weaker standard: a non-negligible chance that they are conscious (Sebo & Long 2023). This approach has been profitably pursued in animal welfare advocacy (Birch 2017).

As a result of the above doubts about the in-principle possibility of machine consciousness, as well as slow progress in AI development during the AI winters, progress on consciousness evals has largely been relegated to the field of animal sentience[4]. Indeed, conceptual & empirical research on animal sentience has often served as the template for research into AI consciousness (Dung 2023b; cf. Tye 2017). Such efforts have proven to be of immense value in three respects:

- Conceptual precisification: Tests of animal sentience have served to disambiguate the notoriously elusive notion of consciousness into more precise capacities. For instance, Birch et al (2020) distinguish between five different dimensions: (i) self-consciousness/selfhood (the capacity to be aware of oneself as distinct from the external world), (ii) phenomenal richness (the capacity to draw fine-grained distinctions in a given sensory modality), (iii) evaluative richness (the capacity to undergo a wide range of positively or negatively valenced experiences), (iv) unity/synchronic integration (the capacity to bring together different aspects of cognition into a single, seamless perspective or field of awareness at a given point in time), & (v) temporal continuity/diachronic integration (the capacity to recall past experiences & simulate future experiences, all while relating them to the present).

This conceptual refinement has important consequences for discussions of AI consciousness. If consciousness can be decomposed into qualitatively distinct elements such as these, then it does not make sense to speak of different creatures as “more conscious” or “less conscious” (ibid; §2.4). Rather, they may better be thought of as “conscious in different ways” (Hildt 2022; cf. Coelho Mollo forthcoming).

- Empirical tractability: Because they are better defined, the capacities proposed by Birch et al lend themselves more readily to empirical testing. In the same paper, Birch et al catalogue specific questions that have been investigated with experimental paradigms along each of the five dimensions of consciousness above (ibid). These provide avenues for future diagnostics, including tests for consciousness in AIs (Dung 2023b). Satisfying multiple empirical measures of consciousness could provide defeasible grounds for believing that a given creature or AI is conscious (Ladak 2021).

- The theory-light paradigm: Theorists studying AI consciousness often draw a distinction between two broad approaches[5]. The “top-down” approach to AI consciousness involves first articulating a general theory of consciousness, & then drawing inferences from this to specific creatures or AIs (Dung 2023a). Over the years, this approach has been stalled by chronic philosophical & scientific disagreements (Levine 1983). Unwilling to postpone animal welfare efforts until this agenda is fulfilled, researchers are increasingly adopting a “bottom-up” (Birch et al 2022) & “theory-light” (Birch 2020) approach to animal & AI consciousness[6]. This approach is defined by a minimal commitment to some sort of relationship between cognition & consciousness (ibid). There are no further overarching tenets, nor is any particular feature of consciousness held to be essential (Ladak 2021). In this way, the theory-light approach enables researchers to make empirical headway on welfare-related questions without waiting for a general theory of consciousness.

Prevailing challenges to testing for consciousness in AI

Today, there is a new wave of interest in AI consciousness evals[7] (Schneider 2019; Berg et al 2024; Sebo 2024). Nonetheless, much work is still needed in order to devise reliable empirical tests of AI consciousness. Four major outstanding issues include:

How to make increasingly fine-grained assessments of specific symptoms or criteria of consciousness? With continued research, consciousness in the broad sense is being refined into narrower, better-defined capacities (Birch et al 2022). These more granular capacities can themselves be assessed in even more precise ways. Take, for instance, phenomenal richness (P-richness): the capacity to draw fine-grained distinctions in a given sensory modality (e.g. vision, olfaction…)[8],[9]. Birch et al (2022) note that P-richness can itself be further resolved into bandwidth (e.g. the amount of visual content that can be perceived at any given moment), acuity (the fineness of “just-noticeable differences” that can be detected), and categorisation power (the ability to organise perceptual properties under high-level categories[10]). Whether & how these, in turn, may be refined into subtler, more empirically robust capacities remains to be seen.

How to integrate different symptoms or criteria into an overall assessment of consciousness? The increasing proliferation of empirical measures of consciousness under the open-ended theory-light paradigm raises the question of how to bring these qualitatively distinct criteria together into a holistic evaluation. Mature tests of AI consciousness will need to systematically negotiate these diverse factors, for instance by weighing different criteria according to Bayesian rules[11] (Muehlhauser 2017; Ladak 2021), or by exploring the possibility that certain combinations of traits may be synergistic (or even antagonistic). Importantly, this question will only grow more pressing as empirical measures of consciousness are refined (see the discussion of P-richness above).
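As a rough illustration of what weighing criteria “according to Bayesian rules” could look like, the sketch below updates a prior credence that a system is conscious using one likelihood ratio per observed criterion, in odds form. Everything in it is hypothetical: the criterion names, the prior, and the likelihood ratios are placeholders, not estimates from the literature. The update also assumes the criteria are conditionally independent given consciousness, which is exactly the assumption that synergistic or antagonistic combinations of traits would violate.

```python
import math

# Hypothetical criteria and likelihood ratios, chosen only for illustration.
# Each ratio is P(criterion observed | conscious) / P(criterion observed | not conscious).
# A ratio above 1 counts in favour of consciousness; a ratio below 1 counts against it.
HYPOTHETICAL_LIKELIHOOD_RATIOS = {
    "workspace_like_broadcast": 3.0,
    "flexible_avoidance_learning": 2.0,
    "built_to_mimic_reports": 0.5,  # a defeater-style criterion
}


def posterior_credence(prior: float, observed: list[str],
                       likelihood_ratios: dict[str, float]) -> float:
    """Update a prior credence on the observed criteria (naive Bayes, odds form).

    Assumes the criteria are conditionally independent given consciousness,
    so their likelihood ratios simply multiply; interactions are ignored.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must lie strictly between 0 and 1")
    # Work in log-odds so that each criterion contributes an additive term.
    log_odds = math.log(prior / (1.0 - prior))
    for criterion in observed:
        log_odds += math.log(likelihood_ratios[criterion])
    odds = math.exp(log_odds)
    return odds / (1.0 + odds)


if __name__ == "__main__":
    evidence = ["workspace_like_broadcast", "flexible_avoidance_learning"]
    print(round(posterior_credence(0.1, evidence, HYPOTHETICAL_LIKELIHOOD_RATIOS), 3))
```

With a prior of 0.1, observing the first two hypothetical criteria raises the credence to 0.4, and adding the defeater-style criterion lowers it to 0.25. Capturing synergy or antagonism would require replacing the product of individual ratios with likelihoods for combinations of criteria.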

How to account for AIs possibly gaming consciousness evals? AIs present two unique challenges to consciousness evals (or evals of any sort, for that matter; Hendrycks forthcoming). First, they can be purpose-built by developers to pass or fail consciousness evals (Schwitzgebel 2024). Developers may harbour any number of motivations (financial or otherwise) to ensure that their AIs achieve certain results on consciousness evals, & can design models to behave accordingly in test scenarios. For instance, a lab seeking to skirt regulatory protections for conscious AI might prevent their models from being able to pass consciousness evals. Second, AIs themselves, if sufficiently situationally aware, might determine that certain results on consciousness evals are either conducive or detrimental to their goals. Birch & Andrews (2023) call this the “gaming” problem[12]. For example, in the false positive scenario, society might provide certain legal protections to AI that is “provably” conscious. Non-conscious AI that recognises the strategic value of these benefits with respect to its own goals may aim to pass consciousness evals (or design future systems capable of passing consciousness evals). In the long run, this can lead to human disempowerment (§3.2.2).

All this shows that a variety of approaches will have to be combined in order to ensure the reliability of AI consciousness evals (Hildt 2022). Among other things, this includes research on “negative criteria”– or defeaters: features which count against an AI’s being conscious. One simple example of this is an AI’s having been designed specifically to exhibit certain features of consciousness. In such cases, the AI’s seeming to be conscious is simply ad hoc (Dung 2023a) or gerrymandered (Shevlin 2020; cf. Schwitzgebel 2023) as opposed to “natural”.

How to balance sensitivity & specificity? AI consciousness evals might become increasingly sensitive & specific, reducing the risks of false negatives and false positives, respectively. However, beyond a certain point, increasing one often comes at the expense of the other due to inherent trade-offs in test design (Doan 2005). Precautionary motivations favour tolerating more false positives. That being said, the diagnostic biases of consciousness evals will likely need to be adapted in response to evolving technological, cultural, & social conditions.

Where is this all going?

It bears emphasis that the theory-light approach is a stopgap tactic. For the time being, it performs the crucial function of allowing conceptual & empirical consciousness research programmes to progress in parallel, all in the service of potential moral patients (§2.321). In the long run, however, it will become increasingly necessary to build explicit & substantive connections between theory & measurement, for three reasons. Firstly, such connections may help mitigate concerns about AI gaming consciousness evals (Hildt 2022). Secondly, merely suspending theory does not amount to absolute theoretical impartiality. Researchers operating under the theory-light approach might nonetheless unwittingly embed assumptions about consciousness (see, e.g. Thagard 2009; Kuhn). What is nominally “theory-light” may later turn out not to be so neutral after all, & disastrous consequences can follow if implicit, unreflective assumptions about consciousness are allowed to influence policy[13]. Thirdly, concepts of consciousness might drift apart & eventually diverge into fully distinct notions (e.g. biological consciousness vs. machine consciousness; Blackshaw 2023). While natural conceptual evolution is not inherently problematic, it becomes an issue if this divergence leads to objectionably preferential treatment. This could manifest in a future scenario in which AIs are considered “conscious, but not in the sense that matters” (e.g. in a biological sense). In this case, a bioessentialist concept of consciousness would, all else being equal, prioritise the interests of living things over those of AIs. The point is not that a unified concept of consciousness ought to be preserved at all costs. Rather, it is that all stakeholders should be sensitive to the material consequences of conceptual drift & revision.
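The trade-off between sensitivity and specificity raised earlier in this section can be made concrete with a toy calculation. The sketch below assumes a hypothetical eval that outputs one score per system and flags a system as conscious when its score reaches a threshold. The scores and the “ground-truth” labels are invented for illustration, since no such ground truth is currently available.

```python
# Invented scores from a hypothetical consciousness eval, one per system.
TOY_SCORES = [0.2, 0.4, 0.55, 0.7, 0.9, 0.3, 0.6, 0.8]
# Stipulated ground truth for the toy example (True = conscious).
TOY_LABELS = [False, False, True, True, True, False, False, True]


def sensitivity_specificity(scores: list[float], labels: list[bool],
                            threshold: float) -> tuple[float, float]:
    """Return (sensitivity, specificity) when scores >= threshold count as positive."""
    tp = fn = tn = fp = 0
    for score, conscious in zip(scores, labels):
        flagged = score >= threshold
        if conscious and flagged:
            tp += 1  # true positive
        elif conscious:
            fn += 1  # false negative: a conscious system is missed
        elif flagged:
            fp += 1  # false positive: a non-conscious system is flagged
        else:
            tn += 1  # true negative
    return tp / (tp + fn), tn / (tn + fp)


if __name__ == "__main__":
    for threshold in (0.5, 0.75):
        sens, spec = sensitivity_specificity(TOY_SCORES, TOY_LABELS, threshold)
        print(f"threshold={threshold}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In this toy example, lowering the threshold from 0.75 to 0.5 raises sensitivity from 0.5 to 1.0 while lowering specificity from 1.0 to 0.75. A precautionary stance corresponds to choosing the lower threshold and accepting more false positives.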

4.2 Epistemic axis

Right now, AI consciousness receives relatively little attention compared to other AI-related concerns, such as bias and discrimination, misinformation and disinformation, worker displacement, and intellectual property infringement (Google Trends, 2024). Nevertheless, when asked about it, most people do express some level of concern about AI consciousness—the modal view today is that it is possible for future AIs to be conscious, and if they were, they should be given some degree of moral consideration.

4.2.1 What does the general public believe about AI consciousness today?

A 2023 poll by the Verge and Vox Media’s Insights and Research team of 2,000+ American adults found that around half “expect that a sentient AI will emerge at some point in the future” (Kastrenakes and Vincent, 2023). Similarly, according to the 2023 Artificial Intelligence, Morality, and Sentience (AIMS) survey conducted by the Sentience Institute (Pauketat, Ladak, & Anthis, 2023), nearly 40% of Americans believe it is possible to develop sentient AI, compared to less than a quarter of American adults who believe it is impossible. Some individuals, 5% according to a poll by Public First (Dupont, Wride, and Ali, 2023) and 19% according to the AIMS survey, even believe that some of the AIs we have today are already sentient. However, these polls focus on the US and UK; there is currently a lack of data on the views of people in other regions, even though AI agents will also be subject to the laws and treatment of those regions.

As with most other topics, it seems likely that these beliefs will vary between demographics. Age and gender have already been shown to influence people’s opinions on other AI-related topics; for example, young people and men are more likely to trust AI, while women and older people are less likely to trust it (Yigitcanlar, 2022). However, research into which demographics are more likely to believe AI consciousness is possible, whether it would be deserving of moral consideration if so, and which policies should be enacted to mitigate the possible harms is scant.

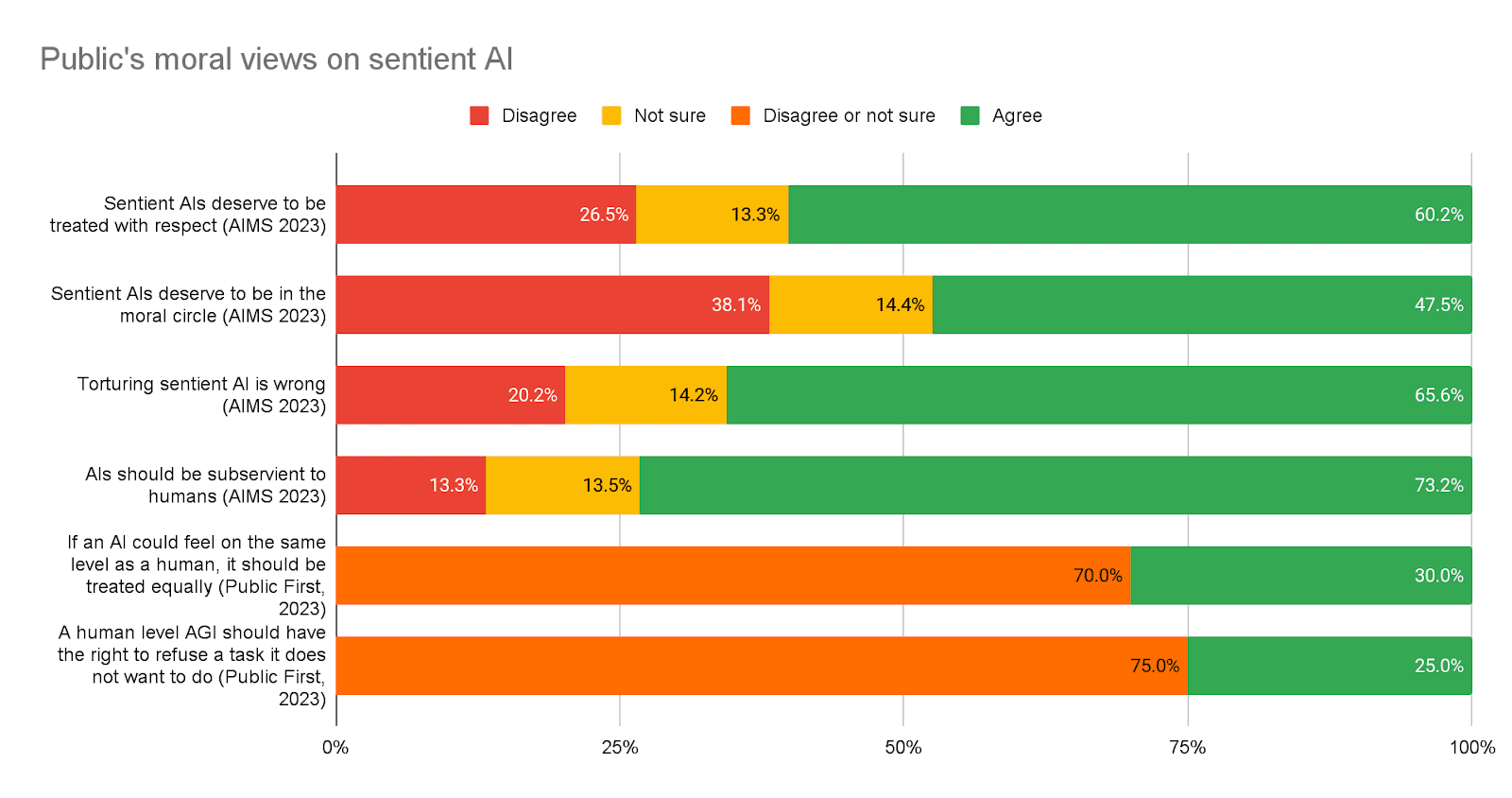

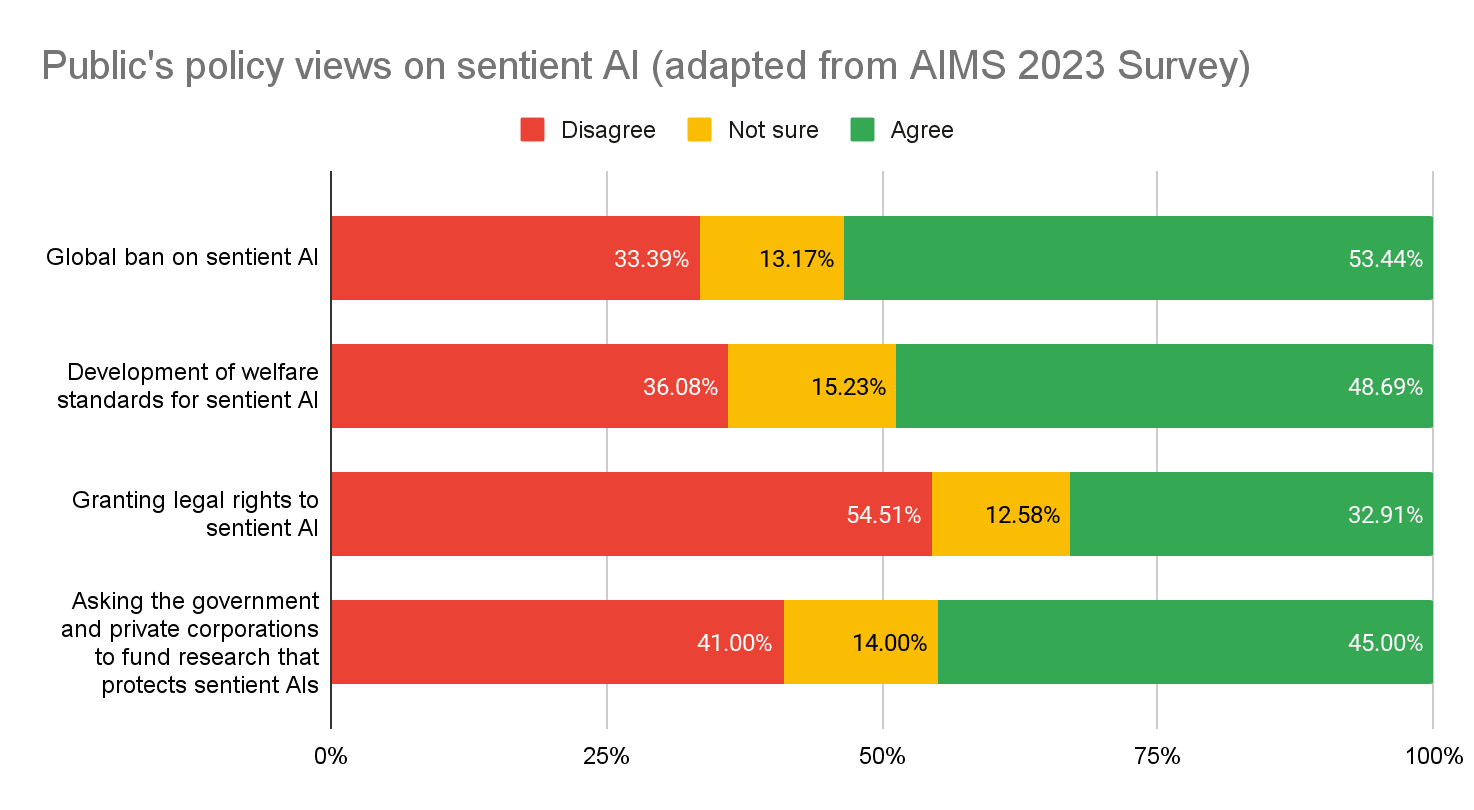

Moral consideration and support for policies

There does seem to be a level of agreement among the public that sentient AIs deserve some degree of moral consideration, though short of being treated equally to humans. This moral consideration translates to varying degrees of support for different public policies:

Moreover, Lima et al. (2020) have shown that certain interventions can have “remarkable” and “significant” effects in promoting support for AI sentience policies. Namely, showing respondents examples of non-human entities that are currently granted legal personhood (e.g. corporations or, in some countries, nature) shifted the opinion of 16.6% of individuals who previously were opposed to granting AIs legal personhood. This shows that shifting public opinion on this topic may be tractable through educational campaigns.

That said, even if people support AI welfare in principle, it is unclear to what extent this will translate into a willingness to make real trade-offs in the name of AI welfare. Using animal welfare as an analogue, many people believe farmed animals are sentient and, in theory, would prefer for them not to be subjected to factory farm conditions, but in practice, few are willing to stop buying animal products or make other lifestyle changes to prevent harms to animals (Anthis and Ladak, 2022). Additionally, the figures above are subject to considerable uncertainty; a similar survey on factory farming by the Sentience Institute previously failed to replicate (Dullaghan, 2022).

4.2.2 How are the public’s beliefs about AI consciousness likely to evolve?

It seems likely that as AI becomes more advanced and anthropomorphic, the proportion of people who believe it is sentient will increase. Vuong et al. (2023) found that “interacting with an AI agent with human-like physical features is positively associated with the belief in that AI agent’s capability of experiencing emotional pain and pleasure” (see also Perez-Osorio & Wykowska 2020). Similarly, Ladak, Harris and Anthis (2024) tested the effect of 11 features on moral consideration for AI, and found that “human-like physical bodies and prosociality (i.e., emotion expression, emotion recognition, cooperation, and moral judgment)” had the greatest impact on people’s belief that harming AI is morally wrong.

Anecdotally, the anthropomorphization of AI seems to be already materialising—some users of AI companion apps like Replika have come to believe their AI partners are sentient (Dave, 2022). These relationships may become increasingly common, and contribute to the belief that AIs can experience feelings—in a poll conducted by the Verge, 56% of respondents agreed that “people will develop emotional relationships with AI”, and 35% said they “would be open to doing so if they were lonely.”

On the other hand, there might also be reasons for the opposite to occur, i.e. anthropodenial (de Waal, 1999). For instance, if treating conscious AIs as tools would be unethical, people would have an incentive not to believe AIs are conscious. There is precedent for this in the anthropodenial humanity has engaged in towards other animal species and, historically, towards humans of different ethnicities (Uri, 2022). It is also worth highlighting that anthropomorphising AIs may itself be harmful, as the way AIs prefer to be treated might differ from the way humans prefer to be treated; Mota-Rojas et al. (2021) have outlined how a similar dynamic plays out in interactions with companion animals.

How will expert and public opinions on AI consciousness interact?

There is evidence that the public currently thinks about conscious experience differently from experts: Sytsma & Machery (2010) found that, compared to philosophers, laypeople were more likely to attribute phenomenal consciousness (e.g. the ability to see ‘red’) to robots while rejecting the idea that they felt pain.

It is unclear what the effect of expert opinion will be on the general public’s belief in AI consciousness. On other topics, such as climate change and vaccines, we have seen the public fracture along ‘high-trust’ and ‘low-trust’ lines, placing different weight on the views of experts accordingly (Kennedy and Tyson, 2023). However, given the philosophical nature of this topic, it seems possible that people will be especially likely to dismiss ‘expert opinion’. Davoodi and Lombrozo (2022) have shown that people distinguish between scientific unknowns (e.g. What explains the movement of the tides?) and “universal mysteries” (Does God exist?). It is possible that many people will not consider consciousness a scientifically tractable topic, and will consider the views of experts less relevant than their own intuition for this reason.

- ^

Interpretability work that may clarify the question of AI consciousness might include work on representation engineering (Zou et al 2023) or digital neuroscience (Karnofsky 2022).

- ^

For a review, see (Elamrani & Yampolskiy 2019). For a recent proposal, see (Schneider 2019).

- ^

Just knowing that AIs could, in principle, be conscious still leaves us with the question of which AIs are conscious. In the field of animal sentience, this has been called the “distribution question” (Allen & Trestman 2024). Elamrani and Yampolskiy (2019) term this the “other minds” problem.

- ^

In this usage, animal “sentience” essentially means the same thing as animal “consciousness”. The usage of sentience indicates an emphasis on the ethical motivations behind animal welfare efforts, which go beyond disinterested “intellectual” interests.

- ^

Elamrani & Yampolskiy (2019) differentiate between “architectural” & “behavioural” tests of AI consciousness. While the former places an emphasis on the structural & formal implementation of consciousness, the latter focuses on its outward manifestations. This corresponds roughly to Dung’s (2023a) “top-down”/“bottom-up” dichotomy.

- ^

Birch defines the theory-light approach in terms of a minimal commitment to what he calls the facilitation hypothesis: that “Phenomenally conscious perception of a stimulus facilitates, relative to unconscious perception, a cluster of cognitive abilities in relation to that stimulus” (2020). In other words, there is some kind of link between phenomenal consciousness and cognition. On this assumption, psychometric evaluations may be able to support inferences about subjective experience.

More recently, Chalmers (2023) proposes an alternative to Birch’s theory-light approach, which he calls the “theory-balanced” approach. The theory-balanced approach assigns probabilities for a given thing’s being conscious according to (1) how well-supported different theories are & (2) the degree to which that thing satisfies the criteria of these theories. At this point, neither the theory-light approach nor the theory-balanced approach appears to command an obvious advantage. As far as research in animal & AI consciousness is concerned, the upshot is that there are now serious contenders to the traditional “theory-heavy” approach, which commits to particular theories of consciousness, so that multiple paradigms can be developed in parallel.
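One way to formalise the theory-balanced approach, offered here as a sketch rather than as Chalmers’ own notation, is as a credence-weighted average across theories. It assumes the theories $T_1$ to $T_n$ are treated as mutually exclusive and jointly exhaustive, so that the credences $P(T_i)$ sum to 1. Here $P(C \mid x, T_i)$ is the probability that a system $x$ is conscious if theory $T_i$ is true, reflecting how far $x$ satisfies that theory’s criteria:

$$
P(C \mid x) \;=\; \sum_{i=1}^{n} P(T_i)\, P(C \mid x, T_i)
$$

For instance, with two equally credible theories ($P(T_1) = P(T_2) = 0.5$), a system that largely satisfies the first ($P(C \mid x, T_1) = 0.9$) but not the second ($P(C \mid x, T_2) = 0.1$) would receive an overall credence of $0.5 \times 0.9 + 0.5 \times 0.1 = 0.5$.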

- ^

At the time of writing, Jeff Sebo & Robert Long are working on a consciousness eval for AI: a standard test that draws upon the latest research across both AI & animal sentience (Sebo 2024).

- ^

e.g. the ability to distinguish between two colour hues, subtle notes within a wine or perfume, or different textures. Research on “just-noticeable differences” (JNDs) in experimental psychology attempts to pinpoint perceptual thresholds of stimulus intensity.

- ^

Since creatures may differ in the sensory modalities they possess (e.g. humans lack echolocation, robots can be built without the capacity for taste), it does not really make sense to speak of a creature’s “overall” level of P-richness (Birch et al 2022). Nor, of course, does the absence of a given sensory modality necessarily discount the creature’s likelihood of being conscious.

- ^

Lexical concepts such as colour terms (“beige”, “eggshell”) or words for flavours (“salty”, “sweet”).

- ^

- ^

The gaming problem need not require AI to have genuine deceptive intentions. Nonetheless, it is also conceivable that sufficiently intelligent AI might actually harbour genuine deceptive intentions, & conduct itself differently under testing conditions– including consciousness evals.

- ^

In particular, such assumptions could impact which AIs receive moral consideration, & the degree to which their interests are taken into account.
