A number of recent proposals have detailed EA reforms. I have generally been unimpressed with these: they feel highly reactive and too tied to attractive-sounding concepts (democratic, transparent, accountable) without well-thought-through mechanisms. I will try to expand on my thoughts about these at a later time.

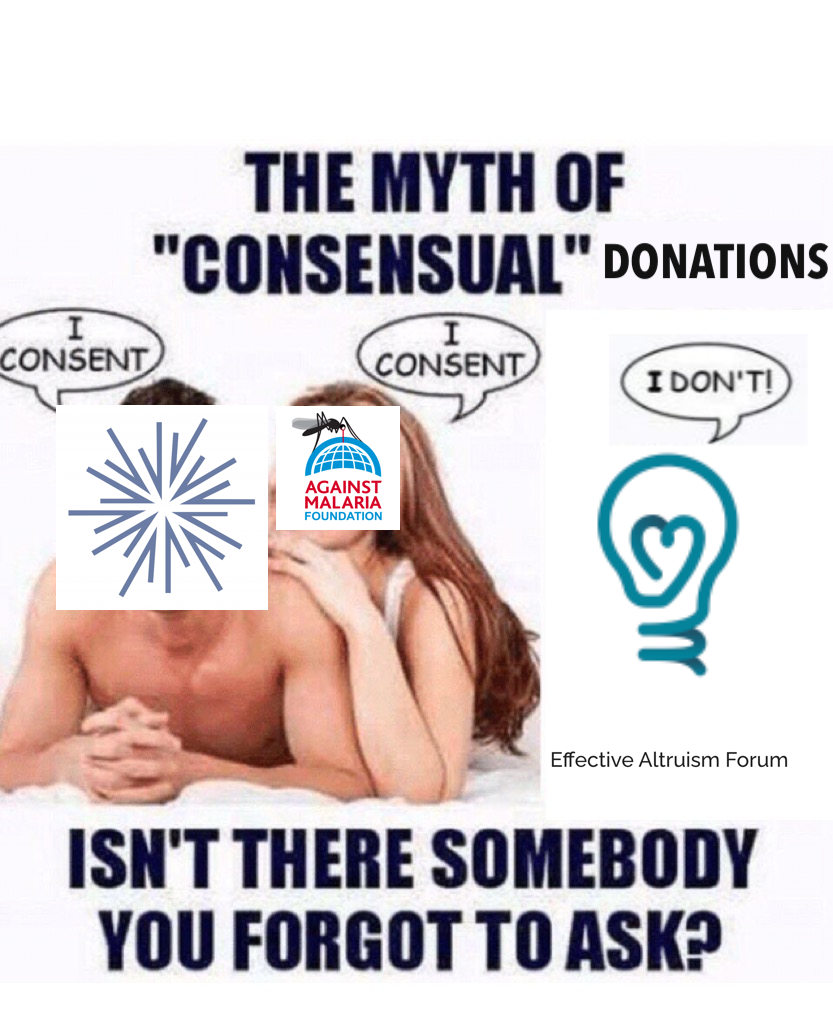

Today I focus on one element that seems at best confused and at worst highly destructive: large-scale, democratic control over EA funds.

This has been mentioned in a few proposals. To my knowledge, it originated in Carla Zoe Cremer's Structural Reforms proposal:

- Within 5 years: EA funding decisions are made collectively

- First set up experiments for a safe cause area with small funding pots that are distributed according to different collective decision-making mechanisms

(Note that this is classified as a 'List A' proposal, which Cremer describes as "ideas I’m pretty sure about and thus believe we should now hire someone full time to work out different implementation options and implement one of them".)

It was also reiterated in the recent mega-proposal, Doing EA Better:

Within 5 years, EA funding decisions should be made collectively

Furthermore (from the same post):

Donors should commit a large proportion of their wealth to EA bodies or trusts controlled by EA bodies to provide EA with financial stability and as a costly signal of their support for EA ideas

And:

The big funding bodies (OpenPhil, EA Funds, etc.) should be disaggregated into smaller independent funding bodies within 3 years

(See also the "Deciding better together" section of the same post.)

How would this happen?

One could try to personally convince Dustin Moskovitz that he should turn OpenPhil funds over to an EA Community panel, arguing that this would help OpenPhil distribute its funds better.

I suspect this would fail, and proponents would feel very frustrated.

But, as with other discourse, these proposals assume that because a foundation called Open Philanthropy is interested in the "EA Community", the "EA Community" has, deserves, or is entitled to a say in how the foundation spends its money. Yet the fact that someone is interested in listening to the advice of some members of a group on some issues does not mean they must completely surrender to the broader group on all questions. They may want community input on their funding (via regranting, for example), or may want to invest in the community, but that does not imply they would want the bulk of their donations governed by the EA community.

(Also, I'm using scare quotes here because I am very confused about whom these proposals mean when they say "EA community." Is it a matter of having read certain books, attending EAGs, hanging around for a certain amount of time, working at an org, donating a set amount of money, or being in the right Slacks? These details seem incredibly important when this is the set of people given major control of funding in lieu of current expert funders.)

So at a basic level, the assumption that EA has some innate claim to the money of its donors is incorrect. (I understand that the claim is also normative.) But for now, the money possessed by Moskovitz and Tuna, OP, and Good Ventures is not the property of the EA community. So what, then, to do?

Can you demand ten billion dollars?

Say you can't convince Moskovitz and OpenPhil leadership to turn over their funds to community deliberation.

You could try to create a cartel of EA organizations that refuse OpenPhil donations. This seems likely to fail: it would involve asking tens, perhaps hundreds, of people to risk their livelihoods. It would also be an incredibly poor way of managing the relationship between the community and its most generous funder, and it would very likely decrease the total amount donated to EA organizations and causes.

Most obviously, it could make OpenPhil less interested in funding the EA community. But this sort of behavior would also make EA incredibly unattractive to new donors: who would want to help a community of people trying to do good, only to be threatened by them? Even for potential EAs pursuing Earn to Give, this seems highly demotivating.

Let's say a new donor began funding EA organizations but didn't want to abide by these rules. Perhaps they were just interested in donating to effective bio orgs. Would the community ask that all EA-associated organizations turn down their money? Obviously it shouldn't: as long as the donor is not otherwise unethical, organizations should accept the money and use it to do as much good as possible. Rejecting a potential donor simply because they do not want to participate in a highly unusual funding system seems clearly wrongheaded.

One last note: the Doing EA Better post emphasizes:

Donors should commit a large proportion of their wealth to EA bodies or trusts . . . [as in part a] costly signal of their support for EA ideas

Why would we want to raise the cost of supporting EA ideas? I understand that some people have interpreted SBF and other donors as using EA for 'cover', but this has always been a pretty specious argument precisely because EA work is controversial: you can always donate to children's hospitals for good cover. Ideally, supporting EA ideas and causes should be as cheap as possible (barring obvious ethical breaches), so that more money is funneled to highly important and underserved causes.

The Important Point

I believe Holden Karnofsky and Alexander Berger, as well as the staff of OpenPhil, are much better at making funding decisions than almost any other EA, let alone a "lottery-selected" group of EAs deliberating. This seems obvious: both are more deeply immersed in the important questions of EA than almost anyone else. Indeed, many EAs are primarily informed by Cold Takes and OpenPhil investigations.

Why democratic decision-making would be better has gone largely unargued. To the extent that it has been argued, "conflicts of interest" and "insularity" seem like marginal problems compared to having a deep understanding of the most important questions for the future and for global health and wellbeing.

But even if we weren't lucky enough to have Holden and Alex, it would still be bad strategic practice to demand community control over donor resources. It would:

- Endanger current donor relationships

- Make EA unattractive to potential new donors

- Likely lower the average quality of grantmaking

- Likely yield nebulous benefits

- And potentially cause some of the same problems it purports to solve, like community groupthink

(It's worth pointing out an irony here: part of the motivation for these proposals is for grantmaking to escape the insularity of EA, but Alex and Holden have existed fairly separately from the "EA Community" throughout their careers, given that they came up through GiveWell, which was started independently of Oxford EA.)

If you are going to make these proposals, please consider:

- Who are you actually asking to change their behavior?

- What actions would you be willing to take if they did not change their behavior?

A More Reasonable Proposal

Doing EA Better specifically mentions democratizing the EA Funds program within Effective Ventures. This seems like a reasonable compromise. As an Effective Ventures program, EA Funds is much more of an EA community project than a third-party foundation is. More transparency and more community input in this process seem perfectly reasonable. Demanding that donors actually turn over a large share of their funds to EA is a much more aggressive proposal.

Yeah, I strongly agree with this and wouldn't continue to donate to the EA fund I currently donate to if it became "more democratic" rather than being directed by its vetted expert grantmakers. I'd be more than happy if a community-controlled fund was created, though.

To lend further support to the point that this post and your comment make, making grantmaking "more democratic" by involving a group of concerned EAs seems analogous to making community housing decisions "more democratic" through community hall meetings. Those who attend community hall meetings aren't a representative sample of the community but merely those who have the time (and they also tend to be those who have more to lose from community housing projects).

So it's likely that concerned EAs would not only lack expertise in a particular domain but would also be unrepresentative of the community as a whole.