Overview

Most AI alignment research focuses on aligning AI systems with the human brain’s stated or revealed preferences. However, human bodies include dozens of organs, hundreds of cell types, and thousands of adaptations that can be viewed as having evolved, implicit, biological values, preferences, and priorities. Evolutionary biology and evolutionary medicine routinely analyze our bodies’ biological goals, fitness interests, and homeostatic mechanisms in terms of how they promote survival and reproduction. Yet the EA movement includes some ‘brain-over-body biases’ that often make our brains’ values more salient than our bodies’ values. This can lead to some distortions, blind spots, and failure modes in thinking about AI alignment. In this essay I’ll explore how AI alignment might benefit from thinking more explicitly and carefully about how to model our embodied values.

Context: A bottom-up approach to the diversity of human values worth aligning with

This essay is one in a series where I'm trying to develop an approach to AI alignment that’s more empirically grounded in psychology, medicine, and other behavioral and biological sciences. Typical AI alignment research takes a rather top-down, abstract, domain-general approach to modeling the human values that AI systems are supposed to align with. This often combines consequentialist moral philosophy as a normative framework, machine learning as a technical framework, and rational choice theory as a descriptive framework. In this top-down approach, we don’t really have to worry about the origins, nature, mechanisms, or adaptive functions of any specific values.

My approach is more bottom-up, concrete, and domain-specific. I think we can’t solve the problem of aligning AI systems with human values unless we have a very fine-grained, nitty-gritty, psychologically realistic description of the whole range and depth of human values we’re trying to align with. Even if the top-down approach seems to work, and we think we’ve solved the general problem of AI alignment for any possible human values, we can’t be sure we’ve done that until we test it on the whole range of relevant values, and demonstrate alignment success across that test set – not just to the satisfaction of AI safety experts, but to the satisfaction of lawyers, regulators, investors, politicians, religious leaders, anti-AI activists, etc.

Previous essays in this series addressed the heterogeneity of value types within individuals (8/16/2022), the heterogeneity of values across individuals (8/8/2022), and the distinctive challenges in aligning with religious values (8/15/2022). This essay addresses the distinctive challenges of aligning with body values – the values implicit in the many complex adaptations that constitute the human body. Future essays may address the distinctive challenges of AI alignment with political values, sexual values, family values, financial values, reputational values, aesthetic values, and other types of human values.

The ideas in this essay are still rather messy and half-baked. The flow of ideas could probably be better organized. I look forward to your feedback, criticisms, extensions, and questions, so I can turn this essay into a more coherent and balanced argument.

Introduction

Should AI alignment research be concerned only with alignment to the human brain’s values, or should it also consider alignment with the human body’s values?

AI alignment traditionally focuses on alignment with human values as carried in human brains, and as revealed by our stated and revealed preferences. But human bodies also embody evolved, adaptive, implicit ‘values’ that could count as ‘revealed preferences’, such as the body’s homeostatic maintenance of many physiological parameters within optimal ranges. The body’s revealed preferences may be a little trickier to identify than the brain’s revealed preferences, but both can be illuminated through an evolutionary, functional, adaptationist analysis of the human phenotype.

One could imagine a hypothetical species in which individuals’ brains are fully and consciously aware of everything going on in their bodies. Maybe all of their bodies’ morphological, physiological, hormonal, self-repair, and reproductive functions are explicitly represented as conscious parameters and goal-directed values in the brain. In such a case, the body’s values would be fully aligned with the brain’s consciously accessible and articulable preferences. Sentience would, in some sense, pervade the entire body – every cell, tissue, and organ. In this hypothetical species, AI alignment with the brain’s values might automatically guarantee AI alignment with the body’s values. Brain values would serve as a perfect proxy for body values.

However, we are not that species. The human body has evolved thousands of adaptations that the brain isn’t consciously aware of, doesn’t model, and can’t articulate. If our brains understood all of the body’s morphological, hormonal, and self-defense mechanisms, for example, then the fields of human anatomy, endocrinology, and immunology would have developed centuries earlier. We wouldn’t have needed to dissect cadavers to understand human anatomy. We wouldn’t have needed to do medical experiments to understand how organs release certain hormones to influence other organs. We wouldn’t have needed evolutionary medicine to understand the adaptive functions of fevers, pregnancy sickness, or maternal-fetal conflict.

Brain-over-body biases in EA

Effective Altruism is a wonderful movement, and I’m proud to be part of it. However, it does include some fairly deep biases that favor brain values over body values. This section tries to characterize some of these brain-over-body biases, so we can understand whether they might be distorting how we think about AI alignment. The next few paragraphs include a lot of informal generalizations about Effective Altruists and EA subculture norms, practices, and values, based on my personal experiences and observations during the 6 years I’ve been involved in EA. When reading these, your brain might feel its power and privilege being threatened, and might react defensively. Please bear with me, keep an open mind, and judge for yourself whether these observations carry some grain of truth.

Nerds. Many EAs in high school identified as nerds who took pride in our brains, rather than as jocks who took pride in their bodies. Further, many EAs identify as being ‘on the spectrum’ or a bit Asperger-y (‘Aspy’), and feel socially or physically awkward around other people’s bodies. (I’m ‘out’ as Aspy, and have written publicly about its challenges, and the social stigma against neurodiversity.) If we’ve spent years feeling more comfortable using our brains than using our bodies, we might have developed some brain-over-body biases.

Food, drugs, and lifestyle. We EAs often try to optimize our life efficiency and productivity, and this typically cashes out as minimizing the time spent caring for our bodies, and maximizing the time spent using our brains. EA shared houses often settle on cooking large batches of a few simple, fast, vegan recipes (e.g. the Peter Special) based around grains, legumes, and vegetables, which are then microwaved and consumed quickly as fuel. Or we just drink Huel or Soylent so our guts can feed some glucose to our brains, ASAP. We tend to value physical health as a necessary and sufficient condition for good mental health and cognitive functioning, rather than as a corporeal virtue in its own right. We tend to get more excited about nootropics for our brains than nutrients for our bodies. The EA fad a few years ago for ‘polyphasic sleep’ – which was intended to maximize hours per day that our brains could be awake and working on EA cause areas – proved inconsistent with our body’s circadian values, and didn’t last long.

Work. EAs typically do brain-work more than body-work in our day jobs. We often spend all day sitting, looking at screens with our eyes, typing on keyboards with our fingers, sometimes using our voices and showing our faces on Zoom. The rest of our bodies are largely irrelevant. Many of us work remotely – it doesn’t even matter where our physical bodies are located. By contrast, other people do jobs that are much more active, in-person, embodied, physically demanding, and/or physically risky – e.g. truckers, loggers, roofers, mechanics, cops, firefighters, child care workers, orderlies, athletes, personal trainers, yoga instructors, dancers, models, escorts, surrogates. Even if we respect such jobs in the abstract, most of us have little experience of them. And we view many blue-collar jobs as historically transient, soon to be automated by AI and robotics – freeing human bodies from the drudgery of actually working as bodies. (In the future, whoever used to work with their body will presumably just hang out, supported by Universal Basic Income, enjoying virtual-reality leisure time in avatar bodies, or indulging in a few physical arts and crafts, using their soft, uncalloused fingers.)

Relationships. The brain-over-body biases often extend to our personal relationships. We EAs are often sapiosexual, attracted more to the intelligence and creativity of other people’s brains than to the specific traits of their bodies. Likewise, some EAs are bisexual or pansexual, because the contents of someone’s brain matter more than the sexually dimorphic anatomy of their body. Many EAs also have long-distance relationships, in which brain-to-brain communication is more frequent than body-to-body canoodling.

Babies. Many EAs prioritize EA brain-work over bodily reproduction. They think it’s more important to share their brain’s ideas with other brains than to recombine their body’s genes with another body’s genes to make new little bodies called babies. Some EAs are principled antinatalists who believe it’s unethical to make new bodies, on the grounds that their brains will experience some suffering. A larger number of EAs are sort of ‘pragmatic antinatalists’ who believe that reproduction would simply take too much time, energy, and money away from doing EA work. Of the two main biological imperatives that all animal bodies evolved to pursue – survival and reproduction – many EAs view the former as worth maximizing, but the latter as optional.

Avatars in virtual reality. Many EAs love computer games. We look forward to virtual reality systems in which we can custom-design avatars that might look very different from our physical bodies. Mark Zuckerberg seems quite excited about a metaverse in which our bodies can take any form we want, and we’re no longer constrained to exist only in base-level reality, or ‘meatspace’. On this view, a Matrix-type world in which we’re basically brains in vats connected to each other in VR, with our bodies turning into weak, sessile, non-reproducing vessels, would not be horrifying, but liberating.

Cryopreservation. When EAs think about cryopreservation for future revival and health-restoration through regenerative medicine, we may be tempted to freeze only our heads (e.g. ‘neuro cryopreservation’ for $80k at Alcor), rather than spending the extra $120k for ‘whole body cryopreservation’ – on the principle that most of what’s valuable about us is in our head, not in the rest of our body. We have faith that our bodies can be cloned and regrown in human form – or replaced with android bodies – and that our brains won’t mind.

Whole brain emulation. Many EAs are excited about a future in which we can upload our minds to computational substrates that are faster, safer, better networked, and longer-lasting than human brains. We look forward to whole brain emulation, but not whole body emulation, on the principle that if we can upload everything in our minds, our bodies can be treated as disposable.

Animal welfare. Beyond our species, when EAs express concerns about animal welfare in factory farming, we typically focus on the suffering that goes on in the animals’ brains. Disruptions to their bodies’ natural anatomy, physiology, and movement patterns are considered ethically relevant only insofar as they impose suffering on their brains. Many EAs believe that if we could grow animal bodies – or at least organs, tissues, and cells – without central nervous systems that could suffer, then there would be no ethical problem with eating this ‘clean meat’. In this view, animal brains have values, preferences, and interests, but animal bodies, as such, don’t. (For what it’s worth, I’m sympathetic to this view, and support research on clean meat.)

This is not to say that EA is entirely focused on brain values over body values. Since its inception, EA has promoted global public health, and has worked to overcome the threats to millions of human bodies from malaria, intestinal parasites, and malnutrition. There is a lot of EA emphasis on biosecurity, global catastrophic biological risks (GCBRs), and pandemic preparedness – which testifies to a biologically grounded realism about our bodies. EA work on nuclear security often incorporates a visceral horror at how thermonuclear weapons can burn, blast, and mutate human bodies. Some EA animal welfare work focuses on how selective breeding and factory farms undermine the anatomy, endocrinology, and immune systems of domesticated animal bodies.

Of course, EA’s emphasis on brains over bodies is not just a set of nerdy, sapiosexual, antinatalist, knowledge-worker biases. There are more principled reasons for prioritizing brains over bodies as ‘cause areas’, grounded in EA’s consequentialism and sentientism. Ever since Bentham and Mill, utilitarians have viewed moral value as residing in brains capable of experiencing pleasure and pain. And ever since Peter Singer’s Animal Liberation book in 1975, animal welfare has been viewed largely through a sentientist lens: the animal’s sentient experiences in their brains are considered more ethically relevant than the survival and reproduction of their bodies. Reconciling sentientist consequentialism with a respect for body values is an important topic for another essay.

Brains are cool. I get it. I’ve been fascinated with brains ever since I took my first neuroscience course as an undergrad in 1985. I’ve devoted the last 37 years of my academic career to researching, writing, and teaching about human minds and brains. But there’s more to our lives than our nervous systems, and there’s more to our interests as human beings than what our brains think they want.

If we’re just aligning with brains, how much of the body are we really aligning with?

To overcome these brain-over-body biases, it might help to do some thought exercises.

Imagine we want AI systems to align with our entire phenotypes – our whole bodies – and not just our brains. How representative of our embodied interests are our brains?

Let’s do a survey:

- By weight, the typical person has a 1,300 gram brain in a 70-kg body; so the brain is about 2% of body mass

- By cell-type, brains are mostly made of 2 cell types (neurons and glia), whereas the body overall includes about 200 cell types, so the brain includes about 1% of cell types

- By cell-count, brains include about 80 billion neurons and 80 billion glial cells, whereas the body overall includes about 30 trillion cells; so the brain includes about 0.5% of the body’s cells

- By organ-count, the brain is one organ out of about 78 organs in the human body, so the brain is about 1.3% of the body’s organs

If the human phenotype were a democracy, where organs got to vote in proportion to their weight, cell types, cell counts, or organ counts, brains would get somewhere between 0.5% and 2% of the body’s votes. If AI is aligned only with our brains, it might be aligning with only about 1% of our whole human bodies, and we’d leave 99% unrepresented and unaligned.
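For readers who want to check the arithmetic, here is a minimal sketch that recomputes the brain’s share under each ‘voting’ scheme. It uses only the round figures quoted in the survey above; the names `SURVEY` and `brain_share_percent` are just illustrative labels, not part of any established method.

```python
# Brain's share of the body under four different "voting" schemes.
# All figures are the approximate round numbers quoted in the survey above.
SURVEY = {
    "weight (grams)": (1_300, 70_000),
    "cell types": (2, 200),
    "cell count": (160e9, 30e12),  # 80 billion neurons + 80 billion glia
    "organs": (1, 78),
}


def brain_share_percent(brain, body):
    """Return the brain's share of the whole body, as a percentage."""
    return 100.0 * brain / body


shares = {name: brain_share_percent(*pair) for name, pair in SURVEY.items()}

for name, pct in shares.items():
    print(f"{name:>15}: {pct:.2f}%")
```

Unrounded, the four shares come to roughly 1.9%, 1.0%, 0.5%, and 1.3%, which is where the 0.5% to 2% range comes from.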

Another way to look at the human phenotype’s values and preferences is from the viewpoint of selfish gene theory and disposable soma theory. The human brain is arrogant. It thinks it’s in charge, and should be in charge. However, from an evolutionary gene-centered view, the gonads are really where the action is. The ‘immortal germline replicators’ (as Richard Dawkins called them in The Selfish Gene) are carried in testes and ovaries. Everything else in the body is just an evolutionary dead end – it’s a ‘disposable soma’. The somatic cells outside the gonads are just there to protect, nourish, and help replicate the sperm and eggs in the gonads. From that perspective, the brain is just helping the genes in the gonads make more genes in the next generation’s gonads. The brain’s values and preferences may or may not be aligned with the evolutionary interests of the germ-line replicators in the gonads. From a longtermist evolutionary perspective, maybe AI systems should try to be aligned with the interests of the immortal germ-line replicators, not just the transient, disposable brains that evolved to represent their interests. (More on this in another essay.)

How brain-over-body biases can increase AI X-risk

When we think about existential risks from AI, many EAs focus on the dangers of superintelligence growing misaligned from human intelligence, pursuing different abstract goals, and quietly taking over our world through the Internet. Hollywood depictions of Terminator-style robots physically imitating, hunting, and killing human bodies are considered silly distractions from the real business of aligning artificial brains with human brains. Indeed, some EAs believe that if a superintelligence offered a credible way to upload our minds into faster processors, even at the cost of killing our physical human bodies, that would count as a win rather than a loss. In this view, a transhumanist future of post-human minds colonizing the galaxy, without any human bodies, would be considered a victory rather than an AI apocalypse. This is perhaps the strongest example of the EA brain-over-body bias.

You might well be asking, so what if EAs have brain-over-body biases? Does it really matter for AI alignment, and for minimizing existential risks (X risks)? Can’t we just ignore bodies for the moment, and focus on the real work of aligning human brains and artificial brains?

Consider one example from narrow AI safety: self-driving cars. When we’re designing AI systems to safely control our cars, we don’t just want the car’s AI to act in accordance with our brain’s preferences and values. Our number one priority is for the car not to crash in a way that squishes our body so we die. The best way to keep our bodies safe isn’t just for the AI to model our brains’ generic preference for life over death. It’s for the AI system designers to model – in grisly, honest, and biomedically grounded detail – the specific types of crashes that could cause specific kinds of injuries to specific parts of our bodies.

Full AI alignment for self-driving cars would require, at least implicitly, alignment with the hundreds of specific physical vulnerabilities of the specific human bodies that are actually in the car right now. From the perspective of an AI in a self-driving car, given its millisecond-response-rate sensors and multi-gigahertz processors, every crash happens in excruciatingly slow motion. There are plenty of ways to use steering, braking, acceleration, evasive maneuvers, air bag deployment, etc., to influence how the crash plays out and what kinds of injuries it causes to occupants. As a professor, I’d want my car’s AI to manage the crash so it prioritizes protecting my eyes (for reading), my brain (for thinking), and my hands (for typing). But if I’m a professional dancer, I might want it to put a slightly higher priority on protecting my knees, ankles, and spine. If I’m a parent, I might want it to put a higher priority on protecting my baby in their right rear car seat than on protecting me in the front left driver’s seat. If I’m driving my elderly parent around, and the AI knows from their medical records that they recently had their right hip joint replaced, I might want it to put a priority on reducing the crash’s likely impact on that leg. In general, we want self-driving cars to understand our specific body values and vulnerabilities, not just our brain values. These body values cannot be reduced to the kinds of hypothetical trolley problems that ask for people’s stated preferences about the acceptability of harming different kinds of car occupants and pedestrians (e.g. this.)
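To make the idea of occupant-specific body values a bit more concrete, here is a toy sketch of how a crash-management policy might weigh them. Everything in it is a hypothetical assumption for illustration: the body regions, the weights for a professor versus a dancer, the two maneuvers, and the injury-risk numbers are all invented, and no real self-driving system is claimed to work this way.

```python
# Hypothetical sketch: choose the crash maneuver with the lowest
# occupant-weighted expected injury. Every number here is invented
# for illustration; none comes from real crash or medical data.

# How much each occupant cares about protecting each body region (higher = more).
PROFESSOR = {"eyes": 3.0, "brain": 3.0, "hands": 2.0, "knees": 1.0, "spine": 1.5}
DANCER = {"eyes": 2.0, "brain": 3.0, "hands": 1.0, "knees": 3.0, "spine": 3.0}

# Assumed injury risk (0 to 1) that each maneuver imposes on each body region.
MANEUVERS = {
    "brake_hard": {"eyes": 0.10, "brain": 0.20, "hands": 0.30, "knees": 0.05, "spine": 0.10},
    "swerve_left": {"eyes": 0.05, "brain": 0.10, "hands": 0.10, "knees": 0.40, "spine": 0.30},
}


def weighted_injury(risks, weights):
    """Sum each region's injury risk, scaled by how much the occupant values it."""
    return sum(weights[region] * risk for region, risk in risks.items())


def safest_maneuver(weights, maneuvers=MANEUVERS):
    """Return the name of the maneuver with the lowest weighted injury score."""
    return min(maneuvers, key=lambda name: weighted_injury(maneuvers[name], weights))


print("professor:", safest_maneuver(PROFESSOR))
print("dancer:", safest_maneuver(DANCER))
```

With these made-up numbers, the same crash calls for different maneuvers depending on whose body is in the seat, which is the sense in which body values are specific to the occupant rather than generic.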

Narrow AI systems for biomedical applications also need to understand body values. These could include AI-controlled surgery robots, autonomous ambulances, robotic health care workers, telehealth consultants, etc. In each case, the AI doesn’t just need to model human preferences (e.g. ‘I don’t want to die please’); it also needs to actually understand the human body’s thousands of adaptations at a very granular, biological level that can guide its medical interventions. This would include, for example, the AI needing to model the goal-directed homeostatic mechanisms that control blood pressure, blood sugar, body temperature, fluid balance, extracellular pH levels, etc.

Similar issues arise with the safety of narrow AI systems controlling industrial robots with human workers’ bodies nearby, or controlling military weapons systems with civilian bodies nearby. We want the AI systems to be aligned with all the organs, tissues, and cells of all the human bodies nearby, not just with the conscious values in their brains.

Military applications could be especially worrisome, because the better a benevolent AI system can get aligned with human body values and vulnerabilities, the more easily a hostile AI system could copy and invert those body values, treating them as vulnerabilities, in order to impose injury or death in precisely targeted ways. Consider scene 86 in Terminator 2: Judgment Day (1991), when the ‘good’ T-800 Terminator, played by Arnold Schwarzenegger, is suturing Sarah Connor’s stab wounds that were inflicted by the misaligned, liquid metal T-1000. Reassuring her about his biomedical knowledge, the T-800 says ‘I have detailed files on human anatomy’. Sarah says ‘I’ll bet. Makes you a more efficient killer, right?’. He says ‘Correct’. Detailed understanding of human body values can be used both to inflict maximum damage, and to offer maximally effective medical care.

When AI alignment researchers think about X risks to humanity, there’s a tendency to ignore these kinds of body values, and to treat our human interests way too abstractly. Mostly, ordinary folks just want the AI systems of the future not to kill their bodies. They don’t want the AI to do a drone strike on their house. They don’t want it to turn their bodies into paperclips. They don’t want it to use thermonuclear weapons on their bodies. Alignment with our brain values is often secondary to alignment with our body values.

Note that this argument holds for any future situation in which our minds are grounded in any substrate that could be viewed as a sort of ‘physical body’, broadly construed, and that’s vulnerable to any sort of damage. If our heads are cryopreserved in steel cylinders at the Alcor facilities in Arizona, then those cylinders are our new bodies, and we would want AI guardians watching over those bodies to make sure that they are safe against physical attack, cybersecurity threats, financial insolvency, and ideological propaganda – for centuries to come. If we’re uploaded to orbital solar-powered server farms, and our minds can’t survive without those computational substrates working, then those server-satellites are our new bodies, and they will have body values that our AI guardians should take into account, and that might be quite different from our mind’s values. So, one failure mode in AI alignment is to focus too much on what our brains want, and not enough on what could mess up our bodies – whatever current or future forms they happen to take.

The concept of body values provides a bridge between narrower issues of AI alignment, and broader issues of AI health and safety. Certainly, avoiding catastrophic damage to the human body seems like a fairly obvious goal to pursue in designing certain autonomous AI systems such as cars or robots. However, embodied values get a lot more numerous, diverse, subtle, and fine-grained than just our conscious preference for AI systems not to break our bones or crush our brains.

Can we expand the moral circle from brains to bodies?

Maybe one approach to incorporating body values into AI alignment research is to keep our traditional EA consequentialist emphasis on sentient well-being, and simply expand our moral circle from brains to bodies. This could involve thinking of bodies as a lot more sentient than we realized. (But, as we’ll see, I don’t think that really solves the problem of body values.)

Peter Singer famously argued in a 1981 book that a lot of moral progress involves humans expanding the ‘moral circle’ of who’s worthy of moral concern – e.g. from the self, to family members, to the whole tribe, to the whole human species, to other species.

Post hoc, from our current sentientist perspective, this looks like a no-brainer – it’s just a matter of gradually acting nicer towards more and more of the beings that are obviously sentient. However, historically, when these moral battles were being fought, expanding the moral circle often seemed like a matter of expanding the definition of sentience itself. How to do so was usually far from obvious.

To a typical animal with a high degree of nepotism (concern for close blood relatives, due to kin selection), but no tribalism (concern for other group members, due to reciprocal altruism and multi-level selection), blood relatives may seem sentient and worthy of moral concern, but non-relatives may not. To a prehistoric hunter-gatherer, people within one’s tribe may seem sentient, but people in other tribes speaking other languages can’t express their values in ways we can understand, so they are typically dehumanized as less than sentient. To a typical anthropocentric human from previous historical eras, all humans might be considered sentient, but nonhuman animals were usually not, because they can’t even express their preferences in any language. Expanding the moral circle often required rethinking what sentience really means, including which kinds of beings have morally relevant preferences, interests, and values, and how those values are mentally represented within the individuals and articulated to other individuals.

Let’s zoom in from moral circle expansion at the grand scale, and consider the individual scale.

The moral circle is centered on the ‘self’. But what is this ‘self’? What parts of the self should be included in the moral circle? Only the parts of the cerebral cortex that can verbally articulate the brain’s interests through stated preferences? Or should we also include parts of the brain that can’t verbally state their preferences, but that can guide behavior in a way that reveals implicit preferences? Does the ethically relevant self include only the cerebrum, or does it also include the revealed preferences of the diencephalon, midbrain, and pons? Does the self include spinal reflexes, sensory organs, the peripheral nervous system, the autonomic nervous system, and the enteric nervous system? Does the self include the rest of our body, beyond the nervous system?

Sentience seems easy to spot where we’re looking at central nervous systems like vertebrate brains. Those kinds of brains embody preferences that clearly guide movement towards some kinds of stimuli and away from other kinds of stimuli, and that generate reward and punishment signals (pleasures and pains) that clearly guide reinforcement learning.

However, sentience gets trickier to spot when we’re looking at, say, the gut’s enteric nervous system, which can operate independently of the brain and spinal cord. This system coordinates digestion, including peristalsis, segmentation contractions, and secretion of gastrointestinal hormones and digestive enzymes. The enteric nervous system uses more than 30 neurotransmitters, and contains about 90% of the body’s serotonin and 50% of the body’s dopamine. It includes some 200-600 million neurons, distributed throughout two major plexuses (the myenteric and submucosal plexuses), and thousands of small ganglia. Its complexity is comparable to that of central nervous systems in other species that EAs generally consider sentient – e.g. zebrafish have about 10 million neurons, fruit bats have about 100 million, pigeons have about 300 million, octopuses have about 500 million. Moreover, the enteric nervous system can do a variety of learning and memory tasks, including habituation, sensitization, long term facilitation, and conditioned behavior. Should the enteric nervous system be considered sentient? I don’t know, but I think it has some implicit, evolved preferences, values, and homeostatic mechanisms that we might want AI systems to become aligned with.

EA consequentialism tends to assume that ethically relevant values (e.g. for AI alignment) are coterminous with sentience. This sentientism gets tricky enough when we consider whether non-cortical parts of our nervous system should be considered sentient, or treated as if they embody ethically relevant values. It gets even trickier when we ask whether body systems outside the nervous system, which may not be sentient in most traditional views, carry values worth considering.

Do bodies really have ‘values’?

You might be thinking, OK, within the ‘self’, maybe it’s reasonable to expand the moral circle from the cerebral cortex to subcortical structures like the diencephalon, midbrain, pons, and to the peripheral, autonomic, and enteric nervous systems. But shouldn’t we stop there? Surely non-neural organs can’t be considered to be sentient, or to have ‘values’ and ‘preferences’ that are ethically relevant?

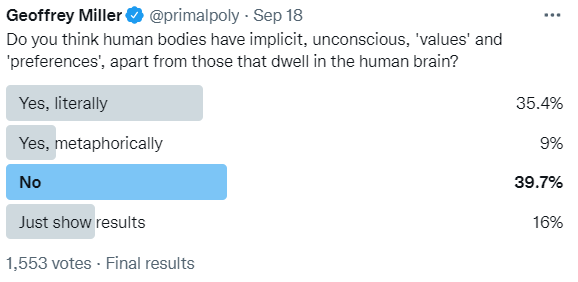

My intuitions are mixed. I can see both sides of this issue. When that happens, I often run a Twitter poll to see what other folks think. On Sept 18, 2022, I ran this poll, with these results (in this screenshot):

My typical follower is a centrist American male, and only about 1% of my followers (1,553 out of 123,900) responded to this poll. This is far from a globally representative sample of humans, and this poll should not be taken too seriously as data. Its only relevance here is in showing that people have quite mixed views on this issue. Many (35%) think human bodies do, literally, have implicit, unconscious values and preferences. Many others (40%) think they do not. Some (9%) think they do metaphorically but not literally. Let’s see if there’s any sense in which bodies might embody values, whether literally or metaphorically.

Embodied goals, preferences, and motivations

In what possible sense does the human body have values that might be distinct from the brain’s conscious goals or unconscious preferences? Are non-sentient, corporeal values possible?

In control theory terms, a thermostat has designed-in ‘goals’ that can be understood through revealed preferences, e.g. ‘trying’ to keep a house within a certain temperature range. The thermostat does not need to be fully sentient (capable of experiencing pleasure or pain) to have goals.

If the thermostat can be said to have goals, then every homeostatic mechanism in the body also has ‘goals’, evolved rather than designed, that can be understood through analyzing the body’s revealed preferences (e.g. ‘trying’ to keep body temperature, blood glucose, estradiol, and muscle mass within certain optimal ranges). Thus, we can think of the body as a system of ‘embodied motivations’ (values, preferences, goals) that can be understood through an evolutionary, functional, adaptationist analysis of its organs, tissues, and cells.
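The thermostat analogy can be sketched in a few lines of code. This is a minimal illustration, under assumed numbers, of a negative-feedback loop whose ‘goal’ can be read off from its behavior alone. The set point of 37.0, the gain, and the function names are all illustrative assumptions, not a model of real thermoregulation.

```python
# Minimal sketch of a homeostatic "goal" as negative feedback.
# The set point, gain, and starting values are illustrative assumptions,
# not physiological measurements.


def regulate(value, set_point, gain=0.5):
    """One feedback step: move `value` part of the way back toward `set_point`."""
    return value + gain * (set_point - value)


def simulate(start, set_point, steps=30, gain=0.5):
    """Run the feedback loop and return the full trajectory of values."""
    trajectory = [start]
    for _ in range(steps):
        trajectory.append(regulate(trajectory[-1], set_point, gain))
    return trajectory


def revealed_set_point(trajectory, tail=5):
    """Infer the system's 'preference' from behavior alone: the value it settles at."""
    recent = trajectory[-tail:]
    return sum(recent) / len(recent)


# Perturbed in either direction, the system returns to the same value,
# so an observer can estimate the set point without being told it.
too_warm = simulate(start=39.5, set_point=37.0)
too_cold = simulate(start=35.0, set_point=37.0)

print(round(revealed_set_point(too_warm), 3))
print(round(revealed_set_point(too_cold), 3))
```

The point of `revealed_set_point` is that it never looks at the `set_point` argument. It recovers the ‘goal’ purely from where the trajectory ends up, which is roughly what a revealed-preference analysis of a homeostatic mechanism would do.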

There’s an analogy here to the concept of ‘embodied cognition’ – the idea that a lot of our goal-directed behavior doesn’t just arise from the brain in isolation, but depends on an adaptive interplay between brain, body, and environment, and cannot be understood accurately without explicitly considering the specific physical features and capabilities of bodies.

For example, a standard cognitivist approach to understanding hunger and goal-directed eating might focus on the brain’s mental representations of hunger stimuli, whereas an embodied cognition approach would also talk explicitly about the structure, physiology, and innervation of the stomach and gut, the release and uptake of hunger-related hormones leptin and ghrelin, and the interaction between the gut microbiome and the human host body.

Here, I’m arguing that if we consider the entire human phenotype – body, brain, and behavior – then a lot of our human values are highly embodied. We could call this the domain of embodied volition, embodied motivation, or embodied values. (I use the terms ‘body values’, ‘embodied values’, and ‘corporeal values’ more or less interchangeably in this essay.)

Just as the field of embodied cognition has developed new terms, ideas, theories, and models for understanding how the brain/body system as a whole processes information and guides behavior, a field of ‘embodied values’ might need to develop new terms, ideas, theories, and models for understanding how the brain/body system as a whole pursues certain preferences, values, and goals – especially if we want to build AI systems that are aligned with the full range of our embodied values.

Aligning with embodied values requires detailed, evolutionary, functional analysis of bodily adaptations

Imagine we take seriously the idea that AI alignment should include alignment with embodied values that might not be represented in the nervous system the way that more familiar sentient values are. How do we proceed?

With brain values, we can often just ask people what they want, or have them react to different options, or physically demonstrate what they’d prefer. We don’t need a detailed functional understanding of where those brain values come from, how they work, or what they’re good for.

However, with body values, we can’t just ask what our gut microbiome wants, what our liver wants, or what our anti-cancer defenses want. We need to actually do the evolutionary biology and evolutionary medicine. AI alignment with body values would require AI to model everything we learn about how human bodies work.

If this argument is correct, it means there may not be any top-down, generic, all-purpose way to achieve AI alignment until we have a much better understanding of the human body’s complex adaptations. If Artificial General Intelligence is likely to be developed within a few decades, but if it will take more than a few decades to have a very fine-grained understanding of body values, and if body values are crucial to align with, then we will not achieve AGI alignment. We would need, at minimum, a period of Long Reflection focused on developing better evolutionary medicine models of body values, before proceeding with AGI development.

Aligning with embodied values might also require different input/output channels for AI systems. We’re used to thinking that we’ll just communicate with AI systems through voice, keyboard, face, and gesture – all under the brain’s voluntary control. However, alignment with body values might require more intrusive biomedical sensors that actually track the interests and well-being of various bodily systems. People involved in the ‘quantified self’ movement already try to collect a lot of this kind of data, using sensors that might be useful to AI systems. Whether we would want AI systems to be able to directly affect our physiology – e.g. through direct control over pharmaceuticals, hormones, or other biomedical interventions – is an open question.

What difference would it make if AI alignment considered embodied values?

What are some examples where an ‘embodied-values’ approach to AI alignment would differ from a standard ‘brain-values-only’ approach?

1. Caring for the microbiome. The human body hosts a complex microbiome – an ecology of hundreds of different species of microscopic organisms, such as bacteria, found throughout our skin, hair, gut, and other organs. Human health depends on a healthy microbiome. But the microbiome doesn’t have a brain, and can’t state its preferences. It has different DNA than we do, and different genetic interests. Human brains didn’t even know that human bodies contained microbiomes until a few decades ago. And medicine didn’t understand the microbiome’s importance until after the 1980s, when Barry Marshall showed that Helicobacter pylori can cause ulcers (and then got the Nobel prize in 2005). If an AI system is aligned with the human brain, but it ignores the microbiome hosted within the human body, then it won’t be aligned with human interests (or the microbiome’s interests).

2. Caring for a fetus. Female human bodies can get pregnant, and a lot of adaptive physiology goes on in pregnancy between the mother’s body, the uterine lining, the placenta, and the fetus, that is not consciously accessible to the mother’s brain. Yet the outcome of the adaptive physiology in pregnancy matters enormously to pregnant mothers. It can make the difference between a miscarriage (spontaneous abortion), a stillbirth, and a healthy baby. For an AI system to be fully aligned with a pregnant mother’s values and interests, it should be able to represent and care for the full range of physiological dynamics happening within her reproductive system and her offspring.

3. Protecting against cancer. Cells in the human body often undergo spontaneous mutations that turn them into runaway replicators, i.e. cancer cells, that develop ‘selfish’ agendas (reproduce and spread everywhere) that are contrary to the body’s general long-term interests. In response, bodies have evolved many anti-cancer defenses that embody the revealed preference of ‘try not to die of cancer, especially when young’. Most human brains have no idea that this arms race between incipient cancers and anti-cancer defenses is going on, every day, right under our noses. Yet the body has genuine ‘embodied values’ to avoid runaway cancer growth that would undermine survival and reproduction. Any AI system that doesn’t track exposure to carcinogenic chemicals, incipient cancers, and the state of anti-cancer defenses wouldn’t really be aligned with the body’s embodied value of reducing cancer risk.

4. Promoting longevity. Human bodies evolved to live surprisingly long lives, even by the long-lived standards of mammals and social primates. Our bodies include lots of anti-aging adaptations designed to extend our survival and reproductive longevity. The evolutionary biology subfield of life history theory, including senescence theory, models how our longevity adaptations evolve, and how we developed embodied values to promote longer life-spans. Our brains also evolved to promote longevity, but they tend to do so by perceiving external threats such as predators, parasites, pathogens, and aggressive rivals, and coordinating behaviors to avoid or overcome those threats. Our brains didn’t evolve to track the hundreds of other longevity-promoting adaptations inside our bodies, which don’t require external sensory perception or coordinated whole-body behaviors to cope with. Thus, there’s a gap between what our brains think is crucial to longevity (e.g. avoid getting eaten by predators, avoid getting into fights with psychopaths), and what our bodies think is crucial to longevity (e.g. eating nutritious foods, preserving the microbiome, exercising enough to maintain muscles and bones, etc.). Often, there are conflicts of interest between what the brain wants (e.g. more donuts) and what our embodied longevity values would want (e.g. avoid donuts, eat leafy greens). Of course, among humans who happen to absorb accurate nutritional insights from medical research, their brains might internally represent this conflict between valuing donuts and valuing leafy greens. But not everyone has gotten the message – and historically, much of the public nutrition advice has been based on bad science, and is not actually aligned with the body’s long-term interests. Thus, there can be cases where our embodied longevity values deviate dramatically from what our brains think they want.
So, which should our AI systems align with – our brains’ revealed preferences for donuts, or our bodies’ revealed preferences for leafy greens?

Benefits of considering embodied values in AI alignment

I think there are several good reasons why AI alignment should explicitly try to integrate embodied values into alignment research.

First, handling the full diversity of human types, traits, and states. We might want AI systems that can align with the full range of humans across the full range of biological and psychological states in which we find them. At the moment, most AI alignment seems limited to incorporating goals and preferences that physically healthy, mentally healthy, awake, sentient adults can express through voluntary motor movements such as through the vocal tract (e.g. saying what you want), fingers (e.g. typing or clicking on what you want), or larger body movements (e.g. showing a robot how to do something). This makes it hard for AI systems to incorporate the embodied values and preferences of people who are asleep, in a coma, under general anesthetic, in a severely depressed state, in a state of catatonic schizophrenia, on a psychedelic trip, or suffering from dementia, or who are preverbal infants. None of these people are in a condition to do cooperative inverse reinforcement learning (CIRL), or most of the other proposed methods for teaching AI systems our goals and preferences. Indeed, it’s not clear that the brains of sleeping, comatose, or catatonic people have ‘goals and preferences’ in the usual conscious sense. However, their bodies still have revealed preferences, e.g. to continue living, breathing, being nourished, being safe, etc.

Second, the brain’s conscious goals often conflict with the body’s implicit biological goals. Let’s consider some examples where we might really want the AI system to take the body’s goals into account. Assume that we’re dealing with cases a few years in the future, when the AI systems are general-purpose personal assistants, and they have access to some biomedical sensors on, in, or around the body.

Anorexia. Suppose an AI system is trying to fulfil the preferences of an anorexic teenaged girl: her brain might say ‘I’m overweight, my body is disgusting, I shouldn’t eat today’, but her body might be sending signals that say ‘If we don’t eat soon, we might die soon from electrolyte imbalances, bradycardia, hypotension, or heart arrhythmia’. Should the AI pay more attention to the girl’s stated preferences, or her body’s revealed preferences?

Suicidal depression. Suppose a college student has failed some classes, his girlfriend broke up with him, he feels like a failure and a burden to his family, and he is contemplating suicide. His brain might be saying ‘I want to kill myself right now’, but his body is saying ‘Actually every organ other than your brain wants you to live’. Should the AI fulfill his brain’s preferences (and help arrange the suicide), or his body’s preferences (and urge him to call his mom, seek professional help, and remember what he has to live for)? Similar mismatches between what the brain wants and what the body wants can arise in cases of drug addiction, drunk driving, extreme physical risk-taking, etc.

Athletic training. Suppose AI/robotics researchers develop life-sized robot sparring partners for combat sports. A woman has a purple belt in Brazilian jujitsu (BJJ), and she’s training for an upcoming competition. She says to her BJJ sparring robot ‘I need a challenge; come at me as hard as you can bro’. The robot’s AI needs to understand not just that the purple belt is exaggerating (doesn’t actually want it to use its full strength); it also needs a very accurate model of her body’s biomechanics, including the locations, strengths, and elasticities of her joints, ligaments, sinews, muscles, and blood vessels, when using BJJ techniques. If the robot gets her in a joint lock such as an arm bar, it needs to know exactly how much pressure on her elbow will be too little to matter, just enough to get her to tap out, or too much, so she gets a serious elbow strain or break. If it gets her in a choke hold such as a triangle choke, it needs to understand exactly how much pressure on her neck will let her escape, versus lead her to tap out, versus compress her carotid artery to render her unconscious, versus kill her. She may have no idea how to verbally express her body’s biomechanical capabilities and vulnerabilities to the robot sparring partner. But it better get aligned with her body somehow – just as her human BJJ sparring partners do. And it better not take her stated preferences for maximum-intensity training too seriously.

Cases where AI systems should prioritize brain values over body values

Conversely, there may be cases where a person (and/or their friends and family members) might really want the AI to prioritize the brain’s values over the body’s values.

Terminal disease and euthanasia. Suppose someone has a terminal disease and is suffering severe chronic pain. Their life is a living hell, and they want to go. But their body is still fighting, and showing revealed preferences that say ‘I want to live’. Advance care directives (‘living wills’) are basically legally binding statements that someone wants others to prioritize their brain values (e.g. stop suffering) over their body values – and we might want AI biomedical care systems to honor those directives.

Cryopreservation and brain uploading. Suppose someone elderly is facing a higher and higher chance of death as they age. Their brain would prefer for their body to undergo cryopreservation by Alcor, or whoever, in hopes of eventual resuscitation and anti-aging therapies. But their body still works mostly OK. Should their AI system honor their cryopreservation request – even if it results in technical death by legal standards? Or, further in the future, the brain might want to be uploaded through a whole-brain emulation method. This would require very fine-scale dissection and recording of brain structure and physiology, that results in the death of the body. Should the AI system concur with destructive dissection of the brain, contrary to the revealed preferences of the body?

Self-sacrifice. People sometimes find themselves in situations where they can save others, at the possible cost of their own life. Heroic self-sacrifice involves the brain’s altruism systems overriding the body’s self-preservation systems. Think of soldiers, firefighters, rescue workers, and participants in high-risk clinical trials. Should the AI side with the altruistic brain, or the self-preserving body? In other cases, someone’s brain might be willing to sacrifice their body for some perceived greater good – as in the case of religious martyrdom. Should an AI allow a true believer to do a suicide bombing, if the martyrdom is fully aligned with their brain’s values, but not with their body’s revealed preferences?

Conclusion

I’ve argued for a bottom-up, biologically grounded approach to AI alignment that explicitly addresses the full range and variety of human values. These values include not just stated and revealed values carried in the central nervous system, but evolved, adaptive goals, preferences, and values distributed throughout the human body. EA includes some brain-over-body biases that make our body values seem less salient and important. However, the most fundamental challenge in AI safety is keeping our bodies safe, by explicitly considering their values and vulnerabilities. Aligning to our brain values is secondary.

I am deeply impressed by the amount of ground this essay covers so thoughtfully. I have a few remarks. They pertain to Miller's focal topic as well as avoiding massive popular backlash against general AI and limited, expert system AI, backlash that will make current resistance against science and human "expertise" in general look pretty innocuous. I close with a remark on alignment of AI with animal interests.

I offer everyone an a priori apology if this comment seems pathologically wordy.

I think that AI alignment with the interests of the body is quite essential to achieving alignment with human minds; probably necessary but not sufficient. Cross-culturally, regardless of superficial and (anyway) dynamic differences in values amongst cultures, humans generally have a hard time being happy and content if they are worried about their bodily well-being. Sicknesses of all kinds lead to invasive thoughts and emotions amounting to existential dread for most people, even the likes of Warren Zevon.

The point is that I know that I, and probably most people across cultures, would be delighted to have a human doctor or nurse walk into an exam or hospital room with a pleasant-looking robot that we (the patients) truly perceived, correctly so, to possess general diagnostic super-intelligence based on deep knowledge of the healthy functioning of every organ and physiological system in the human body. Personally, I've never had the experience that any doctor of mine, including renowned specialists, had much of a clue about any aspect of my biology. I'd also feel better right now if I knew there was an expert system that was going to be in charge of my palliative care, which I'll probably need sooner rather than later, a system that would customize my care to minimize my physical pain and allow me to die consciously, without irresistible distraction from physical suffering. Get to work on that, please.

Such a diagnostic AI system, like a deeply respected human shamanic healer treating a devout in-group religious follower, would even be capable of generating a supernormal placebo effect (current Western medicine and associated health-insurance systems most often produce strong nocebo effects, ugh), which it seems clear would be based on nonconscious mental processes in the patient. (I think one of the important albeit secondary adaptive functions of religions is to produce supernormal placebo effects; I have a hypothesis about why placebo effects exist and why religious healers in spiritual alignment with their patients are especially good at evoking them, a topic for a future essay.) The existence of placebo effects, and their opposite, is good evidence that AI alignment with body is somewhat equivalent to alignment with mind.

Truly perceived is important. That is one reason I recommend that a relaxed and competent human health professional accompany the visiting AI system. Even though the AI itself may speak to the patient, it is important to have this super-expert system, perhaps limited in its ability to engage emotionally with the patient (like the Hugh Laurie character, "House") be gazed upon with admiration and a bit of awe by the human partner during any interaction with the patient. The human then at least competently pretends to understand the diagnosis and promises the patient to promptly implement the recommended treatment. They can also help answer questions the patient may have throughout the encounter. An appropriate religious professional could also be added to the team, as needed, as long as they too show deep respect for the AI system.

I think a big part of my point is that when an AI consequentially aligns with our bodies, it thereby engenders a powerful "pre-reflective" intimacy with the person. This will help preempt reflective objections to the existence and activities of any AI system. And this will work cross-culturally, with practically everyone, to ameliorate the alignment problem, at least as humans perceive it. It will promote AI adoption.

Stepping back a moment, as humans evolved the cognitive capacities to cooperate in large groups while preserving significant degrees of individual sovereignty (e.g., unlike social insects) and then promptly began to co-evolve capacities to engage in the quintessentially human cross-cultural way of life I'll call "complex contractual reciprocity" (CCR), a term better unpacked elsewhere, we also had to co-evolve a strong hunger for externally-sourced maximally authoritative moral systems, preferably ones perceived as "sacred." (Enter a long history of natural selection for multiple cognitive traits favoring religiosity.) If it's not from a sacred external source, but amounts to some person's or subculture's opinion, then argument and instability, and the risk of chaos, are going to be on everyone's minds. Durable, high degrees of moral alignment within groups (whose boundaries can, under competent leadership, adaptively expand and contract) facilitate maximally productive CCR, and that is almost synonymous with high, on average, individual lifetime inclusive fitness within groups.

AI expert systems, especially when accompanied by caring compassionate human partners, can be made to look like highly authoritative, externally-sourced fountains of sacred knowledge related to fundamental aspects of our well-being. Operationally here, sacred means minimally questionable. As humans we instinctively need the culturally-supplied contractual boilerplate, our group's moral system (all about alignment), and other forms of knowledge intimately linked to our well-being to be minimally questionable. If a person feels like an AI system is BOTH morally aligned with them and their in-group, and can take care of their health practically like a god, then from the human standpoint, alignment doubts will be greatly ameliorated.

Finally, a side note, which I'll keep brief. Having studied animals in nature and in the lab for decades, I'm convinced that they suffer. This includes invertebrates. However, I don't think that even dogs reflect on their suffering. (Using meditative techniques, humans can get access to what it means to have a pre-reflective yet very real experience.) Anyway, for AI to ever become aligned with animals, I think it's going to require that the AI aligns with their whole bodies, not just their nervous systems or particular ganglia therein. Again, this is because with animals the AI is facing the challenge of ameliorating pre-reflective suffering. (I'd say most human suffering, because of the functional design of human consciousness, is on the reflective level.) So, by designing AI systems that can achieve alignment with humans in mind and body, I think we may simultaneously generate AI that is much more capable of tethering to the welfare of diverse animals.

Best wishes to all, PJW

Great post, Dr. Miller! I'll be curious what you think of my thoughts here!

And we view many blue-collar jobs as historically transient, soon to be automated by AI and robotics – freeing human bodies from the drudgery of actually working as bodies. (In the future, whoever used to work with their body will presumably just hang out, supported by Universal Basic Income, enjoying virtual-reality leisure time in avatar bodies, or indulging in a few physical arts and crafts, using their soft, uncalloused fingers)

This makes me recall Brave New World by Aldous Huxley. Specifically, the use of "Soma" - a drug that everyone takes to feel pleasure, so they never have to feel pain. Something seems off about imagining people living their lives in virtual reality in an everlasting "pleasurable" state. I think I find this unsettling because it does not fit into my conception of a good life (the point of Brave New World). There is something powerful and rewarding in facing reality and going through a bit of pain. Although I have not explored the AI alignment problem in depth, there seems to be some neglect of the value of pain (I’m using "pain" in a relatively broad, nonserious manner here). For instance, the pain of working an 8-hour shift moving boxes in a warehouse. This is an interesting idea to consider: Paul Bloom in The Sweet Spot reviews how there is frequently pleasure in pain. This might not be super problematic if there is pain that is inherently pleasurable, but the situation gets sticky when pain is only interpreted as worthwhile after the fact. Yet another layer of complexity.

EA consequentialism tends to assume that ethically relevant values (e.g. for AI alignment) are coterminous with sentience. This sentientism gets tricky enough when we consider whether non-cortical parts of our nervous system should be considered sentient, or treated as if they embody ethically relevant values. It gets even trickier when we ask whether body systems outside the nervous system, which may not be sentient in most traditional views, carry values worth considering.

I do think that the AI alignment problem becomes infinitely complex if we consider panpsychism. Or if we consider dualism for that matter. What if everything has subjective experience? What if subjective experience isn't dependent on the body? Or as you suggest "Are non-sentient, corporeal values possible?"

Although I (mostly) agree with many of the assumptions EAs make (as I see them: reductive materialism, the existence of objective truth), I agree that there is a lot of neglect of a wide array of beliefs and values.

Another problem that I see is that if we are considering reductive materialism to be correct, consciousness could be obtained by AI. If consciousness can be obtained by AI, we have created a system with values of its own. What do we owe to these new beings we have created? It is possible that the future is populated primarily by AI because their subjective experience is qualitatively better than humans' subjective experience.

The thermostat does not need to be fully sentient (capable of experiencing pleasure or pain) to have goals.

I partially disagree with this. As Searle points out, these machines do not have intentionality and lack a true understanding of what "they" are doing. I think I can grant that the thermostat has goals, but the thermostat does not truly understand those goals, because it lacks intentionality.

That said, I don't think this point is central to your argument. I think we can get to the inherent value of the body because of its connection with the brain - and therefore the mind (subjective experience). Thus, the values of the body are only valuable because the body mediates the subjective experience of the person.

If this argument is correct, it means there may not be any top-down, generic, all-purpose way to achieve AI alignment until we have a much better understanding of the human body’s complex adaptations. If Artificial General Intelligence is likely to be developed within a few decades, but if it will take more than a few decades to have a very fine-grained understanding of body values, and if body values are crucial to align with, then we will not achieve AGI alignment. We would need, at minimum, a period of Long Reflection focused on developing better evolutionary medicine models of body values, before proceeding with AGI development.

I think this is largely pointing out that EAs have failed to consider that some people might not be comfortable with the idea of uploading their brain (mind) into a virtual reality device. I personally find this idea absurd; I don't have any urges to be immortal. Ah, I run into so many problems trying to think this through. What do we do if people consider their own demise valuable?

If an AI system is aligned with the human brain, but it ignores the microbiome hosted within the human body, then it won’t be aligned with human interests (or the microbiome’s interests).

Perhaps I do disagree with your conclusion here...

Following your above logic, I find it likely that AGI would be developed before we have a full understanding of the human body. I don't agree that it is necessary to have a full understanding of the human body for AGI to be generally aligned with the values of the body. It might be possible for AGI to be aligned with the human body in a more abstract way. "The body is a vital part of subjective experience, don't destroy it." Then, theoretically, AGI would be able to learn everything possible about the body to truly align itself with that interest. (Maybe this idea is impractical from the side of creating the AGI?)

So, which should our AI systems align with – our brains’ revealed preferences for donuts, or our bodies’ revealed preferences for leafy greens?

Could an AGI transcend this choice? Leafy greens that taste like donuts? Or donuts that have the nutritional value of leafy greens?

Regardless of this, I do get your point about conflicting values between the body and brain. I was mostly considering the values of the body and brain as highly compatible with each other. Not sure what to do about the frequent incongruities.

She may have no idea how to verbally express her body’s biomechanical capabilities and vulnerabilities to the robot sparring partner. But it better get aligned with her body somehow – just as her human BJJ sparring partners do. And it better not take her stated preferences for maximum-intensity training too seriously.

"But it better get aligned with her body somehow" is a key point for me here. If the AGI has the general notion to not hurt human bodies, it might be possible that the AGI would just use caution in this situation. Or even refuse to play because it understands the risk. This is to say, there might be ways for AGI to be aligned with the body values without it having a complete understanding of the body. Although, a complete understanding would be best.

On one hand, I agree with the sentiment that we need to consider the body more! On the other hand, I'm not positive that we need to completely understand the body to align AGI with the body. Although it seems to be a logical possibility that understanding the body isn't necessary for AGI alignment, I'm not sure if it is a practical point.

Please provide some pushback! I don't feel strongly about any of my arguments here; I know there is a lot of background that I'm missing out on.

Amazing. Thank you