Does some kind of consolidated FAQ addressing critiques of effective altruism exist?[1] That is my question in one sentence. The following paragraphs are just context and elaboration.

Every now and then I come across some discussion in which effective altruism is critiqued. Some of these critiques are of the EA community as it currently exists (such as a critique of insularity) and some are critiques of deeper ideas (such as inherent difficulties with measurement). But in general I find them to be fairly weak critiques, and often they suggest that the person doesn't have a strong grasp of various EA ideas.

I found myself wishing that there was some sort of FAQ (A Google Doc? A Notion page?) that could easily be linked to. I'd like to have something better than telling people to read these three EA Forum posts, and this Scott Alexander piece, and those three blogs, and these fourteen comment threads.

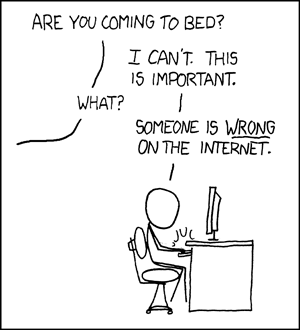

While I don't endorse anyone falling down the rabbit hole of arguing on the internet, it would be nice to have a consolidated package of common critiques and responses all in one place. It would be helpful for refuting common misconceptions. I found Dialogues on Ethical Vegetarianism to be a helpful consolidation of common claims and rebuttals, and some blogs have served a similar purpose for the development/aid world. I'd love to have something similar for effective altruism. If nothing currently exists, then I might end up creating a shared resource with a bunch of links to serve as this sort of FAQ.

Below are a few quotes from different people in a recent online discussion I observed, which sparked this idea of a consolidated FAQ. I have made minor changes in wording to preserve anonymity.[2] Many of these are not particularly well-thought-out critiques, and they suggest that the writers have a fairly inaccurate or simplified view of EA.

> Any philosophy or movement with loads of money and that preaches moral superiority and is also followed by lots of privileged white guys lacking basic empathy I avoid like the plague.

> EA is primarily a part of the culture of Silicon Valley's wealthy tech people who want warm fuzzy feelings and to feel like they're doing something good. Many charity-evaluating organizations existed before EA, so it is not a concept that Effective Altruism created.

> Lives saved per dollar is a very myopic and limiting perspective.

> EA has been promoted by some of the most ethically questionable individuals in recent memory.

> Using evidence to maximize positive impact has been at the core of some horrific movements in the 20th century.

> Improving the world seems reasonable in principle, but who gets to decide what counts as positive impact, and who gets to decide how to maximize those criteria? Will these be the same people who amassed resources through exploitation?

> One would be hard pressed to find specific examples of humanitarian achievements linked to EA. It is capitalizing on a philosophy more than implementing it. And philosophically it’s pretty sophomoric: just a bare-bones Anglocentric utilitarianism. So it isn't altruistic or effective.

> In a Marxist framework, in order to amass resources you exploit labour and do harm. So wouldn't it be better to not do harm in amassing capital, rather than 'solve' social problems with capital you earned by exploiting people and creating social problems?

> EA is often a disguise for bad behavior without evaluating the root/source problems that created EA: a few individuals having the majority of wealth, which occurred by some people being highly extractive and exploitative toward others. If someone steals your land and donates 10% of their income to you as an 'altruistic gesture' while still profiting from their use of your land, the fundamental imbalance is still there. EA is not a solution.

> It's hard to distinguish EA from the fact that its biggest support (including the origin of the movement) is from the ultra rich. That origin significantly shapes the movement.

> It’s basically rehashed utilitarianism with all of the problems that utilitarianism has always had. But EA lacks the philosophical nuance or honesty.

[1] Yes, I know that EffectiveAltruism.org has a FAQ, but I'm envisioning something more specific and detailed. So perhaps a more pedantic version of my question would be: "Does some kind of consolidated FAQ addressing critiques of effective altruism exist, aside from the FAQ on EffectiveAltruism.org?"

[2] If for some reason you really want to know where I read these, send me a private message and I'll share the link with you.

Here are some caveats/counterpoints:

- EA/OP does give large amounts of resources to areas that others find hard to care about, in a way that does seem more earnest and well-meaning than many other people in society.

- The alternative framework in which to operate is probably capitalism, which is not perfectly aligned with human values either.

- There is no evil mustache-twirling mastermind. To the extent these dynamics arise, they do so out of some reasonably understandable constraints, like having a tight-knit group of people.

- In general it's just prett...

I found one unpopular EA post discussing your last point about the Malthusian risk involved with global health aid in sub-Saharan Africa, and I'm unsure why this topic isn't discussed more frequently on this forum. The post also mentions a study that found that East Africa may currently be in a Malthusian trap, such that a charity contributing to population growth in this region could have negative utility and do more harm than good.