This is a special post for quick takes by Gemma 🔸.

Centre for Effective Altruism and Ambitious Impact (formerly Charity Entrepreneurship) are probably named the wrong way around in terms of what they actually do and IMO this feeds into the EA branding problem.

Why do I think this?

"Effective" Altruism implies a value judgement that requires strong evidence to back up - like launching charities aiming to beat GiveWell benchmarks and raising large amounts of money from donors who expect the charity to show evidence of significant returns in the next 3 years or shut down.

IMO this framing appeals to a wide business and government audience.

"Ambitious Impact" implies more speculative, less easy to measure activities in pursuit of even higher impact returns. My understanding is that Open Philanthropy split from GiveWell because of the realisation that there was more marginal funding required for "Do-gooding R&D" with a lower existing evidence base.

Why do we need "Do-Gooding R&D"?

So we can find better ways to help others in the future.

To use the example of a pharmaceutical company: why don't they reduce the prices of all their currently functional drugs to help more people? So they can fund their expensive hit-based R&D efforts. There are obviously trade-offs, but it's short-sighted to pretend the low-hanging fruit won't eventually be picked.

So what? IMO AIM has outcompeted CEA on a number of fronts (their training is better, their content (if not their marketing) is better, they are agile and improve over time). Probably 80% of the useful and practical things I've learned about how to do effective altruism, I've learned from them.

The AIM folks I've spoken to are frustrated that their results - based on exploiting cost-effective high-evidence base interventions - are used to launder the reputation of OP funded low evidence base "Do-gooding R&D." I think that before you get to work on "Do-Gooding R&D", you should probably learn the current state of Do-Gooding best practices.

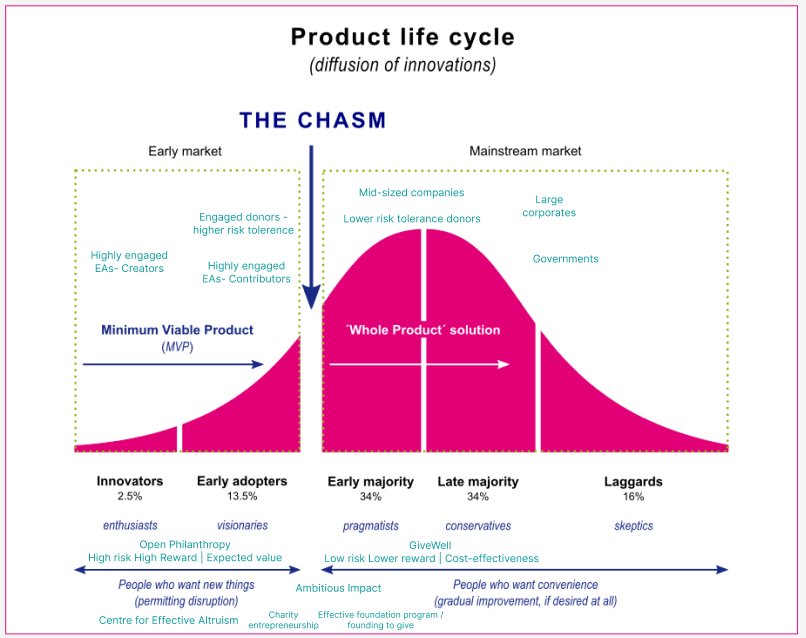

If we think about EA brand as a product, I'd guess we're in "The Chasm" below as the EA brand is too associated with the "weird" stuff that innovators are doing to be effectively sold to lower risk tolerance markets.

This is bad because lower risk tolerance markets (governments etc) are the largest scale funders.

AIM should be the face of EA and should be feeding in A LOT more to general outreach efforts. (Retracted: too strong a take. See this reply to Lorenzo's comment.)

Concrete suggestions

I'm not suggesting they swap names, it's likely locked in at this point, BUT I think they have more in common than they think and are focussing too much on where they differ. Looking at where CEA people actually donate, it looks like they are hedging the higher risk nature of their work with donations to interventions with clearer returns. (Retracted: too strong a take, as highlighted by this comment.)

One solution would be a merger, since there are lots of synergies, but they are too far away from each other in terms of views on cost-effectiveness and funding independence to do so (yet).

I think they should be collaborating more, maybe coworking in the same office sometimes?

My wider take is that EA should become a profession (post building on this one incoming if I can ever actually finish it) so there is better regulation of individuals enabling us to have internally facing competition/innovation/R&D based on shared principles while generating externally facing standards that can be used by non-insiders and allow us to scale what works.

We shouldn't be internally fighting for a bigger slice of the existing pie, we should be demonstrating value externally so we can grow the size of the pie.

I work for CEA but I'm writing this from a personal perspective. Others at CEA may disagree with me.

Thanks for writing this! :) I think it's an interesting argument. I generally agree with everything @Lorenzo Buonanno🔸 said in his comments, so I'll just add a few things here.

I ultimately disagree that CEA should change its name, because EA principles are important to me and I like that we are trying to do good explicitly using the framework of EA (including promoting the framework itself) rather than using a more nebulous framing. I can't speak for AIM, but it does seem like our two organizations have different goals, so in that sense it seems good that we both exist and work towards achieving our own separate goals. For example, I think (just a low confidence guess based on public info) that AIM are not interested in stewarding EA or owning improving the EA brand. CEA is interested in doing those things, and it seems good for us to have "EA" in our name in order to do those things. I think you and I both agree that the EA brand needs improving, and CEA is working on hiring for our Comms Team to have more capacity to do this work.

I think they have more in common than they think and are focussing too much on where they differ.

I'm not sure who "they" are in this sentence. I personally don't think I have done this. I have a very high opinion of AIM overall, and I think that sentiment is common within CEA. I have personally applied to one of their incubation rounds because I thought there was a chance I could do more good there than at CEA. They are one of the orgs that best take advantage of CEA's infrastructure (such as EAG and the EA Forum): they make frequent appearances in our user surveys about how people have found value from those projects. Our team includes AIM's opportunities in our email newsletters and has curated multiple posts by them. I don't personally know the people who run AIM, but from my perspective we are collaborators on the same team.

Looking at where CEA people actually donate, it looks like they are hedging the higher risk nature of their work with donations to interventions with clearer returns.

I think it's hard to use the linked post as evidence to support this. I counted ~4/10 of the CEA employees that responded as falling into that category, and the rest mostly donated to causes that I think you would consider more speculative (at least more than the average AIM charity). Most CEA employees decided not to participate in the public post, and I'm guessing that the ones that did not are more biased towards donating to less legibly cost-effective projects. I think there is also a bit of a theme where people tended to donate to interventions with clearer returns before joining CEA, and at CEA are spending more time considering other donation options (this is broadly true for myself, for example). So there are forces that push in both directions and it's not clear to me what the net result is.

We shouldn't be internally fighting for a bigger slice of the existing pie, we should be demonstrating value externally so we can grow the size of the pie.

Again, I'm not sure who is fighting internally. I guess this is making me worried that AIM views us as fighting with each other? That possibility makes me genuinely quite sad. If anyone at AIM wants to talk with me about it, you're welcome to message me on the Forum.

I'd like to caveat that I'm not sure I got the tone quite right in my original quick take. I'm glad I put it out there, but it is very much based on vibes and is motivated by an impression that there are opportunities for stronger relationships to be built. (Mostly based on conversations with AIM folks but I don't speak for them.)

My vibes-based take looks like it might not be true. There might be more collaboration than I can see, or it might just make sense to grow separately since there are clear differences of opinion on cause prioritisation and approach to cost-effectiveness. CEA is also beholden to one funder, which makes it much harder to be independent from that funder's views.

To be clear I think everyone involved cares deeply, is competent and is, very reasonably, prioritising other things.

I ultimately disagree that CEA should change its name, because EA principles are important to me and I like that we are trying to do good explicitly using the framework of EA (including promoting the framework itself) rather than using a more nebulous framing. I can't speak for AIM, but it does seem like our two organizations have different goals, so in that sense it seems good that we both exist and work towards achieving our own separate goals. For example, I think (just a low confidence guess based on public info) that AIM are not interested in stewarding EA or owning improving the EA brand. CEA is interested in doing those things, and it seems good for us to have "EA" in our name in order to do those things. I think you and I both agree that the EA brand needs improving, and CEA is working on hiring for our Comms Team to have more capacity to do this work.

Agree with this - I don't think names should be changed and I don't think AIM should/wants to maintain the EA brand. I do think there should be more centralisation of comms though (especially as it seems hard to hire for) - I'm generally in favour of investing more in infrastructure and cutting costs on operating expenses where possible (see my comment here)

I think it's hard to use the linked post as evidence to support this. I counted ~4/10 of the CEA employees that responded as falling into that category, and the rest mostly donated to causes that I think you would consider more speculative (at least more than the average AIM charity). Most CEA employees decided not to participate in the public post, and I'm guessing that the ones that did not are more biased towards donating to less legibly cost-effective projects. I think there is also a bit of a theme where people tended to donate to interventions with clearer returns before joining CEA, and at CEA are spending more time considering other donation options (this is broadly true for myself, for example). So there are forces that push in both directions and it's not clear to me what the net result is.

This is fair and my original take was too strong. Edited to reflect that.

I'm not sure who "they" are in this sentence.

I don't personally know the people who run AIM, but from my perspective we are collaborators on the same team.

We shouldn't be internally fighting for a bigger slice of the existing pie, we should be demonstrating value externally so we can grow the size of the pie.

As noted above, I don't speak for either group here - I'm only a volunteer.

I think fighting was too strong a word, but I don't get the impression there are strong trust-based relationships which I do think is leaving impact on the table by missing potential opportunities to cut costs in the long term by centralising infrastructure/operating expenses.

Appreciate the response! To be clear, I am genuinely glad that you wrote the quick take, so I don't want to discourage you from doing more off-the-cuff quick takes in the future. Hopefully hearing my perspective was helpful as well. I'm glad to hear that you don't think we are actually fighting. :)

On collaborating/cutting costs: my outside impression is that AIM is already quite good about keeping their costs low and is not shy about being proactive. So my view is something like, if they thought it would be good for the world to collaborate more closely with CEA, I trust that they would have acted upon that belief. This is something that I respect about AIM (at least the version that's in my head, since I don't know them).

I feel I'm not informed enough to reply to this, and it feels weird to speculate about orgs I know very little about, but I worry that the people most informed won't reply here for various reasons, so I'm sharing some thoughts based on what very little information I have (almost entirely from reading posts on this forum, and of course speaking only for myself). This is all very low confidence.

"Effective" Altruism implies a value judgement that requires strong evidence to back up

I think if you frame it as a question, something like "We are trying to do altruism effectively, this is the best that we're able to do so far", it doesn't require that much evidence (for better or worse)

"Ambitious Impact" implies more speculative, less easy to measure activities in pursuit of even higher impact returns.

That is not clear to me; one can be very ambitious by working on things that are very easy to measure. For example, people going through AIM's "founding to give" program seem to have a goal that's easy to measure: money donated in 10 years[1], but I still think of them as clearly "ambitious" if they try to donate millions. Google defines ambitious as "having or showing a strong desire and determination to succeed".

My understanding is that Open Philanthropy split from GiveWell because of the realisation that there was more marginal funding required for "Do-gooding R&D" with a lower existing evidence base.

IMO AIM has outcompeted CEA on a number of fronts (their training is better, their content (if not their marketing) is better, they are agile and improve over time). Probably 80% of the useful and practical things I've learned about how to do effective altruism, I've learned from them.

I agree that AIM is more impressive than CEA on many fronts, but I think they mostly have different scopes.[2] My impression is that CEA doesn't focus much on specific ways to implement specific approaches to "how to do effective altruism", but on things like "why to do effective altruism" and "here are some things people are doing/writing about how to do good, go read/talk to them for details".

If not for CEA, I think I probably wouldn't have heard of AIM (or GWWC, or 80k, or effective animal advocacy as a whole field). And if I had only interacted with AIM, I'm not sure if I would have been exposed to as many different perspectives on things like animal welfare, longtermism, and spreading positive values[3]

The AIM folks I've spoken to are frustrated that their results - based on exploiting cost-effective high-evidence base interventions - are used to launder the reputation of OP funded low evidence base "Do-gooding R&D."

I understand the frustration, especially given the brand concerns below and because I think many AIM folks think that a lot of the assumptions behind longtermism don't hold.[4] But I don't know if this "reputation laundering" is actually happening that much:

My sense is that the (vague) relation to the Shrimp Welfare Project is not helping the reputation of some other EA-Adjacent projects

I think AIM is just really small compared to e.g. GiveWell, which I think is more often used to claim that EA is doing some good

When e.g. 80000hours interviews a LEEP cofounder, I think it's because they believe that LEEP is really amazing (as everyone does), they want to promote it, and they want more people to do similar amazing things. I think the reason people talk about the best AIM projects is usually not to look better by association but to promote them as examples of things that are clearly great.

If we think about EA brand as a product, I'd guess we're in "The Chasm" below as the EA brand is too associated with the "weird" stuff that innovators are doing to be effectively sold to lower risk tolerance markets.

I personally believe that the EA brand is in a pretty bad place, and at the moment often associated with things like FTX, TESCREAL and OpenAI, and that is a bigger issue. I think EA is seen as a group of non-altruistic people, not as a group of altruistic people who are too "weird". (But I have even lower confidence on this than on the rest of this comment.)

AIM should be the face of EA and should be feeding in A LOT more to general outreach efforts.

Related to the point above, it's not clear to me why AIM should be the face of "EA" instead of any other "doing the most good" movement (e.g. Roots of Progress, School for Moral Ambition, Center for High Impact Philanthropy, ...). I think none of these would make a lot of sense, and don't see why "AIM being the face of AIM" would be worse than AIM being the face of something else. You can see in their 2023 annual review that they did deeply consider building a new community "but ultimately feel that a more targeted approach focusing on certain careers with the most impact would be better for us".[5]

In general, I agree with your conclusions on wishing for more professionalization, and increasing the size of the pie (but it might be harder than one would think, and it might make sense to instead increase the number of separate pies)

I imagine positive externalities from the new organizations will also be a big part of their impact, but I expect the main measure will be amount donated.

AIM obviously does a lot for animal welfare, but I don't think they focus on helping people reason about how to prioritize human vs non-human welfare/rights/preferences.

I can't link to the quote, so I'll copy-paste it here.

JOEY: Yeah, I basically think I don't find a really highly uncertain, but high-value expected value calculation as compelling. And they tend to be a lot more concretely focused on what's the specific outcome of this? Like, okay, how much are we banking on a very narrow sort of set of outcomes and how confident are we that we're going to affect that, and what's the historical track record of people who've tried to affect the future and this sort of thing. There's a million and a half weeds and assumptions that go in. And I think, most people on both sides of this issue in terms of near-term causes versus long-term causes just have not actually engaged that deeply with all the different arguments. There's like a lot of assumptions made on either side of the spectrum. But I actually have gotten fairly deeply into this. I had this conversation a lot of times and thought about it quite thoroughly. And yeah, just a lot of the assumptions don't hold.

An option many people have been asking us about in the wake of the struggles of the EA movement is if CE would consider building out a movement that brings back some of the strengths of EA 1.0. We considered this idea pretty deeply but ultimately feel that a more targeted approach focusing on certain careers with the most impact would be better for us. The logistical and time costs of running a movement are quite large and it seems as though often a huge % of the movement's impact comes from a small number of actors and orgs. Although we like some things the EA movement has brought to the table when comparing it to more focused uses (e.g. GiveWell focuses more on GiveWell's growth), we have ended up more pessimistic about the impact of new movements.

I don't think they linked to their 2024 annual report on the forum, so this might be different now.

This is helpful and I agree with most of it. I think my take here is mostly driven by:

EA atm doesn't seem very practical to take action on except for donating and/or applying to a small set of jobs funded mostly by one source. My guess is this is reducing the number of "operator" types that get involved and selects for cerebral philosophising types. I heard about 80k and CEA first but it was the practical testable AIM charities that sparked my interest THEN I've developed more of an interest in implications from AI and GCRs.

When I've run corporate events, I've avoided using the term Effective Altruism (despite it being useful and descriptive) because of the existing brand.

I think current cause prioritisation methods are limiting innovation in the field because it's not teaching people about tools they can then use in different areas. There's probably low hanging fruit that isn't being picked because of this narrow philosophical approach.

I'm not a comms person, so my "AIM should be the face of EA" claim is too strong. But I do think it's a better face for more practical, less abstract thinkers.

I agree with 3 of your points but I disagree with the first one:

EA atm doesn't seem very practical to take action on except for donating and/or applying to a small set of jobs funded mostly by one source.

On jobs: 80k, Probably Good and Animal Advocacy Careers have job boards with lots of jobs (including all AIM jobs) and get regularly recommended to people seeking jobs. I met someone new to EA at EAGx Berlin a month ago, and 3 days ago they posted on LinkedIn that they started working at The Life You Can Save.

On donations: I'm biased but I think donations can be a really valuable action, and EA promotes donations to a large number of causes (including AIM).

My guess is this is reducing the number of "operator" types that get involved and selects for cerebral philosophising types.

It's really hard for me to tell if this is a good or bad thing, especially because I think it's possible that things like animal welfare or GCR reduction can plausibly be significantly more effective than more obviously good "practical testable" work (and the reason to favour "R&D" mentioned previously)

I heard about 80k and CEA first but it was the practical testable AIM charities that sparked my interest THEN I've developed more of an interest in implications from AI and GCRs.

Not really a disagreement, but I think it's great that there's cross-pollination, with people getting into AIM from 80k and CEA, and into 80k and CEA from AIM

Earning to give is not a good description for what I do because I'm not optimising across career paths for high pay for donations - more like the highest pay I can get for a 9-5.

I think of it more as "Self-funded community builder"

On cross pollination, yeah I think we agree. The self sorting between cause areas based on intuition and instinct isn't great though - it means that there are opportunities to innovate that are missed in both camps.

One thing I occasionally think about is how few "competitors" exist for CEA's products/services. I feel a little odd using this kind of terminology in a non-profit context, but to put it simplistically: if anyone wants to start up a "competing" conference for do-gooders, they can do that. In a simplistic sense there isn't anything stopping AIM, or GWWC, or High Impact Professionals, or you & I as individuals from putting on an Effective Altruism Annual Conference, or from hosting online introductory EA programs, or from providing coaching and advice to city and university EA groups.

There actually is a lot stopping people from doing this independently - if you would ever want to scale and get funding you basically have 3 sources of funders, and if they don't approve what you are doing you won't get to become a serious competitor

I'm probably less informed than you are, but depending on what you mean by "sources of funders" I disagree.

I think if you can demonstrate getting valuable results and want funding to scale, people will be happy to fund you. My impression is that several people influencing >=6 digit allocations are genuinely looking for projects to fund that can be even more effective than what they're currently funding.

I'm fairly confident that if anyone hosted a conference or online program, got good results, had a clear theory of change with measurable metrics, and gradually asked for funding to scale, people will be happy to fund that.

I agree with you. Hypothetically, anyone can 'compete' by providing an alternative offering. But realistically there are barriers to entry. (I know that I wouldn't be able to put on a conference or run an online forum without lots of outside funding and expertise.) Maybe we could make an argument that there are some competitors with CEA's services (such as Manifest, AVA Summit, LessWrong, Animal Advocacy Forum) but I suspect that the target market is different enough that these don't really count as competitors.

Of all the things that CEA does, running online intro EA programs would probably be the easiest thing to provide an alternative offering for: just get a reading list and start running sessions. Heck, I run book clubs that meet via video chat, and all it takes is 15-45 minutes of administrative work each month.

On a local/national level, maybe university/city group support could realistically be done? But I'm fairly skeptical. My informal impression is that for most of what CEA does it wouldn't make sense for alternative offerings to try to 'compete.'

I'd say the key thing CEA is providing is infrastructure/assets rather than product/services and that tends to be the kind of thing to centralise where possible. Ie. EA forum, community health, shared resources/knowledge, distribution channels etc.

Events are closer to products/services. AIM has done conferences in the past, but they aren't open to wider groups like EAGxs are.

The blocker for those orgs is probably capacity - both AIM and GWWC are <20 people, HIP is 2 people, EA UK [1] is 0.8 FTE. For me personally, I do run a lot of events but my frustration is that the barrier to entry is pretty high because of existing network effects, the fact that they do have know-how and that I basically have to do a ton of my own marketing and maintain my own mailing list to run GWWC events.[2]

We could compete but why are we doing that? This is not a zero sum game for impact, it is very positive sum. There's so much work to be done.

a) I agree that it would be better if the names were reversed, however, I also agree that it's locked in now. b) "AIM should be the face of EA and should be feeding in A LOT more to general outreach efforts" - They're an excellent org, but I disagree. I tried writing up an explanation of why, but I struggled to produce something clear.

Should we be making it so difficult for users with an EA forum account to make updates to the forum wikis?

I imagine the platform vision for the EA forum is to be the "Wikipedia for do-gooders" and make it useful as a resource for people working out the best ways to do good. For example, when you google "Effective Altruism AI Safety" on incognito mode - the first result is the forum topic on AI safety: AI safety - EA Forum (effectivealtruism.org)

I was chatting about this with @Rusheb, who has spent the last year upskilling to transition into AI Safety from software development. He had some great ideas for links (e.g. new 80k guides, or a site that had links for newbies or people making the transition from software engineering).

Ideally someone who had this experience and opinions on what would be useful on a landing page for AI Safety should be able to suggest this on the wiki page (like you can do on Wikipedia with the caveat that you can be overruled). However, he doesn't have the forum karma to do that and the tooltip explaining that was unclear on how to get the karma to do it.

I have the forum karma to do it but I don't think I should get the credit - I didn't have the AI safety knowledge - he did. In this scenario, the forum has lost out on some free improvements to its wiki plus an engaged user who would feel "bought in". Is there a way to "lend him" my karma?

I got it from posting about EA Taskmaster which shouldn't make me an authority on AI Safety.

Hmmm I'm not being as prescriptive as that. Maybe there is a better solution to this specific problem - maybe requiring someone with higher karma to confirm the suggestion? (original person gets the credit)

This is similar to how StackOverflow / StackExchange works, I think – any user can propose an edit (or there's some very low reputation threshold, I forget) but if you're below some reputation bar then your edit won't be published until reviewed by someone else.

Making this system work well though probably requires higher-karma users having a way of finding out about pending edits.

See also the Payroll Giving (UK) or GAYE - EA Forum (effectivealtruism.org) page, which is the top Google result for "Effective Altruism Payroll Giving". It made sense for me to update it since I am an accountant and have experience trying to get this done at my workplace.

Did I need to make a post about something unrelated to do that?

Centre for Effective Altruism and Ambitious Impact (formerly Charity Entrepreneurship) are probably named the wrong way around in terms of what they actually do and IMO this feeds into the EA branding problem.

Why do I think this?

"Effective" Altruism implies a value judgement that requires strong evidence to back up - like launching charities aiming to beat GiveWell benchmarks and raising large amounts of money from donors who expect to see evidence significant returns in the next 3 years or shut down.

"Ambitious Impact" implies more speculative, less easy to measure activities in pursuit of even higher impact returns. My understanding is that Open Philanthropy split from GiveWell because of the realisation that there was more marginal funding required for "Do-gooding R&D" with a lower existing evidence base.

Why do we need "Do-Gooding R&D"?

So we can find better ways to help others in the future.

To use the example of a pharmaceutical company, why don't they reduce the prices of all their currently functional drugs to help more people? So, they can fund their expensive hit-based R&D efforts. There's obviously trade-offs, but it's short sighted to pretend the low hanging fruit won't eventually be picked.

So what?

IMO AIM has outcompeted CEA on a number of fronts (their training is better, their content (if not their marketing) is better, they are agile and improve over time). Probably 80% of the useful and practical things I've learned about how to do effective altruism, I've learned from them.

The AIM folks I've spoken to are frustrated that their results - based on exploiting cost-effective high-evidence base interventions - are used to launder the reputation of OP funded low evidence base "Do-gooding R&D." I think before you should get to work on "Do-Gooding R&D", you should probably learn how the current state of Do-Gooding best practices.

If we think about EA brand as a product, I'd guess we're in "The Chasm" below as the EA brand is too associated with the "weird" stuff that innovators are doing to be effectively sold to lower risk tolerance markets.

AIM should be the face of EA and should be feeding in A LOT more to general outreach efforts.Too strong a take - see this reply to Lorenzo's commentConcrete suggestions

Looking atwhere CEA people actually donate, it looks like they are hedging the higher risk nature of their work with donations to interventions with clearer returns.Retracted - too strong a take as highlight by this commentMy wider take is that EA should become a profession (post building on this one incoming if I can ever actually finish it) so there is better regulation of individuals enabling us to have internally facing competition/innovation/R&D based on shared principles while generating externally facing standards that can be used by non-insiders and allow us to scale what works.

We shouldn't be internally fighting for a bigger slide of the existing pie, we should be demonstrating value externally so we can grow the size of the pie.

I work for CEA but I'm writing this from a personal perspective. Others at CEA may disagree with me.

Thanks for writing this! :) I think it's an interesting argument. I generally agree with everything @Lorenzo Buonanno🔸 said in his comments, so I'll just add a few things here.

I ultimately disagree that CEA should change its name, because EA principles are important to me and I like that we are trying to do good explicitly using the framework of EA (including promoting the framework itself) rather than using a more nebulous framing. I can't speak for AIM, but it does seem like our two organizations have different goals, so in that sense it seems good that we both exist and work towards achieving our own separate goals. For example, I think (just a low confidence guess based on public info) that AIM are not interested in stewarding EA or owning improving the EA brand. CEA is interested in doing those things, and it seems good for us to have "EA" in our name in order to do those things. I think you and I both agree that the EA brand needs improving, and CEA is working on hiring for our Comms Team to have more capacity to do this work.

I'm not sure who "they" are in this sentence. I personally don't think I have done this. I have a very high opinion of AIM overall, and I think that sentiment is common within CEA. I have personally applied to one of their incubation rounds because I thought there was a chance I could do more good there than at CEA. They are one of the orgs that best takes advantage of CEA's infrastructure (such as EAG and the EA Forum) — they make frequent appearances in our user surveys about how people have found value from those projects. Our team includes AIM's opportunities in our email newsletters and have curated multiple posts by them. I don't personally know the people who run AIM, but from my perspective we are collaborators on the same team.

I think it's hard to use the linked post as evidence to support this. I counted ~4/10 of the CEA employees that responded as falling into that category, and the rest mostly donated to causes that I think you would consider more speculative (at least more than the average AIM charity). Most CEA employees decided not to participate in the public post, and I'm guessing that the ones that did not are more biased towards donating to less legibly cost-effective projects. I think there is also a bit of a theme where people tended to donate to interventions with clearer returns before joining CEA, and at CEA are spending more time considering other donation options (this is broadly true for myself, for example). So there are forces that push in both directions and it's not clear to me what the net result is.

Again, I'm not sure who is fighting internally. I guess this is making me worried that AIM views us as fighting with each other? That possibility makes me genuinely quite sad. If anyone at AIM wants to talk with me about it, you're welcome to message me on the Forum.

Hey - thanks for your reply!

I'd like to caveat that I'm not sure I got the tone quite right in my original quick take. I'm glad I put it out there, but it is very much based on vibes and is motivated by an impression that there's opportunities for stronger relationships to be built. (Mostly based on conversations with AIM folks but I don't speak for them.)

My vibes-based take looks like it might not be true. There might be more collaboration than I can see, or it might just make sense to grow separately since there are clear differences in opinion on cause prioritisation and approach to cost effectiveness. CEA is also beholden to one funder, which makes it much harder to be independent from that funder's views.

To be clear I think everyone involved cares deeply, is competent and is, very reasonably, prioritising other things.

Agree with this - I don't think names should be changed and I don't think AIM should/wants to maintain the EA brand. I do think there should be more centralisation of comms though (especially as it seems hard to hire for) - I'm generally in favour of investing more in infrastructure and cutting costs on operating expenses where possible (see my comment here)

This is fair and my original take was too strong. Edited to reflect that.

As noted above, I don't speak for either group here - I'm only a volunteer.

I think fighting was too strong a word, but I don't get the impression there are strong trust-based relationships which I do think is leaving impact on the table by missing potential opportunities to cut costs in the long term by centralising infrastructure/operating expenses.

Appreciate the response! To be clear, I am genuinely glad that you wrote the quick take, so I don't want to discourage you from doing more off-the-cuff quick takes in the future. Hopefully hearing my perspective was helpful as well. I'm glad to hear that you don't think we are actually fighting. :)

On collaborating/cutting costs: my outside impression is that AIM is already quite good about keeping their costs low and is not shy about being proactive. So my view is something like, if they thought it would be good for the world to collaborate more closely with CEA, I trust that they would have acted upon that belief. This is something that I respect about AIM (at least the version that's in my head, since I don't know them).

I feel I'm not informed enough to reply to this, and it feels weird to speculate about orgs I know very little about, but I worry that the people most informed won't reply here for various reasons, so I'm sharing some thoughts based on what very little information I have (almost entirely from reading posts on this forum, and of course speaking only for myself). This is all very low confidence.

I think if you frame it as a question, something like "We are trying to do altruism effectively, this is the best that we're able to do so far", it doesn't require that much evidence (for better or worse)

That is not clear to me, one can be very ambitious by working on things that are very easy to measure. For example, people going through AIM's "founding to give" program seem to have a goal that's easy to measure: money donated in 10 years[1], but I still think of them as clearly "ambitious" if they try to donate millions. Google defines ambitious as "having or showing a strong desire and determination to succeed"

That is not my understanding, reading their public comms I thought OP split from GiveWell to better serve 7-figure donors "Our current product is a poor fit with the people who may represent our most potentially impactful audience." (which I assumed implicitly meant that Moskovitz and Tuna could use more bespoke recommendations)

I agree with this! I liked Finding before funding: Why EA should probably invest more in research, but I expect that the "R&D" work itself might be tricky to do in practice. Still, I'm very excited about GiveWell's RCT grants

I agree that AIM is more impressive than CEA on many fronts, but I think they mostly have different scopes.[2] My impression is that CEA doesn't focus much on specific ways to implement specific approaches to "how to do effective altruism", but on things like "why to do effective altruism" and "here are some things people are doing/writing about how to do good, go read/talk to them for details".

If not for CEA, I think I probably wouldn't have heard of AIM (or GWWC, or 80k, or effective animal advocacy as a whole field). And if I had only interacted with AIM, I'm not sure if I would have been exposed to as many different perspectives on things like animal welfare, longtermism, and spreading positive values[3]

I understand the frustration, especially given the brand concerns below and because I think many AIM folks think that a lot of the assumptions behind longtermism don't hold.[4] But I don't know if this "reputation laundering" is actually happening that much:

I personally believe that the EA brand is in a pretty bad place, and at the moment often associated with things like FTX, TESCREAL and OpenAI, and that is a bigger issue. I think EA is seen as a group of non-altruistic people, not as a group of altruistic people who are too "weird". (But I have even lower confidence on this than on the rest of this comment)

Related to the point above, it's not clear to me why AIM should be the face of "EA" instead of any other "doing the most good" movement (e.g. Roots of Progress, School for Moral Ambition, Center for High Impact Philanthropy, ...). I think none of these would make a lot of sense, and don't see why "AIM being the face of AIM" would be worse than AIM being the face of something else.

You can see in their 2023 annual review that they did deeply consider building a new community "but ultimately feel that a more targeted approach focusing on certain careers with the most impact would be better for us".[5]

In general, I agree with your conclusions on wishing for more professionalization, and increasing the size of the pie (but it might be harder than one would think, and it might make sense to instead increase the number of separate pies)

I imagine positive externalities from the new organizations will also be a big part of their impact, but I expect the main measure will be amount donated.

And that this does not say that much about CEA as imho AIM is more impressive than the vast majority of other projects.

AIM obviously does a lot for animal welfare, but I don't think they focus on helping people reason about how to prioritize human vs non-human welfare/rights/preferences.

I can't link to the quote, so I'll copy-paste it here.

Linked from this post.

Full quote:

I don't think they linked to their 2024 annual report on the forum, so this might be different now.

This is helpful and I agree with most of it. I think my take here is mostly driven by:

I agree with 3 of your points but I disagree with the first one:

It's really hard for me to tell if this is a good or bad thing, especially because I think it's possible that things like animal welfare or GCR reduction can plausibly be significantly more effective than more obviously good "practical testable" work (and the reason to favour "R&D" mentioned previously)

Not really a disagreement, but I think it's great that there's cross-pollination, with people getting into AIM from 80k and CEA, and into 80k and CEA from AIM

I agree donations and switching careers are really important! However - I think those shouldn't be the only ways.

Having your job be EA makes it difficult to be independent - livelihoods rely on this and so it makes EA as a whole less robust IMO. I like the Tour of Service model https://forum.effectivealtruism.org/posts/waeDDnaQBTCNNu7hq/ea-tours-of-service

Earning to give is not a good description for what I do because I'm not optimising across career paths for high pay for donations - more like the highest pay I can get for a 9-5.

I think of it more as "Self-funded community builder"

On cross pollination, yeah I think we agree. The self sorting between cause areas based on intuition and instinct isn't great though - it means that there are opportunities to innovate that are missed in both camps.

One thing I occasionally think about is how few "competitors" exist for CEA's products/services. I feel a little odd using this kind of terminology in a non-profit context, but to put it simplistically: if anyone wants to start up a "competing" conference for do-gooders, they can do that. In a simplistic sense there isn't anything stopping AIM, or GWWC, or High Impact Professionals, or you & I as individuals from putting on an Effective Altruism Annual Conference, or from hosting online introductory EA programs, or from providing coaching and advice to city and university EA groups.

There actually is a lot stopping people from doing this independently - if you would ever want to scale and get funding you basically have 3 sources of funders, and if they don't approve what you are doing you won't get to become a serious competitor

I'm probably less informed than you are, but depending on what you mean by "sources of funders" I disagree.

I think if you can demonstrate getting valuable results and want funding to scale, people will be happy to fund you. My impression is that several people influencing >=6 digit allocations are genuinely looking for projects to fund that can be even more effective than what they're currently funding.

I'm fairly confident that if anyone hosted a conference or online program, got good results, had a clear theory of change with measurable metrics, and gradually asked for funding to scale, people will be happy to fund that.

Ah sorry I should have just said "3 main / larger scale funders" (op, eaif + meta funding circle). Funders from those groups include individuals.

But I was also unclear in my comment - I'll clarify this soon.

I agree with you. Hypothetically, anyone can 'compete' by providing an alternative offering. But realistically there are barriers to entry. (I know that I wouldn't be able to put on a conference or run an online forum without lots of outside funding and expertise.) Maybe we could make an argument that there are some competitors with CEA's services (such as Manifest, AVA Summit, LessWrong, Animal Advocacy Forum) but I suspect that the target market is different enough that these don't really count as competitors.

Of all the things that CEA does, running online intro EA programs would probably be the easiest thing to provide an alternative offering for: just get a reading list and start running sessions. Heck, I run book clubs that meet via video chat, and all it takes is 15-45 minutes of administrative work each month.

On a local/national level, maybe university/city group support could realistically be done? But I'm fairly skeptical. My informal impression is that for most of what CEA does it wouldn't make sense for alternative offerings to try to 'compete.'

EAGx conferences are technically not organized by CEA but they are supported by it, so I'm not sure if they count as "competitors"

CEA seems to maintain control over most high-level aspects of EAGx, so I don't think this counts as competition.

Yeah, I could see a reasonable argument either way for that.

I'd say the key thing CEA is providing is infrastructure/assets rather than product/services and that tends to be the kind of thing to centralise where possible. Ie. EA forum, community health, shared resources/knowledge, distribution channels etc.

Events are closer to products/services. AIM has done conferences in the past but they aren't open to wider groups like EAGxs.

The blocker for those orgs is probably capacity - both AIM and GWWC are <20 people, HIP is 2 people, EA UK [1] is 0.8 FTE. For me personally, I do run a lot of events but my frustration is that the barrier to entry is pretty high because of existing network effects, the fact that they do have know-how and that I basically have to do a ton of my own marketing and maintain my own mailing list to run GWWC events.[2]

We could compete but why are we doing that? This is not a zero sum game for impact, it is very positive sum. There's so much work to be done.

I'm on the EA UK board

I think the death of Facebook has had an underrated impact on EA Community Building - it's actually so much more effort now to run events.

a) I agree that it would be better if the names were reversed, however, I also agree that it's locked in now.

b) "AIM should be the face of EA and should be feeding in A LOT more to general outreach efforts" - They're an excellent org, but I disagree. I tried writing up an explanation of why, but I struggled to produce something clear.

Yeah on reflection I think b) is too strong (virtue of this being a quick take).

My best explanation is that they don't have the management capacity to effectively scale and AIM's current comms are very EA insider coded. Very excited about them making a strong comms hire https://docs.google.com/document/d/1YbP7m187DK6CNbijnu5lHQj4JWpdQB8hR4w6tgFnVfY/edit?usp=drivesdk

I'd be curious about your unpolished thoughts if you'd like to DM me.

London folks - I'm going to be running the EA Taskmaster game again at the AIM office on the afternoon of Sunday 8th September.

It's a fun, slightly geeky, way to spend a Sunday afternoon. Check out last year's list of tasks for a flavour of what's in store 👀

Sign up here

(Wee bit late in properly advertising so please do spread the word!)

The OECD are currently hiring for a few potentially high-impact roles in the tax policy space:

The Centre for Tax Policy and Administration (CTPA)

I know less about the impact of these other areas but these look good:

Trade and Agriculture Directorate (TAD)

International Energy Agency (IEA)

Financial Action Task Force

Should we be making it so difficult for users with an EA forum account to make updates to the forum wikis?

I imagine the platform vision for the EA forum is to be the "Wikipedia for do-gooders" and make it useful as a resource for people working out the best ways to do good. For example, when you google "Effective Altruism AI Safety" on incognito mode - the first result is the forum topic on AI safety: AI safety - EA Forum (effectivealtruism.org)

I was chatting to @Rusheb about this who has spent the last year upskilling to transition into AI Safety from software development. He had some great ideas for links (ie. new 80k guides, site that had links for newbies or people making the transition from software engineering)

Ideally someone who had this experience and opinions on what would be useful on a landing page for AI Safety should be able to suggest this on the wiki page (like you can do on Wikipedia with the caveat that you can be overruled). However, he doesn't have the forum karma to do that and the tooltip explaining that was unclear on how to get the karma to do it.

I have the forum karma to do it but I don't think I should get the credit - I didn't have the AI safety knowledge - he did. In this scenario, the forum has lost out on some free improvements to its wiki plus an engaged user who would feel "bought in". Is there a way to "lend him" my karma?

I got it from posting about EA Taskmaster which shouldn't make me an authority on AI Safety.

Is your concrete suggestion/ask "get rid of the karma requirement?"

Hmmm I'm not being as prescriptive as that. Maybe there is a better solution to this specific problem - maybe requiring someone with higher karma to confirm the suggestion? (original person gets the credit)

This is similar to how StackOverflow / StackExchange works, I think – any user can propose an edit (or there's some very low reputation threshold, I forget) but if you're below some reputation bar then your edit won't be published until reviewed by someone else.

Making this system work well though probably requires higher-karma users having a way of finding out about pending edits.

See also the Payroll Giving (UK) or GAYE - EA Forum (effectivealtruism.org) page, which is the top Google result for "Effective Altruism Payroll Giving". It made sense for me to update it since I am an accountant and have experience trying to get this done at my workplace.

Did I need to make a post about something unrelated to do that?

Since they were well received last year, I'm going to be hosting the EA London Quarterly Review Coworking sessions again for 2024.

You can register here: Q1 FY24 session sign up; Q2 FY24 session sign up; Q3 FY24 session sign up; Q4 FY24 session sign up

Thanks to Rishane for making this poster and to LEAH for hosting us.