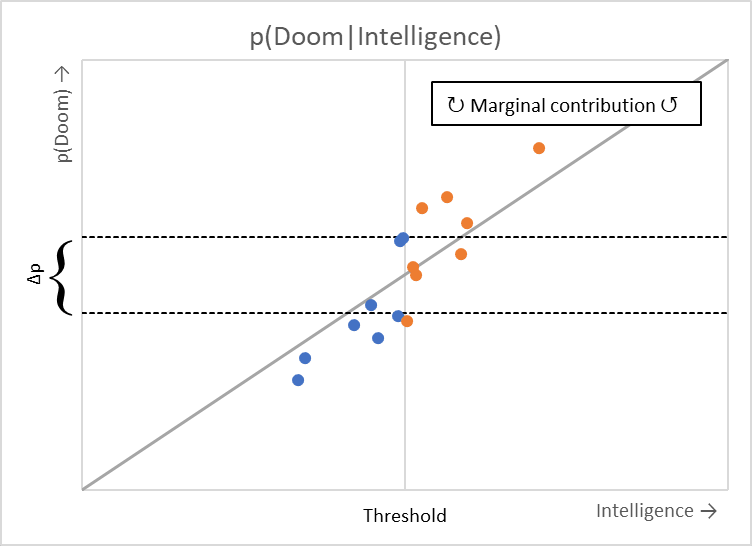

Suppose you are an economist with a strong institutional skepticism toward claims of discontinuous effects (this may or may not be me). This continuity assumption has carried economics a long way as a field, but it has been largely missing from the discourse on AI risk, so the perspective is worth exploring on its own terms. Given the continuity assumption, marginality arguably provides a more useful framework for understanding the world than Bayesian probability.

Without a threshold, your estimate of p(Doom) in a net sense may be close to zero: the risk of "doom" is about the same after crossing the threshold of "superintelligence" as before (whatever the hypothesized definitions of those terms). Or maybe not: it would depend on how much variation along the X axis (the independent variable) is preserved after splitting the world into those two cases. Perhaps most scenarios within each case will sit far from the cutoff point, but p(Doom) is supposed to measure the variation between cases, not within them. Moreover, even then, it would not be fair to characterize this view as "AI risk skepticism," because a significant risk of doom exists in most scenarios, regardless of which side of the threshold they fall on; that risk is caused specifically by computational intelligence and grows in proportion to it. In some sense, then, you may be even more concerned about risks from AI, including existential ones, than someone concerned only about risks that arise above the threshold.
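To make the continuity argument concrete, here is a minimal sketch under purely hypothetical assumptions: a smooth risk curve `risk(capability)` chosen only for illustration, a cutoff `THRESHOLD` standing in for "superintelligence," and scenarios spread uniformly on either side. It shows that the jump in risk at the cutoff itself is essentially zero, that the gap between the two groups depends on how far their scenarios sit from the cutoff, and that sub-threshold risk is far from negligible.

```python
import math

# Hypothetical risk curve: doom risk rises smoothly with capability (no discontinuity).
# The functional form and the 0.05 rate are illustrative assumptions, not estimates.
def risk(capability: float) -> float:
    return 1.0 - math.exp(-0.05 * capability)


THRESHOLD = 50.0  # hypothetical "superintelligence" cutoff on the capability axis


def jump_at_threshold(eps: float = 1e-9) -> float:
    """Change in risk from just below to just above the cutoff."""
    return risk(THRESHOLD + eps) - risk(THRESHOLD - eps)


def mean_risk(lo: float, hi: float, n: int = 1000) -> float:
    """Average risk over scenarios spread uniformly on [lo, hi] (midpoint rule)."""
    width = (hi - lo) / n
    return sum(risk(lo + (i + 0.5) * width) for i in range(n)) / n


below = mean_risk(0.0, THRESHOLD)                    # scenarios well short of the cutoff
above = mean_risk(THRESHOLD, 2 * THRESHOLD)          # scenarios well past the cutoff
near_below = mean_risk(THRESHOLD - 1.0, THRESHOLD)   # scenarios hugging the cutoff
near_above = mean_risk(THRESHOLD, THRESHOLD + 1.0)

print(f"jump at threshold:       {jump_at_threshold():.2e}")
print(f"mean risk below / above: {below:.3f} / {above:.3f}")
print(f"near the cutoff:         {near_below:.3f} / {near_above:.3f}")
```

When scenarios cluster near the cutoff, the two groups look almost identical; when they are spread widely, the groups differ a lot, even though nothing special happens at the threshold itself.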

The world will not stay at the baseline rate of "doom" risk that might have existed during the Stone Age, regardless of whether we reach any given posited threshold of intelligence. In this post, I want to explain why someone might still worry about these sub-threshold risks in the context of AI development. I will also argue that cybersecurity may be significantly underestimated as a constraint on property rights over the long term, and that market mechanisms, in particular cybersecurity insurance, remain substantially underutilized. Together, these points form a vision of AI safety that differs significantly from the 'alignment' perspective.

The biggest hurdle for AI safety is defining the problem in the first place, so to kick this post off, it may be worth considering a concrete anecdote. Arguably, the first case of independent cybercrime morphing into kinetic military action has already occurred, in a form that does not look especially futuristic. Hundreds of thousands of people have been held captive in Myanmar, working in scam centers that run social engineering schemes for profit against victims all over the world. Although the proximate cause of Myanmar’s current civil war is the 2021 coup, the scam industry has been a factor in China's wavering support between the ruling regime and the rebels, which has even strained its broader foreign policy principle of non-interference. Based on this case, the impact of AI on geopolitics (its most direct route to catastrophic harm) is much more bottom-up in nature than is generally understood.

The importance of cybersecurity

Foundation models are only one element of a full stack that will be developed over time. They will be extended through retrieval-augmented generation (RAG) or agents, eventually becoming explainable and capable of self-criticism, characteristics that will be required to generate working code without human supervision. In the domain of cybersecurity, on either the offensive or the defensive side, 100% accuracy is not required for many tasks, so this will be an early application of AI with transformative risk impact.

Nor is it clear why risks to property rights would not carry over into existential risks in the longer term, even in scenarios without obvious links to the internet. With the concept of defense-in-depth, we think not only about networks in isolation, but also about how they interact with other systems. If a malicious actor were to use AI to make a biological weapon, for example, they would still need a physical supply chain, which could conceivably interact with the content of AI models. Meanwhile, as its name suggests, 'biohacking' involves the interaction between code and biological networks.

Cybersecurity is an important potential cause area, both for its direct impacts and for its insight into AI risks. A quick order-of-magnitude estimate may be in order: one estimate forecasts the annual global cost of cybercrime to rise to 10 trillion US dollars by 2025. Based on this figure, if cybercrime were a country, its GDP would rank third in the world, behind only the US and China. This is a big enough problem to be potentially destabilizing, as illustrated above. Going forward, models themselves will become even more valuable targets.

All that said, the field of cybersecurity remains relentlessly backward-looking outside of pure cryptography. Essentially every practical cyberattack vector includes some social element (to a greater or lesser degree), but without engaging with economics, it is difficult to theorize about this dimension using calculus, for example by taking the limit as computer intelligence approaches infinity. Social engineering will even be extended to include prompt engineering in the future, showing that the potential for 'psychological' attacks is not limited by the number of humans on Earth.

When it comes to scaling, cybersecurity even extends seamlessly into geopolitics. Suppose a superpower wants assurances of being included in AI governance if it gives up arms: this is cybersecurity by some definition, as it involves both security and computer networks. Solutions will involve a mix of technical and social elements, as with any other area of cybersecurity. In fact, this is not a new insight, as popular commentators have been conflating cybersecurity with society-scale social attacks since the 2016 election, even though that arguably does not represent the main potential of social attacks. In any case, it makes no difference in this scenario whether superintelligence has already arrived or is on its way at some undetermined point in the future. Geopolitical fault lines will be realigned based on rational expectations, which seems like an accurate description of the world today.[1]

Tractability of cyber risk in the AI era

While AI risk can be reduced to "cybersecurity" under some definition, it is nevertheless not realistic to treat AI simply as a more powerful version of an ordinary computer. Although the term is loosely applied to almost any decision machine these days, something is clearly different this time. The most fundamental difference between AI and traditional computing is probably its analog basis. Whether that principle is reflected in the physical substrate or simulated using low-precision number formats, computing is clearly moving from linear composition toward a more qualitative paradigm of massive variable interactions.[2] Analog, or qualitative, computing can be associated with social attacks, in contrast to the more familiar digital domain.

Ironically, the analog dimension is relatively straightforward to quantify, by the number of parameters, but the digital dimension is more subtle. Except in encryption applications, digital complexity is limited not by computing power but by the ability of humans to understand the risks of a system. These risks are associated with both the sensitivity of a given function, which correlates with its monetary value, and its connectivity, which represents attack surface. In short, digital value refers to the number of steps it takes to convert a given set of bytes into cash.
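One way to read "the number of steps it takes to convert bytes into cash" is as a shortest-path length in an attack graph. The sketch below assumes a small, entirely hypothetical graph (`ATTACK_GRAPH`, with made-up node names) in which each edge is one step an attacker can take; breadth-first search then counts the fewest steps from a piece of data to `"cash"`. Under this reading, fewer steps means higher digital value, and more edges means more attack surface.

```python
from collections import deque
from typing import Optional

# Hypothetical attack graph: each edge is one step an attacker can take.
# Node names are illustrative only.
ATTACK_GRAPH: dict[str, list[str]] = {
    "leaked_password": ["email_account"],
    "email_account": ["bank_login", "contact_list"],
    "contact_list": ["phishing_targets"],
    "phishing_targets": ["email_account"],  # cycles are handled by the `seen` set
    "bank_login": ["wire_transfer"],
    "wire_transfer": ["cash"],
    "stolen_card_number": ["card_purchase"],
    "card_purchase": ["cash"],
}


def steps_to_cash(start: str, graph: dict[str, list[str]] = ATTACK_GRAPH,
                  target: str = "cash") -> Optional[int]:
    """Fewest steps from `start` to `target` via breadth-first search, or None if unreachable."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, dist = queue.popleft()
        if node == target:
            return dist
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


for data in ("stolen_card_number", "leaked_password", "public_blog_post"):
    print(data, steps_to_cash(data))
```

BFS is used because every step is treated as equally costly; weighting steps by effort or legal risk would call for a weighted shortest-path algorithm instead.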

The big picture of cybersecurity is one of increasing financialization over time. Consider the recent rise of ransomware, the upmarket cousin of social engineering scams. Although this trend is driven in part by the post-COVID shift to working from home, it also reflects the refinement of a division-of-labor model on the dark web known as Ransomware-as-a-Service. It has become easier for threat actors to separate the quantitative elements of penetration from the qualitative social engineering and business negotiation aspects of an attack. It is the ability to exchange value that underpins this new market structure.[3] Money laundering prevention will require human labor for a long time, since it involves deciding who has access to the financial system; that access can therefore be represented in monetary units as a 'price.'

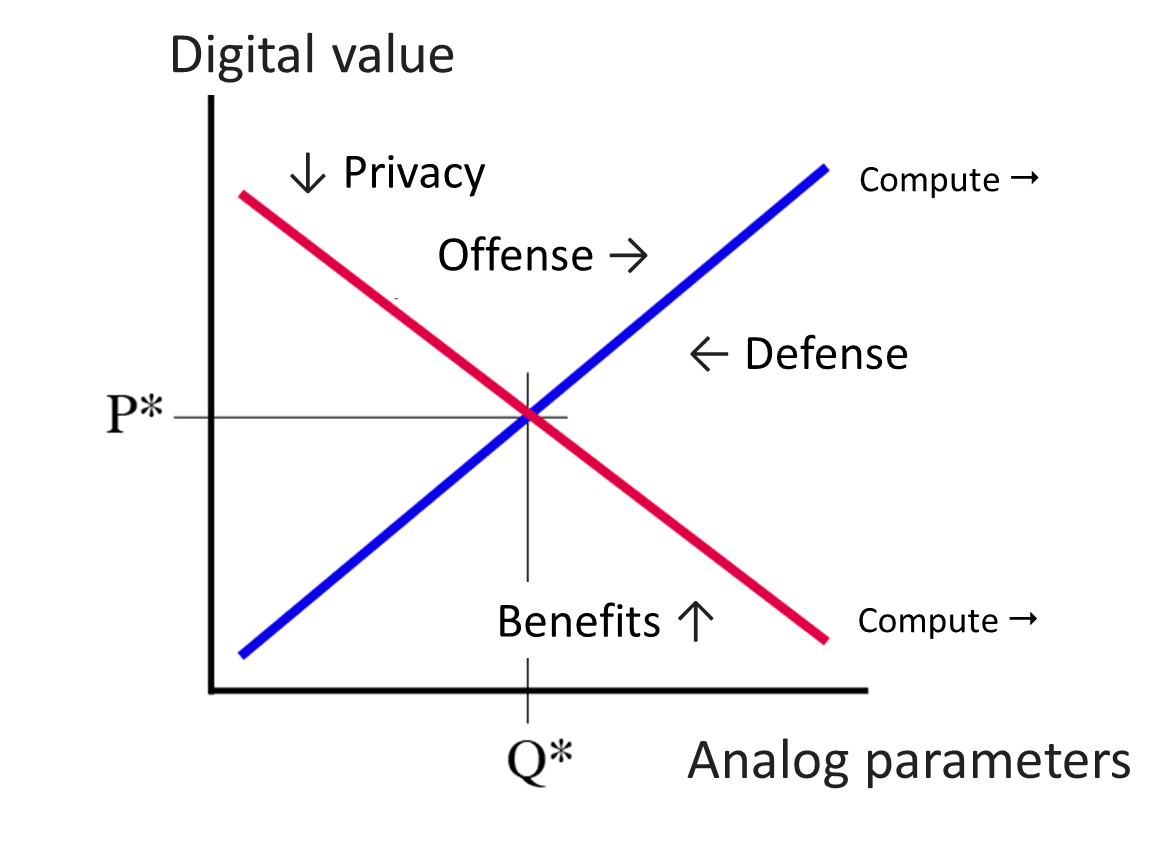

With these definitions in place, we have a supply-demand model of cybersecurity:

It is worth comparing this model to the more traditional notion of offense-defense (O-D) balance. The latter focuses solely on the technical dimensions of security, but both sides have entire ecosystems, which tend to be organized according to market principles. The best representation of O-D balance on this chart might be the slope of the supply curve, which represents attackers' costs. Legal remedies only become worthwhile at a certain scale, so attackers' costs rise as their operations grow.

The O-D balance entirely neglects the role of privacy, which is captured by the demand curve. If the technological security situation deteriorates, we might become less willing to put our personal information on the internet to be stolen (and governments and corporations might likewise have less leverage to nudge people to do so), shifting demand down and thereby restoring equilibrium. Absent such a threat, however, these stakeholders obtain benefits from the uses of data and will therefore tend to ignore privacy concerns. These benefits could even include factors important for development, like financial inclusion; "privacy" in this context could also include money laundering restrictions, which would make it more difficult to send money around the world at the touch of a button.
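Since the chart is not reproduced in text, the following is only a minimal linear sketch of the two mechanisms just described, with every number assumed for illustration. Supply is read as attackers' marginal cost, `a + b*Q`, so a steeper slope `b` stands in for a more defense-favoring O-D balance; demand, `c - d*Q`, is read as the value people and organizations get from putting data online, so a privacy reaction shows up as a lower intercept `c`. The class name `LinearMarket` is hypothetical, and what exactly Q and P measure is left abstract, as in the text.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LinearMarket:
    """Hypothetical linear supply-demand model; all parameters are illustrative."""
    supply_intercept: float  # a: attackers' cost of the first unit
    supply_slope: float      # b: how fast attackers' costs rise with scale (O-D balance proxy)
    demand_intercept: float  # c: value of exposing the first unit of data online
    demand_slope: float      # d: how quickly that value falls off

    def equilibrium(self) -> tuple[float, float]:
        """Solve a + b*Q = c - d*Q for the equilibrium quantity Q and price P."""
        q = (self.demand_intercept - self.supply_intercept) / (
            self.supply_slope + self.demand_slope)
        p = self.supply_intercept + self.supply_slope * q
        return q, p


baseline = LinearMarket(supply_intercept=2.0, supply_slope=1.0,
                        demand_intercept=10.0, demand_slope=1.0)
# Better technical defenses: attackers' costs rise faster with scale.
stronger_defense = replace(baseline, supply_slope=3.0)
# Privacy reaction: people and organizations put less data online.
privacy_shift = replace(baseline, demand_intercept=6.0)

for name, market in [("baseline", baseline), ("stronger defense", stronger_defense),
                     ("privacy shift", privacy_shift)]:
    q, p = market.equilibrium()
    print(f"{name:>16}: Q = {q:.1f}, P = {p:.1f}")
```

In this sketch both channels cut the equilibrium quantity, which is one way to see how demand-side privacy can restore equilibrium even when the technical O-D balance does not move.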

Cyber insurance is neglected

With this market foundation in place, cybersecurity plugs into the core of economics. Insurance becomes tractable as a way to mitigate harms, and also as a way to model AI risk. To date, the AI safety conversation has seldom involved markets, yet finance arguably models risk in a purer sense than any other field. As cybersecurity matures from its early 'hacktivism' phase into market commodification, the defensive side can also think about creating its own market ecosystems. Many potential EA cause areas involving markets have questionable neglectedness, but cybersecurity insurance has received almost no public attention.

It should not be considered as niche a cause area as it currently is. The insurance sector has already proven to be an important advocate on several public policy issues. Climate scientists raised the alarm about global warming early on through re-insurers, driving the public dialogue, and insurers were also a key factor in the creation of seat belt laws, among other examples. Estimated at US$17 billion in 2023, the cybersecurity insurance market is almost three orders of magnitude smaller than the above figure for harms from cybercrime. The optimal market size is difficult to define, but it could easily be more than an order of magnitude larger, even before accounting for growth of the underlying problem.
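As a quick check on "almost three orders of magnitude," dividing the roughly 10 trillion dollar annual harm forecast cited earlier by the 2023 market estimate gives:

```latex
\frac{\$10 \times 10^{12}}{\$17 \times 10^{9}} \approx 588 \approx 10^{2.77}
```

So the gap is a little under three orders of magnitude (a factor of 1,000 would be exactly three). The two figures also refer to different years: a 2025 forecast versus a 2023 estimate.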

Around 2022, in the wake of the ransomware wave, increased skepticism emerged about the future of the insurance model. Premiums to end users rose, while insurers were forced to cede their own profit margins to re-insurers. Ransomware may appear to be a problem of moral hazard, as insured entities could use insurer funds to negotiate with criminals; in fact, the moral hazard problem may have been worse before attackers had such open financial motivations. Theft of personal information could previously have been treated as a victimless crime: an externality that insurers and the insured were jointly motivated to conceal, and one that has now been properly internalized.

The current bottleneck in cybersecurity is an explosion of stakeholders, which raises the transaction costs that inhibit Coasean bargaining in a political (and geopolitical) environment characterized by increasing mistrust. This would make capitalization an important hurdle, yet certain structural factors combine to make cyber-insurance a difficult business line that is arguably chronically undercapitalized. Cyber-risk is much more difficult to model than other lines of insurance, facing problems like the potential for catastrophic failures that are not contained by geography, few sources of historical data, and uncertain impacts from AI. Notably, these are precisely the problems considered within AI safety, although expressed in a different way.
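One way to see why an explosion of stakeholders matters for Coasean bargaining: if, purely as an illustrative assumption, every pair of $n$ stakeholders had to reach its own bilateral agreement, the number of negotiations would grow quadratically:

```latex
\binom{n}{2} = \frac{n(n-1)}{2}, \qquad n = 10 \Rightarrow 45, \qquad n = 100 \Rightarrow 4950
```

Real bargaining is rarely fully bilateral, so this overstates the count, but it shows how transaction costs can outpace the growth in the number of parties.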

One particular issue worth highlighting, common both to cybersecurity and many AI "doom" scenarios, is the role of state power. Insurers are increasingly arguing for an expansive definition of "acts of war" to exclude claims involving any state-based attack. From their perspective, these risks are indeed harder to model, yet at the same time, policyholders still require this coverage. Whether or not state-based attackers follow the same cost-benefit principles seems like a highly relevant AI risk question, which is now being raised through the insurance mechanism. Considering the larger geopolitical implications, it could be worth exploring state financial guarantees for the sector in exchange for bearing an expanded scope of responsibility.[4]

Discussion

This approach redefines misalignment as the unreasonable use of AI for overly sensitive applications in the first place, turning it into a regulation and market formation problem rather than a purely technical one. It is worth noting that uncertainty, meaning risk that cannot be properly modelled or insured because we don't even know the right questions to ask, has a long intellectual history. Nevertheless, even if the financial approach fails to accurately model some of the risks arising from AI, it is difficult to see how a user-side approach to safety (including other applications that negligently enable malicious use) might inhibit concurrent efforts on the supplier side. Possible exceptions might include a couple of edge cases: failure modes that are truly unfathomable ex ante, or an extinction so quick as to be painless.

This argument for continuity is not a case for sanguinity. Many risks existed even before AI entered the scene, and AI will create more. For better or worse, though, many fears about AI read like veiled critiques of capitalism itself, which is a somewhat different proposition. The evolutionary nature of AI training algorithms makes them inherently difficult to control, as they psychopathically 'cheat' their way out of badly specified controls, yet economists since Schumpeter have noted the evolutionary nature of economic growth. Growth has always been exponential, which is what gives interest rates such fundamental significance, and the exponential function itself can be defined as an evolutionary algorithm ($\frac{d}{dx}e^{x} = e^{x}$; the marginal scaling equals the level that has already been achieved). In short, corporations are already post-human actors with the potential to go rogue.
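The idea that exponential growth adds increments proportional to the level already achieved can be checked numerically. This is a minimal sketch using compound growth, which is equivalent to Euler's method for dy/dx = y; the function name `compound` and the step counts are illustrative choices. As growth at a 100% rate is compounded over more, smaller periods, one unit of starting value approaches e, which is the link to interest rates.

```python
import math


def compound(level: float, rate: float, steps: int) -> float:
    """Grow `level` over `steps` periods, each adding (rate / steps) times the current level.

    Each increment is proportional to the level already reached: the discrete
    version of dy/dx = y (Euler's method with step size 1 / steps when rate = 1).
    """
    step_rate = rate / steps
    for _ in range(steps):
        level += step_rate * level
    return level


for steps in (1, 12, 365, 100_000):
    print(steps, compound(1.0, 1.0, steps))
print("e =", math.e)
```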

On a more practical cybersecurity level, however much faith we might have in machine objectivity, trial-and-error testing at scale is essentially always indispensable. Economic structure also helps explain the very practical cybersecurity problem of legacy code. Some older companies still use 60-year-old software stacks, a persistence pattern that looks similar to the information transmission of DNA.

Some criticisms of capitalism are quite valid. Markets have no inherent interest in discriminating between fixed characteristics of an individual and other characteristics which create positive incentives, which comes back to the basic fact that correlation isn't causation. This becomes ever more relevant as we further streamline the ability of corporations to arbitrarily exclude certain actors from the financial system and the internet. Necessary as such actions may be, they point to potential tradeoffs between X-risks and S-risks which are worth exploring.

When thinking specifically about scaling AI safety, however, markets are an unavoidable topic. Malicious actors create market ecosystems, which extend conceptually to nation-states. Governments on the 'blue team' unavoidably look to existing stakeholders, frequently corporations, when establishing social consensus on a balance between accelerationism and decelerationism. If functionality restrictions are indeed necessary, we must ask who is on the short side of that transaction, in a position to make a profit. Crude restrictions on processing power, under the premise that consumers don't yet understand the transformative power of AI, are brittle and likely to fail just when we depend on them.

Institutional biases can be a tricky topic to work around. Other approaches to AI safety certainly exist, such as mechanistic interpretability, which cannot be represented in economic models. For such an abstract topic, it is essential that as diverse a range of perspectives as possible be presented.

- ^

While on the topic of geopolitical risk, AI, and marginality, it is also worth making a note about the problem of retrospective attribution. Suppose AI caused superpowers to engage in (conventional, non-automated) nuclear war, which they would not have done in an imaginary counterfactual world without that level of AI development. We, as analysts (now camped in our bunkers), will not get to see this counterfactual world. Instead, we will see a multitude of risk factors, which may or may not have any apparent relation to AI. This is a good reminder that effects can appear in very different places from root causes. Financial flows of various sorts may provide a better indication of risk than the capability level of the most powerful AI system, implying the need for an economic approach to AI risk.

- ^

From the Wired article,

Digital computers are very good at scalability. Analog is very good at complex interactions between variables.

To expound on this difference, consider an exponential function $m^k$, where the exponent $k$ refers to the number of variables, and the base $m$ corresponds to the precision (or size) of each variable. Calculus tells us that increasing $m$ scales the overall result faster; however, $k$ may correspond better to objects in the real world, particularly in the presence of measurement error. In particular, it could also correspond to attack surfaces, at least before considering encryption, which is more digital in nature.

The underlying principles here are older than computers and are reflected in statistics as well. Standard descriptive statistics, such as the mean and standard deviation, are digital in nature and preserve the precision of the underlying data, after properly accounting for any mechanical transformations. Dimensionality reduction techniques like principal component analysis (PCA), on the other hand, discover latent variables ("dimensions") hidden within the data. PCA includes a non-algebraic step of ranking the latent variables, which creates the same explainability problems as AI. Indeed, neural nets could be considered a generalization of PCA.
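To illustrate the ranking step, here is a minimal PCA sketch in NumPy on synthetic data, assuming three observed columns driven by one hidden factor plus noise (the data-generating process is invented for illustration). The eigen-decomposition of the covariance matrix is standard linear algebra; the `argsort` line is the step that ranks the latent dimensions by the variance they explain.

```python
import numpy as np


def pca(data: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return component variances (largest first) and matching component vectors (as columns)."""
    centered = data - data.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]        # ranking step: sort latent dimensions by variance
    return eigvals[order], eigvecs[:, order]


# Synthetic data: one hidden factor drives the first two observed columns.
rng = np.random.default_rng(0)
hidden = rng.normal(size=500)
noise = 0.1 * rng.normal(size=(500, 3))
observed = np.column_stack([hidden, 2.0 * hidden, np.zeros(500)]) + noise

variances, components = pca(observed)
explained = variances / variances.sum()
print("share of variance per component:", np.round(explained, 3))
```

The first component recovers the hidden factor, but nothing in the output labels it as such; interpreting what a ranked dimension "means" is left to the analyst, which is the explainability problem the text describes.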

This concept is even manifested on a philosophical level. The Age of AI notes that in contrast to most enlightenment philosophers,

Wittgenstein counseled that knowledge was to be found in generalizations about similarities across phenomena, which he termed “family resemblances” ...The quest to define and catalog all things, each with its own sharply delineated boundaries, was mistaken, he held. Instead, one should seek to define “This and similar things” and achieve familiarity with the resulting concepts, even if they had “blurred” or “indistinct” edges.

[Italics in original.] The authors note that this thinking later informed approaches to AI.

- ^

Illicit online markets do not depend entirely on digital currencies, and digital currencies have legitimate uses, but they can still help demonstrate the interplay between encryption strength, system integration, and market valuation. Their market valuation depends in part on integration into the formal financial system, a highly sensitive application. This facet is uniquely digital and arguably does not apply to other historical analogues like wildcat currencies. Cryptocurrencies were the last major application of commodity computing prior to AI.

- ^

This is also in line with the existing public-private model of terrorism insurance promoted in the wake of 9/11. The Terrorism Risk Insurance Program in the US is in fact starting to focus on cybersecurity: according to its 2022 report, while insurers are indeed excluding "acts of [cyber] war," acts of terrorism as certified by the US government are an exception to that exception. If broadened beyond the somewhat limited frame of terrorism, designations like these could open up space for bargaining between great powers on the margin, helping formalize rules of engagement in order to prevent escalation.
