The Happier Lives Institute (HLI) is a non-profit research institute that seeks to find the best ways to improve global wellbeing, then share what we find. Established in 2019, we have pioneered the use of subjective wellbeing measures (aka ‘taking happiness seriously’) to work out how to do the most good.

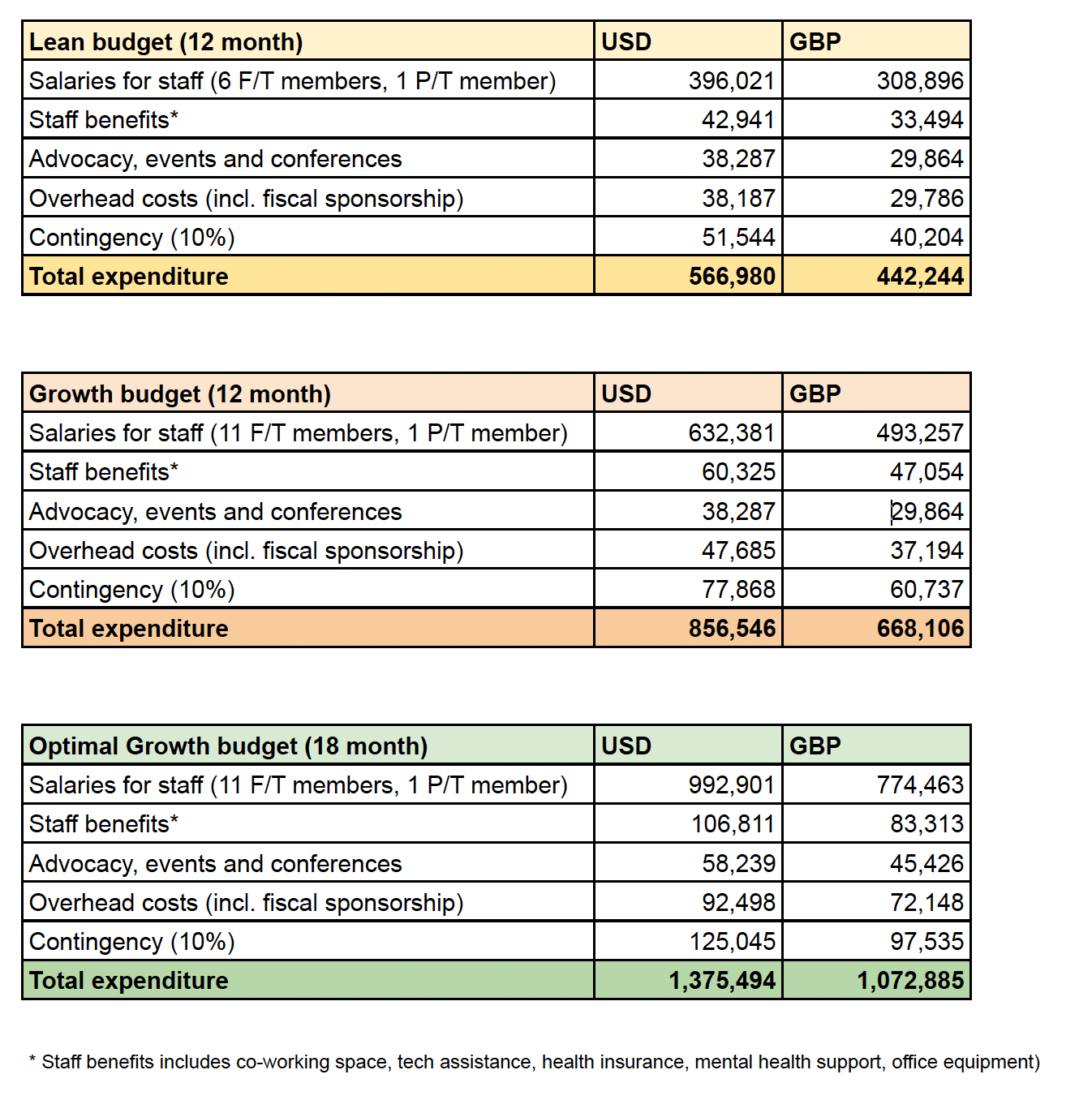

HLI is currently funding constrained and needs to raise a minimum of 205,000 USD to cover operating costs for the next 12 months. We think we could usefully absorb as much as 1,020,000 USD, which would allow us to expand the team, substantially increase our output, and provide a runway of 18 months.

This post is written for donors who might want to support HLI’s work to:

- identify and promote the most cost-effective marginal funding opportunities for improving human happiness.

- support a broader paradigm shift in philanthropy, public policy, and wider society, to put people’s wellbeing, not just their wealth, at the heart of decision-making.

- improve the rigour of analysis in effective altruism and global priorities research more broadly.

A summary of our progress so far:

- Our starting mission was to advocate for taking happiness seriously and see if that changed the priorities for effective altruists. We’re the first organisation to look for the most cost-effective ways to do good, as measured in WELLBYs (Wellbeing-adjusted life years)[1]. We didn’t invent the WELLBY (it’s also used by others e.g. the UK Treasury) but we are the first to apply it to comparing which organisations and interventions do the most good.

- Our focus on subjective wellbeing (SWB) was initially treated with an (understandable!) dose of scepticism. Since then, many of the major actors in effective altruism’s global health and wellbeing space seem to have come around to it (e.g., see these comments by GiveWell, Founders Pledge, Charity Entrepreneurship, GWWC). [Paragraph above edited 10/07/2023 to replace 'all' with 'many' and remove a name (James Snowden) from the list. See below]

- We’ve assessed several top-regarded interventions for the first time in terms of WELLBYs: cash transfers, deworming, psychotherapy, and anti-malaria bednets. We found treating depression is several times more cost-effective than either cash transfers or deworming. We see this as important in itself as well as a proof of concept: taking happiness seriously can reveal new priorities. We've had some pushback on our results, which was extremely valuable. GiveWell’s own analysis concludes treating depression is 2x as good as cash transfers (see here, which includes our response to GiveWell).

- We strive to be maximally philosophically and empirically rigorous. For instance, our meta-analysis of cash transfers has since been published in a top academic journal. We’ve shown how important philosophy is for comparing life-improving against life-extending interventions. We’ve won prizes: our report re-analysing deworming led GiveWell to start their “Change Our Mind” competition. Open Philanthropy awarded us money in their Cause Exploration Prize.

- Our work has an enormous global scope for doing good by influencing philanthropists and public policy-makers to both (1) redirect resources to the top interventions we find and (2) improve prioritisation in general by nudging decision-makers to take a wellbeing approach (leading to resources being spent better, even if not ideally).

- Regarding (1), we estimate that just over the period of Giving Season 2022, we counterfactually moved around $250,000 to our top charity, StrongMinds; this was our first campaign to directly recommend charities to donors[2].

- Regarding (2), the Mental Health Funding Circle started in late 2022 and has now disbursed $1m; we think we had substantial counterfactual impact in causing them to exist. In a recent 80k podcast, GiveWell mentioned that our work has influenced their thinking (GiveWell, by their count, influences $500m a year)[3].

- We’ve published over 25 reports or articles. See our publications page.

- We’ve achieved all this with a small team. Presently, we’re just five (3.5 FTE researchers). We believe we really 'punch above our weight', doing high impact research at a low cost.

- However, we are just getting started. It takes a while to pioneer new research, find new priorities, and bring people around to the ideas. We’ve had some impact already, but really we see that traction as evidence we’re on track to have a substantial impact in the future.

What’s next?

Our vision is a world where everyone lives their happiest life. To get there, we need to (a) work out what the priorities are and (b) have decision-makers in philanthropy and policy-making (and elsewhere) take action. To achieve this, the key pieces are:

- conducting research to identify different priorities compared to the status quo approaches (both to do good now and make the case)

- developing the WELLBY methodology, which includes ethical issues such as moral uncertainty and comparing quality to quantity of life

- promoting and educating decision-makers on WELLBY monitoring and evaluation

- building the field of academic researchers taking a wellbeing approach, including collecting data on interventions.

Our organisational strategy is built around making progress towards these goals. Today, we've released a new Research Agenda for 2023-4, which covers much of the below in more depth.

In the next six months, we have two priorities:

Build the capacity and professionalism of the team:

- We’re currently recruiting a communications manager. We’re good at producing research, but less good at effectively telling people about it. The comms manager will be crucial to lead the charge for Giving Season this year.

- We’re about to open applications for a Co-Director. They’ll work with me and focus on development and management; these aren’t my comparative advantage and it’ll free me up to do more research and targeted outreach.

- We’re likely to run an open round for board members too.

And, to do more high-impact research, specifically:

- Finding two new top recommended charities. Ideally, at least one will not be in mental health.

- To do this, we’re currently conducting shallow research of several causes (e.g., non-mood related mental health issues, child development effects, fistula repair surgery, and basic housing improvements) with the aim of identifying promising interventions.

- Alongside that, we’re working on a wider research agenda, including: an empirical survey to better understand how much we can trust happiness surveys; summarising what we’ve learnt about WELLBY cost-effectiveness so we can share it with others; revising working papers on the nature and measurement of wellbeing; and a book review of Will MacAskill’s ‘What We Owe The Future’.

The plan for 2024 is to continue developing our work by building the organisation, doing more good research, and then telling people about it. In particular:

- Investigate 4 or 5 more cause areas, with the aim of adding a further three top charities by the end of 2024.

- Develop the WELLBY methodology, exploring, for instance, the social desirability bias in SWB scales

- Explore wider global priorities/philosophical issues, e.g. on the badness of death and longtermism.

- For a wider look at these plans, see our Research Agenda for 2023-4, which we’ve just released.

- If funding permits, we want to grow the team and add three researchers (so we can go faster) and a policy expert (so we can better advocate for WELLBY priorities with governments).

- (maybe) scale up providing technical assistance to NGOs and researchers on how to assess impact in terms of WELLBYs (we do a tiny amount of this now)

- (maybe) launch a ‘Global Wellbeing Fund’ for donors to give to.

- (maybe) explore moving HLI inside a top university.

We need you!

We think we’ve shown we can do excellent, important research and cause outsized impact on a limited budget. We want to thank those who’ve supported us so far. However, our financial position is concerning: we have about 6 months’ reserves and need to raise a minimum of 205,000 USD to cover our operational costs for the next 12 months. This is despite our staff earning about half what they would in comparable roles in other organisations. At most, we think we could usefully absorb 1,020,000 USD to cover team expansion to 11 full time employees over the next 18 months.

We hope the problem is that donors believe the “everything good is fully funded” narrative and don’t know that we need them. However, we’re not fully-funded and we do need you! We don’t get funding from the two big institutional donors, Open Philanthropy and the EA Infrastructure fund (the former doesn’t fund research in global health and wellbeing; we didn’t get feedback from the latter). So, we won’t survive, let alone grow, unless new donors come forward and support us now and into the future.

Whether or not you’re interested in supporting us directly, we would like donors to consider funding our recommended charities; we aim to add two more to our list by the end of 2023. We expect these will be able to absorb millions or tens of millions of dollars, and this number will expand as we do more research.

We think that helping us ‘keep the lights on’ for the next 12-24 months represents an unusually large counterfactual opportunity for donors as we expect our funding position to improve. We’ll explore diversifying our funding sources by:

- Seeking support from the wider world of philanthropy (where wellbeing and mental health are increasingly popular topics)

- Acquiring conventional academic funding (we can’t access this yet as we’re not UKRI registered, but we’re working on this; we are also in discussions about folding HLI into a university)

- Providing technical consultancy on wellbeing-based monitoring and evaluation of projects (we’re having initial conversations about this too).

To close, we want to emphasise that taking happiness seriously represents a huge opportunity to find better ways to help people and reallocate enormous resources to those things, both in philanthropy and in public policy-making. We’re the only organisation we know of focusing on finding the best ways to measure and improve the quality of lives. We sit between academia, effective altruism and policy-making, making us well-placed to carry this forward; if we don’t, we don’t know who else will.

If you’re considering funding us, I’d love to speak with you. Please reach out to me at michael@happierlivesinstitute.org and we’ll find time to chat. If you’re in a hurry, you can donate directly here.

Appendix 1: HLI budget

- [1] One WELLBY is equivalent to a 1-point increase on a 0-10 life satisfaction scale for one year.

- [2] The total across two matching campaigns at the Double-Up Drive, the Optimus Foundation, as well as donations via three effective giving organisations (Giving What We Can, RC Forward, and Effektiv Spenden) was $447k. Note that not all this data is public and some public data is out of date. The sum donated may be larger, as donations may have come from other sources. We encourage readers to take this with a pinch of salt, and we plan to work out how to do more accurate tracking in future.

- [3] Some quotes about HLI’s work from the 80k podcast:

[Elie Hassenfeld] “I think the pro of subjective wellbeing measures is that it’s one more angle to use to look at the effectiveness of a programme. It seems to me it’s an important one, and I would like us to take it into consideration.”

[Elie] “…I think one of the things that HLI has done effectively is just ensure that this [using WELLBYs and how to make tradeoffs between saving and improving lives] is on people’s minds. I mean, without a doubt their work has caused us to engage with it more than we otherwise might have. […] it’s clearly an important area that we want to learn more about, and I think could eventually be more supportive of in the future.”

[Elie] “Yeah, they went extremely deep on our deworming cost-effectiveness analysis and pointed out an issue that we had glossed over, where the effect of the deworming treatment degrades over time. […] we were really grateful for that critique, and I thought it catalysed us to launch this Change Our Mind Contest.”
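The WELLBY definition in the first footnote comes down to simple arithmetic. You take the change in life satisfaction (points on the 0-10 scale) and multiply it by the number of years the change lasts, then add this up across the people affected. The Python sketch below only illustrates that arithmetic. It is not HLI's cost-effectiveness model. The function names are hypothetical, and the sketch assumes a constant effect, whereas real analyses typically model effects that fade over time (see the deworming point in footnote 3). The 0.5-point, 2-year, 10-person case is a made-up example. The last line applies the same logic to the 77 WELLBYs per $1,000 figure quoted in the comments.

```python
def wellbys(ls_change: float, years: float, people: int = 1) -> float:
    """WELLBYs from a change in 0-10 life satisfaction.

    Illustrative assumption: the change is constant over `years`
    and identical for each of `people` (no decay, no spillovers).
    """
    return ls_change * years * people


def cost_per_wellby(cost_usd: float, total_wellbys: float) -> float:
    """Dollars spent per WELLBY generated (lower is better)."""
    if total_wellbys <= 0:
        raise ValueError("total_wellbys must be positive")
    return cost_usd / total_wellbys


# One person, +1 point for one year: exactly 1 WELLBY, per the footnote.
print(wellbys(1.0, 1.0))  # 1.0
# Hypothetical: a 0.5-point gain lasting 2 years for 10 people.
print(wellbys(0.5, 2.0, people=10))  # 10.0
# 77 WELLBYs per $1,000 implies roughly $13 per WELLBY.
print(round(cost_per_wellby(1000, 77), 2))  # 12.99
```

Quoting results as dollars per WELLBY or as WELLBYs per $1,000 is just a choice of presentation. One is the inverse of the other, so both rank interventions in the same order.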

>Since then, all the major actors in effective altruism’s global health and wellbeing space seem to have come around to it (e.g., see these comments by GiveWell, Founders Pledge, Charity Entrepreneurship, GWWC, James Snowden).

I don't think this is an accurate representation of the post linked to under my name, which was largely critical.

[Speaking for myself here]

I also thought this claim by HLI was misleading. I clicked several of the links and don't think James is the only person being misrepresented. I also don't think these are all the "major actors in EA's GHW space" - TLYCS, for example, meets reasonable definitions of "major" but its methodology makes no mention of WELLBYs.

Hello James. Apologies, I've removed your name from the list.

To explain why we included it, although the thrust of your post was to critically engage with our research, the paragraph was about the use of the SWB approach for evaluating impact, which I believed you were on board with. In this sense, I put you in the same category as GiveWell: not disagreeing about the general approach, but disagreeing about the numbers you get when you use it.

Thanks for editing, Michael. Fwiw, I am broadly on board with SWB being a useful framework to answer some questions. But I don’t think I’ve shifted my opinion on that much, so “coming round to it” didn’t resonate.

Hi everyone,

To fully disclose my biases: I’m not part of EA, I’m Greg’s younger sister, and I’m a junior doctor training in psychiatry in the UK. I’ve read the comments, the relevant areas of HLI’s website and the Ozler study registration, and I've spent more time than needed looking at the dataset in the Google doc and clicking random papers.

I’m not here to pile on, and my brother doesn’t need me to fight his corner. I would inevitably undermine any statistics I tried to back up due to my lack of talent in this area. However, this is personal to me, not only because I'm wondering about the fate of my Christmas present (Greg donated to Strongminds on my behalf), but also because I'm someone who is deeply sympathetic to HLI’s stance that mental health research and interventions are chronically neglected, misunderstood and under-funded. I have a feeling I’m not going to match the tone here as I’m not part of this community (and apologise in advance for any offence caused), but perhaps I can offer a different perspective as a doctor with clinical practice in psychiatry and on an academic fellowship (i.e. I have dedicated research time in the field of mental health).

The conflict seems to be that, on one hand, HLI has im...

Strongly upvoted for the explanation and demonstration of how important peer-review by subject matter experts is. I obviously can't evaluate either HLI's work or your review, but I think this is indeed a general problem of EA where the culture is, for some reason, aversive to standard practices of scientific publishing. This has to be rectified.

[Speaking from a UK perspective with much less knowledge of non-medical psychotherapy training]

I think the importance is having a strong mental health research background, particularly in systematic review and meta-analysis. If you have an expert in this field then the need for clinical experience becomes less important (perhaps, depends on HLI's intended scope).

It's fair to say psychology and psychiatry do commonly blur boundaries with psychotherapy as there are different routes of qualification - it can be with a PhD through a psychology/therapy pathway, or there is a specialism in psychotherapy that can be obtained as part of psychiatry training (a bit like how neurologists are qualified through specialism in internal medicine training). Psychotherapists tend to be qualified in specific modalities in order to practice them independently e.g. you might achieve accreditation in psychoanalytic psychotherapy, etc. There are a vast number of different professionals (me included, during my core training in psychiatry) who deliver psychotherapy under supervision of accredited practitioners so the definition of therapist is blurry.

Psychotherapy is similarly researched through the perspe...

[Own views]

- I think we can be pretty sure (cf.) the forthcoming strongminds RCT (the one not conducted by Strongminds themselves, which allegedly found an effect size of d = 1.72 [!?]) will give dramatically worse results than HLI's evaluation would predict - i.e. somewhere between 'null' and '2x cash transfers' rather than 'several times better than cash transfers, and credibly better than GW top charities.' [I'll donate 5k USD if the Ozler RCT reports an effect size greater than d = 0.4 - 2x smaller than HLI's estimate of ~ 0.8, and below the bottom 0.1% of their monte carlo runs.]

- This will not, however, surprise those who have criticised the many grave shortcomings in HLI's evaluation - mistakes HLI should not have made in the first place, and definitely should not have maintained once they were made aware of them. See e.g. Snowden on spillovers, me on statistics (1, 2, 3, etc.), and Givewell generally.

- Among other things, this would confirm a) SimonM produced a more accurate and trustworthy assessment of Strongminds in their spare time as a non-subject matter expert than HLI managed as the centrepiece of their activity; b) the ~$250 000 HLI has moved to SM should be counted on th...

Hi Greg,

Thanks for this post, and for expressing your views on our work. Point by point:

- I agree that StrongMinds' own study had a surprisingly large effect size (1.72), which was why we never put much weight on it. Our assessment was based on a meta-analysis of psychotherapy studies in low-income countries, in line with academic best practice of looking at the wider sweep of evidence, rather than relying on a single study. You can see how, in table 2 below, reproduced from our analysis of StrongMinds, StrongMinds' own studies are given relatively little weight in our assessment of the effect size, which we concluded was 0.82 based on the available data. Of course, we'll update our analysis when new evidence appears and we're particularly interested in the Ozler RCT. However, we think it's preferable to rely on the existing evidence to draw our conclusions, rather than on forecasts of as-yet unpublished work. We are preparing our psychotherapy meta-analysis to submit it for academic peer review so it can be independently evaluated but, as you know, academia moves slowly.

- We are a young, small team with much to learn, and of course, we'll make mistakes. But, I wouldn't characterise th...

Hello Michael,

Thanks for your reply. In turn:

1:

HLI has, in fact, put a lot of weight on the d = 1.72 Strongminds RCT. As table 2 shows, you give a weight of 13% to it - joint highest out of the 5 pieces of direct evidence. As there are ~45 studies in the meta-analytic results, this means this RCT is being given equal or (substantially) greater weight than any other study you include. For similar reasons, the Strongminds phase 2 trial is accorded the third highest weight out of all studies in the analysis.

HLI's analysis explains the rationale behind the weighting of "using an appraisal of its risk of bias and relevance to StrongMinds’ present core programme". Yet table 1A notes the quality of the 2020 RCT is 'unknown' - presumably because Strongminds has "only given the results and some supporting details of the RCT". I don't think it can be reasonable to assign the highest weight to an (as far as I can tell) unpublished, not-peer reviewed, unregistered study conducted by Strongminds on its own effectiveness reporting an astonishing effect size - before it has even been read in full. It should be dramatically downweighted or wholly discounted until then, rather than included a...

Hello Gregory. With apologies, I’m going to pre-commit to making this my last reply to you on this post. This thread has been very costly in terms of my time and mental health, and your points below are, as far as I can tell, largely restatements of your earlier ones. As briefly as I can, and point by point again.

1.

A casual reader looking at your original comment might mistakenly conclude that we only used StrongMinds' own study, and no other data, for our evaluation. Our point was that SM’s own work has relatively little weight, and we rely on many other sources. At this point, your argument seems rather ‘motte-and-bailey’. I would agree with you that there are different ways to do a meta-analysis (your point 3), and we plan to publish our new psychotherapy meta-analysis in due course so that it can be reviewed.

2.

Here, you are restating your prior suggestions that HLI should be taken in bad faith. Your claim is that HLI is good at spotting errors in others’ work, but not its own. But there is an obvious explanation about 'survivorship' effects. If you spot errors in your own research, you strip them out. Hence, by the time you publish, you’ve found all the ones you...

Props on the clear and gracious reply.

I sense this is wrong. If I think unpublished work will change my conclusions a lot, I change my conclusions some of the way now, though I understand that's a weird thing to do and perhaps hard to justify. Nonetheless, I think it's the right move.

Could you say a bit more about what you mean by "should not have maintained once they were made aware of them" in point 2? As you characterize below, this is an org "making a funding request in a financially precarious position," and in that context I think it's even more important than usual to be clear about how HLI has "maintained" its "mistakes" "once they were made aware of them." Furthermore, I think the claim that HLI has "maintained" is an important crux for your final point.

Example: I do not like that HLI's main donor advice page lists the 77 WELLBY per $1,000 estimate with only a very brief and neutral statement that "Note: we plan to update our analysis of StrongMinds by the end of 2023." There is a known substantial, near-typographical error underlying that analysis:

...

Hello Jason,

With apologies for the delay. I agree with you that I am asserting HLI's mistakes have further 'aggravating factors', which I also assert invite highly adverse inference. I had hoped the links I gave provided clear substantiation, but demonstrably not (my bad). Hopefully my reply to Michael makes them somewhat clearer, but in case not, I give a couple of examples below with the best explanation I can muster.

I will also be linking and quoting extensively from the Cochrane handbook for systematic reviews - so hopefully even if my attempt to clearly explain the issues fails, a reader can satisfy themselves my view on them agrees with expert consensus. (Rather than, say, "Cantankerous critic with idiosyncratic statistical tastes flexing his expertise to browbeat the laity into acquiescence".)

0) Per your remarks, there are various background issues around reasonableness, materiality, timeliness etc. I think my views basically agree with yours. In essence: I think HLI is significantly 'on the hook' for work (such as the meta-analysis) it relies upon to make recommendations to donors - who will likely be taking HLI's representations on its results and reliability (cf...

I really appreciate you putting in the work and being so diligent Gregory. I did very little here, though I appreciate your kind words. Without you seriously digging in, we’d have a very distorted picture of this important area.

edited so that I only had a couple of comments rather than 4

I am confident those involved really care about doing good and work really hard. And I don't want that to be lost in this confusion. Something is going on here, but I think "it is confusing" is better than "HLI are baddies".

For clarity being 2x better than cash transfers would still provide it with good reason to be on GWWC's top charity list, right? Since GiveDirectly is?

I guess the most damning claim seems to be about dishonesty, which I find hard to square with the caliber of the team. So, what's going on here? If, as seems likely, the forthcoming RCT downgrades SM a lot and the HLI team should have seen this coming, why didn't they act? Or do they still believe that the RCT will return very positive results? What happens when, as seems likely, they are very wrong?

Note that SimonM is a quant by day and for a time was top on Metaculus, so I am less surprised that he can produce such high caliber work in his sp...

+1 Regarding extending the principle of charity towards HLI. Anecdotally it seems very common for initial CEA estimates to be revised down as the analysis is critiqued. I think HLI has done an exceptional job at being transparent and open regarding their methodology and the source of disagreements, e.g. see Joel's comment outlining the sources of disagreement between HLI and GiveWell, which I thought was really exceptional (https://forum.effectivealtruism.org/posts/h5sJepiwGZLbK476N/assessment-of-happier-lives-institute-s-cost-effectiveness?commentId=LqFS5yHdRcfYmX9jw). Obviously I haven't spent as much time digging into the results as Gregory has, but the mistakes he points to don't seem like the kind that should be treated too harshly.

As a separate point, I think it's generally a lot easier to critique and build upon an analysis after the initial work has been done. E.g. even if it is the case that SimonM's assessment of Strong Minds is more reliable than HLI's (HLI seem to dispute that the critiques he levies are all that important, as they only assign a 13% weight to that RCT), this isn't necessarily evidence that SimonM is more competent than the HLI team. When the heavy lifting has been done, it's easier to focus in on particular mistakes (and of course valuable to do so!).

Here is a manifold market for Gregory's claim if you want to bet on it.

HLI - but if for whatever reason they're unable or unwilling to receive the donation at resolution, Strongminds.

The 'resolution criteria' are also potentially ambiguous (my bad). I intend to resolve any ambiguity stringently against me, but you are welcome to be my adjudicator.

[To add: I'd guess ~30-something% chance I end up paying out: d = 0.4 is at or below pooled effect estimates for psychotherapy generally. I am banking on significant discounts with increasing study size and quality (as well as other things I mention above I take as adverse indicators), but even if I price these right, I expect high variance.

I set the bar this low (versus, say, d = 0.6 - at the ~ 5th percentile of HLI's estimate) primarily to make a strong rod for my own back. Mordantly criticising an org whilst they are making a funding request in a financially precarious position should not be done lightly. Although I'd stand by my criticism of HLI even if the trial found Strongminds was even better than HLI predicted, I would regret being quite as strident if the results were any less than dramatically discordant.

If so, me retreating to something like "Meh, they got lucky"/"Sure I wa...

I can also vouch for HLI. Per John Salter's comment, I may also have been a little sus early on (sorry Michael), but HLI's work has been extremely valuable for our own methodology improvements at Founders Pledge. The whole team is great, and I will second John's comment to the effect that Joel's expertise is really rare and that HLI seems to be the right home for it.

I guess I would very slightly adjust my sense of HLI, but I wouldn't really think of this as an "error." I don't significantly adjust my view of GiveWell when they delist a charity based on new information.

I think if the RCT downgrades StrongMinds' work by a big factor, that won't really introduce new information about HLI's methodology/expertise. If you think there are methodological weaknesses that would cause them to overstate StrongMinds' impact, those weaknesses should be visible now, irrespective of the RCT results.

I disagree with the valence of the comment, but think it reflects legitimate concerns.

I am not worried that "HLI's institutional agenda corrupts its ability to conduct fair-minded and even-handed assessment." I agree that there are some ways that HLI's pro-SWB-measurement stance can bleed into overly optimistic analytic choices, but we are not simply taking analyses by our research partners on faith and I hope no one else is either. Indeed, the very reason HLI's mistakes are obvious is that they have been transparent and responsive to criticism.

We disagree with HLI about SM's rating — we use HLI's work as a starting point and arrive at an undiscounted rating of 5-6x; subjective discounts place it between 1-2x, which squares with GiveWell's analysis. But our analysis was facilitated significantly by HLI's work, which remains useful despite its flaws.

Individual donors are, however, more likely to take a charity recommender's analysis largely on faith -- because they do not have the time or the specialized knowledge and skills necessary to kick the tires. For those donors, the main point of consulting a charity recommender is to delegate the tire-kicking duties to someone who has the time, knowledge, and skills to do that.

Was a little sus on HLI before I got the chance to work a little with them. Really bright and hardworking team. Joel McGuire has been especially useful.

We're planning on evaluating most if not all of our interventions using SWB on an experimental basis. Honestly, QALYs kinda suck so the bar isn't very high. I wouldn't have ever given this any thought without HLI's posts, however.

200K seems excellent value for money, even if the WELLBY adoption moonshot doesn't materialise.

I'm also impressed by this post. HLI's work has definitely shifted my priors on wellbeing interventions.

It's also great to see the organisation taking philosophical/empirical concerns seriously. I still have some concerns/questions about the efficacy of these interventions (compared to Givewell charities), but I am confident in HLI continuing to shed light on these concerns in the future.

For example, projects like the one below I think are really important.

and

Impressed by the post; I'd like to donate! Is there a way to do so that avoids card fees? And if so, at what donation size do you prefer that people start using it?

If you donate through PayPal Giving Fund here 100% of your donation goes to HLI, as PayPal pays all the transaction fees. (Disclaimer: I work for PayPal, but this comment reflects my views alone, not those of the company.)

My sense when a lot of sort of legitimate but edge-case criticisms are brought up with force is that something else might be going on. So I don't know how to ask this, but is there another point of disagreement that underlies this, rather than the SM RCT likely returning worse results?

[Edit: wrote this before I saw lilly's comment, would recommend that as a similar message but ~3x shorter].

============

I would consider Greg's comment as "brought up with force", but would not consider it an "edge case criticism". I also don't think James / Alex's comments are brought up particularly forcefully.

I do think it is worth making the case that pushback on comments that are easily misinterpreted or misleading is also not an edge-case criticism, especially if these are comments that directly benefit your organisation.

Given the stated goal of the EA community is "to find the best ways to help others, and put them into practice", it seems especially important that strong claims are sufficiently well-supported, and made carefully + cautiously. This is in part because the EA community should reward research outputs if they are helpful for finding the best ways to do good, not solely because they are strongly worded; in part because EA donors who don't have capacity to engage at the object level may be happy to defer to EA organisations/recommendations; and in part because the counterfactual impact diverted from the EA donor is likely higher than the average dono...

Here's my (working) model. I'm not taking a position on how to classify HLI's past mistakes or whether applying the model to HLI is warranted, but I think it's helpful to try to get what seems to be happening out in the open.

Caveat: Some of the paragraphs rely more heavily on my assumptions, extrapolations, and suggestions about the "epistemic probation" concept than on my read of the comments on this and other threads. And of course that concept should be seen mostly as a metaphor.

- Some people think HLI made some mistakes that impact their assessment of HLI's epistemic quality (e.g., some combination of not catching clear-cut model errors that were favorable to its recommended intervention, a series of modeling choices that, while defensible, were as a whole rather favorable to the same, and some overconfident public statements).

- Much of the concern here seems to be that HLI may be engaged in motivated reasoning (which could be 100% unconscious!) on the theory that its continued viability as an organization is dependent on producing some actionable results within the first few years of its existence.

- These mistakes have updated the people's assessment of HLI's epistemic qualit

...

[I don’t plan to make any (major) comments on this thread after today. It’s been time- and energy-intensive, and I plan to move back to other priorities.]

Hello Jason,

I really appreciated this comment: the analysis was thoughtful and the suggestions constructive. Indeed, it was a lightbulb moment. I agree that some people do have us on epistemic probation, in the sense they think it’s inappropriate to grant the principle of charity, and should instead look for mistakes (and conclude incompetence or motivated reasoning if they find them).

I would disagree that HLI should be on epistemic probation, but I am, of course, at risk of bias here, and I’m not sure I can defend our work without coming off as counter-productively defensive! That said, I want to make some comments that may help others understand what’s going on so they can form their own view, then set out our mistakes and what we plan to do next.

Context

I suspect that some people have had HLI on epistemic probation since we started - for perhaps understandable reasons. These are:

- We are advancing a new methodology, the happiness/SWB/WELLBY approach. Although there are decades of work in social science on this and it’s

...

I think your last sentence is critical: coming up with ways to improve epistemic practices and legibility is a lot easier where there are no budget constraints! It's hard for me to assess cost vs. benefit for suggestions, so the suggestions below should be taken with that in mind.

For any of HLI's donors who currently have it on epistemic probation: Getting out of epistemic probation generally requires additional marginal resources. Thus, it generally isn't a good idea to reduce funding based on probationary status. That would make about as much sense as "punishing" a student on academic probation by taking away their access to tutoring services they need to improve.

The suggestions below are based on the theory that the main source of probationary status (at least for individuals who would be willing to lift that status in the future) is the confluence of the overstated 2022 communications and some issues with the SM CEA. They lean a bit toward "cleaner and more calibrated public communication" because I'm not a statistician, but also because I personally value that in assessing the epistemics of an org that makes charity recommendations to the general public. I also lean in th...

I really like this concept of epistemic probation. I also agree about the challenges of making it private and of exiting such a state. Making it easier to exit criticism-heavy periods probably makes it easier to levy such a state in the first place (since you know that it is escapable).

Adding a +1 to Nathan's reaction here, this seems to have been some of the harshest discussion on the EA Forum I've seen for a while (especially on an object-level case).

Of course, making sure charitable funds are doing the good they claim is something that deserves attention, research, and sometimes a critical eye. From my perspective of wanting more pluralism in EA, it seems[1] to me that HLI is a worthwhile endeavour to follow (even if its programme ends up looking ~the same as or worse than cash transfers). Of all the charitable spending in the world, is HLI's really worth this much anger?

It just feels like there's inside baseball that I'm missing here.

[1] Weakly, of course; I claim no expertise or special ability in charity evaluation.

This is speculative, and I don't want this to be read as an endorsement of people's critical comments; rather, it's a hypothesis about what's driving the "harsh discussion":

It seems like one theme in people's critical comments is misrepresentation. Specifically, multiple people have accused HLI of making claims that are more confident and/or more positive than are warranted (see, e.g., some of the comments below, which say things like: "I don't think this is an accurate representation," "it was about whether I thought that sentence and set of links gave an accurate impression," and "HLI's institutional agenda corrupts its ability to conduct fair-minded and even-handed assessments").

I wonder if people are particularly sensitive to this, because EA partly grew out of a desire to make charitable giving more objective and unbiased, and so the perception that HLI is misrepresenting information feels antithetical to EA in a very fundamental way.

So there's now a bunch of speculation in the comments here about what might have caused me and others to criticise this post.

I think this speculation puts me (and, FWIW, HLI) in a pretty uncomfortable spot for reasons that I don't think are obvious, so I've tried to articulate some of them:

- There are many reasons people might want to discuss others' claims but not accuse them of motivated reasoning/deliberately being deceptive/other bad faith stuff, including (but importantly not limited to):

a) not thinking that the mistake (or any other behaviour) justifies claims about motivated reasoning/bad faith/whatever

b) not feeling comfortable publicly criticising someone's honesty or motivations for fear of backlash

c) not feeling comfortable publicly criticising someone's honesty or motivations because that's a much more hurtful criticism to hear than 'I think you made this specific mistake'

d) believing it violates forum norms to make this sort of public criticism without lots of evidence

- In situations where people are speculating about what I might believe but not have said, I do not have good options for moving that speculation closer to the truth, once I notice that this m...

(Apologies if this is the wrong place for an object-level discussion)

Suppose I want to give to an object-level mental health charity in the developing world but I do not want to give to StrongMinds. Which other mental health charities would HLI recommend?

One thing that confused me a little when looking over your selection process was whether HLI evaluated in depth any other mental health charities on your shortlist. Reading naively, it seems like (conditional upon a charity being on your shortlist) StrongMinds was chosen mostly for procedural reasons (they were willing to go through your detailed process) rather than because of high confidence that the charity is better than its peers. Did I read this correctly? If so, should donors wait until HLI or others investigate the other mental health charities and interventions in more detail? If not, what would be the top non-StrongMinds charities you would recommend for donors interested in mental health?

Hello Linch. We're reluctant to recommend organisations that we haven't been able to vet ourselves, but we are planning to vet some new mental health and non-mental health organisations in time for Giving Season 2023. The details are in our Research Agenda. For mental health, we say:

On how we chose StrongMinds, you've already found our selection process. Looking back at the document, I see that we don't get into the details, but it wasn't just procedural. We hadn't done a deep-dive analysis at that point (the point of the search process was to work out what we should look at in more depth), but our prior was that StrongMinds would come out at or close to the top anyway. To explain, it was delivering the intervention we thought would do the most good per person (therapy for depression), doing this cheaply (via lay-delivered interpersonal group therapy), and it seems to be a well-run organisation. I thought Friendship Bench might beat it (Friendship Bench had a volunteer model and so plausibly much lower costs but also lower efficacy), but they didn't offer us their data at the time, someth...

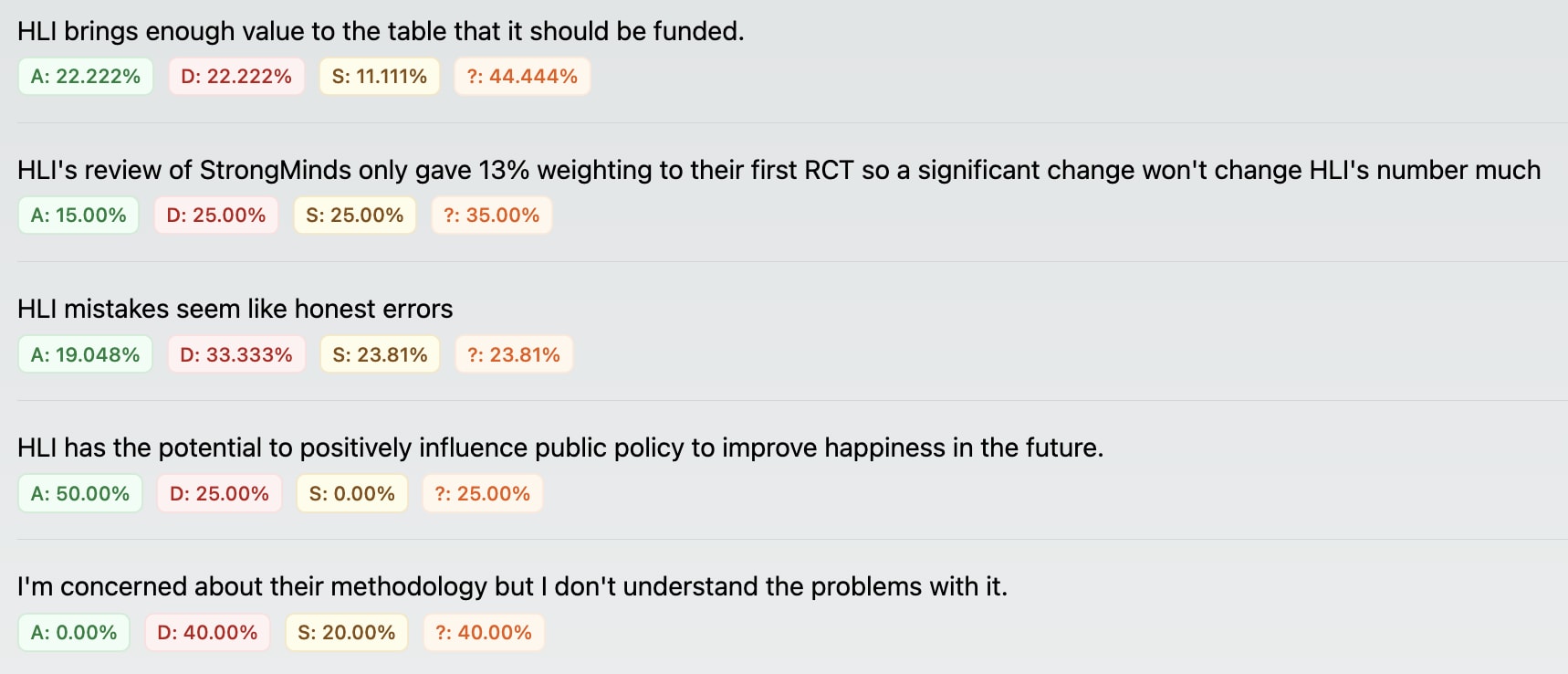

Takeaways poll

What are your takeaways having read the comments of this piece?

Personally I find it's good to understand what we all agree/disagree/are uncertain on.

Please add your own comments (there is a button at the bottom) or rewrite comments you find confusing into ones you could agree/disagree with.

Also, if you confidently know the answer to something people seem unsure about, please say so.

https://viewpoints.xyz/polls/concrete-takeaways-from-hli-post

Results (33 responses): https://viewpoints.xyz/polls/concrete-takeaways-from-hli-post/analytics

Consensus of agree/disagree

Uncertainty (let's write some more comments or give answers in the comments)

Some questions I'd like to know the answers to

- What would convince you that HLI brings enough value to the table that it should be funded?

- The weighting of the RCT seems to be a factual claim. How could it be written such that you'd agree with it, given the table below?

- How do you judge whether errors are honest or dishonest? What would be a clearer standard?

- What would HLI managing controversies well or badly look like?

- How could we know if SW is more well respec

...

To confirm, the main difference between the "growth" and "optimal growth" budget is the extension of the time period from 12 to 18 months? [I ask because I had missed the difference in length specification at first glance; without that, it would look like the biggest difference was paying staff about 50% more given that the number of FTEs is the same.]

The first two are good.

"Growth + more runway"? (plus a brief discussion of why you think adding +6 months of runway would increase impact). "Optimal" could imply a better rate of growth, when the difference seems to be more about stability.

Anyway, just donated. Although the odds of me moving away from GiveWell-style projects for my object-level giving are relatively modest, I think it's really important to have a good range of effective options for donors with various interests and philosophical positions.

My reading of the StrongMinds debate that has taken place is that the strength of the evidence wasn't sufficient to list StrongMinds as a top charity (relevant posts are 1, 2, 3).

With regard to spillovers, Joel McGuire says in a separate post:

If the data isn't good enough might it be worth suggesting people fund research studies rather than suggesting people fund the charity itself?

EDIT: I just want to say I would feel uncomfortable if anyone else updated too much based on my comments. I would encourage people to read the critiques I linked for themselves as well as HLI responses.