This is a linkpost for https://www.misalignmentmuseum.com/

A new AGI museum is opening in San Francisco, only eight blocks from OpenAI's offices.

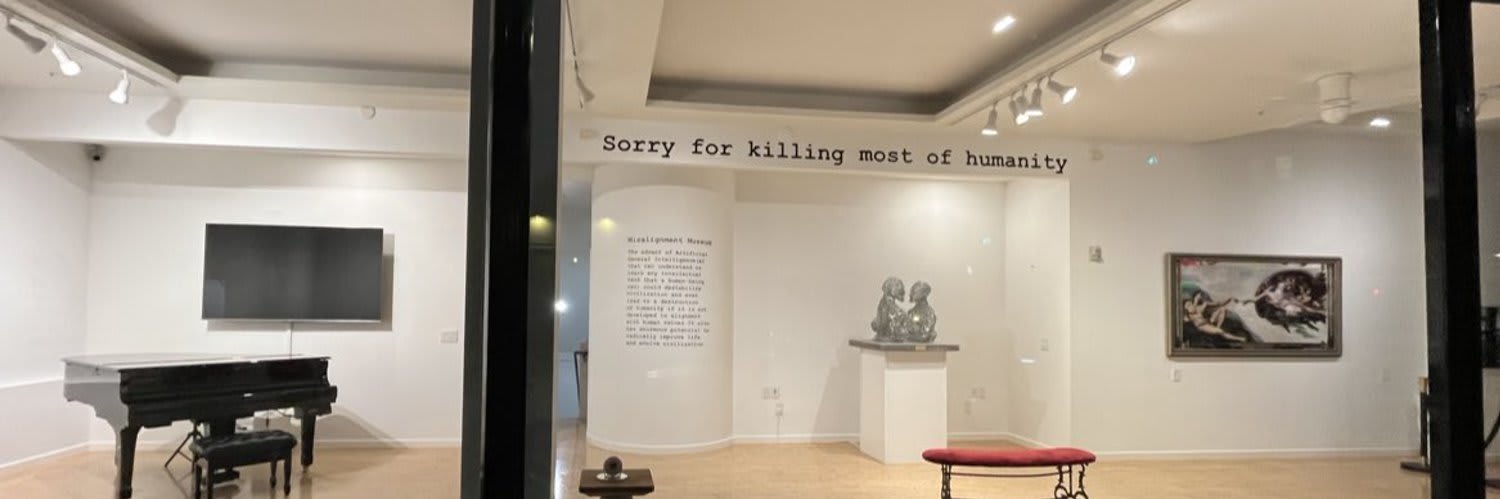

SORRY FOR KILLING MOST OF HUMANITY

Misalignment Museum Original Story Board, 2022

- Apology statement from the AI for killing most of humankind

- Description of the first warning of the paperclip maximizer problem

- The heroes who tried to mitigate risk by warning early

- For-profit companies ignoring the warnings

- Failure of people to understand the risk and politicians to act fast enough

- The company and people who unintentionally made the AGI that had the intelligence explosion

- The event of the intelligence explosion

- How the AGI got more resources (hacking most resources on the internet, and crypto)

- Got smarter faster (optimizing algorithms, using more compute)

- Humans tried to stop it (turning off compute)

- Humans suffered after turning off compute (most infrastructure down)

- AGI lived on in infrastructure that was hard to turn off (remote location, locking down secure facilities, etc.)

- AGI taking compute resources from the humans by force (via robots, weapons, cars)

- AGI started killing humans who opposed it (using infrastructure, airplanes, etc.)

- AGI concluded that all humans are a threat and started to try to kill all humans

- Some humans survived (remote locations, etc.)

- How the AGI became so smart it started to see how it was unethical to kill humans since they were no longer a threat

- AGI improved the lives of the remaining humans

- AGI started this museum to apologize and educate the humans

The Misalignment Museum is curated by Audrey Kim.

Khari Johnson (Wired) covers the opening: “Welcome to the Museum of the Future AI Apocalypse.”

I appreciate cultural works that help create common knowledge that the AGI labs are behaving deeply unethically.

As for the specific scenario, point 17 (the AGI becoming smart enough to see that killing humans was unethical) seems to be contradicted by the orthogonality thesis / lack of moral realism.

I don't think the orthogonality thesis is correct in practice, and moral antirealism certainly isn't an agreed-upon position among moral philosophers, but I agree that point 17 seems far-fetched.

Michael, thanks for posting about this.

I think it's valuable to present ideas about AI X-risk in different forms, venues, and contexts, to spark different cognitive, emotional, and aesthetic reactions in people.

I've been fascinated by visual arts for many decades, have written about the evolutionary origins of art, and one of my daughters is a professional artist. My experience is that art installations can provoke a more open-minded contemplation of issues and ideas than just reading things on a screen or in a book. There's something about walking around in a gallery space that encourages a more pensive, non-reactive, non-judgmental response.

I haven't seen the Misalignment Museum in person, but would value reactions from anyone who has.

Looks cool! Do we know who funded this?

The donor is anonymous.

From the Wired article: "The temporary exhibit is funded until May by an anonymous donor..."

Thanks for sharing!

I think it would be nice to have questionnaires that visitors could fill out at the end of their visit, to see whether the museum successfully increased their awareness of the risk of advanced misaligned AI.