Toby Ord’s The Precipice is an ambitious and excellent book. Among many other things, Ord attempts to survey the entire landscape of existential risks[1] humanity faces. As part of this, he provides, in Table 6.1, his personal estimates of the chance that various things could lead to existential catastrophe in the next 100 years. He discusses the limitations and potential downsides of this (see also), and provides a bounty of caveats, including:

Don’t take these numbers to be completely objective. [...] And don’t take the estimates to be precise. Their purpose is to show the right order of magnitude, rather than a more precise probability.

Another issue he doesn’t mention explicitly is that people could anchor too strongly on his estimates.

But on balance, I think it’s great that he provides this table, as it could help people to:

- more easily spot where they do vs don’t agree with Ord

- get at least a very approximate sense of what ballpark the odds might be in

In this post, I will:

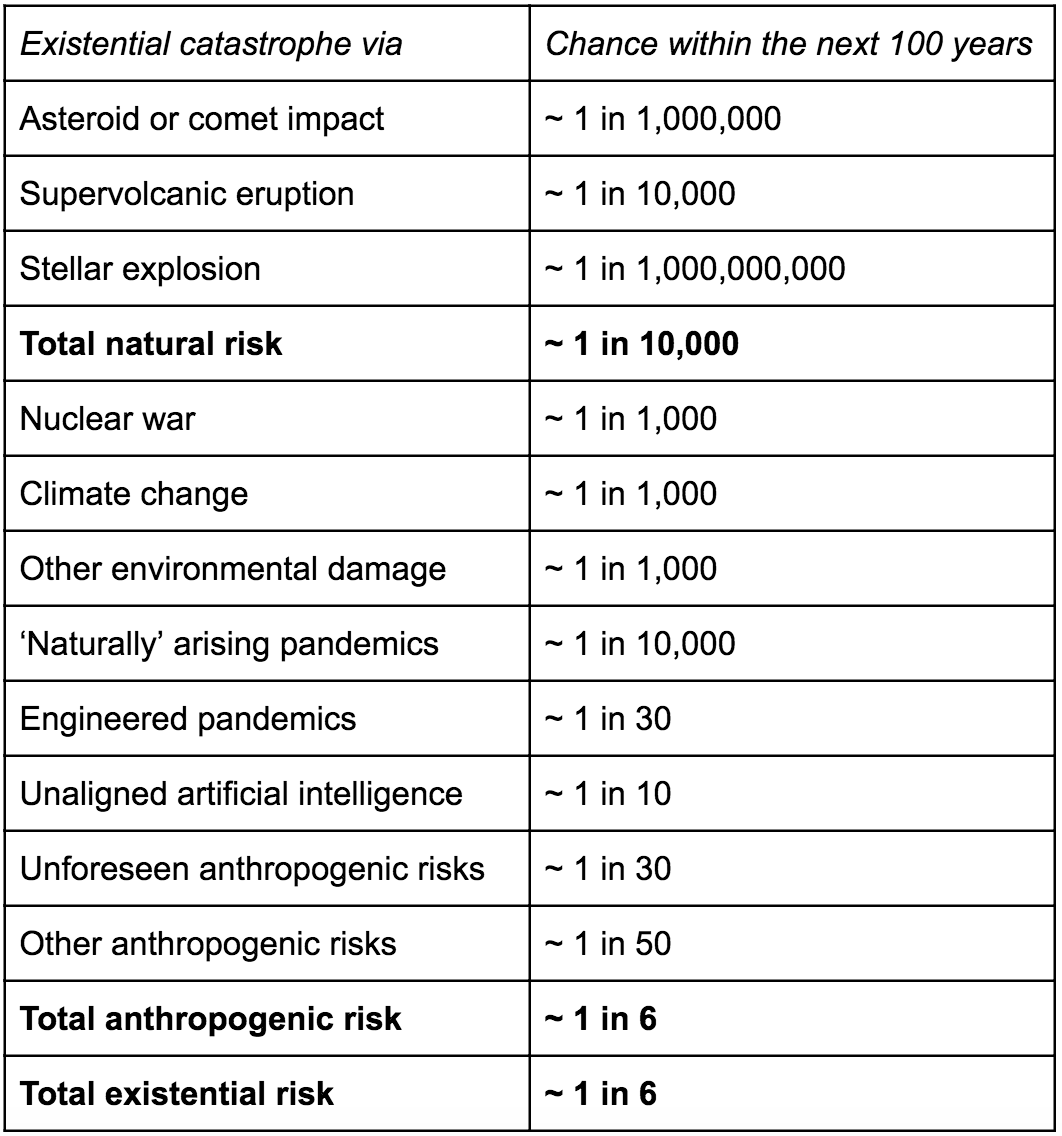

- Present a reproduction of Table 6.1

- Discuss whether Ord may understate the uncertainty of these estimates

- Discuss an ambiguity about what he’s actually estimating when he estimates the risk from “unaligned AI”

- Discuss three estimates I found surprisingly high (at least relative to the other estimates)

- Discuss some adjustments his estimates might suggest EAs/longtermists should make to their career and donation decisions

Regarding points 2 and 4: In reality, merely knowing that these are Ord’s views about the levels of uncertainty and risk leads me to update my views quite significantly towards his, as he’s clearly very intelligent and has thought about this for much longer than I have. But I think it’s valuable for people to also share their “independent impressions” - what they’d believe without updating on other people’s views. And this may be especially valuable in relation to Ord’s risk estimates, given that, as far as I know, we have no other single source of estimates anywhere near this comprehensive.[2]

I’ll hardly discuss any of the evidence or rationale Ord gives for his estimates; for all that and much more, definitely read the book!

The table

Here’s a reproduction of Table 6.1:[3]

Understating uncertainty?

In the caption for the table, Ord writes:

There is significant uncertainty remaining in these estimates and they should be treated as representing the right order of magnitude - each could easily be a factor of 3 higher or lower.

Lighthearted initial reaction: Only a factor of 3?! That sounds remarkably un-uncertain to me, for this topic. Perhaps he means the estimates could easily be a factor of 3 higher or lower, but the estimates could also be ~10-50 times higher or lower if they really put their backs into it?

More seriously: This at least feels to me surprisingly “certain”/“precise”, as does his above-quoted statement that the estimates’ “purpose is to show the right order of magnitude”. On the other hand, I’m used to reasoning as someone who hasn’t been thinking about this for a decade and hasn’t written a book about it - perhaps if I had done those things, then it’d make sense for me to occasionally at least know how many 0s should be on the ends of my numbers. But my current feeling is that, when it comes to existential risk estimates, uncertainties even about orders of magnitude may remain appropriate even after all that research and thought.

Of course, the picture will differ for different risks. In particular:

- For some risks (e.g., asteroid impacts), we have a lot of at least somewhat relevant actual evidence and fairly well-established models.
  - But even there, our evidence and models are still substantially imperfect for existential risk estimates.

- And for some risks, the estimated risk is already high enough that it’d be impossible for the “real risk” to be two orders of magnitude higher.
  - But then the real risk could still be orders of magnitude lower.
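To make the “factor of 3” and “two orders of magnitude” points concrete, here is a rough sketch. It uses only the Table 6.1 figures quoted elsewhere in this post, computes the factor-of-3 band Ord’s caption describes, and checks which estimates could even in principle be 100 times higher (a probability can’t exceed 1). The names are my own, for illustration only.

```python
# Per-century probabilities of existential catastrophe, using only the
# Table 6.1 figures that this post itself quotes.
ESTIMATES = {
    "unaligned AI": 1 / 10,
    "unforeseen anthropogenic risks": 1 / 30,
    "other anthropogenic risks": 1 / 50,
    "nuclear war": 1 / 1_000,
    "climate change": 1 / 1_000,
    "other environmental damage": 1 / 1_000,
    "'naturally' arising pandemics": 1 / 10_000,
}

FACTOR = 3  # "each could easily be a factor of 3 higher or lower"


def factor_band(p: float, factor: float = FACTOR) -> tuple[float, float]:
    """Range from p/factor to p*factor, with the upper end capped at 1."""
    return p / factor, min(p * factor, 1.0)


def can_be_100x_higher(p: float) -> bool:
    """Two orders of magnitude higher is only possible if 100 * p <= 1."""
    return 100 * p <= 1.0


for name, p in ESTIMATES.items():
    low, high = factor_band(p)
    print(f"{name:32} {p:.4%}  band {low:.4%} to {high:.4%}  "
          f"100x higher possible: {can_be_100x_higher(p)}")

# Going a factor of 3 down and a factor of 3 up spans 3 * 3 = 9x in total,
# i.e. just under one order of magnitude.
print(f"width of the whole band: {FACTOR ** 2}x")
```

So the unaligned AI, “unforeseen”, and “other” anthropogenic estimates are the ones that can’t be two orders of magnitude too low, though nothing stops any of them being two orders of magnitude too high.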

I’d be interested in other people’s thoughts on whether Ord indeed seems to be implying more precision than is warranted here.

What types of catastrophe are included in the “Unaligned AI” estimate?

Ord estimates a ~1 in 10 chance that “unaligned artificial intelligence” will cause existential catastrophe in the next 100 years. But I don’t believe he explicitly states precisely what he means by “Unaligned AI” or “alignment”. And he doesn’t include any other AI-related estimates there. So I’m not sure which combination of the following issues he’s estimating the risk from:[4]

- AI systems that aren’t even trying to act in accordance with the instructions or values of their operator(s) (as per Christiano’s definition).
  - E.g., the sorts of scenarios Bostrom’s Superintelligence focuses on, where an AI actively strategises to seize power and optimise for its own reward function.

- AI systems which are trying to act in accordance with the instructions or values of their operator(s), but which make catastrophic mistakes in the process.
  - I think that, on some definitions, this would be an “AI safety” rather than “AI alignment” problem?

- AI systems that successfully act in accordance with the instructions or values of their operator(s), but not of all of humanity.
  - E.g., the AI systems are “aligned” with a malicious or power-hungry actor, causing catastrophe. I think that on some definitions this would be a “misuse” rather than “misalignment” issue.

- AI systems that successfully act in accordance with something like the values humanity believes we have, but not what we truly value, or would value after reflection, or should value (in some moral realist sense).
  - I think this is related to what Will MacAskill has called “the actual alignment problem”.

- “Non-agentic” AI systems which create “structural risks” as a byproduct of their intended function, such as by destabilising nuclear strategies.

In Ord’s section on “Unaligned artificial intelligence”, he focuses mostly on the sort of scenario Bostrom’s Superintelligence focused on (issue #1 in the above list). However, within that discussion, he also writes that we can’t be sure the “builders of the system are striving to align it with human values”, as they may instead be trying “to achieve other goals, such as winning wars or maximising profits.” And later in the chapter, he writes:

I’ve focused on the scenario of an AI system seizing control of the future, because I find it the most plausible existential risk from AI. But there are other threats too, with disagreement among experts about which one poses the greatest existential risk. For example, there is a risk of a slow slide into an AI-controlled future, where an ever-increasing share of power is handed over to AI systems and an increasing amount of our future is optimised towards inhuman values. And there are the risks arising from deliberate misuse of extremely powerful AI systems.

I’m not sure whether those quotes suggest Ord is including some or all of issues #2-5 in his definition or estimate of risks from “unaligned AI”, or if he’s just mentioning “other threats” as also worth noting but not part of what he means by “unaligned AI”.

I’m thus not sure whether Ord thinks the existential risk:

(A) from all of the above-mentioned issues is ~1 in 10.

(B) from some subset of those issues is ~1 in 10, while the risk from the other issues is negligible.

- But how low would be negligible anyway? Recall that Table 6.1 includes a risk for which Ord gives odds of only 1 in a billion; I imagine he’d see the other AI issues as more risky than that.

(C) from some subset of those issues is ~1 in 10, while the risk from others of those issues is non-negligible but (for some other reason) not directly estimated.

Personally, and tentatively, it seems to me that at least the first four of the above issues may contribute substantially to existential risk, with no single one of the issues seeming more important than the other three combined. (I’m less sure about the importance of structural risks from AI.) Thus, if Ord meant B or especially C, I may have reason to be even more concerned than the ~1 in 10 estimate suggests.
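Here’s a rough sketch of why B or C would matter. It treats the issues as independent sources of risk (itself a simplification) and combines them as 1 minus the product of the chances of avoiding each. The 3% figures for issues #2-#4 are invented purely for illustration; they are not Ord’s numbers or mine.

```python
from math import prod


def combined_risk(risks: list[float]) -> float:
    """P(at least one catastrophe) from several sources, treating them as
    independent: 1 minus the product of the survival probabilities."""
    return 1 - prod(1 - p for p in risks)


# Hypothetical split, for illustration only: issue #1 at Ord's ~1 in 10,
# plus issues #2-#4 at an invented 3% each.
issue_1_only = [0.10]
issues_1_to_4 = [0.10, 0.03, 0.03, 0.03]

print(f"{combined_risk(issue_1_only):.2f}")   # prints 0.10
print(f"{combined_risk(issues_1_to_4):.2f}")  # prints 0.18
```

If the ~1 in 10 covered only issue #1, even modest risks from the other issues would push total AI-related risk well above 1 in 10.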

I’d be interested to hear other people’s thoughts either on what Ord meant by that estimate, or on their own views about the relative importance of each of those issues.

“Other environmental damage”: Surprisingly risky?

Ord estimates a ~1 in 1000 chance that “other environmental damage” will cause existential catastrophe in the next 100 years. This includes things like overpopulation, running out of critical resources, or biodiversity loss, but not climate change.

I was surprised that that estimate was:

- that high

- as high as his estimate of the existential risk from each of nuclear war and climate change

- 10 times higher than his estimate for the risk of existential catastrophe from “‘naturally’ arising pandemics”

I very tentatively suspect that this estimate of the risk from other environmental damage is too high. I also suspect that, whatever the “real risk” from this source is, it’s lower than that from nuclear war or climate change. E.g., if ~1 in 1000 turns out to indeed be the “real risk” from other environmental damage, then I tentatively suspect the “real risk” from those other two sources is greater than ~1 in 1000. That said, I don’t really have an argument for those suspicions, and I’ve spent especially little time thinking about existential risk from other environmental damage.

I also intuitively feel like the risk from ‘naturally’ arising pandemics is larger than that from other environmental damage, or at least not 10 times lower. (And I don’t think that’s just due to COVID-19; I think I would’ve said the same thing months ago.)

On the other hand, Ord gives a strong argument that the per-century extinction risks from “natural” causes must be very low, based in part on our long history of surviving such risks. So I mostly dismiss my intuitive feeling here as quite unfounded; I suspect my intuitions can’t really distinguish “Natural pandemics are a big deal!” from “Natural pandemics could lead to extinction, unrecoverable collapse, or unrecoverable dystopia!”

On the third hand, Ord notes that that argument doesn’t apply neatly to ‘naturally’ arising pandemics. This is because changes in society and technology have substantially changed the ability for pandemics to arise and spread (e.g., there’s now frequent air travel, although also far better medical science). In fact, Ord doesn’t even classify ‘naturally’ arising pandemics as a natural risk, and he places them in the “Future risks” chapter. Additionally, as Ord also notes, that argument applies most neatly to risks of extinction, not to risks of “unrecoverable collapse” or “unrecoverable dystopia”.

So I do endorse some small portion of my feeling that the risk from ‘naturally’ arising pandemics is probably more than a 10th as big as the risk from other environmental damage.

“Unforeseen” and “other” anthropogenic risks: Surprisingly risky?

By “other anthropogenic risks”, Ord means risks from

- dystopian scenarios

- nanotechnology

- “back contamination” from microbes from planets we explore

- aliens

- “our most radical scientific experiments”

Ord estimates the chances that “other anthropogenic risks” or “unforeseen anthropogenic risks” will cause existential catastrophe in the next 100 years are ~1 in 50 and ~1 in 30, respectively. Thus, he views these categories of risks as, respectively, ~20 and ~33 times as existentially risky (over this period) as each of nuclear war and climate change. He also views them as in the same ballpark as engineered pandemics. And as there are only 5 risks in the “other” category, this means he must see at least some of them (perhaps dystopian scenarios and nanotechnology?) as posing much higher existential risks than do nuclear war or climate change.
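The arithmetic behind that paragraph, sketched out. The last step uses the union bound (the chance that any of the 5 happens is at most the sum of their individual chances), which holds whether or not the risks are independent. It only gives a floor: the largest “other” risk must be at least ~1 in 250, i.e. at least ~4 times Ord’s nuclear war estimate.

```python
nuclear_war = 1 / 1_000   # the post gives the same figure for climate change
other_anthropogenic = 1 / 50
unforeseen_anthropogenic = 1 / 30
n_other_risks = 5  # dystopia, nanotech, back contamination, aliens, radical experiments

print(round(other_anthropogenic / nuclear_war, 1))       # prints 20.0
print(round(unforeseen_anthropogenic / nuclear_war, 1))  # prints 33.3

# Union bound: P(any of the 5) <= sum of the 5 individual risks, with or
# without independence. So the sum is at least 1/50, and the largest single
# risk is at least the average, (1/50) / 5 = 1/250.
min_largest_other = other_anthropogenic / n_other_risks
print(round(min_largest_other / nuclear_war, 1))  # prints 4.0
```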

I was surprised by how high his estimates for risks from the “other” and “unforeseen” anthropogenic risks were, relative to his other estimates. But I hadn’t previously thought about these issues very much, so I wasn’t necessarily surprised by the estimates themselves, and I don’t feel myself inclined towards higher or lower estimates. I think my strongest opinion about these sources of risk is that dystopian scenarios probably deserve more attention than the longtermist community typically seems to give them, and on that point it appears Ord may agree.

Should this update our career and donation decisions?

One (obviously imperfect) metric of the current priorities of longtermists is the problems 80,000 Hours recommends people work on. Their seven recommended problems are:

- Positively shaping the development of artificial intelligence

- Reducing global catastrophic biological risks

- Nuclear security

- Climate change (extreme risks)

- Global priorities research

- Building effective altruism

- Improving institutional decision-making

The last three of those recommendations seem to me like they’d be among the best ways of addressing “other” and “unforeseen” anthropogenic risks. This is partly because those three activities seem like they’d broadly improve our ability to identify, handle, and/or “rule out” a wide range of potential risks. (Another top contender for achieving such goals would seem to be “existential risk strategy”, which overlaps substantially with global priorities research and with building EA, but is more directly focused on this particular cause area.)

But as noted above, if Ord’s estimates are in the right ballpark, then:

- “other” and “unforeseen” anthropogenic risks are each (as categories) substantially existentially riskier than each of nuclear war, climate change, or ‘naturally’ arising pandemics

- at least some individual “other” risks must also be substantially higher than those three things

- “other environmental damage” is similarly existentially risky as nuclear war and climate change, and 10 times more so than ‘naturally’ arising pandemics

So, if Ord’s estimates are in the right ballpark, then:

- Perhaps 80,000 Hours should write problem profiles on one or more of those specific “other” risks? And perhaps also about “other environmental damage”?

- Perhaps 80,000 Hours should more heavily emphasise the three “broad” approaches they recommend (global priorities research, building EA, and improving institutional decision-making), especially relative to work on nuclear security and climate change?

- Perhaps 80,000 Hours should write an additional “broad” problem profile on existential risk strategy specifically?

- Perhaps individual EAs should shift their career and donation priorities somewhat towards:
  - those broad approaches?
  - specific “other anthropogenic risks” (e.g., dystopian scenarios)?
  - “other environmental damage”?

Of course, Ord’s estimates relate mainly to scale/impact, and not to tractability, neglectedness, or number of job or donation opportunities currently available. So even if we decided to fully believe his estimates, their implications for career and donation decisions may not be immediately obvious. But it seems like the above questions would be worth considering, at least.

From memory, I don’t think Ord explicitly addresses these sorts of questions, perhaps because he was writing partly for a broad audience who would neither know nor care about the current priorities of EAs. Somewhat relevantly, though, his “recommendations for policy and research” (Appendix F) include items specifically related to nuclear war, climate change, environmental damage, and “broad” approaches (e.g., horizon-scanning for risks), but none specifically related to any of the “other anthropogenic risks”.

As stated above, I thought this book was excellent, and I’d highly recommend it. I’d also be excited to see more people commenting on Ord’s estimates (either here or in separate posts), and/or providing their own estimates. I do see potential downsides in making or publicising such estimates. But overall, it seems to me probably not ideal that longtermists have made so many strategic decisions so far without first collecting and critiquing a wide array of such estimates.

This is one of a series of posts I plan to write that summarise, comment on, or take inspiration from parts of The Precipice. You can find a list of all such posts here.

This post is related to my work with Convergence Analysis, but the views I expressed in it are my own. I’m grateful to David Kristoffersson for helpful comments on an earlier draft.

Ord defines an existential catastrophe as “the destruction of humanity’s longterm potential”. Such catastrophes could take the form of extinction, “unrecoverable collapse”, or “unrecoverable dystopia”. He defines an existential risk as “a risk that threatens the destruction of humanity’s longterm potential”; i.e., a risk of such a catastrophe occurring. ↩︎

The closest other thing I’m aware of was a survey from 12 years ago, which lacks estimates for several of the risks Ord gives estimates for. ↩︎

I hope including this is ok copyright-wise; all the cool codexes were doing it. ↩︎

This is my own quick attempt to taxonomise different types of “AI catastrophe”. I hope to write more about varying conceptualisations of AI alignment in future. See also The Main Sources of AI Risk? ↩︎

Note: I haven't read the book. Also, based on your other writing, MichaelA, I suspect much of what I write here won't be helpful to you, but it might be for other readers less familiar with Bayesian reasoning or order of magnitude calculations.

On uncertainty about Bayesian estimates of probabilities (credences), I think the following statement could be rewritten in a way that's a bit clearer about the nature of these estimates:

But these are Ord's beliefs, so when he says they could be a factor of 3 higher or lower, I think he means that he thinks there's a good chance new information could convince him they're that much higher or lower. And since he says they "should be treated as representing the right order of magnitude", he doesn't think he could be convinced that they should be more than 3x higher or lower.

I don't think it's meaningful to say that a belief "X will happen with probability p" is accurate or not. We could test a set of beliefs and probabilities for calibration, but there are too few events here (many of which are extremely unlikely according to his views and are too far in the future) to test his calibration on them. So it's basically meaningless to say whether or not he's accurate about these. We could test his calibration on a different set of events and hope his calibration generalizes to these ones. We could test on multiple sets of events and see how his calibration changes between them to get an idea of the generalization error before we try to generalize.

On the claim, it seems like for many of his estimates, he rounded to the nearest power of 10 on a logarithmic scale. The halfway point between 10^k and 10^(k+1) on a log scale is 10^(k+1/2) ≈ 3.16 × 10^k, so the rounded figure can only be off from his unrounded estimate by a factor of at most √10 ≈ 3.16 ≈ 3. If his unrounded estimate were off by more than a factor of 3, he would have had to round to a different power of 10. So the claim that the estimates represent the right orders of magnitude is equivalent to them being correct to within a factor of about 3. (Likewise, his thinking that new information is unlikely to change his mind about the order of magnitude is equivalent to his believing that new information is unlikely to change these estimates by more than a factor of 3.)
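A quick numerical check of that rounding argument, assuming (as the comment above guesses; Ord doesn't say this) that each estimate was rounded to the nearest power of 10 in log space. It scans one decade of values and finds the largest factor between a value and its rounded version.

```python
import math


def round_to_power_of_10(p: float) -> float:
    """Round p to the nearest power of 10 on a logarithmic scale."""
    return 10 ** round(math.log10(p))


def rounding_factor(p: float) -> float:
    """How many times larger or smaller the rounded value is than p (>= 1)."""
    r = round_to_power_of_10(p)
    return max(r / p, p / r)


# Scan one decade, 10**-3 up to (just under) 10**-2. The worst case sits at
# the log-midpoint 10**-2.5, a factor of sqrt(10) from either neighbour.
worst = max(rounding_factor(10 ** (-3 + i / 1000)) for i in range(1000))
print(round(worst, 2), round(math.sqrt(10), 2))  # prints 3.16 3.16
```

Note this only holds for estimates of the form "1 in 10^k"; figures like 1 in 30 or 1 in 50 were evidently rounded more finely.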

I'd be curious to know if there are others who have worked as hard on estimating any of these probabilities and how close their estimates are to his.

I definitely share this curiosity. In a footnote, I link to this 2008 "informal survey" that's the closest thing I'm aware of (in the sense of being somewhat comprehensive). It's a little hard to compare the estimates, as that survey was for extinction (or sub-extinction events) rather than existential catastrophe more generally, and was for before 2100 rather than before 2120. But it seems to be somewhat more pessimistic than Ord overall, though in roughly the same ballpark for "overall/total risk", AI, and engineered pandemics at least.

I don't off the top of my head know anything comparable in terms of amount of effort, except in the case of individual AI researchers estimating the risks from AI, or specific types of AI catastrophe - nothing broader. Or maybe a couple 80k problem profiles. And I haven't seen these collected anywhere - I think it could be cool if someone did that (and made sure the collection prominently warned against anchoring etc.).

A related and interesting question would be "If we do find past or future estimates based on as much hard work, and find that they're similar to Ord's, what do we make of this observation?" It could be taken as strengthening the case for those estimates being "about right". But it could also be evidence of anchoring or information cascades. We'd want to know how independent the estimates were. (It's worth noting that the 2008 survey was from FHI, where Ord works.)

Update: I'm now creating this sort of a collection of estimates, partly inspired by this comment thread (so thanks, MichaelStJules!). I'm not yet sure if I'll publish them; I think collecting a diversity of views together will reduce rather than exacerbate information cascades and such, but I'm not sure. I'm also not sure when I'd publish, if I do publish.

But I think the answers are "probably" and "within a few weeks".

If anyone happens to know of something like this that already exists, and/or has thoughts on whether publishing something like this would be valuable or detrimental, please let me know :)

Update #2: This turned into a database of existential risk estimates, and a post with some broader discussion of the idea of making, using, and collecting such estimates. And it's now posted.

So thanks for (probably accidentally) prompting this!

Thanks for the comment!

Yes, it does seem worth pointing out that these are Bayesian rather than "frequency"/"physical" probabilities. (Though Ord uses them as somewhat connected to frequency probabilities, as he also discusses how long we should expect humanity to last given various probabilities of x-catastrophe per century.)
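For readers curious about that connection: a rough sketch, assuming a constant and independent risk p in every century (a big simplification, and the p below is a made-up round number, not one of Ord's figures). Under that assumption the number of centuries until the first catastrophe is geometrically distributed, with mean 1/p.

```python
def expected_centuries(p: float) -> float:
    """Mean of a geometric distribution: expected number of centuries up to
    and including the first catastrophe, for a constant per-century risk p."""
    return 1 / p


def survival_probability(p: float, centuries: int) -> float:
    """Chance of getting through `centuries` centuries with no catastrophe,
    assuming the same independent risk p in every century."""
    return (1 - p) ** centuries


p = 0.1  # hypothetical per-century risk, chosen only for round numbers
print(expected_centuries(p))                  # prints 10.0
print(round(survival_probability(p, 10), 2))  # prints 0.35
```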

To be clear, though, that's what I had in mind when suggesting that being uncertain only within a particular order of magnitude was surprising to me. E.g., I agree with the following statement:

...but I was surprised to hear that, if Ord does mean that the way it sounds to me, he thinks he could only be convinced to raise or lower his credence by a factor of ~3.

Though it's possible he instead meant that they could definitely be off by a factor of 3, which wouldn't surprise him at all, but that it's also plausible they could be off by even more.

I think there's something to this, but I'm not sure I totally agree. Or at least it might depend on what you mean by "accurate". I'm not an expert here, but Wikipedia says:

I think a project like Ord's is probably most useful if it's at least striving for objectivist Bayesian probabilities. (I think "the epistemic interpretation" is also relevant.) And if it's doing so, I think the probabilities can be meaningfully critiqued as more or less reasonable or useful.

I agree that this is at least roughly correct, given that he's presenting each credence/probability as "1 in [some power of 10]". I didn't mean to imply that I was questioning two substantively different claims of his; more just to point out that he reiterates a similar point, weakly suggesting he really does mean that this is roughly the range of uncertainty he considers these probabilities to have.

I'm also not an expert here, but I think we'd have to agree about how to interpret knowledge and build the model, and have the same priors to guarantee this kind of agreement. See some discussion here. The link you sent about probability interpretations also links to the reference class problem.

I think we can critique probabilities based on how they were estimated, at least, and I think some probabilities we can be pretty confident in because they come from repeated random-ish trials or we otherwise have reliable precedent to base them on (e.g. good reference classes, and the estimates don't vary too much between the best reference classes). If there's only really one reasonable model, and all of the probabilities are pretty precise in it (based on precedent), then the final probability should be pretty precise, too.
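As a small illustration of the "repeated random-ish trials" case: assuming independent, identically distributed trials (e.g. flips of a possibly biased coin), the usual standard error of an observed frequency shrinks like 1/√n. The flip counts below are hypothetical, and this says nothing about model uncertainty.

```python
import math


def standard_error(successes: int, trials: int) -> float:
    """Standard error of the observed frequency successes/trials, using the
    usual normal approximation sqrt(p_hat * (1 - p_hat) / n)."""
    p_hat = successes / trials
    return math.sqrt(p_hat * (1 - p_hat) / trials)


# Hypothetical biased coin: the same 60% heads rate seen in 10 vs 10,000 flips.
print(round(standard_error(6, 10), 3))          # prints 0.155
print(round(standard_error(6_000, 10_000), 3))  # prints 0.005
```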

Just found a quote from the book which I should've mentioned earlier (perhaps this should've also been a footnote in this post):

And I'm pretty sure there was another quote somewhere about the complexities with this.

As for your comment, I'm not sure if we're just using language slightly differently or actually have different views. But I think we do have different views on this point:

I would say that, even if one model is the most (or only) reasonable one we're aware of, if we're not certain about the model, we should account for model uncertainty (or uncertainty about the argument). So (I think) even if we don't have specific reasons for other precise probabilities, or for decreasing the precision, we should still make our probabilities less precise, because there could be "unknown unknowns", or mistakes in our reasoning process, or whatever.

If we know that our model might be wrong, and we don't account for that when thinking about how certain vs uncertain we are, then we're not using all the evidence and information we have. Thus, we wouldn't be striving for that "evidential" sense of probability as well as we could. And more importantly, it seems likely we'd predictably do worse in making plans and achieving our goals.
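One simple way to formalise this, as a sketch: apply the law of total probability over "our model is right" vs "our model or argument is flawed". All three inputs below are hypothetical. The point is that when the model gives a tiny probability, the "model is flawed" term can dominate the final credence.

```python
def credence_with_model_uncertainty(p_if_model_right: float,
                                    p_model_wrong: float,
                                    p_if_model_wrong: float) -> float:
    """Law of total probability over 'our model is right' vs 'it is flawed'."""
    return ((1 - p_model_wrong) * p_if_model_right
            + p_model_wrong * p_if_model_wrong)


# Hypothetical numbers: the model says 1 in a billion, we give a 1% chance
# that the model or argument is flawed, and 1 in 10,000 if it is.
credence = credence_with_model_uncertainty(1e-9, 0.01, 1e-4)
print(f"{credence:.2e}")  # prints 1.00e-06
```

Here the final credence is about 1000 times the model's own answer, purely because of the chance the model is wrong.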

Interestingly, Ord is among the main people I've seen making the sort of argument I make in the prior paragraph, both in this book and in two prior papers (one of which I've only read the abstract of). This increased my degree of surprise at him appearing to suggest he was fairly confident these estimates were of the right order of magnitude.

I agree that we should consider model uncertainty, including the possibility of unknown unknowns.

I think it's rare in practice that you can show only one model is reasonable, because the world is so complex. That's mostly limited to really well-defined problems with known parts and finitely many known unknowns, like certain games or (biased) coin flipping.

Some other context I perhaps should've given is that Ord writes that his estimates already:

This kind of complexity tells me that we should more often talk about risk percentages in terms of the different scenarios they're associated with: e.g., the current trajectory Ord is using, as well as possibly better trajectories (if society acts more wisely) and possibly worse ones (if society makes major mistakes), along with the probabilities under each.

We can't entirely disentangle talk of future risks and possibilities from society's possible choices, since these choices are what shape the future. What we do affects these choices.

(Also, maybe you should edit the original post to include the quote you included here or parts of it.)

It'd be interesting to see how Toby Ord and others would update the likelihood of the different events in light of the past two years. I'm thinking specifically re:

I haven't looked at this in much detail, but Ord's estimates seem too high to me. It seems really hard for humanity to go extinct, considering that there are people in remote villages, people in submarines, people in mid-flight at the time a disaster strikes, and even people on the International Space Station. (And yes, there are women on the ISS, I looked that up.) I just don't see how e.g. a pandemic would plausibly kill all those people.

Also, if engineered pandemics, or "unforeseen" and "other" anthropogenic risks have a chance of 3% each of causing extinction, wouldn't you expect to see smaller versions of these risks (that kill, say, 10% of people, but don't result in extinction) much more frequently? But we don't observe that.

(I haven't read Ord's book so I don't know if he addresses these points.)

What is the significance of the people on the ISS? Are you suggesting that six people could repopulate the human species? And what sort of disaster takes less time than a flight, and only kills people on the ground?

Also, I expect to see small engineered pandemics, but only after effective genetic engineering is widespread. So the fact that we haven't seen any so far is not much evidence.

Yes, that was broadly the response I had in mind as well. Same goes for most of the "unforeseen"/"other" anthropogenic risks; those categories are in the chapter on "Future risks", and are mostly things Ord appears to think either will or may get riskier as certain technologies are developed/advanced.

Sleepy reply to Tobias' "Ord's estimates seem too high to me": An important idea in the book is that "the per-century extinction risks from “natural” causes must be very low, based in part on our long history of surviving such risks" (as I phrase it in this post). The flipside of that is roughly the argument that we haven't got strong evidence of our ability to survive (uncollapsed and sans dystopia) a long period with various technologies that will be developed later, but haven't been yet.

Of course, that doesn't seem sufficient by itself as a reason for a high level of concern, as some version of that could've been said at every point in history when "things were changing". But if you couple that general argument with specific reasons to believe upcoming technologies could be notably risky, you could perhaps reasonably arrive at Ord's estimates. (And there are obviously a lot of specific details and arguments and caveats that I'm omitting here.)

I don't think so. I think a reasonable prior on this sort of thing would have killing 10% of people not much more likely than killing 100% of people, and actually IMO it should be substantially less likely. (Consider a distribution over asteroid impacts. The range of asteroids that kill around 10% of humans is a narrow band of asteroid sizes, whereas any asteroid above a certain size will kill 100%.)
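A rough sketch of the asteroid point, assuming impact sizes follow a Pareto (power-law) distribution. The size thresholds and exponents are hypothetical, in arbitrary units. It compares a narrow "kills ~10%" band of sizes with the open-ended "kills 100%" tail. With these numbers the tail wins for shallower power laws, but not for the steepest one, so the conclusion depends on how fast frequency falls with size.

```python
def pareto_tail(x: float, x_min: float = 1.0, alpha: float = 1.0) -> float:
    """P(X > x) for a Pareto(x_min, alpha) distribution, valid for x >= x_min."""
    return (x_min / x) ** alpha


def band_and_tail(alpha: float) -> tuple[float, float]:
    """Hypothetical sizes in arbitrary units: impacts of size 8-10 'kill ~10%',
    and anything of size 12 or more 'kills 100%'."""
    band = pareto_tail(8, alpha=alpha) - pareto_tail(10, alpha=alpha)
    tail = pareto_tail(12, alpha=alpha)
    return band, tail


for alpha in (1, 2, 3):
    band, tail = band_and_tail(alpha)
    # band > tail is False for alpha = 1 and 2, True for alpha = 3
    print(alpha, f"band={band:.5f}", f"tail={tail:.5f}", "band > tail:", band > tail)
```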

Moreover, if you think 10% is much more likely than 100%, you should think 1% is much more likely than 10%, and so on. But we don't in fact see lots of unknown risks killing even 0.1% of the population. So that means the probability of x-risk from unknown causes according to you must be really really really small. But that's just implausible. You could have made the exact same argument in 1917, in 1944, etc. and you would have been wildly wrong.

Well, historically, there have been quite a few pandemics that killed more than 10% of people, e.g. the Black Death or Plague of Justinian. There's been no pandemic that killed everyone.

Is your point that it's different for anthropogenic risks? Then I guess we could look at wars for historic examples. Indeed, there have been wars that killed something on the order of 10% of people, at least in the warring nations, and IMO that is a good argument to take the risk of a major war quite seriously.

But there have been far more wars that killed fewer people, and none that caused extinction. The literature usually models the number of casualties as a Pareto distribution, which means that the probability density is monotonically decreasing in the number of deaths. (For a broader reference class of atrocities, genocides, civil wars etc., I think the picture is similar.)

Smoking, lack of exercise, and unhealthy diets each kill more than 0.1% of the population each year. Coronavirus may kill 0.1% in some countries. The advent of cars in the 20th century resulted in 60 million road deaths, which is maybe 0.5% of everyone alive over that time (I haven't checked this in detail). That can be seen as an unknown from the perspective of someone in 1900. Granted, some of those are more gradual than the sort of catastrophe people have in mind - but actually I'm not sure why that matters.

Looking at individual nations, I'm sure you can find many examples of civil wars, famines, etc. killing 0.1% of the population of a certain country, but far fewer examples killing 10% (though there are some). I'm not claiming the latter is 100x less likely but it is clearly much less likely.

I don't understand this. What do you think the exact same argument would have been, and why was that wildly wrong?

I think there are some risks which have "common mini-versions," to coin a phrase, and others which don't. Asteroids have mini-versions (10%-killer-versions), and depending on how common they are the 10%-killers might be more likely than the 100%-killers, or vice versa. I actually don't know which is more likely in that case.

AI risk is the sort of thing that doesn't have common mini-versions, I think. An AI with the means and motive to kill 10% of humanity probably also has the means and motive to kill 100%.

Natural pandemics DO have common mini-versions, as you point out.

It's less clear with engineered pandemics. That depends on how easy they are to engineer to kill everyone vs. how easy they are to engineer to kill not-everyone-but-at-least-10%, and it depends on how motivated various potential engineers are.

Accidental physics risks (like igniting the atmosphere, creating a false vacuum collapse or black hole or something with a particle collider) are way more likely to kill 100% of humanity than 10%. They do not have common mini-versions.

So what about unknown risks? Well, we don't know. But from the track record of known risks, it seems that probably there are many diverse unknown risks, and so probably at least a few of them do not have common mini-versions.

And by the argument you just gave, the "unknown" risks that have common mini-versions won't actually be unknown, since we'll see their mini-versions. So "unknown" risks are going to be disproportionately the kind of risk that doesn't have common mini-versions.

...

As for what I meant about making the exact same argument in the past: I was just saying that we've discovered various risks that don't have common mini-versions, which at one point were unknown and then became known. Your argument basically rules out discovering such things ever again. Had we listened to your argument before learning about AI, for example, we would have concluded that AI was impossible, or that somehow AIs which have the means and motive to kill 10% of people are more likely than AIs which pose existential threats.

I think I agree with this general approach to thinking about this.

From what I've seen of AI risk discussions, I think I'd stand by my prior statement, which I'd paraphrase now as: There are a variety of different types of AI catastrophe scenario that have been discussed. Some seem like they might be more likely, or similarly likely, to totally wipe us out than to cause a 5-25% death toll. But some don't. And I haven't seen super strong arguments for considering the former much more likely than the latter. And it seems like the AI safety community as a whole has become more diverse in their thinking on this sort of thing over the last few years.

For engineered pandemics, it still seems to me that literally 100% of people dying from the pathogens themselves seems much less likely than a very high number dying, perhaps even enough to cause existential catastrophe slightly "indirectly". However "well" engineered, pathogens themselves aren't agents which explicitly seek the complete extinction of humanity. (Again, Defence in Depth seems relevant here.) Though this is slightly different from a conversation about the relative likelihood of 10% vs other percentages. (Also, I feel hesitant to discuss this in great detail, for vague information hazards reasons.)

I agree regarding accidental physics risks. But I think the risks from those are far lower than the risks from AI and bio, and probably nanotech, nuclear, etc. (I don't really bring any independent evidence to the table; this is just based on the views I've seen from x-risk researchers.)

I think that'd logically follow from your prior statements. But I'm not strongly convinced about those statements, except regarding accidental physics risks, which seem very unlikely.

I think this is an interesting point. It does tentatively update me towards thinking that, conditional on there indeed being "unknown risks" that are already "in play", they're more likely than I'd otherwise think to jump straight to 100%, without "mini versions".

However, I think the most concerning source of "unknown risks" are new technologies or new actions (risks that aren't yet "in play"). The unknown equivalents of risks from nanotech, space exploration, unprecedented consolidation of governments across the globe, etc. "Drawing a new ball from the urn", in Bostrom's metaphor. So even if such risks do have "common mini-versions", we wouldn't yet have seen them.

Also, regarding the portion of unknown risks that are in play, it seems to be appropriate to respond to the argument "Most risks have common mini-versions, but we haven't seen these for unknown risks (pretty much by definition)" partly by updating towards thinking the unknown risks lack such common mini-versions, but also partly by updating towards thinking unknown risks are unlikely. We aren't forced to fully take the former interpretation.

Tobias' original point was: "Also, if engineered pandemics, or 'unforeseen' and 'other' anthropogenic risks have a chance of 3% each of causing extinction, wouldn't you expect to see smaller versions of these risks (that kill, say, 10% of people, but don't result in extinction) much more frequently? But we don't observe that."

Thus he is saying there aren't any "unknown" risks that do have common mini-versions but just haven't had time to develop yet. That's way too strong a claim, I think. Perhaps in my argument against this claim I ended up making claims that were also too strong. But I think my central point is still right: Tobias' argument rules out things arising in the future that clearly shouldn't be ruled out, because if we had run that argument in the past it would have ruled out various things (e.g. AI, nukes, physics risks, and come to think of it even asteroid strikes and pandemics if we go far enough back in the past) that in fact happened.

1. I interpreted the original claim - "wouldn't you expect" - as being basically one in which observation X was evidence against hypothesis Y. Not conclusive evidence, just an update. I didn't interpret it as "ruling things out" (in a strong way) or saying that there aren't any unknown risks without common mini-versions (just that it's less likely that there are than one would otherwise think). Note that his point seemed to be in defence of "Ord's estimates seem too high to me", rather than "the risks are 0".

2. I do think that Tobias's point, even interpreted that way, was probably too strong, or missing a key detail, in that the key sources of risks are probably emerging or new things, so we wouldn't expect to have observed their mini-versions yet. Though I do tentatively think I'd expect to see mini-versions before the "full thing", once the new things do start arising. (I'm aware this is all pretty hand-wavey phrasing.)

3i. As I went into more in my other comment, I think the general expectation that we'll see very small versions of a risk before, and more often than, small ones, which we'd in turn see before and more often than medium ones, which we'd see before and more often than large ones, etc., probably would've served well in the past. There was progressively more advanced tech before AI, and AI is progressively advancing more. There were progressively more advanced weapons, progressively more destructive wars, progressively larger numbers of nukes, etc. I'd guess the biggest pandemics and asteroid strikes weren't the first, because the biggest are rare.

3ii. AI is the least clear of those examples, because:

But on (a), I do think most relevant researchers would say the risk this month from AI is extremely low; the risks will rise in future as systems become more capable. So there's still time in which we may see mini-versions.

And on (b), I'd consider that a case where a specific argument updates us away from a generally pretty handy prior that we'll see small things earlier and more often than extremely large things. And we also don't yet have super strong reason to believe that those arguments are really painting the right picture, as far as I'm aware.

3iii. I think if we interpreted Tobias's point as something like "We'll never see anything that's unlike the past", then yes, of course that's ridiculous. So as I mentioned elsewhere, I think it partly depends on how we carve up reality, how we define things, etc. E.g., do we put nukes in a totally new bucket, or consider it a part of trends in weaponry/warfare/explosives?

But in any case, my interpretation of Tobias's point, where it's just about it being unlikely to see extreme things before smaller versions, would seem to work with e.g. nukes, even if we put them in their own special category - we'd be surprised by the first nuke, but we'd indeed see there's one nuke before there are thousands, and there are two detonations on cities before there's a full-scale nuclear war (if there ever is one, which hopefully and plausibly there won't be).

In general I think you've thought this through more carefully than me so without having read all your points I'm just gonna agree with you.

So yeah, I think the main problem with Tobias' original point was that unknown risks are probably mostly new things that haven't arisen yet and thus the lack of observed mini-versions of them is no evidence against them. But I still think it's also true that some risks just don't have mini-versions, or rather are as likely or more likely to have big versions than mini-versions. I agree that most risks are not like this, including some of the examples I reached for initially.

Hmm. I'm not sure I'm understanding you correctly. But I'll respond to what I think you're saying.

Firstly, the risk of natural pandemics, which the Spanish Flu was a strong example of, did have "common mini-versions". In fact, Wikipedia says the Black Death was the "most fatal pandemic recorded in human history". So I really don't think we'd have ruled out the Spanish Flu happening by using the sort of argument I'm discussing (which I'm not sure I'd call "my argument") - we'd have seen it as unlikely in any given year, and that would've been correct. But I imagine we'd have given it approximately the correct ex ante probability.

Secondly, even nuclear weapons, which I assume is what your reference to 1944 is about, seem like they could fit neatly in this sort of argument. It's a new weapon, but weapons and wars existed for a long time. And the first nukes really couldn't have killed everyone. And then we gradually had more nukes, more test explosions, more Cold War events, as we got closer and closer to it being possible for 100% of people to die from it. And we haven't had 100% die. So it again seems like we wouldn't have ruled out what ended up happening.

Likewise, AI can arguably be seen as a continuation of past technological, intellectual, scientific, etc. progress in various ways. Of course, various trends might change in shape, speed up, etc. But so far they do seem to have mostly done so somewhat gradually, such that none of the developments would've been "ruled out" by expecting the future to look roughly similar to the past or the past+extrapolation. (I'm not an expert on this, but I think this is roughly the conclusion AI Impacts is arriving at based on their research.)

Perhaps a key point is that we indeed shouldn't say "The future will be exactly like the past." But instead "The future seems likely to typically be fairly well modelled as a rough extrapolation of some macro trends. But there'll be black swans sometimes. And we can't totally rule out totally surprising things, especially if we do very new things."

This is essentially me trying to lay out a certain way of looking at things. It's not necessarily the one I strongly adopt. (I actually hadn't thought about this viewpoint much before, so I've found trying to lay it out/defend it here interesting.)

In fact, as I said, I (at least sort-of) disagreed with Tobias' original comment, and I'm very concerned about existential risks. And I think a key point is that new technologies and actions can change the distributions we're drawing from, in ways that we don't understand. I'm just saying it still seems quite plausible to me (and probably likely, though not guaranteed) that we'd see a 5-25%-style catastrophe from a particular type of risk before a 100% catastrophe from it. And I think history seems consistent with that, and that that idea probably would've done fairly well in the past.

(Also, as I noted in my other comment, I haven't yet seen very strong evidence or arguments against the idea that "somehow AIs which have the means and motive to kill 10% of people are more likely than AIs which pose existential threats" - or more specifically, that AI might result in that, whether or not it has the "motive" to do that. It seems to me the jury is still out. So I don't think I'd use the fact an argument reaches that conclusion as a point against that argument.)

I agree with all this and don't think it significantly undermines anything I said.

I think the community has indeed developed more diverse views over the years, but I still think the original take (as seen in Bostrom's Superintelligence) is the closest to the truth. The fact that the community has gotten more diverse can be easily explained as the result of it growing a lot bigger and having a lot more time to think. (Having a lot more time to think means more scenarios can be considered, more distinctions made, etc. More time for disagreements to arise and more time for those disagreements to seem like big deals when really they are fairly minor; the important things are mostly agreed on but not discussed anymore.) Or maybe you are right and this is evidence that Bostrom is wrong. Idk. But currently I think it is weak evidence, given the above.

Yeah in retrospect I really shouldn't have picked nukes and natural pandemics as my two examples. Natural pandemics do have common mini-versions, and nukes, well, the jury is still out on that one. (I think it could go either way. I think that nukes maybe can kill everyone, because the people who survive the initial blasts might die from various other causes, e.g. civilizational collapse or nuclear winter. But insofar as we think that isn't plausible, then yeah killing 10% is way more likely than killing 100%. (I'm assuming we count killing 99% as killing 10% here?))

I think AI, climate change tail risks, physics risks, grey goo, etc. would be better examples for me to talk about.

With nukes, I do share the view that they could plausibly kill everyone. If there's a nuclear war, followed by nuclear winter, and everyone dies during that winter, rather than most people dying and then the rest succumbing 10 years later from something else or never recovering, I'd consider that nuclear war causing 100% deaths.

My point was instead that that really couldn't have happened in 1945. So there was one nuke, and a couple explosions, and gradually more nukes and test explosions, etc., before there was a present risk of 100% of people dying from this source. So we did see something like "mini-versions" - Hiroshima and Nagasaki, test explosions, Cuban Missile Crisis - before we saw 100% (which indeed, we still haven't and hopefully won't).

With climate change, we're already seeing mini-versions. I do think it's plausible that there could be a relatively sudden jump due to amplifying feedback loops. But "relatively sudden" might mean over months or years or something like that. And it wouldn't be a total bolt from the blue in any case - the damage is already accruing and increasing, and likely would do in the lead up to such tail risks.

AI, physics risks, and nanotech are all plausible cases where there'd be a sudden jump. And I'm very concerned about AI and somewhat about nanotech. But note that we don't actually have clear evidence that those things could cause such sudden jumps. I obviously don't think we should wait for such evidence, because if it came we'd be dead. But it just seems worth remembering that before using "Hypothesis X predicts no sudden jump in destruction from Y" as an argument against hypothesis X.

Also, as I mentioned in my other comment, I'm now thinking maybe the best way to look at that is specific arguments in the case of AI, physics risks, and nanotech updating us away from the generally useful prior that we'll see small things before extreme versions of the same things.

Minor: some recent papers argue the death toll from the Plague of Justinian has been exaggerated.

https://www.pnas.org/content/116/51/25546?fbclid=IwAR1bN1LgbMI-CVUNxGsm3QxCEhGMVMB50IkEVoKpEIfSySEmxY6Ug5IhRTE

https://academic.oup.com/past/article/244/1/3/5532056

(Two authors appear on both papers.)

I think, for basically Richard Ngo's reasons, I weakly disagree with a strong version of Tobias's original claim that:

(Whether/how much I disagree depends in part on what "much more frequently" is meant to imply.)

I also might agree with:

(Again, depends in part on what "much more likely" would mean.)

But I was very surprised to read:

And I mostly agree with Tobias's response to that.

The point that there's a narrower band of asteroid sizes that would cause ~10% of the population's death than of asteroid sizes that would cause 100% makes sense. But I believe there are also many more asteroids in that narrow band. E.g., Ord writes:

And for pandemics it seems clearly far more likely to have one that kills a massive number of people than one that kills everyone. Indeed, my impression is that most x-risk researchers think it'd be notably more likely for a pandemic to cause existential catastrophe through something like causing collapse or reduction in population to below minimum viable population levels, rather than by "directly" killing everyone. (I'm not certain of that, though.)

I'd guess, without much basis, that:

I guess I'd have to see the details of what the modelling would've looked like, but it seems plausible to me that a model in which 10% events are much less likely than 1% events, which are in turn much less likely than 0.1% events, would've led to good predictions. And cherry-picking two years where that would've failed doesn't mean the predictions would've been foolish ex ante.

E.g., if the model said something as bad as the Spanish Flu would happen every two centuries, then predicting a 0.5% (or whatever) chance of it in 1917 would've made sense. And if you looked at that prediction alongside the surrounding 199 predictions, the predictor might indeed seem well-calibrated. (These numbers are made up for the example only.)
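The arithmetic behind this made-up example can be sketched as follows. The sketch adds a simplifying assumption that is not in the comment: Spanish-Flu-scale events arrive as a Poisson process with a constant rate of one per 200 years. It also assumes, hypothetically, that exactly one such event falls in the 200-year window being scored.

```python
import math

# Assumed (hypothetical) rate: one Spanish-Flu-scale event per 200 years,
# matching the made-up figure in the comment above.
RATE_PER_YEAR = 1 / 200


def annual_probability(rate: float) -> float:
    """P(at least one event in a year) under a Poisson process with this yearly rate."""
    return 1 - math.exp(-rate)


def expected_events(rate: float, years: int) -> float:
    """Expected number of events over the given number of years."""
    return rate * years


p = annual_probability(RATE_PER_YEAR)  # slightly under 0.5%

# Crude calibration check: 200 identical yearly forecasts, scored against a
# hypothetical window in which exactly one event occurred.
forecasts = [p] * 200
outcomes = [0] * 199 + [1]
mean_forecast = sum(forecasts) / len(forecasts)
observed_rate = sum(outcomes) / len(outcomes)

print(f"Annual probability: {p:.3%}")
print(f"Expected events in 200 years: {expected_events(RATE_PER_YEAR, 200):.2f}")
print(f"Mean forecast {mean_forecast:.4f} vs observed frequency {observed_rate:.4f}")
```

The forecaster who assigned roughly 0.5% in 1917 was "wrong" about that year, but across the whole window their average forecast matches the observed frequency, which is the sense in which they could still be well-calibrated.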

(Also, the Defence in Depth paper seems somewhat relevant to this matter, and is great in any case.)

Yeah I take back what I said about it being substantially less likely, that seems wrong.

I feel the need to clarify, by the way, that I was being a bit overly aggressive in my tone here and I apologize for that. I think I was writing quickly and didn't realize how I came across. I think you are making good points and have been upvoting them even as I disagree with them.

Just FYI: I don't think I found your tone overly aggressive in either of your comments. Perhaps a slight vibe of strawmanning or accidental misinterpretation (which are hard to tease apart), but nothing major, and no sense of aggression :)

Something I've just realised I forgot to mention in reply: These are estimates of existential risk, not extinction risk. I do get the impression that Ord thinks extinction is the most likely form of existential catastrophe, but the fact that these estimates aren't just about extinction might help explain at least part of why they're higher than you see as plausible.

In particular, your response based on it seeming very unlikely an event could kill even people in remote villages etc. is less important if we're talking about existential risk than extinction risk. That's because if everyone except the people in the places you mentioned were killed, it seems very plausible we'd have something like an unrecoverable collapse, or the population lingering at low levels for a short while before some "minor" catastrophe finishes them off.

This relates to Richard's question "Are you suggesting that six people could repopulate the human species?"

I'm afraid Toby underestimates the risks from climate change in the following ways:

All the Best

Johnny West

Some comments from Michelle Hutchinson (80k's Head of Advising) on the latest 80k podcast episode seem worth mentioning here, in relation to the last section of this post: