I show how a standard argument for advancing progress is extremely sensitive to how humanity’s story eventually ends. Whether advancing progress is ultimately good or bad depends crucially on whether it also advances the end of humanity. Because we know so little about the answer to this crucial question, the case for advancing progress is undermined. I suggest we must either overcome this objection through improving our understanding of these connections between progress and human extinction or switch our focus to advancing certain kinds of progress relative to others — changing where we are going, rather than just how soon we get there.[1]

Things are getting better. While there are substantial ups and downs, long-term progress in science, technology, and values has tended to make people’s lives longer, freer, and more prosperous. We could represent this as a graph of quality of life over time, giving a curve that generally trends upwards.

What would happen if we were to advance all kinds of progress by a year? Imagine a burst of faster progress, where after a short period, all forms of progress end up a year ahead of where they would have been. We might think of the future trajectory of quality of life as being primarily driven by science, technology, the economy, population, culture, societal norms, moral norms, and so forth. We’re considering what would happen if we could move all of these features a year ahead of where they would have been.

While the burst of faster progress may be temporary, we should expect its effect of getting a year ahead to endure.[2] If we’d only advanced some domains of progress, we might expect further progress in those areas to be held back by the domains that didn’t advance — but here we’re imagining moving the entire internal clock of civilisation forward a year.

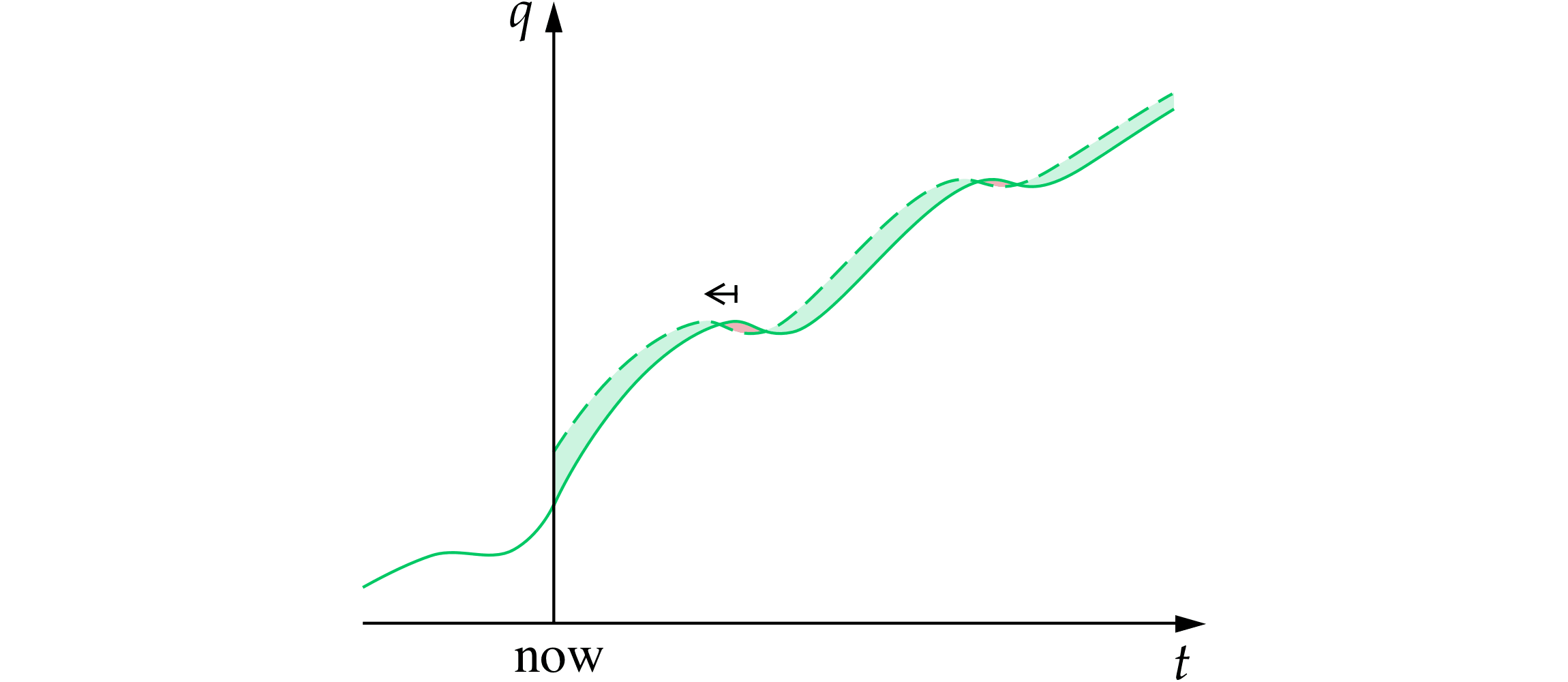

If we were to advance progress in this way, we’d be shifting the curve of quality of life a year to the left. Since the curve is generally increasing, this would mean the new trajectory of our future is generally higher than the old one. So the value of advancing progress isn’t just a matter of impatience — wanting to get to the good bits sooner — but of overall improvement in people’s quality of life across the future.

Figure 1. Sooner is better. The solid green curve is the default trajectory of quality of life over time, while the dashed curve is the trajectory if progress were uniformly advanced by one year (shifting the default curve to the left). Because the trajectories trend upwards, quality of life is generally higher under the advanced curve. To help see this, I’ve shaded the improvements to quality of life green and the worsenings red.

That’s a standard story within economics: progress in science, technology, and values has been making the world a better place, so a burst of faster progress that brought this all forward by a year would provide a lasting benefit for humanity.

But this story is missing a crucial piece.

The trajectory of humanity’s future is not literally infinite. One day it will come to an end. This might be a global civilisation dying of old age, lumbering under the weight of accumulated bureaucracy or decadence. It might be a civilisation running out of resources: either using them up prematurely or enduring until the sun itself burns out. It might be a civilisation that ends in sudden catastrophe — a natural calamity or one of its own making.

If the trajectory must come to an end, what happens to the previous story of an advancement in progress being a permanent uplifting of the quality of life? The answer depends on the nature of the end time. There are two very natural possibilities.

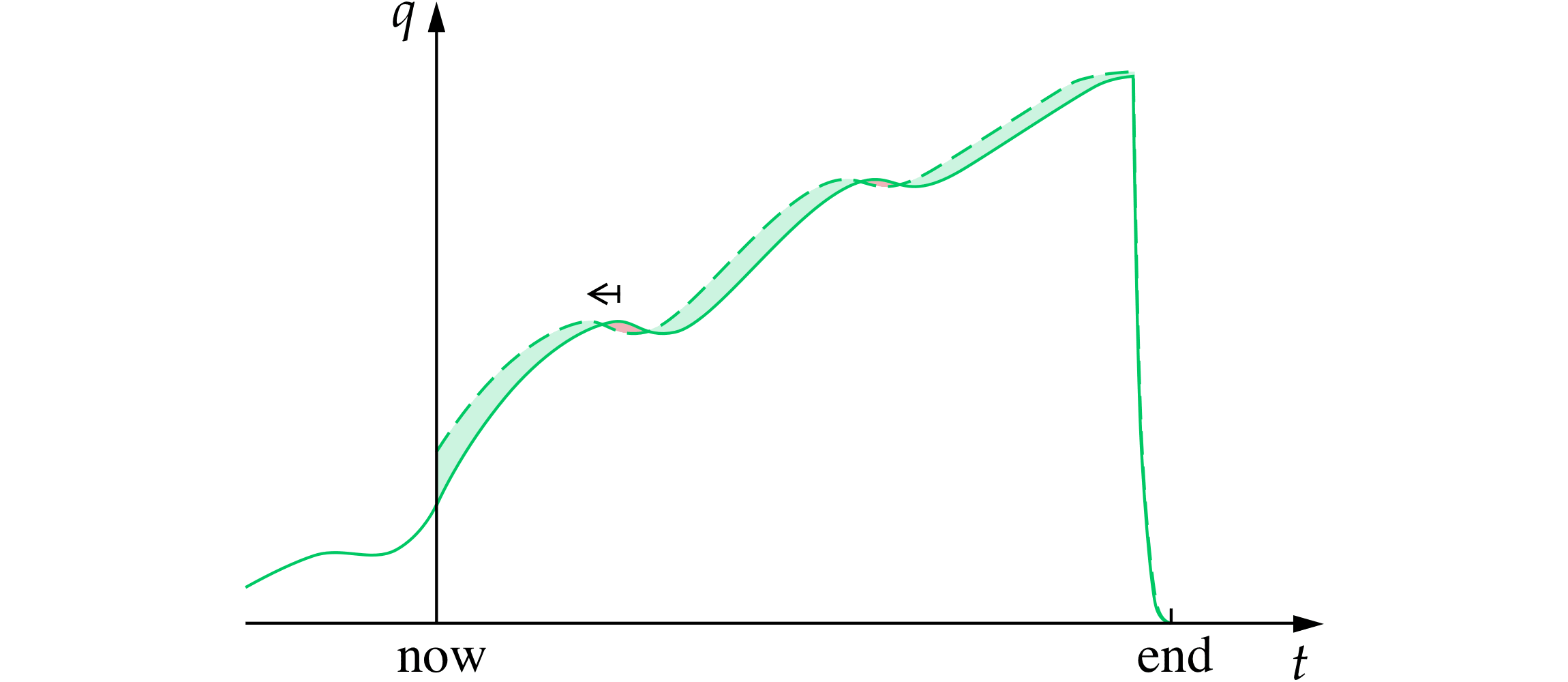

One is that the end time is fixed. It is a particular calendar date when humanity would come to an end, which would be unchanged if we advanced progress. Economists would call this an exogenous end time. On this model, the standard story still works — what you are effectively doing when you advance progress by a year is skipping a year at current quality of life and getting an extra year at the final quality of life, which could be much higher.

Figure 2. Exogenous end time. Advancing progress with an unchanging end time. Note that the diagram is not to scale (our future is hopefully much longer).
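A minimal numerical sketch of the exogenous case follows. It assumes quality of life is sampled once per year and uses a made-up, steadily rising trajectory `q`; the names and numbers are illustrative and are not taken from the figures. With the end date fixed, advancing progress by a year replaces today's year with one extra year at the level the default path would have reached next:

```python
def total_exogenous(q: list[float], end_year: int, advance: int = 0) -> float:
    """Sum quality of life over calendar years 0..end_year-1.

    With an exogenous end the calendar end date stays fixed, so advancing
    progress by `advance` years just reads the curve that far ahead.
    q needs end_year + advance entries.
    """
    return sum(q[t + advance] for t in range(end_year))


# Hypothetical rising trajectory: 1.0 now, +0.5 per year.
T = 10
q = [1.0 + 0.5 * t for t in range(T + 1)]  # q[T] lies one year past the end

default = total_exogenous(q, T)
advanced = total_exogenous(q, T, advance=1)

# We skip a year at today's quality q[0] and gain a year at q[T].
print(advanced - default)  # 5.0
print(q[T] - q[0])         # 5.0
```

Because the end date does not move, the difference is exactly `q[T] - q[0]`, so in this toy model advancing helps whenever the trajectory ends higher than it starts.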

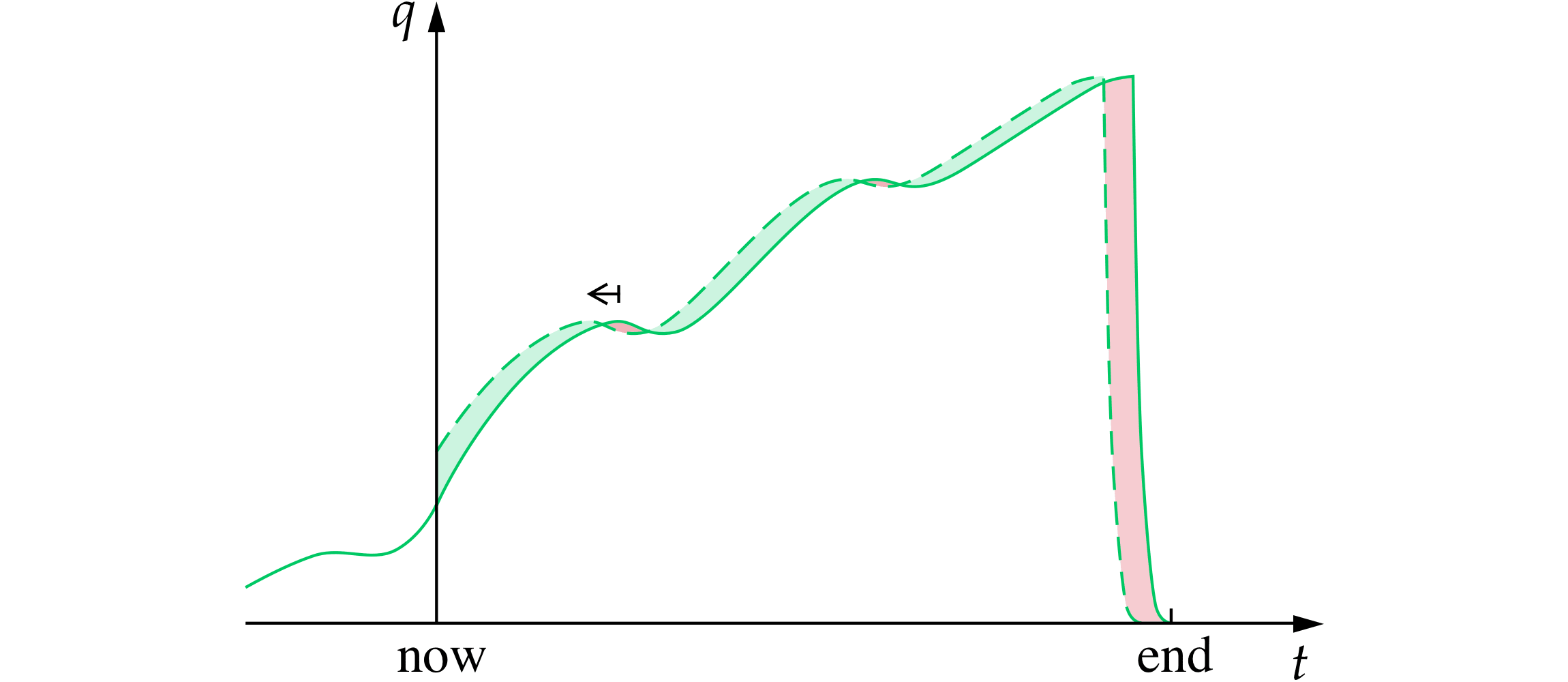

The other natural model is that when advancing progress, the end time is also brought forward by a year (call it an endogenous end time). A simple example would be if there were a powerful technology which, once discovered, inevitably led to human extinction shortly thereafter (perhaps something like nuclear weapons, but more accessible and even more destructive). If so, then advancing progress would bring forward this final invention and our own extinction.

Figure 3. Endogenous end time. Advancing progress also brings forward the end time.

On this model, there is a final year which is much worse under the trajectory where progress is advanced by a year. Whether we assume the curve suddenly drops to zero at the end, or whether it declines more smoothly, there is a time when the upward march of quality of life peaks and then goes down. And bringing the trajectory forward by a year makes the period after the peak worse, rather than better. So we have a good effect of improving the quality of life for many years combined with a bad effect of bringing our demise a year sooner.

It turns out that on this endogenous model, the bad effect always outweighs the good: producing lower total and average quality of life for the period from now until the original end time. This is because the region under the advanced curve is just a shifted version of the same shape as the region under the default curve — except that it is missing the first slice of value that would have occurred in the first year.[3] Here advancing progress involves skipping a year at the current quality of life and never replacing it with anything else. It is like skipping a good early track on an album whose quality steadily improves — you get to the great stuff sooner, but ultimately just hear the same music minus one good song.
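The same toy setup makes the endogenous claim concrete. This sketch assumes hypothetical yearly values `q` on a path that rises, peaks and falls, and an end that moves with progress. The advanced path is then the default path minus its first year, whatever the shape, so the loss is exactly `q[0]`. That is a loss whenever present quality of life is positive:

```python
import random


def total_endogenous(q: list[float], advance: int = 0) -> float:
    """Total quality of life over the default horizon of len(q) years.

    With an endogenous end, advancing by `advance` years reads the curve
    that far ahead and ends that many years sooner; years after the new
    end contribute nothing.
    """
    return sum(q[t + advance] for t in range(len(q) - advance))


# Hypothetical trajectory that rises, peaks, then declines before the end.
q = [1, 2, 4, 7, 9, 10, 9, 6, 3, 1]
loss = total_endogenous(q) - total_endogenous(q, advance=1)
print(loss == q[0])  # True: only the first year's value is lost

# The result does not depend on the shape: check random trajectories.
for _ in range(1000):
    curve = [random.uniform(0, 10) for _ in range(50)]
    diff = total_endogenous(curve) - total_endogenous(curve, advance=1)
    assert abs(diff - curve[0]) < 1e-9
```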

So when we take account of the fact that this trajectory of humanity is not infinite, we find that the value of uniformly advancing progress is extremely sensitive to whether the end of humanity is driven by an external clock or an internal one.

Both possibilities are very plausible. Consider the ways our trajectory might play out that we listed earlier. We can categorise these by whether the end point is exogenous or endogenous.

Exogenous end points:

- enduring until some external end like the sun burning out

- a natural catastrophe like a supervolcanic eruption or asteroid impact

Endogenous end points:

- accumulation of bad features like bureaucracy or decadence

- prematurely running out of resources

- anthropogenic catastrophe such as nuclear war

Whether advancing all kinds of progress by a short period of time is good or bad is thus extremely sensitive to whether humanity’s ultimate end is caused by something on the first list or the second. If we knew that our end was driven by something on the second list, then advancing all progress would be a bad thing.

I am not going to attempt to adjudicate which kind of ending is more likely or whether uniformly advancing progress is good or bad. Importantly — and I want to be clear about this — I am not arguing that advancing progress is a bad thing.

Instead, the point is that whether advancing progress is good or bad depends crucially on an issue that is rarely even mentioned in discussions on the value of progress. Historians of progress have built a detailed case that (on average) progress has greatly improved people’s quality of life. But all this rigorous historical research does not bear on the question of whether it will also eventually bring forward our end. All the historical data could thus be entirely moot if it turns out that advancing progress has also silently shortened our future lifespan.

One upshot of this is that questions about what will ultimately cause human extinction[4] — which are normally seen as extremely esoteric — are in fact central to determining whether uniform advancements of progress are good or bad. These questions would thus need to appear explicitly in economics and social science papers and books about the value of progress. While we cannot hope to know about our future extinction with certainty, there is substantial room for clarifying the key questions, modelling how they affect the value of progress, and showing which parameter values would be required for advancing all progress to be a good thing.

Examples of such clarification and modelling would include:

- Considerations of the amount of value at stake in each case.[5]

- Modelling existential risk over time, such as with a time-varying hazard rate.[6]

- Allowing the end time (or hazard curve) to be neither purely exogenous nor purely endogenous.[7]
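One way to start on the second and third items in this list is sketched below, under loudly hypothetical assumptions. It uses a yearly hazard with two parts: a constant exogenous part tied to the calendar, and an endogenous part that rises with the state of technology and is tied to civilisation's internal clock. Advancing progress by a year shifts quality of life and the endogenous hazard one year to the left but leaves the exogenous hazard in place. The weight `endo_weight`, the hazard shapes and all numbers are illustrative. The sign of the printed gains is not a claim about the real world:

```python
def expected_total(q, h_exo, h_endo, advance=0):
    """Expected sum of yearly quality of life over a fixed calendar horizon.

    Assumes year t's quality accrues if humanity is alive at the start of
    year t, and that the two hazards act independently within each year.
    q and h_endo need len(h_exo) + advance entries; the horizon itself acts
    as a fixed (exogenous) end date.
    """
    alive, total = 1.0, 0.0
    for t in range(len(h_exo)):
        total += alive * q[t + advance]
        alive *= (1 - h_exo[t]) * (1 - h_endo[t + advance])
    return total


def gain_from_advancing(endo_weight, years=300):
    """Change in expected total value from advancing everything by a year."""
    q = [1 + 0.02 * t for t in range(years + 1)]  # rising quality of life
    # Hypothetical hazards: constant exogenous risk, plus endogenous risk
    # that grows with technology and is capped at 5% per year.
    h_exo = [(1 - endo_weight) * 0.01] * years
    h_endo = [endo_weight * min(0.002 * t, 0.05) for t in range(years + 1)]
    return (expected_total(q, h_exo, h_endo, advance=1)
            - expected_total(q, h_exo, h_endo))


for w in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"endogenous weight {w:.2f}: gain {gain_from_advancing(w):+.4f}")
```

With `endo_weight = 0` the survival curve is untouched and the gain reduces to the exogenous case, which is positive for a rising curve. As the weight grows, the higher endogenous hazard that the advanced path meets earlier pulls the other way.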

Note that my point here isn’t about the increasingly well-known debate over whether work aimed at preventing existential risks could be even more important than work aimed at advancing progress.[8] It is that considerations about existential risk undermine a promising argument for advancing progress and call into question whether advancing progress is good at all.

Why hasn’t this been noticed before? The issue is usually hidden behind a modelling assumption of progress continuing over an infinite future. In this never-ending model, when you pull this curve sideways, there is always more curve revealed. It is only when you say that it is of a finite (if unknown) length that the question of what happens when we run out of curve arises. There is something about this that I find troublingly reminiscent of a Ponzi scheme: in the never-ending model, advancing progress keeps bringing forward the benefits we would have had in year t+1 to pay the people in year t, with the resulting debt being passed infinitely far into the future and so never coming due.

Furthermore, the realisation that humanity won’t last for ever is surprisingly recent — especially the possibility that its lifespan might depend on our actions.[9] And the ability to broach this in academic papers without substantial pushback is even more recent. So perhaps it shouldn’t be surprising that this was only noticed now.

The issue raised by this paper has also been masked in many economic analyses by an assumption of pure time-preference: that society should have a discount rate on value itself. If we use that assumption, we end up with a somewhat different argument for advancing progress — one based on impatience; on merely getting to the good stuff sooner, even if that means getting less of it.

Even then, the considerations I’ve raised would undermine this argument. For if it does turn out that advancing progress across the board is bad from a patient perspective, then we’d be left with an argument that ‘advancing progress is good, but only due to fundamental societal impatience and the way it neglects future losses’. The rationale for advancing progress would be fundamentally about robbing tomorrow to pay for today, in a way that is justified only because society doesn’t (or shouldn’t) care much about the people at the end of the chain when the debt comes due. This strikes me as a very troubling position and far from the full-throated endorsement of progress that its advocates seek.

Could attempts to advance progress have some other kind of predictable effect on the shape of the curve of quality of life over time?[10] They might. For instance, they might be able to lengthen our future by bringing forward the moment we develop technologies to protect us from natural threats to our survival. This is an intriguing idea, but there are challenges to getting it to work: especially since there isn’t actually much natural extinction risk for progress to reduce, whereas progress appears to be introducing larger anthropogenic risks.[11] Even if the case could be made, it would be a different case to the one we started with — it would be a case based on progress increasing the duration of lived experience rather than its quality. I’d be delighted if such a revised case can be made, but we can’t just assume it.[12]

If we were to advance some kinds of progress relative to others, we could certainly make large changes to the shape of the curve, or to how long humanity lasts.[13] But this is not even a different kind of argument for the value of progress; it is a different kind of intervention, which we could call differential progress.[14] This would not be an argument that progress is generally good, but that certain parts of it are. Again, I’m open to this argument, but it is not the argument for progress writ large that its proponents desire.

And it may have some uncomfortable consequences. If advancing all progress would turn out to be bad, but advancing some parts of it would be good, then it is likely that advancing the remaining parts would be even worse. Since some kinds of progress are more plausibly linked to bringing about an earlier demise (e.g. nuclear weapons, climate change, and large-scale resource depletion only became possible because of technological, economic, and scientific progress), these parts may not fare so well in such an analysis. So it may really be an argument for differentially boosting other kinds of progress, such as moral progress or institutional progress, and perhaps even for delaying technological, economic, and scientific progress.

It is important to stress that through all of this we’ve only been exploring the case for advancing progress — comparing the default path of progress to one that is shifted earlier. There might be other claims about the value of progress that are unaffected. For example, these considerations don’t directly undermine the argument that progress is better than stasis, and thus that if progress is fragile, we need to protect it. That may be true even if humanity does eventually bring about its own end, and even if our progress brings it about sooner. Or one could understand ‘progress’ to refer only to those cases where the outcomes are genuinely improved, such that it is by definition a good thing. But the arguments we’ve explored show that these other arguments in favour of progress wouldn’t have many of the policy implications that advocates of progress typically favour — as whether it is good or bad to advance scientific research or economic productivity will still depend very sensitively on esoteric questions about how humanity ends.

I find all this troubling. I’m a natural optimist and an advocate for progress. I don’t want to see the case for advancing progress undermined. But when I tried to model it clearly to better understand its value, I found a substantial gap in the argument. I want to draw people’s attention to that gap in the hope that we can close it. There may be good arguments to settle this issue one way or the other, but they will need to be made. Alternatively, if we do find that uniform advancements of progress are in fact bad, we will need to adjust our advocacy to champion the right kinds of differential progress.

References

Aschenbrenner, Leopold; and Trammell, Philip. (2024). Existential Risk and Growth. GPI Working Paper No. 13-2024, (Global Priorities Institute and Department of Economics, University of Oxford).

Beckstead, Nick. (2013). On the Overwhelming Importance of Shaping the Far Future. PhD Thesis. Department of Philosophy, Rutgers University.

Bostrom, Nick. (2002). Existential Risks: Analyzing Human Extinction, Journal of Evolution and Technology 9(1).

Bostrom, Nick. (2014). Superintelligence: Paths, Dangers, Strategies, (Oxford: OUP).

Moynihan, Thomas. (2020). X-Risk: How Humanity Discovered its Own Extinction, (Urbanomic).

Ord, Toby. (2020). The Precipice: Existential Risk and the Future of Humanity, (London: Bloomsbury).

Ord, Toby. (Forthcoming). Shaping humanity’s longterm trajectory. In Barrett et al. (eds.) Essays on Longtermism, (Oxford: OUP).

Snyder-Beattie, Andrew E.; Ord, Toby; and Bonsall, Michael B. (2019). An upper bound for the background rate of human extinction. Scientific Reports 9:11054, 1–9.

[1] I’d like to thank Fin Moorhouse, Kevin Kuruc, and Chad Jones for insightful discussion and comments, though of course this doesn’t imply that they endorse the arguments in this paper.

[2] The arguments in this paper would also apply if it were instead a temporary acceleration leading to a permanent speed-up of progress. In that case an endogenous end time would mean a temporal compression of our trajectory up to that point: achieving all the same quality levels, but having less time to enjoy each one, then prematurely ending.
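A small check of the compression claim follows. It assumes a hypothetical continuous quality curve `q`, a permanent speed-up by a factor `s`, and an endogenous end. The sped-up path visits the same quality levels, reaching the level of default time t at time t / s, and ends at T / s. Substituting u = s·t shows that its total is the default total divided by s, which the midpoint rule below reproduces:

```python
def integrate(f, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    width = (b - a) / steps
    return width * sum(f(a + (i + 0.5) * width) for i in range(steps))


def q(t):
    """Hypothetical rising quality-of-life curve."""
    return 1 + t ** 0.5


T, s = 100.0, 1.25  # default end time and speed-up factor (assumed values)
default_total = integrate(q, 0, T)
# Same levels visited s times faster, with the end brought forward to T / s.
compressed_total = integrate(lambda t: q(s * t), 0, T / s)

ratio = compressed_total / default_total
print(round(ratio, 6))  # 0.8, i.e. 1 / s
```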

[3] This is proved in more detail, addressing several complications, in Ord (forthcoming). Several complexities arise from different approaches to population ethics, but the basic argument holds for any approach to population ethics that considers a future with lower total and average wellbeing to be worse. If your approach to population ethics avoids this argument, then note that whether advancing progress is good or bad is now highly sensitive to the theory of population ethics — another contentious and esoteric topic.

[4] One could generalise this argument beyond human extinction. First, one could consider other kinds of existential risk. For instance, if a global totalitarian regime arises which permanently reduces quality of life to a lower level than it is today, then what matters is whether the date of its onset is endogenous. Alternatively, we could broaden this argument to include decision-makers who are partial to a particular country or culture. In that case, what matters is whether advancing progress would advance the end of that group.

[5] For example, if final years are 10 times as good as present years, then we would only need a 10% chance that humanity’s end was exogenous for advancing progress to be good in expectation. I think this is a promising strategy for saving the expected value of progress.
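The arithmetic behind this example can be sketched as follows. Measure value in units of one present year, and let `r` be how many present years a final year is worth and `p` the probability that the end is exogenous (both names are introduced here for illustration). The expected gain is p(r − 1) − (1 − p) = pr − 1, so the break-even probability is 1/r:

```python
def expected_gain(p, r):
    """Expected value of a one-year advance, in units of one present year.

    p: probability the end time is exogenous (hypothetical parameter).
    r: value of a final year relative to a present year.
    """
    gain_if_exogenous = r - 1   # swap a present year for a final year
    loss_if_endogenous = 1      # lose a present year outright
    return p * gain_if_exogenous - (1 - p) * loss_if_endogenous


def break_even(r):
    """Probability of an exogenous end at which the expected gain is zero."""
    return 1 / r  # since p*(r - 1) - (1 - p) = p*r - 1


print(break_even(10))  # 0.1
print(expected_gain(0.2, 10) > 0, expected_gain(0.05, 10) < 0)  # True True
```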

[6] In this setting, the former idea of an endogenous end time roughly corresponds to the assumption that advancing progress by a year also shifts humanity’s survival curve left by a year, while an exogenous end time corresponds to the assumption that the survival curve stays fixed. A third salient possibility is that it is the corresponding hazard curve which shifts left by a year. This possibility is mentioned in Ord (forthcoming, pp. 17 & 30). The first and third of these correspond to what Bostrom (2014) calls transition risk and state risk.
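A toy comparison of the three possibilities follows. It assumes made-up yearly quality `q[t]` and yearly hazard `h[t]`, that value accrues only if humanity survives to the start of each year, and that extinction is certain by year N so the sums are finite. In this sketch, shifting the survival curve reproduces the deterministic endogenous result exactly (the loss is `q[0]`). Shifting the hazard curve instead gives (default − q[0]) / (1 − h[0]), whose sign depends on the parameters:

```python
def survival(h):
    """S[t]: probability of being alive at the start of year t."""
    s = [1.0]
    for rate in h:
        s.append(s[-1] * (1 - rate))
    return s


N = 50
q = [1 + 0.1 * t for t in range(N + 1)]   # hypothetical rising quality
h = [0.01 + 0.004 * t for t in range(N)]  # hypothetical rising hazard
h[-1] = 1.0                               # extinction certain by year N
S = survival(h)                           # N + 1 entries, S[N] == 0

default = sum(q[t] * S[t] for t in range(N))

# Exogenous end: survival curve fixed, quality shifted a year left.
exogenous = sum(q[t + 1] * S[t] for t in range(N))
# Survival curve shifted a year left (endogenous end; transition risk).
survival_shift = sum(q[t + 1] * S[t + 1] for t in range(N))
# Hazard curve shifted a year left (state risk).
S_haz = survival(h[1:])                   # N entries
hazard_shift = sum(q[t + 1] * S_haz[t] for t in range(N))

for name, value in [("exogenous", exogenous),
                    ("survival shift", survival_shift),
                    ("hazard shift", hazard_shift)]:
    print(f"{name}: change {value - default:+.4f}")
```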

[7] For example, one could decompose the overall hazard curve into an exogenous hazard curve plus an endogenous one, where only the latter gets shifted to the left. Alternatively, Aschenbrenner & Trammell (2024) present a promising parameterisation that interpolates between state risk and transition risk (and beyond).

[8] See, for example, my book on existential risk (Ord 2020), or the final section of Ord (forthcoming).

[9] See Moynihan (2020).

[10] Any substantial change to the future, including advancing progress by a year, would probably have large chaotic effects on the future in the manner of the butterfly effect. But only a systematic change to the expected shape or duration of the curve could help save the initial case for advancing progress.

[11] The fossil record suggests natural extinction risk is lower than 1 in 1,000 per century: after all, humanity has already survived 2,000 centuries, and typical mammalian species survive 10,000 centuries. See Snyder-Beattie et al. (2019) and Ord (2020, pp. 80–87).
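These numbers can be read as a back-of-envelope check. The sketch assumes a constant per-century natural extinction probability, which is a simplification for illustration and not the method of the cited papers. If the risk were as high as 1 in 1,000 per century, surviving 2,000 centuries would have been fairly unlikely, and the expected lifespan would be a tenth of the typical mammalian figure:

```python
rate = 1 / 1000  # hypothetical natural extinction risk per century
centuries_survived = 2000

# Chance of surviving that long if the risk were constant at `rate`.
p_survive = (1 - rate) ** centuries_survived
# Mean lifetime (in centuries) of a geometric process with this rate.
expected_lifespan = 1 / rate

print(f"{p_survive:.3f}")  # 0.135
print(expected_lifespan)   # 1000.0 centuries, vs roughly 10,000 for mammals
```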

[12] Another kind of case that is often made is that the sheer rate of progress could change the future trajectory. A common version is that the world is more stable with steady 3–5% economic growth: if we don’t keep moving forward, we will fall over. This is plausible but gives quite a different kind of conclusion. It suggests it is good to advance economic progress when growth is at 2% but may be bad to advance it when growth is 5%. Moreover, unless the central point about progress bringing forward humanity’s end is dealt with head-on, the best this steady-growth argument can achieve is to say that ‘yes, advancing progress may cut short our future, but unfortunately we need to do it anyway to avoid collapse’.

[13] Beckstead calls this kind of change to where we’re going, rather than just how quickly we get there, a ‘trajectory change’ (Beckstead 2013).

[14] Bostrom’s concept of differential technological development (Bostrom 2002) is a special case.

If I believe that:

then I want to "skip the current track".

That's an interesting and unusual argument for progress:

Progress so far has brought us to a point where we are causing so much harm on a global scale that the value of each year is large and negative. But pushing on further with progress is a good thing because it will help end this negative period.

That could well be correct, though it is also very different from the usual case made by proponents of progress.

Yeah, agree this isn't the usual argument from pro-progress people, who tend to be optimists who wouldn't agree that current civilisation does more harm than good.

And yeah, my second bullet point is clearest when the end of factory farming is endogenous rather than exogenous. In the exogenous case it's more confusing because I see progress pulling in both directions: in general, larger populations and more prosperity seem likely to increase economic demand for factory farms, and on the other hand, better alternatives in combination with social and political advocacy have the potential to decrease the number of animals used.

In the presence of exogenous ends, I suppose the track-skipping condition is precisely that the next track is worse than the track that (if you don't skip) would have played but is stopped at the time of the exogenous event. In the specific case of factory farming, it's not obvious to me what to expect in terms of which one is better.

Yes, that's right about the track-skipping condition for the exogenous case, and I agree that there is a strong case the end of factory farming will be endogenous. I think it is a good sign that the structure of my model represents some/all of the key considerations in your take on progress too — but with the different assumption about the current value changing the ultimate conclusion.

Thank you for the article! I've been skeptical of the general arguments for progress (from a LT perspective) for several years but never managed to articulate a model quite as simple/clear as yours.

When I've had these debates with people before, the most compelling argument for me (other than progress being good under a wide range of commonsensical assumptions + moral/epistemic uncertainty) is a combination of these arguments plus a few subtleties. It goes something like this:

This set of arguments only establishes that undifferentiated progress is better than no progress. They do not by themselves directly argue against differential technological progress. However, people who ~roughly believe the above set of arguments will probably say that differential technological progress (or differential progress in general) is a good idea in theory but not realistic except for a handful of exceptions (like banning certain forms of gain-of-function research in virology). For example, they might point to Hayekian difficulties with central planning and argue that in many ways differential technological progress is even more difficult than the traditional issues that attempted central planners have faced.

On balance, I did not find their arguments overall convincing but it's pretty subtle and I don't currently think the case against "undifferentiated progress is exceptionally good" is a slam dunk[1], like I did a few years ago.

[1] In absolute terms. I think there's a stronger case that marginal work on preventing x-risk is much more valuable in expectation. Though that case is not extremely robust because of similar sign-error issues that plague attempted x-risk prevention work.

I think more important than the Hayekian difficulties of planning is that, to my understanding of how the term progress is normally used, part of the reason why all these notions of economic-technological, values, and institutional progress have been linked together under the term "progress" is because they are mutually self-reinforcing. Economic growth and peace and egalitarian, non-hierarchical values/psychology -> democracy -> economic growth and peace and egalitarian values, à la Kant's Perpetual Peace. You can't easily make one go faster than the others because they all come together in a virtuous circle.

Further, these notions of progress are associated with a very particular vision of society put forward by liberals (the notion of long-run general progress, not just a specific type of moral progress from this particular law, is an idea mostly reserved for liberals). Progress, then, isn't moving forward on humanity's timeline, but moving forward on the desired liberal timeline of history, with a set of self-reinforcing factors (economic growth, democratization, liberal values) that lock in the triumph of liberalism to eventually usher in the end of history.

I think this understanding makes the notion of undifferentiated progress much more compelling. It is hard to separate the different factors of progress, so differentiating is unreasonable, but progress is also a specific political vision that can be countered, not some pre-determined inevitable timeline. We live at a time where it feels uncontestable (Fukuyama a couple decades ago), but it isn't quite.

I'd be interested to hear a short explanation of why this seems like a different result from Leopold's paper, especially the idea that it could be better to accelerate through the time of perils.

That talks about the effect of growth on existential risk; this analysis is explicitly not considering that. Here's a paragraph from this post:

Leopold's analysis is of this "revised case" type.

Thank you!

Thanks for the post, Toby.

Here the counterfactual impact is small, usually positive and roughly constant for billions of years or more, and then becomes super negative? The (posterior) counterfactual increase in expected total hedonistic utility caused by interventions whose effects can be accurately measured, like ones in global health and development, decays to 0 as time goes by, and can be modelled as increasing the value of the world for a few years, decades or centuries at most. So I do not see how something like the above can hold.

The hypothetical being considered in this piece is that all progress is advanced by a year. So e.g. we have everything humanity had in the year 1000 in the year 999 instead. Imagine that it was literally exactly the same state of the world, but achieved a year earlier. Wouldn't we then expect to have 2025 technology in 2024? If not, what could be making the effect go away?

In general, there is not much of an external clock that would be setting quality of life in the year 3000 just because that is what the calendar says. It will mainly depend on the internal clock of the attributes of civilisation and this is the clock we are imagining advancing.

This is very different to the global health and wellbeing interventions, which I wouldn't class as attempting to 'advance progress' and where I'd be surprised to see a significant permanent effect. The permanence will be stronger if the effect is broader across different sectors, or if it is in a sector that is driving the others, and it is aimed at the frontiers of knowledge or technology or institutions, rather than something more like catch-up growth.

To clarify, I agree with the consequences you outline given the hypothetical. I just think this is so unlikely and unfalsifiable that it has no practical value for cause prioritisation.

I disagree with you on this one, Vasco Grilo, because I think the argument still works even when things are more stochastic.

To make things just slightly more realistic, suppose that progress, measured by q, is a continuous stochastic process, which at some point drops to zero and stays there (extinction). To capture the 'endogenous endpoint' assumption, suppose that the probability of extinction in any given year is a function of q only. And to simplify things, let's assume it's a Markov process (future behaviour depends only on current state, and is independent of past behaviour).

Suppose current level of progress is q_0, and we're considering an intervention that will cause it to jump to q_1. We have, in absence of any intervention:

Total future value given currently at q_0 = Value generated before we first hit q_1 + Value generated after first hitting q_1

By linearity of expectation:

E(Total future value given q_0) = E(Value before first q_1) + E(Value after first q_1)

By Markov property (small error in this step, corrected in reply, doesn't change conclusions):

E(Total future value given q_0) = E(Value before first q_1) + E(Total future value given q_1)

So as long as E(Value before first q_1) is positive, then we decrease expected total future value by making an intervention that increases q_0 to q_1.

It's just the "skipping a track on a record" argument from the post, but I think it is actually really robust. It's not just an artefact of making a simplistic "everything moves literally one year forward" assumption.

I'm not sure how deep the reliance on the Markov property in the above is. Or how dramatically this gets changed when you allow the probability of extinction to depend slightly on things other than q. It would be interesting to look at that more.

But I think this still shows that the intuition of "once loads of noisy unpredictable stuff has happened, the effect of your intervention must eventually get washed out" is wrong.

Realised after posting that I'm implicitly assuming you will hit q_1, and not go extinct before. For interventions in progress, this probably has high probability, and the argument is roughly right. To make it more general, once you get to this line:

E(Total future value given q_0) = E(Value before first q_1) + E(Value after first q_1)

Next line should be, by Markov:

E(Total future value given q_0) = E(Value before first q_1) + P(hitting q_1) E(Total future value given q_1)

So:

E(Total future value given q_1) = (E(Total future value given q_0) - E(Value before first q_1)) / P(hitting q_1)

Still gives the same conclusion if P(hitting q_1) is close to 1, although can give a very different conclusion if P(hitting q_1) is small (would be relevant if e.g. progress was tending to decrease in time towards extinction, in which case clearly bumping q_0 up to q_1 is better!)
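The decomposition can be checked numerically on a small, entirely hypothetical Markov chain. In this sketch progress is an integer level, each year yields value `v(level)`, extinction risk `h(level)` depends only on the level, and progress rises one level at a time with probability `UP`. The functions `total_value`, `value_before` and `p_hit` stand in for E(Total future value given q), E(Value before first q_1) and P(hitting q_1). They are solved exactly by backward recursion rather than simulated:

```python
UP, TOP = 0.3, 20  # chance of moving up a level per year; highest level


def v(level):
    """Hypothetical yearly value at a given progress level."""
    return 1.0 + 0.2 * level


def h(level):
    """Hypothetical extinction hazard, rising with progress."""
    return 0.001 + 0.004 * level


def stay(level):
    """Probability of surviving the year without moving up a level."""
    return (1 - h(level)) * (1 - UP)


def total_value(q):
    """E(total future value | level q), solved backwards from TOP."""
    value = v(TOP) / h(TOP)  # progress stops at TOP: geometric lifetime
    for level in range(TOP - 1, q - 1, -1):
        value = (v(level) + (1 - h(level)) * UP * value) / (1 - stay(level))
    return value


def value_before(q0, q1):
    """E(value collected before first reaching level q1 | start at q0)."""
    before = 0.0  # nothing is collected once q1 has been reached
    for level in range(q1 - 1, q0 - 1, -1):
        before = (v(level) + (1 - h(level)) * UP * before) / (1 - stay(level))
    return before


def p_hit(q0, q1):
    """P(reaching level q1 before extinction | start at q0)."""
    p = 1.0
    for level in range(q1 - 1, q0 - 1, -1):
        p = (1 - h(level)) * UP * p / (1 - stay(level))
    return p


q0, q1 = 5, 6
lhs = total_value(q0)
rhs = value_before(q0, q1) + p_hit(q0, q1) * total_value(q1)
print(abs(lhs - rhs) < 1e-9)  # True: the decomposition holds
print(f"change from jumping q0 -> q1: {total_value(q1) - total_value(q0):+.3f}")
```

Rearranging the identity, jumping straight from q_0 to q_1 lowers expected value exactly when `value_before(q0, q1) > (1 - p_hit(q0, q1)) * total_value(q1)`. This reduces to the condition in the comment when `p_hit` is close to 1.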

Thanks, Toby. As far as I can tell, your model makes no use of empirical data, so I do not see how it can affect cause prioritisation in the real (empirical) world. I am a fan of quantitative analyses and models, but I think these still have to be based on some empirical data to be informative.

The point of my comment was to show that a wide range of different possible models would all exhibit the property Toby Ord is talking about here, even if they involve lots of complexity and randomness. A lot of these models wouldn't predict the large negative utility change at a specific future time, that you find implausible, but would still lead to the exact same conclusion in expectation.

I'm a fan of empiricism, but deductive reasoning has its place too, and can sometimes allow you to establish the existence of effects which are impossible to measure. Note this argument is not claiming to establish a conclusion with no empirical data. It is saying if certain conditions hold (which must be determined empirically) then a certain conclusion follows.

Your model says that instantly going from q_0 to q_1 is bad, but I do not think real world interventions allow for discontinuous changes in progress. So you would have to compare "value given q_0" with "value given q_1" + "value accumulated in the transition from q_0 to q_1". By neglecting this last term, I believe your model underestimates the value of accelerating progress.

More broadly, I find it very implausible that an intervention today could meaningfully (counterfactually) increase/decrease (after adjusting for noise) expected total hedonistic utility more than a few centuries from now.

Causing extinction (or even some planet scale catastrophe with thousand-year plus consequences that falls short of extinction) would be an example of this wouldn't it? Didn't Stanislav Petrov have the opportunity to meaningfully change expected utility for more than a few centuries?

I can only think of two ways of avoiding that conclusion:

I think either of these would be interesting claims, although it would now feel to me like you were the one using theoretical considerations to make overconfident claims about empirical questions. Even if (1) is true for global nuclear war, I can just pick a different human-induced catastrophic risk as an example, unless it is true for all such examples, which is an even stronger claim.

It seems implausible to me that we should be confident enough in either of these options that all meaningful change in expected utility disappears after a few centuries.

Is there a third option..?

I do actually think option 2 might have something going for it, it's just that the 'few centuries' timescale maybe seems too short to me. But, if you did go down route 2, then Toby Ord's argument as far as you were concerned would no longer be relying on considerations thousands of years from now. That big negative utility hit he is predicting would be in the next few centuries anyway, so you'd be happy after all?

Sure, but the question is what do we change by speeding progress up. I considered the extreme case where we reduce the area under the curve between q_0 and q_1 to 0, in which case we lose all the value we would have accumulated in passing between those points without the intervention.

If we just go faster, but not discontinuously, we lose less value, but we still lose it, as long as that area under the curve has been reduced. The quite interesting thing is that it's the shape of the curve right now that matters, even though the actual reduction in utility is happening far in the future.
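A toy numerical version of this point, under assumptions that are not in the original comment: progress moves through quality levels at a hypothetical speed dq/dt, and everything after q_1 (including when the curve ends) depends only on having reached q_1, so the intervention changes only the time spent climbing from q_0 to q_1. The area under the curve over that stretch is then the integral of q / speed(q) dq, so speeding up the climb shrinks it, and a discontinuous jump shrinks it to nearly nothing.

```python
def value_between(q0, q1, speed, steps=100_000):
    """Value accumulated while the trajectory climbs from quality q0 to q1.

    With dq/dt = speed(q), the time spent near level q is dq / speed(q), so the
    area under the quality-vs-time curve is the integral of q / speed(q) dq,
    approximated here with the midpoint rule.
    """
    h = (q1 - q0) / steps
    total = 0.0
    for i in range(steps):
        q = q0 + (i + 0.5) * h
        total += q / speed(q) * h
    return total


q0, q1 = 1.0, 3.0
baseline = value_between(q0, q1, lambda q: 1.0)  # hypothetical baseline speed; area is about 4.0
faster = value_between(q0, q1, lambda q: 2.0)    # hypothetical intervention: twice as fast
jump = value_between(q0, q1, lambda q: 1e6)      # near-discontinuous change: area close to 0
lost = baseline - faster
print(baseline, faster, jump, lost)
```

Doubling the speed halves the time spent between q_0 and q_1, so half of that stretch's value is lost; the lost value comes from the current shape of the curve, even though in this framing the reduction shows up as the curve reaching its end earlier.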

I do not think so.

In my mind, not meaningfully so. Based on my adjustments to the mortality rates of cooling events by CEARCH, the expected annual mortality rate from nuclear winter is 7.32*10^-6. So, given a 1.31 % annual probability of a nuclear weapon being detonated as an act of war, the mortality rate from nuclear winter conditional on at least 1 nuclear detonation is 0.0559 % (= 7.32*10^-6/0.0131). I estimated the direct deaths would be 1.16 times as large as the ones from the climatic effects, and I think CEARCH's mortality rates only account for the latter (climatic) deaths. So the total mortality rate conditional on at least 1 nuclear detonation would be 0.121 % (= 5.59*10^-4*(1 + 1.16)), corresponding to 9.68 M (= 0.00121*8*10^9) deaths for today's population. Preventing millions of deaths is absolutely great, but arguably not enough to meaningfully improve the longterm future?
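The arithmetic in this estimate can be reproduced directly. The inputs are the figures quoted above; the one assumption made explicit here is that nuclear-winter deaths only occur in years with at least one detonation, which is what justifies dividing the annual mortality rate by the annual detonation probability.

```python
# Reproducing the chain of estimates above (input figures taken from the comment).
annual_winter_mortality = 7.32e-6  # expected annual mortality rate from nuclear winter
p_detonation = 0.0131              # annual probability of >= 1 detonation as an act of war
direct_vs_climatic = 1.16          # direct deaths relative to climatic deaths
population = 8e9                   # today's population

# Conditional on at least one detonation (assumes no nuclear-winter deaths otherwise).
climatic_rate = annual_winter_mortality / p_detonation
total_rate = climatic_rate * (1 + direct_vs_climatic)
deaths = total_rate * population

print(f"{climatic_rate:.4%} {total_rate:.3%} {deaths / 1e6:.2f} M")
```

Carrying the unrounded intermediate values through gives roughly 9.66 M deaths rather than 9.68 M; the small gap comes from rounding the total rate to 0.121 % before multiplying by the population.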

Why not simply assess the value of preventing wars and pandemics based on standard cost-effectiveness analyses, for which we can rely on empirically informed estimates like the above?

The way I think about it is that interventions improve the world for a few years to centuries, and then after that practically nothing changes (in the sense that the expected benefits coming from changing the longterm future are negligible). If I understand correctly, frameworks like Toby's or yours, which suggest that improving the world now may be outweighed by making the world worse in the future, suppose we can reliably change the value the world will have in the longterm, and that this may be the driver of the overall effect.

Thanks for the detailed reply on that! You've clearly thought about this a lot, and I'm very happy to believe you're right on the impact of nuclear war, but it sounds like you are more or less opting for what I called option 1? In which case, just substitute nuclear war for a threat that would literally cause extinction with high probability (say release of a carefully engineered pathogen with high fatality rate, long incubation period, and high infectiousness). Wouldn't that meaningfully affect utility for more than a few centuries? Because there would be literally no one left, and that effect is guaranteed to be persistent! Even if it "just" reduced the population by 99%, that seems like it would very plausibly have effects for thousands of years into the future.

It seems to me that to avoid this, you have to either say that causing extinction (or near extinction level catastrophe) is virtually impossible, through any means, (what I was describing as option 1) or go the other extreme and say that it is virtually guaranteed in the short term anyway, so that counterfactual impact disappears quickly (what I was describing as option 2). Just so I understand what you're saying, are you claiming one of these two things? Or is there another way out that I'm missing?

Thanks for the kind words. I was actually unsure whether I should have followed up given my comments in this thread had been downvoted (all else equal, I do not want to annoy readers!), so it is good to get some information.

I think the effect of the intervention will still decrease to practically 0 in at most a few centuries in that case, such that reducing the nearterm risk of human extinction is not astronomically cost-effective. I guess you are imagining that humans either go extinct or have a long future where they go on to realise lots of value. However, this is overly binary in my view. I elaborate on this in the post I linked to at the start of this paragraph, and its comments.

I guess the probability of human extinction in the next 10 years is around 10^-7, i.e. very unlikely, but far from impossible.

I'm at least finding it useful figuring out exactly where we disagree. Please stop replying if it's taking too much of your time, but not because of the downvotes!

This isn't quite what I'm saying, depending on what you mean by "lots" and "long". For your "impossible for an intervention to have counterfactual effects for more than a few centuries" claim to be false, we only need the future of humanity to have a non-tiny chance of being longer than a few centuries (not that long), and for there to be conceivable interventions which have a non-tiny chance of very quickly causing extinction. These interventions would then meaningfully affect counterfactual utility for more than a few centuries.

To be more concrete and less binary, suppose we are considering an intervention that has a risk p of almost immediately leading to extinction, and otherwise does nothing. Let U be the expected utility generated in a year, in 500 years time, absent any intervention. If you decide to make this intervention, that has the effect of changing U to (1-p)U, and so the utility generated in that far future year has been changed by pU.

For this to be tiny/non-meaningful, we either need p to be tiny, or U to be tiny (or both).
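Written out, the change in that year is the difference between the two expectations. Because the intervention otherwise does nothing, the same factor (1-p) applies to every later year too, so, writing U_t for the expected utility generated in year t absent the intervention (notation introduced here for illustration), the total change from year 500 onward is p times the remaining expected utility:

```latex
\Delta U_{500} = U_{500} - (1-p)\,U_{500} = p\,U_{500},
\qquad
\sum_{t \ge 500} \Delta U_t = p \sum_{t \ge 500} U_t .
```

Either sum is small only if p is small or the expected utility of the far future is small.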

Are you saying:

1, in the sense I think the change in the immediate risk of human extinction per cost is astronomically low for any conceivable intervention. Relatedly, you may want to check my discussion with Larks in the post I linked to.

It seems worth mentioning the possibility that progress can also be bottlenecked by events external to our civilization. Maybe we need to wait for some star to explode for some experiment or for it to reach some state to exploit it. Or maybe we will wait for the universe to cool to do something (like the aestivation hypothesis for aliens). Or maybe we need to wait for an alien civilization to mature or reach us before doing something.

And even if we don’t "wait" for such events, our advancement can be slowed, because we can't take advantage of them sooner or as effectively along with our internal advancement. Cumulatively, they could mean advancement is not lasting and doesn't make it to our end point.

But I suppose there's a substantial probability that none of this makes much difference, so that uniform internal advancement really does bring everything that matters forward roughly uniformly (ex ante), too.

And maybe we miss some important/useful events if we don't advance. For example, the expansion of the universe puts some stars permanently out of reach sooner if we don’t advance.

Yes, it could be interesting to try to understand the most plausible trajectory-altering things like this that will occur on the exogenous calendar time. They could be very important.

Thanks Toby, this is a nice article, and I think it is more approachable than anything I previously knew of on the topic.

For those interested in the topic, I wanted to add links to a couple of Paul Christiano's classic posts:

(I think this is perhaps mostly relevant for the intellectual history.)

Interesting posts!

I don't recall reading either of them before.

Paul's main argument in the second piece is that progress "doesn't have much effect on very long-term outcomes" because he expects this quality-of-life curve to eventually reach a plateau. That is a somewhat different argument. As I show in Shaping Humanity's Longterm Trajectory, the value of advancements can be extremely large, as they scale with the instantaneous value of the future. e.g. if civilisation has spread to billions of billions of worlds, it is plausible that having an extra year of this is worth a lot (indeed this is the point of the first part of 'Astronomical Waste'). But it doesn't scale with the duration of our future, so it can get beaten by things like reducing existential risk that scale with duration as well.
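A toy calculation can illustrate the scaling claim. Everything here is an assumption for illustration: the plateauing curve shape, a fixed (exogenous) end date T, treating an advancement as shifting the curve one year earlier, and modelling existential-risk reduction as a delta_p increase in the chance of getting the whole future. With a plateau, the advancement's gain stays close to one year of plateau value whatever T is, while the risk-reduction gain grows roughly in proportion to T.

```python
import math


def value_curve(t):
    """Hypothetical instantaneous value that plateaus at 1.0 (timescale 100 years)."""
    return 1.0 - math.exp(-t / 100.0)


def integrate(f, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of f from a to b."""
    h = (b - a) / steps
    return sum(f(a + (i + 0.5) * h) for i in range(steps)) * h


def advancement_gain(T, shift=1.0):
    """Gain from shifting the curve `shift` years earlier, with a fixed end date T.

    The integral of v(t + shift) over [0, T] equals the integral of v over
    [shift, T + shift], so the difference from the baseline reduces to the
    extra stretch gained at the end minus the stretch skipped at the start.
    """
    return integrate(value_curve, T, T + shift) - integrate(value_curve, 0.0, shift)


def risk_reduction_gain(T, delta_p=0.001):
    """Gain from raising the chance of getting the whole future by delta_p (assumed)."""
    return delta_p * integrate(value_curve, 0.0, T)


for T in (1_000.0, 10_000.0):
    print(T, round(advancement_gain(T), 3), round(risk_reduction_gain(T), 3))
```

With these particular numbers the advancement wins for a 1,000-year future and loses for a 10,000-year one; the crossover point depends entirely on the assumed delta_p and curve.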

So the argument that we are likely to reach a plateau is really an argument that existential risk (or some other trajectory changes) will be even more important than progress, rather than that progress may be as likely to be negative value as positive value. For that you need to consider that the curve will end and that the timing of this could be endogenous.

(That said, I think arguments based on plateaus could be useful and powerful in this kind of longtermist reasoning, and encourage people to explore them. I don't think it is obvious that we will reach a plateau (mainly as we might not make it that far), but they are a very plausible feature of the trajectory of humanity that may have important implications if it exists, and which would also simplify some of the analysis.)
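To see why the timing of the end matters, one can compare the two cases on a toy plateauing curve (a hypothetical shape, not taken from the post) with a hypothetical end date T. If the end date is fixed on the calendar, advancing everything by a year gains roughly a year of plateau value. If the end is tied to the level of progress, the advanced curve also ends a year early, and the net effect is minus the value of the year skipped at the start. The instantaneous one-year shift is itself a simplifying assumption.

```python
import math


def value_curve(t):
    """Hypothetical instantaneous value that plateaus at 1.0 (timescale 100 years)."""
    return 1.0 - math.exp(-t / 100.0)


def integrate(f, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of f from a to b."""
    h = (b - a) / steps
    return sum(f(a + (i + 0.5) * h) for i in range(steps)) * h


def total_value(end, shift):
    """Value of the curve advanced by `shift` years, counted from now until `end`."""
    return integrate(lambda t: value_curve(t + shift), 0.0, end)


T = 1_000.0                              # assumed baseline end date
baseline = total_value(T, 0.0)
exogenous = total_value(T, 1.0)          # end fixed on the calendar
endogenous = total_value(T - 1.0, 1.0)   # end tied to progress, so it also arrives a year early

print(round(exogenous - baseline, 4), round(endogenous - baseline, 4))
```

The sign flips between the two cases even though the curve and the size of the advancement are identical, which is why the question of whether the end is endogenous carries so much weight.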

@Toby_Ord, thank you - I find this discussion interesting in theory, but I wonder if it's actually tractable? There's no metaphorical lever for affecting overall progress, to advance everything - "science, technology, the economy, population, culture, societal norms, moral norms, and so forth" all at the same pace. Moreover, any culture or effort that in principle seeks to advance all of these things at the same time is likely to leave some of them behind in practice (and I fear that those left behind would be the wrong ones!).

Rather, I think that the "different kind of intervention, which we could call differential progress" is in fact the only kind of intervention there is. That is to say, there are a whole bunch of tiny levers we might be able to pull that affect all sorts of bits of progress. Moreover, some of these levers are surprisingly powerful, while other levers don't really seem to do much. I agree about "differentially boosting other kinds of progress, such as moral progress or institutional progress, and perhaps even for delaying technological, economic, and scientific progress." And I might venture to say that our levers are more powerful when it comes to the former set than the latter.

This paragraph was intended to speak to the relevance of this argument given that (as you say) we can't easily advance all progress uniformly:

"While there are substantial ups and downs, long-term progress in science, technology, and values have tended to make people’s lives longer, freer, and more prosperous. We could represent this as a graph of quality of life over time, giving a curve that generally trends upwards."

Is there good evidence for this? I think one can argue that things got better for many after agriculture. But what about before agriculture? Given that we evolved specifically for hunter-gatherer societies, and that anxiety and depression are widespread today, I find it intuitively plausible that we might not, on average, be better off today than before agriculture. I am far from an expert, but I understand that something like "a history of happiness" is sorely lacking as an academic discipline.

Perhaps not surprisingly, Karnofsky has written about this and I agree that this could be extremely high impact. https://www.cold-takes.com/hunter-gatherer-happiness/

Great article.

Of the cases you outlined (Exogenous end and Endogenous end) is it prudent to assume we are in the second one?

My thinking is that, by definition, if the exogenous end point case is true then it is something we cannot affect. We can’t move that end date forward or back. The endogenous case seems to be where actions or omissions have actual consequences. It’s in this case where we could make things much worse or better.

I completely agree.

But others may not, because most humans aren't longtermists or utilitarians. So I'm afraid arguments like this won't sway public opinion much at all. People like progress because it will get them and their loved ones (children and grandchildren, whose future they can imagine) better lives. They barely care at all whether humanity ends after their grandchildren's lives (to the extent they can even think about it).

This is why I believe that most arguments against AGI x-risk are really based on differing timelines. People like to think that humans are so special that AI won't surpass them for a long time. And they mostly care about the future for their loved ones.

I really like the paper and I appreciate the effort to put it together and make it easy to understand. I particularly appreciate the effort put into raising awareness of this problem. But I am extremely surprised/puzzled that this was not common understanding! Isn't this what lies beneath the AGI and even degrowth discourses, for example? This is why one has to first make sure AGI is safe before putting it out there. What am I missing?

I think the point is making this explicit and having a solid exposition to point to when saying "progress is no good if we all die sooner!"

The very first premise of this post, "Things are getting better." is flawed. Our life-support system, the biosphere, for example, has been gradually deteriorating over the past decades (centuries?) and is in danger of collapse. Currently, humanity has transgressed 6 of the 9 planetary boundaries. See:

https://www.weforum.org/agenda/2024/02/planetary-boundary-health-checks/

Technological 'progress', on balance, is accelerating this potential collapse (e.g. the enormous energy resources consumed by AI). Ord should revise his position accordingly.

Please note that I have been a great fan of Ord's work in the past (particularly The Precipice, which I donated to my school library also), along with his colleagues' work on long-termism. Nevertheless, his latest work (both the blog post and the chapter in the forthcoming OUP book, Essays in Longtermism) feels like a philosophical version of Mark Zuckerberg's Facebook motto, Move fast and (risk) break(ing) things. It downplays uncertainty to an irresponsible degree, and its quantitative mathematical approach fails to sufficiently take into account advances in complex systems science, where the mathematics of dynamical systems and chaos hold sway.

I'd note that quoting that sentence without including the one immediately after it seems misleading, so here it is — it's essentially a distillation of (for instance) Our World in Data's main message:

The fact that people’s lives are longer, freer, and more prosperous now than a few centuries ago doesn't contradict the fact that (as you rightly point out) the biosphere is degrading horrifically.

I'd also note that your depiction of Ord here

seems strawmannish and unnecessarily provocative; it doesn't engage with nuances like (quoting the OP)

and right before that

which sounds like a serious consideration of the opposite of "move fast and risk breaking things", for which I commend Ord given how this goes against his bias (separately from whether I agree with his takes or the overall vibe I get from his progress-related writings).

Another possible endogenous end point that could be advanced is meeting (or being detected by) an alien (or alien AI) civilization earlier and having our civilization destroyed by them earlier as a result.

Or maybe we enter an astronomical suffering or hyperexistential catastrophe due to conflict or stable totalitarianism earlier (internally or due to aliens we encounter earlier) and it lasts longer, until an exogenous end point. So, we replace some good with bad, or otherwise replace some value with worse value.

Executive summary: The standard argument for advancing progress is undermined by uncertainty about whether it also advances humanity's extinction, requiring either better understanding of this connection or a shift to advancing certain kinds of progress relative to others.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.