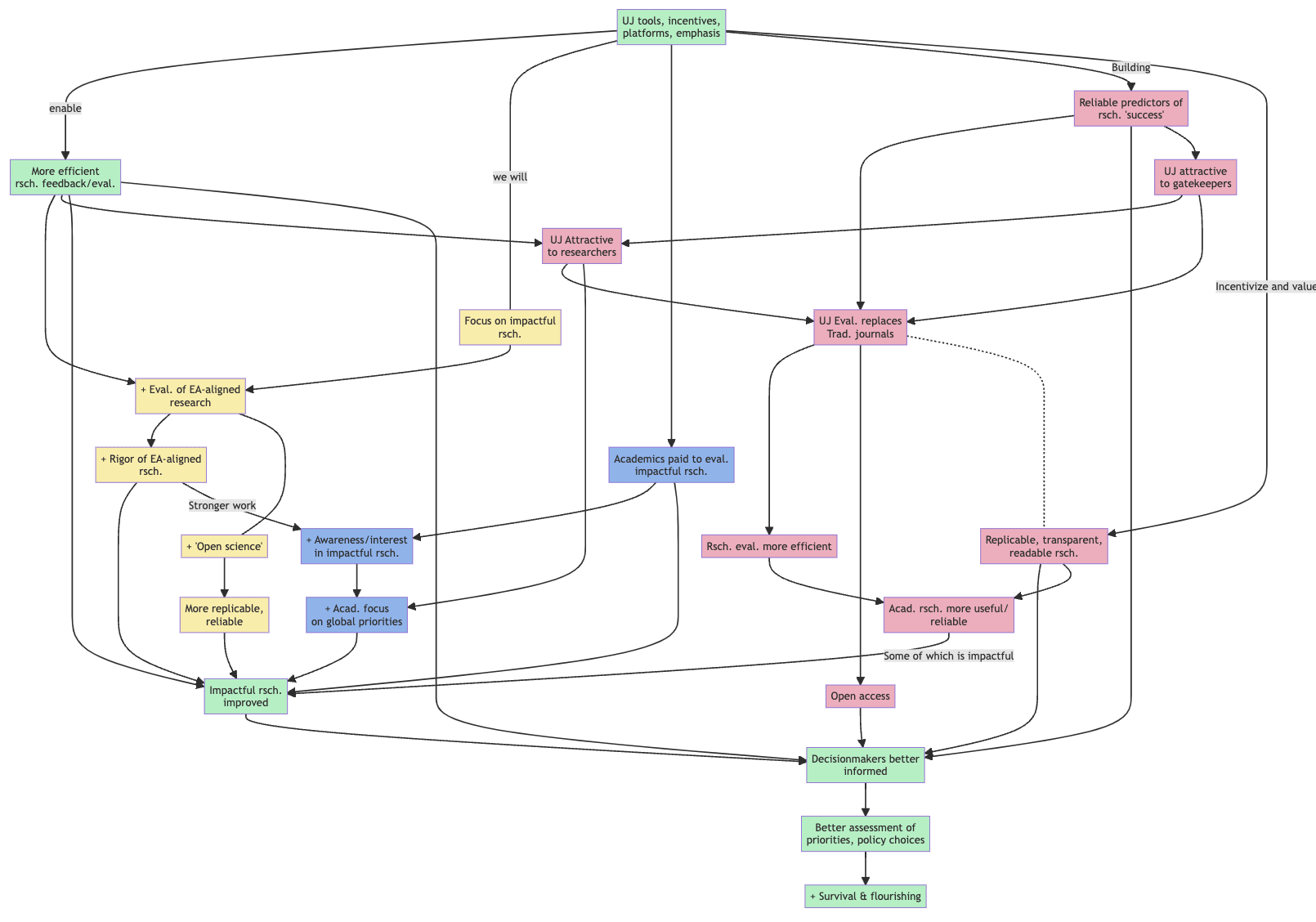

Background: The Unjournal organizes and funds public journal-independent feedback, rating, and evaluation of hosted papers and dynamically-presented research projects. We will focus on work that is highly relevant to global priorities (especially in economics, social science, and impact evaluation). We will encourage better research by making it easier for researchers to get feedback and credible ratings on their work. We promote transparency and open-science practices. We aim to make rigorous research more impactful, and impactful research more rigorous.

We plan to post our latest updates every 2-4 weeks. I'm linkposting this one because there are some opportunities and calls to action you might want to be aware of.

Note: I initially posted an abbreviated version, but I think it might have been too abbreviated at the cost of readability. Now posting the full content below.

28 July update: some of the links embedded below were incorrect in the initial post. I've tried to fix these; otherwise the 'main linked post' should have correct links.

Funding

The SFF grant is now 'in our account' (all is public and made transparent on our OCF page). This makes it possible for us to:

- Move forward in filling staff and contractor positions (see below)

- Increase evaluator compensation and incentives/rewards (see below)

We are circulating a press release sharing our news and plans.

Timelines and pipelines

Our 'Pilot Phase', involving ten papers and roughly 20 evaluations, is almost complete. We just released the evaluation package for "The Governance Of Non-Profits And Their Social Impact: Evidence From A Randomized Program In Healthcare In DRC". We are now waiting on one last evaluation, followed by author responses and 'publishing' the final two packages at https://unjournal.pubpub.org/. (Remember: we publish the evaluations, responses, and synthesis; we link the research being evaluated.)

We will make decisions and award our Impactful Research Prize (and possible seminars) and evaluator prizes soon after. The winners will be determined by a consensus of our management team and advisory board (potentially consulting external expertise). The choices will be largely driven by the ratings and predictions given by Unjournal evaluators. After we make the choices, we will make our decision process public and transparent.

"What research should we prioritize for evaluation, and why?"

We continue to develop processes and policies around 'which research to prioritize'. For example, we are considering whether we should set targets for different fields, for related outcome "cause categories," and for research sources. This discussion continues among our team and with stakeholders. We intend to open up the discussion further, making it public and bringing in a range of voices. The objective is to develop a framework and a systematic process to make these decisions. See our expanding notes and discussion on "What is global-priorities relevant research?"

In the meantime, we are moving forward with our post-pilot “pipeline” of research evaluation. Our management team is considering recent prominent and influential working papers from the National Bureau of Economic Research (NBER) and beyond, and we continue to solicit submissions, suggestions, and feedback. We are also reaching out to users of this research (such as NGOs, charity evaluators, and applied research think tanks), asking them to identify research they particularly rely on and are curious about. If you want to join this conversation, we welcome your input.

(Paid) Research opportunity: to help us do this

We are also considering hiring a small number of researchers to each do a one-off (~16 hours) “research scoping for evaluation management” project. The project is sketched here; essentially, summarize a research theme and its relevance, identify potentially-high-value papers in this area, choose one paper, and curate it for potential Unjournal evaluation.

We see a lot of value in this task, and we expect to actually use and credit this work.

See Unjournal - standalone work task: Research scoping for evaluation management. If you are interested in applying to do this paid project, please let us know through our CtA survey form here.

Call for "Field Specialists"

Of course, we can't commission the evaluation of every piece of research under the sun (at least not until we get the next grant :) ). Thus, within each area, we need to find the right people to monitor and select the strongest work with the greatest potential for impact, and where Unjournal evaluations can add the most value.

This is a big task and there is a lot of ground to cover. To divide and conquer, we’re partitioning this space (looking at natural divisions between fields, outcomes/causes, and research sources) amongst our management team as well as what we now call...

"Field Specialists" (FS), who will

- focus on a particular area of research, policy, or impactful outcome;

- keep track of new or under-considered research with potential for impact;

- explain and assess the extent to which The Unjournal can add value by commissioning this research to be evaluated;

- “curate” these research objects: adding them to our database, considering what sorts of evaluators might be needed, and what the evaluators might want to focus on; and

- potentially serve as an evaluation manager for this same work.

Field specialists will usually also be members of our Advisory Board, and we are encouraging expressions of interest for both together. (However, these don’t need to be linked in every case.)

Interested in a field specialist role or other involvement in this process? Please fill out this general involvement form (about 3–5 minutes).

Setting priorities for evaluators

We are also considering how to set priorities for our evaluators. Should they prioritize:

- Giving feedback to authors?

- Helping policymakers assess and use the work?

- Providing a 'career-relevant benchmark' to improve research processes?

We discuss this topic here, considering how each choice relates to our Theory of Change.

Increase in evaluator compensation, incentives/rewards

We want to attract the strongest researchers to evaluate work for The Unjournal, and we want to encourage them to do careful, in-depth, useful work. We've increased the base compensation for (on-time, complete) evaluations to $400, and we are setting aside $150 per evaluation for incentives, rewards, and prizes. Details on this to come.

Please consider signing up for our evaluator pool (fill out the good old form).

Adjacent initiatives and 'mapping this space'

As part of The Unjournal’s general approach, we keep track of (and keep in contact with) other initiatives in open science, open access, robustness and transparency, and encouraging impactful research. We want to be coordinated. We want to partner with other initiatives and tools where there is overlap, and clearly explain where (and why) we differ from other efforts. This Airtable view gives a preliminary breakdown of similar and partially-overlapping initiatives, and tries to catalog the similarities and differences to give a picture of who is doing what, and in what fields.

Also to report

New Advisory Board members

Gary Charness, Professor of Economics, UC Santa Barbara

Nicolas Treich, Associate Researcher, INRAE, Member, Toulouse School of Economics (animal welfare agenda)

Anca Hanea, Associate Professor, expert judgment, biosciences, applied probability, uncertainty quantification

Jordan Dworkin, Program Lead, Impetus Institute for Meta-science

Michael Wiebe, Data Scientist, Economist Consultant; PhD University of British Columbia (Economics)

Tech and platforms

We're working with PubPub to improve our process and interfaces. We plan to take on a KFG membership to help us work with them closely as they build their platform to be more attractive and useful for The Unjournal and other users.

Our hiring, contracting, and expansion continues

Our next hiring focus: Communications. We are looking for a strong writer who is comfortable communicating with academics and researchers (particularly in economics, social science, and policy), journalists, policymakers, and philanthropists. Project-based.

We've chosen (and are in the process of contracting) a strong quantitative meta-scientist and open science advocate for the project: “Aggregation of expert opinion, forecasting, incentives, meta-science”. (Announcement coming soon.)

We are also expanding our Management Committee and Advisory Board; see calls to action.

Potentially-relevant events in the outside world

Institute for Replication grant