Acknowledgements

I’d like to thank the following for giving feedback on this project: Jakob Graabak, John Walker, Michael Noetel and Charlie Dougherty.

These people may or may not agree with all the viewpoints in this article.

I especially want to thank Michael Noetel and John Walker for letting me use their work on EA MOOCs as inspiration. Some of the ideas in this article originate from their work.

Introduction

This article is a submission to the “EA Criticism and Red Teaming contest” and critically reviews web-based introductory EA courses to provide constructive feedback. This project roughly imitates a red team assessment, so I want to emphasize that the critiques are intentionally rigorous and aim to identify any possibilities for improvement.

The key idea behind this project is that the EA community’s uncommon and unique knowledge separates EA from other efforts to do good and (hopefully) enables us to do good better. Because of this, optimizing how this knowledge is conveyed to others could be an important area.

Evidence-based instructional design can improve learning effectiveness, memory retention, user satisfaction, user engagement and completion rates of courses. The empirical evidence behind this is referenced below. For brevity, I refer to all of these factors under the umbrella term “course quality”.

Please note that this review does not include in-person fellowships but focuses only on web-based courses. Lastly, this review only includes general introductions to EA, not advanced courses with specialized content.

My definition of a “web-based introductory EA course” is an openly accessible web-based course for structured learning of core EA concepts and topics without requiring prior knowledge. Only courses that roughly fit this definition were included in the review.

Courses included in the review:

- The Effective Altruism Handbook - EA Forum

- Coursera | Effective Altruism - Princeton University

- The Virtual Introductory EA Program - Centre for Effective Altruism

- YouTube | Introduction to Effective Altruism - Centre for Effective Altruism

- 80,000 Hours Podcast | Effective Altruism: An introduction - 80,000 Hours

- Non-trivial

I hope this review does not come across as overcritical, and I am sorry if it does, as this was obviously not my intention. The rest of this article does not properly give credit to all the great work that went into these courses, so I wanted to express my gratitude for them here. I do believe the reviewed courses are highly valuable.

Summary

The findings from the evaluation indicate that existing courses do not reach their full potential for learning effectiveness, memory retention, user satisfaction, user engagement, or completion rates.

Although it seems tractable to improve some of the existing courses, it may be better to replace them with new EA MOOCs, since new MOOCs could offer many benefits that the existing courses lack. One proposed solution (and ongoing project) is to create MOOCs on well-established MOOC platforms such as Coursera, edX and Udacity, as this may be an excellent opportunity for EA outreach and for spreading knowledge of EA-related concepts and topics.

Another promising solution could be to create an EA MOOC platform owned by an EA-aligned organization. Such a platform could enable total design freedom, content freedom and continuous improvement of the platform using new findings from empirical research and user feedback, in addition to providing numerous other beneficial features.

I also think it would be helpful to further research how EA courses produce value and how to design them to optimize for this, for example by analyzing which course features are the most important and which user metrics to track. Lastly, I think one of the most critical research questions to explore is: to what degree does course quality affect the chance of attendees eventually becoming highly-engaged effective altruists (HEAs), and which course factors affect this the most?

Context

The EA movement is growing, and activity on the EA Forum doubled between 2020 and 2021 (1, 2, 3, 4). Additionally, the MOOC market is growing rapidly, with attendee counts increasing from 35 million in 2015 to 220 million in 2021, including growth of 40 million between 2020 and 2021 (source). Lastly, global internet access grew from 40% in 2015 to 60% in 2020, with an increase of 6% between 2019 and 2020 (source).

As EA gets more attention and global internet access increases, the number of expected and potential enrollments in web-based introductory EA courses may increase. With higher numbers of enrollments, the quality of these courses becomes increasingly important. This may especially apply to EA courses on MOOC platforms as the MOOC market grows.

The MOOC market is also getting saturated with courses that cover EA-related topics but are not aligned with EA principles. A hypothetical example is an AI course focusing on autonomous weapons and the dangers of self-driving cars without mentioning global catastrophic risks (GCRs) from AI. Such a course could draw attention away from courses on GCRs from AI and make it harder for new EA-aligned AI courses to enter the market and gain traction.

Even though there is some debate about the value of EA movement growth, most of the EA community seems to be in favor of extra growth (source). The 2019 EA Survey found that movement building is the second most prevalent cause area to work on for highly-engaged effective altruists (HEAs; source). Additionally, many large EA-aligned organizations are funding projects related to EA community building and growth (1, 2, 3, 4).

Additionally, I believe most agree that the EA movement is currently more skill-constrained than funding-constrained and that this will persist for several years (1, 2, 3, 4). Because of this, human capital relevant for high-impact work is in great demand.

Web-based EA courses may be a highly cost-effective way to grow the EA community and generate human capital (in the form of skills and knowledge) relevant for high-impact work. This is mainly due to the great potential for scalability, accessibility and learning effectiveness that web-based courses may provide.

Below is a brief overview of how I believe high-quality introductory EA courses may produce value. I also think they provide several other benefits not mentioned here.

(1) Growing the EA community

- By inducing potential HEAs through courses that facilitate and inspire further engagement with EA after completion

- By providing a highly scalable alternative to in-person fellowships for EA groups who can’t organize in-person fellowships for all their members

- By giving satisfied course attendees a concrete recommendation they may share with others

- By massively reaching out to people via EA courses created at well-established educational institutions or MOOC platforms

(2) Reducing skill-constraints in the EA community

- By (1) growing the EA community

- By effectively teaching EA-relevant knowledge and skills

Evidence-based course evaluation framework

This section explains the framework used for evaluating web-based courses. The goal of this framework is to evaluate key factors of a course that contribute to learning effectiveness, memory retention, user satisfaction, user engagement, user motivation or completion rates. The framework evaluates 21 different course factors using evidence-based rating criteria.

Only criteria that were reasonably possible to rate objectively were included. For example, this framework does not rate visual design or subjective user experience, even though these factors may also contribute to the success of a course. The selected criteria are based on a wide range of studies on instructional design, primarily literature reviews and meta-analyses.

Further research is needed before drawing any conclusions about what an ideal course looks like. The final ratings from this framework should only be considered roughly indicative of the courses’ quality, so please take the results with a grain of salt. I am sure the framework can be expanded and improved, e.g. by taking more inspiration from the Inspire Toolkit.

Definitions:

- Content section: a single video, audio recording or text that teaches parts of the curriculum, with a clearly defined beginning and end.

- Module: a collection of content sections.

- Learning activity: a quiz, assignment, peer feedback, discussion or other activity that requires engagement by the user.

Rating framework

| Factor | Rating criteria (0-1) |
|---|---|
| Proportion of video content (PVC) | 1: each module has at least one video-based content section. 0.5: the course has at least one video-based content section. |
| Segmented learning (SL) | 1: average length of uninterrupted passive viewing (e.g., video without interactivity; reading) is < 10 minutes. 0.5: average length of uninterrupted passive viewing is < 20 minutes. |
| Retrieval practice (RP) | 1: provides retrieval practice in the form of quizzes, text answers or other tasks for each module. 0.5: provides at least one retrieval practice task throughout the course. |
| Automated elaborate formative feedback (AEF) | 1: provides learning activities with elaborate formative feedback that explains the correctness of answers. 0.5: provides learning activities with feedback on whether or not answers are correct but without explanations. |
| Simulated learning tasks (SLT) | 1: students are enabled to apply their theoretical knowledge and practice skills in a controlled environment with automated feedback about how they are doing, e.g. coding challenges in a coding editor. 0.5: same as above but without automated feedback. |
| Automated content and process hints (AH) | 1: provides one of the following during or after all learning activities: 0.5: provides one of the above at least once throughout the course. |
| Discussion prompts (DP) | 1: provides structured and guided online discussions among students by providing prompts, questions and dilemmas. Stimulates students to discuss, reply and share their ideas. Informs them about some ground rules such as how long their comments should be and how to reply to peers. 0.5: provides functionality for online discussions among students but without any guidance. |
| Teacher feedback (TF) | 1: provides live hangout sessions or pre-recorded ‘office hour’ videos in which the teacher answers frequently asked questions or comments on commonly made mistakes. |
| Student collaboration (SC) | 1: provides opportunities for collaborating with other students on learning activities. |
| Gamification (GAM) | 1: at least three of the following are present: 0.5: at least one of the above is present. |
| Introductory summaries (IS) | 1: has short introductory summaries (in any format) at the start of each module that summarize the highlights of the previous module and inform about the topic and goals of the current module. 0.5: has short introductory summaries at the start of each module that either summarize the highlights of the previous module or inform about the topic and goals of the current module. |
| User feedback collection (UFC) | 1: prompts users to answer a feedback survey throughout the course. |
| Accreditation (ACR) | 1: each module has content sections that are accredited by an educational institution or professor. 0.5: at least one of the modules has content that is accredited by an educational institution or professor. |
| Certificate (CRT) | 1: provides a certificate at the end of the course. |
| Displayed course workload (DCW) | 1: displays the expected workload of the entire course and of each content section at the start of the course. 0.5: displays the expected workload of the entire course or of each content section at the start of the course. |
| Visualizations and diagrams (VD) | 1: each content section (e.g. video or article) has visualizations or diagrams (i.e. multimedia content). 0.5: each module has at least one content section with multimedia content. |
| Quickly scannable content (QSC) | 1: the curriculum is divided into small sections that are quickly scannable by users before beginning the course. 0.5: the curriculum is divided into small sections but is not quickly scannable. |
| Clear schedule (CS) | 1: all modules have a clear recommended schedule to follow. |
| Clearly defined goals (CDG) | 1: each module has clearly defined learning goals. 0.5: the course as a whole has clearly defined learning goals, but each module does not. |
| Equally distributed content (EDC) | 1: the content is divided into modules with roughly equal estimated workloads. |
| Additional bibliography (AB) | 1: additional instructional material or bibliography is provided for each module. 0.5: additional instructional material or bibliography is provided at least once throughout the course. |
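Several of the criteria above are simple thresholds, so they can be applied mechanically once a course has been described in a structured way. The following is a minimal sketch of that idea for three factors (PVC, SL and RP), not the procedure actually used for the ratings in this article. The `Module` class, its field names and the example course are hypothetical, and returning 0 when neither threshold is met is an assumption implied by the 0-1 scale.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Module:
    """Hypothetical description of one course module (not from the article)."""
    video_sections: int = 0        # number of video-based content sections
    retrieval_tasks: int = 0       # number of quizzes or other retrieval tasks
    passive_minutes: List[float] = field(default_factory=list)  # uninterrupted passive segments


def _all_or_any(flags: List[bool]) -> float:
    """1 if the condition holds for every module, 0.5 if for at least one."""
    if flags and all(flags):
        return 1.0
    if any(flags):
        return 0.5
    return 0.0  # assumption: neither criterion met means a rating of 0


def rate_pvc(modules: List[Module]) -> float:
    """Proportion of video content (PVC)."""
    return _all_or_any([m.video_sections > 0 for m in modules])


def rate_rp(modules: List[Module]) -> float:
    """Retrieval practice (RP)."""
    return _all_or_any([m.retrieval_tasks > 0 for m in modules])


def rate_sl(modules: List[Module]) -> float:
    """Segmented learning (SL): average uninterrupted passive viewing length."""
    segments = [minutes for m in modules for minutes in m.passive_minutes]
    if not segments:
        return 0.0  # assumption: no data means the criterion cannot be confirmed
    average = sum(segments) / len(segments)
    if average < 10:
        return 1.0
    if average < 20:
        return 0.5
    return 0.0


# Hypothetical two-module course used only to exercise the functions.
course = [
    Module(video_sections=1, retrieval_tasks=2, passive_minutes=[8.0, 12.0]),
    Module(video_sections=0, retrieval_tasks=0, passive_minutes=[25.0]),
]
ratings = {"PVC": rate_pvc(course), "SL": rate_sl(course), "RP": rate_rp(course)}
print(ratings)
```

The sketch keeps each factor as a separate tier value and deliberately does not sum them, since the ratings are meant to be read as tiers rather than as a point system.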

Important notes about this evaluation framework:

- The studies selected may suffer from unintentional cherry-picking as I am not an expert on the relevant literature and may have missed key studies.

- Some of the factors are not completely objectively rateable, so I used the best of my judgment.

- It fails to include several factors contributing to course quality and usefulness. For example, the advantage of podcasts being usable without looking at a screen.

- It does not take into account that some of the courses have different target audiences and intended usage scenarios.

- It may seem unsuitable to rate podcast series, YouTube playlists, and online courses using the same criteria, but the reason for doing so is to showcase the downsides and upsides of different platforms.

- The factors are not intended to be interpreted as equally important, and the numerical ratings are just a means of visualization. Please consider the numbers as tiers rather than a point system. Summing the ratings together is not intended.

- It does not rate the success of the course development projects as a whole. Each project may have achieved all its goals regardless of its course’s rating following this framework.

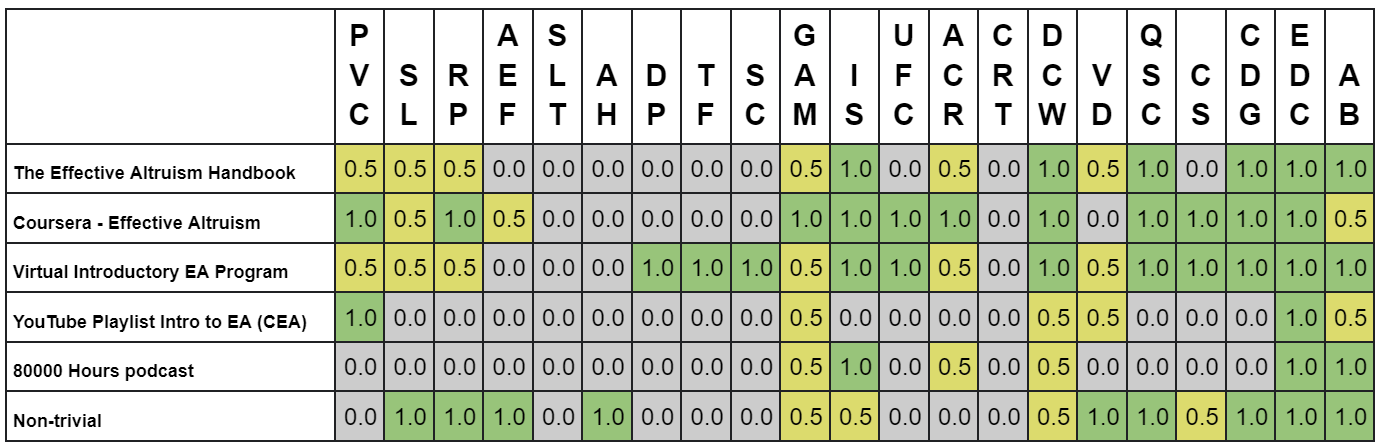

Course rating results

Here are the results from rating each course using the framework above. The ratings were finalized on August 27, 2022, and represent the courses’ states at that date. Please keep in mind that some of these projects are still regularly updated, and they may already have plans to improve some of the reviewed factors.

Final course ratings:

Link to the spreadsheet (for readability)

Abbreviation list:

- Proportion of video content (PVC)

- Segmented learning (SL)

- Retrieval practice (RP)

- Automated elaborate formative feedback (AEF)

- Simulated learning tasks (SLT)

- Automated content and process hints (AH)

- Discussion prompts (DP)

- Teacher feedback (TF)

- Student collaboration (SC)

- Gamification (GAM)

- Introductory summaries (IS)

- User feedback collection (UFC)

- Accreditation (ACR)

- Certificate (CRT)

- Displayed course workload (DCW)

- Visualizations and diagrams (VD)

- Quickly scannable content (QSC)

- Clear schedule (CS)

- Clearly defined goals (CDG)

- Equally distributed content (EDC)

- Additional bibliography (AB)

Discussion

The results from the evaluation show that all reviewed courses fall short in multiple key factors of instructional design. There is a widespread lack of retrieval practice tasks, feedback and multimedia content, which the literature shows are critical factors for improving learning effectiveness, memory retention and user engagement.

Some of the courses also have longer content sections than is optimal, with several texts and videos far surpassing the recommended lengths. None of the courses provide opportunities for attendees to practice their learnt skills in a simulated environment, which is another feature that could positively affect learning outcomes. The gamification implementation in most courses may also be improved to increase user motivation and engagement.

Since every course falls short of several best practices for instructional design, I suspect the courses also have room for improvement in other key course factors not evaluated in this project.

Subjective review

The review below aims to highlight any potential downsides of each course. This review is largely based on my judgment, and many of the potential downsides are not backed up by empirical evidence. I don’t mean that all the potential downsides necessarily have any implications or that they should be fixed. I simply listed everything that came to mind in case it may serve as constructive feedback.

Unlike the evidence-based evaluation, this review does not follow a specific structure, and it also includes critiques of the content topics and my subjective user experience throughout the courses. For brevity, this review primarily focuses on the courses’ negative aspects, even though they all have a wide range of positive aspects not mentioned here. Please keep in mind that this is a red team assessment.

Non-trivial and the YouTube playlist were not included in the subjective review due to time constraints and because I prioritized the courses with the highest enrollment.

The Effective Altruism Handbook - EA Forum

The Effective Altruism Handbook is a primarily text-based course with eight modules hosted on the EA Forum. The course gives users an in-depth introduction to EA, and I estimate a total workload of 12-16 hours, depending on reading speed and whether or not the users complete the assignments. It is a course for self-directed learning, i.e. there is no defined schedule to follow or feedback to the user throughout the course.

List of potential downsides:

- Built on the LessWrong/EA Forum software

  - Limits the design freedom for custom features and content

  - May lead to a high technical debt over time

  - May slow down the development time of new features

  - Hard for new developers to contribute due to a large codebase

  - The course content is hosted on forum posts, which may complicate maintaining the course

- Unnecessary distracting elements

  - Comments, profile name, karma score, voting buttons and forum topics

  - Other forum posts and forum functionality on each forum post

- It provides too many options to users throughout the course

  - Too much optional reading material and other external links may distract users from the main focus of introductory EA courses, namely core EA concepts and topics

  - Constantly deciding what to read throughout the course takes extra time and may cause decision fatigue

  - Newcomers usually don’t know what content is the most important to learn

- The content is not intentionally created to be part of an introductory EA course

  - The content is a collection of forum posts by various authors

  - Most of the forum posts were originally created for other purposes

  - This makes the writing style less cohesive throughout the course

- Poor navigation

  - Impractical to navigate between modules and sections

  - May be especially confusing for newcomers to the forum

  - It lacks a navigation menu that is visible at all times, showing all course modules

  - The navigation buttons are a bit hard to identify

- Suboptimal progress visualization

  - It only tracks visited pages but doesn’t check if the section has actually been read or if exercises are completed

  - No progress visualization from within sections

- The long duration of the course may lower completion rates

  - Course length is negatively correlated with completion rates (Jordan, 2014; Jordan, 2015)

- Poor SEO for its target audience

  - It does not show up on Google Search’s first page when searching “introduction to effective altruism”, “learn effective altruism”, or “effective altruism course”

- Some of the forum posts are linkposts to external sites

  - Worse user experience than integrating the article into the course site

- The displayed workload estimations are incorrect

  - The calculations do not include assignments or the word count of linkposts

- Some of the forum posts are several years old

  - This may lower the credibility of the course as it indicates that the information may be outdated
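To illustrate the workload critique in the list above, here is a minimal sketch of how an estimate changes once linkposts and assignments are counted. It is not how the EA Forum computes its displayed estimates. The function name, the reading speed of 250 words per minute and all word counts and assignment times are hypothetical assumptions chosen only for illustration.

```python
from typing import Iterable


def estimate_workload_hours(
    post_word_counts: Iterable[int],
    linkpost_word_counts: Iterable[int] = (),
    assignment_minutes: Iterable[float] = (),
    words_per_minute: float = 250.0,  # assumed reading speed, not from the article
) -> float:
    """Estimate total workload in hours from word counts and assignment time."""
    total_words = sum(post_word_counts) + sum(linkpost_word_counts)
    reading_minutes = total_words / words_per_minute
    return (reading_minutes + sum(assignment_minutes)) / 60


# Hypothetical module: two forum posts, one linkpost and one 30-minute exercise.
posts = [3000, 4500]
linkposts = [6000]
assignments = [30.0]

posts_only = estimate_workload_hours(posts)
full = estimate_workload_hours(posts, linkposts, assignments)
print(f"posts only: {posts_only:.1f} h, with linkposts and assignments: {full:.1f} h")
```

With these made-up numbers, the estimate that ignores linkposts and assignments is well under half of the fuller estimate, which is the kind of gap the critique points to.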

Final comments:

The Effective Altruism Handbook is an excellent collection of EA-relevant resources and thoroughly introduces many core EA concepts and topics. However, I think it falls short regarding its user experience and learning effectiveness. Because of the UX issues, the lack of automated feedback, the high proportion of text content, and the high total workload of the course, I also believe this course has a fairly low completion rate. Lastly, I think using the EA Forum as the course platform needs reconsideration, as moving the course to another platform could provide many benefits.

Effective Altruism | Coursera - Princeton University

The Coursera Effective Altruism course is a 6-week course with weekly quizzes and a final writing assignment (with no grading), with an estimated course workload of 10-15 hours. The course heavily focuses on ethics and effective giving with little focus on other EA-related concepts and topics. 49,800 people had enrolled in the course as of August 28, 2022, possibly making it the introductory EA course with the highest total enrollment. Despite being hosted on Coursera, it does not grant certificates upon completion.

List of potential downsides:

- Lacks several core EA concepts and topics

  - Cause prioritization

  - Longtermism

  - Global catastrophic risks

  - Animal welfare

  - Direct impact careers

- It misrepresents the current state of EA

  - Due to the high focus on effective giving and lack of other core EA concepts and topics, this course does not accurately represent the current direction of the EA movement

- Highly focused on earning to give

  - Earning to give is demanding and can be off-putting for many newcomers

- Platform constraints

  - I assume Coursera complicates the process of editing content and hence increases technical debt

  - No design freedom for custom software features

  - Other Coursera courses may distract users from this course

  - No possibilities for custom data collection functionality

- Outdated content

  - May lower the credibility of the course

  - The most cost-effective charities are constantly changing, and GiveWell has now changed its criteria for evaluating charities (source)

  - Some of the data may be outdated

- Many of the “lectures” are simply recorded conversations

  - Less engaging than lectures with presentations and visualizations

  - Probably not what users expect from a Coursera course

- Requires user registration and login

  - May be a barrier to entry for some people and increase bounce rates

- No calls to action at the end of the course

  - No end-of-course survey for user feedback

  - No suggested further actions to engage with EA

Final comments:

The Coursera Effective Altruism course is hosted on a well-established, high-quality platform and fulfills many of the criteria in the rating framework. The course also differs from the other reviewed courses by offering plenty of retrieval practice tasks. Despite all this, I consider the outdated content and the absence of several core EA concepts and topics to be significant issues with this course, possibly outweighing all the benefits.

Virtual Introductory EA Program - CEA

The Virtual Introductory EA Program is an 8-week course with weekly assignments and discussion meetings following a clearly defined schedule. This course uses The Effective Altruism Handbook as preparation material for the discussion meetings, so all of the critiques in that course’s review also apply to this course. The total workload of the course is roughly 24 hours but likely varies greatly from person to person.

List of potential downsides:

- Requires one facilitator per four to six attendees

  - High marginal overhead costs

  - May become facilitator constrained

- Meetings are constrained to a schedule

  - May reduce attendance due to colliding events

  - May cause stress due to deadlines

- Unsuitable for certain groups of people

  - Socially anxious people

  - People with hearing disorders or deafness

  - People with speech disorders

  - People with difficulties speaking English

  - People without web cameras or microphones

  - People with inadequate network connection

- Effortful for attendees

  - Preparing for meetings

  - Staying engaged during discussions

  - Keeping track of scheduled meetings

  - Discussions can be more mentally draining than alternatives such as reading, watching or listening

- Attendee attrition affects other attendees

  - Lowers the variety of viewpoints in discussions

  - May demotivate others to continue the program

  - May cause other attendees to feel abandoned or rejected

  - Attrition rate of 34-61% depending on calculation method (n = 1284), numbers provided by CEA

- Requires signing up through a form

  - May deter people from joining

  - Users cannot start the course immediately

- Fixed progress rate

  - Weekly meetings for eight weeks

  - May slow down people who would have progressed faster alone

  - May be exhausting for people who prefer progressing at a slower pace

- Inconsistent discussion quality

  - The quality of discussions is highly dependent on the course facilitator and the people in the discussion group

Final comments:

The Virtual Program has the highest marginal cost per attendee of all the reviewed courses, but this cost may be worth it due to the extra value that the discussions provide. The opportunity cost of using experienced EAs as facilitators also needs consideration, as experienced EAs could have other higher-impact work to spend their time on.

Unlike other courses, Virtual Programs are a bit inconsistent and pose several smaller risks. One risk is negative experiences from discussions, such as boring discussions, disagreements, social awkwardness, heated arguments or attendee attrition. Technical difficulties may also occur during video calls, worsening the experience for everyone involved. Other courses don’t seem to have similar risks and seem like more reliable options for providing most attendees with a positive experience throughout the course.

Lastly, the Virtual Program has a much narrower audience than most other web-based courses, as it is unsuitable for several groups of people. I still believe the Virtual Program is the best existing option for most people.

Effective Altruism: An introduction - 80,000 Hours Podcast

The 80,000 Hours Introduction to EA is a podcast series of 10 episodes which aims to quickly introduce listeners to effective altruism. It has a total listening time of roughly 24 hours at normal speed.

List of potential downsides:

- Hard to navigate to specific subtopics of an episode

  - The episodes don’t have tables of contents with timestamps

- Platform constraints

  - No interactive elements to engage users

  - No feedback throughout the course

- The episodes are seemingly not created to function as an introductory series

  - The series seems like a collection of standalone episodes

  - It means the content is likely not optimized to serve as an introduction to EA

  - It makes the series feel less cohesive

- Poor adaptability

  - Hard to edit podcast recordings

  - Entire episodes must be replaced to make edits to the content

  - Most or all episodes will get outdated and eventually need replacements

- Podcasts have suboptimal learning effectiveness

  - Multimedia content has better learning outcomes (Adesope and Nesbit, 2012)

  - Easy to lose attention or get distracted while listening

  - Less practical to rewind recordings than to re-read sentences

- May be too focused on guest speakers

  - I believe introductory EA courses should only contain absolutely essential information to make the courses as concise and short as possible

- Podcasts often do a bad job of emphasizing important information

  - It’s hard for listeners to tell what parts of the conversations are the most important, and core EA ideas are sometimes subtly mentioned in conversation

  - The conversations sometimes digress from the core EA ideas

- Some podcasts are blocked/unavailable in China and other countries

  - Although I am unsure if this is the case with this podcast

Final comments:

The 80,000 Hours podcast series offers a practical and low-effort way of getting introduced to effective altruism and may be the best option for a few niche audiences, e.g. people with busy schedules who listen to podcasts while commuting, or people with visual impairments. However, due to the suboptimal learning effectiveness of podcasts and this course’s poor ratings in the evidence-based evaluation, I don’t believe it is the best option for most people.

Project proposals

This section lists some project proposals which I believe could be valuable. I only briefly describe these project proposals here, but I am happy to discuss them further. Please reach out to me for more details.

- Research surrounding the area of EA courses

  - Research into the scale/importance of EA courses, e.g. total attendee count, completion rates and to what degree introductory course quality affects the chance of attendees eventually becoming HEAs

  - Research into the value of optimizing user experience, learning effectiveness, and other course features, e.g. to what degree completion rates can be improved by applying evidence-based best practices

  - Research into what course factors and metrics should be prioritized when creating courses, e.g. examining the tradeoff between content depth and completion rates, as longer courses have lower completion rates (Jordan, 2014)

- Improving and expanding the rating framework used in this project, for later using it as a template when creating new EA courses

- Surveying experts in the EA community about introductory EA course content

  - What EA concepts have they used the most themselves and have been the most valuable for them?

  - What EA-related concepts are the most important to include in introductory EA courses?

  - Do current courses have any content that seems non-essential?

  - In what order should different EA concepts and topics be introduced?

  - Asking for concrete recommendations for content to include in courses

- Doing a thorough evaluation of the UX of existing courses

  - This review mainly reviewed the instructional design of courses, but I also believe some of the existing courses may have room for improvement when it comes to their UX

  - Evaluating if the courses follow best practices for UX research, e.g. their automated data/feedback collection systems and whether they track all core KPI metrics (Zhao and Jibing, 2022)

- Creating concise multimedia video lectures on core EA concepts

  - Multimedia content has better learning outcomes (Adesope and Nesbit, 2012)

  - These videos may be reusable in many courses and scenarios

  - These videos should be accredited by a well-established university or professor (Goopio and Cheung, 2020)

  - The videos should not be longer than necessary (Noetel, Griffith, Delaney, et al., 2021; Brame, 2017)

- Developing new EA courses on well-established MOOC platforms

  - A course created on Coursera can act as a more up-to-date alternative to the Coursera EA course by Princeton University, with content that more accurately represents the current state of effective altruism

  - A great opportunity for outreach due to the high activity on these platforms

  - MOOC platforms often have the possibility of providing certificates

  - Many MOOC platforms already follow many evidence-based best practices for instructional design

- Improving the Effective Altruism Handbook

  - Possibly migrate it away from the EA Forum software

  - More multimedia content

  - More concise sections

  - Fewer linkposts to external sites, more integrated texts

  - Adding retrieval practice tasks with automated elaborate feedback

  - Adding an end-of-course user survey

  - Improving navigation by adding a sidebar and more options for navigating between sections

  - Fixing incorrect workload estimations

  - Possibly adding more gamification elements

  - Removing non-essential links to other sites

  - Hiding unnecessary distracting forum functionality

- A new EA MOOC platform

  - Owned entirely by an EA-aligned organization

  - Full design freedom for custom content and features

  - A self-made MOOC platform is presumably the only way to achieve a perfect score on all factors of the evaluation framework above

  - High adaptability to enable quick continuous improvement of the platform and updating courses based on new empirical evidence, user feedback and user data collected from the platform

  - Supports creating EA-relevant courses on any topic and level of expertise

  - Can also be used for hosting fellowship preparation material (and a new version of The Effective Altruism Handbook)

Ongoing relevant projects

Michael Noetel and John Walker are working on a project that aims to collaborate with renowned educational institutions to create an introductory EA MOOC to improve EA outreach. They are interested in scaling up this approach to create MOOCs on a range of EA-relevant topics. John is also interested in eventually expanding from developing MOOCs to matching learners with potential employers and helping learners develop early-stage ideas, through an early-stage startup called Effective Learning.

Non-trivial recently released the first version of its EA learning platform for teenagers; the project is still a work in progress. More details can be found in the EA Forum post announcing its launch.

Open Philanthropy is offering grants for developing new courses on effective altruism and related topics. I also believe the scope of the EA Infrastructure Fund may cover EA courses. FTX Future Fund also funds projects related to EA, which may include EA courses.

CEA was hiring a UI/UX designer (with a deadline of July 18, 2022) to work on the EA Forum, indicating that they may eventually improve the UI and UX of the EA Handbook. Some new features and content were also added to the EA Handbook in July and August 2022.

Khan Academy recently posted (on August 27, 2022) on the EA Forum about collaborating with the EA community to create EA-related courses on their platform.

Digitales Institut recently announced its project plans to create four EA-related courses on AI and emerging technologies.

I am developing a new web app for fellowship preparation material for in-person fellowships at my EA university group. The aim of the project is to make fellowship preparations a more appealing activity for students and reduce attrition from fellowships. The app will be made open-source for use by all EA groups.

I am also exploring the tractability of creating an EA MOOC platform, what features it should include and how it should function to produce the most value. The aim of this platform is to make it easy for the EA community to create openly available EA courses following evidence-based best practices for instructional design. I am very interested in further exploring this idea with others.

This seems really helpful, and I look forward to reviewing your comments when we next decide how to modify/update Animal Advocacy Careers' online course, which may be in a week or so's time.

(A shame we weren't reviewed, as I would have loved to see your ranking + review! But I appreciate that our course is less explicitly/primarily focused on effective altruism.)

I'm glad to hear that! I would have liked to include a wider range of EA-related courses in the initial project, but I am still happy to provide feedback on more courses individually. We could try to find a time to talk sometime soon if you're interested.

It seems like there are quite a few EA courses/programs following a similar structure to the Virtual Programs by CEA, i.e. weekly reading lists + discussion meetings. I think most of these courses would end up with similar results using the evaluation framework in this project. This may also apply to AAC's course, from the looks of it.

I've also been in contact with a few other course designers lately, and I'm very keen on coordinating with more course projects to discuss ideas and avoid redundant work. I think many of these course projects will face similar challenges and decisions going forward, e.g. how to scale with increasing enrollment, and what software systems to use. Maybe we should set up a Slack workspace or another communication platform for course designers, unless one already exists.

Thanks! I'm keen to stay in the loop with any coordination efforts.

Although I'll note that AAC's course structure is quite different from EAVP. It's content + questions/activities, not a reading group with facilitated meetings. (The Slack and surrounding community engagement is essentially an optional, additional support group.) I would hazard a guess that the course would score more highly on your system than most or all of the other reviewed items here, but I haven't gone through the checklist carefully yet.

My apologies, thanks for clarifying! I like the concept of a course with interactive learning activities and optional supplementary discussions. Is the course currently openly accessible to have a look at without signing up and getting accepted? I have a sense that it might increase enrollment rates to let users explore the curriculum in more detail before signing up, either by allowing users to test the course itself or by adding a more detailed curriculum description to the sign-up page.

I also gravitate toward letting users complete the course without signing up, if that's possible on your software system, but still reserving discussions and certificates for attendees who apply and get accepted. A five-minute form seems like quite a big barrier to entry, especially when users don't know exactly what they are signing up for.

You might be right that we lose some people due to the form, but I expect the cost is worth it to us. The info we gather is helpful for other purposes and services we offer.

Regarding more info about the course on the sign-up page: of course there's plenty of info we could add to that page, but I worry about an intimidatingly large wall of text deterring people as well.

Thank you for this! I think your framework for instructional design is likely to be very useful to several projects working to create educational content about EA. I happen to be one of these people, and would love to get in touch. Here is a one-pager about the project I am currently pursuing. I shared your post with others who might find it interesting.

I look forward to seeing what you decide to do next!