(Edited 10/14/2022 to include Lifland review. Edited 7/10/2023 to include Levinstein review.)

Open Philanthropy solicited reviews of my draft report “Is power-seeking AI an existential risk?” (Edit: arXiv version here) from various sources. Where the reviewers allowed us to make their comments public in this format, links to these comments are below, along with some responses from me in blue.

- Leopold Aschenbrenner

- Ben Garfinkel

- Daniel Kokotajlo

- Ben Levinstein

- Eli Lifland

- Neel Nanda

- Nate Soares

- Christian Tarsney

- David Thorstad

- David Wallace

- Anonymous 1 (software engineer at AI research team)

- Anonymous 2 (academic computer scientist)

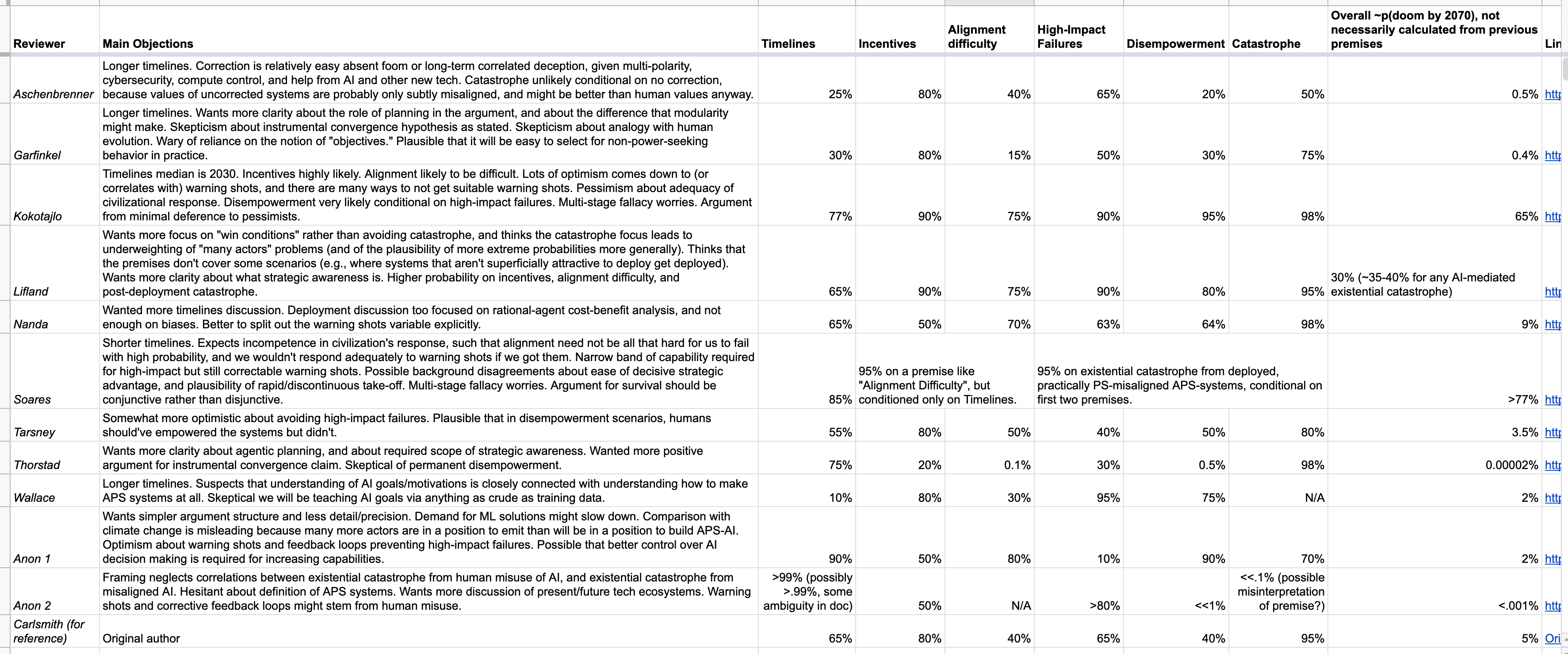

The table below (spreadsheet link here) summarizes each reviewer’s probabilities and key objections.

An academic economist focused on AI also provided a review, but they declined to make it public in this format.

This is really cool. The format makes it easy to see the different conclusions and the cruxes people rely on to reach them. Thanks to you and the reviewers for doing this work and for spending the time to present it in such an easily understandable way!

Very cool!

It would be convenient to have the specific questions that people gave probabilities for listed alongside them (e.g., I think "timelines" refers to the year 2070?).

Seconding this

FYI: Cell A7 in the spreadsheet says "Tarnsey" instead of "Tarsney"