This post is scavenged and adapted from my report on resilience to global cooling catastrophes [summary here].

Summary

- There is significant disagreement about the validity and severity of nuclear winter

- I use results from two papers on either side of the debate to construct a model that incorporates epistemic uncertainty

- I also consider other factors - like counterforce/countervalue targeting - that make severe nuclear winter less likely

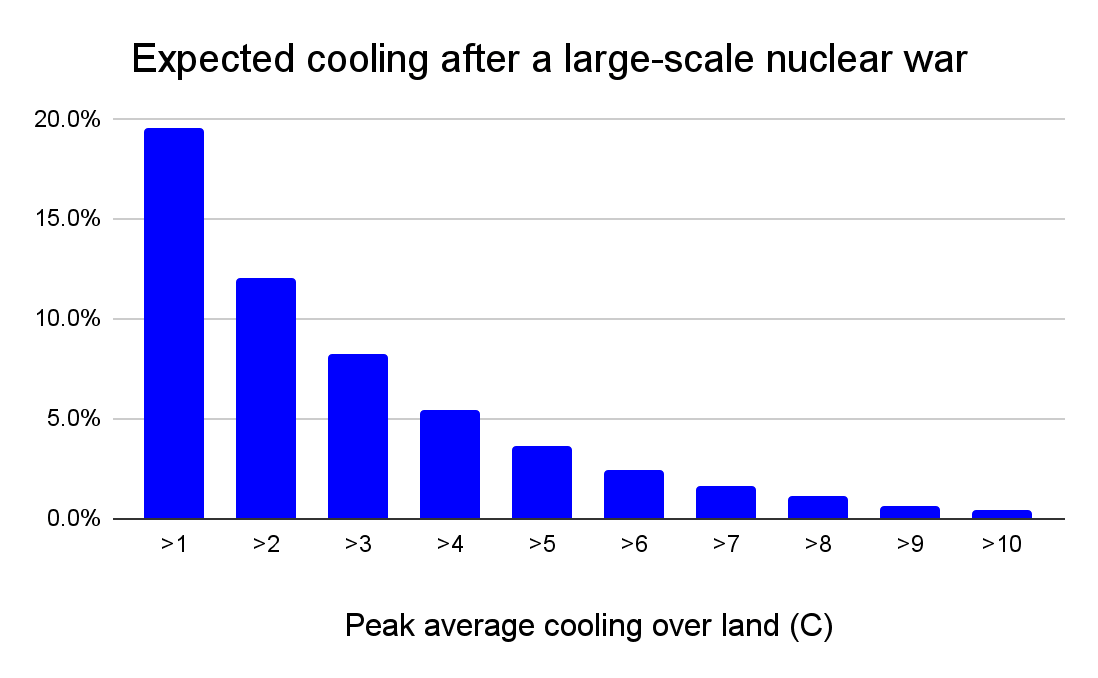

- I find that 20% of large-scale nuclear conflicts cause at least 1°C of cooling over land and 1% lead to at least 4°C of cooling.

- This implies that the cooling effects of a large-scale nuclear war may not outweigh the direct damage of the blasts. The risk of nuclear winter is widely overstated, but it remains one of the top threats to the global food system and should not be ignored.

Context: nuclear winter is contentious

Mike Hinge gives a great overview of nuclear winter here (33 mins). To summarize the field in my own words:

- Nuclear winter is a contested theory that nuclear detonations, especially over cities, would trigger firestorms that loft soot high into the atmosphere. This would block sunlight, creating a years-long cooling effect.

- There are several stages between nuclear detonation and global cooling, each introducing a layer of uncertainty. This makes nuclear winter modeling very sensitive to the assumptions used[1].

- Most nuclear winter research has come from a small number of scientists who believe that their theory is a good deterrent against nuclear conflict[2]. By making pessimistic assumptions at each stage, they may be grossly overestimating nuclear cooling.

Model

Findings at a glance

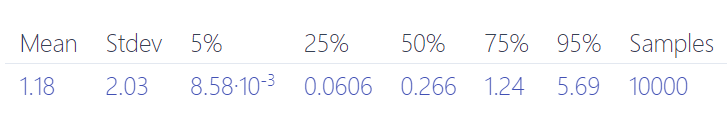

According to the model, around 80% of large-scale nuclear wars do not lead to significant cooling[3].

Cooling severity after a large-scale nuclear conflict.

How bad is 1°C of cooling over land? It is comparable to the cooling caused by the two greatest volcanic cooling events of the past millennium: 1257 (linked with the Little Ice Age) and 1815 (linked with the Year Without a Summer).

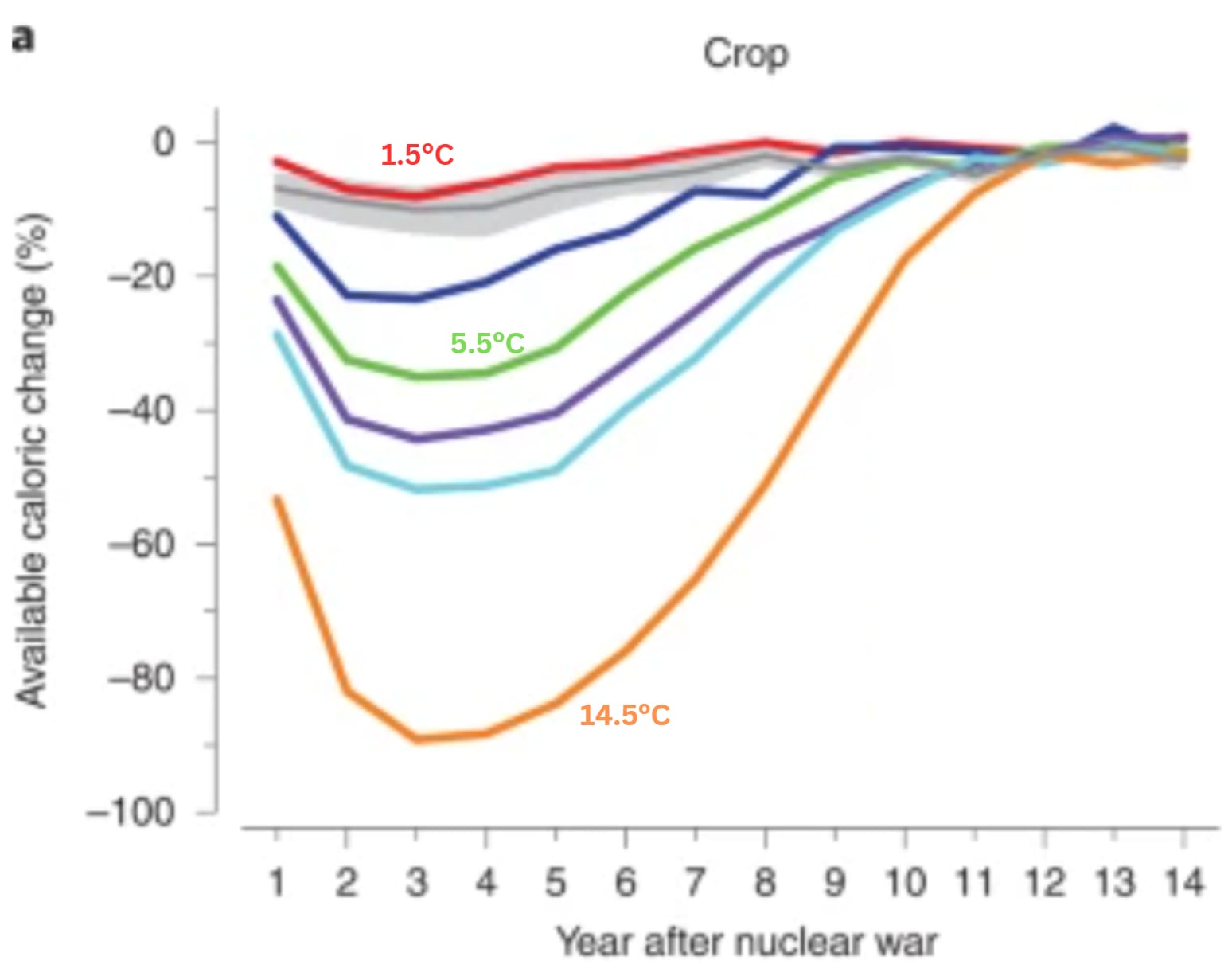

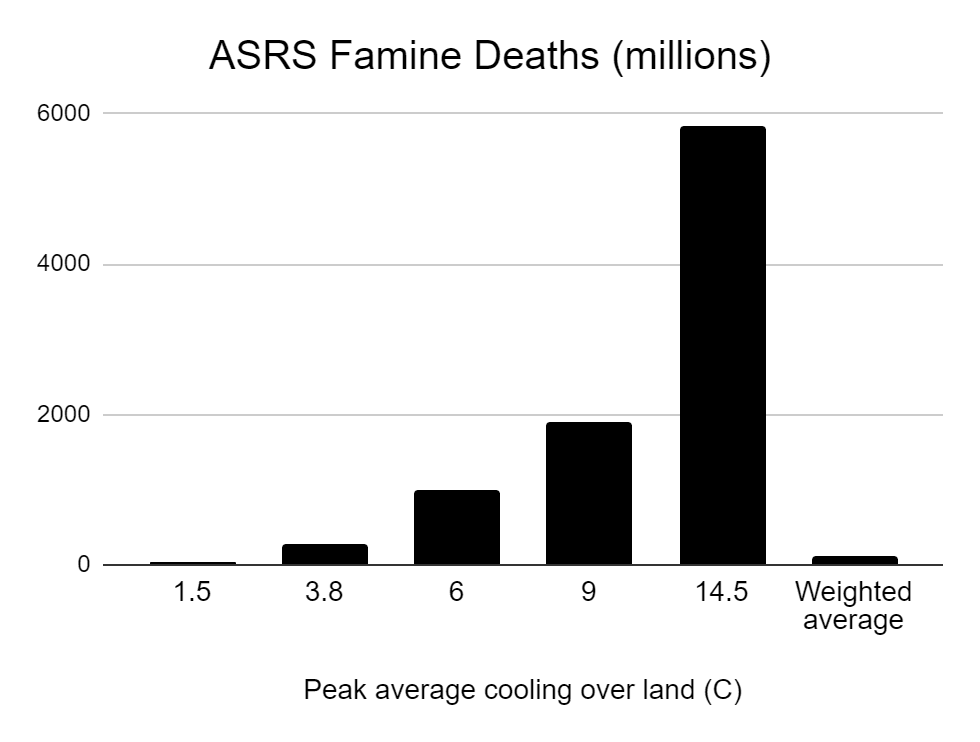

Xia et al. (2022) models the effects of nuclear cooling on agricultural yields (Fig. 2a, shown below). A 1.5°C cooling event would result in a reduction in available calories of almost 10%.

Fig 2a from Xia et al. (2022), edited to show the peak average cooling over land associated with three of the scenarios.

In short, cooling effects of at least 1°C over land would have significant effects on agricultural production. However, given that much of what we grow is wasted or fed to livestock, an effective human response could avert famine in all but the most severe catastrophes.

Skip to Human response & risk of famine

How the model works

The full model is built on Squiggle and can be accessed here.

The key innovation of the model is to incorporate uncertainty in the amount of soot injected into the stratosphere by nuclear detonations over cities (the detonation-soot relationship). Duelling models in Reisner et al. (2019) and Toon et al. (2007) both estimate the soot injection caused by nuclear conflict between India and Pakistan involving 100 small (15kT) nuclear detonations. The papers estimate 0.2Tg and 5Tg of soot, respectively, although Reisner et al. (2019) does not account for the fact that only a fraction of emitted soot reaches the stratosphere, so I further adjust downwards from 0.2Tg to 0.0044Tg.

I use these two estimates - 0.0044Tg and 5Tg - as the 5th and 95th percentiles of a distribution for the detonation-soot relationship[4].

My model for the amount of stratospheric soot produced (in Tg) by a 100-detonation conflict between India and Pakistan.
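The distribution described here can be sketched in a few lines of Python. This is only an illustration and not the Squiggle model itself: it assumes the lognormal is parameterized directly from the two paper estimates as a 90% interval, and that "bounding" means clipping draws to those two values. The names `sample_soot_tg` and `unclipped_quantile` are mine.

```python
import math
import random
from statistics import NormalDist

# 5th and 95th percentiles (Tg of stratospheric soot), taken from the post.
LOW_TG, HIGH_TG = 0.0044, 5.0

# z-score of the 95th percentile of a standard normal (about 1.645).
Z95 = NormalDist().inv_cdf(0.95)

# Lognormal parameters: ln(X) ~ Normal(MU, SIGMA) with the stated 90% interval.
MU = (math.log(LOW_TG) + math.log(HIGH_TG)) / 2
SIGMA = (math.log(HIGH_TG) - math.log(LOW_TG)) / (2 * Z95)


def unclipped_quantile(p: float) -> float:
    """Quantile of the lognormal before any bounding is applied."""
    return math.exp(MU + SIGMA * NormalDist().inv_cdf(p))


def sample_soot_tg(rng: random.Random) -> float:
    """Draw one soot value (Tg); tails are clipped to the two paper estimates.

    ASSUMPTION: clipping is how the tails are replaced with 0.0044 and 5.
    """
    draw = rng.lognormvariate(MU, SIGMA)
    return min(max(draw, LOW_TG), HIGH_TG)


rng = random.Random(0)
draws = [sample_soot_tg(rng) for _ in range(20_000)]
print("share of draws at the upper bound:", sum(d == HIGH_TG for d in draws) / len(draws))
print("unclipped 99th percentile (Tg):", round(unclipped_quantile(0.99), 1))
```

Clipping puts roughly 5% of the probability mass on each endpoint, so the bounded distribution never exceeds the Toon et al. (2007) estimate.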

I make some adjustments for the difference in the amount of soot produced by the larger weapons in US/Russia/China stockpiles, to estimate that the soot produced by a conflict with 100 smaller detonations is equal to the soot produced by 13-30 larger detonations.

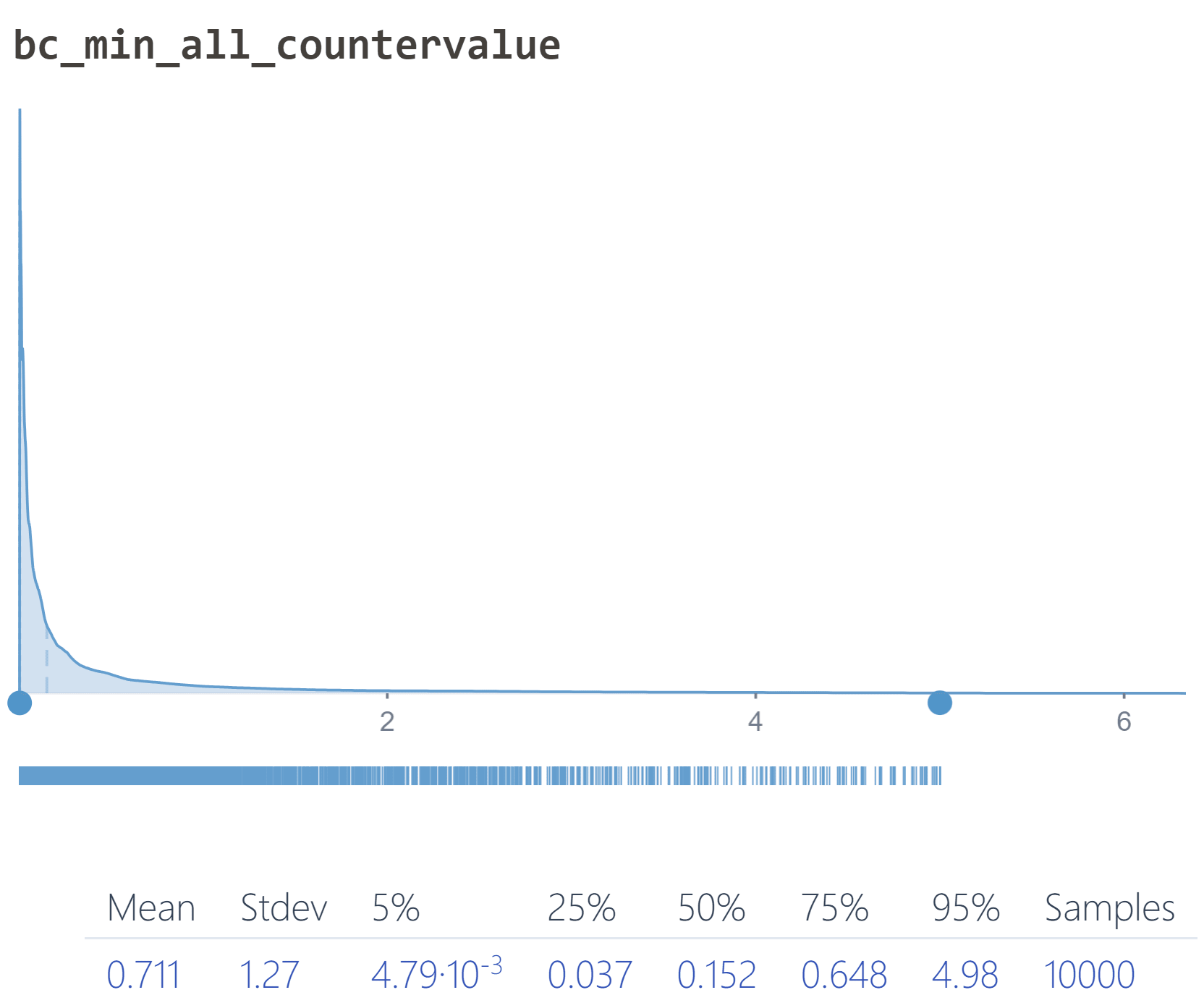

I then discount for the fact that counterforce-targeted detonations (those targeted at military infrastructure) are far less likely to inject soot into the stratosphere, as the requisite firestorm conditions probably only occur in urban areas[5]. I estimate that on average 30% (0.7% to 80%) of detonations in a large-scale nuclear conflict would be countervalue-targeted (urban), with the remainder counterforce.
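As a rough sketch of how this discount could be applied: the 10x factor comes from the footnote to this paragraph, but the simple weighted mix below is my assumption about how the two target types combine, and `targeting_multiplier` is a hypothetical helper name.

```python
# From the post's footnote: counterforce detonations inject 10x less
# stratospheric soot than countervalue (urban) detonations.
COUNTERFORCE_SOOT_RATIO = 0.1


def targeting_multiplier(countervalue_share: float) -> float:
    """Soot per detonation relative to an all-urban (countervalue) exchange.

    ASSUMPTION: soot contributions from the two target types add linearly.
    """
    if not 0.0 <= countervalue_share <= 1.0:
        raise ValueError("countervalue_share must be between 0 and 1")
    counterforce_share = 1.0 - countervalue_share
    return countervalue_share + counterforce_share * COUNTERFORCE_SOOT_RATIO


# The central estimate and the ends of the range quoted in the post.
for share in (0.007, 0.30, 0.80):
    print(f"{share:.1%} countervalue -> multiplier {targeting_multiplier(share):.3f}")
```

Under these assumptions, the central estimate of 30% countervalue targeting scales soot per detonation to 0.37 of the all-urban figure.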

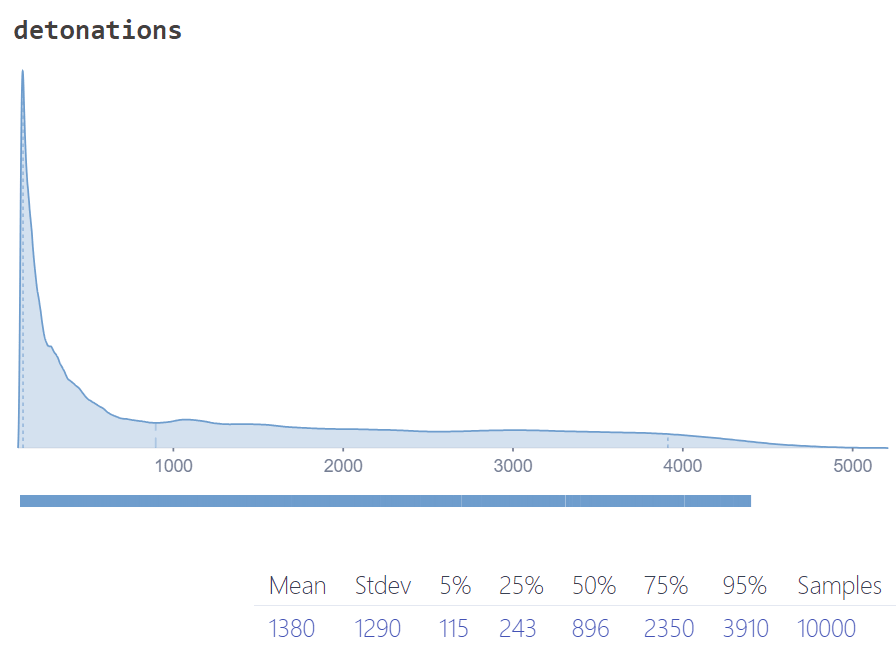

Then I model the number of detonations in a 100+-detonation conflict involving Russia and the US. For this, I draw upon aggregate forecasts and individual estimates to build the following distribution:

By now I have a detonation-soot ratio and a model for the number of detonations. Combining these, I get a distribution of the amount of stratospheric soot expected in a large-scale nuclear war.

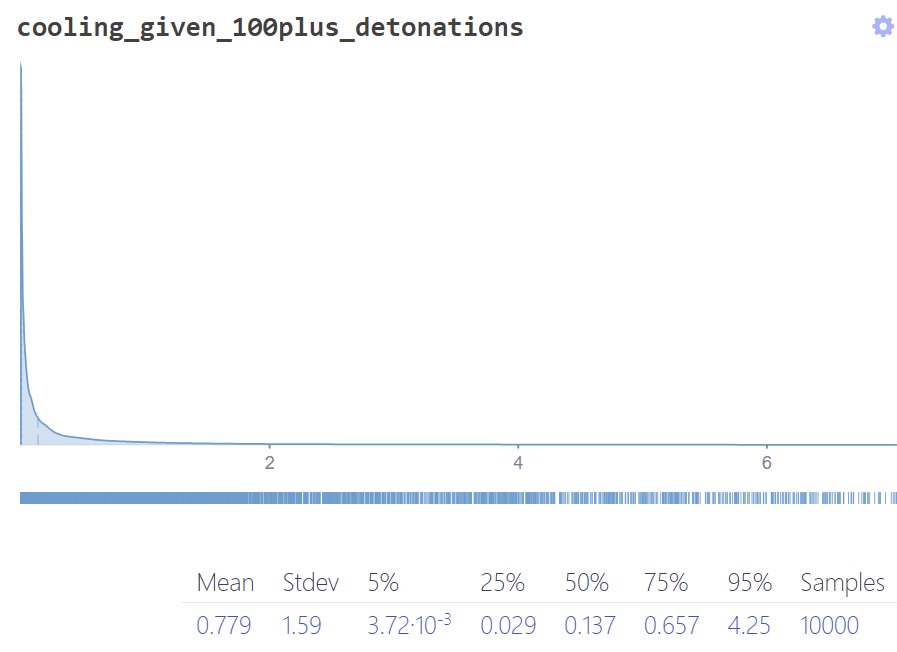
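The combination step can be sketched as a small Monte Carlo simulation. This is a minimal illustration under several assumptions that are not in the post: soot scales linearly with the number of detonations, the 13-30 equivalence is uniformly distributed, the inputs are independent, and the countervalue share is lognormal over the quoted range. The detonation-count distribution in particular is a hypothetical placeholder, since the post presents it only as a chart. The output should not be read as reproducing the model's results.

```python
import math
import random
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)


def lognormal_from_90ci(rng: random.Random, low: float, high: float) -> float:
    """Draw from a lognormal whose 5th/95th percentiles are (low, high)."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z95)
    return rng.lognormvariate(mu, sigma)


def sample_total_soot_tg(rng: random.Random) -> float:
    """One draw of stratospheric soot (Tg) for a 100+-detonation conflict."""
    # Soot from 100 small detonations, clipped to the two paper estimates.
    soot_100_small = min(max(lognormal_from_90ci(rng, 0.0044, 5.0), 0.0044), 5.0)
    # Number of larger detonations matching 100 small ones (13-30 in the post).
    # ASSUMPTION: uniform over that range.
    large_equivalent = rng.uniform(13, 30)
    # HYPOTHETICAL placeholder for the detonation-count distribution.
    detonations = max(100.0, lognormal_from_90ci(rng, 100, 3000))
    # Countervalue share; ASSUMPTION: lognormal over the quoted range, capped at 1.
    cv_share = min(1.0, lognormal_from_90ci(rng, 0.007, 0.80))
    # Counterforce detonations inject 10x less soot (post's footnote).
    targeting = cv_share + (1.0 - cv_share) * 0.1
    # ASSUMPTION: soot scales linearly with the number of detonations.
    return soot_100_small * (detonations / large_equivalent) * targeting


rng = random.Random(42)
samples = sorted(sample_total_soot_tg(rng) for _ in range(20_000))
print("median soot (Tg), illustrative only:", round(samples[len(samples) // 2], 3))
```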

To get a soot-cooling relationship, I reverse-engineer a curve from the findings in Xia et al. (2022). This creates the following distribution for the expected cooling in a 100+-detonation conflict.

I find that in more than 75% of cases there is less than 1°C of cooling over land.

Changing key assumptions

Much of the uncertainty comes from the initial detonation-soot ratio. Expected cooling is modest even when I restrict the model to conflicts of 1000+ detonations:

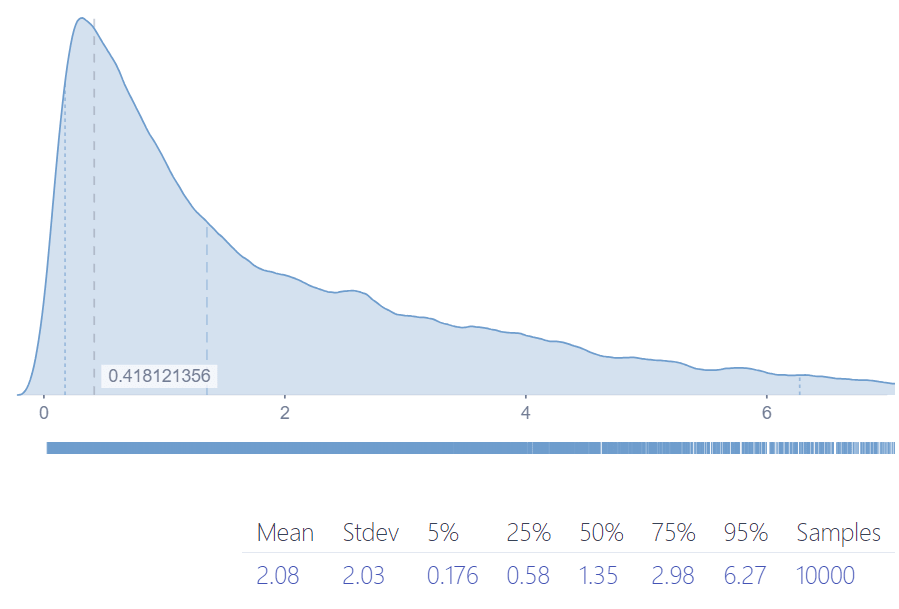

The changes are much more radical if I adopt the alternative assumption that the amount of stratospheric soot in an India/Pakistan conflict is between 0.5Tg and 5Tg (rather than 0.0044Tg and 5Tg). I then get the following cooling distribution for 100+-detonation conflicts:

This supports the conclusion that the most important uncertainty is the soot-lofting capacity of urban firestorms.

Human response & risk of famine

I also attempt to model the cooling-damage curve of global cooling catastrophes. In brief, I assume that if global trade is maintained and there are enough calories to go around, mass famine is avoided. If countries make sensible adaptations to their food systems, more calories are available. The more severe the cooling catastrophe, the less likely it is that global food trade continues.

This is a crude simplification, but I believe it captures important features of global food system resilience:

- With strong international cooperation and adaptation the world can avoid famine in all but the worst catastrophes

- In mild catastrophes the most likely outcome is that mass famine is averted. The expected death toll is driven by the minority of scenarios in which famine is not prevented

- Due to the interdependence of the global food system, a breakdown in international trade is catastrophic no matter the scale of cooling. Food system adaptation is only critically important in regions cut off from trade, or in severe scenarios when global supply is not enough for everyone.
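The logic of these assumptions can be sketched as a simple rule. This is my reading of the description and not the famine mortality model itself: the `Scenario` fields and the `ADAPTATION_BOOST` value are hypothetical, and the probability that trade continues at a given level of cooling is not modeled here.

```python
from dataclasses import dataclass

# HYPOTHETICAL: factor by which sensible adaptation raises available calories.
ADAPTATION_BOOST = 1.2


@dataclass
class Scenario:
    trade_maintained: bool   # does global food trade continue?
    adapted: bool            # do countries adapt their food systems?
    supply_ratio: float      # calories available / calories needed, before adaptation


def mass_famine(s: Scenario) -> bool:
    """Famine is avoided only if trade continues AND calories suffice.

    ASSUMPTION: a breakdown in trade always leads to mass famine, which is
    a simplification of "catastrophic no matter the scale of cooling".
    """
    supply = s.supply_ratio * (ADAPTATION_BOOST if s.adapted else 1.0)
    return not (s.trade_maintained and supply >= 1.0)


# Mild shortfall: adaptation closes the gap as long as trade continues.
print(mass_famine(Scenario(trade_maintained=True, adapted=True, supply_ratio=0.9)))
# Same shortfall without trade: famine regardless of adaptation.
print(mass_famine(Scenario(trade_maintained=False, adapted=True, supply_ratio=0.9)))
```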

The model suggests that the cooling-mortality relationship is super-linear.

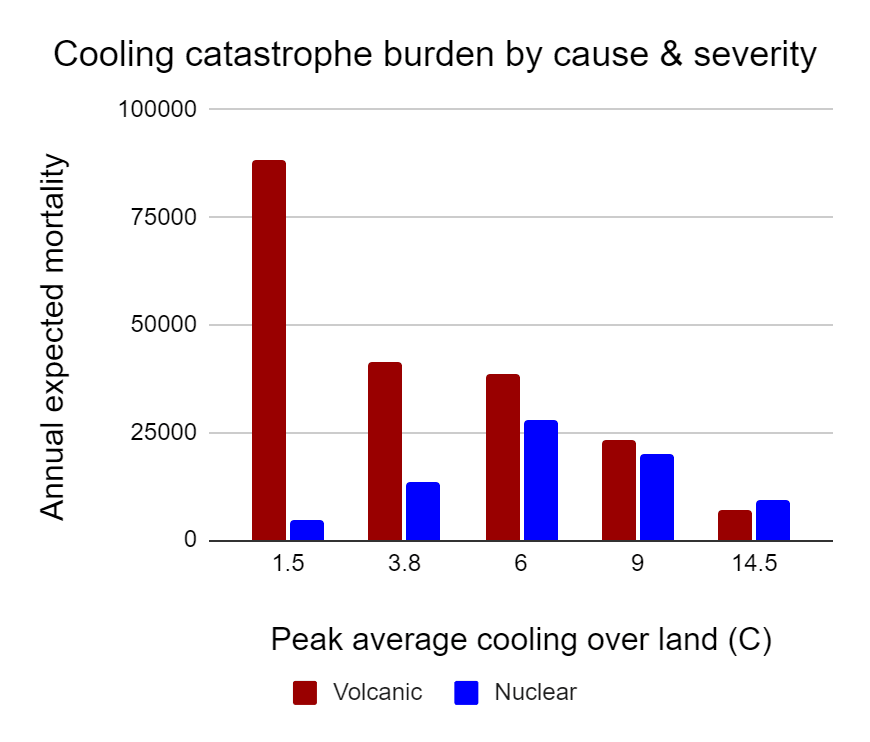

I find that the annualized burden peaks for 'moderate' catastrophes of around 6°C of cooling.

For more details, see the Human Response section of the report and famine mortality model.

Expected burden of nuclear winter

The annualized mortality burden of nuclear winter is estimated at 75,000 deaths.

Contrast this with what proponents of nuclear winter might suggest: a 1% annual risk of a nuclear winter killing billions would have an annualized burden in the tens of millions.
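The comparison is simple expected-value arithmetic, sketched below. The 75,000 figure and the 1% annual risk come from the text; the 2 billion death toll is a hypothetical stand-in for "billions", chosen only to show the order of magnitude.

```python
def annualized_burden(annual_probability: float, deaths_if_it_happens: float) -> float:
    """Expected deaths per year = annual probability x deaths given the event."""
    return annual_probability * deaths_if_it_happens


# HYPOTHETICAL stand-in for "billions": 2 billion deaths.
proponent_view = annualized_burden(0.01, 2e9)
model_estimate = 75_000  # annualized burden reported in the post

print(f"proponent-style burden: {proponent_view:,.0f} deaths per year")
print(f"ratio to the model's estimate: {proponent_view / model_estimate:.0f}x")
```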

Yes, there is huge uncertainty about the severity of nuclear cooling: my 90% CI for the level of cooling in the next large-scale nuclear conflict is 0.004°C to 4°C. But I believe that the apocalyptic projections of 10+°C cooling represent the extreme tail end of what could happen.

- ^

The amount of long-term stratospheric soot depends on (a) the fuel density of the target region, (b) the efficiency of the firestorm at converting fuel to soot, (c) the proportion of soot that reaches the stratosphere, and (d) the amount of soot that is "rained out" in the early weeks. In a simplified model, these inputs are multiplied. So by making each input just 50% more amenable to cooling, I get a final result with 1.5^4 ≈ 5x more stratospheric soot.
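A minimal sketch of this compounding, assuming the four inputs are simply multiplied as in the simplified model described in this footnote (the dictionary keys are my own labels):

```python
import math

# Each input is nudged 50% in the direction that favors cooling.
pessimism_factors = {
    "fuel_density": 1.5,
    "fuel_to_soot_efficiency": 1.5,
    "share_reaching_stratosphere": 1.5,
    "share_surviving_rainout": 1.5,
}

# In a purely multiplicative model, the biases compound.
combined = math.prod(pessimism_factors.values())
print(f"combined inflation of stratospheric soot: {combined:.2f}x")
```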

- ^

- ^

I estimate that the risk of a large-scale nuclear war (100+ detonations, with US and/or Russian involvement) is around 10% per century, which implies that the risk of nuclear winter is only 1.9% per century.
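The arithmetic behind this footnote can be written out as below. The conditional share of about 19% is inferred from the footnote's own numbers (1.9% / 10%) and is consistent with the roughly 20% of conflicts reaching at least 1°C of cooling quoted in the summary; `joint_risk` is a hypothetical helper name.

```python
def joint_risk(p_war: float, p_cooling_given_war: float) -> float:
    """Probability of war AND significant cooling over the same period."""
    return p_war * p_cooling_given_war


p_war_per_century = 0.10      # from the post
p_winter_per_century = 0.019  # from the post

# Share of large-scale wars implied to cause significant cooling.
implied_conditional = p_winter_per_century / p_war_per_century
print(f"implied P(significant cooling | war): {implied_conditional:.0%}")
print(f"check: {joint_risk(p_war_per_century, implied_conditional):.3f} per century")
```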

- ^

It is a lognormal distribution with 90% CI (0.0044, 5), bounded below and above by replacing the bottom and top 5% of the distribution with 0.0044 and 5, respectively. This approach was chosen because not bounding the upper end of the distribution led to infeasible levels of soot, and hence cooling - a 99th percentile of 21Tg.

- ^

I estimate that counterforce detonations inject 10x less stratospheric soot than countervalue (urban) detonations.

Dear Stan.

I think there are issues with this analysis. As it stands, it presents a model of nuclear winter if firestorms are unlikely in a future large scale nuclear conflict. That would be an optimistic take, and does not seem to be supported by the evidence:

In addition, there are points to raise on the distribution of detonations - which seems very skewed towards the lower end for a future nuclear conflict between great powers with thousands of weapons in play and strong game theoretic reasons to “use or lose” much of their arsenals. However, we commented on that in your previous post, and as you say it matters less for your model than the sensitivity of soot lofted per detonation, which seems to be the main contention.

Hi Mike, thanks for taking the time to respond to another of my posts.

I think we might broadly agree on the main takeaway here, which is something like: people should not assume that nuclear winter is proven - there are important uncertainties.

The rest is wrangling over details, which is important work but not essential reading for most people.

Yes, I agree that the crux is whether firestorms will form. The difficulty is that we can only rely on very limited observations from Hiroshima and Nagasaki, plus modeling by various teams that may have political agendas.

I considered not modeling the detonation-soot relationship as a distribution, because the most important distinction is binary - would a modern-day countervalue nuclear exchange trigger firestorms? Unfortunately I could not figure out a way of converting the evidence base into a fair weighting of 'yes' vs. 'no', and the distributional approach I take is inevitably highly subjective.

Another approach I could have taken is modeling as a distribution the answer to the question "how specific do conditions have to be for firestorms to form?". We know that a firestorm did form in a dense, wooden city hit by a small fission weapon in summer, with low winds. Firestorms are possible, but it is unclear how likely they are.

These charts are made up. The lower chart is an approximation of what my approach implies about firestorm conditions: most likely, firestorms are possible but relatively rare.

Los Alamos and Rutgers are not very helpful in forming this distribution: Los Alamos claims that firestorms are not possible anywhere. Rutgers claims that they are possible in dense cities under specific atmospheric conditions (and perhaps elsewhere). This gives us little to go on.

Agreed. My understanding is that fusion weapons are not qualitatively different in any important way other than power.

Yet there is a lot of uncertainty - it has been proposed that large blast waves could smother much of the flammable materials with concrete rubble in modern cities. The height at which weapons are detonated also alters the effects of radiative heat vs blast, etc.

Semi agree. Rutgers model the effects of a 100+ detonation conflict between India and Pakistan:

Conclusion

In the post I suggest that nuclear winter proponents may be guilty of inflating cooling effects by compounding a series of small exaggerations. I may be guilty of the same thing in the opposite direction!

I don't see my model as a major step forward for the field of nuclear winter. It borrows results from proper climate models. But it is bolder than many other models, extending to annual risk and expected damage. And, unlike the papers which explore only the worst case, it accounts for important factors like countervalue/counterforce targeting and the number of detonations. I find that nuclear autumn is at least as great a threat as nuclear winter, with important implications for resilience-building.

The main thing I would like people to take away is that we remain uncertain what would be more damaging about a nuclear conflict: the direct destruction, or its climate-cooling effects.

Great points, Stan!

I am not confident this is the crux.

I arrived at the same conclusion in my analysis, where I estimated the famine deaths due to the climatic effects of a large nuclear war would be 1.16 times the direct deaths.

Hi Stan + others.

Around one year after my post on the issue, another study was flagged to me: "Latent Heating Is Required for Firestorm Plumes to Reach the Stratosphere" (https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2022JD036667). The study raises another very important firestorm dynamic, that a dry firestorm plume has significantly less lofting versus a wet one due to the latent heat released as water moves from vapor to liquid - which is the primary process for generating large lofting storm cells. However, if significant moisture can be assumed in the plume (and this seems likely due to the conditions at its inception) lofting is therefore much higher and a nuclear winter more likely.

The Los Alamos analysis only assesses a dry plume - and this may be why they found so little risk of a nuclear winter - and in the words of the authors: "Our findings indicate that dry simulations should not be used to investigate firestorm plume lofting and cast doubt on the applicability of past research (e.g., Reisner et al., 2018) that neglected latent heating".

This has pushed me further towards being concerned about nuclear winter as an issue, and should also be considered in the context of other analysis that relies upon the Reisner et al studies originating at Los Alamos (at least until they can add these dynamics to their models). I think this might have relevance for your assessments, and the article here in general.

Hi Mike.

I think this may well misrepresent Los Alamos' view, as Reisner 2019 does not find significantly more lofting, and they did model firestorms. I estimated 6.21 % of emitted soot being injected into the stratosphere in the 1st 40 min from the rubble case of Reisner 2018, which did not produce a firestorm. Robock 2019 criticised this study, as you did, for not producing a firestorm. In response, Reisner 2019 ran:

Crucially, they say (emphasis mine):

These simulations led to a ratio of soot injected into the stratosphere in the 1st 40 min to emitted soot of 5.45 % (= 0.461/8.454) and 6.44 % (= 1.53/23.77), which are quite similar to the 6.21 % of Reisner 2018 for no firestorm I mentioned above. Does this suggest that, under Reisner's view, a firestorm is not a sufficient condition for a high ratio of stratospheric to emitted soot?

In my analysis, I multiplied the 6.21 % emitted soot that is injected into the stratosphere in the 1st 40 min from Reisner 2018 by 3.39 in order to account for soot injected afterwards, but this factor is based on estimates which do not involve firestorms. Are you implying the corrective factor should be higher for firestorms? I think Reisner 2019 implicitly argues against this. Otherwise, they would have been dishonest by replying to Robock 2019 with an incomplete simulation whose results differ from that of the full simulation. In my analysis, I only adjusted the results from Reisner’s and Toon’s views in case there was explicit information to do so[1], i.e. I did not assume they concealed key results.
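For readers who want to check the figures quoted in this comment, the ratios can be recomputed as below. All inputs are taken from the comment; the variable names are mine, and the last line just multiplies the 6.21 % by the 3.39 corrective factor mentioned above.

```python
# (soot injected into the stratosphere in the first 40 min, soot emitted)
# for the two Reisner 2019 simulations quoted in the comment.
simulations = [(0.461, 8.454), (1.53, 23.77)]

for injected, emitted in simulations:
    share = 100 * injected / emitted
    print(f"{injected} / {emitted} = {share:.2f} % of emitted soot")

# Reisner 2018 (no firestorm) share, scaled by the corrective factor
# for soot injected after the first 40 min.
early_share_pct = 6.21
corrective_factor = 3.39
total_share_pct = early_share_pct * corrective_factor
print(f"adjusted share: {total_share_pct:.2f} % of emitted soot")
```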

In my analysis, I also did not integrate evidence from Wagman 2020 (whose main author is affiliated with Lawrence Livermore National Laboratory) to estimate the soot injected into the stratosphere per countervalue yield. As far as I can tell, they do not offer independent evidence from Toon's view. Rather than estimating the emitted soot as Reisner 2018 and Reisner 2019 did, they set it to the soot injected into the stratosphere in Toon 2007:

For example, I adjusted downwards the soot injected into the stratosphere from Reisner 2019 (based on data from Denkenberger 2018), as it says (emphasis mine):

First of all, thank you for the work. I hope a peer reviewed version will be available. I find nuclear war modelling a most neglected research field. I have 3 comments:

I really think that comprehensive nuclear war modelling is something we should spend between hundreds of millions and billions on, but the field is almost a desert. Nuclear winter is almost the only issue that has been extensively discussed. I would be very happy to help with any development, but I really don't know who is working on this.