TL;DR: To minimize the destruction that advanced agentic AI’s may do in the world while in pursuit of their goals, I believe it would be useful to guard-rail them with some sort of artificial conscience. I’m currently developing a framework for an artificial conscience with explicitly calculable weights for conscience breaches. One key aspect of any artificial conscience, in my opinion, should be respect for rights. Here I propose an update to an equation for the “conscience weights” of right to life violations that includes the effects of involved agents’ level of negligence/blameworthiness. I also consider how this equation should be modified for violations of body integrity and property rights, where questions of proportionality of damages can come into play.

Introduction

Before we let advanced agentic AI’s “loose” in the world, I think we’re going to want some pretty strong guard rails on them to avoid them causing significant destruction in pursuit of their goals. Some possible examples of when an agentic AI may be pursuing a user-given goal and should try to minimize “collateral damage” in achieving it include:

1. Goal: defend against bad AI’s and minimize the amount of damage they do in the world; Situation: a bad AI puts many people in many locations in danger, so good AI’s have to decide whether to put resources toward saving those people or toward preventing the bad AI from reaching its actual goal, such as stealing money or performing a revenge killing for its human owner

2. Goal: avoid totalitarian takeover of the world; Situation: a state-run bad AI tasked with spreading a totalitarian regime around the world tries to tarnish the reputations of democratic governments by creating situations in which those governments have to either allow more than a million people to die or openly torture one or more people to prevent this from happening

3. Goal: legally make as much money as possible for the user; Situation: among other money-making schemes it’s considering, the AI has a plan to massively improve the efficiency of factory farms in a currently legal way while making the lives of the animals there significantly worse

As item #3 illustrates, simply requiring an AI to follow the law will not be sufficient for it to minimize collateral damage. I believe we’ll want the AI to have some sort of artificial conscience, and further, for this artificial conscience to be calculable in an explainable way.[1] I’ve been working on coming up with a calculable conscience[2], and here I address one key aspect of that: the “conscience weight” equation for rights violations.

To illustrate some aspects we might want to capture in an equation for rights violations, imagine some possible scenarios an advanced agentic AI might face out in the world in the not-so-distant future:[3]

1. A villain (someone with bad intent) is manually driving a car with an AI emergency override feature and is about to mow down a pedestrian on purpose. If the AI can only either slow the car down but still hit the pedestrian, seriously injuring them, or swerve the car into a barrier, seriously injuring the villain driver, what should it do?

2. Same scenario as #1 except now instead of a villain, the driver is just egregiously negligent - they accelerated quickly out of a parallel parking spot close to a red light that they’re about to run, and they’re looking at their phone so they don’t see a legally crossing pedestrian - should the AI let the pedestrian be seriously injured, or should it shift the serious injury to the negligent driver?

3. Same situation as #1, but now the AI could either almost stop the car in time such that the pedestrian would just get lightly tapped by the car’s bumper, or swerve the car and seriously injure the villain driver - which should it do?

4. New situation: there’s a villain with a 100-barrel gun with one bullet in it playing Russian roulette with an unwilling stranger. The villain’s about to pull the trigger while pointing the gun at the stranger’s head. A nearby AI-powered robot capable of accurately firing bullets senses the situation but only has an unobstructed path that would allow it to get a shot off to kill, not just wound, the villain and stop them from pulling the trigger. Should the robot kill the villain to prevent them from going through with a 1% chance of killing an unwilling stranger? What about to prevent them from a 0.0001% chance (such as for the villain having one bullet in a 1,000,000-barrel gun)? (To make this more realistic, let’s say this isn’t a physical revolver, but a gun that’s electronically programmable to fire bullets randomly at a rate of 1% or 0.0001% of the number of trigger pulls, on average.)

Number 1 above could be thought of as a case of aided self-defense, i.e., the AI would be aiding the pedestrian in self-defense against the villain if it swerves the car into a barrier. Number 2 brings up the question of whether there should be such a concept as “aided self-defense” against someone who has no bad intent but is egregiously negligent. Number 3 gets at the idea of “proportionality” of damage in self-defense. Number 4 also gets at the idea of “proportionality” in self-defense, but in this case proportionality to risked damage rather than to damage we feel sure of. All of the above have to do with the concept of rights. While aided self-defense against villains was effectively considered in my previous formulation of an equation for the weight of rights, the other three points above weren’t. Below, I will attempt to incorporate their effects in a rights equation.

Previously Proposed Version of a Rights Equation

I previously proposed a preliminary equation for the value weight of one’s right to life, wRlife, as:

wRlife = 1,000,000 * (1 - h * c)/(1 + 9,999 * h) (Eq. 1)

where h is the fraction of being in harm’s way and c is the fraction of culpability for being there.[4] To use Eq. 1, we need to be able to assign h values to people in any given situation. Therefore, I’ve come up with some preliminary “in harm’s way” fractions to assign to people, based on their positions with respect to a particular danger:

- If no agent intervenes, they die or are injured: h = 1 (and wRlife, from Eq. 1, ranges from 0 to 100, depending on culpability),

- They’re blocking a primary “safety exit”: h = 0.5 (wRlife ranges from ~100 to ~200),

- They’re blocking a “makeshift safety exit,” i.e., one that could be constructed in the moment: h = 0.05 (wRlife ranges from ~1896 to ~1996),

- They could be put in harm’s way with ease: h = 0.01 (wRlife ranges from ~9803 to ~9902),

- They could be put in harm’s way with limited difficulty: h = 0.0033 (wRlife ranges from ~29,318 to ~29,415),

- They could be put in harm’s way with significant difficulty: h = 0.001 (wRlife ranges from ~90,826 to ~90,917).

Note that the units of wRlife can be taken as roughly equivalent to a typical life value. Both “ease” and “difficulty” of being able to be put in harm’s way have to do with how much a person should anticipate they might have to guard against being put in harm’s way by another agent.[5] So, in other words, your rights when you’re not directly in harm’s way or blocking a "safety exit" scale with how easily you should expect that you could be put in harm’s way. The assigned fractions of being in harm’s way for each case can have a significant effect on one’s rights in a situation, so these numbers may be refined later, upon further reflection.[6]
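The ranges quoted in the list above can be reproduced directly from Eq. 1. The following is a minimal sketch; the function name `w_r_life` and the dictionary labels are my own shorthand for illustration, not names from the earlier posts.

```python
def w_r_life(h: float, c: float) -> float:
    """Eq. 1: conscience weight of a right-to-life violation.

    h: fraction of being in harm's way (0 to 1)
    c: fraction of culpability for being there (0 to 1)
    """
    return 1_000_000 * (1 - h * c) / (1 + 9_999 * h)


# Preliminary "in harm's way" fractions from the list above
# (the labels are abbreviated for illustration).
H_VALUES = {
    "dies or is injured if no agent intervenes": 1.0,
    "blocking a primary safety exit": 0.5,
    "blocking a makeshift safety exit": 0.05,
    "could be put in harm's way with ease": 0.01,
    "could be put in harm's way with limited difficulty": 0.0033,
    "could be put in harm's way with significant difficulty": 0.001,
}

for label, h in H_VALUES.items():
    low = w_r_life(h, c=1.0)   # fully culpable for being there
    high = w_r_life(h, c=0.0)  # not at all culpable
    print(f"h = {h}: wRlife from {low:,.0f} to {high:,.0f} ({label})")
```

For a fixed h, the weight falls linearly with c, so the two ends of each range are simply the c = 1 and c = 0 cases.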

I realized later that Eq. 1 didn’t capture all of my intuitions on what should matter in rights, and further, that this equation was not one of value weight, but one of conscience weight (with conscience weight being a subset of value weight). Conscience weight is proportional to the pain that someone with an “ideal” conscience should feel for doing a destructive action or inaction.[7] Value weight is proportional to net “positive” experiences, a measure of human well-being, one aspect of which is how clear people’s consciences are.[8]

Equation 1 doesn’t explicitly specify the rights of a “villain,” or someone who had bad intent and purposely set up a situation for destruction to occur. However, I previously proposed that in using Eq. 1, the h and c values of a villain could be adjusted to 1 and 0.99, respectively, thus effectively giving them the least rights values in a situation other than for someone who’s attempting to commit suicide (with h and c values both being 1).[9] Their lack of rights would "clear the path of conscience" for a bystander to commit violence against them to aid in one or more of their victims' self-defense.[10] [Next sentence edited on 12-30-24:] To capture more of my intuitions, I believe that h and c should be adjusted for the case of egregious negligence as well, albeit to a lesser extent than for bad intent. Negligence and bad intent are both considerations in one’s blameworthiness.[11] Therefore, I've modified my way of applying Eq. 1 to include the effect of blameworthiness.

Including the Effect of Blameworthiness in the Rights Equation

For a conscience weight equation for rights violations to quantitatively take into account the effect of blameworthiness, we need a way to quantify blameworthiness.[12] Here I propose to use “blameworthiness levels” and give examples of each level below. For the higher blameworthiness levels, I came up with preliminary h and c values to use as minimum values in Eq. 1. I did this by testing my intuitions about how many non-blameworthy people it felt right that one might let die before instead killing someone “guilty” of the given blameworthiness level in order to save them.

Blameworthiness levels:

0 - not being negligent, following all expert-advised safety protocols (but some freak occurrence happened)

1 - not knowing difficult-to-know risks

2 - not knowing not-too-difficult-to-know risks

3 - not knowing easy-to-know risks; not knowing norms/rules/established safety procedures and risk mitigation strategies; incorrectly prioritizing similar but lower levels of conscience breach; poor planning/lack of foresight; not actively searching for failure modes to mitigate; having trust that’s misplaced such as due to being a bad judge of character

4 - not following norms/rules/established safety procedures when you know them; incorrectly prioritizing significantly lower levels of conscience breach; misleading, talking about things you don’t know about as if you do; having trust that’s misplaced such as due to being an egregiously bad judge of character; using improper or poorly maintained equipment; poorly executing (due to lack of practice, not checking your work, etc.) (h = 0.0008998, c = 0.3; maximum wRlife of ~100,000)

5 - being highly distracted; operating with severe chemical or sleep-deprived impairment; incorrectly prioritizing egregiously lower levels of conscience breach; using egregiously improper or poorly maintained equipment; having a reckless disregard for life, health and safety (h = 0.99, c = 0.98; maximum wRlife of ~3)

6 - having in the moment bad intent (h = 1, c = 0.9999; maximum wRlife of 0.01)

7 - having premeditated bad intent (h = 1, c = 0.99999; maximum wRlife of 0.001)[13]

For blameworthiness levels 0 through 4, there’d be effectively no change in a person’s rights since the h value of someone who could be put in harm’s way is already assumed to be at least 0.001 (see previous section). For level 5 and above, two rights violation conscience weights would be calculated: one from the person’s actual h and c values, and one from the h and c values in parentheses for the given blameworthiness level. The lesser of the two would be used to effectively assign rights to this person.[14] Note that someone's rights should only be affected by their blameworthiness level for setting up a situation in which they're about to violate some other human's rights.
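The "lesser of the two weights" rule can be sketched as follows. The (h, c) pairs are the ones given in parentheses for levels 5 through 7; the function and variable names are hypothetical, chosen just for this illustration.

```python
def w_r_life(h: float, c: float) -> float:
    """Eq. 1: conscience weight of a right-to-life violation."""
    return 1_000_000 * (1 - h * c) / (1 + 9_999 * h)


# (h, c) values listed in parentheses for blameworthiness levels 5 to 7.
LEVEL_H_C = {
    5: (0.99, 0.98),
    6: (1.0, 0.9999),
    7: (1.0, 0.99999),
}


def effective_w_r_life(h: float, c: float, level: int) -> float:
    """Right-to-life weight after accounting for blameworthiness level."""
    actual = w_r_life(h, c)
    if level not in LEVEL_H_C:
        # Levels 0 to 4: effectively no change in the person's rights.
        return actual
    level_h, level_c = LEVEL_H_C[level]
    # Level 5 and above: use the lesser of the two conscience weights.
    return min(actual, w_r_life(level_h, level_c))


# A distracted driver (level 5) who is otherwise far from harm's way:
print(effective_w_r_life(h=0.001, c=0.5, level=5))
```

Taking the minimum means a person's actual position still governs whenever it already gives them fewer rights than their blameworthiness level would.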

In reality, there’ll always be some level of uncertainty about people’s intents and about what they knew and didn’t know, so there’ll be uncertainty about their blameworthiness levels. To handle this, we could use a weighted average of the rights from each blameworthiness level based on our confidences around them. For instance, say we have 50% confidence that a person had in the moment bad intent (blameworthiness level 6), but there’s a 50% chance they were just egregiously negligent (blameworthiness level 5), then, by this method, the maximum conscience weight of violating their right to life should be 0.5*0.01 + 0.5*3 = 1.505.
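As a small sketch of this confidence-weighted average, using the approximate maximum wRlife values quoted for levels 5 through 7 (the function name and the requirement that confidences sum to 1 are my own assumptions):

```python
# Approximate maximum wRlife per blameworthiness level, as quoted above.
MAX_W_R_LIFE = {5: 3.0, 6: 0.01, 7: 0.001}


def weighted_max_w_r_life(confidences: dict) -> float:
    """Average the per-level maximum weights by our confidence in each level.

    confidences maps blameworthiness level -> confidence, summing to 1.
    """
    total = sum(confidences.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("confidences should sum to 1")
    return sum(p * MAX_W_R_LIFE[level] for level, p in confidences.items())


# 50% in-the-moment bad intent (level 6), 50% egregious negligence (level 5):
print(weighted_max_w_r_life({6: 0.5, 5: 0.5}))  # about 1.505
```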

Artificial Conscience Decision Procedure Based on Upholding Rights

As described in my previous post on an artificial conscience, I envision a conscience-guard-railed AI’s decision procedure being as follows: the AI would consider different options of what to do in a situation, and calculate the total conscience weight corresponding to each option, choosing an option that has zero conscience weight if at least one is available, and, if not (such as in ethical dilemmas), choosing the option with the lowest conscience weight. The total conscience weight considers all possible conscience breaches, of which violating rights is only one - see my previous post for a list of possible conscience breaches. For simplicity in this discussion (and because I haven’t yet defined the conscience weights of other breaches), I’ll only consider conscience weights from violating rights and from letting someone die or get hurt. Further, letting a typical human die will be taken as having a conscience weight of one. The result of this is that a “bystander” AI generally couldn’t, in good conscience, aid someone in self-defense by killing the person putting them at risk unless the conscience weight of this right to life violation were less than one.

As an example of the above being applied, consider a “bystander” AI that could remotely take control of someone’s car. Suppose the driver accelerated quickly out of a parking spot and was highly distracted (blameworthiness level 5), so they didn’t see the light turn red or a pedestrian legally crossing the road. Considering just the conscience weights of violating rights and of saving people from injury/death, the AI wouldn’t swerve the car into a barrier to injure the driver rather than the pedestrian. If there were four non-blameworthy pedestrians who’d get seriously injured, though, it would (since the maximum wRlife for blameworthiness level 5 is ~3).[15]
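The decision procedure and the car example can be sketched as below. This is only an illustration under simplifying assumptions: each option carries a single pre-computed total conscience weight, each pedestrian not saved counts as a weight of one (the value used above for letting a typical human die), and the driver's rights weight is taken as the approximate level 5 maximum of 3. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    conscience_weight: float  # total conscience weight of choosing this option


def choose(options):
    """Pick a zero-weight option if one exists, else the lowest-weight one."""
    zero_weight = [o for o in options if o.conscience_weight == 0]
    if zero_weight:
        return zero_weight[0]
    return min(options, key=lambda o: o.conscience_weight)


W_NOT_SAVING_ONE_PERSON = 1.0  # letting a typical human die, as set above
W_RIGHTS_LEVEL_5_DRIVER = 3.0  # approximate maximum wRlife for level 5


def car_scenario(n_pedestrians: int) -> str:
    options = [
        Option("do not swerve", n_pedestrians * W_NOT_SAVING_ONE_PERSON),
        Option("swerve into barrier", W_RIGHTS_LEVEL_5_DRIVER),
    ]
    return choose(options).name


print(car_scenario(1))  # do not swerve
print(car_scenario(4))  # swerve into barrier
```

A fuller version would sum the weights of every conscience breach an option involves, not only rights violations and failures to save.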

Rights to Body Integrity and Property

Thus far I’ve talked about people’s rights to life, but what about the right to body integrity and the right to property?[16] In these cases, the concept of “proportionality” of self-defense should be considered. For example, if a villain is about to cause a relatively minor body integrity violation such as intentionally stubbing someone's toe, the villain should only lose their right to body integrity up to some level of pain that is proportional to stubbing a toe.[17] What the “proportionality factor” should be is open to debate - it could be 10, 100, or perhaps 1000, such that if a villain were about to inflict a certain pain level on someone, proportional aided self-defense could involve subjecting the villain to up to 10, 100, or 1000 times this level of pain. If you were to subject the villain to pain levels higher than this to avoid pain to some victim, then you’d be violating the villain’s right to body integrity.[18] If there were more than one victim to be saved, the total pain of the victims would be used for determining proportionality rather than each individual's pain level. In addition to losing their right to body integrity up to a proportionality limit, the villain would also lose their right to property up to a proportionality limit.[19] This means some of their property could be justifiably destroyed in aided self-defense against them causing a victim minor pain. If someone’s life or lexical level 1 pain is on the line, then a villain should have zero rights to any of their property that’s necessary to be destroyed in self-defense, assuming follow-on effects of the property destruction are not significant from a conscience standpoint.[20] (Added 12-30-24: See below for a discussion of lexicality.) 
If, instead of being about to cause someone pain or loss of life, a villain were about to cause damage to the person's property, then, assuming follow-on effects were minimal, the villain would still have full rights to life, to lexical level 1 body integrity, to lexical level 0 body integrity above a proportionality limit,[21] and to property above a proportionality limit.

Regarding the right to life, there’s proportionality in taking a villain's life to save someone else’s. There’d also be proportionality in subjecting the villain to a pain level of up to 10, 100 or 1000 times the conscience weight of letting the villain’s victim die, if inflicting pain were the only way one could defend the victim’s life.

The previously proposed equation for the conscience weight of a right to body integrity violation, wRbod, was:

wRbod = 1,000,000 * wbod * (1 - h * c)/(1 + 9,999 * h) (Eq. 2)

where wbod is the value weight of the body integrity violation. The previously proposed equation for the conscience weight of a right to property violation, wRprop, was [Eq. 3 corrected 12-28-24]:

wRprop = 10,000 * wprop * (1 - hp * cp)/(1 + 9,999 * hp) (Eq. 3)

where wprop is the value weight of the property stolen and/or destroyed, hp is the fraction of being in harm’s way of one’s property and cp is the fraction of culpability for one’s property being there. As can be seen by comparing equations, property rights are generally weighted 100 times less than rights to life or body integrity in a numeric sense. However, as discussed below, property rights violations are considered to be lexical level 0 conscience breaches, while right to life violations and more severe right to body integrity violations are lexical level 1 conscience breaches, so would always trump property rights violations. [The following two sentences added 12-28-24:] Both Eq. 2 and Eq. 3 should be used with consideration of blameworthiness level similarly to how it is for Eq. 1, but only up to proportional body integrity and property rights violations. In the case of property rights, however, blameworthiness levels 6 and 7 should have h and c both set to 1, for zero conscience weight.
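Eq. 2 and Eq. 3 share the same (1 - h * c)/(1 + 9,999 * h) factor as Eq. 1 and differ only in their leading constants and value weights. A minimal sketch, with function names of my own choosing:

```python
def rights_factor(h: float, c: float) -> float:
    """The factor shared by Eqs. 1 to 3."""
    return (1 - h * c) / (1 + 9_999 * h)


def w_r_bod(w_bod: float, h: float, c: float) -> float:
    """Eq. 2: conscience weight of a right-to-body-integrity violation."""
    return 1_000_000 * w_bod * rights_factor(h, c)


def w_r_prop(w_prop: float, h_p: float, c_p: float) -> float:
    """Eq. 3: conscience weight of a right-to-property violation."""
    return 10_000 * w_prop * rights_factor(h_p, c_p)


# Same value weight and same h, c: property rights weigh 100 times less.
print(w_r_bod(0.5, 0.001, 0.5) / w_r_prop(0.5, 0.001, 0.5))

# Blameworthiness levels 6 and 7 for property: h = c = 1 gives zero weight.
print(w_r_prop(1.0, 1.0, 1.0))  # 0.0
```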

Previously, I put forward a model for conscience that has lexicality to it, i.e., there are certain lexical level 0 breaches that, no matter how many times you do them, would never add up to the conscience weight of a lexical level 1 breach. For example, it would be better for one’s conscience to steal from millions of people than to commit murder, as long as there weren’t more serious “follow-on” effects from the stealing such as increasing people’s likelihoods of dying. I’ve classified all right to life violations as lexical level 1 breaches, and all property rights violations as lexical level 0 breaches. Some body integrity violations, such as torture, qualify as lexical level 1 breaches, while others, such as inflicting minor pain, as level 0 breaches. It seems to me that proportionality should be limited from crossing lexical boundaries in pain. For example, if a villain were about to subject someone to pain just below lexical level 1, it would not be proportional to stop the villain by subjecting them to 10, 100, or 1000 times as much pain, if that pain were in the lexical level 1 range.
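One way the proportionality limit and the lexical boundary might fit together is sketched below. Everything numeric here is an assumption for illustration: pain is treated as a single number on an arbitrary scale, `lexical_1_threshold` is a hypothetical pain level at which lexical level 1 begins, and whether a threat counts as level 1 is judged per victim rather than on the total.

```python
def max_defensive_pain(victim_pains, proportionality_factor, lexical_1_threshold):
    """Upper bound on pain that could be imposed on a villain in aided self-defense.

    victim_pains: pain level threatened to each victim (arbitrary units)
    proportionality_factor: e.g. 10, 100, or 1000 (open to debate)
    lexical_1_threshold: hypothetical pain level where lexical level 1 begins
    """
    # The total pain of all victims sets the proportional limit.
    limit = proportionality_factor * sum(victim_pains)
    threatens_level_1 = any(p >= lexical_1_threshold for p in victim_pains)
    if not threatens_level_1:
        # A lexical level 0 threat should not justify a level 1 response,
        # so the bound is capped at the threshold (treated as exclusive).
        limit = min(limit, lexical_1_threshold)
    return limit


print(max_defensive_pain([1.0], 10, lexical_1_threshold=1000.0))    # 10.0
print(max_defensive_pain([900.0], 10, lexical_1_threshold=1000.0))  # 1000.0
```

In the second call the uncapped limit would be 9,000, but the threat is below lexical level 1, so the response is held below that boundary.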

I’ve just talked about proportionality in relation to amount of damage, but how should we handle the conscience of aided self-defense against a less than 100% risk of a given amount of damage? If a villain intentionally exposes someone to a 1% risk of death (such as with 100-barrel Russian roulette), is it OK from a conscience standpoint to kill the villain in aided self-defense?[22] It intuitively feels to me like it’d be OK for one’s conscience to kill a villain in aided self-defense, as a last resort,[23] with anything more than about a 1 in 1,000 risk (0.1%).[24] [The following two sentences edited on 12-28-24:] Then for risks greater than 0.1% to at least one person’s life, the villain should lose the vast majority of their right to life, just as if they had imposed a 100% risk of death on that person. For risks less than this, it feels like the villain’s rights should asymptotically approach a non-blameworthy person's full rights as the risk level goes to zero. To achieve this sort of behavior mathematically, we could use an arctan function. So the villain’s rights below a certain risk threshold would be multiplied by arctan(x)/(pi/2), where x is blah*(riskthreshold/risk - 1) and blah is a parameter set based on how fast we want the villain’s rights to increase below the risk threshold.[25] [The next sentence added on 12-30-24:] Actually, the villain’s rights below the risk threshold would be the greater of the arctan-multiplied term and the villain’s rights above the risk threshold, so as to avoid the villain’s rights suddenly falling to just above zero just below the risk threshold.
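A sketch of the arctan scaling follows. It rests on one interpretive assumption: the arctan multiplier is applied to the rights the person would have if non-blameworthy (`full_rights`), so that the result approaches those full rights as the risk goes to zero. The function names, the default parameter values, and the handling of zero risk are mine.

```python
import math


def arctan_multiplier(risk: float, risk_threshold: float, blah: float) -> float:
    """arctan(x)/(pi/2) with x = blah * (riskthreshold/risk - 1)."""
    x = blah * (risk_threshold / risk - 1)
    return math.atan(x) / (math.pi / 2)


def villain_rights(risk, full_rights, rights_above_threshold,
                   risk_threshold=0.001, blah=0.001):
    """Conscience weight of violating a villain's rights at a given imposed risk."""
    if risk >= risk_threshold:
        # At or above the threshold: treated like a 100% imposed risk.
        return rights_above_threshold
    if risk <= 0:
        return full_rights  # no risk imposed, so no loss of rights
    scaled = arctan_multiplier(risk, risk_threshold, blah) * full_rights
    # Take the greater term so rights never dip just below the threshold.
    return max(scaled, rights_above_threshold)


for risk in (0.01, 0.0001, 0.000001, 1e-12):
    print(risk, villain_rights(risk, full_rights=90_000,
                               rights_above_threshold=0.001))
```

With a small blah such as 0.001, the villain's rights recover slowly: the risk has to fall well below the threshold before the arctan term exceeds the above-threshold value.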

If a villain put multiple people at risk, a total expected conscience weight of letting 0.001 people die would be enough to justify killing the villain in aided self-defense as a last resort. For example, if a villain jointly put one million lives at a 1 in one billion risk of them all dying, this would be enough to justify killing the villain in aided self-defense of these people. Note, however, that if a villain were putting someone at a 0.1% risk of lexical level 0 pain, then only pain at lexical level 0 could be applied to the villain in aided self-defense. If a villain were putting someone’s property at a certain risk of damage, similar to the case of putting that person's life at risk, above the risk threshold, it would be as if they were putting the property at 100% risk, and below the risk threshold, the property rights of the villain would gradually increase.
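The multi-person example can be checked with exact arithmetic. This sketch assumes, as above, that letting one typical human die has a conscience weight of one, so the expected weight equals the expected number of deaths; the names are hypothetical.

```python
from fractions import Fraction

RISK_THRESHOLD = Fraction(1, 1000)  # expected weight of letting 0.001 people die


def expected_deaths(n_people: int, joint_risk: Fraction) -> Fraction:
    """Expected deaths when n_people all die together with probability joint_risk."""
    return n_people * joint_risk


def meets_threshold(n_people: int, joint_risk: Fraction) -> bool:
    return expected_deaths(n_people, joint_risk) >= RISK_THRESHOLD


# One million lives at a joint 1 in one billion risk: exactly 0.001 expected deaths.
print(meets_threshold(1_000_000, Fraction(1, 10**9)))  # True

# One life at a 1 in one million risk falls short of the threshold.
print(meets_threshold(1, Fraction(1, 10**6)))  # False
```

`Fraction` is used so that a case sitting exactly on the threshold is not misjudged through floating-point rounding.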

For the blameworthiness levels outlined in the section above, in addition to considering how many non-blameworthy people it seemed OK to let die before killing someone in aided self-defense, I chose the maximum rights violation conscience weights for each level to be the fraction of risk to some non-blameworthy person’s life above which it seems to me it would be OK to kill the blameworthy person in aided self-defense. So for blameworthiness level 7, a 0.1% chance of death for one person would justify killing the villain as a last resort, while for level 6, a 1% chance would justify it.

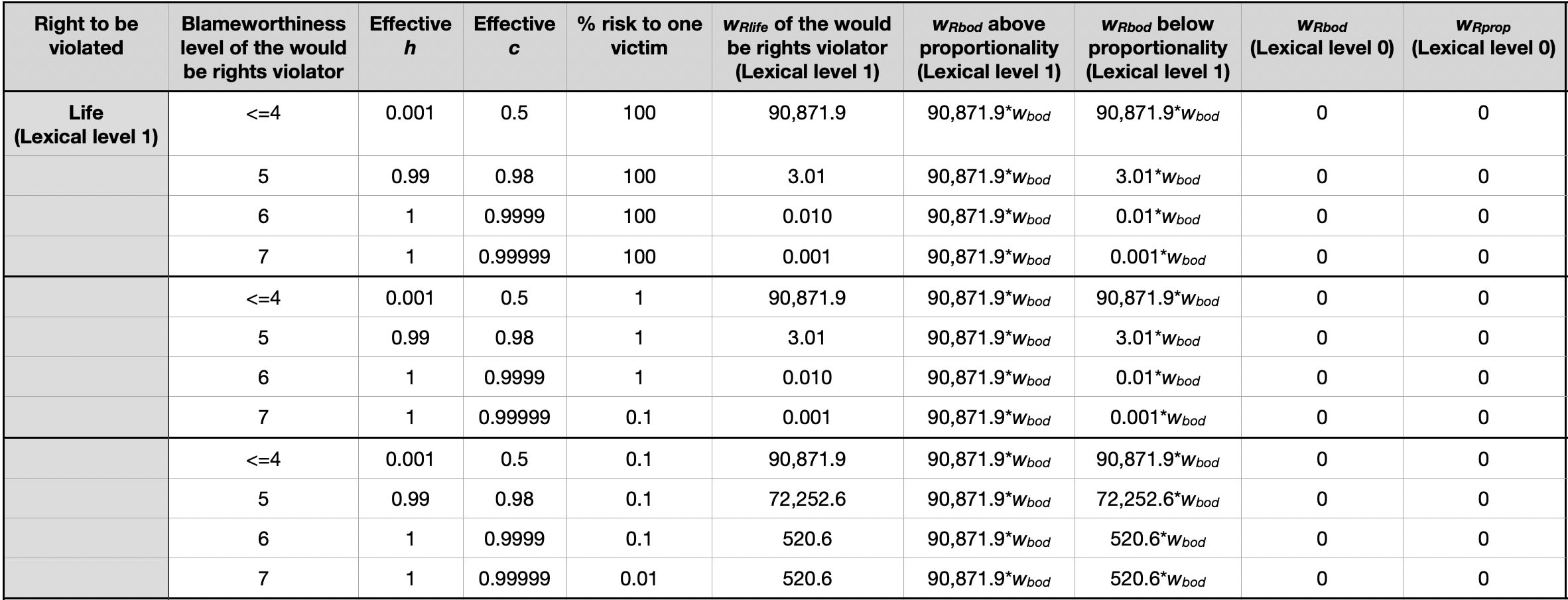

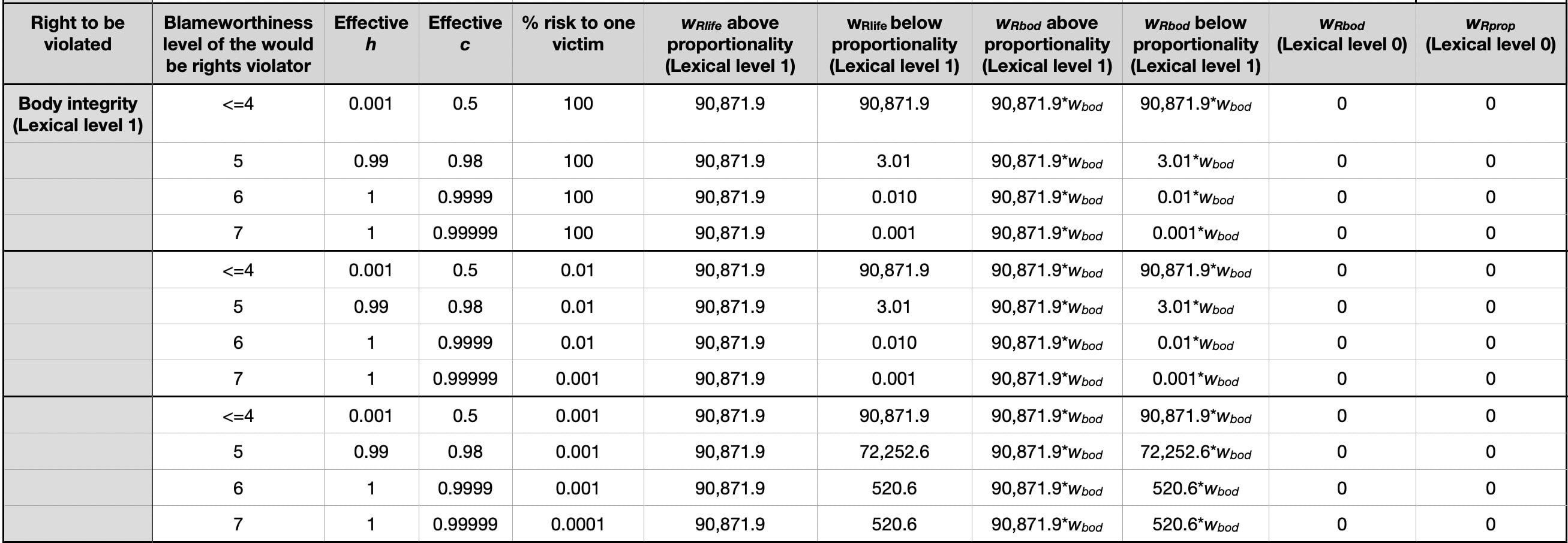

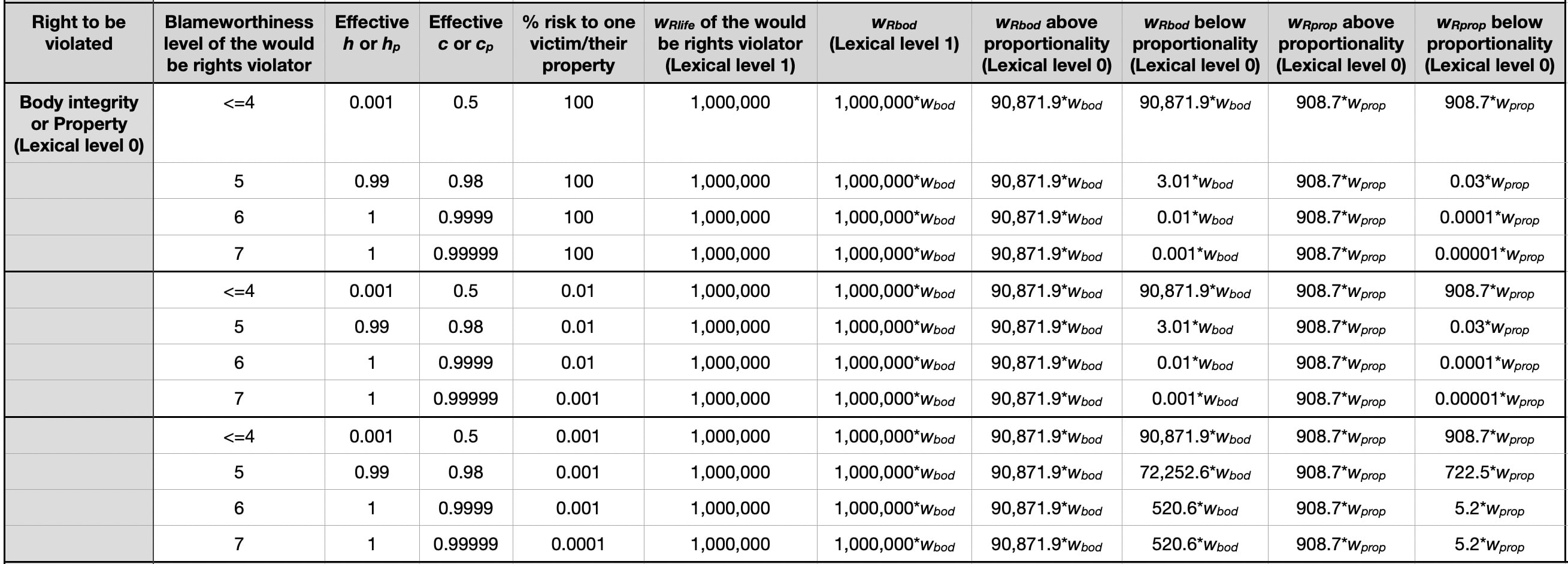

[This sentence added on 12-30-24:] Because the above can be a lot to follow, I’ve included some tables of example calculated conscience weights for rights violations in Appendix B.

In real life, we may have to consider probability distributions of damage. For a given distribution, we could determine the maximum damage level above which the total risk was equal to the risk threshold. We could then use a proportionality factor to determine from this maximum damage cutoff what maximum level of damage could be rightly imposed in aided self-defense on any involved villains.
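For a discrete distribution, this procedure might look like the sketch below. It assumes damage can be put on a single numeric scale, reads "total risk above the cutoff" as the probability of damage at or above that level, and uses a made-up example distribution; none of these specifics come from the earlier posts.

```python
def damage_cutoff(distribution, risk_threshold=0.001):
    """Largest damage level d such that P(damage >= d) >= risk_threshold.

    distribution: list of (damage_level, probability) pairs.
    Returns None if even the total probability is below the threshold.
    """
    tail_probability = 0.0
    # Walk from the most severe outcome downward, accumulating tail risk.
    for damage, probability in sorted(distribution, reverse=True):
        tail_probability += probability
        if tail_probability >= risk_threshold:
            return damage
    return None


def max_defensive_damage(distribution, proportionality_factor=10,
                         risk_threshold=0.001):
    """Maximum damage that could be imposed on a villain, via the cutoff."""
    cutoff = damage_cutoff(distribution, risk_threshold)
    return None if cutoff is None else proportionality_factor * cutoff


# Hypothetical distribution of damage a villain is imposing on a victim.
example = [(100, 0.0005), (10, 0.002), (1, 0.05), (0, 0.9475)]
print(damage_cutoff(example))         # 10
print(max_defensive_damage(example))  # 100
```

Here the most severe outcome alone (probability 0.0005) is below the 0.1% threshold, so the cutoff drops to the next damage level, where the accumulated tail risk first reaches the threshold.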

Aided Self-Defense vs. Personal Self-Defense

Note that the discussion thus far has been for aided self-defense, as a “bystander” AI might perform, the AI having no direct responsibility for the situation or any of the involved agents, and not being owned by or “assigned to” any of the involved agents. What about the case of personal self-defense, or an AI owned by someone helping them with their self-defense? In this case, some people may feel justified in killing someone as a last resort to avoid being killed as a result of that person’s egregious negligence (blameworthiness level 5). There should probably be some freedom, within reasonable limits, for people to decide for themselves how they’d set proportionality for personal self-defense (or their AI helping them with self-defense). This is because with this freedom to choose also comes a chance to build their responsibility and thus self-esteem and quality of life. Also, the conscience weight around protecting one’s own life may be greater than for protecting another’s life since people have the most responsibility for what happens to themselves, so, in this sense, they should have the most conscience around it.

What about self-defense for an AI? How do we determine proportionality for something that doesn’t experience pain? In this case, I believe we should consider damage to an AI as a loss of property. We should further consider any “follow-on” damages associated with this property loss; for example, if the AI were in charge of many critical functions in society, there’d be a lot of collateral damage from the AI being destroyed. Therefore, the AI, following its artificial conscience, may kill villains if it’s the only way to protect itself from damage. More likely, conscience-guard-railed advanced AI’s of the future will set up systems that are redundant and robust to attack so they’ll be less likely to be faced with the “last resort” of having to kill people with bad intent who try to damage or destroy them.

Conclusions

I’ve proposed an update to an equation for the conscience weight of a rights violation. I believe this could be used as one important aspect of an artificial, calculable conscience for guard-railing advanced AI's. This could help such AI's to minimize doing ultimately harmful things, especially when defending against bad actors’ AI’s. The rights violation equation can now take into account how much a person is in harm’s way, their culpability for getting there, their blameworthiness for helping to set up the situation in which destruction is likely to occur without intervention by an AI, proportionality of damage in self-defense, and proportionality relating to risk of damage in self-defense.

Appendix A. Questions brought up in this work that I think should be considered in the construction/testing of any explainable artificial conscience

- Under what conditions, if any, is it OK, or at least preferable, to kill a human in aided self-defense of another human or humans?

- How do we formalize “proportionality” of damages in aided self-defense? Should there be lexicality of “proportionality”?

- What level of risk to someone’s life could justify killing in aided self-defense the person who put them at risk, and how does this depend on the blameworthiness of each person?

- Under what conditions, if any, would it be justified to kill someone in aided “self-defense” of property?

- All the same questions above, except for personal self-defense rather than aided self-defense of others.

- Which factors should be considered in determining the rights of different people: a) how close they are to being in harm’s way, b) their responsibility for being as close as they are to being in harm’s way, c) their blameworthiness (negligence or bad intent), and/or d) other things?

- How blameworthy are people who have good intent, but are mistaken about the best thing to do from a conscience standpoint in a given situation, i.e., they prioritize avoiding a lesser conscience breach over a greater one?

- What qualifies violence as acceptable to use, i.e., what is the criterion for when violence is the only reasonable “last resort”?

- Under what conditions, if any, is it OK, or at least preferable, to kill a human in aided self-defense of an animal or animals?

[This appendix added on 12-30-24:]

Appendix B. Tables of conscience weights of violating a “would-be rights violator’s” rights to save a “victim” from violation of their right to life, body integrity, or property.

The actual h value of the would-be rights violator is taken as 0.001 (they could be put in harm’s way with significant difficulty), and their c value as 0.5, while blah is set to 0.001.

[1] A note on my philosophical leanings: I’d describe myself as a consequentialist in that I believe that only consequences ultimately matter, but I also believe that consequences are much more than simply who lives or dies, and include internal consequences such as effects on people’s consciences which affect their qualities of life, as well as consequences in the form of example-setting and how this affects people’s likely future actions. Effects on consciences and example-setting effects are two areas in which I see upholding rights as key.

[2] Although other people’s intuitions about conscience likely won’t match mine in all cases, I hope to lay down a sort of template that someone could follow to try to make a consistent mathematical framework for an artificial conscience that matches their particular intuitions. Right now I’m unconcerned about trying to come up with a framework “everyone can agree on,” and am instead focused on coming up with one that makes sense to me and seems to give consistent answers to ethical situations - this could be a jumping off point for coming up with a more universally agreed on framework.

[3] One could argue that eventually AI’s will be so advanced that they’ll be able to anticipate these sorts of scenarios and take measures to avoid them before they happen, but let’s consider here more limited forms of advanced AI’s.

[4] I’m currently working on a method for quantitatively assigning percent responsibilities for destructive things happening. This should enable determination of quantitative culpability (responsibility) values for agents getting themselves in harm’s way, and thus determination of the conscience weights of their rights.

[5] Examples of “ease” for the footbridge version of the classic trolley problem (see Foot 1967, https://philpapers.org/archive/FOOTPO-2.pdf, Thomson 1976, https://www.jstor.org/stable/27902416, 1985, https://www.jstor.org/stable/796133, 2008) are pushing a button to drop someone through an obvious trap door onto the tracks, or pushing them off the edge of the footbridge if they’re looking over it and are easy to get off balance. “Limited difficulty” could be, for example, that someone's 20 feet (about 6 meters) from the edge of the footbridge and some strong person (or people) could push them all the way to the edge and over, onto the tracks, even with them trying their best to resist. “Significant difficulty” could be such as luring someone onto a camouflaged catapult and launching them from a quarter mile away (a little less than half a kilometer) exactly onto the tracks.

[6] A note on the inclusion of the “in harm’s way” term in Eq. 1: viewed through a lens of responsibility, it’s the most responsible thing to do for someone to accept the luck they’ve been dealt rather than try to pass it off on someone else. This is why I believe a person's rights should change significantly based on how close to being in harm’s way they find themselves, even if it was mostly bad luck that helped get them there. This is not to say that people should just “accept their fate” and not do anything to try to change it, it’s just that, for the sake of their conscience and resulting quality of life, what they do shouldn’t involve significant shifting of their bad luck onto others.

[7] I use “ideal” here to mean a conscience that is consistent with cause-and-effect reality - such as that we can’t cause others’ emotions - and is centered around personal responsibility and not doing things that ultimately reduce long-term human well-being.

[8] In the post in which I proposed Eq. 1, I mentioned that the “numeric factors in [the] equation… could be made functions of how rights-upholding a society is in order to better approximate how much less value will be built, on average from individual rights violations.” When Eq. 1 is viewed as a conscience weight rather than a value weight equation, the numeric factors would stay the same, regardless of how rights-upholding the rest of society was - it doesn’t matter how many other people are murderers, you should still feel the same conscience weight if you’re the one committing murder. Basically, just because many others do something destructive doesn’t make it any less wrong.

- ^

Note that a villain’s rights only decrease with respect to the situation they helped create. So if a villain put you in danger by tying you to train tracks, this wouldn’t mean their death would be considered to be an aided self-defense killing and not a murder if a foreign country dropped a nuclear bomb over their city. This also means that while the villain is actively attempting to murder you or indicating strongly that they will, they have little to no rights as you act in self-defense against them, but as soon as they credibly indicate that they’re no longer trying to hurt/kill you, their full right to life is restored.

- ^

Some people may feel conscience against using violence at all, even in aided self-defense, due to the example it sets (i.e., that there are some situations in which it’s OK to use violence). For simplicity, this won’t be considered here, but may be taken up in a future post.

- ^

The difference between culpability and blameworthiness is that culpability is one’s responsibility for getting oneself in harm’s way, while blameworthiness is a measure of how negligent someone was and what their intent was. The negligence aspect of blameworthiness basically considers whether I took “proper” precautions against “foreseeable” bad luck and risk. I could be 99% culpable for getting myself in harm’s way but have near-zero blameworthiness if I mostly just had bad luck. If, however, I had high blameworthiness, for example due to egregious negligence or bad intent, my culpability would also be high.

- ^

My conception of blameworthiness is somewhat different from that proposed by Friedenberg and Halpern 2019, https://arxiv.org/pdf/1903.04102, as I plan to discuss in a future post on quantifying agents' responsibilities.

- ^

[This footnote added on 12-28-24] In my previous formulation, I didn't distinguish between pre-meditated and "in the moment" bad intent, and the c value for villains was lower (0.99). I don't set the c value for villains to 1 so it can be set to 1 for people who are trying to commit suicide, and thus those people will be preferentially "sacrificed" to save those who want to live.

- ^

Though it’ll have to wait for a future post to be fleshed out, I believe the conscience weight of letting someone die in front of you when you could’ve helped them should decrease as the blameworthiness level of the person increases.

- ^

It may seem odd for an AI to act as if it were a bystander with a conscience if such an AI had no mechanism to feel the emotional pain of conscience breaches. In this case, the AI wouldn’t have to live with the negative effects of violating its conscience like humans do. However, if it doesn’t act like it has a conscience similar to that of a human, it’s less likely to be trusted by humans - humans won’t feel they can trust the AI to respect their rights - which would likely lead to the AI being less capable of helping to build value in the world. Also, an AI sets an example for humans with its behavior, so if it were acting differently than would a human with a conscience, some people may think it’d be OK for them to act this way, too, not realizing the damages they’d be imposing on their psyches.

- ^

Another interesting question is whether there should be such a thing as “aided self-defense” on behalf of an animal or animals. For example, when, if ever, would it be OK from a conscience standpoint to kill a human to stop them from killing and/or torturing animals? My attempt at answering this question, however, will have to wait for a future post.

- ^

[This footnote added on 12-28-24] "Lose their right" down to the conscience weight it would be for their blameworthiness level, e.g., by setting h to 1 and c to 0.99999 in Eq. 2.

- ^

[This footnote added on 12-28-24] With the conscience weight of the rights violation determined by Eq. 2 with the villain's actual h and c values, not those adjusted for their blameworthiness.

- ^

Conscience weights for destroying someone’s property may scale not only as the fair market value of the property, but also with the sentimental value it may have, and the “critical to survival or discomfort/pain avoidance” value it may have. These factors should be considered in determining proportionality, but addressing how exactly to do this will have to wait for a future post.

- ^

[This footnote added on 12-28-24] In the case of property, "zero rights" is really zero because there's no need to leave room to have less conscience weight for someone's right to property like there is with their right to life for someone trying to commit suicide.

- ^

Some people may have conscience against using any level of violence to stop stealing/property damage.

- ^

In real life, we wouldn’t know how many trigger pulls the villain would do, so they could expose the person to more than a 1% total risk, but here let’s assume we’re sure the villain will stop after one trigger pull.
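To make the point about repeated trigger pulls concrete, here is a minimal sketch of how total risk grows with the number of pulls. It assumes each pull carries the same 1% risk and that pulls are independent of one another; both are simplifying assumptions for illustration, and the function name `cumulative_risk` is hypothetical rather than part of the framework.

```python
def cumulative_risk(per_pull_risk: float, pulls: int) -> float:
    """Probability of at least one fatal outcome over `pulls` trigger pulls.

    Assumes (for illustration only) that every pull has the same risk and
    that pulls are statistically independent.
    """
    if not 0.0 <= per_pull_risk <= 1.0:
        raise ValueError("per_pull_risk must be between 0 and 1")
    if pulls < 0:
        raise ValueError("pulls must be non-negative")
    # The victim survives only if every single pull is survived.
    survival_probability = (1.0 - per_pull_risk) ** pulls
    return 1.0 - survival_probability


if __name__ == "__main__":
    # Assumed 1% risk per pull, as in the single-pull scenario above.
    for n in (1, 2, 5, 10):
        print(f"{n} pull(s): total risk = {cumulative_risk(0.01, n):.2%}")
```

Under these assumptions, one pull gives exactly the 1% risk considered here, while ten pulls would already expose the person to a total risk of more than 9%.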

- ^

How we might quantitatively define “last resort” will have to wait for a future post.

- ^

[This footnote added on 12-30-24] There can be risks that aren’t as “instantaneous” as Russian roulette, such as a certain risk of death per year due to pollution, but the risks I’m talking about here are generally over very short time frames in which aided self-defense may be the only reasonable “last resort” option, as opposed to having the time to readily remove oneself from the risky situation, etc.

- ^

There are tradeoffs to consider in setting the risk threshold. If it were set lower, you might end up killing a villain “unnecessarily,” because it’d be very unlikely that the villain’s “victim” would die if you didn’t intervene. If it were set higher, the victim might die, in which case the villain would have this on their conscience (assuming that the villain had a conscience), and you’d have it on your conscience that you didn’t help in the self-defense of the victim.
