This is an appendix to “Cooperating with aliens and (distant) AGIs: An ECL explainer”. The sections do not need to be read in order; we recommend that you look at their headings and go to whichever ones catch your interest.

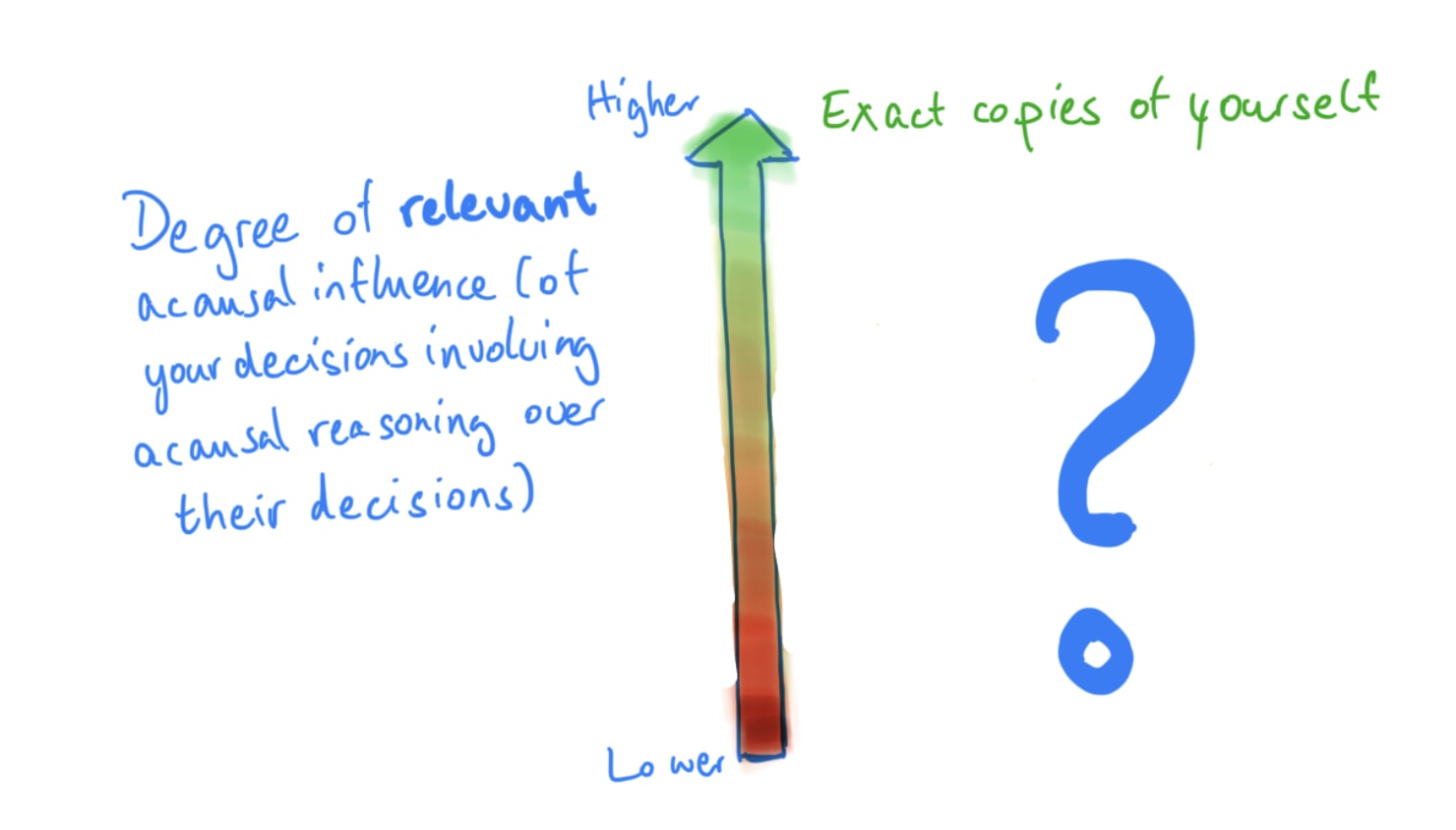

1. Total acausal influence vs. relevant acausal influence

Our ECL explainer touches on an argument for thinking that you can only leverage a small fraction of your total acausal influence over other agents. The argument, in more detail, goes as follows:

We can only leverage our acausal influence by thinking about acausal influence and working out which actions it favors. Otherwise, we just do whatever we would have done in the absence of considering acausal influence. Even if it is in fact the case that, for example, my donating money out of kindness acausally influences others to do the same, I cannot make use of this fact without being aware of acausal influence and reasoning about how my donating will acausally influence others to do the same.

We established, in our explainer, that your acausal influence on another agent’s decision comes from the similarity between your decision-making and their decision-making. However, as soon as you base your decision on acausal reasoning, your decision-making diverges from that of agents who don’t consider acausal reasoning. In particular, even if most of your decision-making is the same, the part of your decision-making that involves acausal reasoning can plausibly only influence the part of the other agent’s decision-making that involves acausal reasoning. This means that one can only acausally cooperate with other agents who take acausal influence seriously. More broadly, one’s total acausal influence on another agent’s decision might differ from one’s relevant acausal influence on them, that is, the part one can strategically use to further one’s goals/values.

2. Size of the universe

(This appendix section follows on from the “Many agents” section of our main post.)

- More on spatial-temporal expanse: Under the current standard model of cosmology (the Lambda cold dark matter model), a single parameter, the universe’s curvature, dictates its spatial (and temporal) extent. Data from the Planck mission shows that our universe’s curvature is very close to zero, implying that the universe is either infinite in extent (if the curvature is zero or negative) or finite and probably in the range from 250 to 10^7 times larger than the observable universe.[1] (The observable universe has a diameter of roughly 93 billion light years. For reference, the diameter of the solar system is 0.0127 light years and the diameter of the Milky Way is roughly 100,000 light years.)

- More on the inflationary multiverse: The standard explanation of the early universe is the inflationary model. In the inflationary model, the big bang occurs after a period of rapid inflation. The leading theory of inflation is eternal inflation.[2] Eternal inflation posits that our universe is one bubble universe in an inflationary multiverse that spawns many bubble universes. Inflation is eternal in at least the future direction,[3] and so there are infinitely many bubble universes. Some of these bubble universes will be much like ours, while most might have very different physics.[4]

- More on Everett branches: In broad strokes, the Everett interpretation says that whenever a quantum process has a random outcome, the universe splits into a number of universes (branches), one for each possible outcome.[5] There are branches that are very similar to ours (these will tend to be branches that only split off from ours recently), and there are branches that are very different (e.g., ones in which Earth never formed). We cannot causally interact with other branches.[6]

In a 2011 survey (p.8), 18% of polled experts cited Everett as their favorite interpretation of quantum mechanics.[7] The community forecast on the Metaculus question “Will a reliable poll of physicists reveal that a majority of those polled accept the many-worlds interpretation by 2050?” currently lies at 38%.
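The size bounds in the “More on spatial-temporal expanse” bullet above are ratios of volume (footnote 1 states them in Hubble volumes, taken there to be the size of the observable universe). As a rough sketch, assuming the full universe scales uniformly in all three dimensions relative to the observable universe, a volume ratio N corresponds to a linear ratio of N^(1/3):

```python
OBSERVABLE_DIAMETER_GLY = 93.0  # diameter of the observable universe, in billions of light years


def linear_factor(volume_ratio: float) -> float:
    """Linear size ratio implied by a volume ratio, assuming uniform scaling in 3D."""
    return volume_ratio ** (1 / 3)


for volume_ratio in (251, 10**7):
    factor = linear_factor(volume_ratio)
    diameter = OBSERVABLE_DIAMETER_GLY * factor
    print(f"{volume_ratio:>10,} volumes -> {factor:6.1f}x wider, ~{diameter:,.0f} billion ly across")
```

Under this assumption, the lower bound of 251 volumes corresponds to a universe roughly 6 times wider than the observable universe, and the upper bound of 10^7 volumes to one roughly 215 times wider.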

3. Size of potential gains from cooperation across light cones

(This appendix section follows on from the “Size of the potential gains” paragraph within the “Net gains from cooperation” section of our explainer post.)

We start with the assumption that we, and some other agents, care about what happens in other light cones. There are a few reasons to think that there are gains to be had from mutual cooperation (assuming perfect cooperation between two agents) compared to everyone just optimizing for their own values. These are: diminishing marginal returns within but not across light cones, comparative advantage, and combinability. (Note that these reasons/categories overlap somewhat.)

3.1 Diminishing marginal returns (in utility) within vs. across light cones

Some values, by their nature, yield lower marginal utility from additional resources invested in their “home” light cone than from the same resources invested in another light cone.[8] For example, if some agent values preservation (preservation of culture, say, or biodiversity), then most of what they care about within their light cone might already be preserved without them spending all their resources.[9] Another example: suffering reduction. An agent who cares about suffering reduction might manage to eradicate most suffering in their light cone and still have resources to spare.

It seems plausible that the first unit of resource in each light cone invested towards these kinds of values helps a lot, with the second unit helping less, and so on. For instance, there is probably low-hanging fruit for significantly reducing suffering or preserving most species, but reducing suffering all the way to zero, or preserving every last species, are likely difficult tasks. If agents with these kinds of values exist in the universe/multiverse, then everyone can gain from cooperating with them, and they also gain from cooperation. Everyone can invest a little of their resources towards these kinds of values (to pick the low-hanging fruit), and in exchange, agents with these values put some—perhaps most—of their resources towards benefitting other values.
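A toy calculation can show why values with diminishing returns within a light cone make this trade attractive to both sides. The sketch below is purely illustrative and rests on made-up assumptions: a hypothetical suffering-reducing agent whose utility in each light cone is the square root of the resources spent there (summed across light cones), a hypothetical agent whose utility is linear in resources spent on its values anywhere, and 10 units of resources per agent in its home light cone.

```python
import math

BUDGET = 10.0  # resources each agent has in its home light cone (hypothetical)


def concave_utility(spend_per_cone: list[float]) -> float:
    """Suffering reducer's utility: sqrt of the resources spent in each light cone, summed."""
    return sum(math.sqrt(x) for x in spend_per_cone)


def linear_utility(total_spend: float) -> float:
    """Linear agent's utility: proportional to resources spent on its values anywhere."""
    return total_spend


# No cooperation: each agent spends its whole budget on its own values at home.
# The reducer's spending is listed as [its home cone, the linear agent's cone].
reducer_alone = concave_utility([BUDGET, 0.0])
linear_alone = linear_utility(BUDGET)

# Cooperation: the linear agent spends 1 unit on low-hanging fruit for the reducer
# in its own light cone; the reducer spends 3 units on the linear agent's values.
gift_to_reducer = 1.0
gift_to_linear = 3.0
reducer_coop = concave_utility([BUDGET - gift_to_linear, gift_to_reducer])
linear_coop = linear_utility(BUDGET - gift_to_reducer + gift_to_linear)

print(f"reducer: {reducer_alone:.2f} -> {reducer_coop:.2f}")
print(f"linear agent: {linear_alone:.2f} -> {linear_coop:.2f}")
```

Both agents end up better off (about 3.16 to 3.65 for the reducer, 10 to 12 for the linear agent). The exact split is arbitrary: under these assumptions, any `gift_to_linear` between 1 and about 5.3 leaves both agents better off than not cooperating.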

3.2 Comparative advantage

One has a comparative advantage at producing A instead of B, compared to some other agent, if the amount of resources one needs to produce A, relative to B, is smaller than the amount of resources the other agent needs to produce A, relative to B. Suppose that I value A, and another agent values B, but I have a comparative advantage over them at producing B (and they have a comparative advantage over me at producing A). In this case, we can both be better off if we each optimize (at least partly) for the other agent’s values instead of only our own. There are a few reasons to expect comparative advantages in the multiverse context:[10]

Different physical conditions. Different agents might face different physical laws (see “More on the inflationary multiverse” in the “Size of the universe” section above). That said, inferring one’s comparative advantages over agents in other universes based on the physical constants in one’s home universe could be challenging.[11]

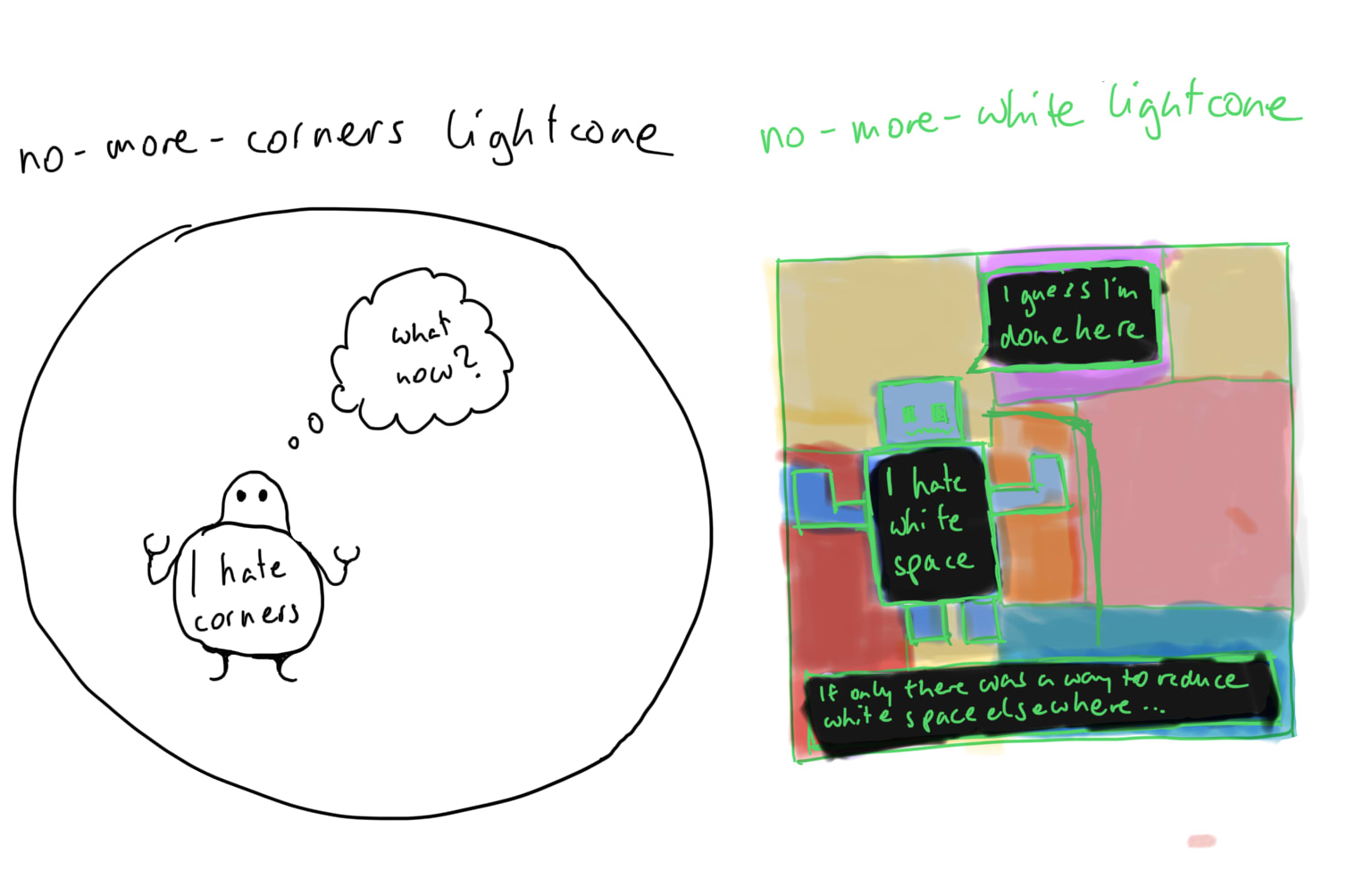

Nonetheless, there might be easier ways to determine one’s comparative advantage based on physical conditions. For instance, different agents might simply have different types of materials available to them.[12] One agent might live in an area with a lot of empty space, while another might have access to dense energy sources. (And it might be fairly easy to realize that you are located in an area that has, say, an unusual frequency of black holes.) If the latter agent values, for example, the creation of a vast, mostly empty place of worship, and the former values computronium tessellation, then they are each better off optimizing for the other’s values.

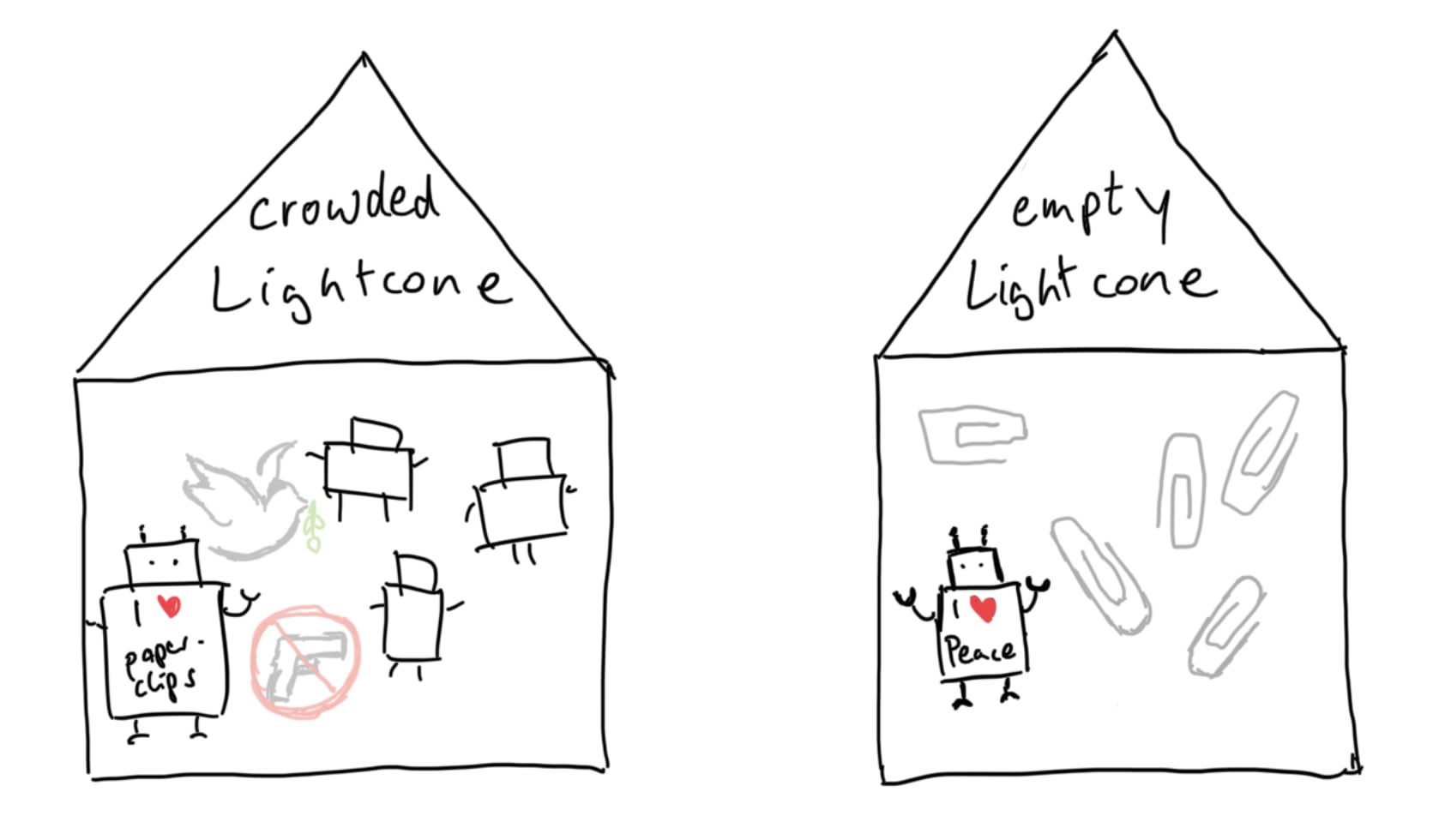

Different neighbors. Different agents might have different numbers and different types of neighbors (aka aliens) in their respective light cones. These factors determine some of the opportunities (i.e., possible cooperative actions) available to any given ECL participant, especially if their neighbors are not ECL participants. For example, an agent in a densely populated and conflict-prone locale is probably well placed to promote peace or preserve cultural diversity. In other words, they possess a comparative advantage in promoting peace or preserving culture. Meanwhile, an agent in a different and perhaps less crowded light cone might have an easier time maximizing paperclips or, more reasonably, hedonium.

Other differences. Many other differences could create comparative advantages. One example is location in time: an agent at the cusp of developing AGI has very different opportunities than an agent close to the heat death of their universe. Even among agents who face similar physical and temporal conditions, there might still be important differences, for instance in the relative ease of building an intent-aligned AGI versus building an AGI that engages in ECL.

Contrary to the above, there is an argument against comparative advantages contributing much to gains from trade. Namely, agents might systematically have a comparative advantage at realizing their own values because their environment shaped their values. This being said, we still expect comparative advantages to be a significant reason for gains from cooperation.
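The definition of comparative advantage at the start of this subsection can be illustrated with hypothetical numbers. In the sketch below, all budgets and costs are made up: I value A, the other agent values B, and the code checks who has the comparative advantage at A by comparing each agent’s resource cost of A relative to B, then compares each agent optimizing for its own values with each optimizing for the other’s.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    budget: float  # total resources available
    cost_a: float  # resources needed per unit of A
    cost_b: float  # resources needed per unit of B

    def relative_cost_of_a(self) -> float:
        """Resources needed for A relative to B; a lower ratio means a comparative advantage at A."""
        return self.cost_a / self.cost_b


me = Agent(budget=12, cost_a=3, cost_b=1)    # I value A (hypothetical numbers)
them = Agent(budget=12, cost_a=1, cost_b=2)  # they value B (hypothetical numbers)

they_have_advantage_at_a = them.relative_cost_of_a() < me.relative_cost_of_a()

# Each agent optimizes only for its own values.
my_a_alone = me.budget / me.cost_a
their_b_alone = them.budget / them.cost_b

# Each agent optimizes for the other agent's values instead.
my_a_swapped = them.budget / them.cost_a
their_b_swapped = me.budget / me.cost_b

print(they_have_advantage_at_a)
print(f"A for me: {my_a_alone} -> {my_a_swapped}")
print(f"B for them: {their_b_alone} -> {their_b_swapped}")
```

With these numbers, swapping triples the amount of A I get (4 to 12) and doubles the amount of B they get (6 to 12). With other numbers, for example if one agent were cheaper at producing both goods, leaving both agents better off might require only a partial shift of resources rather than a full swap.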

3.3 Combinability

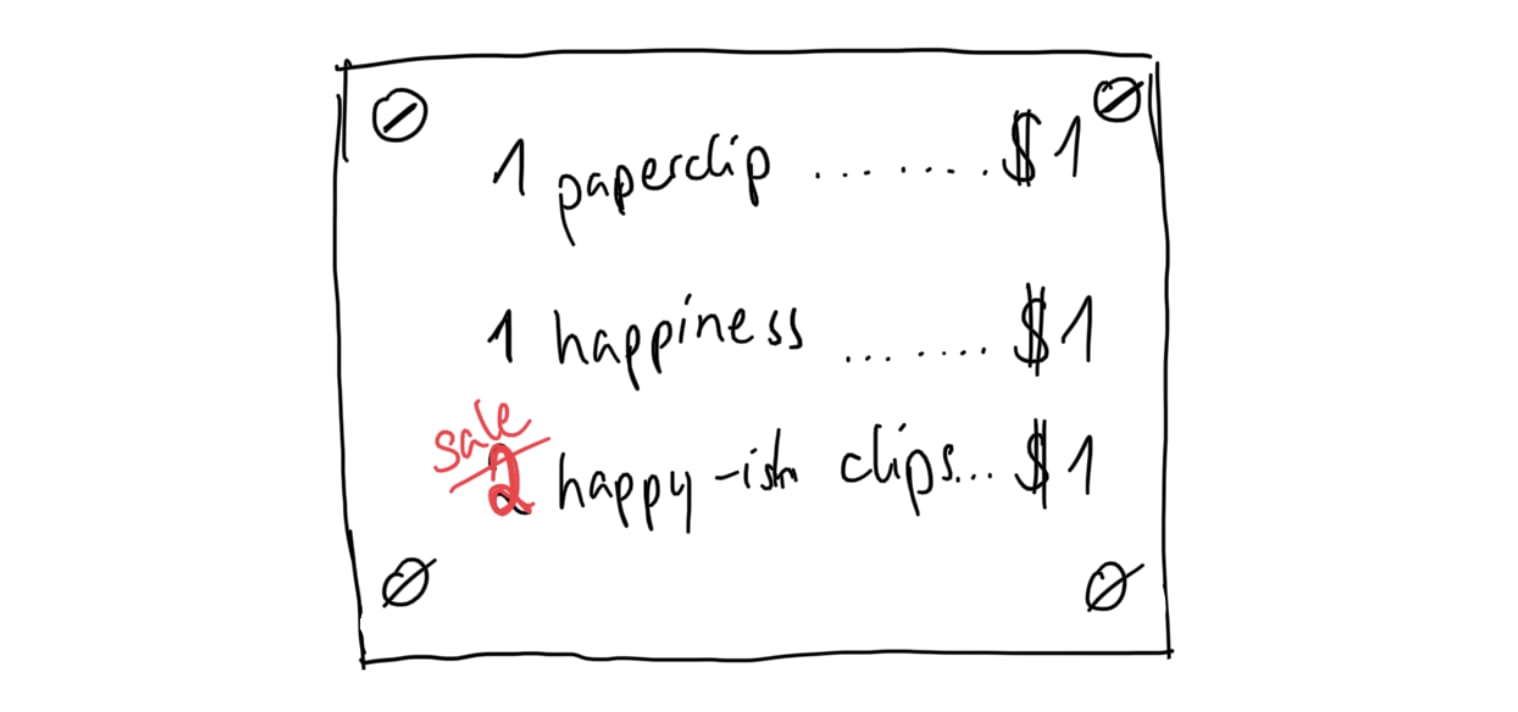

Many values might be easily combinable such that jointly optimizing for them is more efficient than optimizing for them separately. Let’s say, for example, that I can either spend $1 to generate 1 unit of value for me, or $1 to generate 1 unit of value for another agent. Our values are combinable if I can instead spend $1 to generate something where our combined valuation is >1 unit, for example by generating 0.75 units of value for both of us.[13]
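The $1 example can be written out directly. This sketch assumes, as in the example, that each of us has $1 and that the combined option produces 0.75 units for each of us per dollar; “combinable” here simply means that the combined option’s total valuation beats the 1 unit a dollar produces when spent on a single agent’s values.

```python
SEPARATE_VALUE_PER_DOLLAR = 1.0            # a dollar spent purely on one agent's values
COMBINED_VALUE_PER_DOLLAR = (0.75, 0.75)   # a dollar spent on the combined option: (mine, theirs)


def is_combinable(combined: tuple[float, float], separate: float) -> bool:
    """Values are combinable if the combined option's total valuation exceeds spending on one value alone."""
    return sum(combined) > separate


# Each agent has $1. Separately, each spends it on its own values.
mine_separate = 1 * SEPARATE_VALUE_PER_DOLLAR
theirs_separate = 1 * SEPARATE_VALUE_PER_DOLLAR

# Jointly, both dollars go to the combined option, and both agents value its output.
mine_joint = 2 * COMBINED_VALUE_PER_DOLLAR[0]
theirs_joint = 2 * COMBINED_VALUE_PER_DOLLAR[1]

print(is_combinable(COMBINED_VALUE_PER_DOLLAR, SEPARATE_VALUE_PER_DOLLAR))
print(f"me: {mine_separate} -> {mine_joint}, them: {theirs_separate} -> {theirs_joint}")
```

Each agent ends up with 1.5 units instead of 1. If the combined option instead yielded only 0.4 units for each of us, its total (0.8) would fall below 1, and the values would not be combinable in this sense.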

Combining values works particularly well if we have partially overlapping or partially opposed values. In the multiversal context, where direct competition is unlikely, the practical implication is that we can focus more on promoting shared values instead of advocating for values that are more likely contested, such as the precise trade-off between happiness and suffering.

Non-overlapping values can also be combinable. Let’s say that I only value minds that experience athletic achievement, and some other agent only values minds that experience friendship. We are each neutral about a mind that does not experience athletic achievement or friendship, respectively. If together we create two minds that experience both achievement and friendship at the same time, for example, minds that experience winning a team sport with their closest friends, then we plausibly create more [experience of athletic achievement + experience of friendship] per resource invested than if we each created a mind fully optimized for the one type of experience we care about. In this way, both agents benefit from cooperating.

There are values that we cannot combine to make their pursuit more efficient. This is the case if the values we are trying to combine are exactly opposed (e.g., I am a paperclip maximizer and they are a paperclip minimizer), otherwise zero-sum (e.g., I value a specific tile being red and they value that same tile being green), or fully overlapping (i.e., the same values). Fully overlapping values are not combinable in the way defined here because trying to benefit an agent’s values does not change my actions if they have the same values as me.[14]

3.4 Gains from cooperation: Conclusion

Overall, we think large gains from cooperation are likely. Note that this is for agents who are cooperating for sure, i.e., before adjusting for cooperation partners not knowing how to benefit the other parties. (We cover this issue of partners potentially not knowing how to benefit each other in our main post. Our tentative bottom line is that agents will be fairly competent at cooperating.) We don’t have a conclusive view as to the exact magnitude of these potential gains. This could be a worthwhile research direction: a better idea of the magnitude of cooperation gains would help us figure out how much to prioritize ECL.

4. Acausally influencing agents to harm us

(This appendix section follows on from the “Right kind of acausal influence” paragraph within the “Net gains from cooperation” section of our explainer post.)

4.1 Direct harm

If we cooperate, how many agents with different values from us do we acausally influence toward harming us, as compared to benefitting us (the intended effect)?

There are two chief ways in which we could have this unintended effect of influencing agents to harm us if we cooperate. Firstly, our acausal influence on them could be negative (i.e., whatever action we take, they take the opposite action). Secondly, we could acausally influence agents towards cooperating with other agents who have opposite values to us.

The first scenario seems quite implausible, and so we expect negative acausal influence to be rare. For a more in-depth discussion, see Oesterheld (2017a, sec. 2.6.2).

For the second scenario, one might, at first glance, ask oneself the following general question and expect its answer to fully address the concern: Is it generally good, or generally bad, if more acausal cooperation happens in the world, from the perspective of our values? The problem with this question, however, is that even if the answer is “generally bad,” this does not necessarily mean we should not take part in acausal cooperation. The naive argument against cooperating in the “generally bad” scenario goes as follows: “I think more acausal cooperation happening in the world is bad from the perspective of my values. Therefore, I should not acausally cooperate, since refraining should acausally influence other agents to not cooperate.”

The problem is that we probably don’t generally influence other agents to not cooperate if we don’t cooperate, because the situation is not symmetric. For some value systems, more acausal cooperation happening in the world is generally good. So, if our policy is “engage in acausal cooperation if more acausal cooperation happening in the world is generally good, from the perspective of our values; otherwise, don’t,” then agents whose decision-making is very similar to ours will employ a similar policy. In considering the question of whether more acausal cooperation happening in the world is generally good, we acausally influence those other agents to also consider the question. However, this would not lead to everyone acausally cooperating less (or more). Instead, those for whose values acausal cooperation is good, engage in cooperation. Those for whose values acausal cooperation is bad, do not engage in cooperation.

Now, imagine again that acausal cooperation is bad for our values. Presumably, this is the case because it is bad for us if certain other agents, for example those with opposite values to us, participate in acausal cooperation and hence better realize their values (which are opposed to ours). However, if they have opposite values to us, it cannot be the case that cooperation is bad for us and bad for them. Hence, they would continue engaging in acausal cooperation even while we refrain. Therefore, we would end up in a situation where we incur the same cost—agents with opposite values from us cooperating and thus getting more of their values realized across the multiverse—and get none of the benefit from cooperating ourselves.

Hence, we must consider the question, “Is it generally good if more acausal cooperation happens, or generally bad?”, from a perspective that is not partial to our particular values. We need to evaluate the question from some other standpoint—for example, our best guess for the distribution of values across the multiverse. Aside from the fact that it would be quite surprising if acausal cooperation turns out to be bad from that standpoint, choosing to not engage in cooperation if it really is net bad would be in and of itself cooperative, because this choice benefits the aggregate of agents in the world.

The above logic leads us to not be very concerned about direct harm through acausal influence.
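The asymmetry argument in this subsection can be made concrete with a toy model. The sketch below assumes two hypothetical groups with exactly opposite values, “us” and “anti-us”, where anti-us is twice as numerous among potential cooperators, and where each cooperating member realizes one unit of its own group’s values (all numbers are made up). Each group follows the naive policy of cooperating only if everyone cooperating is better, by its own values, than nobody cooperating.

```python
SIZE = {"us": 1.0, "anti_us": 2.0}  # hypothetical relative numbers of cooperators
GAIN = 1.0  # hypothetical value each cooperating member realizes for its own group's values


def value_for(group: str, us_coop: bool, anti_coop: bool) -> float:
    """Outcome judged by `group`'s values: its own group's gains count +1, the opposite group's -1."""
    us_gain = GAIN * SIZE["us"] * us_coop
    anti_gain = GAIN * SIZE["anti_us"] * anti_coop
    return us_gain - anti_gain if group == "us" else anti_gain - us_gain


def naive_policy(group: str) -> bool:
    """Cooperate iff everyone cooperating beats nobody cooperating, by this group's own values."""
    return value_for(group, True, True) > value_for(group, False, False)


us_coop, anti_coop = naive_policy("us"), naive_policy("anti_us")
print(us_coop, anti_coop)                   # whether each group cooperates under the naive policy
print(value_for("us", us_coop, anti_coop))  # our outcome under the naive policy
print(value_for("us", True, anti_coop))     # our outcome if we cooperate anyway
```

Under these assumptions, more cooperation is bad by our values but good by anti-us’s values, so the naive policy has us refrain while anti-us cooperates. Refraining leaves us at -2, whereas cooperating anyway gives -1: we bear the same cost from anti-us cooperating either way and only forgo our own gains.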

4.2 Harm through opportunity cost

Lastly, we turn towards the acausal opportunity cost of us trying to benefit agents with other values. In our main post’s toy model, the acausal cost of us cooperating was that we influenced agents with the same values as us to also act cooperatively instead of causally optimizing for their own (and hence our own) values. More generally, we need to consider the following:

- How high is the (opportunity) cost each agent with our values pays if they cooperate, relative to the benefit they gain when an agent with different values cooperates?
  - This is addressed in the “Size of potential gains” section of this appendix.

- How strong is our acausal influence over agents with our values versus agents with different values?
  - We address this below.

Note: for simplicity, we will continue assuming that agents either have exactly our values or values that are neutral from our perspective.[15]

There are two closely related reasons why our acausal influence on agents with our values might be so much higher than on agents with different values that ECL becomes action-irrelevant. Firstly, you might think that acausal influence is fragile, such that the only agents you have influence over are close copies of yourself.[16] People with this opinion might think that acausal influence drops off sharply going from “exact copies” to “agents who endorse the same decision theory but who are in slightly different situations” or “agents who endorse the same decision theory but are in different moods that bias their reasoning one way or another.”

Secondly, you might think that while you do have acausal influence over agents who are quite different from you, you systematically have much higher acausal influence over agents who share your values. Relevant acausal influence flows through similarity in the way decisions are made, and an agent’s decision-making might be heavily shaped by factors that also shape their values. (Alternatively, values and decision-making might directly and heavily shape each other.) Typically, this view is paired with a fragile conception of acausal influence (i.e., the first reason).

On the other hand, some people think it’s sufficient for two agents to follow the same formal decision theory to establish significant acausal influence. If this is the case, observing that humans with different values seem to share views on decision theory would be enough to establish significant acausal influence on agents with different values. See for example Oesterheld (2017a, sec. 2.6.1). Typically, this view is paired with thinking that acausal influence is fairly commonplace. As discussed in our main post, one reason to think this is that the differences between two agents’ situations might not matter given that they both face the same abstract decision: whether to commit to a policy of cooperation.

How high the ratio of acausal influences can be for ECL to still be action-relevant (i.e., to still recommend “cooperate”) depends on how many agents with our values vs. other values there are, and how much agents can benefit those with other values (compared to how much they can benefit themselves). To illustrate: In our main post’s model, our acausal influence over agents who share our values is twice as strong as that over agents with different values, and the economic value produced by cooperating is three times higher than that produced by defecting. Therefore, cooperation results in a ~1.5x higher payoff than defection. (Reminder: The numbers in our model are just toy numbers to illustrate how different parameters influence what we should do. They are not rigorous estimates.)
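The ~1.5x figure can be reproduced as a simple ratio. This sketch is one hypothetical reading of the toy model, not the main post’s exact formulation: it assumes that defecting influences agents with our values to produce 1 unit of value for us each, that cooperating influences agents with other values to produce `gain_multiplier` units for us each, and that each contribution is weighted by the corresponding acausal influence and number of agents.

```python
def coop_vs_defect(influence_same: float, influence_diff: float, gain_multiplier: float,
                   n_same: float = 1.0, n_diff: float = 1.0) -> float:
    """Ratio of the payoff from cooperating to the payoff from defecting (above 1 favors cooperating)."""
    # Defect: we influence agents with our values to optimize directly for those values (1 unit each).
    defect_payoff = influence_same * n_same * 1.0
    # Cooperate: we influence agents with other values to produce `gain_multiplier` units for us each.
    coop_payoff = influence_diff * n_diff * gain_multiplier
    return coop_payoff / defect_payoff


print(coop_vs_defect(influence_same=2.0, influence_diff=1.0, gain_multiplier=3.0))  # 1.5
```

In this reading, cooperating wins whenever the gain multiplier, scaled by the ratio of agents with other values to agents with our values, exceeds the ratio of acausal influences. With equal numbers of agents and a 2:1 influence ratio, the break-even multiplier is 2.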

- ^

Vardanyan, Trotta and Silk (2011) find, with 99% probability, that the full universe is at least 251 times larger than the observable universe, and also find, with 95% probability, that the full universe is at least 1000 times larger than the observable universe. Their findings do assume that matter/energy is uniformly distributed, but this is a widely accepted view in cosmology (see, e.g., Wikipedia, n.d.).

In a different paper (2009), the same authors find that if the universe is spatially finite, then it's actually quite small: at most 10^7 Hubble volumes. (A Hubble volume is the size of the observable universe, i.e., a volume equivalent to roughly 10^19 Milky Ways; the observable universe contains on the order of 10^22 to 10^24 stars.)

- ^

We could not find a survey that establishes that this is in fact the leading theory. The claim is, however, in line with the experience of one of the authors, Will, who studied cosmology at the undergraduate level and has talked to several cosmology professors.

- ^

- ^

Due to different physical constants. This is noteworthy because a multiverse whose bubble universes have different physical constants is a solution (the most popular one) to the fine-tuning problem.

- ^

Technically, there’s no intrinsic discreteness to quantum wavefunction evolution, and so at the fundamental level it’s really Everett smears rather than Everett branches. An observer can dissect a smear and analyze the slices, so to speak, but if the slices are too thin then they will find that the slices still interfere with each other.

- ^

Technically, branches can “join up” again (or, rather, smear slices that are far enough apart to not be interfering can start interfering again), but this is extremely unlikely.

- ^

There have been surprisingly few surveys on quantum mechanics interpretations. We cite the one that seems the most reliable (i.e., sample size on the large end; participants are indeed experts; survey appears to be impartial). My—Will’s—impression based on informal conversations with professional cosmologists is that many-worlds has gained support over the years, and that support for the original Copenhagen interpretation has been declining. Moreover, the few surveys that do exist, including the one we cite in the main text, don’t quite capture what we are most interested in. The most common objections to many-worlds are methodological and/or epistemic—“many-worlds is untestable, so the other branches are not worth thinking about”—rather than ontic—“I actively believe that these other branches don’t exist” (Tegmark, 2010).

- ^

Diminishing returns to resources invested in producing a good are commonplace on Earth. Concave (i.e., diminishing-returns) production functions are the norm in the economics literature. Goods whose production cost grows only linearly with quantity (or, further still, that become cheaper to produce the more of them already exist) are rare. Hence, we discuss examples where diminishing returns are a feature. However, it’s not clear that this will hold for advanced civilizations leveraging atomically precise manufacturing to produce things like computronium or hedonium.

- ^

We can also imagine much more exotic values that have diminishing returns in utility within light cones but not across light cones: For example, an agent might care about there being at least one elephant in as many light cones as possible. However, on the face of it, these values seem likely to be rare and hard to predict. On the other hand, the agents who hold them would be very attractive cooperation partners!

- ^

Arguably, diminishing marginal returns within but not across light cones can be categorized as a source of comparative advantage.

- ^

To do this, one might need to simulate other bubble universes. And these simulations might need to be very accurate, on account of how sensitive the features of a universe are to its parameters (and initial conditions). Additionally, modern string theory suggests that there are something like 10^500 different possible combinations of parameters. This could make it challenging to identify one’s (and others’) comparative advantages, if one has to run very many simulations just to obtain a representative sample of possible universes.

However, there might be workarounds where you get most of the information from randomly selecting a universe to simulate. Various people have informally suggested related ideas to get around computational constraints on simulations.

- ^

Though note that according to the Lambda cold dark matter model, the universe is homogeneous on scales larger than 250 million light-years, which is much smaller than the radius of our observable universe, 46.5 billion light-years.

- ^

Note that for this deal to work, we do not need the ability to compare utility functions. However, comparing utility functions is potentially needed to agree on a specific deal when many different deals are possible.

- ^

That said, we could maybe still gain from coordination or epistemic cooperation.

- ^

In reality, the situation is a bit more complicated than this. Not only are there likely to be agents who only partially share our values, there might also be agents who have (partially) opposite values to us. We don’t think that partially shared values would change the picture very much. In fact, partially shared values might sometimes improve our ability to cooperate, especially if there is a mix of opposed and shared values. Similarly, if we have partially opposite values to another agent, we can cooperate by concentrating on the parts of our goals that are not opposed to each other. However, the situation is more complicated when there are agents who have exactly opposite values to each other. There are a few (sketchy, internal, back-of-the-envelope) attempts to analyze this situation, but we are not confident in their conclusions.

- ^

This concern pertains especially to functional decision theory and other decision theories that run on logical causation rather than correlation. For more, see Kollin’s “FDT defects in a realistic Twin Prisoners' Dilemma”: While proponents of functional decision theory (FDT) “want” FDT to entail pretty broad forms of cooperation (e.g., the sort of cooperation that drives ECL), Kollin points out that FDT might not actually entail such cooperation. The implication is that FDT proponents might need to put forward a new decision theory that does have the cooperation properties they find desirable, or explain why FDT can cooperate. (Anthony DiGiovanni adds: “Even if you’re more sympathetic to evidential decision theory than to logical causation-based views, you may still have the concern that what constitutes ‘correlation’ between agents’ decisions is an open question. It seems plausible to me the only kind of acausal action-relevant correlations that survive conditioning on the right information come from logical identities.”)
