{Epistemic Status: Repeating critiques from David Thorstad’s excellent papers (link, link) and blog, with some additions of my own. The list is not intended to be representative and/or comprehensive for either critiques or rebuttals. Unattributed graphs are my own and more likely to contain errors.}

I am someone generally sympathetic to philosophical longtermism and total utilitarianism, but like many effective altruists, I have often been skeptical about the relative value of actual longtermism-inspired interventions. Unfortunately, though, for a long time I was unable to express any specific, legible critiques of longtermism other than a semi-incredulous stare. Luckily, this condition has changed in the last several months since I started reading David Thorstad’s excellent blog (and papers) critiquing longtermism.[1] His points cover a wide range of issues, but in this post, I would like to focus on a couple of crucial and plausibly incorrect modeling assumptions Thorstad notes in analyses of existential risk reduction, explain a few more critiques of my own, and cover some relevant counterarguments.

Model assumptions noted by Thorstad

1. Baseline risk (blog post)

When estimating the value of reducing existential risk, one essential – but non-obvious – component is the ‘baseline risk’, i.e., the total existential risk, including risks from sources not being intervened on.[2]

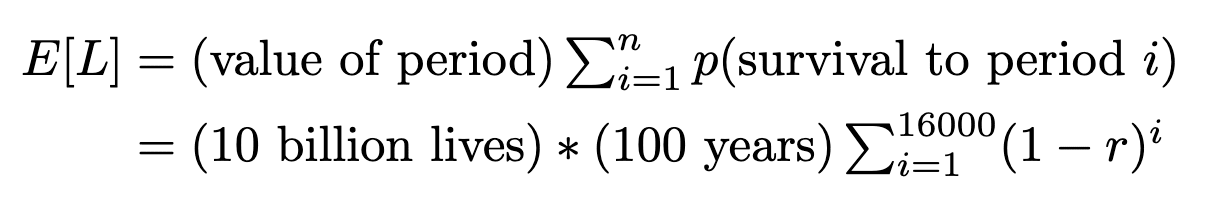

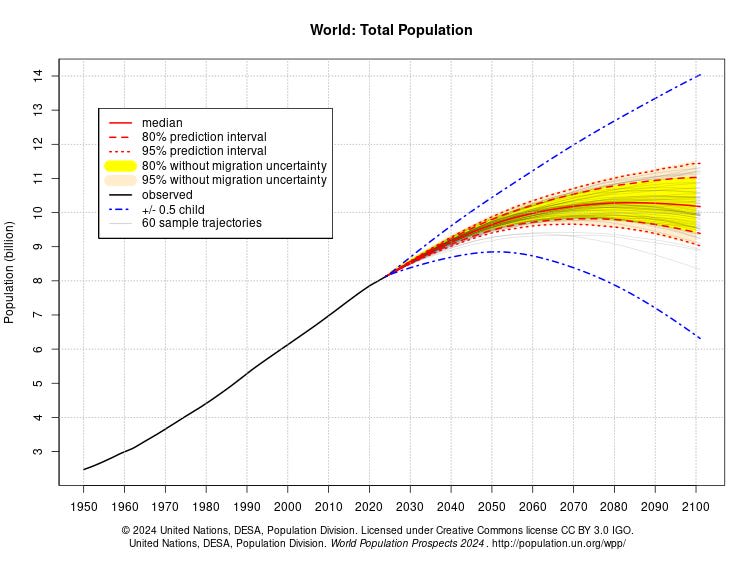

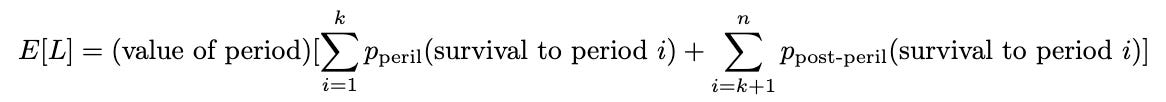

To understand this, let’s start with an equation for the expected life-years E[L] in the future, parameterized by a period existential risk (r), and fill it with respectable values:[3]
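As a rough sketch, one way to write such a model in code is below. It assumes the constants described in footnote 3 (10 billion people per century, so 10^12 life-years per century, over 16,000 centuries) and a geometric survival form in the spirit of Three Mistakes in Moral Mathematics of Existential Risk. The function and constant names are my own illustrative choices and may not match the linked repository.

```python
LIFE_YEARS_PER_CENTURY = 10e9 * 100   # assumed: 10 billion people, 100 life-years each per century
N_CENTURIES = 16_000                  # assumed: 1.6 million years split into centuries


def expected_life_years(r, n=N_CENTURIES, v=LIFE_YEARS_PER_CENTURY):
    """E[L] = v * sum_{i=1..n} (1 - r)^i for a constant per-century risk r."""
    if r == 0:
        return v * n  # no risk: every century is reached with certainty
    q = 1.0 - r
    return v * q * (1.0 - q ** n) / r  # closed form of the geometric sum


for r in (0.0001, 0.001, 0.01, 0.1):
    print(f"r = {r:<6}  E[L] ~ {expected_life_years(r) / 1e12:,.0f} trillion life-years")
```

For risks well above 1/n, the sum is close to v(1 - r)/r, which is why the expected future shrinks roughly in proportion to 1/r.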

Now, to understand the importance of baseline risk, let’s start by examining an estimated E[L] under different levels of risk (without considering interventions):

Here we can observe that the expected life-years in the future drop off substantially as the period existential risk (r) increases, and that the decline (slope) is steeper at smaller period risks than at larger ones. This finding might not seem especially significant, but if we use this same analysis to estimate the value of reducing period existential risk, we find that the value drops off in exactly the same way as baseline risk increases.

Indeed, if we examine the graph above, we can see that differences in baseline risk (0.2% vs. 1.2%) can potentially dominate tenfold (1% vs. 0.1%) differences in absolute period existential risk (r) reduction.
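The comparison can be checked with a small sketch. It assumes a constant per-century risk, a reduction that applies equally in every century, and the constants from footnote 3 (10^12 life-years per century, 16,000 centuries). The function names are hypothetical.

```python
def expected_life_years(r, n=16_000, v=1e12):
    """Expected life-years with constant per-century risk r (assumed model)."""
    q = 1.0 - r
    return v * n if r == 0 else v * q * (1.0 - q ** n) / r


def value_of_reduction(baseline, reduction):
    """Gain in E[L] from lowering per-century risk by `reduction` in every century."""
    return expected_life_years(baseline - reduction) - expected_life_years(baseline)


small_cut_low_risk = value_of_reduction(baseline=0.002, reduction=0.001)
big_cut_high_risk = value_of_reduction(baseline=0.012, reduction=0.010)
print(f"0.1% cut at 0.2% baseline: {small_cut_low_risk / 1e12:,.0f} trillion life-years")
print(f"1.0% cut at 1.2% baseline: {big_cut_high_risk / 1e12:,.0f} trillion life-years")
```

Under these assumptions the 0.1% reduction at the low baseline is worth about 500 trillion life-years, while the tenfold larger reduction at the high baseline is worth about 417 trillion.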

Takeaways from this:

(1) There’s less point in saving the world if it’s just going to end anyway. Which is to say that pessimism about existential risk (i.e. higher risk) decreases the value of existential risk reduction because the saved future is riskier and therefore less valuable.

(2) Individual existential risks cannot be evaluated in isolation. The value of existential risk reduction in one area (e.g., engineered pathogens) is substantially impacted by all other estimated sources of risk (e.g. asteroids, nuclear war, etc.). It is also potentially affected by any unknown risks, which seems especially concerning.

2. Future Population (blog post)

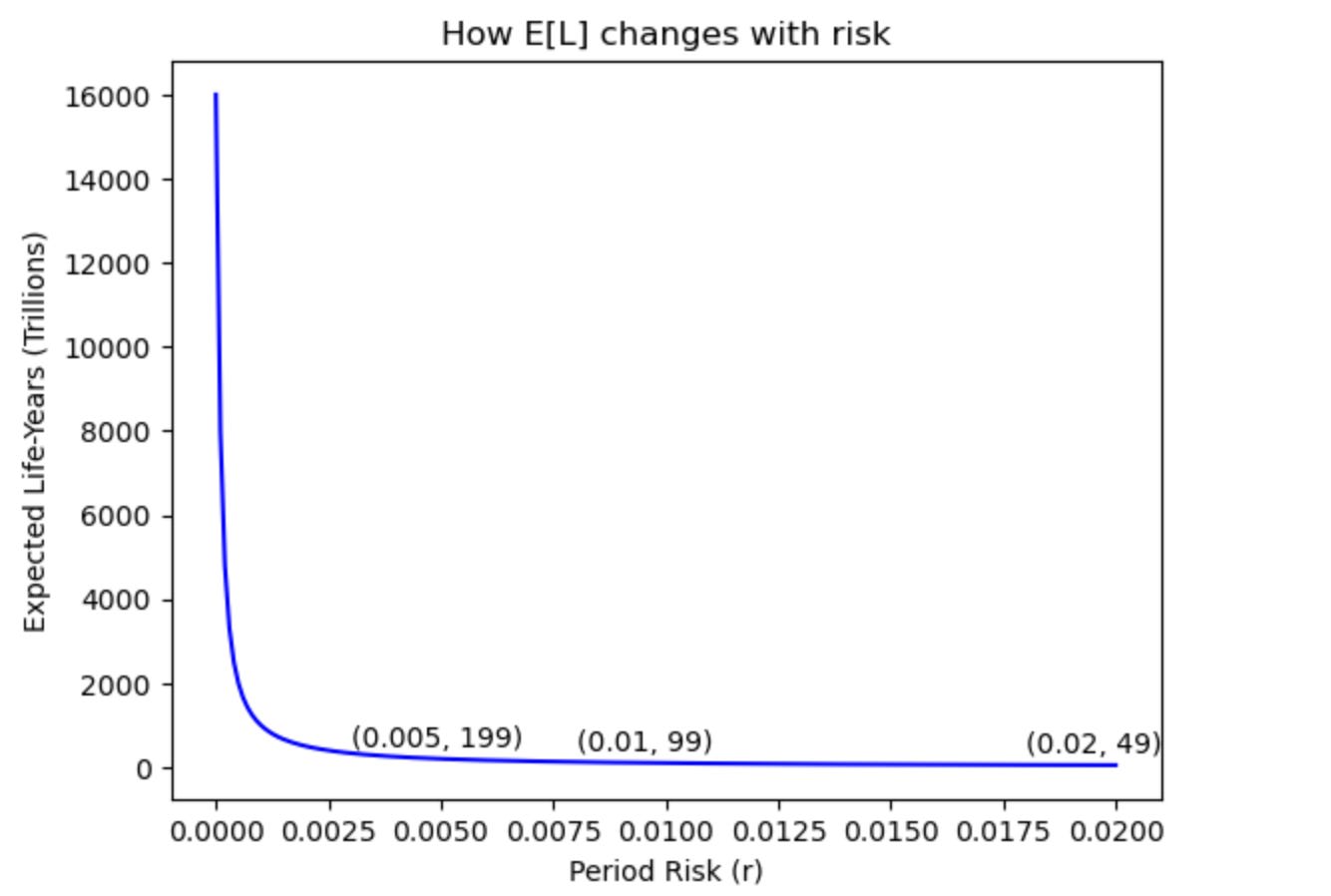

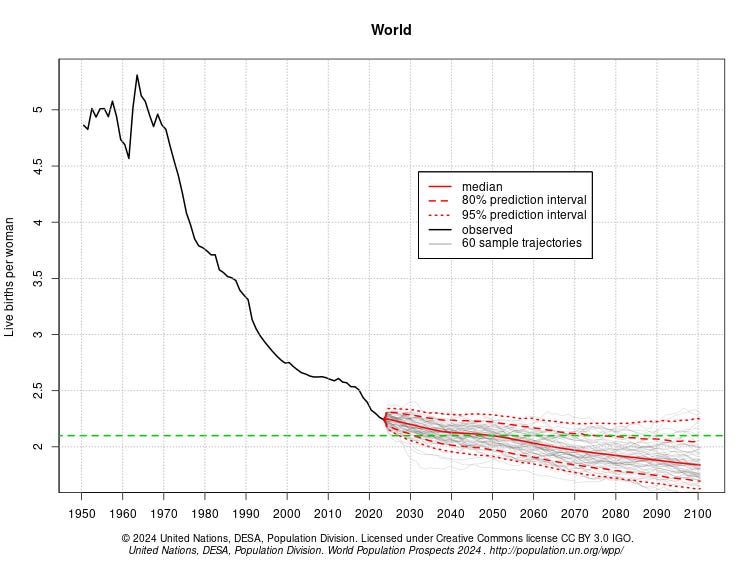

When calculating the benefits of reduced existential risk, another key parameter choice is the estimate of future population size. In our model above, we used a superficially conservative estimate of 10 billion for the total future population every century. This might seem like a reasonable baseline given that the current global population is approximately 8 billion, but once we account for current and projected declines in global fertility, this assumption shifts from appearing conservative to appearing optimistic.

United Nations modeling currently projects that global fertility will fall below replacement rate around 2050 and continue declining from there. The effect is that total population is expected to begin dropping around 2100.

If you take the U.N. models and try to extrapolate them out further, the situation begins to look substantially more dire since fertility declines below replacement compound each generation to quickly shrink populations.

Takeaways from this:

(1) Current trends suggest that future populations may be substantially smaller than the current population. Neither a growing nor a constant future population can be presumed as the default outcome.

(2) The fewer people there are in the future, the less value there is in saving that future. This effect can plausibly drop the value of existential risk reduction by nearly an order of magnitude (from 10 billion or more lives per century to 2 billion or fewer lives) on conservative estimates.[4] Decreases can reach multiple orders of magnitude if original estimates are larger, such as from assuming population reaches carrying capacity.
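To illustrate how below-replacement fertility compounds, here is a deliberately crude sketch. The fertility rate of 1.6, the replacement rate of 2.1, and the starting population are hypothetical inputs rather than U.N. figures, and the model ignores age structure, mortality changes, and migration.

```python
def population_path(start, tfr, generations, replacement=2.1):
    """Population after each generation if fertility stays at `tfr` (assumed constant)."""
    ratio = tfr / replacement  # each generation is this fraction of the previous one
    return [start * ratio ** g for g in range(generations + 1)]


# Hypothetical inputs, not UN figures: 10 billion people and a fertility rate of 1.6.
path = population_path(start=10e9, tfr=1.6, generations=6)
for g, pop in enumerate(path):
    print(f"generation {g}: {pop / 1e9:.1f} billion")

# In a constant-population model, value scales linearly with population size,
# so a fall from 10 billion to 2 billion cuts the value of risk reduction by 5x.
print(f"value multiplier at 2 billion: {2e9 / 10e9:.1f}")
```

With these made-up inputs the population falls from 10 billion to roughly 2 billion within six generations, which is the scale of decline discussed in takeaway (2).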

Other model assumptions/considerations I think are important

1. Intervention decay

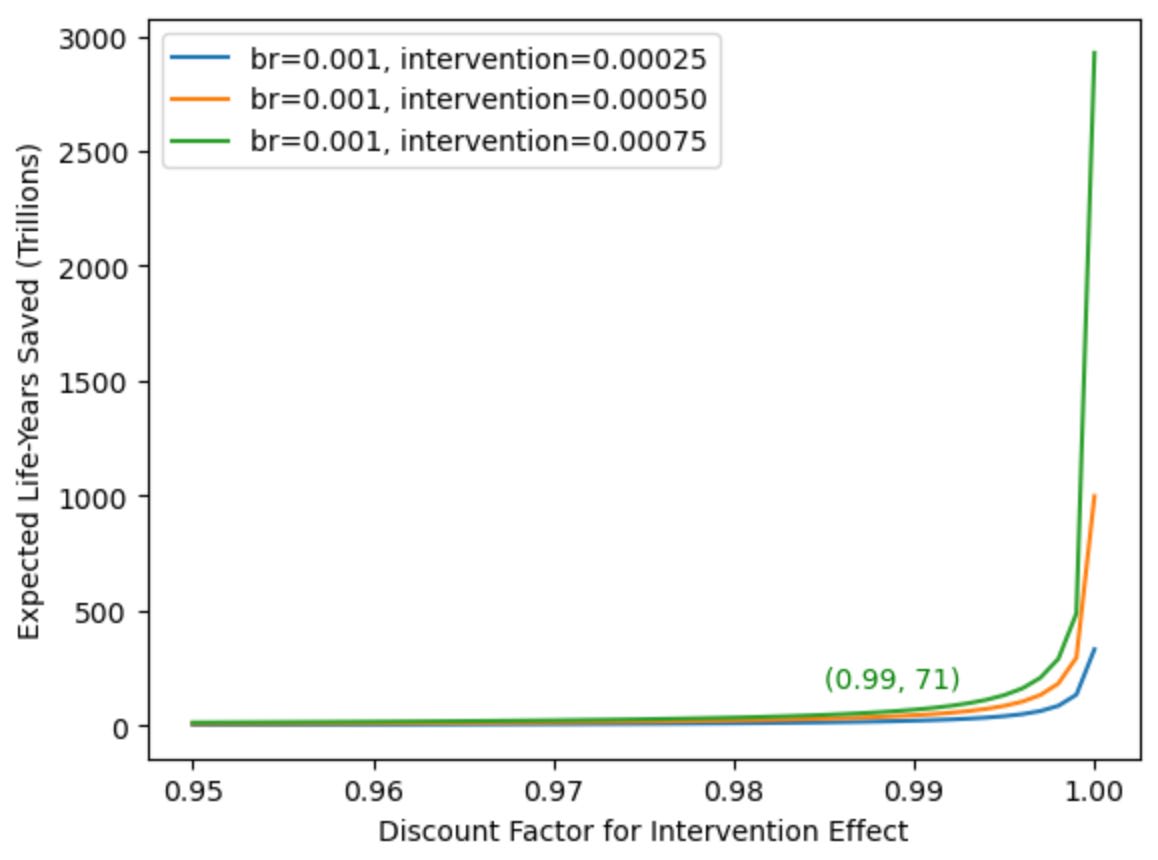

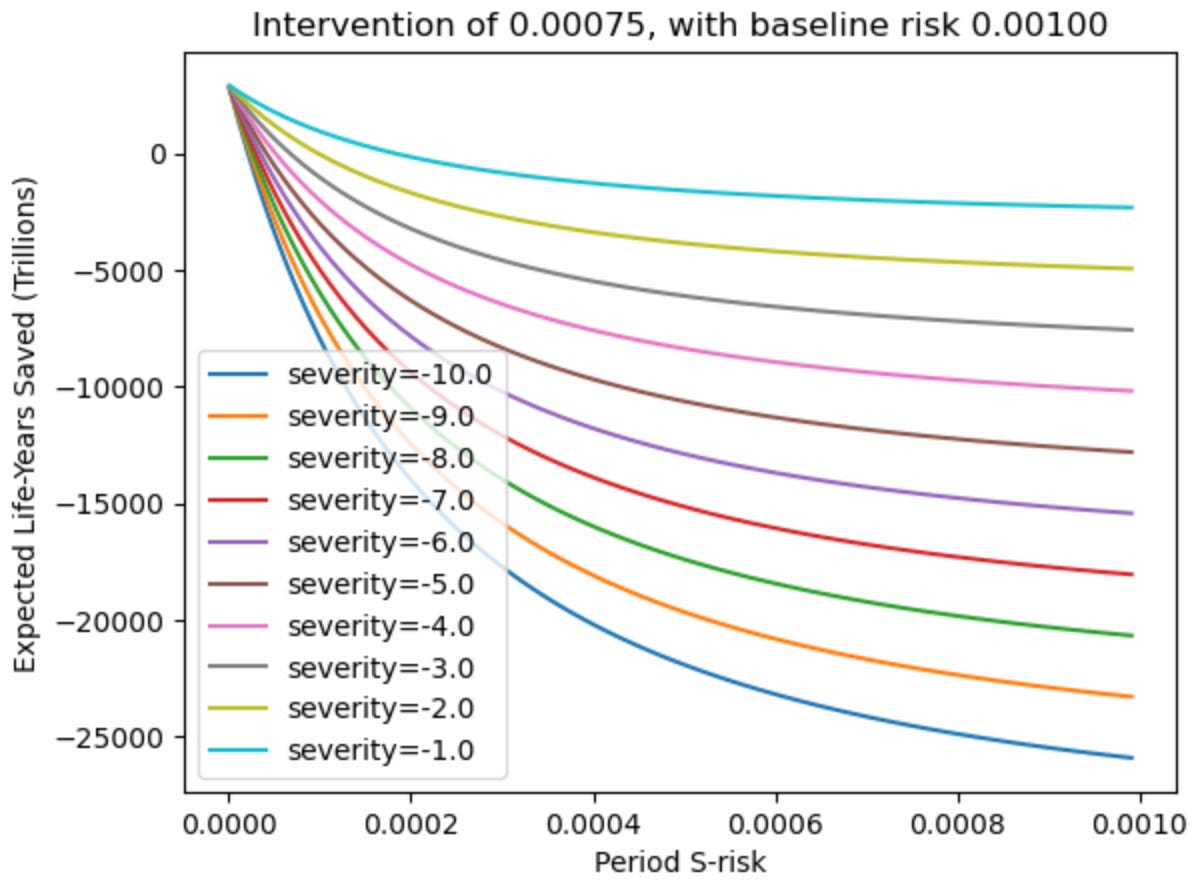

One important assumption made in the model above is that an intervention reduces period existential risk uniformly across centuries. This is an extremely implausible assumption. In order to understand the impact of this assumption, let’s model the decay of intervention effects with a constant discount factor each period.

We can then see the effect of changing this new parameter on the value of existential risk reduction on our original model.

Looking at these estimate graphs, it seems clear that intervention decay has a substantial negative effect on the value of existential risk reduction, even in very optimistic scenarios. Moving from no discount to a discount of 0.99 per century can result in an estimated value decrease in the range of 80-97% (as seen above).

Takeaways from this:

(1) Introducing even very small intervention decay into our model can result in extremely diminished value of existential risk reduction.

(2) Intervention decay is likely to be much more severe for at least two reasons. First, continual effects require extremely strong inter-generational coordination or lock-in. Second, the risks faced by future civilizations are likely to be compositionally different from current risks. As a result, we should expect interventions today to have progressively less impact since new dangers will grow as a proportion of risk.
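A minimal sketch of intervention decay, assuming the risk reduction shrinks by a constant factor each century while baseline risk stays fixed. The parameter values (0.2% baseline, 0.1% initial reduction) and function names are illustrative assumptions, not necessarily those behind the graphs.

```python
def expected_life_years_path(risks, v=1e12):
    """E[L] for a sequence of per-century risks (one entry per century)."""
    total, survival = 0.0, 1.0
    for r in risks:
        survival *= 1.0 - r   # probability of surviving through this century
        total += v * survival
    return total


def value_with_decay(baseline, reduction, decay, n=16_000):
    """Value of an intervention whose risk reduction shrinks by `decay` each century."""
    treated = [baseline - reduction * decay ** i for i in range(n)]
    untreated = [baseline] * n
    return expected_life_years_path(treated) - expected_life_years_path(untreated)


no_decay = value_with_decay(baseline=0.002, reduction=0.001, decay=1.0)
slow_decay = value_with_decay(baseline=0.002, reduction=0.001, decay=0.99)
print(f"value lost to decay: {1 - slow_decay / no_decay:.0%}")
```

For these inputs the loss comes out at roughly nine-tenths of the undecayed value, inside the 80-97% range quoted above. The intuition is that with a 0.99 factor the cumulative risk reduction is capped at about 100 centuries' worth of the initial effect.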

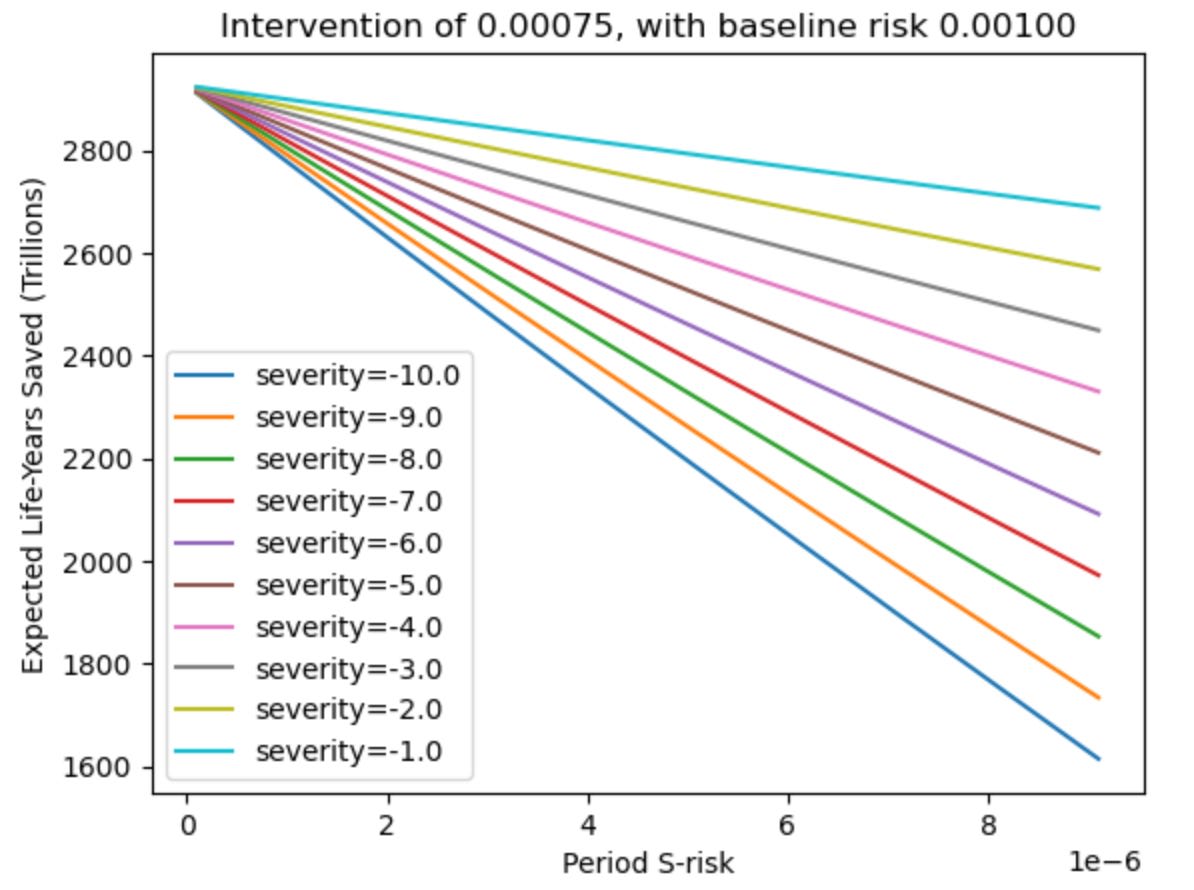

2. Suffering risks (S-risks)

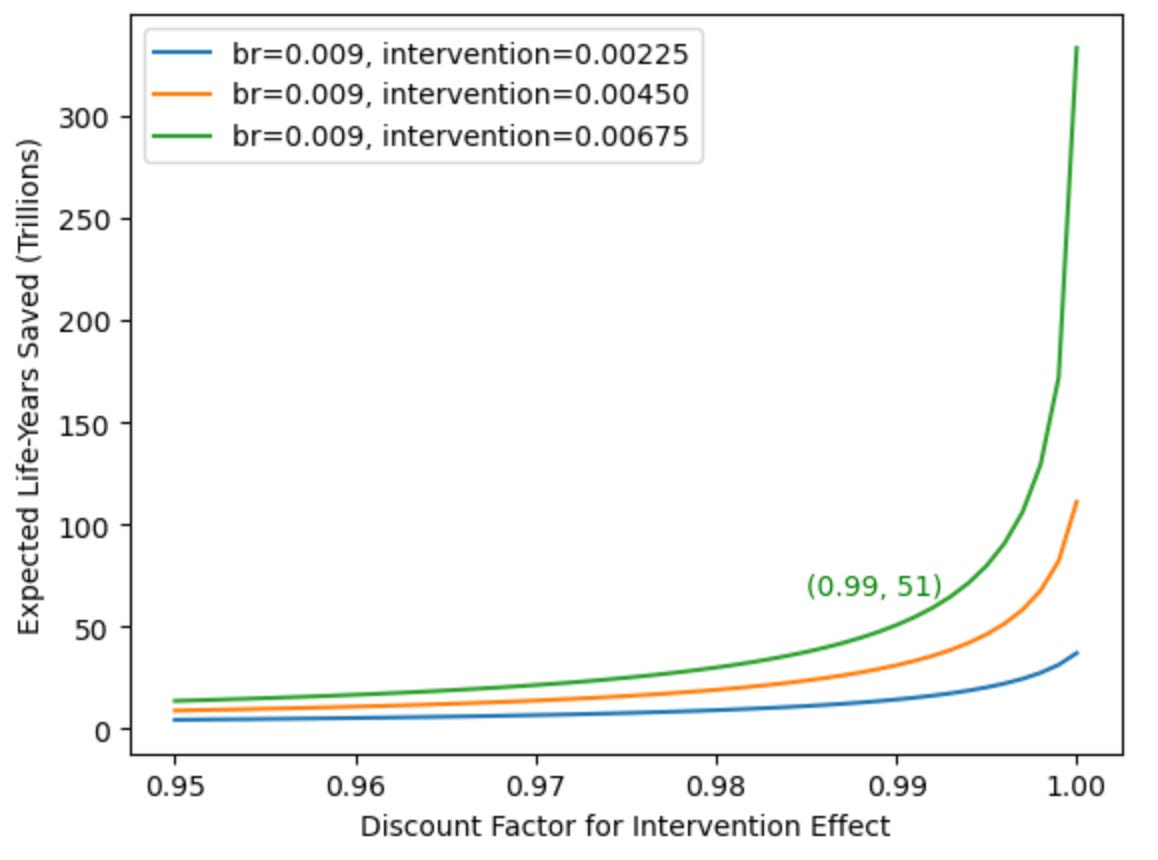

In the original model, the value of an existing future is always positive, but this is obviously not guaranteed. Although mostly speculative, it is important to also take account of S-risks such as mass expansion of factory farming or unending and catastrophic war. To see the impact of these risks on our model, we can add two parameters, the probability of an S-risk and its severity, and see how they affect the value of existential risk mitigation. Here is the revised model:[5]
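One way such a model could be sketched is as a three-state process (normal, S-risk, extinct). The assumptions here are mine: the S-risk state is absorbing (as in footnote 5), it still faces the same extinction risk r, and each century spent in it is worth minus `severity` times a normal century. Names and parameter values are hypothetical.

```python
def expected_value_with_s_risk(r, p, severity, n=16_000, v=1e12):
    """Expected value when each surviving 'normal' century can tip into an S-risk state.

    Assumptions of this sketch: the S-risk state is absorbing, still faces
    extinction risk r, and is worth -severity * v per century.
    """
    normal, s_state, total = 1.0, 0.0, 0.0
    for _ in range(n):
        # Survivors already in the S-risk state, plus those entering it this century.
        s_state = (s_state + normal * p) * (1.0 - r)
        # Survivors that remain in the normal state.
        normal = normal * (1.0 - p) * (1.0 - r)
        total += v * (normal - severity * s_state)
    return total


def value_of_reduction(baseline, reduction, p, severity):
    """Gain from lowering extinction risk by `reduction` in every century."""
    return (expected_value_with_s_risk(baseline - reduction, p, severity)
            - expected_value_with_s_risk(baseline, p, severity))


for p in (0.0, 1e-6, 1e-4):
    gain = value_of_reduction(0.002, 0.001, p, severity=10)
    print(f"S-risk probability {p:g}: value of reduction ~ {gain / 1e12:,.1f} trillion")
```

With p set to zero this collapses to the original model. As p or severity grows, the value of extinction risk reduction falls and can even turn negative, since a longer future means more time spent in the bad state.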

Now we can take a look at the model outputs.

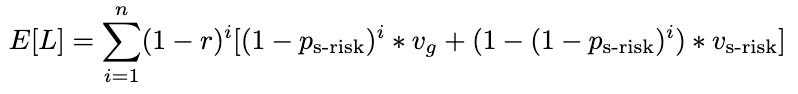

First we have the super scary graph:

Looking at this graph you can observe the obvious point that if either the severity or period probability of an S-risk increases, then (all else held equal) the value of existential risk reduction decreases. Of course, while this graph gives a good view of the general distribution shape, my expectation is that both the probabilities and severities depicted are misleadingly large. For more conservative outputs, we can zoom in to the top left corner of the graph:

Takeaways from this:

(1) Unlike the previous items mentioned in this post, the question of whether S-risks have a strong effect on the benefits of existential risk reduction is debatable. It seems plausible to me that reasonable people would mostly agree that per-century risk is in the ‘one in a million or less’ bucket, but your priorage may vary. Regarding the severity of S-risks, I am considerably more uncertain, but I think the outcomes modeled above suggest that higher probabilities are more concerning than greater severity at the current margin.

(2) As before, the effect of interventions is strongly affected by baseline risk, and the impact of S-risks can be highly variable across (intervention, baseline risk) pairs for larger period S-risk probabilities. The variance is substantially smaller at low probabilities.

Counter-arguments for the value of existential risk reduction

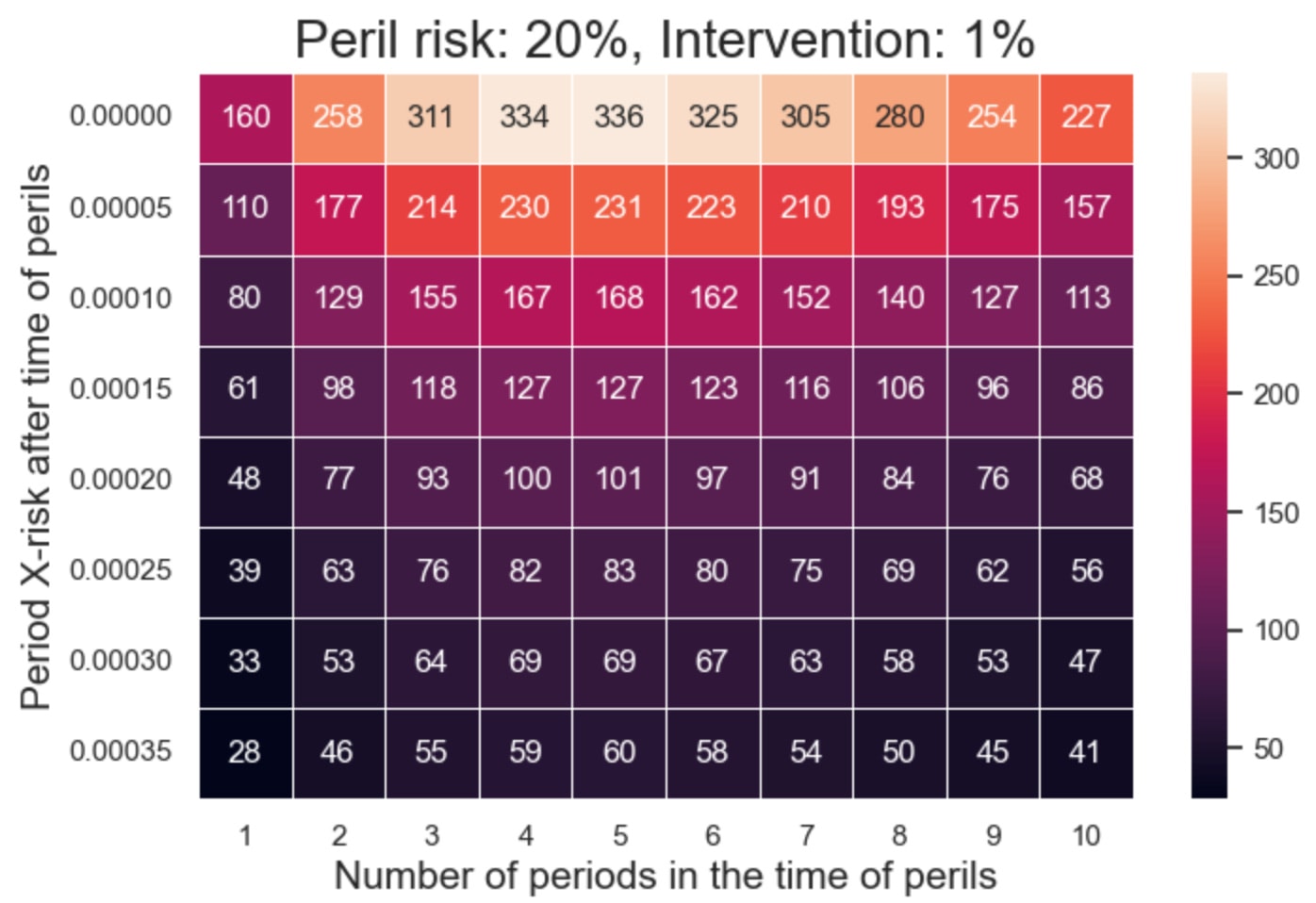

1. The ‘time of perils hypothesis’ versus ‘baseline risk’ and ‘intervention decay’

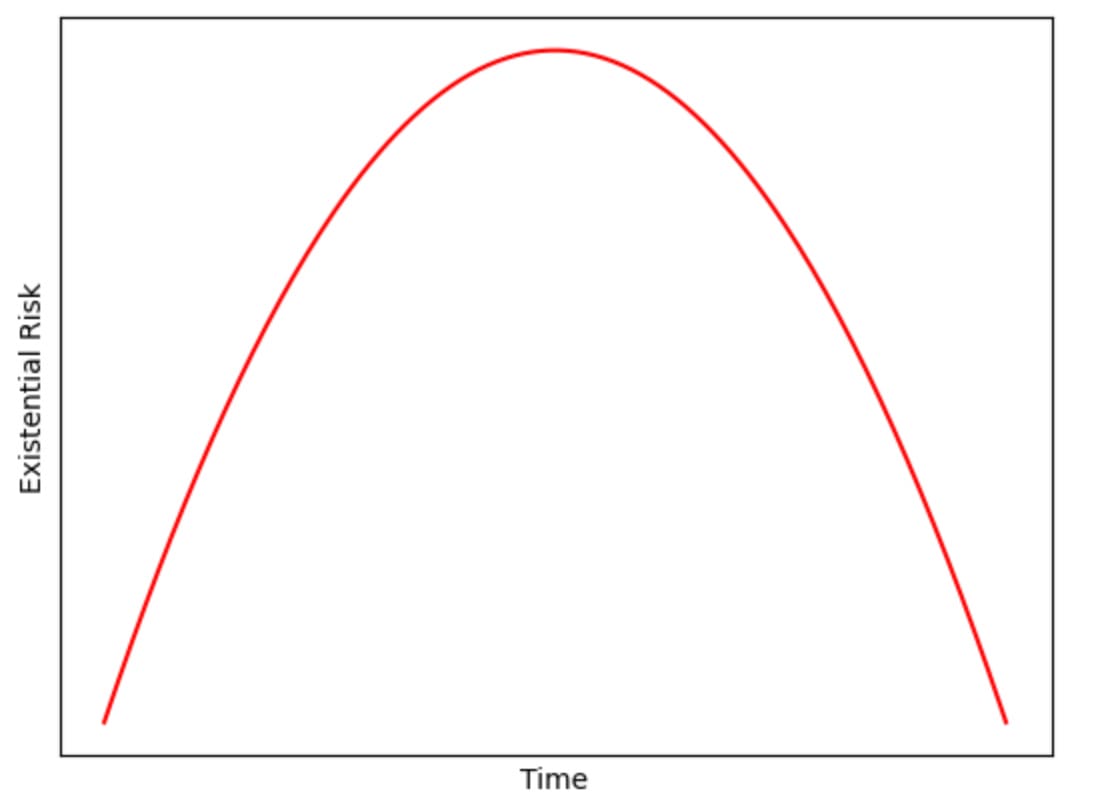

The baseline risk assumption highlighted above seems to suggest that high existential risk estimates should decrease the expected value of existential risk reduction. Despite this, longtermist analysis often suggests there is both a high existential risk and a high value to longtermist interventions. This seems paradoxical until you account for the ‘time of perils hypothesis,’ which suggests that existential risk may be high and/or increasing right now, but that as civilization improves, risk will fall to extremely low levels following a Kuznets curve.

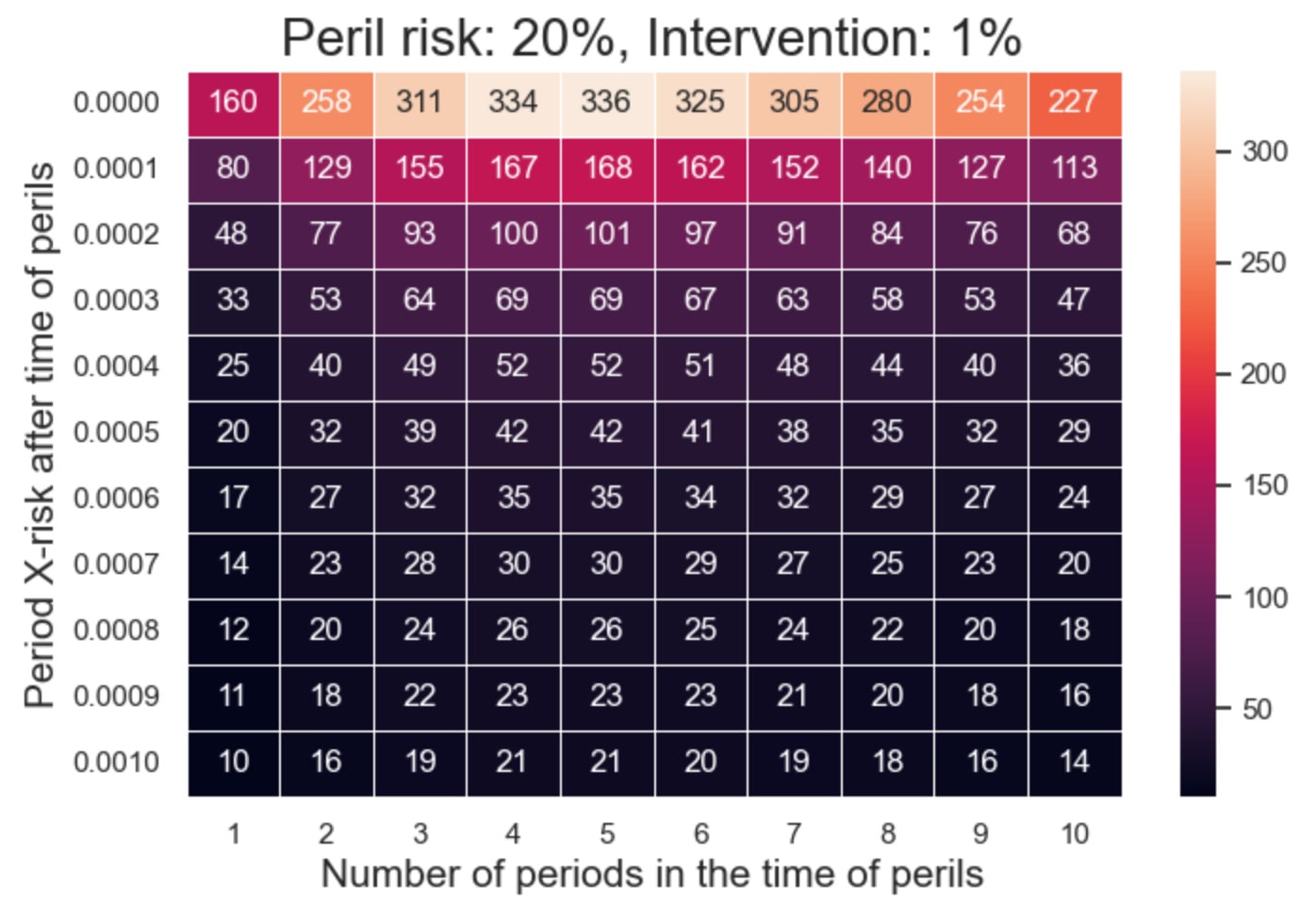

This new model can successfully save the value of longtermist interventions from both the baseline risk problem and the intervention decay problem. The baseline risk problem is addressed by lowering future ‘after perils’ risk, and the intervention decay problem is addressed by moving risk into periods where interventions have yet to decay. However, this new model requires both that the time of perils is short and that the reduction in risk after the time of perils is very substantial. We can adapt our original model by adding a new “time of perils” period:
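A minimal sketch of a two-phase model along these lines: risk is high for the first few centuries and then drops to a lower post-peril level, and the intervention only reduces risk during the perilous phase. The specific numbers (20% risk for 5 centuries, cut to 10%) are hypothetical, as are the function names.

```python
def expected_life_years_perils(r_perils, r_post, perils_len, n=16_000, v=1e12):
    """E[L] with risk r_perils for the first `perils_len` centuries, then r_post."""
    total, survival = 0.0, 1.0
    for century in range(n):
        r = r_perils if century < perils_len else r_post
        survival *= 1.0 - r
        total += v * survival
    return total


def value_of_perils_reduction(r_perils, reduction, r_post, perils_len):
    """Gain from cutting risk by `reduction` during the time of perils only."""
    return (expected_life_years_perils(r_perils - reduction, r_post, perils_len)
            - expected_life_years_perils(r_perils, r_post, perils_len))


# Hypothetical inputs: 20% risk per century for 5 centuries, cut to 10%.
for r_post in (0.01, 0.001, 0.0001):
    gain = value_of_perils_reduction(0.2, 0.1, r_post, perils_len=5)
    print(f"post-peril risk {r_post:g}: value ~ {gain / 1e12:,.0f} trillion life-years")
```

In this sketch almost all of the intervention's value comes from the post-peril future, so the result scales strongly with the assumed post-peril risk.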

Then we can see how the value of interventions changes with respect to new parameters.

Looking at these outputs, we can see that even after applying the time of perils hypothesis, estimates of the value of existential risk reduction are still substantially affected by baseline estimates for period existential risk (after the time of perils). As Thorstad has noted, these extremely low estimates for period risk seem especially implausible under the ‘time of perils’ hypothesis because the relative risk reduction needed to achieve low post-peril risk is substantially higher than if there were no ‘time of perils.’

Takeaways from this:

(1) The time of perils hypothesis can successfully defend the dual claim that existential risks are high and that reducing them is extremely valuable. However, endorsing this view likely requires fairly speculative claims about how existing risks will nearly disappear after the time of perils has ended.

(2) Even with the time of perils hypothesis, the value of existential risk mitigation is still highly impacted by small changes in estimated future risk.

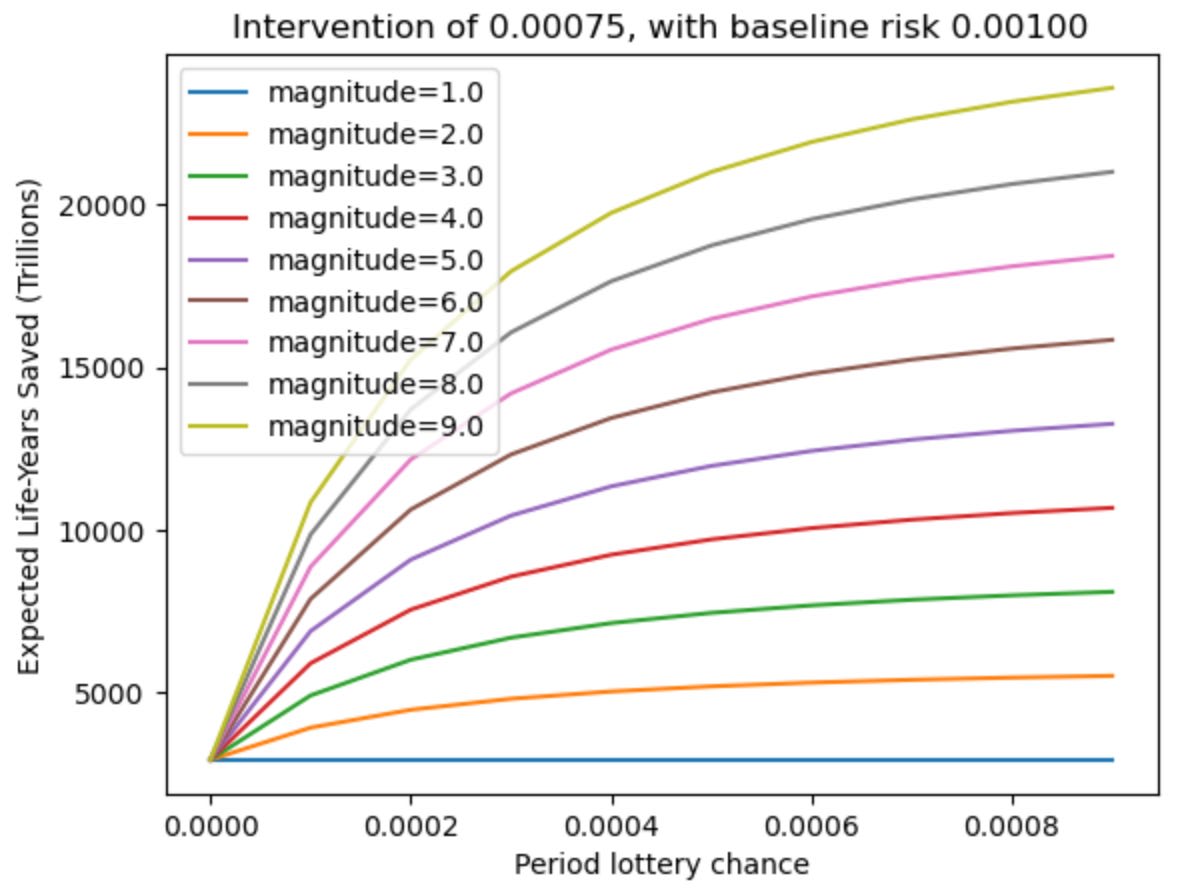

2. Hedonic lotteries

Since I’ve discussed S-risks as a potentially important consideration, I feel it would be disingenuous to skip their positive mirror ‘hedonic lotteries’ which are potential symmetrically massive improvements in wellbeing. To model the effect of these in isolation, I will just use the S-risk model again, but with positive magnitudes, rather than negative ones. Here are the two ‘hedonic lottery’ versions of the graphs from the S-risk section.

Takeaways from this:

(1) Hedonic lotteries appear to be approximately (though not exactly) symmetric with S-risks in their effect on future value. Still, imbalances in either probabilities or magnitudes between the two can easily allow them to be impactful.

(2) Though not obvious from the two graphs above, baseline risk remains relevant for determining the impact of hedonic lotteries on the value of existential risk reduction.
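The near-symmetry can be illustrated with a closed-form sketch that uses a signed magnitude: negative for an S-risk, positive for a hedonic lottery. The assumptions are mine: the locked-in state is absorbing, still faces extinction risk r, and replaces a normal century worth one unit. Under those assumptions a lock-in of magnitude +s gains (s - 1) units per century while one of magnitude -s loses (s + 1), which is one possible reason the two effects would be close but not identical.

```python
def geometric_sum(q, n=16_000):
    """sum_{i=1..n} q^i."""
    return float(n) if q == 1 else q * (1.0 - q ** n) / (1.0 - q)


def expected_value_with_lottery(r, p, magnitude, v=1e12):
    """Expected value when, with per-century probability p, the world locks into a
    state worth magnitude * v per century (negative: S-risk, positive: hedonic lottery).
    Assumes the locked-in state is absorbing and still faces extinction risk r.
    """
    stay_normal = geometric_sum((1.0 - r) * (1.0 - p))  # expected centuries in the normal state
    locked_in = geometric_sum(1.0 - r) - stay_normal    # expected centuries in the locked-in state
    return v * (stay_normal + magnitude * locked_in)


base = expected_value_with_lottery(r=0.002, p=0.0, magnitude=0.0)
gain = expected_value_with_lottery(r=0.002, p=1e-4, magnitude=10.0) - base
loss = base - expected_value_with_lottery(r=0.002, p=1e-4, magnitude=-10.0)
print(f"gain from +10 lottery: {gain / 1e12:,.1f} trillion")
print(f"loss from -10 S-risk:  {loss / 1e12:,.1f} trillion")
```

In this sketch the ratio of gain to loss is (s - 1)/(s + 1), so the asymmetry shrinks as the magnitude grows.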

Final thoughts

The main two factors motivating this post were (1) reading David Thorstad’s criticisms of longtermist analysis and (2) seeing that many of his critiques were presented only with point estimates, rather than more continuous graphs. I hope that this post managed to add some value on that second front.

I also want to note that the specific estimates in this post were fairly limited in terms of the models and values considered. That said, the considerations examined are likely relevant to many analyses. Ultimately, I think this is an area where a team like Rethink Priorities could improve general practice by creating an X-risk intervention value calculator.[6] I have considered making such a tool myself, but haven’t gotten around to it.

{Code used to generate plots is available at https://github.com/ohmurphy/longtermism-sensitivity-analysis/tree/main}

- ^

This was lucky insofar as it gave me a better way to explicitly model my doubts. In utility terms, I expect that this news is unfavorable since it mostly involves decreasing the expected value of the future.

- ^

Thorstad actually uses the ‘Background Risk’, which (I think) only contains risks outside the current intervention field. I’ve used ‘baseline risk’ to combine both domain and background risk since it seems simpler to me.

- ^

The constants here are set to approximately match the arrangement presented in Existential Risk and Cost-Effective Biosecurity, where the future is modeled with a “global population of 10 billion for the next 1.6 million years.” Periods are set as centuries, which is a difference from their model since they only use cumulative, rather than period risk. This was another point of criticism by Thorstad, but I decided to skip discussing it in this post. The equation used is approximately copied from Three Mistakes in Moral Mathematics of Existential Risk, but with the number of periods increased to better match the MSB paper.

- ^

It is also plausible that smaller populations would experience greater existential risk since smaller, catastrophic risks might become existential. This would further reduce the value of existential risk reduction.

- ^

This model assumes the S-risks are attractor states, so once reached they never return to a “normal” state. I think it would be worth modeling possible reversions explicitly, but I am simplifying for the moment.

- ^

Some ideas for inclusion: (1) parameter and equation options, (2) simple diagram generation, (3) highlighting of key assumptions (ones with high value of information), (4) estimates taken from different experts/research/forecasts made available as parameters, (5) ability to apply differing moral systems, (6) cost-effectiveness estimates relative to GiveWell recommendations.

My central objection to Thorstad's work on this is the failure to properly account for uncertainty. Attempting to exclusively model a most-plausible scenario, and draw dismissive conclusions about longtermist interventions based solely on that, fails to reflect best practices about how to reason under conditions of uncertainty. (I've also raised this criticism against Schwitzgebel's negligibility argument.) You need to consider the full range of possible models / scenarios!

It's essentially fallacious to think that "plausibly incorrect modeling assumptions" undermine expected value reasoning. High expected value can still result from regions of probability space that are epistemically unlikely (or reflect "plausibly incorrect" conditions or assumptions). If there's even a 1% chance that the relevant assumptions hold, just discount the output value accordingly. Astronomical stakes are not going to be undermined by lopping off the last two zeros.

Tarsney's Epistemic Challenge to Longtermism is so much better at this. As he aptly notes, as long as you're on board with orthodox decision theory (and so don't disproportionately discount or neglect low-probability possibilities), and not completely dogmatic in refusing to give any credence at all to the longtermist-friendly assumptions (robust existential security after time of perils, etc.), reasonable epistemic worries ultimately aren't capable of undermining the expected value argument for longtermism.

(These details can still be helpful for getting better-refined EV estimates, of course. But that's very different from presenting them as an objection to the whole endeavor.)
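A small sketch of the point about uncertainty, using the constant-risk model from the post with made-up credences (99% on a 1% per-century risk, 1% on a 0.01% risk). It compares the expectation over both scenarios with the value computed at the average risk. All numbers and names are hypothetical.

```python
def expected_life_years(r, n=16_000, v=1e12):
    """Expected life-years with constant per-century risk r (assumed model)."""
    q = 1.0 - r
    return v * n if r == 0 else v * q * (1.0 - q ** n) / r


# Hypothetical credences over per-century risk: (probability, risk).
credences = [(0.99, 0.01), (0.01, 0.0001)]

mixture_value = sum(prob * expected_life_years(r) for prob, r in credences)
mean_risk = sum(prob * r for prob, r in credences)
point_value = expected_life_years(mean_risk)

print(f"expectation over scenarios: {mixture_value / 1e12:,.0f} trillion life-years")
print(f"value at the average risk:  {point_value / 1e12:,.0f} trillion life-years")
```

Because expected life-years are convex in r, the low-probability optimistic scenario contributes a large share of the total, and the mixture exceeds the value at the mean risk.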

This feels like an isolated demand for rigour, since as far as I can see Thorstad's[1] central argument isn't that a particular course of the future is more plausible, but that [popular representations of] longtermist arguments themselves don't consider the full range of possibilities, don't discount for uncertainty, and that apparently modest-sounding claims that existential risk is non-zero and that humanity could last a long time if we survive near-term threats are compatible only if one makes strong claims about the hinginess of history[2]

I don't see him trying to build a more accurate model of the future[3] so much as pointing out how very simple changes completely change longtermist models. As such, his models are intentionally simple and Owen's expansion above adds more value for anyone actively trying to model a range of future scenarios. But I'm not sure why it would be incumbent on the researcher arguing against choosing a course of action based on long term outcomes to be the one who explicitly models the entire problem space. I'd turn that around and question why longtermists who don't consider the whole endeavour of predicting the long term future in our decision theory to be futile generally dogmatically reject low probability outcomes with Pascalian payoffs that favour the other option, or simply assume the asymmetry of outcomes works in their favour.

Now personally I'm fine with "no, actually I think catastrophes are bad", but that's because I'm focused on the near term where it really is obvious that nuclear holocausts aren't going to have a positive welfare impact. Once we're insisting that our decisions ought to be guided by tiny subjective credences in far future possibilities with uncertain likelihood but astronomic payoffs and that it's an error not to factor unlikely interstellar civilizations into our calculations of what we should do if they're big enough, it seems far less obvious that the astronomical stakes skew in favour of humanity.

The Tarsney paper even explicitly models the possibility of non-human galactic colonization, but with the unjustified assumption that no non-humans will be capable of converting resources to utility at a higher rate than [post]humans, so their emergence as competitors for galactic resources merely "nullifies" the beneficial effects of humanity surviving. But from a total welfarist perspective, the problem here isn't just that maximizing the possible welfare across the history of the universe may not be contingent on the long term survival of the human species,[4] but that humans surviving to colonise galaxies might diminish galactic welfare. Schwitzgebel's argument that actually human extinction might be net good for total welfare is only a mad hypothetical if you reject fanaticism: otherwise it's the logical consequence of accepting the possibility, however small, that a nonhuman species might convert resources to welfare much more efficiently than us.[5] Now a future of decibillions of aliens building Dyson Spheres all over the galaxy because there's no pesky humans in their way sounds extremely unlikely, and perhaps even less likely than a galaxy filled with the same fantastic tech to support quadrillions of humans - a species we at least know exists and has some interest in inventing Dyson Spheres - but despite this the asymmetry of possible payoff magnitudes may strongly favour not letting us survive to colonise the galaxy.[6]

In the absence of any particular reason for confidence that the EV of one set of futures is definitely higher than the others, it seems like you end up reaching for heuristics like "but letting everyone die would be insane". I couldn't agree more, but the least arbitrary way to do that is to adjust the framework to privilege the short term with discount rates sufficiently high to neuter payoffs so speculative and astronomical we can't rule out the possibility they exceed the payoff from [not] letting eight billion humans die.[7] Since that discount rate reflects extreme uncertainty about what might happen and what payoffs might look like, it also feels more epistemically humble than basing an entire worldview on the long tail outcome of some low probability far futures whilst dismissing other equally unsubstantiated hypotheticals because their implications are icky. And I'm pretty sure this is what Thorstad wants us to do, not to place high credence in his point estimates or give up on X-risk mitigation altogether.

For the avoidance of doubt I am a different David T ;-)

which doesn't of course mean that hinginess is untrue, but does make it less a general principle of caring about the long term and more a relatively bold and specific claim about the distribution of future outcomes.

in the arguments referenced here anyway. He has also written stuff which attempts to estimate different XR base rates from those posited by Ord et al, which I find just as speculative as the longtermists'

there are of course ethical frameworks other than maximizing total utility across all species which give us reason to prefer 10^31 humans over a similarly low probability von Neumann civilization involving 10^50 aliens or a single AI utility monster (I actually prefer them, so no proposing destroying humanity as a cause area from me!) but they're different from the framework Tarsney and most longtermists use, and open the door to other arguments for weighting current humans over far future humans.

We're a fairly stupid, fragile and predatory species capable of experiencing strongly negative pain and emotional valences at regular intervals over fairly short lifetimes, with competitive social dynamics, very specific survival needs and a wasteful approach to consumption, so it doesn't seem obvious or even likely that humanity and its descendants will be even close to the upper bound for converting resources to welfare...

Of course, if you reject fanaticism, the adverse effects of humans not dying in a nuclear holocaust on alien utility monsters are far too remote and unlikely and frankly a bit daft to worry about. But if you accept fanaticism (and species-neutral total utility maximization), it seems as inappropriate to disregard the alien Dyson spheres as the human ones...

Disregarding very low probabilities which are subjective credences applied to future scenarios we have too little understanding of to exclude (rather than frequencies inferred from actual observation of their rarity) is another means to the same end, of course.

The point about accounting for uncertainty is very well taken. I had not considered possible asymmetries in the effects of uncertainty when writing this.

On longtermism generally, I think my language in the post was probably less favorable to longtermism than I would ultimately endorse. As you say, the value of the future remains exceptionally large even after reduction by a few orders of magnitude, a fact that should hopefully be clear from the units (trillions of life-years) used in the graphs above.

If I have time in future, I may try to create new graphs for sensitivity and value that take into account uncertainty.

This is a cool piece of work! I have one criticism, which is much the same as my criticism of Thorstad's argument:

I think not believing this requires fairly speculative claims if a potential 'end of time of perils' we envisage is just human descendants spreading out across planets and then stars. Keeping current nonspeculative risks (eg nukes, pandemics, natural disasters) approximately constant per unit volume, the risk to all of human descendants would rapidly approach 0 as the volume we inhabited increased.

So for it to stay anywhere near constant, you need to posit that there's some risk that is equally as capable of killing an interstellar civilisation as a single-planet one. This could be misaligned AGI, but AGI development isn't constant - if there's something that stops us from creating it in the next 1000 years, that something might be evidence that we'll never create it. If we have created it by then, and it hasn't killed us, then it seems likely that it never will.

So you need something else, like the possibility of triggering false vacuum decay, to imagine a 'baseline risk' scenario.

Positing an interstellar civilization seems to be exactly what Thorstad might call a "speculative claim" though. Interstellar civilization operating on technology indistinguishable from magic is an intriguing possibility with some decent arguments against (Fermi, lightspeed vs current human and technological lifespans) rather than something we should be sufficiently confident of to drop our credences in the possibility of humans becoming extinct down to zero in most years after the current time of perils,[1] and even if it were achieved I don't see why nukes and pandemics and natural disaster risk should be approximately constant per planet or other relevant unit of volume for small groups of humans living in alien environments[2]

Certainly this doesn't seem like a less speculative claim than one sometimes offered as a criticism of longtermism's XR-focus: that the risk of human extinction (as opposed to significant near-term utility loss) from pandemics, nukes or natural disasters is already zero[3] because of things that already exist. Nuclear bunkers, isolation and vaccination and the general resilience of even unsophisticated lifeforms to natural disasters are congruent with our current scientific understanding in a way which faster than light travel isn't, and the farthest reaches of the galaxy aren't a less hostile environment for human survival than a post-nuclear earth.

And of course any AGI determined to destroy humans is unlikely to be less capable than relatively stupid, short-lived, oxygen-breathing lifeforms in space, so the AGI that destroys humans after they acquire interstellar capabilities is no more speculative than the AI that destroys humans next Tuesday. A persistent stable "friendly AI" might insulate humans from all these risks if sufficiently powerful (with or without space travel) as you suggest but that feels like an equally speculative possibility - and worse still one which many courses of action aimed at mitigating AI risk have a non-zero possibility of inadvertently working against....

if the baseline rate after the current time of peril is merely reduced a little by the nonzero possibility that interstellar travel could mitigate x-risk but remains nontrivial, the expected number of future humans alive still drops off sharply the further we go into the future (at least without countervailing assumptions about increased fecundity or longevity)

Individual human groups seem significantly less likely to survive a given generation the smaller they are and further they are from earth and the more they have to travel, to the point where the benefit against catastrophe of having humans living in other parts of the universe might be pretty short lived. If we're not disregarding small possibilities there's the possibility of a novel existential risk from provoking alien civilizations too...

I don't endorse this claim FWIW, though I suspect that making humans extinct as opposed to severely endangered is more difficult than many longtermists predict.

'Indistinguishable from magic' is a huge overbid. No-one's talking about FTL travel. There's nothing in current physics that prevents us building generation ships given a large enough economy, and there are a number of options consistent with known physics for propelling them, some of which have already been developed, others of which are tangible but not yet in reach, others of which get pretty outlandish.

Pandemics seem likely to be relatively constant. Biological colonies will have strict atmospheric controls, and might evolve (naturally or artificially) to be too different from each other for a single virus to target them all even if it could spread. Nukes aren't a threat across star systems unless they're accelerated to relativistic speeds (and then the nuclear-ness is pretty much irrelevant).

I don't know anyone who asserts this. Ord and other longtermists think it's very low, though not because of bunkers or vaccination. I think that the distinction between killing all and killing most people is substantially less important than those people (and you?) believe.

This is an absurd claim.

"Indistinguishable from magic" is an Arthur C Clarke quote about "any sufficiently advanced technology", and I think you're underestimating the complexity of building a generation ship and keeping it operational for hundreds, possibly thousands of years in deep space. Propulsion is pretty low on the list of problems if you're skipping FTL travel, though you're not likely to cross the galaxy with a solar sail or a 237mN thruster using xenon as propellant. (FWIW I actually work in the space industry and spent the last week speaking with people about projects to extract oxygen from lunar regolith and assemble megastructures in microgravity, so it's not like I'm just dismissing the entire problem space here)

I'm actually in agreement with that point, but more due to putting more weight on the first 8 billion than the hypothetical orders of magnitude more hypothetical future humans. (I think in a lot of catastrophe scenarios technological knowledge and ambition rebounds just fine eventually, possibly stronger)

Why is it absurd? If humans can solve the problem of sending a generation ship to Alpha Centauri, an intelligence smart (and malevolent) enough to destroy 8 billion humans in their natural environment surely isn't going to be stymied by the complexities involved in sending some weapons after them or transmitting a copy of itself to their computers...

That's an interesting point. I'm a bit skeptical of modeling risk as constant per unit volume since almost all of the volume bordered by civilizations will be empty and not contributing to survival. I think a better model would just use the number of independent/disconnected planets colonized. I also expect colonies on other planets to be more precarious than civilization on Earth since the basic condition of most planets is that they are uninhabitable. That said, I do take the point that an interstellar civilization should be more resilient than a non-interstellar one (all else equal).

Yeah, I was somewhat lazily referring to planets and similar as 'units'. I wrote a lot more about this here.

I don't think precariousness would be that much of an issue by the time we have the technology to travel between stars. Humans can be bioformed, made digital, replaced by AGI shards, or just master their environments enough to do brute force terraforming.

Even if you do think they're more precarious, over a long enough expansion period the difference is going to be eclipsed by the difference in colony-count.
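The colony-count argument can be sketched with a toy calculation. It assumes each colony faces an independent per-period chance of being lost, and that extinction requires losing every colony in the same period. Both are strong simplifications, and correlated risks such as misaligned AGI would break the independence assumption. The risk figures are hypothetical.

```python
def all_colonies_lost(per_colony_risk, colonies):
    """Chance that every colony is lost in one period, assuming independent risks."""
    return per_colony_risk ** colonies


# Hypothetical numbers: colonies are 10x more precarious than Earth (1% vs 0.1%).
earth_only = all_colonies_lost(0.001, colonies=1)
for n in (1, 2, 3, 5):
    risk = all_colonies_lost(0.01, n)
    print(f"{n} colonies at 1% each: {risk:.0e} vs Earth alone {earth_only:.0e}")
```

Under these assumptions a single precarious colony is worse off than Earth alone, but two or more independent colonies already face a lower joint risk, and the gap widens with each additional colony.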

A note on this: the first people to study the notion of existential risk in an academic setting (Bostrom, Ord, Sandberg, etc.) explicitly state in their work many of those assumptions.

They chiefly revolve around the eventual creation of advanced AI which would enable the automation of both surveillance and manufacturing; the industrialization of outer space, and eventually the interstellar expansion of Earth-originated civilization.

In other words: they assume that both

Proposed mechanisms for (2.) include interstellar expansion and automated surveillance.

Thus, the main crux on the value of working on longtermist interventions is the validity of assumptions (1.) and (2.). In my opinion, finding out how likely they are to be true or not is very important and quite neglected. I think that scrutinizing (2.) is both more neglected and more tractable than examining (1.), and I would love to see more work on it.

Ok, but indeed all arguments for longtermism, as plenty of people have commented in the past, make a case for hingeyness, not just the presence of catastrophic risk.

There is currently no known technology that would cause a space-faring civilization that sends out Von Neumann probes to the edge of the observable universe to go extinct. If you can manage to bootstrap from that, you are good, unless you get wiped out by alien species coming from outside of our Lightcone. And even beyond that, outside of AI, there are very few risks to any multiplanetary species, it does really seem like the risks at that scale get very decorrelated.

Executive summary: Longtermist modeling of existential risk reduction relies on several sensitive assumptions that, when examined critically, may significantly reduce the estimated value of such interventions.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.