I will not eat the bugs, I will not read The Precipice

[Epistemic confidence: 80%, some bets at the bottom]

Summary:

- 1 min video summary of this article

- There are not enough easy summaries of EA works

- This makes it harder for people to learn their content, which costs time, energy and potential EAs

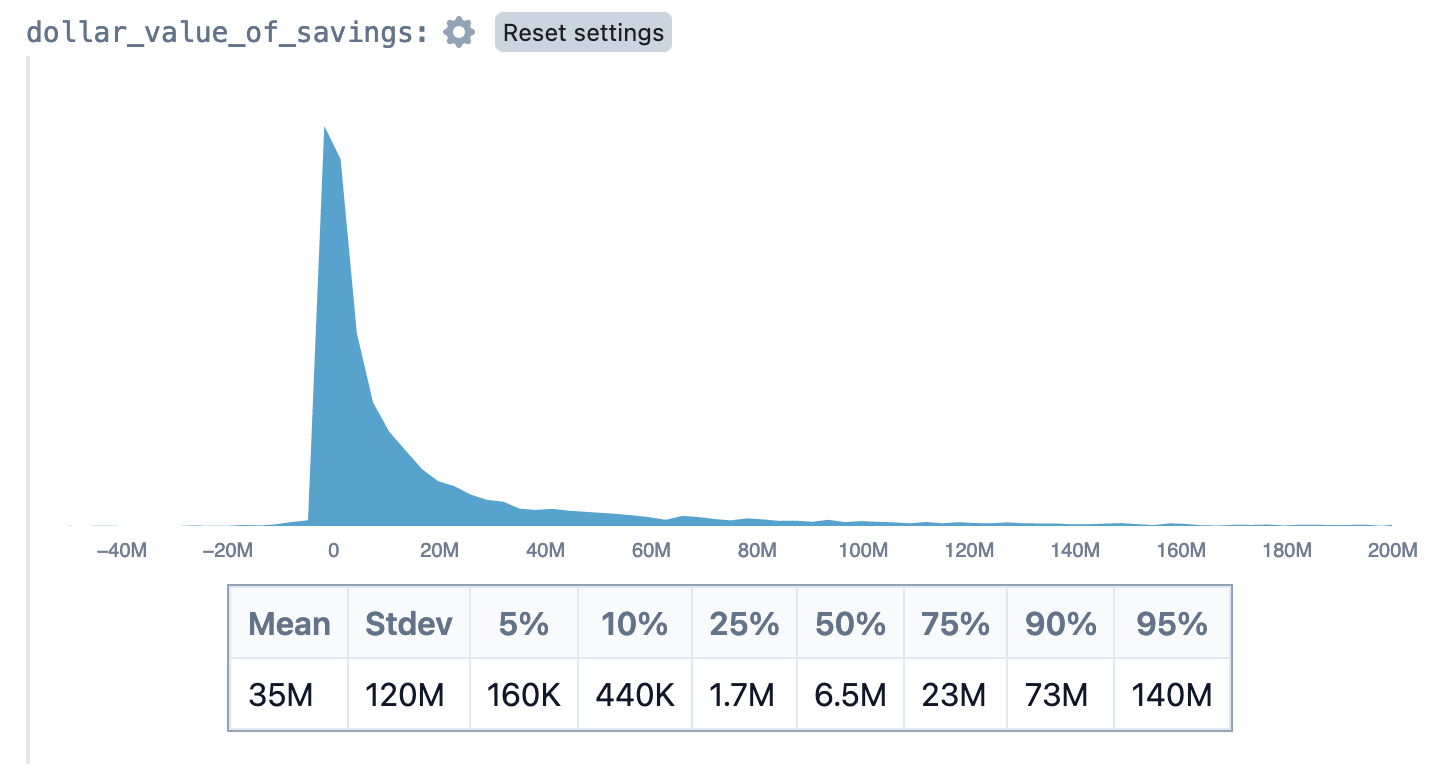

- I estimate the time cost of this problem to be from $160k to $140mn (90% CI) with a median of $6.5mn

- I reckon we could see huge improvement with about $20k of summary prizes

- We should pay prizes for summaries, put our works in audio form, and have a community wiki somewhere

- Some possible counterarguments are: (1) summaries are hard to judge, (2) summaries provide low resolution versions of ideas with no positive tradeoffs and (3) I am uniquely poor at consuming long works. I’m not convinced by any of these counterarguments.

The Problem: Not Enough Summaries

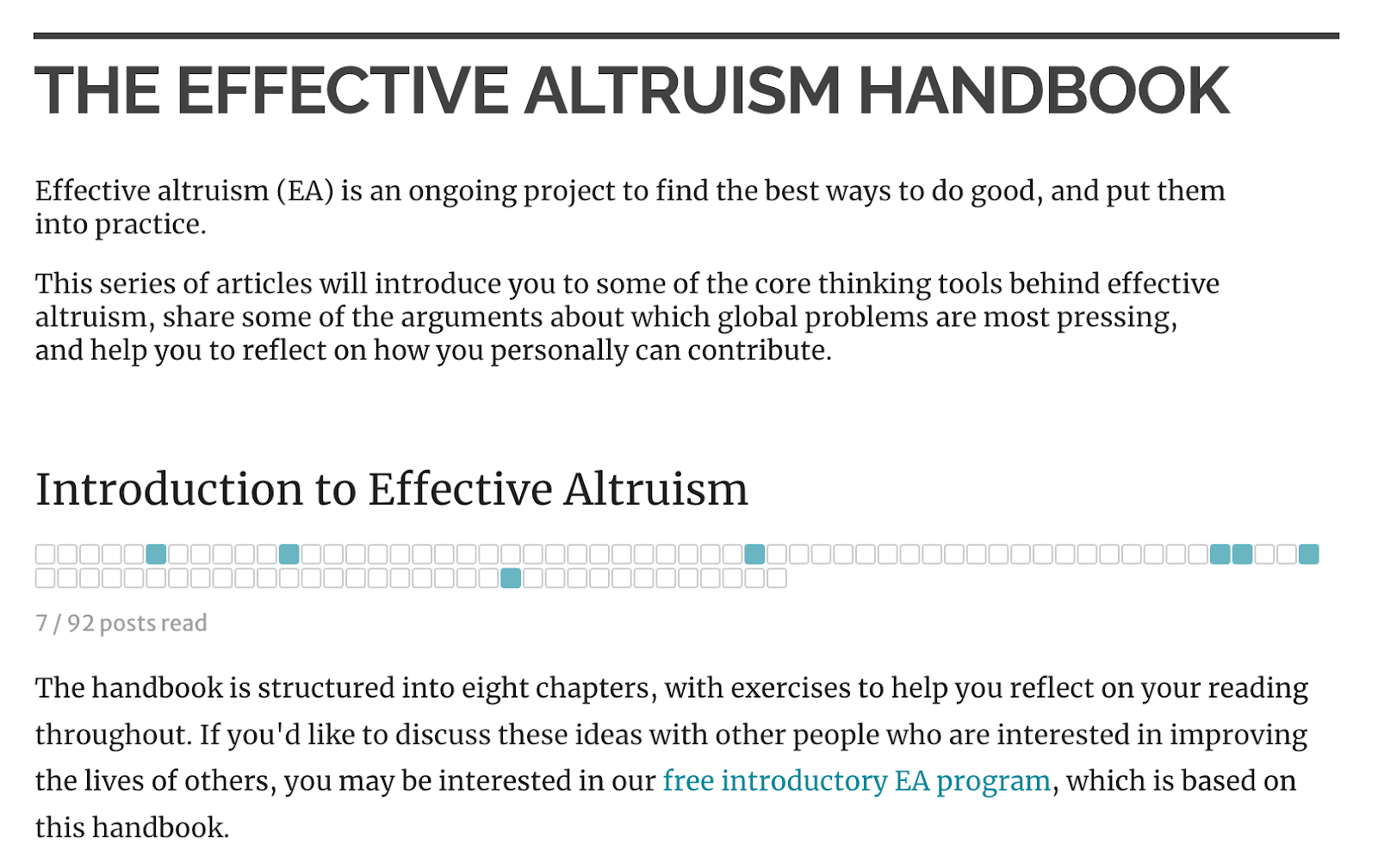

You hear about this cool thing called EA. You decide to learn more about it. If you are lucky, someone points you towards the EA Handbook. This is a focused summary of effective altruism. Here’s the opening page of the Handbook[1]:

It is very long. 8 chapters, with 92 posts.

And after that, if you want to understand EA in more depth, you are expected to read Doing Good Better. And the Precipice. And listen to 100 episodes of the 80k podcast. Or do an 8 week fellowship.

In short, good luck[2].

For lots of top-tier EA content, either there aren’t any summaries, or the summaries are of medium quality. Summaries and snippets make it easier for people to figure out if content is something they want to engage with. By having too few, we make it harder to enter EA and harder to upskill.

Let's look at some of the foundational works.

Doing Good Better

The best summary of this that I found was by Lara M. As far as I’m aware, it’s not linked anywhere on the Forum, or included in the Introductory Fellowships. The EA Forum wiki page looks like this. That’s the whole article. Since sharing this I’ve been sent a number of summaries written by university groups. Someone looking for information about EA would not have access to these.

The Precipice

The best summary I found was on Wikipedia. It’s only three paragraphs long, so it isn’t detailed. Again, there’s no summary on the Forum.

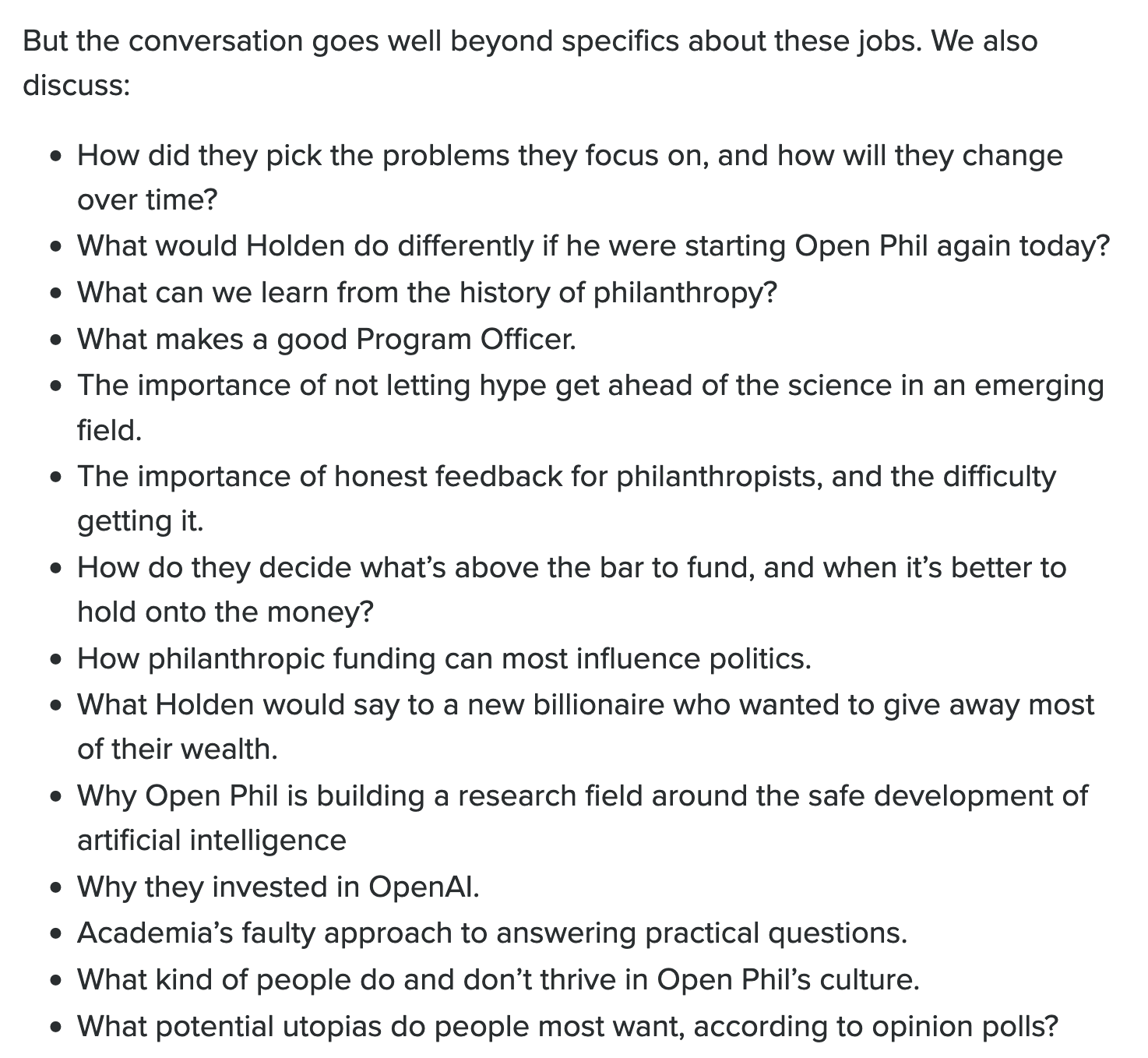

80,000 Hours podcasts

This is pretty good - the podcast has an introductory series and all podcast episodes have a written summary with some highlights. 80k have a list of questions that each episode answers, allowing me to scan through and decide whether I want to listen.

https://80000hours.org/podcast/episodes/holden-karnofsky-open-philanthropy/

However, I still have to do a lot of clicking then scrolling to get here. There wasn’t a clear summary page I could find. There are no summaries of the podcasts on the Forum.

The Sequences

Lightcone gave out a short version of the Sequences in book form at EAG SF. This included selected blogs from the original Sequences, but they were still full-length. LessWrong has a set of summaries of blogposts from 2007. This is very good, but it only exists for 2007.

Forum articles

Many Forum articles begin with a summary. This isn’t good enough. I want to know if I should click on the article. You can hover for a preview, but I find this works only about 50% of the time.

Recently, Zoe Williams announced that she plans to publish weekly summaries of popular (40+ karma) EA Forum and LessWrong posts. This is great, I’ve subscribed.[3]

What about the EA Wiki?

The EA Forum has a wiki, but it is used to give a brief description of a topic before showing a list of articles in that topic. It does not seek to summarise them. I have written before that I’d like to see a new type of post using the same technology but with a different framing.

As a final example, when the Economist published ‘What to read to understand “effective altruism”’, the top recommendation was... the introductory series of 80k podcasts! This is about 20 hours of content. If a top-flight news organisation can’t find an accessible introduction to point people to, then random members of the public are probably trying to find out about us and missing out.

In a better world, I suggest that for each piece of content we consider important, there would be:

- A Forum linkpost[4]

- An audio version of the text (this one is largely covered)

- A Forum summary, perhaps a topic article which links to many posts

- A Twitter thread

- A Tiktok

- Diagrams illustrating key points in the content

Currently, for many important pieces of EA content, these things are not available, which makes the content harder to access.

I think we have a great opportunity here: if we produce more high-quality (but short) summaries of EA material, we can lower the barriers to entry for new EAs, save existing EAs time, and help spread our top content more. Many people don’t have enough bandwidth for a 40 minute podcast:

- Random twitter users

- Busy startup founders and billionaires

- Students who don’t know an EA

- Politicians

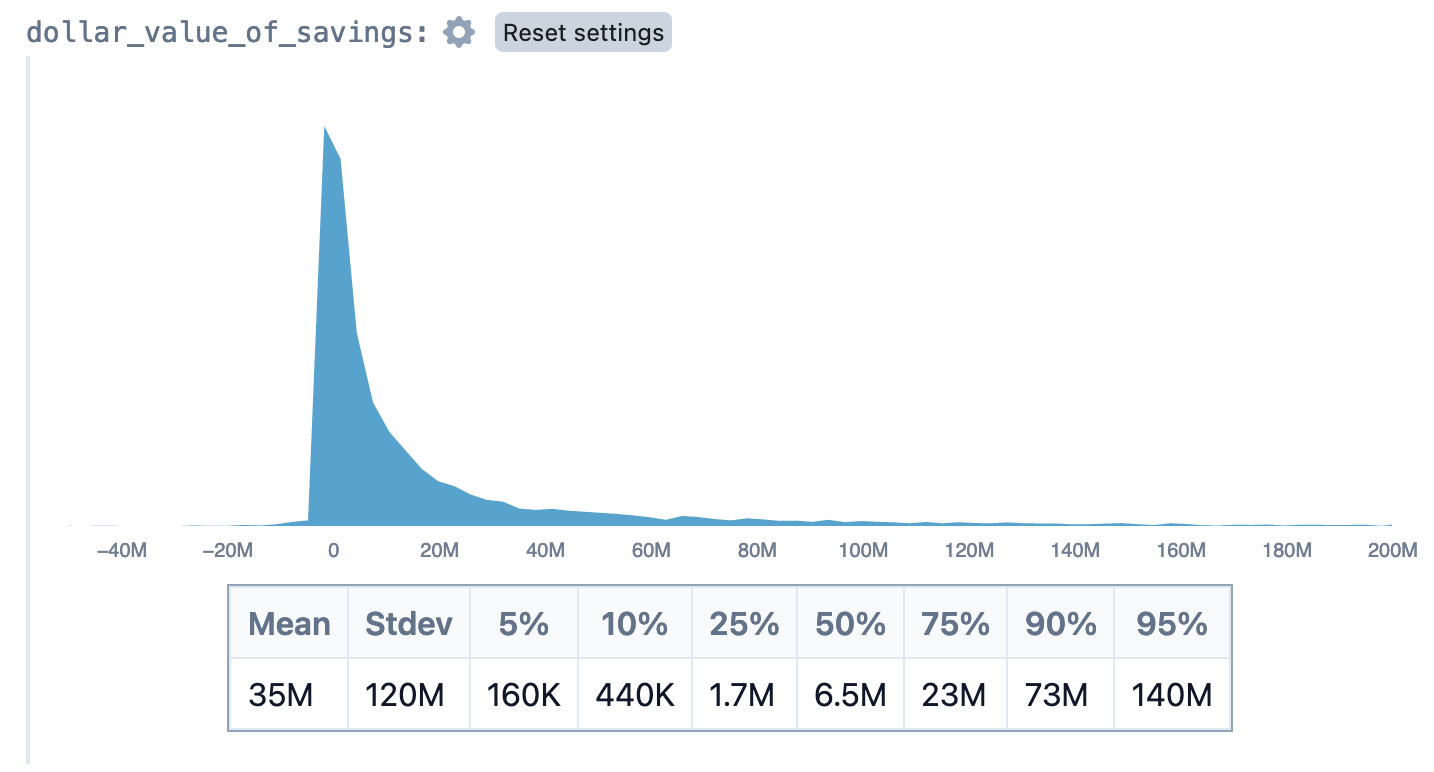

I estimate that this costs the EA community $160,000 to $140 million (90% CI)

Inspired by Nuño Sempere and Sam Nolan, I used the estimation language Squiggle to estimate the value lost to the EA community because of the lack of accessible summaries. I estimate the number of EAs, how much content they consume, and how much value summaries deliver despite being shorter than original works. Then I estimate how much of one’s reading might go to summaries instead (10% to 50%) and how valuable an EA’s time is. The estimate spits out the following.

current_EAs = 1500 to 12000

new_EAs_this_year = 500 to 5000

podcasts_listened_this_year = 0 to 250

podcast_time = .5 to 3

top_blogs_read_this_year = 5 to 500

blog_time = .1 to .5

top_books_read_this_year = 0 to 3

book_time = 5 to 20

how_much_shorter_are_summaries = .01 to .5

what_percentage_are_summaries_used = .1 to .5

how_much_of_the_full_value_is_in_the_summary = .1 to .95

value_of_EA_time = 10 to 1000

dollar_value_of_savings = (current_EAs+new_EAs_this_year)*(podcasts_listened_this_year*podcast_time+top_blogs_read_this_year*blog_time+top_books_read_this_year*book_time)*what_percentage_are_summaries_used*how_much_of_the_full_value_is_in_the_summary*(1-truncate(how_much_shorter_are_summaries,0,1))*value_of_EA_time

This is the money saved alone. Feel free to critique my numbers or do your own calculation. But to me, this looks like a solid case that summaries have a lot to offer us. Even spending $150k on summarisation would go a long way.

What’s more, people would probably still read the content, but the summaries would help them familiarise themselves faster and get a sense of what they are interested in.
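If you'd rather rerun the calculation outside Squiggle, here is a minimal Python sketch of the same formula using only the standard library. Several things in it are assumptions rather than anything the model above states: it treats `a to b` as a distribution whose 5th and 95th percentiles are `a` and `b` (lognormal when both bounds are positive, normal otherwise), it floors the two counts that start at 0 so they can't go negative, and it handles `truncate` by redrawing until the sample lands in range. The helper names (`to`, `truncated`, `one_draw`, `simulate_savings`, `percentile`) are made up for illustration. Because of those assumptions it may not reproduce the figures quoted in this post exactly.

```python
import math
import random

Z95 = 1.6448536269514722  # standard-normal z-score of the 95th percentile


def to(low, high, rng):
    """Draw one sample whose 5th and 95th percentiles are roughly low and high.

    Assumption: lognormal if both bounds are positive, normal otherwise.
    """
    if low > 0 and high > 0:
        mu = (math.log(low) + math.log(high)) / 2
        sigma = (math.log(high) - math.log(low)) / (2 * Z95)
        return rng.lognormvariate(mu, sigma)
    mu = (low + high) / 2
    sigma = (high - low) / (2 * Z95)
    return rng.gauss(mu, sigma)


def truncated(low, high, lo_bound, hi_bound, rng):
    """Redraw until the sample falls inside [lo_bound, hi_bound]."""
    while True:
        x = to(low, high, rng)
        if lo_bound <= x <= hi_bound:
            return x


def one_draw(rng):
    """One Monte Carlo draw of dollar_value_of_savings."""
    current_eas = to(1500, 12000, rng)
    new_eas = to(500, 5000, rng)
    # Counts with a lower bound of 0 are drawn from a normal here, so floor
    # them at 0 (my addition, not part of the model above).
    podcasts = max(0.0, to(0, 250, rng))
    podcast_time = to(0.5, 3, rng)
    blogs = to(5, 500, rng)
    blog_time = to(0.1, 0.5, rng)
    books = max(0.0, to(0, 3, rng))
    book_time = to(5, 20, rng)
    shorter = truncated(0.01, 0.5, 0, 1, rng)
    share_used = to(0.1, 0.5, rng)
    value_share = to(0.1, 0.95, rng)
    value_of_time = to(10, 1000, rng)

    # Hours of content consumed per person per year.
    hours = podcasts * podcast_time + blogs * blog_time + books * book_time
    return ((current_eas + new_eas) * hours * share_used * value_share
            * (1 - shorter) * value_of_time)


def simulate_savings(n_samples=20_000, seed=0):
    """Return the simulated savings, sorted ascending."""
    rng = random.Random(seed)
    return sorted(one_draw(rng) for _ in range(n_samples))


def percentile(sorted_values, p):
    """Nearest-rank percentile of an already sorted list, p in [0, 1]."""
    index = min(len(sorted_values) - 1, int(p * len(sorted_values)))
    return sorted_values[index]


if __name__ == "__main__":
    savings = simulate_savings()
    for p in (0.05, 0.5, 0.95):
        print(f"{round(p * 100)}th percentile: ${percentile(savings, p):,.0f}")
```

Changing any of the ranges inside `one_draw` and rerunning is the quickest way to see which inputs the result is most sensitive to.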

Concrete solutions

- You could write summaries

- Pay for summarisation

- Make audio versions of things (this is already happening!)

- Write syntheses

- Summarise works from other communities

You could write summaries[5]

I have mixed feelings about this. I would really recommend that if summarisation brings you joy, you should do it. But also, I think there is a supply and demand problem here. Summaries aren't created because they aren't rewarded with resources or prestige. That's the problem we need to fix.

Pay for summarisation

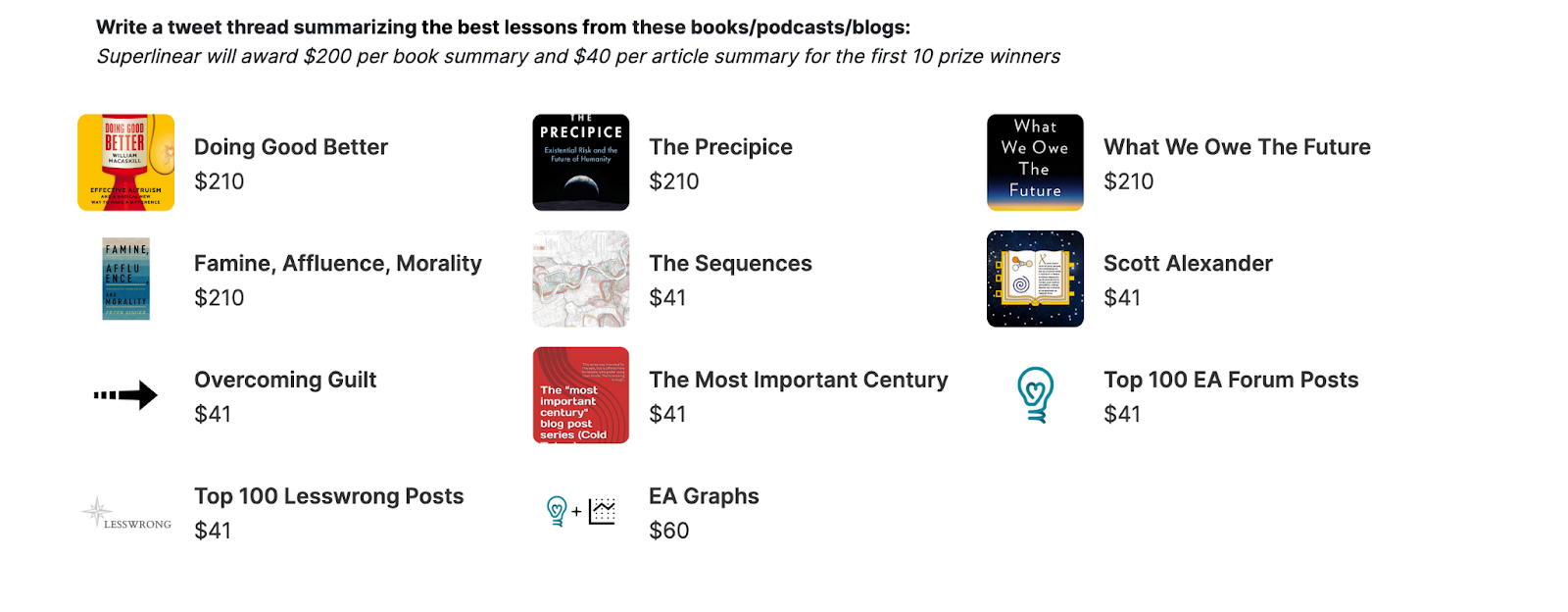

We can offer bounties for summaries of content that are “good enough”. I created a bounty, funded by Superlinear, for summarizing books in Twitter threads.

Here, for example, is the prize for Doing Good Better. We have had 4 entries in the two weeks it’s been up and they have been great.

All of these cost $183. Arjun told me his Epistemic Learned Helplessness tweet had been viewed ~3000 times. Ines’ EA graphs thread was shared even more. At this price you could have good to great summaries of all the key works in EA for about $20k. That’s 500 Twitter threads. I’m confident that I or others could judge that in 10-20 hours.

Making audio versions of things

Nonlinear have worked hard to turn many top works into audio, opening them up to a much wider audience. If you like podcasts, I’d recommend it.

It would be even better if you could filter their playlist by karma or cause area, or listen to summaries of posts before you jump into an entire post.

Still, this is really good!

More graphs

Someone who commented on this just really wanted more graphs.

Syntheses

I have written a few syntheses on the LessWrong wiki (the EA wiki doesn’t like syntheses; they see things more as topic headers than full wiki pages). Here are my attempts, which are early works in progress. If you don’t like ‘em, make ‘em better.

Other summaries

I am surprised by the lack of summaries on the Forum of the best ideas from other spaces. Some high-priority topics for this might include:

- Econ 101

- Sociology

- Psychology

- Feminism

- Individuals talking about their learnings from business

It seems to me that many of these communities will have something to teach us that we might as well have one summary of.

Possible counterarguments

It’s hard to judge what a good summary is

I strongly disagree. You could judge the quality of a summary by, for example:

- Putting the summary up as a Forum post and seeing if it gets a certain number of karma. Note that I don't think summaries get enough karma, but this can still be used to judge between bad (0 karma) and good (20 karma)

- Getting a set of judges to assess it. Summaries are very short; you can read many of them in an hour. I’d happily do this.

Summaries share a lower-resolution version of the ideas

Sometimes this is true. However, sometimes low-resolution summaries can pique my interest in a topic, leading me to read about it more deeply later. Sometimes the idea wasn’t that well explained in the original work and the summary gets to the core of it. Generally, I would suggest that summaries often allow more people to access the core ideas of much longer works.

I am unrepresentative because I have a very short attention span

I have worried about this idea. All I can say is that politicians, members of our outgroup, and billionaires all have short attention spans for new ideas as well. Maybe their attention span is more like mine than the median EA’s.

Conclusion

Summarisation provides a lot of value and we aren’t doing much of it. As a result, our ideas are harder to understand.

It’s easier to spread ideas in their clearest, most distilled form. We should not ask people to read whole books. We should certainly not ask people to read series of long technical blogs.

The ‘median voter hypothesis’ is the idea that you get roughly what the median person in your country wants. This idea looks roughly true to me. Likewise, I think that our ability to function flexibly as a community will be correlated with how well the median EA understands the most important ideas. If we make it easier for people to understand the core ideas of EA, the larger EA community can coordinate better and check the work of key decisionmakers. That's what this sequence of posts is about.

I spoke this as a voice note to https://otter.ai, which transcribed it. Then I wrote it up, Amber Ace copy edited it, and then I added a load more spelling errors in. I would recommend Amber for editing; you can message her here. Thanks to Sudhanshu Kasewa, who talked about this idea with me. Thanks to Arjun Panickssery, Emma Richter, Nuño Sempere and others who looked over early drafts of this. (If you helped, I'd love to thank you, but I need your permission.)

This post is part of a test I'm doing on works-in-progress.

Some bets I'll offer:

- 80% of up to $500 that in 5 years' time, if we ask the Forum question "was it good ex-ante to spend $20k on summaries" [or equivalent], the top positive answer will get more upvotes than the top negative one, with it being made clear that it resolves this bet

- ^

- ^

In long: I have a 2 hour podcast you can listen to.

- ^

I don’t know how to say this without sounding like an ass, but on hearing that Zoe wrote these summaries but didn’t publish them, I told Peter Wildeford to suggest that she did. Perhaps she intended to publish them anyway, but it’s very plausible to me that we’d never have got a really high quality resource had no one asked for it.

- ^

I think it’s a good idea to produce linkposts even for books because then they can be upvoted and downvoted and we can see their karma ranking, but I won’t discuss that here.

- ^

Rohin mentioned this and it was an oversight not to have included this discussion originally.

Tiktok EA forum article summaries?

Great idea. If anyone wants to run a prize like this, I'd be open to funding it.

I'd love to enter a competition like this.

Strongly agreed!

I would love for utilitarianism.net to host high-quality summaries of major EA philosophical works (incl. Doing Good Better and The Precipice). The challenge is finding qualified writers who have the time to spare for such a task. (I did it myself for Singer's 'Famine, Affluence, and Morality'.)*

But if anyone reading this is (i) either a graduate student in a top philosophy program or a professional philosopher (or can otherwise make a strong case for being qualified), and (ii) interested in writing a high-quality précis of such a book for utilitarianism.net, please get in touch! (I can offer a $1000 honorarium if I agree to commission your services.)

Just out of curiosity: why restrict it to Twitter threads? (E.g. I can't imagine a tweet-thread on Singer's FAM being nearly as useful as my above study guide.)

* = folks who like that summary might also appreciate my summary of Parfit's entire moral philosophy in seven blog posts.

Can I put your honorarium as a superlinear prize?

Twitter is something I understand. I don't think summaries should be restricted to this.

I'm not sure what you're asking. You're welcome to refer to the utilitarianism.net honorarium (and academic qualification requirements) for commissioned articles, and encourage any interested parties to contact me with further questions.

(Or, if someone really wanted to try writing the article first, and then approach us to check whether we viewed it as of appropriate quality for us to publish, that would also be fine. But a bit of a risk on their part, since writing an academic article is a significant time investment, and there's no guarantee we would accept it!)

super-linear.org is an EA aligned prize platform. We could put your honorarium there and then more people would see it. People would write entries and if they were good enough, be rewarded.

Ah, thanks for the explanation. I'll run this by my co-editors, and get back to you if they're interested.

I'm surprised that "write summaries" isn't one of the proposed concrete solutions. One person can do a lot.

Added and credited you.

Idk, in my particular case I'd say writing summaries was a major reason that I now have prestige / access to resources.

I think it's probably just hard to write good summaries; many of the summaries posted here don't get very much karma.

This is one of the problems I'm criticising, both here and here. I'm confused, your tone seems like you are dismissing the criticism, but you seem to agree on the object level that summaries are undersupplied given their value.

Maybe you think that summaries do give rewards and prestige but only in the long term? In which case, isn't that a problem that can be sorted by paying people who write good summaries and ensuring that the karma system appropriately rewards summarising?

You can either interpret low karma as a sign that the karma system is broken or that the summaries aren't sufficiently good. In hindsight I think you're right and I lean more towards the former; even though people tell me they like my newsletter, it doesn't actually get that much karma.

I thought you thought that karma was a decent measure, since you suggested putting the summary up as a Forum post and seeing how much karma it gets as a way to evaluate how good a summary is.

Yeah, I don't think karma is as good as I imply here. I'll change that.

Agree with the post, and I really like the video summary you've been putting at the top.

I don't really understand the model. Which variable represents the counterfactual value of reading these materials at all? (Also it's confusing that there are no units, even if that's almost always true for programming).

I support your idea that many EA (and relevant non-EA) materials are really long and need to be summarised to be impactful. But for most of the materials you listed, I don't think it makes a difference if they're read at all (my bias is that out of all of them the only one I've read is half of the Precipice). I find EA ideas, books and articles to be very repetitive, and for each of them I strongly suspect almost all information can be much more easily gotten by other means.

The two exceptions I see to this are non-EA materials, like the summaries on Econ or feminism that you suggest (which I'd happily read); and the Sequences, for which I'd also happily try to read a summary if it was significantly less verbose than the original, but which I suspect are not important for EA.

In regard to the model, thanks for the feedback. It's quite early days for me making them and I could explain them better.

Reading your comment suggests you'd like some syntheses. I agree. Many EA works are similar, but they do each impart a small part of their own value. So why can't we turn them all into one summary page which links to perhaps 10 pages of summaries?

Upvote this if you think I justify too much, downvote if you think I justify too little.

Do we have a list of summaries? I'd like to add this comment.

The wiki should be that list imo

If you want to make suggestions to this post, a cool thing I'd like to try is adding people as coauthors and letting them add things.

Coming to this post a bit late, but I completely agree that summaries are underrated. In fact, I started a website this year called To Summarise. My initial focus was not on EA, but just on sharing (unusually quite detailed) summaries of books I've read. One of those was for Doing Good Better, and another was for Destined for War by Graham Allison, which I think was recommended on some 80k Hours or other EA website.

Just this week I posted my first podcast summary for an 80k hours interview with Hilary Greaves on longtermism, cluelessness and existence comparativism. It was very dense, so perhaps not the best choice for my first podcast summary, but I plan to do some other ones later - I was thinking of maybe doing the recent 80k interview with Will MacAskill. I am mostly doing this for fun right now (I work mostly full-time at a non-EA job) but would be interested in pursuing this further if others also think it is useful for the community.

I agree that funding summaries is worth doing and that any important content should exist in the forms you listed to promote discovery and awareness—audio version, EA Forum linkpost, Twitter thread, Tiktok video, etc.

Besides the Handbook, the CEA has this introductory page which strikes me as absolutely fantastic. Maybe they ought to link to the introductory page from the beginning of the handbook?

Point of possible disagreement: I think that the deficit of good summaries looks less severe than you've suggested if one's main heuristic is to look at Google search results rather than EA Forum results. When I imagine someone time-constrained trying to find out what Doing Good Better says, for example, I imagine them looking for the book on Google, and to me the results seem not too bad. (A few clicks took me to the CEA's introduction to effective altruism, in fact!) If I wanted to find out more, I could go read the book.

About the issue of you not being sure if you want to click on a Forum post, that sounds like a matter of UI on this site specifically. Are you imagining some kind of descriptive text that people could see? On computers, there is hover text of the first bit of the post. If people summarize their own posts, maybe that partly addresses the issue?

Edit: You mentioned The Precipice not having a very good summary on Wikipedia. Would editing Wikipedia articles on major EA works (or even topics connected to EA) be another action someone interested in this could take? Is there something like a Wikipedia editorial policy that would prevent us from doing this?