NB: Minor content warning for anyone in the community who might feel particular anxiety about questioning their own career decisions. Perhaps consider reading this first: You have more than one goal, and that's fine — EA Forum (effectivealtruism.org). This piece is about the importance of having non-impact focussed goals, and that it is extremely ok to have them. This post is intended to suggest what people should do in so far as they want to have more impact, rather than being a suggestion of what everyone in the EA community should do.

Your decisions matter. The precise career path you pick really matters for your impact. I think many people in the EA community would say they believe this if asked, but haven’t really internalised what this means for them. I think many people would get great returns, in terms of impact, from thinking more carefully about prioritisation for their career, even within the “EA careers” space. Here are some slight caricatures of statements I hear regularly:

“I want an ‘impactful’ job”

“I am working on an important problem, so I'll do what I think is interesting”

“I was already interested in something on 80,000 Hours, so I will work on that”

“People seem to think this area is important, so I suppose I should work on that”

“I am not a researcher, so I shouldn’t work on that problem”

I think these are all major mistakes for those who say them, in so far as impact is their primary career goal. My goals for this post are:

1. To make the importance of prioritisation feel more salient to members of the community (the first half of my post).

2. To help making progress feel and be more attainable (the second half, from “What does thinking seriously about prioritisation look like?”).

Key Claims

- For any given person, their best future ‘EA career paths’ are at least an order of magnitude more impactful than their median ‘EA career path’.

- For over 50% of self-identifying effective altruists, in their current situation:

- Thinking more carefully about prioritisation will increase their expected impact by several times.

- There will be good returns to thinking more about the details of prioritising career options for yourself, not just uncritically deferring to others or doing very high-level “cause prioritisation”.

- They overvalue personal fit and prior experience when determining what to work on.

I think the conclusions of my argument should excite you.

Helping people is amazing. This community has enabled many of us to help orders of magnitude more people than we otherwise would have. I am claiming that you might be able to make a similar improvement again with careful thought, and that as a community we might be able to achieve a lot more.

Defining prioritisation in terms of your career

Prioritisation: Determining which particular actions are most likely to result in your career having as much impact as possible. In practice, this looks like a combination of career planning and cause/intervention prioritisation. So, “your prioritisation” means something like “your current best guess of what precisely you should be doing with your career for impact”.

Career path: The hyper-specific route which you take through your career, factoring in decisions such as which specific issues/interventions to work on, which organisations to work at, and which specific roles to take. I do not mean to be as broad as “AI alignment researcher” or “EA community builder”.

‘EA’ person/career path: By this I mean a person or choice which is motivated by impact, not necessarily in so-called ‘EA’ organisations or explicitly identifying as part of the community.

For any given person, the best “EA” career paths are >10x better than the median EA career paths:

Many EAs seem to implicitly assume there is a binary between EA and non-EA careers; that by choosing an area that is listed by 80,000 Hours, your thinking about prioritisation is done, and you are pursuing an “EA career path”. Insofar as this is true, I think this is a mistake according to their own values, and that they are probably misevaluating their options.

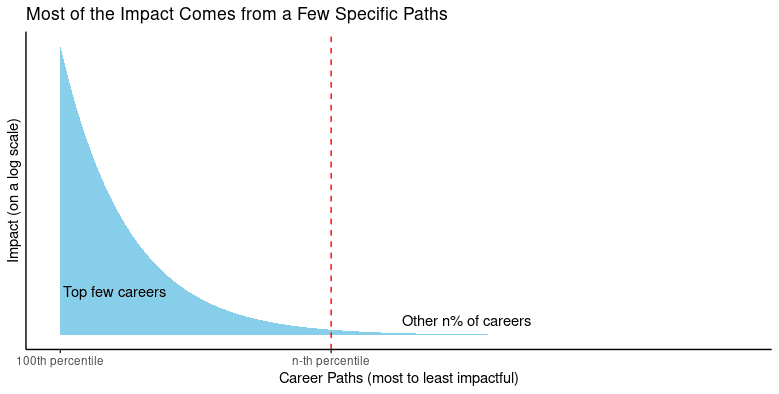

If you were to travel into the future and look back at the outcomes of all possible “EA” career paths that you might take, a large proportion of the total impact would come from a small number of these paths. The 10x number in the subtitle for conveying the difference between these best options and the median is a conservative lower bound of what I think this theory implies. I think my claim can be most easily understood graphically (depicting a power-law distribution):

(It would be arbitrary to attach quantifications to these proportions since a career’s impact is too complex to be calculated or measured accurately, but my bottom-line still stands for a range of proportions.)

Why might this be the case?

Many distributions in the world follow this kind of shape, such as wealth and the cost-effectiveness of health interventions. There are some more examples and discussion in this EA Forum post: All moral decisions in life are on a heavy-tailed distribution.

This research report by Max Daniel and Benjamin Todd indicates that the most important factors for determining the heavy-tailedness of performance in a domain are:

- Complexity: The number and difficulty of multiplicative sub-tasks which constitute the domain.

- Scalability: Whether performance can vary by orders of magnitude in practice.

If we think of the domain as “maximising the impact of your career”, it seems intuitive that it would be both very complex and very scalable. There are numerous semi-independent decisions to make relating to your career that I would expect to be very difficult by default (eg. determining which intervention it would be best to work on within an area).

The domain probably has significant scalability because we should expect making good decisions to make you more likely to make further good decisions, by improving your ability to model the world accurately and gaining relevant expertise. This means that we are more likely to get extreme results of people making successive good (or bad) decisions, so the total plausible range should be large if we accept my model presented below.

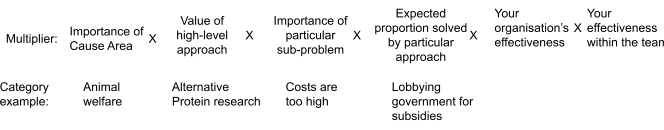

You can model your career path as a long series of decisions, each of which is a multiplier for your impact. Even if the possible variation in impact for each of these factors spans only 1 full order of magnitude, then the product would still vary by several orders of magnitude. Thinking about effectiveness as multipliers is explored in Thomas Kwa’s post here, but I think it misses some of its implications. This is a heavily simplified potential model of multipliers for how the impact of a career path could work:

Why we should model these factors as multipliers and not a sum

These decisions can be seen as multiplicative since successfully achieving impact in your career relies on getting multiple factors right, and not making large mistakes. Even if one successfully picks the most important cause and particular problem, choosing a useless approach or a completely ineffective organisation will lead to negligible impact. Likewise, even if you choose a highly effective approach or sub problem, you are severely limiting your impact if you choose a much less important cause area.
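To make the multiplier framing more concrete, here is a minimal Python sketch. It is an illustration under made-up assumptions, not a measurement of anything: the five factor names, the choice to draw each multiplier log-uniformly between 1 and 10, and all function names are hypothetical. It simulates many random career paths as products of multipliers, then compares how one badly chosen factor affects a product versus a sum.

```python
import math
import random
import statistics

# Hypothetical decision factors; the names and ranges are illustrative only.
FACTORS = ["cause area", "problem", "approach", "organisation", "role"]


def sample_path_impact(rng, n_factors=len(FACTORS), low=1.0, high=10.0):
    """Return the impact of one random career path.

    Each factor is a multiplier drawn log-uniformly from [low, high],
    i.e. it spans one order of magnitude with the defaults.
    """
    log_low, log_high = math.log10(low), math.log10(high)
    impact = 1.0
    for _ in range(n_factors):
        impact *= 10 ** rng.uniform(log_low, log_high)
    return impact


def simulate(n_paths=10_000, seed=0):
    """Simulate many paths and summarise the spread of their impact."""
    rng = random.Random(seed)
    impacts = sorted(sample_path_impact(rng) for _ in range(n_paths))
    return {
        "median": statistics.median(impacts),
        "top_1_percent": impacts[int(0.99 * n_paths)],
        "worst": impacts[0],
        "best": impacts[-1],
    }


summary = simulate()
print(f"top-1% path vs median path: {summary['top_1_percent'] / summary['median']:.0f}x")
print(f"best path vs worst path: {summary['best'] / summary['worst']:.0f}x")

# Product vs sum: one badly chosen factor (0.01) among otherwise good ones (10).
good_path = [10, 10, 10, 10]
one_mistake = [10, 10, 10, 0.01]
product_ratio = math.prod(one_mistake) / math.prod(good_path)
sum_ratio = sum(one_mistake) / sum(good_path)
print(f"as a product, the mistake leaves {product_ratio:.1%} of the impact")
print(f"as a sum, it would leave {sum_ratio:.1%}")
```

Under these assumptions, the wide spread between paths comes entirely from multiplying several modest factors together. The second comparison shows why a single near-zero multiplier matters far more in a product than it would in a sum.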

Looking for large multipliers

The idea that there are large differences in importance between problems and the effectiveness of interventions is one of the core premises of EA, and I don’t think this is a controversial view. As per the above model, we can conceive of these differences as where there are large impact multipliers for choosing the best options. Exactly where to expect the largest actual multipliers will depend on your particular worldview, but generally I would expect the biggest difference to be at the broader decisions (cause area, high-level approach) due to their importance ranging over the largest scale.

One way to think about where to expect the largest multipliers is by looking at the underlying moral and empirical questions/assumptions which, depending on their answer, would make the biggest difference for what you should work on. A few basic examples:

- Should we expect the future of humanity to be astronomically big?

- The significant possibility of a big future makes longtermist work several orders of magnitude more valuable.

- Here is a good post demonstrating how important this consideration is, providing useful quantification on the value of X-risk work for different sizes of the future.

- How much do you weigh the welfare of animals and potential digital minds relative to humans?

- This could cause the value of work on each area to vary by orders of magnitude, and potentially make s-risk work far more impactful.

- What is the chance of space colonisation happening this century?

- This causes the value of space governance to vary by orders of magnitude, as well as the potential importance of animal welfare. If high, it could reduce your X-risk estimates significantly too depending on your threat models.

These are just a few of the many questions which exemplify how the value of different areas can vary by orders of magnitude, and the importance of getting them right. Obviously, these are all very hard questions where I expect many in the EA community have substantial disagreements. However, I think they are worth spending time on nevertheless.

Multipliers that are close to 0

Conversely, consider the decision branches which would suggest that the value of your work goes to ~0. It should be fairly intuitive that these exist; consider what might make a path obsolete, or even negative. This will depend on your particular field, but some potential examples could be:

- Animal welfare research which has no chance of ever being implemented because it won’t ever be politically feasible.

- AI alignment research which takes so much further work to produce positive results that it misses the action window, and so will not be valuable in the large majority of AI timeline scenarios.

- Working on a global development project which is surpassed by a more cost-effective approach targeting the same problem, with which you are competing for funding.

Why thinking about prioritisation can help you reach the best career paths for you

By default, you should expect thinking about prioritisation-relevant decisions to improve them.

If the actual impact of different paths differs substantially, it would be surprising if you had no ability to distinguish between them. Thinking carefully about prioritisation has helped identify cause areas and interventions which are far more cost-effective than others, and the strong differences of opinion on prioritisation within the EA community suggest that there are large differences in expectation. So it would be surprising if somehow we had identified a tier of indistinguishable problems.

In general, spending time thinking about something leads to better decisions. The question of “how much better can you make decisions by spending more time thinking about them?” is an important one, but if you accept the high stakes then even small improvements can improve your expected impact significantly.

Good decision-making compounds

Modelling the world accurately relies not just on specific knowledge and experience, but also can be thought of as having an underlying skill, which can be approximated to “judgement”. It is reasonable to think that thinking about prioritisation will improve your judgement by allowing you to practise modelling the world and gathering additional relevant knowledge and viewpoints, which will increase the chance that you make further good decisions. Additionally, making your thinking explicit allows you to get feedback on your views as more research is done and world events unfold which can validate the accuracy of your models based on what you expected to happen. Improving judgement means you get disproportionate returns to spending time trying to make good decisions since you improve both your current and future decisions, increasing your chances of reaching “the heavy tail”.

Having clear models helps you make useful updates

Even if you develop a prioritisation model which has sufficiently large uncertainties as to not warrant you changing your actions, clearly understanding what your cruxes and uncertainties are will allow you to update more easily on new information and make clear improvements to your impact when possible. It also makes your thinking more legible to others, allowing them to improve your prioritisation more easily.

Thinking about prioritisation allows you to be more entrepreneurial

Thinking about what questions are truly important allows you to identify where the most exciting gaps in current work might be, and what questions might be most important to push for further work on. Understanding this facilitates you being a stronger leader, since you have a position that doesn’t rely heavily on deference and allows you to use your view to set a distinct direction. The world is changing rapidly and the continual evolution of important problems means that we should expect to often be bottlenecked by our ability to design innovative solutions or research agendas, which makes being more entrepreneurial far more useful.

Other useful effects:

Even if you are unsure you can make substantially better decisions by taking prioritisation more seriously, the following reasons might still make it worth doing.

Improving your epistemics

In my experience, thinking seriously about prioritisation is great for encouraging me to be in a “scout mindset”. Questioning where I might be wrong, making myself put views in writing to get feedback, and considering the bigger picture have all had a distinct effect on my ability to think clearly in general. The habit of actively thinking in a prioritisation framework is also very helpful for making better decisions in other work areas.

Improving community epistemics

In the current community, there seems to be an unideal blend of:

- People deferring to people who are also just deferring themselves, resulting in few people knowing whose view they are really deferring to.

- People who are perceived to have thought consciously about prioritisation but actually haven’t, causing inaccurate outside-view weightings.

- People who think for a few hours about cause prioritisation and decide on a poorly thought through answer, the importance of which is not reflected in the time spent. The most common example is people deciding AI safety is the most important thing for them to work on because that is the area which has associated status in the community. See this recent post for some thoughts on this.

An EA community in which most people are actively thinking about prioritisation on a regular basis is likely to perform better than a community where there is heavy deference. It would be less prone to groupthink, and so more likely to catch important mistakes and discover new exciting areas. Doing your part to establish norms around this also helps to shift social status away from conclusions and towards careful thought.

I am in favour of “broad-tent” EA in so far as people have actually thought seriously about what might be best and decided on different conclusions. I worry that the distribution of resources in the community is currently misallocated because of misconceptions about how people have thought about prioritisation.

Providing motivation

Perhaps this isn’t for everyone, but for me there is something exciting and motivating about trying to build my own detailed picture of what I think the best thing for me to work on is. It feels much more like I have agency, and am empowered to make decisions for myself.

What does thinking seriously about prioritisation look like?

Everyone’s situation and priorities will vary, which means that I would encourage you to think about what is best for you. What follows is my opinion, and is perhaps a place to start rather than the final say. There are 4 points which I would argue constitute taking prioritisation within EA ‘seriously’:

- Isolating impact from your other goals – To be clear, I actively encourage you to have other goals. However, I think it is important to try to independently work out what your best options might be for impact, and then only afterwards add other constraining goals (eg. wanting a certain salary) on top. Trying to deal with them all simultaneously is messy and I think generally leads to worse judgement calls from my experience.

- Not being afraid to zoom out and survey the landscape of options – I think many people only look within the narrow scope of their cause area or particular subfield, when I think it is very possible they are stuck at some local maximum and they are missing huge opportunities elsewhere.

- A subpoint here is to be ambitious. If you buy the distribution of your potential career paths’ impact which I depicted above, it would be surprising if the very best options were not from you being ambitious.

- Being action-driven and asking yourself difficult questions – It is critical to actually be action-driven and focus on questions or uncertainties which might change your plans as a result, otherwise you are wasting your time. Asking yourself questions about the possibility of changing job or field is hard and likely feels aversive to many. However, confronting these uncertainties is what is likely to help you start making better decisions.

- Being prepared to repeat and iterate – As made clear previously, it is not simply the general cause area you work on that matters. The specifics of what you do will make a huge difference in most cases. It is important to not just think about prioritisation once but instead make prioritisation an iterative process. Of course the world will develop and the information available to you will change, and this will likely shift what the best exact route is for you.

A more concrete suggestion:

This is intended to provide a more tangible process to act as a starting point for how I think people can go about improving their prioritisation, but you should certainly change things according to your preferences.

- Write the argument for your current best guess of what you think is the most impactful thing for you to work on.

- Akin to Neel Nanda’s suggestion here, spend an hour reflecting and writing the strongest justification you can for what you currently think is best.

- Poke holes and write counterarguments by yourself for another hour, in writing.

- Question the assumptions you are making, question what evidence you have to support the most important claims, and identify the largest and smallest potential multipliers as discussed.

- How much thinking went into your decision to initially prioritise what you currently do? If not much, it would be quite suspicious if what you were currently doing is best.

- Another framing could be “what would knowledgeable and thoughtful people who have different priorities to me say? What are the cruxes of disagreement I might have with them?”

- Reflect on how much you buy the counterarguments now, and make a list of the important cruxes/uncertainties you feel least confident in.

- Using this list of uncertainties, write down what you think the best alternative options might be if different combinations of these changed from your current best guess. It is probably best to consult someone else on this who might have more context/experience than you, if possible.

- Now look at this list and the arguments you made for and against what your current best guess is. Reflect on what feels emotionally salient to you. What are you curious about? What can you picture yourself going and looking into?

- Try to form/improve your views on what you feel most curious about from your list. Suggestions for this:

- Engaging with broad introductory materials for areas where you have low context. Eg. AGI Safety Fundamentals or an 80,000 Hours problem profile like this Nuclear war page.

- I think a key aim here is to improve your understanding to the point where you can break the larger topic down into important sub-questions you may want to then focus on.

- Talking to experts and absorbing their models.

- Even experienced EAs are generally exceptionally kind to (polite) cold emails. Even if they don’t have the capacity to meet with you, they can often point you to people who will or other helpful resources.

- EAGs are a great way to do this. For example, I felt uncertain about the distribution of power within the UK political system, and at EAG London this year I spoke to almost a dozen relevant people about this, so I could then synthesise their views and greatly improve the nuance I had on the topic.

- This doesn’t mean defer quickly - there are normally a variety of views within a field on any given subject which you should understand before rushing to uncritically accept a single stance.

- Say intuitive/unconfident hot takes to others and get feedback from peers/more knowledgeable people.

- This is personally what I have found most useful (although I have the fortune of living in an EA hub and thus being around knowledgeable people on a daily basis), as it allows me to make quite quick and cheap updates and introduce considerations I had missed.

- Learning By Writing.

- A process for forming weak hypotheses quickly is writing them out, and then reviewing and revising them. This is very similar to the general prioritisation method discussed in this post.

- Getting experience in the field.

- This seems quite important for more precise details of how particular types of work function, how you might fit into it, and how important the issue might actually be.

- Obviously, this is more expensive than the above options, and I would suggest this is the thing to do last. It doesn’t have to be a full-time job, but could be an internship/fellowship, side project, or even volunteering where appropriate.

- Repeat at an interval which works for you.

- It is important not to see prioritisation as a one-off process, and instead I suggest that to successfully make large improvements to your expected impact, you need to repeat it.

- As a very rough anchoring point, every 6 months might make sense for someone in full-time work. Perhaps more frequently would make sense for students/others who have less settled views.

- I expect the first time most people do this will be the longest and most intensive as you won’t have a clear, existing framework to start from. So the first time might be very difficult and strenuous, but it will likely get easier!

Incorporating personal fit:

(This contradicts the advice from more experienced career advisors, so take it with the appropriate pinch of salt.)

There is no doubt that personal fit is an important consideration for career choice. However, I think that it is severely overvalued as a determinant of which problem you work on. I would suggest prioritising initially without regarding personal fit, and then carefully ruling out some potential options in accordance with your personal fit. I think there are 2 key claims I would make to support this:

There are normally multiple bottlenecks for any given issue, and they aren’t always obvious, so don’t associate personal fit with an entire problem area.

Note that I use AI safety as an example here because I had been thinking about this idea recently in that context, but think that this is applicable to other causes.

I often hear statements like “I think AI safety is definitely the most important issue, but I don’t like research/am not a technical person, therefore I should go and work on something else”. I think this is a huge error in prioritisation.

Firstly, there are lots of ways to plausibly contribute to the problem. To name a few: operations, earning to give, policymaking, governance research, field-building, advocacy. While it is important to consider how much these particular skills are marginally useful in the field, you should investigate this rather than dismissing a field outright.

Secondly, even if you decide that the sheer number of technical alignment researchers is by far the most important way to address threats from AI, then you can address this without doing the research yourself. Alignment research is currently funding constrained, and so perhaps donating a large proportion of your salary or earning to give is a great way to help more alignment research happen. Particular types of operations skillsets and field-building also feel valuable in this worldview.

People underrate the difference in importance of problems compared to the cost of transitioning fields

As outlined, I think you can make progress on assessing the relative importance of different EA problems, and it seems likely that there are orders of magnitude difference between them depending on your empirical beliefs. If there really is such a difference between problems, then one should expect the value of working on a more important cause area to be much greater.

My guess is that the time needed to upskill in areas generally considered to be important in EA is worth the cost in many more cases than people seem to think. Many areas of research (AI alignment/governance, animal sentience, space governance, S-risks, civilizational resilience etc) are quite new, and my understanding is that one can reach the frontier of knowledge fairly quickly relative to established fields such as physics, and that the skills previously developed will still be useful. Often there can be surprisingly useful overlaps or contributions from different disciplines. As an example, PIBBSS are primarily biologists looking to contribute towards AI alignment.

The core point here seems even more true for non-research roles (eg. communications, operations etc), where deep, field-specific knowledge is less useful, and the skills seem both more learnable and transferable.

When to stop thinking about prioritisation?

I don’t think every EA needs to be thinking more about prioritisation, and obviously the ideal proportion of your time spent thinking about prioritisation in your career is not 100% (otherwise you would never get anything done), so it is important to think about what you are hoping to achieve.

I agree with this 80,000 Hours page and this post by Greg Lewis that a sensible time to stop initially is when your best guess for what you should be doing stops changing much with further research. I think it is important to note two points here, however:

- It is easy for this to be the case when you aren’t taking it very seriously, and you could otherwise be making useful updates.

- It is important to reiterate that this is not a singular action taken at the beginning of your career, but rather is something you should regularly reflect on.

I think the way to manage both of these points is to set points at regular intervals where you take time to zoom out, potentially with the help of a friend/colleague.

I think you should also realise that ideally, once you have developed a framework for your prioritisation, you will be slotting in new information as you learn it and updating passively, almost subconsciously. I think it is good to try to notice and catch important updates when they happen, but still take the opportunity to do proper regular reviews in order to make sure your updates actually become actionable.

Advice on forming your own views on key uncertainties

This feels like the hardest part of prioritisation to me, and so I thought it was worth slightly fleshing out what I found to be very helpful pieces of advice.

It is ok to start with not knowing much, even if you think you are “supposed” to have thought more about things. Prioritisation is hard.

Not internalising this is what stopped me from thinking more about prioritisation for a long time. It felt embarrassing that I, as a community builder, didn’t have my own inside view on important EA issues. I made the ingenious move to then avoid thinking about this too much, and to try and obscure my lack of knowledge.

At a retreat, I attended a talk in which the speaker asserted that it was ok to not have great existing ideas and that this was very difficult, and I think this made a huge difference to my trajectory in hindsight. I found being told this was key to me actually getting past my insecurity, and I would like to emphasise that it is difficult and it is ok to start from very fundamental things. Honestly, the best returns to this prioritisation process are likely when you don’t know much.

Make your own thinking very explicit

Writing your argumentation down is great for finding gaps in your reasoning, and allowing others to give feedback on it. This can be quite difficult, but in my opinion is seriously worth doing. In my own experience, I have found that it is far easier to sneak in biases or other mistakes when only discussing my views in quick conversation.

Seriously go and talk to people

It is generally very important to talk to others about your prioritisation. This is for your own sanity/motivation, getting essential feedback on your thinking, and understanding others’ models to compare with your own. Perhaps surprisingly, even senior EAs seem to be quite willing to have a meeting with you if you have a clear aim.

Follow what is actually important/exciting for you

I think a failure mode is starting with abstract, fairly intractable questions like “what is my exact credence in person-affecting views?”. This generally leads to a lack of motivation and not much progress. Instead, I would chase what feel like the most salient things to you, after some reflection. This is important for motivation, orientating in a truth-seeking way, and actually being willing to update on the information you learn.

Buy, not browse (usually)

This means that you should be deliberate and specific in targeting your questions/uncertainties (ie. “buying”) with research/discussions, for the sake of efficiency and actually making updates. The alternative, which you should avoid, is “browsing” – simply reading a semi-random assortment of material on a broad subject (eg. browsing the EA forum) without much direction, which is generally not conducive to improving your prioritisation.

The exception here might be if you are extremely new to the area, so you don’t have an idea of what the important questions/uncertainties are. A good place to start for this is 80,000 Hours’ problem profiles, which often list key cruxes for valuing particular areas.

Mistakes to avoid when thinking about prioritisation

Anchoring too much on what opportunities you have in the short-term

A common mistake seems to be that people have an ambitious long-term career goal but aren’t able to directly move towards it with their current options. Job applications often have a heavy factor of luck, and swings in the job market can mean that some roles are hard to get for a period of time.

If you think working in AI safety communications is the best thing for you to do long-term, and you get rejected from the 2 jobs which were available at the time you were job-hunting, then it doesn’t make sense to anchor on a systems operations job you happened to receive an offer from. Instead, you should apply for other communications jobs which will allow you to build relevant skills. Even if for financial reasons you have to take the systems operations job, you should not necessarily lose sight of your previous prioritisation. Of course it is important to consider whether you might be a good fit for communications roles, but generally people seem to update too quickly.

Trying to form an inside view on everything

Forming your own detailed, gears-level model of any complex question is very difficult. It is absolutely impossible to do this for all the possible questions relevant for your prioritisation. You should prioritise according to what feels important for you to know well, and what is tractable. For the rest, you have to rely on “deliberate deference”. You will know you are deferring deliberately when you are able to sum up the different positions in the relevant field(s), decide which position’s advocates you epistemically trust more, and explain why you trust them more. This is a good distillation of a piece on thinking about this: In defence of epistemic modesty [distillation]

Naive optimisation

I think it would be easy to take this post as a suggestion to form a “radical inside view” which is overconfident and followed at the expense of outside views and other goals. I would encourage people to not be naive utilitarians, and instead appropriately weight other factors such as overheads of changing positions, financial security, non-EA views, etc. Additionally, while I have argued that people overrate issues like personal fit, there are real differences which may be more significant for your personal situation.

Consider the Eliezer Yudkowsky tweet: “Go three-quarters of the way from deontology to utilitarianism and then stop. You are now in the right place. Stay there at least until you have become a god”.

Being paralysed by uncertainty

Once you have highlighted to yourself how much you don’t know and how important these decisions are, it would be easy to either give up or spend forever on prioritisation at the expense of doing work. To those who might give up, I would emphasise the stakes and highlight that even small improvements could make a big difference. There is also value in just laying out your uncertainties explicitly to help build your prioritisation later. Taking forever is equally bad; consider that you are making real trade-offs with career capital and skill development which will be important for accessing the best paths. Therefore, it is important to ensure you are actually making action-relevant progress, and to remember that it is ok to make decisions under even very important uncertainties.

Useful resources for prioritisation:

This list is not extensively researched and is more like “things I have come across either during research for this piece or randomly otherwise”, and so please suggest things to add!

On heavy-tailed distributions and the differences between opportunities:

- Prospecting for Gold

- Why the problem you work on is the biggest driver of your impact - 80,000 Hours

- How much does performance differ between people?

- All moral decisions in life are on a heavy-tailed distribution

- Effectiveness is a Conjunction of Multipliers

Help for thinking broadly about prioritisation:

- EAs underestimate uncertainty in cause prioritisation (see the top comment for a further list of resources)

- Career exploration: when should you settle?

- Notes on good judgement and how to develop it - 80,000 Hours (uses judgement in a slightly different way to how I defined it above, but very useful)

- EA cause areas are just areas where great interventions should be easier to find

- How to compare global problems for yourself - 80,000 Hours

- Terminate deliberation based on resilience, not certainty

- In defence of epistemic modesty

- Some thoughts on deference and inside-view models

Forming views on empirical uncertainties:

- Post 47: How I Formed My Own Views About AI Safety — Neel Nanda (most of it generalises beyond AI safety)

- Minimal-trust investigations - advice on when/how to question your default assumptions.

- The Wicked Problem Experience - advice for looking into particularly thorny issues, which often come up in prioritisation.

- Learning By Writing - advice for developing a view on an issue by writing.

Why you might want to act as if my claims are true, even if you have significant doubts:

The potential upsides of my claims being true are much greater than the downsides of time wasted if I am wrong. If I am right then you may be able to fairly cheaply increase your work’s impact by several times, which significantly outweighs any reasonable amount of time invested in thinking about prioritisation. Unless you think that taking prioritisation seriously would be net negative for your decisions, the arguments made about motivation and about both individual and community epistemics also still apply. If you have any significant credence in my argument, this should push you towards taking prioritisation seriously when wanting to pursue impact.
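
To make the shape of this argument concrete, here is a rough expected-value sketch. Every number in it (the 20% credence, the 3x multiplier, the 80,000-hour career and the 200 hours of reflection) is a made-up assumption for illustration, not an estimate from this post, and the function name is hypothetical.

```python
def expected_gain(credence, multiplier, career_hours, hours_spent):
    """Expected net gain from reflecting on prioritisation.

    Measured in 'hours of baseline-impact work'. All inputs are
    hypothetical; this is an illustration, not a real estimate.
    """
    # Hours left for direct work after spending time on prioritisation.
    remaining = career_hours - hours_spent
    # If the claims are true, each remaining hour is worth `multiplier` times more.
    value_if_true = multiplier * remaining
    # If the claims are false, the reflection time bought nothing.
    value_if_false = remaining
    expected = credence * value_if_true + (1 - credence) * value_if_false
    # Compare against a career with no time spent on prioritisation.
    return expected - career_hours


# Assumed inputs: 20% credence, 3x multiplier, 80,000-hour career, 200 hours spent.
gain = expected_gain(credence=0.2, multiplier=3.0, career_hours=80_000, hours_spent=200)
print(f"Expected net gain: {gain:.0f} baseline hours")
```

Under these assumptions the expected gain is large and positive, and it stays positive for much lower credences. The sketch ignores the case where reflection makes your decisions worse, which is the exception noted above.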

Acknowledgements:

A big thanks to Daisy Newbold-Harrop for herculean feedback efforts, and I also really appreciate Suryansh Mehta and Isaac Dunn for spending time reviewing earlier drafts.

I’d also like to credit Isaac Dunn for being the key person who encouraged me to take prioritisation more seriously in the first place, using arguments similar to some of those found in this post. This post would not have happened without them. Henry Sleight and Will Payne were also critical for shaping how I orientate towards prioritisation myself, and so thank you to them.

Thanks, this post is interesting. I've often experienced the frustration that EA seems to really emphasise the importance of cause prioritisation, but also that the resources for how to actually do it are pretty sparse. I've also fallen into the trap of 'apply for any EA job, it doesn't matter which', and have recently been thinking that this was a mistake and that I should invest more time in personal cause prioritization, including more strongly considering causes that EAs don't tend to prioritize, but that I think are important.

I think the idea of 'heavy-tailedness' can be overused. I'd need to look more into the links to thoroughly discuss this, but a few points:

(1) By definition, not everyone can be in the heavy tail. Therefore, while it might be true that some job opportunities that exist are orders-of-magnitude more impactful than my current job, it's less clear to me that those opportunities aren't already taken.

Concretely, a job at AMF is orders-of-magnitude more impactful than most jobs, but they're not hiring afaik, and even if they were, they might not hire me.

And you might say 'ok, well, but that's a failure of imagination if you only think that roles at famous EA orgs are super high impact - maybe you should be an entrepreneur, or...'

But my point is... it seems like by definition, not everyone can have exceptional impact/be in the heavy tail.

(2) As an EA, I shouldn't care about whether I personally am in an unusually-high-impact role, but that the best-suited person does it, by which I mean 'the person who is most competent at this job but who also wouldn’t do some other, more impactful job, even more competently'. So maybe some EAs take a view like 'well, I'm not sure exactly which EA jobs are the most impactful, but I'll just contribute to the EA ecosystem which supports whichever people end up with those super-impactful roles'.

Thanks for the thoughtful comment Amber! I appreciate the honesty in saying both that you think people should think more about prioritisation and that you haven't always yourself. I have definitely been like this at times and I think it is good/important to be able to say both statements together. I would be happy/interested to talk through your thinking about prioritisation if you wanted. I have some other accounts of people finding me helpful to talk to about that kind of thing as it happens frequently in my community building work.

Re. (1), I agree that not everyone can be in the heavy tail of the community distribution, but I don't think there are strong reasons to think that people can't reach their "personal heavy tail" of their career options as per the graph. Ie. they might not all be able to have exceptional impact on a scale relative to the world/EA population, but they can have exceptional impact relative to different counterfactuals of them, and I think that is something still worth striving for.

For (1) and (2), I guess my model of the job market/impact opportunities is less static than I think your phrasing suggests you think about it. I don't think I conceive of impact opportunities as being a fixed number of "impactful" jobs at EA orgs that we need to fill, and I think you often don't need to be super "entrepreneurial" per your words to look beyond this. Perhaps ironically, I think your work is a great example of this (from what I understand). You use your particular writing skills to help other EAs in a way that could plausibly be very impactful, and this isn't necessarily a niche that would have been filled if you hadn't taken it. It seems like there are also lots of other career paths (eg. journalism, politics, earn to give etc) which have impact potential probably higher for many people than typical EA orgs, but aren't necessarily represented in viewing things the way I perceived you to be. Of course there are also different "levels" of being entrepreneurial too which mean you aren't really directly substituting for someone else even if you aren't founding your own organisation (such as deciding on a new research agenda, taking a team in a new direction etc).

I think you might have already captured a lot of this with your "failure of imagination..." sentence, but I do think that what I am saying implies that people are capable of finding their path such that they can reach their impact potential. Perhaps some people will be the very best for particular "EA org" jobs, but that doesn't mean others can't make very impactful career paths for themselves. I agree that in some cases this might look like contributing to the EA ecosystem and using particular skills to be a multiplier on others doing work you think is really important, but I don't think it is a binary between this and working in a key role at an "EA org".

Thank you for this post, it really resonated with me.

I think people get recruited by EA relatively fast. Go to an intro fellowship, attend an EAG/retreat, take ideas seriously, plan your career…

This process is too fast to actually grasp the complex ideas needed to do good cause prioritization. After this 30+ hour EA learning phase, you stop the “learning mindset” and start taking ideas seriously and acting on top problems. I was surprised to see the amount of deference at my first EA event. Even a lot of “Experienced EAs” did not have even a rough understanding of really serious topics like AGI timelines and their implications.

Anyway, I was planning to take a “Prioritization Self-Internship” where I study for my prioritization full-time for a month, and this post made me take that more seriously.

People get internships to explore really niche and untransferable stuff. Why not do an internship on something more important, neglected, and transferable like working on prioritization?

I’ll just read, write, and get feedback full-time. I want to have a visual map of arguments at the end of it where it shows all of my mental “turns” on how I ended up on that conclusion. I’d also be able to update my conclusion easily with new information I can add to the map. It’ll also be easy to get criticism with the map as well.