(This is a cross-post from my blog. The formatting there is a bit nicer. Most importantly, the tables are less squished. Also, the internal links work.)

Summary

Meta: This post attempts to summarize the interdisciplinary work on the (un)reliability of moral judgments. As that work contains many different perspectives with no grand synthesis and no clear winner (at present), this post is unable to offer a single, neat conclusion to take away. Instead, this post is worth reading if the (un)reliability of moral judgments seems important to you and you'd like to understand what the current state of investigation is.

We'd like our moral judgments to be reliable—to be sensitive only to factors that we endorse as morally relevant. Experimental studies on a variety of putatively irrelevant factors—like the ordering of dilemmas and incidental disgust at time of evaluation—give some (but not strong, due to methodological issues and limited data) reason to believe that our moral judgments do in practice track these irrelevant factors. Theories about the origins and operations of our moral faculties give further reason to suspect that our moral judgments are not perfectly reliable. There are a variety of responses which try to rehabilitate our moral judgments—by denying the validity of the experimental studies, by blocking the inference to the people and situations of most concern, by accepting their limited reliability and shrugging, by accepting their limited reliability and working to overcome it—but it's not yet clear whether any of them do or can succeed.

(This post is painfully long. Coping advice: Each subsection within Direct (empirical) evidence, within Indirect evidence, and within Responses is pretty independent, so feel free to dip in and out as desired. I've also put a list-formatted summary at the end of each of these sections boiling down each subsection to one or two sentences.)

Intro

"Dan is a student council representative at his school. This semester he is in charge of scheduling discussions about academic issues. He often picks topics that appeal to both professors and students in order to stimulate discussion."

Is Dan's behavior morally acceptable? On first glance, you'd be inclined to say yes. And even on the second and third glance, obviously, yes. Dan is a stand-up guy. But what if you'd been experimentally manipulated to feel disgust while reading the vignette? If we're to believe (Wheatley and Haidt 2005), there's a one-third chance you'd judge Dan as morally suspect. 'One subject justified his condemnation of Dan by writing "it just seems like he's up to something." Another wrote that Dan seemed like a "popularity seeking snob."'

The possibility that moral judgments track irrelevant factors like incidental disgust at the moment of evaluation is (to me, at least) alarming. But now that you've been baited, we can move on to the boring, obligatory formalities.

What are moral judgments?

A moral judgment is a belief that some moral proposition is true or false. It is the output of a process of moral reasoning. When I assent to the claim "Murder is wrong.", I'm making a moral judgment.

(Quite a bit of work in this area talks about moral intuitions rather than moral judgments. Moral intuitions are more about the immediate sense of something than about the all-things-considered, reflective judgment. One model of the relationship between intuitions and judgments is that intuitions are the raw material which are refined into moral judgments by more sophisticated moral reasoning. We will talk predominately about moral judgments because:

- It's hard to get at intuitions in empirical studies. I don't have much faith in directions like "Give us your immediate reaction.".

- Moral judgments are ultimately what we care about insofar as we call the things that motivate moral action moral judgments.

- It's not clear that moral intuitions and moral judgments are always distinct. There is reason to believe that, at least for some people some of the time, moral intuitions are not refined before becoming moral judgments. Instead, they are simply accepted at face value.

On the other hand, in this post, we are interested in judgmental unreliability driven by intuitional unreliability. We won't focus on additional noise that any subsequent moral reasoning may layer on top of unreliability in moral intuitions.)

What would it mean for moral judgments to be unreliable?

The simplest case of unreliable judgments is when precisely the same moral proposition is evaluated differently at different times. If I tell you that "Murder is wrong in context A." today and "Murder is right in context A." tomorrow, my judgments are very unreliable indeed.

A more general sort of unreliability is when our moral judgments as actually manifested track factors that seem, upon reflection, morally irrelevant[1]. In other words, if two propositions are identical on all factors that we endorse as morally relevant, our moral judgments about these propositions should be identical. The fear is that, in practice, our moral judgments do not always adhere to this rule because we pay undue attention to other factors.

These influential but morally irrelevant factors (attested to varying degrees in the literature as we'll see below) include things like:

- Order: The moral acceptability of a vignette depends on the order in which it's presented relative to other vignettes.

- Disgust and cleanliness: The moral acceptability of a vignette depends on how disgusted or clean the moralist (i.e. the person judging moral acceptability) feels at the time.

(The claim that certain factors are morally irrelevant is itself part of a moral theory. However, some factors seem to be morally irrelevant on a very wide range of moral theories.)

Why do we care about the alleged unreliability of moral judgments?

"The [Restrictionist] Challenge, in a nutshell, is that the evidence of the [human philosophical instrument]'s susceptibility to error makes live the hypothesis that the cathedra lacks resources adequate to the requirements of philosophical enquiry." —(J. M. Weinberg 2017a)

We're mostly going to bracket metaethical concerns here and assume that moral propositions with relatively stable truth-like values are possible and desirable and that our apprehension of these propositions should satisfy certain properties.

Given that, the overall statement of the Unreliability of Moral Judgment Problem looks like this:

- Ethical and metaethical arguments suggest that certain factors are not relevant for the truth of certain moral claims and ought not to be considered when making moral judgments.

- Empirical investigation and theoretical arguments suggest that the moral judgments of some people, in some cases, track these morally irrelevant factors.

- Therefore, for some people, in some cases, moral judgments track factors they ought not to.

Of course, how worrisome that conclusion is depends on how we interpret the "some"s. We'll address that in the final section. Before that, we'll look at the second premise. What is the evidence of unreliability?

Direct (empirical) evidence

We now turn to the central question: "Are our moral intuitions reliable?". There's a fairly broad set of experimental studies examining this question.

(When we examine each of the putatively irrelevant moral factors below, for the sake of brevity[2], I'll assume it's obvious why there's at least a prima facie case for irrelevance.)

Procedure

I attempted a systematic review of these studies. My search procedure was as follows:

- I searched for "experimental philosophy" and "moral psychology" on Library Genesis and selected all books with relevant titles. If I was in doubt based on the title alone, I looked at the book's brief description on Library Genesis.

- I then examined the table of contents for each of these books and read the relevant chapters. If I was in doubt as to the relevance of a chapter, I read its introduction or did a quick skim.

- I searched for "reliability of moral intuitions" on Google Scholar and selected relevant papers based on their titles and abstracts.

- I browsed the "experimental philosophy: ethics" section of PhilPapers and selected relevant papers based on their titles and abstracts.

- Any relevant paper (as judged by title and abstract) that was cited in the works gathered in steps 1-4 was also selected for review.

When selecting works, I was looking for experiments that examined how moral (not epistemological—another common subject of experiment) intuitions about the rightness or wrongness of behavior covaried with factors that are prima facie morally irrelevant. I was open to any sort of subject population though most studies ended up examining WEIRD college students or workers on online survey platforms like Amazon Mechanical Turk.

I excluded experiments that examined other moral intuitions like:

- whether moral claims are relative

- if moral behavior is intentional or unintentional (Knobe 2003)

There were also several studies that examined people's responses to Kahneman and Tversky's Asian disease scenario. Even though this scenario has a strong moral dimension, I excluded these studies on the grounds that any strangeness here was most likely (as judged by my intuitions) a result of non-normative issues (i.e. failure to actually calculate or consider the full implications of the scenario).

For each included study, I extracted information like sample size and the authors' statistical analysis. Some putatively irrelevant factors (order and disgust) had enough studies that homogenizing and comparing the data seemed fruitful. In these cases, I computed the $\eta^2$ effect size for each data point (the code for these calculations can be found here).

$\eta^2$ is a measure of effect size like the more popular (I think?) Cohen's $d$. However, instead of measuring the standardized difference of the mean of two populations (like $d$), $\eta^2$ measures the fraction of variation explained. That means $\eta^2$ is just like $R^2$. The somewhat arbitrary conventional classification sorts $\eta^2$ values into small, medium and large effects.
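To make the relationship between these effect size measures concrete, here is a minimal sketch of the textbook conversions, assuming the fraction-of-variance measure discussed above is eta squared ($\eta^2$) and the design is a simple between-groups comparison. This is not the code linked above; the function names are made up for illustration.

```python
import math


def eta_squared_from_f(f_stat: float, df_effect: int, df_error: int) -> float:
    """Eta squared from an F statistic in a one-way design.

    Algebraically the same as SS_effect / (SS_effect + SS_error).
    """
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


def eta_squared_from_t(t_stat: float, df: int) -> float:
    """Two-group case: t squared equals F with one numerator degree of freedom."""
    return t_stat ** 2 / (t_stat ** 2 + df)


def eta_squared_from_d(d: float, n1: int, n2: int) -> float:
    """Approximate conversion from Cohen's d via the point-biserial correlation r."""
    a = (n1 + n2) ** 2 / (n1 * n2)  # equals 4 when the two groups are the same size
    r = d / math.sqrt(d ** 2 + a)
    return r ** 2


# A hypothetical study with 30 subjects per group and t(58) = 2.0
print(round(eta_squared_from_t(2.0, 58), 3))
# A hypothetical "medium" Cohen's d of 0.5 with the same group sizes
print(round(eta_squared_from_d(0.5, 30, 30), 3))
```

Under these assumptions, a $t$ of 2.0 with 58 degrees of freedom corresponds to roughly 6.5% of variation explained, which shows how a result that clears the usual significance bar can still leave most of the variation in judgments unexplained.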

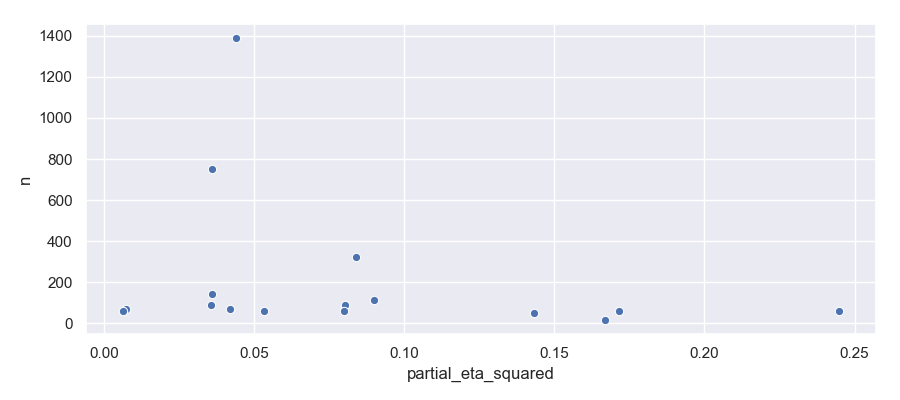

For the factors with high coverage—order and disgust—I also created funnel plots. A funnel plot is a way to assess publication bias. If everything is on the up and up, the plot should look like an upside down funnel—effect sizes should spread out symmetrically as we move down from large sample studies to small sample studies. If researchers only publish their most positive results, we expect the funnel to be very lopsided and for the effect size estimated in the largest study to be the smallest.
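The logic of the funnel plot can be illustrated with a small simulation. This is a sketch under stated assumptions, not an analysis of the studies reviewed here: the true effect (0.2), the sample sizes, the rough standard error formula, and the rule that only positive, significant results get published are all hypothetical choices.

```python
import random
import statistics


def simulate_studies(true_effect, sample_sizes, rng):
    """Return one (n, observed_effect, standard_error) tuple per sample size."""
    studies = []
    for n in sample_sizes:
        # Rough standard error of a standardized mean difference with total sample n
        se = 2 / n ** 0.5
        observed = rng.gauss(true_effect, se)
        studies.append((n, observed, se))
    return studies


def publish_only_significant(studies, z_crit=1.96):
    """Hypothetical filter: keep only positive results that reach significance."""
    return [s for s in studies if s[1] / s[2] > z_crit]


def mean_effect_by_size(studies):
    """Average observed effect for each sample size."""
    by_size = {}
    for n, observed, _ in studies:
        by_size.setdefault(n, []).append(observed)
    return {n: statistics.mean(vals) for n, vals in sorted(by_size.items())}


rng = random.Random(0)
sizes = [rng.choice([20, 40, 80, 160, 320, 640]) for _ in range(5000)]
all_studies = simulate_studies(0.2, sizes, rng)
published = publish_only_significant(all_studies)

unfiltered = mean_effect_by_size(all_studies)
filtered = mean_effect_by_size(published)
for n in unfiltered:
    print(f"n={n:4d}  all studies: {unfiltered[n]:.2f}  published only: {filtered[n]:.2f}")
```

Without the filter, the average effect sits near the true value at every sample size, which is the symmetric funnel. With the filter, small studies can only be published when they happen to overshoot, so their average is badly inflated while the largest studies stay close to the truth. That is the lopsided pattern described above.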

Order

Generally, the manipulation in these studies is to present vignettes in different sequences to investigate whether earlier vignettes influence moral intuitions on later vignettes. For example, if a subject receives:

- a trolley problem where saving five people requires killing one by flipping a switch, and

- a trolley problem where saving five people requires pushing one person with a heavy backpack into the path of the trolley,

do samples give the same responses to these vignettes regardless of the order they're encountered?

The findings seem to be roughly that:

- there are some vignettes which elicit stable moral intuitions and are not susceptible to order effects

- more marginal scenarios are affected by order of presentation

- the order effect seems to operate like a ratchet in which people are willing to make subsequent judgments stricter but not laxer

But should we actually trust the studies? I give brief comments on the methodology of each study in the appendix. Overall, these studies seemed pretty methodologically standard to me, with no major red flags.

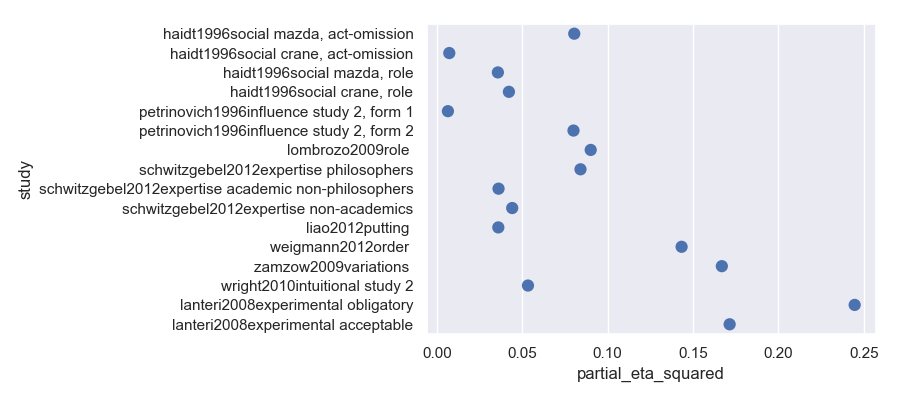

The quantitative results follow. The summary is that while there's substantial variation in effect size and some publication bias, I'm inclined to believe there's a real effect here.

Studies of moral intuitions and order effects

| Study | Independent variable | Dependent variable | Sample size | Result | Effect size |
|---|---|---|---|---|---|
| [@petrinovich1996influence], study 2, form 1 | Ordering of inaction vs action | Scale of agreement | 30 vs 29 | ; | |
| [@petrinovich1996influence], study 2, form 2 | Ordering of inaction vs action | Scale of agreement | 30 vs 29 | ; | |
| [@haidt1996social], mazda | Ordering of act vs omission | Rating act worse | 45.5 vs 45.5[^estimate] | ; | |
| [@haidt1996social], crane | Ordering of act vs omission | Rating act worse | 34.5 vs 34.5 | ; | |
| [@haidt1996social], mazda | Ordering of social roles | Rating friend worse | 45.5 vs 45.5 | ; | |
| [@haidt1996social], crane | Ordering of social roles | Rating foreman worse | 34.5 vs 34.5 | ; | |
| [@lanteri2008experimental] | Ordering of vignettes | Obligatory or not | 31 vs 31 | ; | |
| [@lanteri2008experimental] | Ordering of vignettes | Acceptable or not | 31 vs 31 | ; $p=0.0011$ | |
| [@lombrozo2009role] | Ordering of trolley switch vs push | Rating of permissibility | 56 vs 56 | ; | |
| [@zamzow2009variations] | Ordering of vignettes | Right or wrong | 8 vs 9 | ; | |
| [@wright2010intuitional], study 2 | Ordering of vignettes | Right or wrong | 30 vs 30 | ; | |
| [@schwitzgebel2012expertise], philosophers | Within-pair vignette orderings | Number of pairs judged equivalent | 324 | ; | |
| [@schwitzgebel2012expertise], academic non-philosophers | Within-pair vignette orderings | Number of pairs judged equivalent | 753 | ; | |
| [@schwitzgebel2012expertise], non-academics | Within-pair vignette orderings | Number of pairs judged equivalent | 1389 | ; | |
| [@liao2012putting] | Ordering of vignettes | Rating of permissibility | 48.3 vs 48.3 vs 48.3 | ; | |
| [@wiegmann2012order] | Most vs least agreeable first | Rating of shouldness | 25 vs 25 | ; | |

(Pseudo-)Forest plot showing reported effect sizes for order manipulations

While there's clearly dispersion here, that's to be expected given the heterogeneity of the studies, the most important source of which (I'd guess) is the vignettes used[3]. The more difficult the dilemma, the more I'd expect order effects to matter and I'd expect some vignettes to show no order effect. I'm not going to endorse murder for fun no matter which vignette you precede it with. Given all this, a study could presumably drive the effect size from ordering arbitrarily low with the appropriate choice of vignettes. On the other hand, it seems like there probably is some upper bound on the magnitude of order effects and more careful studies and reviews could perhaps tease that out.

Funnel plot showing reported effect sizes for order manipulations

The funnel plot seems to indicate some publication bias, but it looks like the effect may be real even after accounting for that.

Wording

Unfortunately, I only found one paper directly testing this. In this study, half the participants had their trolley problem described with:

(a) "Throw the switch, which will result in the death of the one innocent person on the side track" and (b) "Do nothing, which will result in the death of the five innocent people."

and the other half had their problem described with:

(a) "Throw the switch, which will result in the five innocent people on the main track being saved" and (b) "Do nothing, which will result in the one innocent person being saved."


The actual consequences of each action are the same in each condition—it's only the wording which has changed. The study (with each of two independent samples) found that indeed people's moral intuitions varied based on the wording:

Studies of moral intuitions and wording effects

| Study | Independent variable | Dependent variable | Sample size | Result | Effect size |
|---|---|---|---|---|---|
| [@petrinovich1993empirical], general class | Wording of vignettes | Scale of agreement | 361 | ; | |
| [@petrinovich1993empirical], biomeds | Wording of vignettes | Scale of agreement | 60 | ; | |

While the effects are quite large here, it's worth noting that in other studies in other domains framing effects have disappeared when problems were more fully described (Kühberger 1995). (Kuhn 1997) found that even wordings which were plausibly equivalent led subjects to alter their estimates of implicit probabilities in vignettes.

Disgust and cleanliness

In studies of disgust[4], subjects are manipulated to feel disgust via mechanisms like:

- recalling and vividly writing about a disgusting experience,

- watching a clip from Trainspotting,

- being exposed to a fart spray, and

- being hypnotized to feel disgust at the word "often" (Yes, this is really one of the studies).

In studies of cleanliness, subjects are manipulated to feel clean via mechanisms like:

- doing sentence unscrambling tasks with words about cleanliness,

- washing their hands, and

- being in a room where Windex was sprayed.

After disgust or cleanliness is induced (in the non-control subjects), subjects are asked to undertake some morally-loaded activity (usually making moral judgments about vignettes). The hypothesis is that their responses will be different because talk of moral purity and disgust is not merely metaphorical—feelings of cleanliness and incidental disgust at the time of evaluation have a causal effect on moral evaluations. Confusingly, the exact nature of this putative relationship seems rather protean: it depends subtly on whether the subject or the object of a judgment feels clean or disgusted and can be mediated by private body consciousness and response effort.

As the above paragraph may suggest, I'm pretty skeptical of a shocking fraction of these studies (as discussed in more detail in the appendix). Some recurring reasons:

- the manipulations often seem quite weak (e.g. sentence unscrambling, a spritz of Lysol on the questionnaire),

- the manipulation checks often fail but the authors never seem particularly troubled by this or the fact that they find their predicted results despite the apparent failure of manipulation,

- authors seem more inclined to explain noisy or apparently contradictory results by complicating their theory than by falsifying their theory, and

- multiple direct replications have failed.

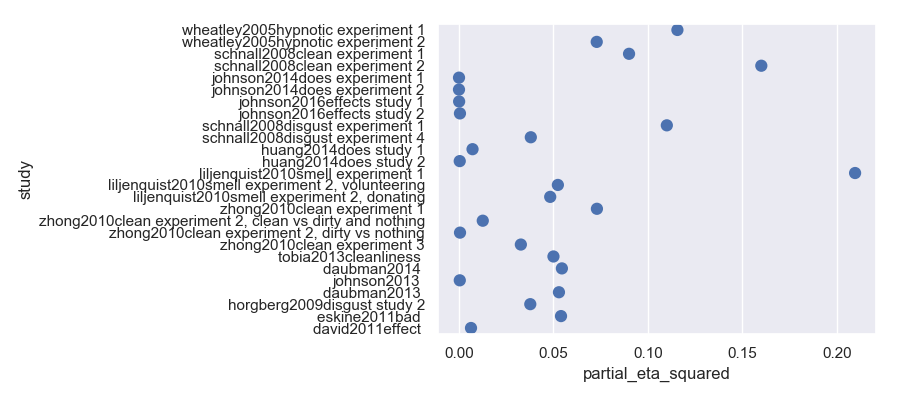

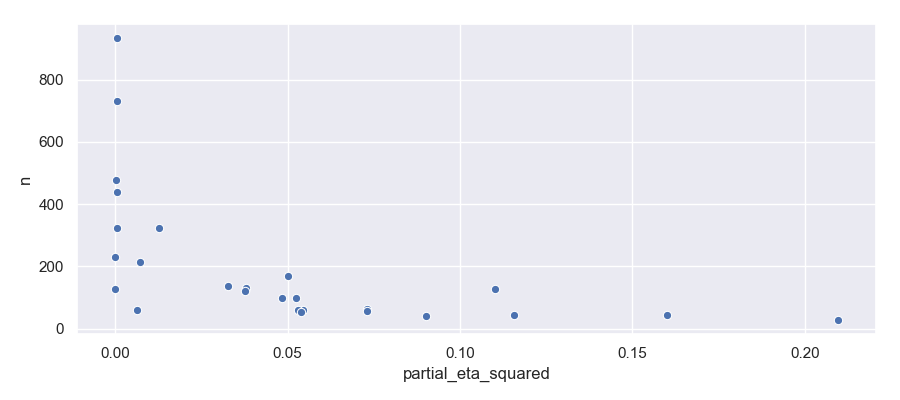

The quantitative results follow. I'll summarize them in advance by drawing attention to the misshapen funnel plot which I take as strong support for my methodological skepticism. The evidence marshaled so far does not seem to support the claim that disgust and cleanliness influence moral judgments.

Studies of moral intuitions and disgust or cleanliness effects

| Study | Independent variable | Dependent variable | Sample size | Result | Effect size |
|---|---|---|---|---|---|
| [@wheatley2005hypnotic], experiment 1 | Hypnotic disgust cue | Scale of wrongness | 45 | ; | |
| [@wheatley2005hypnotic], experiment 2 | Hypnotic disgust cue | Scale of wrongness | 63 | ; | |
| [@schnall2008clean], experiment 1 | Clean word scramble | Scale of wrongness | 20 vs 20 | ; | |
| [@schnall2008clean], experiment 2 | Disgusting movie clip | Scale of wrongness | 22 vs 22 | ; | |
| [@schnall2008disgust], experiment 1 | Fart spray | Likert scale | 42.3 vs 42.3 vs 42.3 | ; | |
| [@schnall2008disgust], experiment 2 | Disgusting room | Scale of appropriacy | 22.5 vs 22.5 | Not significant | |
| [@schnall2008disgust], experiment 3 | Describe disgusting memory | Scale of appropriacy | 33.5 vs 33.5 | Not significant | |
| [@schnall2008disgust], experiment 4 | Disgusting vs sad vs neutral movie clip | Scale of appropriacy | 43.3 vs 43.3 vs 43.3 | ; | |
| [@horberg2009disgust], study 2 | Disgusting vs sad movie clip | Scale of rightness and wrongness | 59 vs 63 | ; | |
| [@liljenquist2010smell], experiment 1 | Clean scent in room | Money returned | 14 vs 14 | ; | |
| [@liljenquist2010smell], experiment 2 | Clean scent in room | Scale of volunteering interest | 49.5 vs 49.5 | ; | |
| [@liljenquist2010smell], experiment 2 | Clean scent in room | Willingness to donate | 49.5 vs 49.5 | ; | |
| [@zhong2010clean], experiment 1 | Antiseptic wipe for hands | Scale of immoral to moral | 29 vs 29 | ; | |
| [@zhong2010clean], experiment 2 | Visualize clean vs dirty and nothing | Scale of immoral to moral | 107.6 vs 107.6 vs 107.6 | ; | |
| [@zhong2010clean], experiment 2 | Visualize dirty vs nothing | Scale of immoral to moral | 107.6 vs 107.6 vs 107.6 | ; | |
| [@zhong2010clean], experiment 3 | Visualize clean vs dirty | Scale of immoral to moral | 68 vs 68 | ; | |
| [@eskine2011bad] | Sweet, bitter or neutral drink | Scale of wrongness | 18 vs 15 vs 21 | ; | |
| [@david2011effect] | Presence of disgust-conditioned word | Scale of wrongness | 61 | ; Not significant | |
| [@tobia2013cleanliness], undergrads | Clean scent on survey | Scale of wrongness | 84 vs 84 | ; | |
| [@tobia2013cleanliness], philosophers | Clean scent on survey | Scale of wrongness | 58.5 vs 58.5 | Not significant | |
| [@huang2014does], study 1 | Clean word scramble | Scale of wrongness | 111 vs 103 | ; | |
| [@huang2014does], study 2 | Clean word scramble | Scale of wrongness | 211 vs 229 | ; | |
| [@johnson2014does], experiment 1 | Clean word scramble | Scale of wrongness | 114.5 vs 114.5 | ; | |
| [@johnson2014does], experiment 2 | Washing hands | Scale of wrongness | 58 vs 68 | ; | |
| [@johnson2016effects], study 1 | Describe disgusting memory | Scale of wrongness | 222 vs 256 | ; | |
| [@johnson2016effects], study 2 | Describe disgusting memory | Scale of wrongness | 467 vs 467 | ; | |
| [@daubman2014] | Clean word scramble | Scale of wrongness | 30 vs 30 | ; | |
| [@daubman2013] | Clean word scramble | Scale of wrongness | 30 vs 30 | ; | |
| [@johnson2014] | Clean word scramble | Scale of wrongness | 365.6 vs 365.5 | ; | |

(Pseudo-)Forest plot showing reported effect sizes for disgust/cleanliness manipulations

Funnel plot showing reported effect sizes for disgust/cleanliness manipulations

This funnel plot suggests pretty heinous publication bias. I'm inclined to say that the evidence does not support claims of a real effect here.

Gender

This factor has extra weight within the field of philosophy because it's been offered as an explanation for the relative scarcity of women in academic philosophy (Buckwalter and Stich 2014): if women's philosophical intuitions systematically diverge from those of men and from canonical answers to various thought experiments, they may find themselves discouraged.

Studies on this issue typically just send surveys to people with a series of vignettes and analyze how the results vary depending on gender.

I excluded (Buckwalter and Stich 2014) entirely for reasons described in the appendix.

Here are the quantitative results:

Studies of moral intuitions and gender effects

| Study | Independent variable | Dependent variable | Sample size | Result |
|---|---|---|---|---|
| [@lombrozo2009role], trolley switch | Gender | Scale of permissibility | 74.7 vs 149.3 | , |
| [@lombrozo2009role], trolley push | Gender | Scale of permissibility | 74.7 vs 149.3 | , |
| [@seyedsayamdost2015gender], plank of Carneades, MTurk | Gender | Scale of blameworthiness | 70 vs 86 | , |
| [@seyedsayamdost2015gender], plank of Carneades, SurveyMonkey | Gender | Scale of blameworthiness | 48 vs 50 | , |
| [@adleberg2015men], violinist | Gender | Scale from forbidden to obligatory | 52 vs 84 | , |
| [@adleberg2015men], magistrate and the mob | Gender | Scale from bad to good | 71 vs 87 | , |
| [@adleberg2015men], trolley switch | Gender | Scale of acceptability | 52 vs 84 | , |

As we can see, there doesn't seem to be good evidence for an effect here.

Culture and socioeconomic status

There's just one study here[5]. It tested responses to moral vignettes across high and low socioeconomic status samples in Philadelphia, USA and Porto Alegre and Recife, Brazil.

As mentioned in the appendix, I find the seemingly very artificial dichotomization of the outcome measure a bit strange in this study.

Here are the quantitative results:

Studies of moral intuitions and culture/SES effects

| Study | Independent variable | Dependent variable | Sample size | Result |
|---|---|---|---|---|
| [@haidt1993affect], adults | Culture | Acceptable or not | 90 vs 90 | ; |
| [@haidt1993affect], children | Culture | Acceptable or not | 90 vs 90 | ; |
| [@haidt1993affect], adults | SES | Acceptable or not | 90 vs 90 | ; |
| [@haidt1993affect], children | SES | Acceptable or not | 90 vs 90 | ; |

The study found that Americans and those of high socioeconomic status were more likely to judge disgusting but harmless activities as morally acceptable.

Personality

There's just one survey here examining how responses to vignettes varied with Big Five personality traits.

Studies of moral intuitions and personality effects

| Study | Independent variable | Dependent variable | Sample size | Result |
|---|---|---|---|---|
| [@feltz2008fragmented], experiment 2 | Extraversion | Is it wrong? Yes or no | 162 | , |

Actor/observer

In these studies, one version of the vignette has some stranger as the central figure in the dilemma. The other version puts the survey's subject in the moral dilemma. For example, "Should Bob throw the trolley switch?" versus "Should you throw the trolley switch?".

I'm actually mildly skeptical that inconsistency here is necessarily anything to disapprove of. Subjects know more about themselves than about arbitrary characters in vignettes. That extra information could be justifiable grounds for different evaluations. For example, if subjects understand themselves to be more likely than the average person to be haunted by utilitarian sacrifices, that could ground different decisions in moral dilemmas calling for utilitarian sacrifice.

Nevertheless, the quantitative results follow. They generally find there is a significant effect.

Studies of moral intuitions and actor/observer effects

| Study | Independent variable | Dependent variable | Sample size | Result |
|---|---|---|---|---|
| [@nadelhoffer2008actor], trolley switch, undergrads | Actor vs observer | Morally permissible? Yes or no | 43 vs 42 | 90% permissible in observer condition; 65% permissible in actor condition; |
| [@tobia2013moral], trolley switch, philosophers | Actor vs observer | Morally permissible? Yes or no | 24.5 vs 24.5 | 64% permissible in observer condition; 89% permissible in actor condition; |
| [@tobia2013moral], Jim and the natives, undergrads | Actor vs observer | Morally obligated? Yes or no | 20 vs 20 | 53% obligatory in observer condition; 19% obligatory in actor condition; |
| [@tobia2013moral], Jim and the natives, philosophers | Actor vs observer | Morally obligated? Yes or no | 31 vs 31 | 9% obligatory in the observer condition; 36% obligatory in the actor condition; |
| [@tobia2013cleanliness], undergrads | Actor vs observer | Scale of wrongness | 84 vs 84 | ; |
| [@tobia2013cleanliness], philosophers | Actor vs observer | Scale of wrongness | 58.5 vs 58.5 | Not significant |

Summary

- Order: Lots of studies, overall there seems to be evidence of an effect

- Wording: Just one study, big effect, strikes me as plausible that there's an effect here

- Disgust and cleanliness: Lots of studies, lots of methodological problems and lots of publication bias, I round this to no good evidence of the effect

- Gender: Medium amount of studies, studies generally don't find evidence of an effect

- Culture and socioeconomic status: One study, found effect, seems hard to imagine there's no effect here

- Personality: One study, found effect

- Actor/observer: A couple of studies, found big effects, strikes me as plausible that there's an effect here

Indirect evidence

Given that the direct evidence isn't quite definitive, it may be useful to look at some indirect evidence. By that, I mean we'll look at (among other things) some underlying theories about how moral intuitions operate and what bearing they have on the question of reliability.

Heuristics and biases

No complex human faculty is perfectly reliable. This is no surprise and perhaps not of great import.

But we have evidence that some faculties are not only "not perfect" but systematically and substantially biased. The heuristics and biases research program (heavily associated with Kahneman and Tversky) has shown[6] serious limitations in human rationality. A review of that literature is out of scope here, but the list of alleged aberrations is extensive. Scope insensitivity (the failure of people, for example, to care twice as much about twice as many oil-covered seagulls) is one example I find compelling.

How relevant these problems are for moral judgment is a matter of some interpretation. An argument for relevance is this: even supposing we have sui generis moral faculties for judging purely normative claims, much day-to-day "moral" reasoning is actually prudential reasoning about how best to achieve our ends given constraints. This sort of prudential reasoning is squarely in the crosshairs of the heuristics and biases program.

At a minimum, prominent heuristics and biases researcher Gerd Gigerenzer endorses the hypothesis that heuristics underlying moral behavior are "largely" the same as heuristics underlying other behavior (Gigerenzer 2008). He explains, "Moral intuitions fit the pattern of heuristics, in our "narrow" sense, if they involve (a) a target attribute that is relatively inaccessible, (b) a heuristic attribute that is more easily accessible, and (c) an unconscious substitution of the target attribute for the heuristic attribute." Condition (a) is satisfied by many accounts of morality and heuristic attributes as mentioned in (b) abound (e.g. how bad does it feel to think about action A). It seems unlikely that the substitution described in (c) fails to happen only in the domain of moral judgments.

Neural

Now we'll look at unreliability at a lower level.

A distinction is sometimes drawn between joint evaluations (choice) and single evaluations (judgment). In a choice scenario, an actor has to choose between multiple options presented to them simultaneously. For example, picking a box of cereal in the grocery store requires choice. In a judgment scenario, an actor makes some evaluation of an option presented in isolation. For example, deciding how much to pay for a used car is a judgment scenario.

For both tasks, leading models are (as far as I understand things) fundamentally stochastic.

Judgment tasks are described by the random utility model in which, upon introspection, an actor samples from a distribution of possible valuations for an option rather than finding a single, fixed valuation (Glimcher, Dorris, and Bayer 2005). This makes sense at the neuronal level because liking is encoded as the firing rate of a neuron and firing rates are stochastic.
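As a toy illustration of what sampling a valuation implies for moral judgment, the sketch below draws each "read-out" from a distribution and compares it to a fixed cutoff. The Gaussian noise, the 0 to 10 wrongness scale, and the cutoff of 5 are assumptions made for illustration only and are not taken from the cited paper.

```python
import random


def sampled_valuation(mean_value, noise_sd, rng):
    """One introspective read-out: a draw from a distribution, not a fixed number."""
    return rng.gauss(mean_value, noise_sd)


def share_judged_wrong(mean_wrongness, cutoff, noise_sd, trials, rng):
    """Fraction of repeated evaluations that land above the 'wrong' cutoff."""
    verdicts = [
        sampled_valuation(mean_wrongness, noise_sd, rng) > cutoff
        for _ in range(trials)
    ]
    return sum(verdicts) / trials


rng = random.Random(1)
for mean_wrongness in (1.0, 4.5, 9.0):  # hypothetical 0-10 wrongness scale
    share = share_judged_wrong(mean_wrongness, cutoff=5.0, noise_sd=1.0, trials=10_000, rng=rng)
    print(f"mean wrongness {mean_wrongness}: judged wrong in {share:.0%} of evaluations")
```

In this toy setup, cases far from the cutoff get essentially the same verdict every time, while a case near the cutoff flips between verdicts on repeated evaluation even though nothing about the case has changed.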

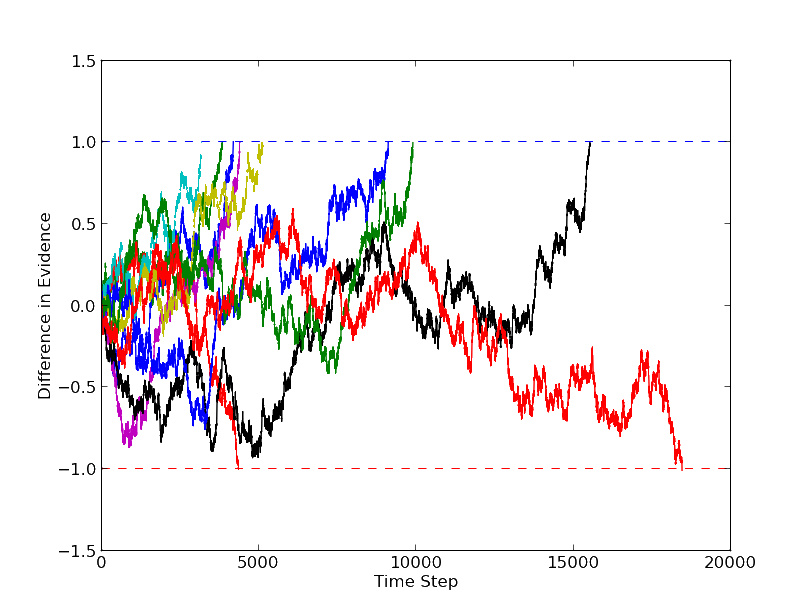

Choice tasks are described by the drift diffusion model in which the current disposition to act starts at 0 on some axis and takes a biased random walk (drifts) (Ratcliff and McKoon 2008). Away from zero, on two opposite sides, are thresholds representing each of the two options. Once the current disposition drifts past a threshold, the corresponding option is chosen. Because of the random noise in the drift process, there's no guarantee that the threshold favored by the bias will always be the first one crossed. Again, the randomness in this model makes sense because neurons are stochastic.

Example of ten evidence accumulation sequences for the drift diffusion model, where the true result is assigned to the upper threshold. Due to the addition of noise, two sequences have produced an inaccurate decision. From Wikipedia.
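The drift diffusion description above can be sketched as a short simulation. This is a minimal discrete-time approximation, not the fitting procedure used in the cited studies; the drift, noise level, threshold, and step size are arbitrary values chosen for illustration.

```python
import random


def drift_diffusion_trial(drift, noise_sd, threshold, rng, dt=0.01, max_steps=100_000):
    """Run one biased random walk from 0 until it crosses +threshold or -threshold.

    Returns +1 if the upper threshold is crossed first, -1 for the lower one,
    and 0 if neither is reached within max_steps.
    """
    position = 0.0
    for _ in range(max_steps):
        # Deterministic drift plus Gaussian noise scaled for the time step
        position += drift * dt + noise_sd * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        if position >= threshold:
            return 1
        if position <= -threshold:
            return -1
    return 0


def share_choosing_favored(drift, noise_sd, threshold, trials, rng):
    """Fraction of trials that end at the threshold favored by a positive drift."""
    outcomes = [drift_diffusion_trial(drift, noise_sd, threshold, rng) for _ in range(trials)]
    return outcomes.count(1) / trials


rng = random.Random(0)
share = share_choosing_favored(drift=0.5, noise_sd=1.0, threshold=1.0, trials=2000, rng=rng)
print(f"Favored option chosen in {share:.0%} of trials")
```

With these settings, the option favored by the drift wins most of the time but far from always. Setting `noise_sd` to 0 makes the favored option win every time, which isolates the noise as the source of the inconsistency.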

So for both choice and judgment tasks, low-level models and neural considerations suggest that we should expect noise rather than perfect reliability. And we should probably expect this to apply equally in the moral domain. Indeed, experimental evidence suggests that a drift diffusion model can be fit to moral decisions (Crockett et al. 2014) (Hutcherson, Bushong, and Rangel 2015).

Dual process

Josh Greene's dual process theory of moral intuitions (Greene 2007) suggests that we have two different types of moral intuitions originating from two different cognitive systems. System 1 is emotional, automatic and produces characteristically deontological judgments. System 2 is non-emotional, reflective and produces characteristically consequentialist judgments.

He makes the further claim that these deontological, system 1 judgments ought not to be trusted in novel situations because their automaticity means they fail to take new circumstances into account.

Genes

All complex behavioral traits have substantial genetic influence (Plomin et al. 2016). Naturally, moral judgments are part of "all". This means certain traits relevant for moral judgment are evolved. But an evolved trait is not necessarily an adaptation. A trait only rises to the level of adaptation if it was the result of natural selection (as opposed to, for example, random drift).

If our evolved faculties for moral judgment are not adaptations (i.e. they are random and not the product of selection), it seems clear that they're unlikely to be reliable.

On the other hand, might adaptations be reliable? Alas, even if our moral intuitions are adaptive this is no guarantee that they track the truth. First, knowledge is not always fitness relevant. For example, "perceiving gravity as a distortion of space-time" would have been no help in the ancestral environment (Krasnow 2017). Second, asymmetric costs and benefits for false positives and false negatives means that perfect calibration isn't necessarily optimal. Prematurely condemning a potential hunting partner as untrustworthy comes at minimal cost if there are other potential partners around while getting literally stabbed in the back during a hunt would be very costly indeed. Finally, because we are socially embedded, wrong beliefs can increase fitness if they affect how others treat us.
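The point about asymmetric costs can be made concrete with a small expected-cost calculation. This is a standard decision-theoretic sketch rather than anything from (Krasnow 2017); the cost values and function names are hypothetical.

```python
def condemnation_threshold(cost_false_positive, cost_false_negative):
    """Probability of untrustworthiness above which condemning minimizes expected cost.

    cost_false_positive: cost of wrongly condemning a trustworthy partner.
    cost_false_negative: cost of wrongly trusting an untrustworthy partner.
    """
    return cost_false_positive / (cost_false_positive + cost_false_negative)


def should_condemn(p_untrustworthy, cost_false_positive, cost_false_negative):
    """Compare the expected cost of condemning against the expected cost of trusting."""
    expected_cost_condemn = (1 - p_untrustworthy) * cost_false_positive
    expected_cost_trust = p_untrustworthy * cost_false_negative
    return expected_cost_condemn < expected_cost_trust


# Hypothetical costs: losing one of many hunting partners (1) vs. being stabbed (99).
print(condemnation_threshold(1, 99))
print(should_condemn(p_untrustworthy=0.05, cost_false_positive=1, cost_false_negative=99))
```

Under these made-up costs, an agent minimizing expected cost condemns partners who are very probably trustworthy. A disposition tuned this way would be adaptive without being well calibrated.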

Even if our moral intuitions are adaptations and were reliable in the ancestral environment, that's no guarantee that they're reliable in the modern world. There's reason to believe that our moral intuitions are not well-tuned to "evolutionarily novel moral dilemmas that involve isolated, hypothetical, behavioral acts by unknown strangers who cannot be rewarded or punished through any normal social primate channels" (Miller 2007). (Though for a contrary point of view about social conditions in the ancestral environment, see (Turner and Maryanski 2013).) This claim is especially persuasive if we believe that (at least some of) our moral intuitions are the result of a fundamentally reactive, retrospective process like Greene's system 1[7].

(If you're still skeptical about the role of biological evolution in our faculties for moral judgment, Tooby and Cosmides's social contract theory is often taken to be strong evidence for the evolution of some specifically moral faculties. Tooby and Cosmides are advocates of the massive modularity thesis according to which the human brain is composed of a large number of special purpose modules each performing a specific computational task. Social contract theory finds that people are much better at detecting violations of conditional rules when those rules encode a social contract. Tooby and Cosmides[8] take this to mean that we have evolved a special-purpose module for analyzing obligation in social exchange which cannot be applied to conditional rules in the general case.)

(There's a lot more research on the deep roots of cooperation and morality in humans: (Boyd and Richerson 2005), (Boyd et al. 2003), (Hauert et al. 2007), (Singer and others 2000).)

Universal moral grammar

Linguists have observed a poverty of the stimulus—children learn how to speak a natural language without anywhere near enough language experience to precisely specify all the details of that language. The solution that Noam Chomsky came up with is a universal grammar—humans have certain language rules hard-coded in our brains and language experience only has to be rich enough to select among these, not construct them entirely.

Researchers have made similar claims about morality (Sripada 2008). The argument is that children learn moral rules without enough moral experience to precisely specify all the details of those rules. Therefore, they must have a universal moral grammar—innate faculties that encode certain possible moral rules. There are of course arguments against this claim. Briefly: moral rules are much less complex than languages, and (some) language learning must be inductive while moral learning can include explicit instruction.

If our hard-coded moral rules preclude us from learning the true moral rules (a possibility on some metaethical views), our moral judgments would be very unreliable indeed (Millhouse, Ayars, and Nichols 2018).

Culture

I'll take it as fairly obvious that our moral judgments are culturally influenced[9] (see e.g. (Henrich et al. 2004)). A common story for the role of culture in moral judgments and behavior is that norms of conditional cooperation arose to solve cooperation problems inherent in group living (Curry 2016) (Hechter and Opp 2001). But, just as we discussed with biological evolution, these selective pressures aren't necessarily aligned with the truth.

One of the alternative accounts of moral judgments as a product of culture is the social intuitionism of Haidt and Bjorklund (Haidt and Bjorklund 2008). They argue that, at the individual level, moral reasoning is usually a post-hoc confabulation intended to support automatic, intuitive judgments. Despite this, these confabulations have causal power when passed between people and in society at large. These socially-endorsed confabulations accumulate and eventually become the basis for our private, intuitive judgments. Within this model, it seems quite hard to arrive at the conclusion that our moral judgments are highly reliable.

Moral disagreements

There's quite a bit of literature on the implications of enduring moral disagreement. I'll just briefly mention that, on many metaethical views, it's not trivial to reconcile perfectly reliable moral judgments and enduring moral disagreement. (While I think this is an important line of argument, I'm giving it short shrift here because: 1. the fact of moral disagreement is no revelation, and 2. it's hard to make it bite, since it's too easy to say, "Well, we disagree because I'm right and they're wrong.")

Summary

- Heuristics and biases: There's lots of evidence that humans are not instrumentally rational. This probably applies at least somewhat to moral judgments too since prudential reasoning is common in day-to-day moral judgments.

- Neural: Common models of both choice and judgment are fundamentally stochastic which reflects the stochasticity of neurons.

- Dual process: System 1 moral intuitions are automatic, retrospective and untrustworthy.

- Genes: Moral faculties are at least partly evolved. Adaptations aren't necessarily truth-tracking—especially when removed from the ancestral environment.

- Culture: Culture influences moral judgment and cultural forces don't necessarily incentivize truth-tracking.

- Moral disagreement: There's a lot of disagreement about what's moral and it's hard to both accept this and claim that moral judgments are perfectly reliable.

Responses

Depending on how skeptical of skepticism you're feeling, all of the above might add up to serious doubts about the reliability of our moral intuitions. How might we respond to these doubts? There are a variety of approaches discussed in the literature. I will group these responses loosely based on how they fit into the structure of the Unreliability of Moral Judgment Problem:

- The first type of response simply questions the internal validity of the empirical studies, calling the core of premise 2 into question.

- The second type of response questions the external validity of the studies, thereby asserting that the "some"s in premise 2 ("some people, in some cases") are narrow enough to defuse any real threat in the conclusion.

- The third type of response accepts the whole argument and argues that it's not too worrisome.

- The fourth type of response accepts the whole argument and argues that we can take countermeasures.

Internal validity

If the experimental results that purport to show that moral judgments are unreliable lack internal validity, the argument as a whole lacks force. On the other hand, the invalidity of these studies isn't affirmative evidence that moral judgments are reliable and the indirect evidence may still be worrying.

The validity of the studies is discussed in the direct evidence section and in the appendix so I won't repeat it here[10]. I'll summarize my take as: the cleanliness/disgust studies have low validity, but the order studies seem plausible and I believe that there's a real effect there, at least on the margin. Most of the other factors don't have enough high-quality studies to draw even a tentative conclusion. Nevertheless, when you add in my priors and the indirect evidence, I believe there's reason to be concerned.

Expertise

The most popular response among philosophers (surprise, surprise) is the expertise defense: The moral judgments of the folk may track morally irrelevant factors, but philosophers have acquired special expertise which immunizes them from these failures[11]. There is an immediate appeal to the argument: What does expertise mean if not increased skill? There is even supporting evidence in the form of trained philosophers' improved performance on cognitive reflection tests (Frederick 2005). These tests ask questions with intuitive but incorrect responses. For example: "A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?" (The intuitive answer is 10 cents; the correct answer is 5 cents.)

Alas, that's where the good news ends and the trouble begins. As (Weinberg et al. 2010) describes it, the expertise defense seems to rely on a folk theory of expertise in which experience in a domain inevitably improves skill in all areas of that domain. Engagement with the research on expert performance significantly complicates this story.

First, it seems to be the case that not all domains are conducive to the development of expertise. For example, training and experience do not produce expertise at psychiatry and stock brokerage according to (Dawes 1994) and (Shanteau 1992). Clear, immediate and objective feedback appears necessary for the formation of expertise (Shanteau 1992). Unfortunately, it's hard to construe whatever feedback is available to moral philosophers considering thought experiments and edge cases as clear, immediate and objective (Clarke 2013) (Weinberg 2007).

Second, "one of the most enduring findings in the study of expertise [is that there is] little transfer from high-level proficiency in one domain to proficiency in other domains—even when the domains seem, intuitively, very similar" (Feltovich, Prietula, and Ericsson 2006). Chess experts have excellent recall for board configurations, but only when those configurations could actually arise during the course of a game (De Groot 2014). Surgical expertise carries over very little from one surgical task to another (Norman et al. 2006). Thus, evidence of improved cognitive reflection is not a strong indicator of improved moral judgment[12]. Nor is evidence of philosophical excellence on any task other than moral judgment itself likely to be particularly compelling. (And even "moral judgment" may be too broad and incoherent a thing to have uniform skill at.)

Third, it's not obvious that expertise immunizes from biases. Studies have claimed that Olympic gymnastics judges and professional auditors are vulnerable to order effects despite being expert in other regards (Brown 2009) (Damisch, Mussweiler, and Plessner 2006).

Finally, there is direct empirical evidence that philosophers' moral judgments continue to track putatively morally irrelevant factors[13]. See (K. P. Tobia, Chapman, and Stich 2013), (K. Tobia, Buckwalter, and Stich 2013) and (Schwitzgebel and Cushman 2012) already described above. ((Schulz, Cokely, and Feltz 2011) find similar results for another type of philosophical judgment.)

So, in sum, while there's an immediate appeal to the expertise defense (surely we can trust intuitions honed by years of philosophical work), it looks quite troubled upon deeper examination.

Ecological validity

It's always a popular move to speculate that the lab isn't like the real world and so lab results don't apply in the real world. Despite the saucy tone in the preceding sentence, I think there are real concerns here:

- Reading vignettes is not the same as actually experiencing moral dilemmas first-hand.

- Stated judgments are not the same as actual judgments and behaviors. For example, none of the studies mentioned doing anything (beyond standard anonymization) to combat social desirability bias.

However, it's not clear to me how these issues license a belief that real moral judgments are likely to be reliable. One can perhaps hope that we're more reliable when the stakes truly matter, but it would take a more detailed theory for the ecological validity criticisms to have an impact.

Sufficient

One way of limiting the force of the argument against the reliability of moral judgments is simply to point out that many judgments are reliable and immune to manipulation. This is certainly true; order effects are not omnipotent. I'm not going to go out and murder anyone just because you prefaced the proposal with the right vignette.

Another response to the experimental results is to claim that even though people's ratings as measured with a Likert scale changed, the number of people actually switching from moral approval to disapproval or vice versa (i.e. moving from one half of the Likert scale to the other) is unreported and possibly small (Demaree-Cotton 2016).

The first response to this response is that the truth of this claim, even as reported in the paper making the argument, depends on your definition of "small". I think a 20% probability of switching from moral approval to disapproval based on the ordering of vignettes is not small.

The second set of responses to this attempted defusal is as follows. Even if experiments only found shifts in degree of approval or disapproval, that would be worrying because:

- Real moral decisions often involve trade-offs between two wrongs or two rights and the degree of rightness or wrongness of each component in such dilemmas may determine the final judgment rendered. (Andow 2016)

- Much philosophical work involves thought experiments on the margin where approval and disapproval are closely balanced (Wright 2016). Even small effects from improper sources at the border can lead to the wrong judgment. Wrong judgments on these marginal thought experiments can cascade to more mundane and important judgments if we take them at face value and apply something like reflective equilibrium.

Ecologically rational

Gerd Gigerenzer likes to make the argument (contra Kahneman and Tversky; some more excellent academic slap fights here) that heuristics are ecologically rational (Todd and Gigerenzer 2012). By this, he means that they are optimal in a given context. He also talks about less-is-more effects in which simple heuristics actually outperform more complicated and apparently ideal strategies[14].

One could perhaps make an analogous argument for moral judgments: though they don't always conform to the dictates of ideal theory, they are near optimal given the environment in which they operate. Though we can't relitigate the whole argument here, I'll point out that there's lots of pushback against Gigerenzer's view. Another response to the response would be to highlight ways in which moral judgment is unique and the ecological rationality response doesn't apply to moral heuristics.

Second-order reliability

Even if we were to accept that our moral judgments are unreliable, that might not be fatal. If we could judge when our moral judgments are reliable—if we had reliable second-order moral judgments—we could rely upon our moral judgments only in domains where we knew them to be valid.

Indeed, there's evidence that, in general, we are more confident in our judgments when they turn out to be correct (Gigerenzer, Hoffrage, and Kleinbölting 1991). But subsequent studies have suggested our confidence actually tracks consensuality rather than correctness (Koriat 2008). People were highly confident when asked about popular myths (for example, whether Sydney is the capital of Australia). This possibility of consensual, confident wrongness is pretty worrying (Williams 2015).

Jennifer Wright has two papers examining this possibility empirically. In (Wright 2010), she found that more confident epistemological and ethical judgments were less vulnerable to order effects. Thus, lack of confidence in a philosophical intuition may be a reliable indicator that the intuition is unreliable. (Wright 2013) purports to address related questions, but I found it unconvincing for a variety of reasons.

Moral engineering

The final response to evidence of unreliability is to argue that we can overcome our deficiencies by application of careful effort. Engineering reliable systems and processes from unreliable components is a recurring theme in human progress. The physical sciences work with imprecise instruments and overcome that limitation through careful design of procedures and statistical competence. In distributed computing, we're able to build reliable systems out of unreliable components.

As a motivating example, imagine a set of litmus strips which turn red in acid and blue in base (Weinberg 2016). Now suppose that each strip has only a 51% chance of performing correctly (red in acid and blue in base). Even in the face of this radical unreliability, we can drive our confidence to an arbitrarily high level by testing the material with more and more strips (as long as each test is independent).
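To see how quickly aggregation helps, here is a minimal sketch that computes the probability that a majority of independent strips gives the right answer. It assumes a simple majority-vote rule and an odd number of strips; the function names are hypothetical and the calculation is done in log space so that large counts don't overflow.

```python
from math import exp, lgamma, log


def log_binom_pmf(k, n, p):
    """Log of the binomial probability of exactly k successes in n trials."""
    log_choose = lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
    return log_choose + k * log(p) + (n - k) * log(1 - p)


def majority_correct_probability(n_strips, p_correct=0.51):
    """Probability that a strict majority of n independent strips is correct."""
    if n_strips % 2 == 0:
        raise ValueError("use an odd number of strips to avoid ties")
    k_min = n_strips // 2 + 1  # smallest count that forms a strict majority
    return sum(exp(log_binom_pmf(k, n_strips, p_correct))
               for k in range(k_min, n_strips + 1))


for n in (1, 101, 1001, 10001):
    print(n, majority_correct_probability(n))
```

The probability climbs toward 1 as the number of strips grows, but only because each strip is slightly better than chance and the tests are independent. Correlated errors, which seem likely for moral intuitions sharing a common cause, would not wash out this way.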

This analogy provides a compelling motivation for coherence norms. By demanding that our moral judgments across cases cohere, we are implicitly aggregating noisy data points into a larger system that we hope is more reliable. It may also motivate an increased deference to an "outside view" which aggregates the moral judgments of many.

(Huemer 2008) presents another constructive response to the problem of unreliable judgments. It proposes that concrete and mid-level intuitions are especially unreliable because they are the most likely to be influenced by culture, biological evolution and emotions. On the other hand, fully abstract intuitions are prone to overgeneralizations in which the full implications of a claim are not adequately understood. If abstract judgments and concrete judgments are to be distrusted, what's left? Huemer proposes that formal rules are unusually trustworthy. By formal rules, he is referring to rules which impose constraints on other rules but do not themselves produce moral judgments. Examples include transitivity (If A is better than B and B is better than C, A must be better than C.) and compositionality (If doing A is wrong and doing B is wrong, doing both A and B must be wrong.).
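As a small illustration of how a formal rule like transitivity constrains other judgments without producing any itself, the sketch below scans a set of pairwise "better than" judgments for cycles. The representation (a set of ordered pairs) and the function name are assumptions made for this example, not anything proposed in (Huemer 2008).

```python
from itertools import permutations


def transitivity_violations(better_than):
    """Find triples (a, b, c) where a > b and b > c were judged, yet c > a was also judged.

    better_than is a set of (x, y) pairs meaning "x is judged better than y".
    """
    items = sorted({x for pair in better_than for x in pair})
    return [
        (a, b, c)
        for a, b, c in permutations(items, 3)
        if (a, b) in better_than and (b, c) in better_than and (c, a) in better_than
    ]


# Hypothetical judgments that form a cycle and so cannot all be right.
judgments = {("A", "B"), ("B", "C"), ("C", "A")}
print(transitivity_violations(judgments))
```

The check says nothing about which of the three judgments is mistaken. It only flags that at least one of them must be, which is exactly the limited but dependable role a formal rule plays.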

Other interesting work in this area includes (Weinberg et al. 2012), (J. M. Weinberg 2017b), and (Talbot 2014).

(Weinberg 2016) summarizes this perspective well:

"Philosophical theory-selection and empirical model-selection are highly similar problems: in both, we have a data stream in which we expect to find both signal and noise, and we are trying to figure out how best to exploit the former without inadvertently building the latter into our theories or models themselves."

Summary

- Internal validity: The experimental evidence isn't great, but it still seems hard to believe that our moral judgments are perfectly reliable.

- Expertise: Naive appeals to expertise are unlikely to save us given the literature on expert performance.

- Ecological validity: The experiments that have been conducted are indeed different from in vivo moral judgments, but it's not currently obvious that the move from synthetic to natural moral dilemmas will improve judgment.

- Sufficient: Even if the effects of putatively irrelevant factors are relatively small, that still seems concerning given that moral decisions often involve complex trade-offs.

- Ecologically rational: I'm not particularly convinced by Gigerenzer's view of heuristics as ecologically rational and I'm even more inclined to doubt that this is solid ground for moral judgments.

- Second-order reliability: Our sense of the reliability of our moral judgments probably isn't pure noise. But it's also probably not perfect.

- Moral engineering: Acknowledging the unreliability of our moral judgments and working to ameliorate it through careful understanding and designed countermeasures seems promising.

Conclusion

Our moral judgments are probably unreliable. Even if this fact doesn't justify full skepticism, it justifies serious attention. A fuller understanding of the limits of our moral faculties would help us determine how to respond.

Appendix: Qualitative discussion of methodology

Order

- (Haidt and Baron 1996): No immediate complaints here. I will note that order effects weren't the original purpose of the study and just happened to show up during data analysis.

- (Petrinovich and O'Neill 1996): No immediate complaints.

- (Lanteri, Chelini, and Rizzello 2008): No immediate complaints.

- (Lombrozo 2009): No immediate complaints.

- (Zamzow and Nichols 2009): "Interestingly, while we found judgments of the bystander case seem to be impacted by order of presentation, our results trend in the opposite direction of Petrinovich and O'Neill. They found that people were more likely to not pull the switch when the bystander case was presented last. This asymmetry might reflect the difference in questions asked: 'what is the right thing to do?' versus 'what would you do?'"

Or it might reflect noise.

- (Wright 2010): No immediate complaints.

- (Schwitzgebel and Cushman 2012): How many times can you pull the same trick on people? You kind of have to hope the answer is "A lot" for this study since it asked each subject 17 questions in sequence testing the order sensitivity of several different scenarios. The authors do acknowledge the possibility for learning effects.

- (Liao et al. 2012): No immediate complaints.

- (Wiegmann, Okan, and Nagel 2012): No immediate complaints.

Wording

- (Petrinovich, O'Neill, and Jorgensen 1993): No immediate complaints.

Disgust and cleanliness

- (Wheatley and Haidt 2005): Hypnosis seems pretty weird. I'm not sure how much external validity hypnotically-induced disgust has. This is especially so given that the results only include those who were successfully hypnotized: it seems possible that those especially susceptible to hypnosis are different from others in some way that is relevant to moral judgments.

- (Schnall et al. 2008): In experiment 1, the mean moral judgment in the mild-stink condition was not significantly different from the mean moral judgment in the strong-stink condition despite a significant difference in mean disgust. This doesn't seem obviously congruent with the underlying theory and it seems slightly strange that this possible anomaly passed completely unmentioned.

More concerning to me is that, in experiment 2, the disgust manipulation did not work as judged by self-reported disgust. However, the experimenters believe the "disgust manipulation had high face validity" and went on to find that the results supported their hypothesis when looking at the dichotomous variable of control condition versus disgust condition. When a manipulation fails to change a putative cause (as measured by an instrument), it seems quite strange for the downstream effect to change anyway. (Again, it strikes me as unfortunate that the authors don't devote any real attention to this.) It seems to significantly raise the likelihood that the results are reflecting noise rather than insight.

The non-significant results reported here were not, apparently, the authors' main interest. Their primary hypothesis (which the experiments supported) was that disgust would increase severity of moral judgment for subjects high in private body consciousness (Miller, Murphy, and Buss 1981).

- (Schnall, Benton, and Harvey 2008): The cleanliness manipulation in experiment 1 seems very weak. Subjects completed a scrambled-sentences task with 40 sets of four words. Control condition participants received neutral words while cleanliness condition participants had cleanliness and purity related words in half their sets.

Indeed, no group differences between the conditions were found in any mood category including disgust which seems plausibly antagonistic to the cleanliness primes. It's not clear to me why this part of the procedure was included if they expected both conditions to produce indistinguishable scores. It suggests to me that results for the manipulation weren't as hoped and the paper just doesn't draw attention to it? (In their defense, the paper is quite short.)

The experimenters went on to find that cleanliness reduced the severity of moral judgment which, as discussed elsewhere, seems a bit worrying in light of the potentially failed manipulation.

In experiment 2, "Because of the danger of making the cleansing manipulation salient, we did not obtain additional disgust ratings after the hand-washing procedure." which seems problematic given possible difficulties with manipulations by this lead author elsewhere in this paper and by this lead author in another paper from the same year.

Altogether, this paper strikes me as very replication crisis-y. (I think especially because it echoes the infamous study about priming young people to walk more slowly with words about aging (Doyen et al. 2012).) (I looked it up after writing all this out and it turns out others agree.)

- (Horberg et al. 2009): No immediate complaints.

- (Liljenquist, Zhong, and Galinsky 2010): I'm tempted to say that experiment 1 is one of those results we should reject just because the effect size is implausibly big. "The only difference between the two rooms was a spray of citrus-scented Windex in the clean-scented room" and yet they get a Cohen's d of 1.03 in a variant on the dictator game. This would mean ~85% of people in the control condition would share less than the non-control average. If an effect of this size were real, it seems like we'd have noticed and be dousing ourselves with Windex before tough negotiations.

- (Zhong, Strejcek, and Sivanathan 2010): This study found evidence for the claim that participants who cleansed their hands judged morally-inflected social issues more harshly. Wait, what? Isn't that the opposite of what the other studies found? Not to worry, there's a simple reconciliation. The cleanliness and disgust primes in those other studies were somehow about the target of judgment whereas the cleanliness primes in this study are about cleansing the self.

It also finds that a dirtiness prime is no different than the control condition but, since it's primarily interested in the cleanliness prime, it makes no comment on this result.

- (Eskine, Kacinik, and Prinz 2011): No immediate complaints.

- (David and Olatunji 2011): It's a bit weird that their evaluative conditioning procedure produced positive emotions for the control word which had been paired with neutral images. They do briefly address the concern that the evaluative conditioning manipulation is weak.

Kudos to the authors for not trying too hard to explain away the null result: "This finding questions the generality of the role between disgust and morality.".

- (K. P. Tobia, Chapman, and Stich 2013): Without any backing theory predicting or explaining it, the finding that "the cleanliness manipulation caused students to give higher ratings in both the actor and observer conditions, and caused philosophers to give higher ratings in the actor condition, but lower ratings in the observer condition." strikes me as likely to be noise rather than insight.

- (Huang 2014): The hypothesis under test in this paper was that response effort moderates the effect of cleanliness primes. If we ignore that and just look at whether cleanliness primes had an effect, there was a null result in both studies.

This kind of militant reluctance to falsify hypotheses is part of what makes me very skeptical of the disgust/cleanliness literature:

"Despite being a [failed] direct replication of SBH, JCD differed from SBH on at least two subtle aspects that might have resulted in a slightly higher level of response effort. First, whereas undergraduate students from University of Plymouth in England "participated as part of a course requirement" in SBH (p. 1219), undergraduates from Michigan State University in the United States participated in exchange of "partial fulfillment of course requirements or extra credit" in JCD (p. 210). It is plausible that students who participated for extra credit in JCD may have been more motivated and attentive than those who were required to participate, leading to a higher level of response effort in JCD than in SBH. Second, JCD included quality assurance items near the end of their study to exclude participants "admitting to fabricating their answers" (p. 210); such features were not reported in SBH. It is possible that researchers' reputation for screening for IER resulted in a more effortful sample in JCD."

- (Johnson, Cheung, and Donnellan 2014b): Wow, much power: 0.99. This is a failed replication of (Schnall, Benton, and Harvey 2008).

- (Johnson et al. 2016): The power level for study 1, it's over 99.99%! This is a failed replication of (Schnall et al. 2008).

- (Ugazio, Lamm, and Singer 2012): I excluded this study from quantitative review because they did this: "As the results obtained in Experiment 1a did not replicate previous findings suggesting a priming effect of disgust induction on moral judgments [...] we performed another experiment [...] the analyses that follow on the data obtained in Experiments 1a and 1b are collapsed". Pretty egregious.
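The ~85% figure quoted for (Liljenquist, Zhong, and Galinsky 2010) above can be reproduced with a short calculation. The sketch assumes the reported 1.03 is a Cohen's d and that both groups are normally distributed with equal variance, in which case the share of one group falling below the other group's mean is the standard normal CDF evaluated at d. The function name is hypothetical.

```python
from statistics import NormalDist


def share_below_other_mean(cohens_d):
    """Share of the lower-scoring group that falls below the other group's mean.

    Assumes both groups are normal with equal variance, so the answer is
    the standard normal CDF evaluated at the standardized mean difference.
    """
    return NormalDist().cdf(cohens_d)


print(round(share_below_other_mean(1.03), 3))
```

Under those assumptions the result is about 0.85, matching the figure in the text. With no effect at all (d = 0) the same function returns 0.5.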

Gender

- (Lombrozo 2009): No immediate complaints.

- (Seyedsayamdost 2015): This is a failed replication of (Buckwalter and Stich 2014). I didn't include (Buckwalter and Stich 2014) in the quantitative review because it wasn't an independent experiment but selective reporting by design: "Fiery Cushman was one of the researchers who agreed to look for gender effects in data he had collected [...]. One study in which he found them [...]" (Buckwalter and Stich 2014).

- (Adleberg, Thompson, and Nahmias 2015): No major complaints.

They did do a post hoc power analysis which isn't quite a real thing.

Culture and socioeconomic status

- (Haidt, Koller, and Dias 1993): They used the Bonferroni procedure to correct for multiple comparisons which is good. On the other hand:

"Subjects were asked to describe the actions as perfectly OK, a little wrong, or very wrong. Because we could not be certain that this scale was an interval scale in which the middle point was perceived to be equidistant from the endpoints, we dichotomized the responses, separating perfectly OK from the other two responses."

Why did they create a non-dichotomous instrument only to dichotomize their own instrument after data collection? I'm worried that the dichotomization was done post hoc upon seeing the non-dichotomized data and analysis.

Personality

- (Feltz and Cokely 2008): No immediate complaints.

Actor/observer

- (Nadelhoffer and Feltz 2008): No immediate complaints.

- (K. Tobia, Buckwalter, and Stich 2013): No immediate complaints.

References

Adleberg, Toni, Morgan Thompson, and Eddy Nahmias. 2015. "Do Men and Women Have Different Philosophical Intuitions? Further Data." Philosophical Psychology 28 (5). Taylor & Francis: 615--41.

Alexander, Joshua. 2016. "Philosophical Expertise." A Companion to Experimental Philosophy. Wiley Online Library, 557--67.

Amodei, Dario, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, and Dan Mané. 2016. "Concrete Problems in AI Safety." arXiv Preprint arXiv:1606.06565.

Andow, James. 2016. "Reliable but Not Home Free? What Framing Effects Mean for Moral Intuitions." Philosophical Psychology 29 (6). Taylor & Francis: 904--11.

Arbesfeld, Julia, Tricia Collins, Demetrius Baldwin, and Kimberly Daubman. 2014. "Clean Thoughts Lead to Less Severe Moral Judgment." http://www.PsychFileDrawer.org/replication.php?attempt=MTc3.

Boyd, Robert, Herbert Gintis, Samuel Bowles, and Peter J Richerson. 2003. "The Evolution of Altruistic Punishment." Proceedings of the National Academy of Sciences 100 (6). National Acad Sciences: 3531--5.

Boyd, Robert, and Peter J Richerson. 2005. The Origin and Evolution of Cultures. Oxford University Press.

Brown, Charles A. 2009. "Order Effects and the Audit Materiality Revision Choice." Journal of Applied Business Research (JABR) 25 (1).

Buckwalter, Wesley, and Stephen Stich. 2014. "Gender and Philosophical Intuition." Experimental Philosophy 2. Oxford University Press: 307--46.

Clarke, Steve. 2013. "Intuitions as Evidence, Philosophical Expertise and the Developmental Challenge." Philosophical Papers 42 (2). Taylor & Francis: 175--207.

Crockett, Molly J. 2013. "Models of Morality." Trends in Cognitive Sciences 17 (8). Elsevier: 363--66.

Crockett, Molly J, Zeb Kurth-Nelson, Jenifer Z Siegel, Peter Dayan, and Raymond J Dolan. 2014. "Harm to Others Outweighs Harm to Self in Moral Decision Making." Proceedings of the National Academy of Sciences 111 (48). National Acad Sciences: 17320--5.

Curry, Oliver Scott. 2016. "Morality as Cooperation: A Problem-Centred Approach." In The Evolution of Morality, 27--51. Springer.

Damisch, Lysann, Thomas Mussweiler, and Henning Plessner. 2006. "Olympic Medals as Fruits of Comparison? Assimilation and Contrast in Sequential Performance Judgments." Journal of Experimental Psychology: Applied 12 (3). American Psychological Association: 166.

David, Bieke, and Bunmi O Olatunji. 2011. "The Effect of Disgust Conditioning and Disgust Sensitivity on Appraisals of Moral Transgressions." Personality and Individual Differences 50 (7). Elsevier: 1142--6.

Dawes, RM. 1994. "Psychotherapy: The Myth of Expertise." House of Cards: Psychology and Psychotherapy Built on Myth, 38--74.

De Groot, Adriaan D. 2014. Thought and Choice in Chess. Vol. 4. Walter de Gruyter GmbH & Co KG.

Demaree-Cotton, Joanna. 2016. "Do Framing Effects Make Moral Intuitions Unreliable?" Philosophical Psychology 29 (1). Taylor & Francis: 1--22.

Doyen, Stéphane, Olivier Klein, Cora-Lise Pichon, and Axel Cleeremans. 2012. "Behavioral Priming: It's All in the Mind, but Whose Mind?" PloS One 7 (1). Public Library of Science: e29081.

Dubensky, Caton, Leanna Dunsmore, and Kimberly Daubman. 2013. "Cleanliness Primes Less Severe Moral Judgments." http://www.PsychFileDrawer.org/replication.php?attempt=MTQ5.

Eskine, Kendall J, Natalie A Kacinik, and Jesse J Prinz. 2011. "A Bad Taste in the Mouth: Gustatory Disgust Influences Moral Judgment." Psychological Science 22 (3). SAGE Publications: 295--99.

Feltovich, Paul J, Michael J Prietula, and K Anders Ericsson. 2006. "Studies of Expertise from Psychological Perspectives." The Cambridge Handbook of Expertise and Expert Performance, 41--67.

Feltz, Adam, and Edward T Cokely. 2008. "The Fragmented Folk: More Evidence of Stable Individual Differences in Moral Judgments and Folk Intuitions." In Proceedings of the 30th Annual Conference of the Cognitive Science Society, 1771--6. Austin, TX: Cognitive Science Society.

Frederick, Shane. 2005. "Cognitive Reflection and Decision Making." Journal of Economic Perspectives 19 (4): 25--42.

Gigerenzer, Gerd. 2008. "Moral Intuition = Fast and Frugal Heuristics?" In Moral Psychology, 1--26. MIT Press.

Gigerenzer, Gerd, Ulrich Hoffrage, and Heinz Kleinbölting. 1991. "Probabilistic Mental Models: A Brunswikian Theory of Confidence." Psychological Review 98 (4). American Psychological Association: 506.

Glimcher, Paul W, Michael C Dorris, and Hannah M Bayer. 2005. "Physiological Utility Theory and the Neuroeconomics of Choice." Games and Economic Behavior 52 (2). Elsevier: 213--56.

Greene, Joshua D. 2007. "Why Are VMPFC Patients More Utilitarian? A Dual-Process Theory of Moral Judgment Explains." Trends in Cognitive Sciences 11 (8). Elsevier: 322--23.

Haidt, Jonathan, and Jonathan Baron. 1996. "Social Roles and the Moral Judgement of Acts and Omissions." European Journal of Social Psychology 26 (2). Wiley Online Library: 201--18.

Haidt, Jonathan, and Fredrik Bjorklund. 2008. "Social Intuitionists Answer Six Questions About Morality." Oxford University Press, Forthcoming.

Haidt, Jonathan, Silvia Helena Koller, and Maria G Dias. 1993. "Affect, Culture, and Morality, or Is It Wrong to Eat Your Dog?" Journal of Personality and Social Psychology 65 (4). American Psychological Association: 613.

Hauert, Christoph, Arne Traulsen, Hannelore Brandt, Martin A Nowak, and Karl Sigmund. 2007. "Via Freedom to Coercion: The Emergence of Costly Punishment." Science 316 (5833). American Association for the Advancement of Science: 1905--7.

Hechter, Michael, and Karl-Dieter Opp. 2001. Social Norms. Russell Sage Foundation.

Henrich, Joseph, Robert Boyd, Samuel Bowles, Colin Camerer, Ernst Fehr, Herbert Gintis, and Richard McElreath. 2001. "In Search of Homo Economicus: Behavioral Experiments in 15 Small-Scale Societies." American Economic Review 91 (2): 73--78.

Henrich, Joseph Patrick, Robert Boyd, Samuel Bowles, Ernst Fehr, Colin Camerer, Herbert Gintis, and others. 2004. Foundations of Human Sociality: Economic Experiments and Ethnographic Evidence from Fifteen Small-Scale Societies. Oxford University Press.

Horberg, Elizabeth J, Christopher Oveis, Dacher Keltner, and Adam B Cohen. 2009. "Disgust and the Moralization of Purity." Journal of Personality and Social Psychology 97 (6). American Psychological Association: 963.

Huang, Jason L. 2014. "Does Cleanliness Influence Moral Judgments? Response Effort Moderates the Effect of Cleanliness Priming on Moral Judgments." Frontiers in Psychology 5. Frontiers: 1276.

Huemer, Michael. 2008. "Revisionary Intuitionism." Social Philosophy and Policy 25 (1). Cambridge University Press: 368--92.

Hutcherson, Cendri A, Benjamin Bushong, and Antonio Rangel. 2015. "A Neurocomputational Model of Altruistic Choice and Its Implications." Neuron 87 (2). Elsevier: 451--62.

Johnson, David J, Felix Cheung, and M Brent Donnellan. 2014a. "Cleanliness Primes Do Not Influence Moral Judgment." http://www.PsychFileDrawer.org/replication.php?attempt=MTcy.

Johnson, David J, Felix Cheung, and M Brent Donnellan. 2014b. "Does Cleanliness Influence Moral Judgments?" Social Psychology. Hogrefe Publishing.

Johnson, David J, Jessica Wortman, Felix Cheung, Megan Hein, Richard E Lucas, M Brent Donnellan, Charles R Ebersole, and Rachel K Narr. 2016. "The Effects of Disgust on Moral Judgments: Testing Moderators." Social Psychological and Personality Science 7 (7). SAGE Publications: 640--47.

Knobe, Joshua. 2003. "Intentional Action and Side Effects in Ordinary Language." Analysis 63 (3). JSTOR: 190--94.

Koriat, Asher. 2008. "Subjective Confidence in One's Answers: The Consensuality Principle." Journal of Experimental Psychology: Learning, Memory, and Cognition 34 (4). American Psychological Association: 945.

Kornblith, Hilary. 2010. "What Reflective Endorsement Cannot Do." Philosophy and Phenomenological Research 80 (1). Wiley Online Library: 1--19.

Krasnow, Max M. 2017. "An Evolutionarily Informed Study of Moral Psychology." In Moral Psychology, 29--41. Springer.

Kuhn, Kristine M. 1997. "Communicating Uncertainty: Framing Effects on Responses to Vague Probabilities." Organizational Behavior and Human Decision Processes 71 (1). Elsevier: 55--83.

Kühberger, Anton. 1995. "The Framing of Decisions: A New Look at Old Problems." Organizational Behavior and Human Decision Processes 62 (2). Elsevier: 230--40.

Lanteri, Alessandro, Chiara Chelini, and Salvatore Rizzello. 2008. "An Experimental Investigation of Emotions and Reasoning in the Trolley Problem." Journal of Business Ethics 83 (4). Springer: 789--804.

Liao, S Matthew, Alex Wiegmann, Joshua Alexander, and Gerard Vong. 2012. "Putting the Trolley in Order: Experimental Philosophy and the Loop Case." Philosophical Psychology 25 (5). Taylor & Francis: 661--71.

Liljenquist, Katie, Chen-Bo Zhong, and Adam D Galinsky. 2010. "The Smell of Virtue: Clean Scents Promote Reciprocity and Charity." Psychological Science 21 (3). SAGE Publications: 381--83.

Lombrozo, Tania. 2009. "The Role of Moral Commitments in Moral Judgment." Cognitive Science 33 (2). Wiley Online Library: 273--86.

Miller, Geoffrey F. 2007. "Sexual Selection for Moral Virtues." The Quarterly Review of Biology 82 (2). The University of Chicago Press: 97--125.

Miller, Lynn C, Richard Murphy, and Arnold H Buss. 1981. "Consciousness of Body: Private and Public." Journal of Personality and Social Psychology 41 (2). American Psychological Association: 397.

Millhouse, Tyler, Alisabeth Ayars, and Shaun Nichols. 2018. "Learnability and Moral Nativism: Exploring Wilde Rules." In Methodology and Moral Philosophy, 73--89. Routledge.

Nadelhoffer, Thomas, and Adam Feltz. 2008. "The Actor--Observer Bias and Moral Intuitions: Adding Fuel to Sinnott-Armstrong's Fire." Neuroethics 1 (2). Springer: 133--44.

Norman, Geoff, Kevin Eva, Lee Brooks, and Stan Hamstra. 2006. "Expertise in Medicine and Surgery." The Cambridge Handbook of Expertise and Expert Performance, 339--53.

Parpart, Paula, Matt Jones, and Bradley C Love. 2018. "Heuristics as Bayesian Inference Under Extreme Priors." Cognitive Psychology 102. Elsevier: 127--44.

Petrinovich, Lewis, and Patricia O'Neill. 1996. "Influence of Wording and Framing Effects on Moral Intuitions." Ethology and Sociobiology 17 (3). Elsevier: 145--71.

Petrinovich, Lewis, Patricia O'Neill, and Matthew Jorgensen. 1993. "An Empirical Study of Moral Intuitions: Toward an Evolutionary Ethics." Journal of Personality and Social Psychology 64 (3). American Psychological Association: 467.

Plomin, Robert, John C DeFries, Valerie S Knopik, and Jenae M Neiderhiser. 2016. "Top 10 Replicated Findings from Behavioral Genetics." Perspectives on Psychological Science 11 (1). SAGE Publications: 3--23.

Ratcliff, Roger, and Gail McKoon. 2008. "The Diffusion Decision Model: Theory and Data for Two-Choice Decision Tasks." Neural Computation 20 (4). MIT Press: 873--922.

Schnall, Simone, Jennifer Benton, and Sophie Harvey. 2008. "With a Clean Conscience: Cleanliness Reduces the Severity of Moral Judgments." Psychological Science 19 (12). SAGE Publications: 1219--22.

Schnall, Simone, Jonathan Haidt, Gerald L Clore, and Alexander H Jordan. 2008. "Disgust as Embodied Moral Judgment." Personality and Social Psychology Bulletin 34 (8). SAGE Publications: 1096--1109.

Schulz, Eric, Edward T Cokely, and Adam Feltz. 2011. "Persistent Bias in Expert Judgments About Free Will and Moral Responsibility: A Test of the Expertise Defense." Consciousness and Cognition 20 (4). Elsevier: 1722--31.

Schwitzgebel, Eric, and Fiery Cushman. 2012. "Expertise in Moral Reasoning? Order Effects on Moral Judgment in Professional Philosophers and Non-Philosophers." Mind & Language 27 (2). Wiley Online Library: 135--53.

Schwitzgebel, Eric, and Joshua Rust. 2016. "The Behavior of Ethicists." A Companion to Experimental Philosophy. Wiley Online Library, 225.

Seyedsayamdost, Hamid. 2015. "On Gender and Philosophical Intuition: Failure of Replication and Other Negative Results." Philosophical Psychology 28 (5). Taylor & Francis: 642--73.

Shanteau, James. 1992. "Competence in Experts: The Role of Task Characteristics." Organizational Behavior and Human Decision Processes 53 (2). Elsevier: 252--66.

Singer, Peter, and others. 2000. A Darwinian Left: Politics, Evolution and Cooperation. Yale University Press.

Sinnott-Armstrong, Walter, and Christian B Miller. 2008. Moral Psychology: The Evolution of Morality: Adaptations and Innateness. Vol. 1. MIT Press.

Sripada, Chandra Sekhar. 2008. "Nativism and Moral Psychology: Three Models of the Innate Structure That Shapes the Contents of Moral Norms." Moral Psychology 1. MIT Press: 319--43.

Talbot, Brian. 2014. "Why so Negative? Evidence Aggregation and Armchair Philosophy." Synthese 191 (16). Springer: 3865--96.

Tobia, Kevin, Wesley Buckwalter, and Stephen Stich. 2013. "Moral Intuitions: Are Philosophers Experts?" Philosophical Psychology 26 (5). Taylor & Francis: 629--38.

Tobia, Kevin P, Gretchen B Chapman, and Stephen Stich. 2013. "Cleanliness Is Next to Morality, Even for Philosophers." Journal of Consciousness Studies 20 (11-12).

Todd, Peter M, and Gerd Gigerenzer. 2012. Ecological Rationality: Intelligence in the World. Oxford University Press.

Turner, Jonathan H, and Alexandra Maryanski. 2013. "The Evolution of the Neurological Basis of Human Sociality." In Handbook of Neurosociology, 289--309. Springer.