Motivation for this report: We ran a medium-scale event using an Unconference framework, and think our learnings could be useful to share. While there have been other AI Safety Unconferences, few retrospectives have been published, so anyone looking to run a larger cause-specific event, and particularly an AI Safety event, may be able to learn from our mistakes and successes.

Why an AI Safety Unconference?

An Unconference is an event where attendees set the program and format, and can discuss what they think is most important in the topic area. It is different from a typical conference, where organisers have power over the program and speakers.

Our aims in organising an AI Safety Unconference were to:

- Foster the AI safety community in Australia and New Zealand

- Assist attendees in developing professional connections

- Help attendees learn about AI safety topics and opportunities / projects

We hoped to leverage people already travelling from across Australia and New Zealand who were attending the EAGxAustralia conference in Melbourne in September 2023, and intentionally scheduled the Unconference to fit in with the existing EAGx conference. We made the decision to organise the unconference about 6 weeks before the date, with the counterfactual being no event.

What we did

Existing resources

We used several reports and guides to understand the promise & reality of an Unconference, and how to plan, design, and facilitate one:

- https://devon.postach.io/post/the-unconference-toolbox

- https://www.ksred.com/emergent-ventures-unconference-2023-diverse-perspectives/

- https://www.jcrete.org/what-is-an-unconference_/

- https://www.facilitator.school/blog/open-space-technology

Organising

Jo Small acted as project owner, with Alexander Saeri as manager and approver, consistent with the MOCHA framework. Eight members of the Australian AI Safety community were consulted and provided significant help with communications & marketing, event design, logistics, and facilitation / volunteering during the event. This included convenors of AI Safety Australia & New Zealand, AI Safety Melbourne, and others in the community.

We coordinated work on the Unconference through a Slack channel and online meetings. We tried to use a 'Working Out Loud' method where updates, discussions, and decisions could be made through Slack without the need for meetings.

We advertised the Unconference through existing AI safety networks and mailing lists, starting about 1 month before the scheduled date. Prospective attendees registered through Humanitix.

Funding and budget

We received an AUD $2,000 gift from Soroush Pour for the event, of which ~$1,800 was spent:

- Venue: provided in-kind and arranged through an EA / AI Safety student group at The University of Melbourne

- Catering: $1,650 for lunch, snacks and drinks for 60 registrants

- Materials: $150 for materials to use during the Unconference

The Unconference was held from 11am to 4pm on Friday 22 September 2023, at the Kwong Lee Dow Building at The University of Melbourne. We booked 4 rooms across two levels in this multipurpose teaching & learning building. We had 41 attendees from about 60 registrations.

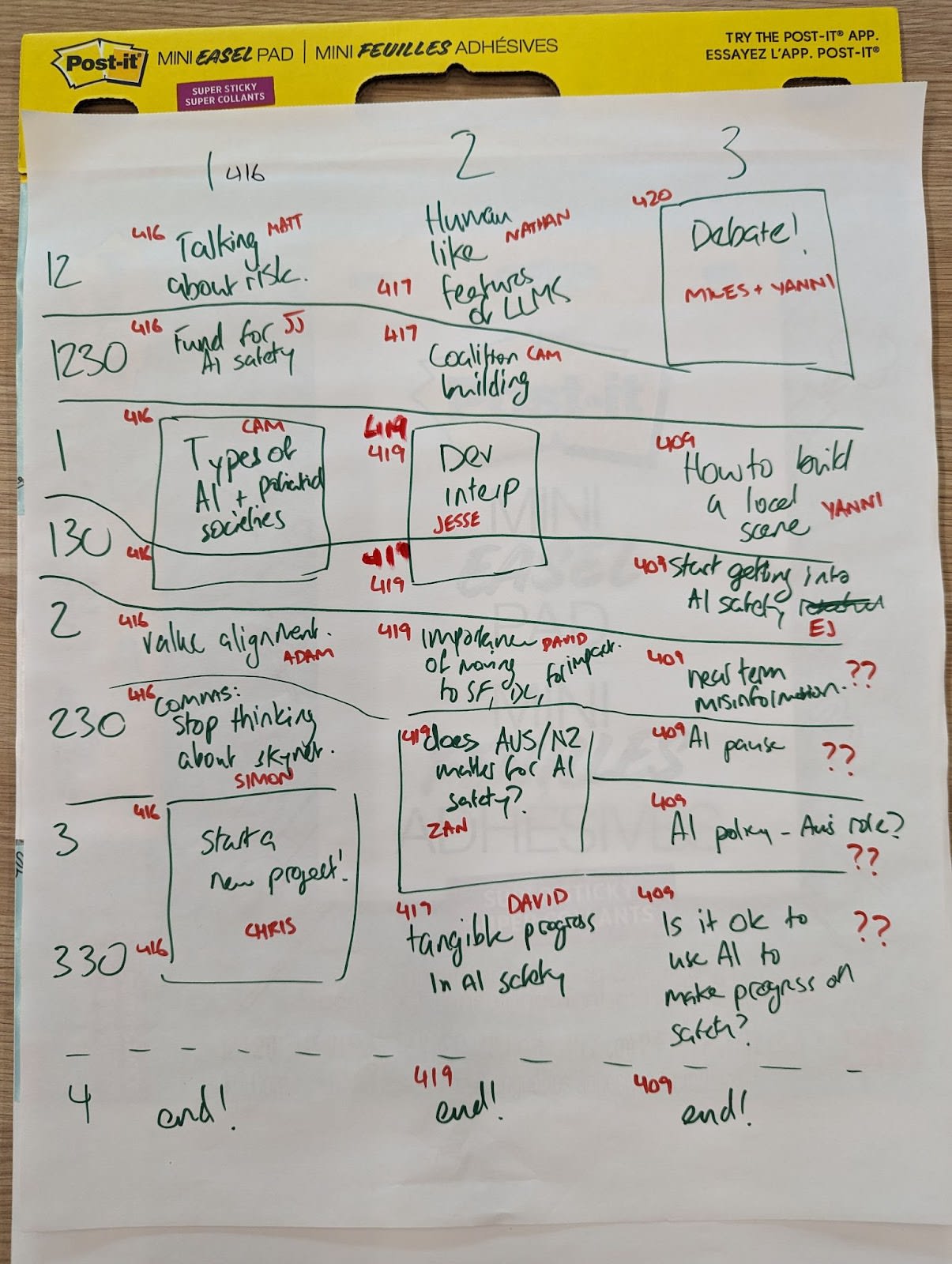

From 11-12:30, attendees arrived, registered, met each other, and ate lunch. They then generated, refined, and prioritised session ideas and topics for the program.

- Attendees wrote the titles of sessions they wanted to attend on Post-it notes and placed them on a whiteboard

- Attendees clustered and refined the list of session titles, volunteered to lead sessions with no designated leader, and voted on sessions

- Organisers scheduled the sessions with the highest votes across the available rooms and time slots
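
As a rough illustration, the scheduling step above can be sketched in code. This is not the process organisers actually followed on the day (that was done by hand with a whiteboard); it is a minimal greedy sketch that assumes sessions are ranked by votes and then placed into room and time-slot cells in order. The `Session` class, the `schedule` function, and the example titles, leaders, rooms, and times are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    title: str
    votes: int
    leader: Optional[str] = None  # None means nobody volunteered to lead


def schedule(sessions, rooms, slots):
    """Greedy sketch: the highest-voted eligible sessions fill cells first."""
    # Ideas with no leader or no votes are not scheduled.
    eligible = [s for s in sessions if s.leader and s.votes > 0]
    # sorted() is stable, so tied sessions keep their original order.
    ranked = sorted(eligible, key=lambda s: s.votes, reverse=True)
    # One cell per (time slot, room) pair, earliest slot first.
    grid = [(slot, room) for slot in slots for room in rooms]
    # zip() stops at the shorter input, so overflow sessions stay unscheduled.
    return {cell: s.title for cell, s in zip(grid, ranked)}


# Made-up example data, not the real program.
ideas = [
    Session("Topic A", 5, "Ana"),
    Session("Topic B", 9, "Ben"),
    Session("Topic C", 3, None),   # no leader: dropped
    Session("Topic D", 0, "Dee"),  # no votes: dropped
    Session("Topic E", 5, "Eve"),
]
program = schedule(ideas, rooms=["Room 1", "Room 2"], slots=["12:30", "13:30"])
for (slot, room), title in program.items():
    print(slot, room, title)
```

A greedy fill like this ignores clashes (for example, two popular sessions landing in the same slot), which is one reason a human pass over the draft program is still useful.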

From 12:30-4, attendees participated in or led sessions. Paper versions of the program were posted in each room, and attendees could move between sessions at will.

What we learned

We conducted a retrospective with organisers after the event (8 attendees), and also requested feedback from attendees through an online survey (20 responses).

Attendee feedback survey

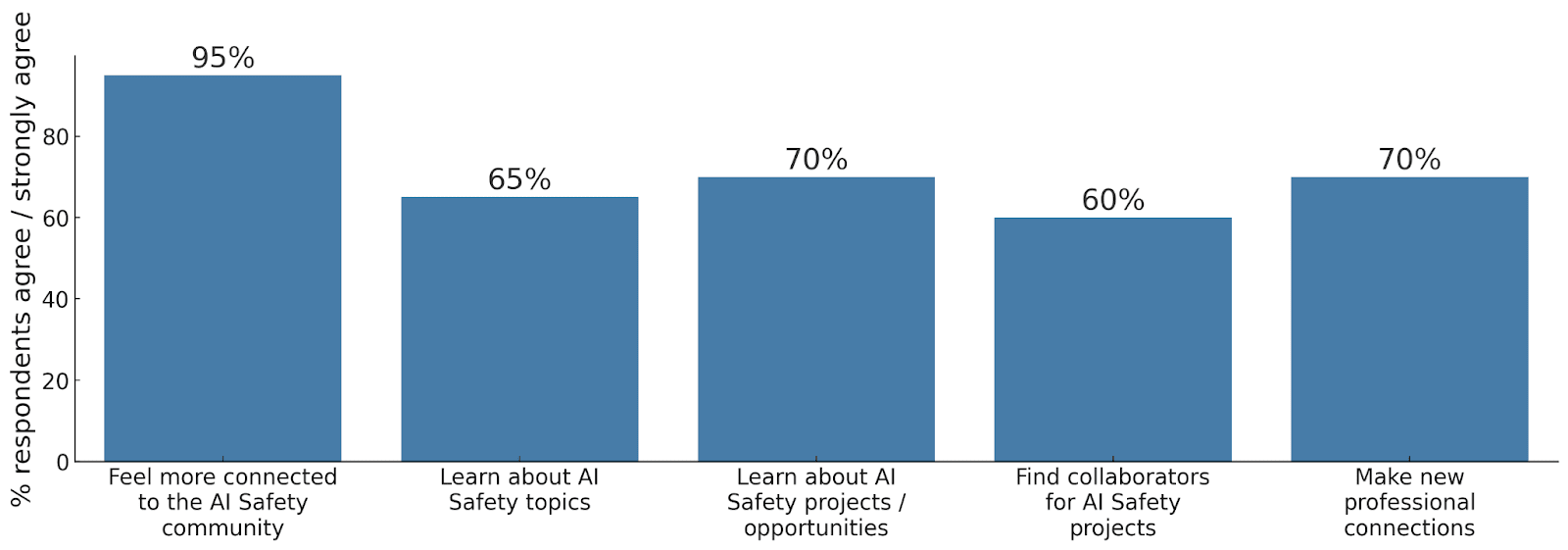

The attendee feedback survey found that the Unconference met its aims of community connection, learning about AI safety, and professional networking.

Most attendees (75-90% agreement) experienced the Unconference as respectful and inclusive (e.g., I felt comfortable sharing my opinions with other attendees; I felt the event was inclusive of diverse perspectives). We observed a very uneven ratio of men to women (about 8:1), but did not collect any demographic information.

Our Net Promoter Score was 15 (mean likelihood to recommend = 7.75 on a scale of 0-10).
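
For readers unfamiliar with the metric, a Net Promoter Score is conventionally the percentage of promoters (scores of 9-10) minus the percentage of detractors (scores of 0-6), so it can differ a lot from the mean. The sketch below shows that standard calculation; the function name and the example scores are made up for illustration and are not our survey data.

```python
def net_promoter_score(scores):
    """NPS = % promoters (9-10) minus % detractors (0-6) on a 0-10 scale."""
    if not scores:
        raise ValueError("need at least one response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical responses, NOT the real survey data.
example = [10, 9, 9, 8, 8, 7, 7, 6, 5, 8]
nps = net_promoter_score(example)
mean = sum(example) / len(example)
print(nps, mean)  # 10.0 7.7
```

With 20 responses, each respondent moves the score by 5 points, so a score of 15 corresponds to three more promoters than detractors.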

When asked how a future Unconference could change to be more valuable, some attendees wanted more pre-planned structure and more expert facilitation. This suggests that the promise and process of an Unconference was not clearly communicated and/or was not persuasive to those attendees. Another theme was a desire for more structured networking, and/or a way to stay in contact after the event.

Organiser retrospective

The organiser retrospective found the Unconference was worth the effort, but format and planning could be improved:

- Satisfaction with the Unconference was high, especially considering the short lead time

- The breadth of the program designed by attendees, the quality of the sessions, and a positive and engaging culture / vibe of the event exceeded organisers’ expectations

- Session generation, prioritisation and scheduling were messy / chaotic and led to some confusion for attendees and organisers; expectations could be set more clearly

- The Unconference’s short length (5 hours) meant that the ‘fixed costs’ for organisers to advertise, convene, and run the event felt very high; a longer event of 1-2 full days could have been a better return on investment of time.

- The free use of University space was appreciated, but the lack of full control or a for-purpose conference space meant that session rooms were spread across two floors, and there was no central location for catering, breaks, or unstructured networking.

What we’re doing next

The AI Safety community in Australia plans to hold future standalone unconference and conference events, using what we learned from the Unconference experience. This includes a 1-2 day AI Safety conference in Sydney or Melbourne in 2024, and further development of session topics from the Unconference into standalone events (e.g., coalition building between AI safety and AI ethics; tangible progress in AI safety).

Acknowledgements and thanks

Thanks to organisers & supporters Ben Auer, Sandstone[1], Joe Brailsford, Yanni Kyriacos, Chris Leong, Justin Olive, Soroush Pour, Nathan Sherburn, Bradley Tjandra, Victor Wang, EJ Watkins, and Miles Whiticker for making the Unconference happen.

Thanks to the Unconference attendees for their key role in making the Unconference a success.

Appendices

Adapt our materials to run your own Unconference

We're keen to see more Unconferences used for AI safety and in other cause areas. We think it can be a powerful way to involve participants in taking ownership of 'what needs to be talked about', and can bring new perspectives or discussions that aren't the focus of pre-planned conference programs.

You can request access to a shared Google folder with the Unconference materials, including templates to pitch for funding, advertise the event, communicate with stakeholders, a working document for the event, and feedback surveys & organiser retrospectives. We're happy to grant all reasonable requests - please just tell us in a couple of words how you plan to use the materials!

Unconference program

These sessions are the result of attendees generating ideas, clustering similar ideas, and voting on the clustered ideas. Each attendee received three votes, but not all attendees voted. Ideas that had no one to lead them or which received no votes were not scheduled.

The list of sessions is shown in descending order by number of votes:

- [9] Debate! Moratorium vs. No moratorium

- [7] Human-like features of LLMs

- [6] Types of AI and potential societies - How do the choices of AI alignment drive how we can organise society, or vice versa; can we influence this?

- [6] Developmental interpretability: High-level introduction to a research agenda

- [6] Does Australia / New Zealand matter for AI safety?

- [5] Talking about risk (without sounding crazy)

- [4] Fund for AI Safety (fundraising)

- [4] How can we build a local scene, and what would we want from one?

- [4] How to start getting into AI safety research

- [3] Coalition building: The opportunities and compromises of building AI x-risk policies that link to current AI risks

- [3] Value alignment: human & AI alignment; what to align with; research programs

- [3] Examples of tangible progress in AI safety

- [2] Importance of moving to SF, DC, London etc for AI safety impact

- [2] Communications: How do we get people to stop thinking about skynet?

- [2] Start a new AI Safety Project

- [1] Near-term misinformation issues (generative AI flooding online discussions)

- [1] “AI pause”: paths, dangers, possibilities

- [1] Is it OK to use AI to make progress on AI safety?
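
A quick tally of the vote counts listed above gives a sense of how many attendees voted. The sketch below uses only the numbers in this post (the bracketed vote counts, three votes per attendee, 41 attendees); the variable names are ours. It counts scheduled sessions only, so any votes cast for ideas that were dropped for lack of a leader are not included, and the voter estimate is a lower bound.

```python
import math

# Vote counts for the scheduled sessions, as listed above.
votes = [9, 7, 6, 6, 6, 5, 4, 4, 4, 3, 3, 3, 2, 2, 2, 1, 1, 1]
VOTES_PER_ATTENDEE = 3
ATTENDEES = 41

total_votes = sum(votes)
max_possible = VOTES_PER_ATTENDEE * ATTENDEES
# Each voter had at most three votes, so this is the fewest who could have voted.
min_voters = math.ceil(total_votes / VOTES_PER_ATTENDEE)

print(total_votes, max_possible, min_voters)  # 69 123 23
```

So at least 23 of the 41 attendees voted, and a little over half of the available votes were recorded against scheduled sessions.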

Unconference photos

[1] We forgot to thank Sandstone in the first version of this post; Sandstone was of great help both in planning and on the day.