Anthropic recently released Claude 2, which accepts up to 100,000 tokens of input text (apparently about 75,000 words). So you can feed it loads of info and ask it questions or have a chat!

It actually generates helpful responses when discussing impact-focused questions! E.g.

Me, role playing: “One concern I have with this kind of approach is that I don’t like comparing causes that are so different to each other because it’s just unfair to make those kinds of comparisons when I don’t think they can be put against each other. I feel like all the discussed causes are important and require attention, but you can’t say which is more important than others.”

Claude (when loaded with week 1 of the EA Handbook / intro fellowship): “I understand the concern about comparing very different causes. There are some good counterpoints to consider:

- We're already implicitly comparing causes when we decide where to donate or volunteer our time. Choosing to focus more on cause X means we focus less on cause Y.

- Perfect comparisons are impossible. But rough, imperfect comparisons can still point us in better directions than no comparisons.

- The alternatives to comparing impact are making decisions based on intuitions, emotions, or heuristics. These often lead us astray.

- While all causes may be important, resources are limited. Comparisons help us determine which issues are relatively more pressing or neglected.

- If we refuse to compare, we risk missing enormous differences in scale. For example, health interventions that save one life for $5,000 vs $1 million.

- There are thoughtful ways to account for uncertainties and make "apples to oranges" comparisons more fair. For example, using ranges and probability distributions instead of point estimates.

Overall, while no methodology is perfect, the evidence suggests comparing causes leads to directing resources in ways that help more people. We just need to make comparisons thoughtfully and humbly. But refusing to compare at all means we fail to use information that could guide us towards helping as effectively as possible.”

This seems useful for supporting online fellowships and courses.

Possible uses

- Replace some one-to-one engagement and time spent answering questions when facilitating → make online programmes more scalable

- An additional fun, engaging tool for participants

- Ask it to generate discussion questions about a particular resource for a meetup/call

- With more complex prompts, it might be able to do more specific tasks like give feedback on written answers (I haven’t tried this yet)

- Share more ideas in the comments!

Steps to test before you invest

- Open up this doc with the text from Leaf's “How to save 10 lives by age 20” programme or this doc with the text for week 1 of the Effective Altruism Handbook

- Select all (Ctrl+A) and copy (Ctrl+C)

- Head to https://claude.ai/ (and sign up if you need to)

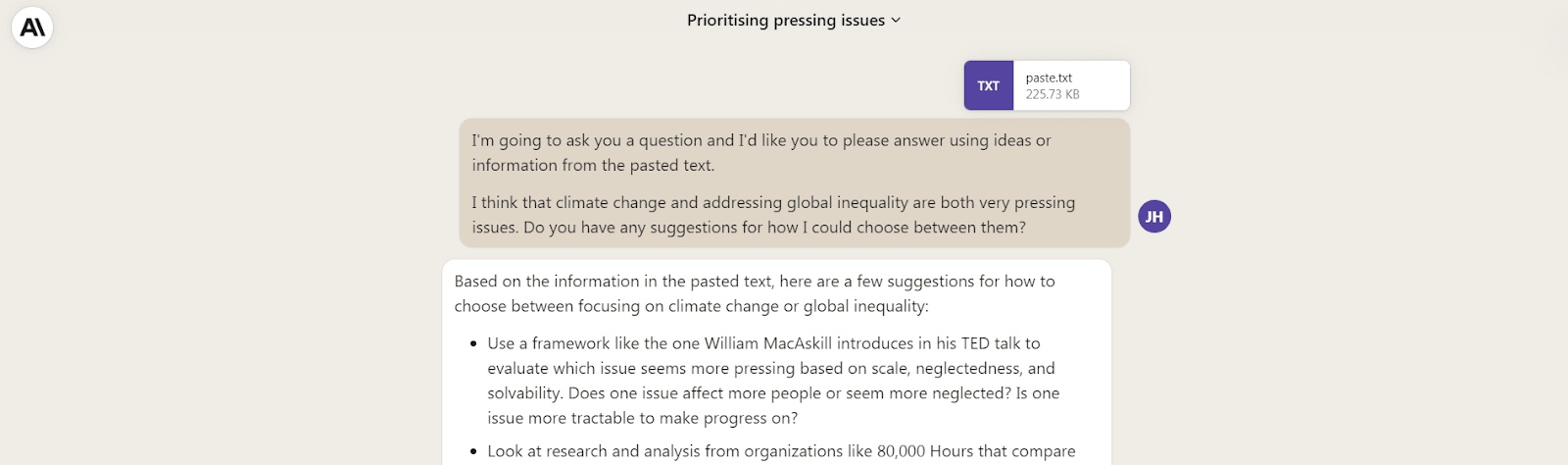

- Paste the text (Ctrl+V) into the chat box and add this prompt: "I'm going to ask you a question and I'd like you to please answer using ideas or information from the pasted text."

- Add your question

- Press Enter or the send button

- Get a helpful reply, ask any follow-up questions, and repeat

Or, you can just watch this video where I do it.
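If you'd rather prepare the message in a script before pasting it in, the steps above can be sketched roughly as follows. This is only an illustration of the copy, paste and prompt flow: it does not call Claude or any API, the function names `fits_in_context` and `build_prompt` are made up for this example, and the 75,000-word budget is just the approximate figure quoted at the top of this post, not an exact limit.

```python
# Rough sketch only: mirrors the manual copy-paste steps and calls no API.
# WORD_BUDGET is the approximate "100,000 tokens ~ 75,000 words" figure
# from this post, used here as a crude rule of thumb.
WORD_BUDGET = 75_000

# The instruction suggested in the steps above.
INSTRUCTION = (
    "I'm going to ask you a question and I'd like you to please answer "
    "using ideas or information from the pasted text."
)


def fits_in_context(text: str, word_budget: int = WORD_BUDGET) -> bool:
    """Return True if the whitespace-separated word count is within budget."""
    return len(text.split()) <= word_budget


def build_prompt(source_text: str, question: str) -> str:
    """Combine the pasted text, the instruction and the question into one message."""
    if not fits_in_context(source_text):
        raise ValueError(
            "Source text is probably too long; try a single week of content."
        )
    return f"{source_text.strip()}\n\n{INSTRUCTION}\n\n{question.strip()}"


if __name__ == "__main__":
    # Hypothetical stand-in for the text copied from the doc.
    course_text = "Week 1 reading text goes here."
    print(build_prompt(course_text, "What are the key ideas in this week's reading?"))
```

The word count is a whitespace split, so treat it as a ballpark check rather than a real token count.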

Example questions

- Based on the information in "Week 1. An evidence-based roadmap to saving 10 lives", what are 5 ways to save 10 lives by age 20?

- I think that climate change and addressing global inequality are both very pressing issues. Do you have any suggestions for how I could prioritise between them?

- You have limited resources, so you can’t solve all the world’s problems overnight. What should you focus on first? How do you even start to answer this question?

- One concern I have with effective altruism is…

- Can you generate 10 prompt questions for open-ended discussion between aspiring effective altruists that get to the core of the key ideas discussed in the book (summarised in the pasted text), [Title]?

Limitations and downsides

- If it starts making supposedly factual claims, it might literally be lying to you. In a test conversation, it started just making up examples of “signs of progress”. When I called it out, it apologised, offered “some real examples that I've now verified”, and fabricated hyperlink sources.

- Only available in the UK or US at the moment, I believe, unless you get around this with a VPN.

- I’m not running an online programme at the moment so haven’t tried this out yet for user friendliness etc. I just wanted to write this up while I was excited and had momentum.

- Might disincentivise actual conversations and human engagement, which are more valuable.

- Contributing to AI hype (?), which would probably be bad.

- With lots of context, Claude is slower to reply (~30 secs).

- Please share other concerns in the comments!

Quick thoughts on using it for your fellowship or course

- You need to copy everything over to a doc yourself. 75k words will probably fit a week’s worth of content (perhaps even including many of the optional extras) but not a full programme, unless it’s short, like Leaf’s!

- You can manually add YouTube video transcripts:

  - Click “...” under the video →

  - “Show transcript” →

  - Select the full text and copy (Ctrl+C) →

  - Head to https://claude.ai/ →

  - Paste the text (Ctrl+V) into the chat box and add this prompt: “Please take the following transcript and remove the timestamps and add punctuation to make it into easily readable text:” →

  - Press Enter or the send button →

  - Wait for it to finish, then copy the text into your doc

- You’ll probably need to create some sort of instruction doc for your participants. (Here’s what I just sent to Leaf participants — but this is mainly a residential programme, so this is mostly just as a fun extra add-on.)
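For the transcript step, the timestamp removal could also be done locally before pasting, which leaves Claude with only the punctuation to fix. The sketch below is a minimal example under an assumption I haven't checked against every video: that each copied timestamp looks like `0:00`, `12:34` or `1:02:03` and sits at the start of a line. The name `strip_timestamps` is made up for this example, and the code does not add punctuation.

```python
import re

# Assumed timestamp shapes at the start of a line: 0:00, 12:34, 1:02:03.
TIMESTAMP = re.compile(r"^\s*(?:\d{1,2}:)?\d{1,2}:\d{2}\s*")


def strip_timestamps(transcript: str) -> str:
    """Remove leading timestamps from each line and join the text with spaces."""
    pieces = []
    for line in transcript.splitlines():
        text = TIMESTAMP.sub("", line).strip()
        if text:  # skip lines that held only a timestamp or were blank
            pieces.append(text)
    return " ".join(pieces)


if __name__ == "__main__":
    # Hypothetical snippet in the assumed copy-paste format.
    sample = "0:00\nhello and welcome\n0:05\ntoday we are talking about impact"
    print(strip_timestamps(sample))
```

One caveat: a spoken line that itself begins with a clock time (say, "10:30 is when we start") would lose that time, so skim the result before using it.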

Thanks to Sebastian Schmidt for the prompt to think about how to use LLMs to support learning and engagement in online programmes!

Back in March I asked GPT-4:

The answer (one shot):

I didn't give it any other text as context, just the prompt above.

Yeah, seems fair; asking LLMs to model specific orgs or people might achieve a similar effect without needing the contextual info, if there's much info about those orgs or people in the training data and you don't need it to represent specific ideas or info highlighted in a course's core materials.

Is there a paid version that has a higher message limit? I see the API pricing but nothing for chat.

Yeah, not sure. I expect this won't be a major bottleneck for most participants if they're just using it to bounce a few ideas around with.

Thanks for running with the idea! This is a major thing within education these days (e.g., Khan Academy). This seems reasonably successful, although Peter's example and the tendency to hallucinate make me a bit concerned.

I'd be keen on attempting to fine-tune available foundation models (e.g., GPT-3.5) on the relevant data and seeing how good a result one might get.

My intuition after playing around with many of these models is that GPT-3.5 is probably not good enough at general reasoning to produce consistent results. It seems likely to me that either GPT-4 or Claude 2 would be good enough. FWIW, in a recent video Nathan Labenz said that when people asked him for recommendations, he originally suggested starting with GPT-4 and going from there. The analysis gets more complicated with Claude 2 (perhaps slightly worse at reasoning, but with a longer context window).

Yeah, this could be the case. I'm just not sure that GPT-4 can be given enough context for it to be a highly user-friendly chatbot for the curriculum. But it might be the better of the two options.

Many thanks Jamie. This is very useful! How I wish though AI didn’t make things up 😂😂 it’s so keen to please us!