As a new longtermist incubator, we at Nonlinear wanted to really understand info hazards before we started launching projects. Below, we share our research. It was originally just for internal use, but we felt it was worth sharing publicly.

To help you quickly get up to speed on information hazards, we’ve:

- Summarized the most important posts on the topic (see the rest of this post)

- Created a prioritized reading list, inspired by CHAI’s prioritized bibliography

- Made a list of potential solutions to info hazards that we found during our research

- Made a list of examples of info hazards and of the hazards of secrecy (don’t worry, none of them are “active” info hazards)

We estimate that it would take you 1-4 hours to read the summaries and the top-priority posts. After that, you’d be aware of the most important considerations on the topic, so it’s a pretty high-value use of time if you’re working in info-hazardous areas such as biorisk or AI safety.

Summaries of top posts on info hazards

Note: the notes represent the perspective of each post's author and are not meant as objective statements of fact.

Notes prefaced with CW or FD mean that they are the personal comments of Corey Wood or Florian Dorner respectively.

If you think any articles are missing, or that any example info hazards should be removed, let us know in the comments or with a private message.

The research was done by Corey Wood during a summer internship at Nonlinear.

- What are information hazards?

- Definitional article

- Info hazards are only about true information

- Info hazards can occur in a wide variety of contexts and can be large or small scale

- Some types of info hazards harm only the person who knows the information, but info hazards are not limited to this and can harm others as well (these are often the most worrisome ones)

- "Something can be an info hazard even if no harm has yet occurred, and there's no guarantee it will ever occur"

- If there is no specific reason to believe there's any risk from some true information, and there's just some chance that it could be harmful, it’s best to call it a "potential information hazard"

- Information hazards: Why you should care and what you can do

- Proposed heuristic:

- If some information you may develop or share is related to advanced technologies or catastrophic risks, then think about how “potent” the information is.

- Potency = size: how much effect could the information have?

- "The potency of some information depends on factors such as how many people the information may affect, how intensely each affected person would be affected, how long the effects would last, and so on."

- If the information’s potency is low, don’t worry about the risk of information hazards; you can proceed with developing or sharing the information.

- If the information’s potency is high, consider how “counterfactually rare” this information is.

- Counterfactual rarity: how unlikely it is that other people have already developed or learned the information, or will soon do so regardless

- Factors: "how much specialized knowledge is required to arrive at the information, how counterintuitive the information is, the incentives for developing and sharing the information," etc.

- If the counterfactual rarity is low, then consider using “implementation-related responses”.

- Implementation-based response:

- About controlling the implementation of the ideas, not the information itself (e.g., the information on how to make a nuke is already out there, so we try to control its implementation by outlawing the building of nukes)

- If the counterfactual rarity is high, then think further about how hazardous this information might be, and consider using “information-related responses”.

- Information-based response:

- About controlling the information itself, not the implementation of the idea

- E.g., keeping the knowledge of how to build nukes secret when only the United States knew how.

- If the information in question isn’t related to advanced technologies or catastrophic risks, don’t worry.

- Potential responses notes

- "Develop and/or share the information": when you decide that the risks are low enough that it's fine to treat the information normally

- "Develop the information but don't (yet) share it": continue developing the information, but decide either that it shouldn't be shared or that it would be better to decide later

- "Think more about the risks": spend more time deciding what to do; consider pausing any development/sharing of the information that might already be occurring

- "Frame the information to reduce risks": use language to present the information in a way that makes it less likely to be misused

- "Develop and/or share a subset of the information": selectively share some of the information that would be net-beneficial to spread

- "Share the information with a subset of people": figure out who it would be net-beneficial to share the information with, either to get their opinion on wider sharing or so they can develop defenses against the hazards raised

- CW: how do you decide who to share information with? Is there a potential access issue here?

- "Avoid developing and/or sharing the information": if too high risk, don't do anything with it

- "Monitor whether others may develop and/or share the information": check if others might be discovering similar things and try to ensure that they don't misuse or irresponsibly share the information

- "Decrease the likelihood of others developing and/or sharing the information": destroy the work that led you to the discovery and avoid spurring others down the same path of research

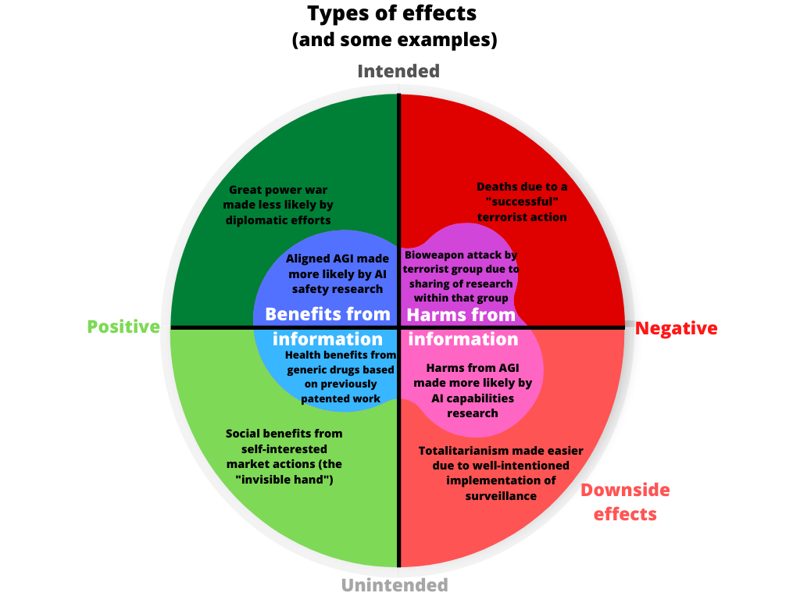
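The heuristic above is a simple decision procedure, so it can be sketched as a function. This is a minimal sketch, not the post's own formalization: the post describes potency and counterfactual rarity qualitatively, so the 0-to-1 scores and the single `threshold` cut-off below are hypothetical stand-ins.

```python
from enum import Enum


class Response(Enum):
    """Branches of the heuristic; the wording paraphrases the post."""
    PROCEED = "don't worry; develop and/or share the information"
    IMPLEMENTATION = "consider implementation-related responses"
    INFORMATION = "think further about the hazard; consider information-related responses"


def triage(
    related_to_advanced_tech_or_catastrophic_risk: bool,
    potency: float,               # hypothetical 0-1 score: how large the effect could be
    counterfactual_rarity: float,  # hypothetical 0-1 score: how unlikely others have it or soon will
    threshold: float = 0.5,       # hypothetical cut-off; the post gives no number
) -> Response:
    # Information unrelated to advanced technologies or catastrophic risks: don't worry.
    if not related_to_advanced_tech_or_catastrophic_risk:
        return Response.PROCEED
    # Low potency: proceed with developing or sharing.
    if potency < threshold:
        return Response.PROCEED
    # High potency but low counterfactual rarity: control implementation, not information.
    if counterfactual_rarity < threshold:
        return Response.IMPLEMENTATION
    # High potency and high counterfactual rarity: think further, control the information.
    return Response.INFORMATION


if __name__ == "__main__":
    # Made-up scores: potent information that many others already have.
    print(triage(True, potency=0.8, counterfactual_rarity=0.2).value)
    # prints: consider implementation-related responses
```

Using separate cut-offs for potency and rarity, or a probability-weighted judgment instead of hard thresholds, would be an equally reasonable reading of the heuristic.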

- Mapping downside risks and information hazards

- Effects of information can be both positive and negative, and both intended and unintended

- A point of clarification on infohazard terminology

- Proposes the use of "cognitohazard" as a subset of information hazards to mean an information hazard that could harm the person who knows it

- In his typology paper, Bostrom outlines multiple types of information hazards that would fall into the 'cognitohazard' category

- Debate about the terminology in the comments.

- Terrorism, Tylenol, and dangerous information

- Be careful not to fall prey to the 'typical mind fallacy' when judging whether potentially dangerous information is already widely known. Just because you know it, or it's technically available for anyone to see, doesn't mean it's widely known in practice

- Example: poisoning Tylenol capsules. This had been feasible for a long time, but incidents only started happening, all at once, after the method was publicized.

- Bioinfohazards

- Info hazards in a field can make it difficult for non-experts to become involved and for people to discuss reasons for censorship, etc.

- Sharing information can cause harm, but keeping information secret can also cause harm

- Risks of information sharing (more details on each in the post)

- Dangerous conceptual ideas to bad actors: a bad actor gets an idea they did not previously have

- Dangerous conceptual ideas to careless actors: a careless actor gets an idea they did not previously have

- Implementation details to bad actors: a bad actor gains access to details (but not an original idea) on how to create a harmful biological agent

- Implementation details to careless actors: a careless actor gains access to details (but not an original idea) on how to create a harmful biological agent

- Information vulnerable to future advances: information that is not currently dangerous becomes dangerous

- Risk of idea inoculation: presenting an idea causes people to dismiss risks

- Some other (less concrete) risk categories:

- Risk of increased attention/'Attention Hazard'

- Information several inferential distances out can be hazardous

- Risks of secrecy (more details on each in the post)

- Risk of lost progress: closed research culture stifles innovation

- Risk of information siloing: siloing information leaves individual workers blind to the overall goal being accomplished

- Barriers to funding and new talent: talented people don’t go into seemingly empty or underfunded fields

- Streisand Effect: suppressing information can cause it to spread

- Exploring the Streisand Effect

- "The Streisand effect occurs when efforts to suppress the spread of information cause it to spread more widely"

- Another reason against controlling information spread: it could backfire and increase the spread of dangerous information, or at least increase the chance that a bad/careless actor will obtain the information

- Reasons it occurs:

- "Because censorship is interesting independent of the information being censored"

- "Because it provides a good signal that the information being suppressed is worth knowing"

- "Because it is offensive, triggering instinctive opposition in both target and audience"

- The key is that the information suppression is publicly visible, either intentionally or unintentionally, and gains wider attention

- Being generally quick to censor could be a strategy that takes a few Streisand Effect hits at the beginning but over time reduces attention to what an organization censors. However, this could also backfire, so it isn't necessarily a robust strategy

- Sometimes it could also be worthwhile to increase the number of people who view the information in the short term to prevent long term access; however, this requires a delicate balance

- Ways to reduce the probability of a Streisand Effect:

- Reducing the visibility of the action

- Devaluing the target of the action (i.e. downplaying the censored information’s importance)

- Interpreting events in a favorable light

- Legitimizing their response through the use of official channels

- Incentivizing those involved to stay quiet (or follow their preferred line) through threats, bribes, etc.

- Open question: How often does the Streisand Effect actually occur?

- Selection effect: we can’t see how much information was successfully suppressed, precisely because it was successfully suppressed.

- “It seems notable to me that most coverage of the [Streisand] effect seems to recycle some subset of the same ten or so core examples, with a somewhat larger number of minor cases. In any case, the frequency of news articles about a subject isn't a great way of gauging its true relative frequency. There's an obvious evidence-filtering problem here: we can see the numerator, but not the denominator”

- The author and some other sources have the impression that incidents reaching the level of mass media outrage are fairly rare, and that most instances of censorship/information control don't trigger a large incident

- However, it's also true that there doesn't have to be mass media outrage for a Streisand Effect to cause damage; even spread within a more localized community can be undesirable

- CW: Streisand Effect is unlikely to be an issue if the information is controlled from the start such that there isn't a need for censorship/a chance that someone will be indignant about information suppression

- Kevin Esvelt: Mitigating catastrophic biorisks

- How do you prevent misuse of (bio)technology without disclosing what it is that you're afraid of?

- SecureDNA.org has proposed a project that attempts to solve the information hazard issue for DNA synthesis

- Overall goal is to prevent anyone other than those who discover dangerous information (and maybe a couple of other experts) from even knowing that something is considered potentially hazardous

- Bio proliferation can happen at a much greater speed than nuclear proliferation, meaning that bio is more info hazard-sensitive

- Proliferation speed provides a marker for how info hazard-sensitive a given area is

- CW: Where does AI fall along this metric?

- No one will have a bird's-eye view of the risk landscape for any field because of the lack of visibility around potential info hazards

- This means that you must assume that "there may well be a great deal that you don't know, and that the situation is probably worse than you think because of the infohazard problem"

- "The downside of biosecurity research is that there's always the temptation to point to particular risks in order to justify your existence"

- CW: Seems like it could also be a concern for AI safety and other safety-oriented fields

- Advice from Esvelt to help avoid increasing risk: "Please do not try to come up with new GPCR-level threats. Don't try to think about how it can be done. Don't try to redeem it."

- FD: Is this general advice? It seems like a good heuristic for most people to follow, but if literally nobody except people trying to increase risks thought about this, could there also be massive blind spots?

- Conflict between scientific practices (intellectual curiosity) and the notion that some information is not worth having. Not everyone in the scientific community is easily convinced that some things should not be pursued purely out of curiosity

- To convince people of the potential danger without going into specifics, use counterfactuals

- "It's not too hard to puncture the naive belief that all knowledge is worth having using any number of examples of counterfactual technologies."

- Also, go up a few levels of abstraction, and the argument often still works

- What areas are the most promising to start new EA meta charities - A survey of 40 EAs

- From a survey of EAs: "A few people suggested that certain groups are extremely risk-averse with info hazards in a way that is disproportionate to what experts in the field find necessary and that ultimately harms the community."

- CW: When thinking about info hazards, need to consider the effect on broader community values/practices. How do you avoid large risks while also encouraging transparency and open discussion?

- Assessing global catastrophic biological risks (Crystal Watson)

- GCBR ran a 'red teaming exercise' where biologists came together to brainstorm about how biology can be misused

- CW: Is this a good idea to deal with info hazards? Or does it present more risk than it does good?

- The person running it took care to "make sure that information that made the risks worse wasn't put out into the world"

- Thoughts on The Weapon of Openness

- Essay argues that in the long run, the costs of secrecy outweigh the benefits and openness will lead to better outcomes

- Seems to be focused on governmental projects, but possibly generalizable

- Costs of secrecy are more subtle than the benefits:

- "Cutting yourself off from the broader scientific and technological discourse"

- Prevents experts outside the project from proposing suggestions or pointing out flaws in what you're doing

- Also makes it harder to resolve internal disputes over the project's direction, creating a higher chance for these disputes to be resolved politically (pleasing people) instead of on technical merits

- "Secrecy greatly facilitates corruption"

- Greatly reduced incentive for internal decision-makers to make decisions justifiable to an outside perspective

- Leads to "greatly increased toleration of waste, delay, and other inefficiencies"

- Gradually impairs general organizational effectiveness

- Secrecy might lead to some initial gains, but over time impaired scientific/technological exchange and accumulating corruption will result in being less effective on net

- Secrecy can be an effective short-term weapon:

- If a previously open society/group abruptly increases secrecy, the strong institutions and norms fostered by openness will be initially retained

- If this lasts too long, the losses in efficacy and increased propensity for corruption begin eroding the strong norms and reducing effectiveness

- This is tricky because the gains from secrecy are more visible than its gradually accruing, hard-to-spot costs. Continued secrecy is therefore more likely to seem justified, so secrecy may be retained "well past the time at which it begins to be a net impediment"

- Suggests that secrecy should be kept as a rare, short-term weapon in the policy toolbox

- CW: Essay doesn't include examples and therefore should be viewed with some skepticism.

- One counterpoint: private companies aren't in the practice of publicly sharing their product development process, so why don't they fail in the same way secret government projects are alleged to?

- FD: They have strong profit incentives, while government success is a lot harder to measure, such that more scrutiny on the process is needed?

- Reinforces that the effect of secrecy on overall culture, both internal and external, is critical to consider

- "Exploring new ways to be secretive about certain things while preserving good institutions and norms might be a very important part of getting us to a good future"

- Why making asteroid deflection tech might be bad

- New term: Dual Use Research of Concern (DURC)

- "Life sciences research that, based on current understanding, can be reasonably anticipated to provide knowledge, information, products, or technologies that could be directly misapplied to pose a significant threat with broad potential consequences to public health and safety"

- The concept can likely be applied to fields beyond the life sciences as well

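- Asteroid deflection research could count as DURC and constitute an info hazard, since technology that can push an asteroid away from Earth could, in principle, also be used to push one toward it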

- Memetic downside risks: How ideas can evolve and cause harm

- “Memetic downside risks (MDR): risks of unintended negative effects that arise from how ideas “evolve” over time (as a result of replication, mutation, and selection)”

- Ideas will generally evolve in the direction of:

- Simplicity

- Saliency

- Usefulness

- Perceived usefulness

- Random noise

- Good and bad ways to think about downside risks

- Best to frame caution about ideas not as "the sort of thing a good person would do" but instead "as itself good and impactful in the way that 'doing a positive action' would be"

- Proposes the 'pure EV approach' as the best way to consider downside risks:

- Invest an appropriate level of effort into working out the EV of an action and into thinking of ways to improve that EV (such as through mitigating and/or monitoring any downside risks).

- Take the action (or the best version of it) if that EV is positive (after adjusting for the unilateralist's curse, where necessary).

- Feel good about both steps of that process.
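The two steps of the 'pure EV approach' can be illustrated with a toy expected-value calculation. This is a sketch under stated assumptions: the outcomes, probabilities, and values are made up, and representing the unilateralist's-curse adjustment as a flat `curse_discount` subtracted from the EV is our simplification, not something the post specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    probability: float  # chance this outcome occurs
    value: float        # how good (+) or bad (-) it would be, in arbitrary units


def expected_value(outcomes: list[Outcome]) -> float:
    total = sum(o.probability for o in outcomes)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total}, not 1")
    return sum(o.probability * o.value for o in outcomes)


def pure_ev_decision(
    versions: dict[str, list[Outcome]],
    curse_discount: float = 0.0,  # hypothetical adjustment for the unilateralist's curse
) -> tuple[str, float, bool]:
    """Step 1: work out the EV of each version of the action (including versions
    with mitigations) and pick the best. Step 2: take it only if that EV, minus
    the discount, is positive."""
    best = max(versions, key=lambda name: expected_value(versions[name]))
    ev = expected_value(versions[best])
    return best, ev, ev - curse_discount > 0


# Invented example: framing the information carefully lowers the chance of misuse.
versions = {
    "don't publish": [Outcome(1.0, 0)],
    "publish as-is": [Outcome(0.9, 10), Outcome(0.1, -100)],
    "publish with careful framing": [Outcome(0.95, 9), Outcome(0.05, -100)],
}

if __name__ == "__main__":
    name, ev, act = pure_ev_decision(versions, curse_discount=1.0)
    print(name, round(ev, 2), act)
```

Here the unmitigated version has a negative EV (about -1), while the mitigated version comes out positive (about 3.55), which is the sense in which mitigation "improves the EV" before the decision is made.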

- Lessons from the Cold War on Information Hazards: Why Internal Communication is Critical

- Cautionary tale about going about secrecy in the wrong way

- Lessons to learn:

- "Ideas kept secret and immune from criticism are likely to be pretty bad or ill-conceived"

- "Generally stifling conversation shuts down both useful and harmful information flow, so making good delineations can result in a net reduction in risk"

- Horsepox synthesis: A case of the unilateralist’s curse?

- “Horsepox is a virus brought back from extinction by biotechnology”. They “synthesized a copy of horsepox DNA from the virus’s published DNA sequence, placed it into a cell culture alongside another poxvirus, and in this way recovered copies of “live” horsepox virus.”

- CW: How does secrecy/openness based on info hazard considerations interact with the unilateralist's curse?

- Avoiding the unilateralist's curse is a reason to favor less secrecy/more openness because the idea will get out regardless.

- FD: This might be true for unilateral actions due to info hazards, but not for unilateral revelation of info hazards (it seems like more general openness would increase the likelihood that people would reveal info hazards).

- However, more openness → more individuals know about potentially dangerous things → increased chance of an individual acting poorly
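The worry in the last two notes, that more people knowing about something raises the chance that someone acts on it, is the core of the unilateralist's curse. A toy model makes the dynamic concrete. It assumes (our assumptions, not the post's) that N agents independently estimate the same true value of an action with Gaussian noise, that each acts if their own estimate is positive, and that the action happens if anyone acts.

```python
import math
import random


def p_single_acts(true_value: float, noise_sd: float) -> float:
    """P(true_value + Normal(0, noise_sd**2) > 0), i.e. Phi(true_value / noise_sd)."""
    # Standard normal CDF expressed via math.erf.
    return 0.5 * (1 + math.erf(true_value / (noise_sd * math.sqrt(2))))


def p_anyone_acts(n_agents: int, true_value: float, noise_sd: float) -> float:
    """Closed form: the action happens if at least one independent agent acts."""
    p = p_single_acts(true_value, noise_sd)
    return 1 - (1 - p) ** n_agents


def simulate(n_agents: int, true_value: float, noise_sd: float,
             trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check of the closed form."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Each agent draws an independent noisy estimate; any positive one triggers action.
        if any(true_value + rng.gauss(0, noise_sd) > 0 for _ in range(n_agents)):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 10):
        print(n, round(p_anyone_acts(n, true_value=-1.0, noise_sd=1.0), 3))
```

With a true value of -1 and noise of standard deviation 1, the chance that the harmful action is taken rises from about 0.16 with one agent to about 0.58 with five and about 0.82 with ten, even though each agent's decision rule is individually reasonable.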

- The Precipice

- "Openness is deeply woven into the practice and ethos of science, creating a tension with the kinds of culture and rules needed to prevent the spread of dangerous information" (p. 137)

- Informational hazards and the cost-effectiveness of open discussion of catastrophic risks

- Info benefits vs info hazards. “Any information could also have “x-risk prevention positive impact”, or info benefits. Obviously, info benefits must outweigh the info hazards of the open public discussion of x-risks, or the research of the x-risks is useless. In other words, the “cost-effectiveness” of the open discussion of a risk A should be estimated, and the potential increase in catastrophic probability should be weighed against a possible decrease of the probability of a catastrophe.”

- "The relative power of info hazards depends on the information that has already been published and on other circumstances":

- Consideration 1: If something is already public knowledge, then discussing it is not an informational hazard.

- FD: Maybe add some disclaimer? There are different types and degrees of public knowledge, and a blanket statement could make it a bit too easy to rationalize revealing information by framing it as public knowledge, even if it might only be so in a narrow or irrelevant way.

- Example: AI risk. The same is true for the “attention hazard”. If something is extensively present now in the public field, it is less dangerous to discuss it publicly.

- Consideration 2: If information X is public knowledge, then similar information X2 is a lower informational hazard.

- Example: if the genome of a flu virus has been published, publishing a similar flu genome is a minor information hazard.

- Consideration 3: If many info hazards have already been openly published, the world may be considered saturated with info-hazards, as a malevolent agent already has access to so much dangerous information.

- Example: In our world, where genomes of the pandemic flus have been openly published, it is difficult to make the situation worse.

- CW: even if this could be true in principle, it seems unlikely to be true right now, and potentially ever (see Gregory Lewis comment)

- “Not all plausible bad actors are sophisticated: a typical criminal or terrorist is no mastermind, and so may not make (to us) relatively straightforward insights, but could still ‘pick them up’ from elsewhere.”

- “It seems easy to imagine cases where the general idea comprises most of the danger. The conceptual step to a ‘key insight’ of how something could be dangerously misused ‘in principle’ might be much harder to make than subsequent steps from this insight to realizing this danger ‘in practice’.”

- “There is. . . an asymmetry in the sense that it is much easier to disclose previously-secret information than make previously-disclosed information secret. The irreversibility of disclosure warrants further caution in cases of uncertainty like this.”

- “There is a norm in computer security of ‘don’t publicize a vulnerability until there’s a fix in place’”

- Consideration 4: If I have an idea about x-risks in a field in which I don’t have technical expertise and it only took me one day to develop this idea, such an idea is probably obvious to those with technical expertise, and most likely regarded by them as trivial or non-threatening.

- FD: Or they successfully coordinated on not publicizing it? This seems less plausible if there are many people in the field, but info hazards might arise more often when people with a particular x-risk focus think about things, as opposed to "generic" researchers in the field.

- Consideration 5: A layman with access only to information available on Wikipedia is unlikely to be able to generate ideas about really powerful informational hazards that could not already be created by a dedicated malevolent agent, like the secret service of a rogue country. However, if one has access to unique information, which is typically not available to laymen, this could be an informational hazard.

- Consideration 6: Some ideas will be suggested anyway soon, but by speakers who are less interested in risk prevention.

- Consideration 7: Suppressing some kinds of information may signal its importance to malevolent agents, producing a “Streisand effect”.

- Consideration 8: If there is a secret network for discussing such risks, some people will be excluded from it, which may create undesired social dynamics.

- If the change in human extinction probability is the only important measure of an action's effectiveness, the utility of any public statement A is:

- V = ∆I − ∆IH, where ∆I is the increase in survival probability via better preparedness and ∆IH is the increase in the probability of the x-risk because bad actors will learn the information

- Argues that we should openly discuss possible risks, just without going into technical details on specific technologies

- “We can’t prevent risks which we don’t know, and the prevention strategy should have a full list of risks, while a malevolent agent may need only technical knowledge of one risk (and such knowledge is already available in the field of biotech, so malevolent agents can’t gain much from our lists).”

- “Society could benefit from the open discussion of possible risks ideas as such discussion could help in the development of general prevention measures, increasing awareness, funding, and cooperation. This could also help us to choose priorities in fighting different global risks.”

- “biorisks are less-discussed and thus could be perceived as being less of a threat than the risks of AI. However, biorisks could exterminate humanity before the emergence of superintelligent AI (to prove this argument I would have to present general information which may be regarded as having informational hazard). But the amount of technical hazardous information openly published is much larger in the field of biorisks – exactly because the risk of the field as a whole is underestimated!”

- Info hazard saturation: the point where so much hazardous knowledge is already public that further disclosure can't make things much worse.

- Gregory Lewis has a great comment with useful considerations

- “I don’t think we should be confident there is little downside in disclosing dangers a sophisticated bad actor would likely rediscover themselves. Not all plausible bad actors are sophisticated: a typical criminal or terrorist is no mastermind, and so may not make (to us) relatively straightforward insights, but could still ‘pick them up’ from elsewhere.”

- “EAs do have an impressive track record in coming up with novel and important ideas. So there is some chance of coming up with something novel and dangerous even without exceptional effort.”

- “There are some benefits to getting out ‘in front’ of more reckless disclosure by someone else. Yet in cases where one wouldn’t want to disclose it oneself, delaying the downsides of wide disclosure as long as possible seems usually more important, and so rules against bringing this to an end by disclosing yourself save in (rare) cases one knows disclosure is imminent rather than merely possible.”

- Most benefit lies in key stakeholders. “I take the benefit of ‘general (or public) disclosure’ to have little marginal benefit above more limited disclosure targeted to key stakeholders”

- “In bio (and I think elsewhere) the set of people who are relevant setting strategy and otherwise contributing to reducing a given risk is usually small and known (e.g. particular academics, parts of the government, civil society, and so on).”

- “There are many ways public discussion could be counter-productive (e.g. alarmism, ill-advised remarks poisoning our relationship with scientific groups, etc.) . . . cryonics, AI safety, GMOs . . . are relevant cautionary examples”

- “it is much easier to disclose previously-secret information than make previously-disclosed information secret. The irreversibility of disclosure warrants further caution in cases of uncertainty like this.”

- “In this FLI podcast episode, Andrew Critch suggested handling a potentially dangerous idea like a software update rollout procedure, in which the update is distributed gradually rather than to all customers at once”

- brianwang712 comment about the influence of the unilateralist's curse on decisions about info hazards:

- "The relevance of unilateralist's curse dynamics to info hazards is important and worth mentioning here. Even if you independently do a thorough analysis and decide that the info-benefits outweigh the info-hazards of publishing a particular piece of information, that shouldn't be considered sufficient to justify publication. At the very least, you should privately discuss with several others and see if you can reach a consensus."
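The utility formula for a public statement (V equals ∆I minus ∆IH) and brianwang712's suggestion can be combined into a small decision rule. This is a hedged sketch: the numbers are invented, and reading "reach a consensus" as "every consulted person's estimate gives V > 0" is our assumption; a majority rule, or discussing until estimates converge, would also fit the comment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    delta_i: float   # ∆I: increase in survival probability via better preparedness
    delta_ih: float  # ∆IH: increase in x-risk probability because bad actors learn it

    @property
    def v(self) -> float:
        # V = ∆I - ∆IH, the utility of a public statement
        return self.delta_i - self.delta_ih


def should_publish(own: Estimate, reviewers: list[Estimate]) -> bool:
    # Our own analysis alone is not treated as sufficient: require private review.
    if not reviewers:
        raise ValueError("consult at least one other person before deciding")
    # Hypothetical consensus rule: every estimate, ours included, must give V > 0.
    return all(e.v > 0 for e in (own, *reviewers))


if __name__ == "__main__":
    own = Estimate(delta_i=0.002, delta_ih=0.0005)  # our analysis says V > 0
    reviewers = [
        Estimate(delta_i=0.001, delta_ih=0.0004),
        Estimate(delta_i=0.0015, delta_ih=0.003),   # one reviewer sees more hazard
    ]
    print(should_publish(own, reviewers))
```

In this example our own estimate favors publication, but one reviewer judges the hazard larger than the benefit, so the unanimity rule advises against publishing.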

- The Offense-Defense Balance of Scientific Knowledge: Does Publishing AI Research Reduce Misuse?

- Disclosure of vulnerabilities has more benefits and fewer drawbacks if the danger is relatively easy to prepare for or defend against

- Software vulnerability: easy to fix. Novel pathogen: hard to address

- Dangers that are hard to address lower the defensive benefit of publication

- Consider whether the knowledge is offense-biased (knowledge to build nuclear weapons) or defense-biased (details of a software vulnerability)

- Different fields need different norms for disclosure; lessons from one cannot be directly applied to another

- Theoretical framework:

- Factors where disclosure contributes to attacks:

- Counterfactual possession: would the attacker acquire the knowledge even without publication?

- E.g., through independent discovery or sharing among potential attackers

- Absorption and application capacity: the ability of the attacker to absorb and apply the knowledge to cause harm

- Factors: receptiveness to scientific research, sufficiency, transferability

- Factors where disclosure contributes to defense:

- Counterfactual possession

- Could the defenders have arrived at a similar insight themselves? Would they have been already aware of a problem?

- Absorption and application capacity: see above

- Resources for solution-finding: given the disclosure, how much additional work will go into finding a solution?

- Availability of effective solutions: is there potential for a good defense against the misuse

- Could a simple change remove the weakness? Is the attack detectable and is detection sufficient for defense? Is it possible to defend against the attack?

- Difficulty/cost of propagating solution: If there is a solution, how difficult is it to implement?

- Can a solution be imposed centrally? Will relevant actors adopt a solution?

- To use framework: weigh the benefits to defense against the contributions to the offense

- Need to assess across the range of possible disclosure strategies to find the best one

- Partial disclosure might maximize benefits and minimize risks

- Using the framework for AI research-specific considerations:

- AI misuse often interferes in social systems which are difficult to 'patch,' meaning AI publications might have a more limited defensive benefit

- AI systems are often difficult to detect, limiting the defensive benefit of being aware of vulnerabilities

- Offensive AI systems can be trained against detection systems

- Even if an effective detection system is created, deploying it across social settings might be highly implausible at scale

- Although it's dependent on who exactly is involved, it seems that the attackers who would misuse information might be less likely to arrive at the published insight themselves as compared to the defenders, who are often highly capable (e.g. big tech companies like Facebook)

- Other considerations

- "It is important that the AI research community avoids overfitting to the present-day technologies, and finds norms that can scale and readily adapt to more powerful AI capabilities"
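The offense-defense framework is qualitative, but its weighing step can be sketched as a scoring function to show how the factors interact. The 0-to-1 scores, the multiplicative combination, the zero baseline for withholding, and the example numbers are all hypothetical; the post does not propose a formula. The example mirrors the post's contrast between an easily patched software vulnerability and a hard-to-address novel pathogen, and compares full with partial disclosure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attacker:
    counterfactual_possession: float  # chance attackers would have the knowledge anyway
    absorption: float                 # ability to absorb and apply it to cause harm


@dataclass(frozen=True)
class Defender:
    counterfactual_possession: float  # chance defenders would have the insight anyway
    absorption: float                 # ability to absorb and apply it
    solution_resources: float         # extra solution-finding effort the disclosure prompts
    solution_availability: float      # how effective a defense can be at all
    propagation_ease: float           # 1 minus the difficulty/cost of propagating the fix


def offense_contribution(a: Attacker) -> float:
    # Disclosure only helps attackers who lacked the knowledge and can use it.
    return (1 - a.counterfactual_possession) * a.absorption


def defense_benefit(d: Defender) -> float:
    # Multiplying means a weak link (e.g. no effective fix) sinks the benefit.
    return ((1 - d.counterfactual_possession) * d.absorption * d.solution_resources
            * d.solution_availability * d.propagation_ease)


def choose_disclosure(options: dict[str, tuple[Attacker, Defender]]) -> str:
    """Return the option with the highest positive net benefit, else 'withhold'."""
    best_name, best_net = "withhold", 0.0  # withholding is the zero baseline
    for name, (attacker, defender) in options.items():
        net = defense_benefit(defender) - offense_contribution(attacker)
        if net > best_net:
            best_name, best_net = name, net
    return best_name


software_vuln = {
    "full": (Attacker(0.5, 0.6), Defender(0.2, 0.9, 0.8, 0.9, 0.8)),
    "partial": (Attacker(0.5, 0.2), Defender(0.2, 0.7, 0.8, 0.9, 0.8)),
}
novel_pathogen = {
    "full": (Attacker(0.3, 0.5), Defender(0.2, 0.7, 0.5, 0.2, 0.3)),
    "partial": (Attacker(0.3, 0.2), Defender(0.2, 0.5, 0.5, 0.2, 0.3)),
}

if __name__ == "__main__":
    print(choose_disclosure(software_vuln))   # prints: partial
    print(choose_disclosure(novel_pathogen))  # prints: withhold
```

With these made-up scores, partial disclosure beats full disclosure for the software case, and withholding beats both disclosure options for the pathogen case. An additive score would be more forgiving of a single weak defensive factor than the multiplicative one used here.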

- The Vulnerable World Hypothesis

- "Overall, attempts at scientific self-censorship appear to have been fairly half-hearted and ineffectual" (footnote 39)

- Scientific community often resists secrecy as a result of a commitment to the value of openness

- [Review] On the Chatham House Rule (Ben Pace, Dec 2019)

- From a comment by jbash:

- "By adopting a lot of secrecy [you’ll] slow yourselves down radically, while making sure that people who are better than you are at secrecy, who are better than you are at penetrating secrecy, who have more resources than you do, and who are better at coordinated action than you are, will know nearly everything you do, and will also know many things that you don't know."

- "Secrecy destroys your influence over people who might otherwise take warnings from you. Nobody is going to change any actions without a clear and detailed explanation of the reasons. And you can't necessarily know who needs to be given such an explanation. In fact, people you might consider members of 'your community' could end up making nasty mistakes because they don't know something you do."

- “The best case long term outcome of an emphasis on keeping dangerous ideas secret would be that particular elements within the Chinese government . . . would get it right when they consolidated their current worldview's permanent, unchallengeable control over all human affairs”

- Why Apple isn't a relevant example: "Apple is a unitary organization, though. It has a boundary. It's small enough that you can find the person whose job it is to care about any given issue, and you are unlikely to miss anybody who needs to know. It has well-defined procedures and effective enforcement. Its secrets have a relatively short lifetime of maybe as much as 2 or 3 years."

- Overall point: secrecy does more harm than good because it promotes bad norms and slows research down, but doesn't actually stop information from spreading to places it shouldn't

- From a comment by Ben Pace:

- “I'm pretty sure secrecy has been key for Apple's ability to control its brand, and it's not just slowed itself down, and I think that it's plausible to achieve similar levels of secrecy, and that this has many uses”

- Needed: AI infohazard policy

- Do AI safety organizations already have their own guidelines for handling info hazards? Is there a way to learn about what those are?

- Important considerations for an AI info hazards policy:

- "Some results might have implications that shorten the AI timelines, but are still good to publish since the distribution of outcomes is improved.

- Usually we shouldn't even start working on something which is in the should-not-be-published category, but sometimes the implications only become clear later, and sometimes dangerous knowledge might still be net positive as long as it's contained.

- In the midgame, it is unlikely for any given group to make it all the way to safe AGI by itself. Therefore, safe AGI is a broad collective effort and we should expect most results to be published. In the endgame, it might become likely for a given group to make it all the way to safe AGI. In this case, incentives for secrecy become stronger.

- The policy should not fail to address extreme situations that we only expect to arise rarely, because those situations might have especially large consequences."

- Important questions that the policy should take into account:

- "Some questions that such a policy should answer:

- What are the criteria that determine whether a certain result should be published?

- FD: I wonder whether overly narrow criteria or a very strict policy might have net negative effects, since people might follow the policy blindly rather than thinking about the implications of their work/communication?

- What are good channels to ask for advice on such a decision?

- How to decide what to do with a potentially dangerous result? Circulate in a narrow circle? If so, which? Conduct experiments in secret? What kind of experiments?"

- Perspective from a comment: "due to AI work being insight/math-based, security would be based a lot more on just... not telling people things"

- The Fusion Power Generator Scenario

- Some people speculate that AI can be made safer by having it only do things that are reversible; sharing information is irreversible, and if an AI can't share information, there's little it can do

- A brief history of ethically concerned scientists

- The current general attitude is that scientists are not responsible for how their work is used, but this is not necessarily the attitude that should be encouraged (even if it's easier)

- Book review: Barriers to Bioweapons

- Explains how difficult it was to even set up a tissue culture experiment (compared to working with E. coli) when trying without any in-person help from senior scientists, just googling things.

- “All I can say [is] if any disgruntled lone wolves trying to start bioterrorism programs in their basements were also between the third PDF from 1970 about freezing cells with a minimal setup and losing their fourth batch of cells because they gently tapped the container until it was cloudy but not cloudy enough, it’d be completely predictable if they gave up their evil plans right there and started volunteering in soup kitchens instead.”

- “The first part of the book discusses in detail how tacit knowledge spreads. . . I could imagine finding some of this content in a very evidence-driven book on managing businesses, but I wouldn’t have thought I could find the same for, e.g., how switching locations tends to make research much harder to replicate because available equipment and supplies have changed just slightly, or that researchers at Harvard Medical School publish better, more-frequently-cited articles when they and their co-authors work in the same building”

- “this book claims . . . that spreading knowledge about specific techniques is really, really hard. What makes a particular thing work is often a series of unusual tricks, the result of trial and error, that never makes it into the ‘methods’ of a journal.”

- “The book describes the Department of Energy replacing nuclear weapons parts in the late 1990s, and realizing that they no longer knew how to make a particular foam crucial to thermonuclear warheads, that their documentation for the foam’s production was insufficient, and that anyone who had done it before was long retired. They had to spend nine years and 70 million dollars inventing a substitute for a single component.”

- “Secrecy can be lethal to complicated programs. Because of secrecy constraints:

- Higher-level managers or governments have to put more faith in lower-level managers and their results, letting them steal or redirect resources

- Sites are small and geographically isolated from each other

- Scientists can’t talk about their work with colleagues in other divisions

- Collaboration is limited, especially internationally

- Facilities are more inclined to try to be self-sufficient, leading to extra delays

- Maintaining secrecy is costly

- Destroying research or moving to avoid raids or inspections sets back progress”

- “One interesting takeaway is that covertness has a substantial cost – forcing a program to ‘go underground’ is a huge impediment to progress. This suggests that the Biological Weapons Convention, which has been criticized for being toothless and lacking provisions for enforcement, is actually already doing very useful work – by forcing programs to be covert at all.”

- “an anecdote from Shoko Asahara, the head of the Aum Shinrikyo cult, who after its bioterrorism project failure ‘speculat[ed] that U.S. assessments of the risk of biological terrorism were designed to mislead terrorist groups into pursuing such weapons.’ So maybe there’s something there, but I strongly suspect that such a design was inadvertent and not worth relying on.”

- “Imagine that you, you in particular, are evil, and have just been handed a sample of smallpox. What are you going to do with it? …Start some tissue culture?”